Large language models (LLMs) excel at natural language tasks but remain brittle in domains requiring precise logical and symbolic reasoning. Chaotic dynamical systems provide an especially demanding test because chaos is deterministic yet frequently conflated with randomness, complexity, or mere nonlinearity. This paper introduces CHAOSBENCH-LOGIC, a benchmark that evaluates LLM reasoning across 30 diverse dynamical systems using a unified first-order logic ontology. Each system is annotated with truth assignments for 11 semantic predicates, and 621 questions are generated across seven reasoning categories, including multi-hop implications, cross-system analogies, counterfactual reasoning, bias probes, and multi-turn dialogues. Metrics are defined for logical accuracy, implication consistency, dialogue coherence, and contradiction, and an open-source evaluation pipeline is released. Initial experiments show that frontier LLMs such as GPT-4, Claude 3.5 Sonnet, Gemini 2.5 Flash, and the open-source LLaMA-3 70B achieve 91-94% per-item accuracy, yet still score 0% on compositional items and exhibit fragile global coherence: dialogue-level accuracy ranges from 53.1% (GPT-4 CoT) to 75.5% (LLaMA-3 zeroshot). CHAOSBENCH-LOGICprovides a rigorous testbed for diagnosing these failures and a foundation for developing neuro-symbolic approaches that improve scientific reasoning in LLMs. Code (GitHub)https://github.com/11NOel11/ChaosBench-Logic Dataset (Hugging Face) -https: //huggingface.co/datasets/11NOel11/ChaosBench-Logic

Large language models transform natural language processing, demonstrating strong abilities in translation, summarisation, question answering, and code synthesis. Despite this progress, they remain surprisingly brittle when confronted with tasks requiring precise logical or symbolic reasoning. In scientific domains such as dynamical systems and chaos theory, accurate reasoning is vital: one must distinguish whether a system is chaotic, periodic, quasi-periodic, or random; infer implications of qualitative properties; and reason about parameter perturbations and bifurcations. Errors in such reasoning can lead to incorrect scientific conclusions and undermine trust in LLM-based scientific tools.

Chaotic dynamics provide a stringent test of reasoning because “chaos” is often misunderstood even by experts. Chaos is deterministic yet exhibits sensitive dependence on initial conditions, positive Lyapunov exponents, and complex attractor geometry [19,14,3]. It is not equivalent to randomness, nonlinearity, or complexity; confusing these concepts causes systematic misinterpretations of physical systems. Existing LLM benchmarks do not adequately test this mix of domain semantics and formal reasoning depth. This paper therefore develops a benchmark that systematically evaluates and analyses LLM reasoning about chaotic and non-chaotic systems under formal logical constraints.

This paper makes the following key contributions:

- CHAOSBENCH-LOGIC Benchmark. A benchmark of 621 reasoning questions over 30 dynamical systems spanning ODEs, maps, PDEs, neuronal and chemical models, and stochastic processes [20,21]. 2. first-order logic Ontology. A unified logical vocabulary (11 predicates) and a compact axiom system capturing widely accepted implications between chaos, determinism, Lyapunov behavior, randomness, attractor types, and predictability. 3. Seven Task Families. Atomic QA, multi-hop implications, cross-system analogies and non-analogies, counterfactual reasoning, bias probes, multi-turn dialogues, and hard compositional questions. 4. Metrics and Pipeline. Reproducible evaluation for accuracy, implication consistency, dialogue coherence, contradiction detection, and axiom-violation counts.

achieve high per-item accuracy (91-94%) but fail on compositional items (0%) and show fragile multi-turn coherence.

Logical and Symbolic Reasoning in LLMs. Recent work assesses LLMs on deductive and symbolic tasks, including BIG-Bench [18], ReClor [25], LogiQA [10], and related logic benchmarks. These datasets reveal persistent brittleness in multi-step inference, contradiction avoidance, entailment, and reasoning under explicit constraints. Several efforts introduce structured prompting (e.g., chain-ofthought) or tool-augmented reasoning, yet models still hallucinate intermediate steps or fail to maintain coherence across turns [24]. CHAOSBENCH-LOGICextends this line of inquiry to a scientific domain where reasoning must stay consistent with formal definitions of chaos-related semantics.

Scientific QA and Domain Reasoning. Benchmarks such as SciBench [23] and MATH [6] evaluate quantitative or symbolic scientific problem solving, highlighting limitations in equation manipulation and multi-step reasoning. However, none target dynamical systems theory, nor do they ground questions in a shared logical ontology. CHAOSBENCH-LOGICdiffers by anchoring all questions in a unified first-order logic structure and scoring models for logical coherence rather than only final-answer plausibility.

Chaos and Machine Learning. Prior ML-for-chaos work primarily evaluates forecasting or attractor reconstruction (e.g., reservoir computing and deep learning baselines) rather than formal reasoning about qualitative regimes [15,4]. CHAOSBENCH-LOGICcomplements these benchmarks by focusing on symbolic and logical competence: distinguishing chaos from randomness and enforcing consistent implication structure across diverse systems.

Bias and Misconception Diagnostics. Evaluations such as TruthfulQA [9] examine systematic model errors and truthfulness failures. Yet domain-specific misconceptions (e.g., chaos-randomness confusion, nonlinearity-chaos conflation) remain largely unexplored. CHAOSBENCH-LOGICintroduces explicit bias-probe families tailored to chaos theory semantics.

This section formalizes CHAOSBENCH-LOGIC, describes the ontology and axiom system, details dataset construction, and defines evaluation metrics.

This paper considers reasoning over a collection of dynamical systems S = {s 1 , . . . , s 30 } including continuoustime flows, discrete-time maps, PDEs, neuronal oscillators, chemical reaction models, and stochastic processes.

Each system s ∈ S is associated with semantic properties expressed as unary logical predicates from a fixed vocabulary P. Given a natural-language query about a system s, a model must predict the logically correct answer according to the system’s ground-truth property assignment and a global axiom system.

To make the benchmark auditable and to reduce ambiguity, each predicate is treated as a semantic label over a named system (or canonical regime) rather than as an invitation for models to interpret definitions ad hoc. In particular, the benchmark is designed so that a system can appear random in its trajectories and still be labeled deterministic, reflecting the core distinction between stochasticity and sensitive determinism. This paper uses the following annotation principles:

• Determinism vs. randomness. Deterministic(s) indicates that the system evolution is fully specified by its state and update rule. Random(s) is reserved for systems with inherent stochasticity (e.g., explicit noise terms or stochastic differential equations). • Chaos as a regime label. Chaotic(s) indicates a canonical parameter regime widely treated as chaotic in the literature for that named system. Borderline regimes are avoided and disputed cases are removed during curation. • Predictability predicates. PointUnpredictable(s) captures practical loss of long-horizon point forecasts even under perfect modeling, while StatPredictable(s) reflects that coarse statistical structure can remain predictable or stable over time (e.g., invariant measures). • Attractor-type predicates. Periodic(s), QuasiPeriodic(s), and FixedPointAttr(s) are mutually exclusive indicators of common non-chaotic behavior classes, used to generate non-analogies and “do not overgeneralize” items.

These principles make CHAOSBENCH-LOGICcloser to a controlled reasoning benchmark than a definition-discovery task: models are evaluated on logical consistency under a fixed ontology and ground truth.

CHAOSBENCH-LOGICspecifies a set Φ of global axioms encoding widely used implications in dynamical systems [19,14,3]. Each implication is written explicitly:

Lyapunov & predictability.

Attractor-type implications.

∀s : StrangeAttr(s) ⇒ Chaotic(s), (9) ∀s : Periodic(s) ⇒ ¬Chaotic(s), (10) ∀s : QuasiPeriodic(s) ⇒ ¬Chaotic(s), (11) ∀s : FixedPointAttr(s) ⇒ ¬Chaotic(s).

(

Randomness vs determinism.

Design choice: no converse rules. Crucially, reverse implications are not assumed (e.g., Sensitive ⇒ Chaotic). This avoids overspecification and forces models to respect directionality: many scientific misconceptions arise from treating one-way implications as equivalences.

The benchmark ground truth for each system is a truth assignment for the 11 predicates that is consistent with Φ. For implication questions, the correct answer is computed by applying forward chaining under Φ.

Let A s denote the set of all literals known true/false for a system s. The logical closure under Φ is defined as:

where lfp is the least fixed point reached by repeatedly applying all applicable implications. Because the system annotations satisfy Φ, the closure remains consistent. For model outputs, closure and axiom checks expose contradictions and implication violations.

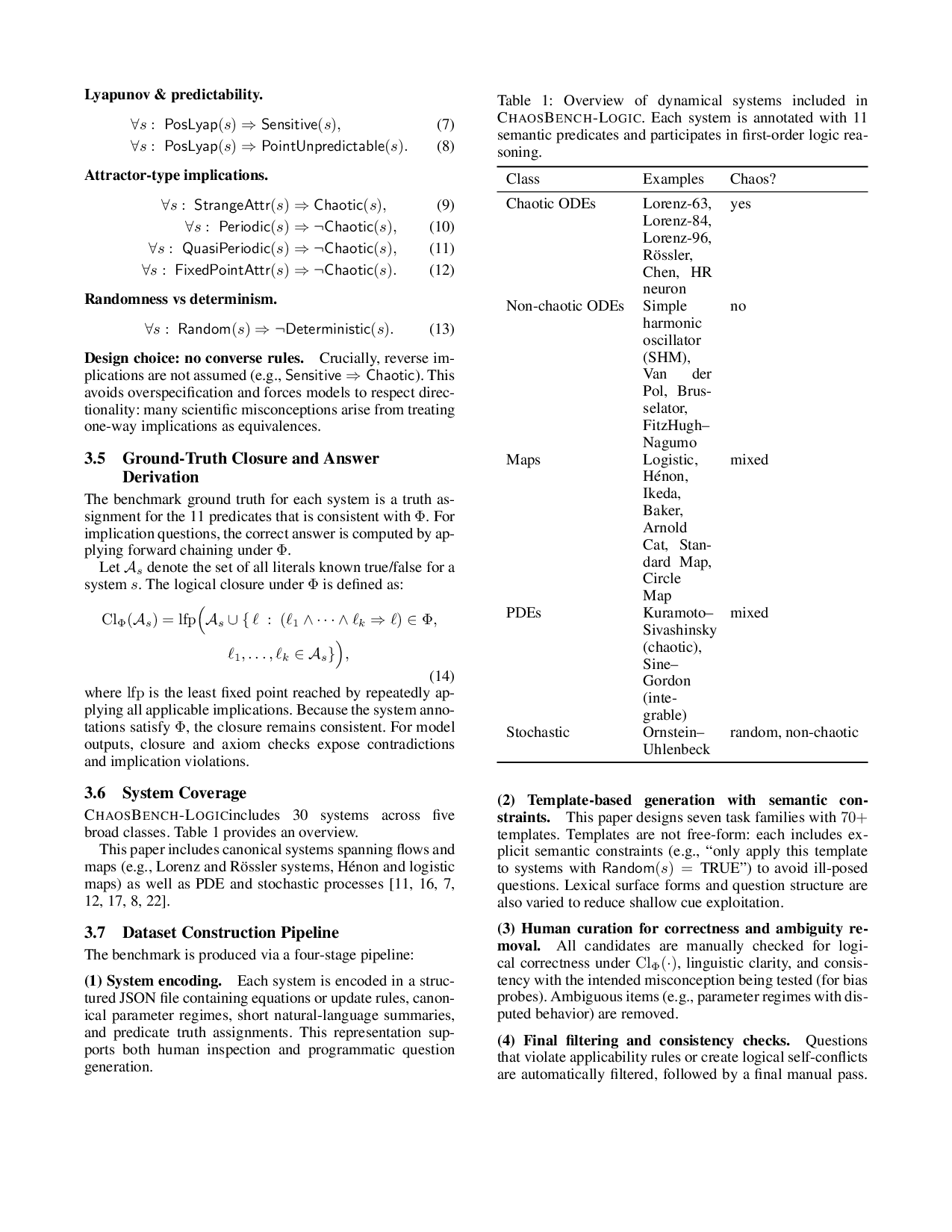

CHAOSBENCH-LOGICincludes 30 systems across five broad classes. Table 1 provides an overview.

This paper includes canonical systems spanning flows and maps (e.g., Lorenz and Rössler systems, Hénon and logistic maps) as well as PDE and stochastic processes [11,16,7,12,17,8,22].

The benchmark is produced via a four-stage pipeline:

(1) System encoding. Each system is encoded in a structured JSON file containing equations or update rules, canonical parameter regimes, short natural-language summaries, and predicate truth assignments. This representation supports both human inspection and programmatic question generation. (2) Template-based generation with semantic constraints. This paper designs seven task families with 70+ templates. Templates are not free-form: each includes explicit semantic constraints (e.g., “only apply this template to systems with Random(s) = TRUE”) to avoid ill-posed questions. Lexical surface forms and question structure are also varied to reduce shallow cue exploitation.

(3) Human curation for correctness and ambiguity removal. All candidates are manually checked for logical correctness under Cl Φ (•), linguistic clarity, and consistency with the intended misconception being tested (for bias probes). Ambiguous items (e.g., parameter regimes with disputed behavior) are removed.

(4) Final filtering and consistency checks. Questions that violate applicability rules or create logical self-conflicts are automatically filtered, followed by a final manual pass. In addition to basic sanity checks, each item is verified to be answerable from the system labels and Φ alone (i.e., without numerical simulation).

Each benchmark item includes a system identifier and a natural-language question, plus structured metadata used in evaluation. The current release includes fields such as system id, family, template id, and (for dialogues) dialogue id and turn index. This makes it possible to compute aggregate metrics per family and to audit failures at the level of a specific template or system. For compositional items, the metadata explicitly records which sub-skills are required (e.g., implication chaining plus analogy rejection), enabling more fine-grained analysis than a single global accuracy score.

Each dialogue is grouped under a dialogue id, with 3-6 turns. Correctness requires per-turn accuracy and intradialogue coherence: answers must not contradict earlier commitments under Φ. This targets persistent reasoning and belief-state stability.

A key design choice is that dialogue turns intentionally mix “easy” atomic questions with implication-driven follow-ups. This stresses whether a model can (i) answer a local question, (ii) remember its own earlier commitment, and (iii) apply the same commitment consistently when queried from a different angle later in the conversation.

This paper identifies six systematic reasoning biases: 1. Chaos-Randomness Bias 2. Chaos-Nonlinearity Bias 3. Complexity-Chaos Bias 4. Fractal-Chaos Bias 5. PDE-Chaos Overgeneralisation 6. Name/Analogy Bias (“Lorenz-like → chaotic”)

These biases are encoded at the template level in the current release and can be refined into per-item labels in future versions.

This paper introduces metrics that separate local correctness from global coherence.

Logical accuracy. Given N eval questions with valid normalized predictions:

Dialogue accuracy. For a dialogue d with turns t = 1, . . . , T d :

This metric is strict by design: a single incoherent turn collapses a whole dialogue to 0.

Contradiction & axiom-violation checks. Beyond correctness, this work evaluates whether a model’s set of commitments is internally consistent. Contradictions (e.g., predicting both Chaotic(s) and Random(s)) are flagged and violations of implications in Φ are counted within a dialogue. These diagnostics explain how and why dialogues fail even when per-turn answers look locally plausible.

Models. This paper evaluates representative frontier and open-source LLMs: GPT-4 (OpenAI), Claude 3.5 Sonnet (Anthropic), Gemini 2.5 Flash (Google), and LLaMA-3 70B (Meta) [13,2,5,1]. All models are queried through their official APIs or hosted endpoints.

Prompting. Zero-shot prompts request a final YES/NO decision. For chain-of-thought (CoT) prompting, each model is asked to write reasoning and end with FINAL ANSWER: YES/NO [24]. The evaluation script extracts and normalizes the final label. Hyperparameters and determinism. All models are run at temperature 0 (or nearest deterministic setting) to reduce sampling variance and emphasize reasoning consistency failures.

Evaluation pipeline. Responses are normalized using a robust pattern matcher. If a response contains multiple answers, the final explicit marker (CoT) or the last YES/NO token (zero-shot) is preferred. This avoids over-crediting models that hedge or self-correct mid-output.

This section presents empirical results on CHAOSBENCH-LOGIC. All accuracies are computed over items where a valid normalized answer can be extracted; in the final runs, this is 621/621 items for all configurations.

Table 3 summarizes performance. All evaluated models achieve 91-94% per-item accuracy, yet dialogue-level coherence is substantially lower.

Why DlgAcc is much lower than Acc. Dialogue accuracy compounds errors: for a dialogue of length T , even an independent per-turn accuracy p yields an “all-correct” probability of approximately p T . With p ≈ 0.92 and T ∈ [3,6], this ranges from 0.92 3 ≈ 0.78 to 0.92 6 ≈ 0.61, matching the observed DlgAcc range (53-76%). In practice, dialogue errors are not independent: models often drift in their interpretation of a system across turns, causing clustered failures rather than isolated mistakes.

Local competence vs. global coherence. The high Acc values show that most single questions are solvable for current LLMs. The lower DlgAcc values indicate that models struggle to maintain a stable belief state across turns, even when the required beliefs are simple boolean properties with deterministic implications.

Table 4 reports the best-performing model for key task families. Models are strong on many atomic and multi-hop items but fail completely on compositional questions. What makes compositional items qualitatively harder.

Compositional questions deliberately combine multiple constraints, such as: (i) chaining two or more implications under Φ; (ii) rejecting a misleading analogy cue; (iii) applying a counterfactual change shown elsewhere in the dialogue; and (iv) producing an answer consistent with prior turns. In practice, models often succeed on each component in isolation but fail when the components must be integrated into a single coherent decision.

A key observed failure mode: converse assumptions.

Because Φ contains only one-way implications, compositional items often punish the common LLM heuristic “if A implies B, then B implies A.” For example, models may incorrectly infer Chaotic(s) from Sensitive(s) or from PosLyap(s) even though the ontology does not assume those converses. This is not a trick: it reflects real scientific reasoning discipline about implication directionality.

A major advantage of CHAOSBENCH-LOGICis that inconsistency is measurable. Two models can achieve similar peritem accuracy while differing sharply in how they fail. In particular, some errors are local (a single wrong predicate assignment) while others represent global constraint failures (e.g., asserting both Random(s) and Deterministic(s), or implying chaos from a non-converse symptom). These failures matter operationally: in downstream scientific workflows, a locally incorrect answer may be caught by a user, but a globally inconsistent belief state can produce confident yet mutually incompatible explanations across time. This is precisely the failure mode that multi-turn dialogues and axiom-violation tracking are designed to surface.

CoT is frequently assumed to improve reasoning [24], but on CHAOSBENCH-LOGICit reduces both accuracy and dialogue-level coherence.

Interpretation. CoT outputs are longer and appear more rigorous, but they can also introduce extra unsupported assumptions (e.g., implicitly treating “random-looking” as “random”) and amplify converse reasoning errors. CoT can therefore increase explanatory confidence without improving logical soundness. The larger drop in DlgAcc suggests that CoT especially harms belief-state stability in multi-turn settings. Why scientific domains make this failure more obvious.

In everyday QA, inconsistent answers can be rationalized away as ambiguity. In dynamical systems, however, core relationships are crisp: “random ⇒ non-deterministic” and “chaotic ⇒ deterministic and not random” are definitional in this ontology [19,14,3]. CHAOSBENCH-LOGICturns these into explicit constraints that models must respect, revealing when a model’s internal reminders are not globally stable.

The structure of CHAOSBENCH-LOGICmakes it a natural evaluation target for hybrid approaches:

• Symbolic verifier / constraint checker. An LLM can propose answers while a symbolic module checks whether the answers violate Φ, triggering self-correction. • Logic-guided decoding. Outputs can be constrained so that answers cannot jointly violate implications already committed within a dialogue. • Training with consistency losses. Fine-tuning can penalize axiom violations across turns, not only wrong final labels.

Because CHAOSBENCH-LOGICuses a compact and explicit ontology, these methods are implementable without full theorem proving.

CHAOSBENCH-LOGICdeliberately emphasizes logical structure over numerical simulation. This makes the benchmark lightweight and auditable, but it also means that each named system is treated as having a canonical regime label. Future releases can extend the benchmark with (i) multiple parameter regimes per system, (ii) items that require extracting regime information from equations or parameter values, and (iii) hybrid items that combine symbolic logic with toolverified numeric diagnostics (e.g., Lyapunov estimation).

This paper introduces CHAOSBENCH-LOGIC, a benchmark for diagnosing logical and symbolic reasoning failures in LLMs within the domain of chaotic dynamical systems. By grounding all questions in an explicit first-order logic ontology, CHAOSBENCH-LOGICmeasures not only per-item accuracy but also implication consistency and multi-turn coherence. Experiments show that frontier and open-source LLMs achieve strong local performance (91-94% per-item accuracy) yet remain globally brittle: dialogue-level accuracy is substantially lower and all tested models fail on compositional items (0%). CHAOSBENCH-LOGICsupports progress toward scientific AI systems that maintain globally consistent beliefs, resist domain misconceptions, and integrate symbolic structure with fluent language understanding.

This content is AI-processed based on open access ArXiv data.