KOINEU

Global Academic Research Archive

A Space-time Smooth Artificial Viscosity Method For Nonlinear Conservation Laws

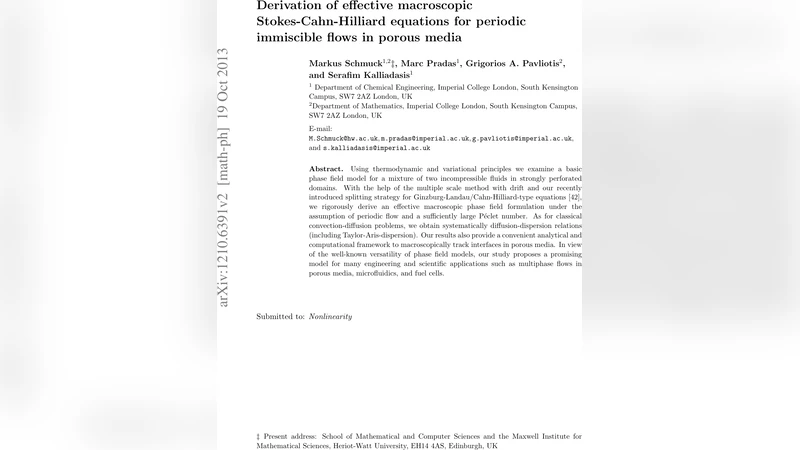

Derivation of effective macroscopic Stokes-Cahn-Hilliard equations for periodic immiscible flows in porous media

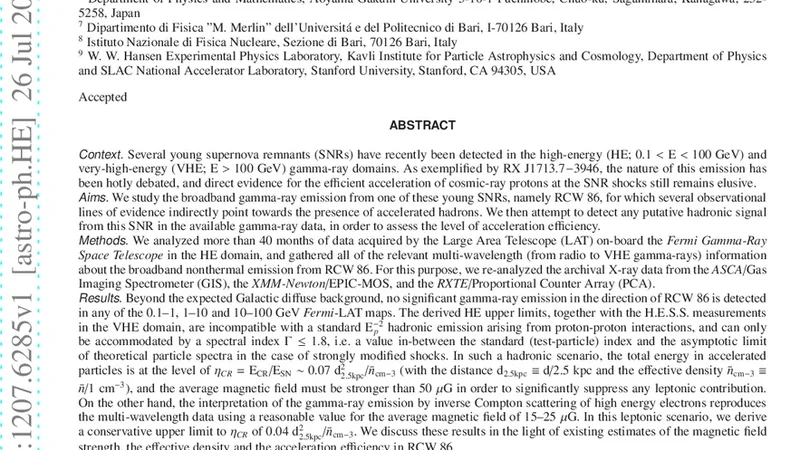

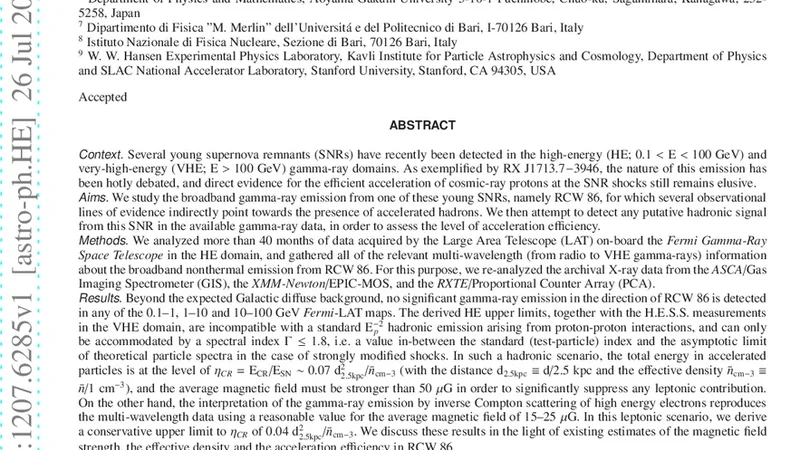

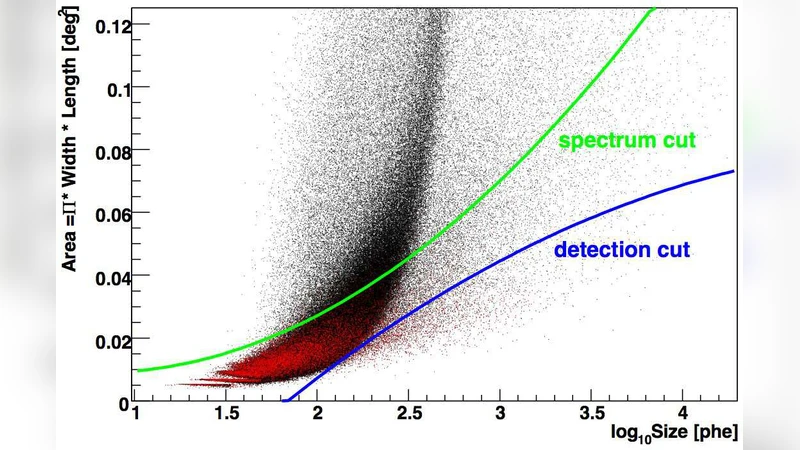

Constraints on cosmic-ray efficiency in the supernova remnant RCW 86 using multi-wavelength observations

Efficient Group Key Management Schemes for Multicast Dynamic Communication Systems

General Science (1453)

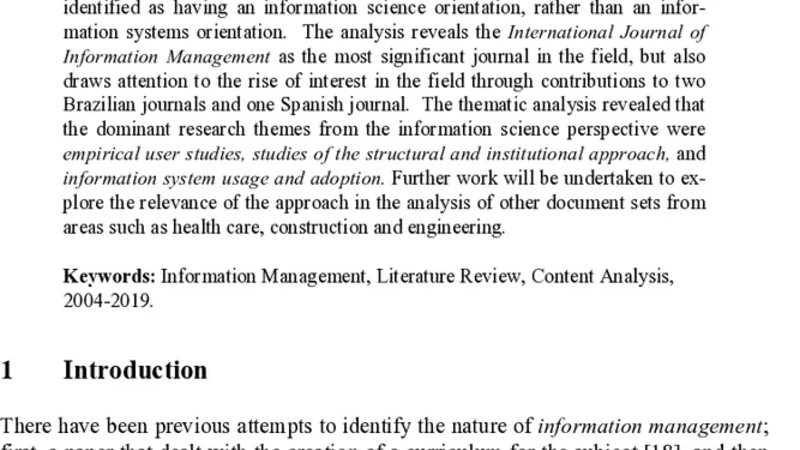

Where is Information Management Research?

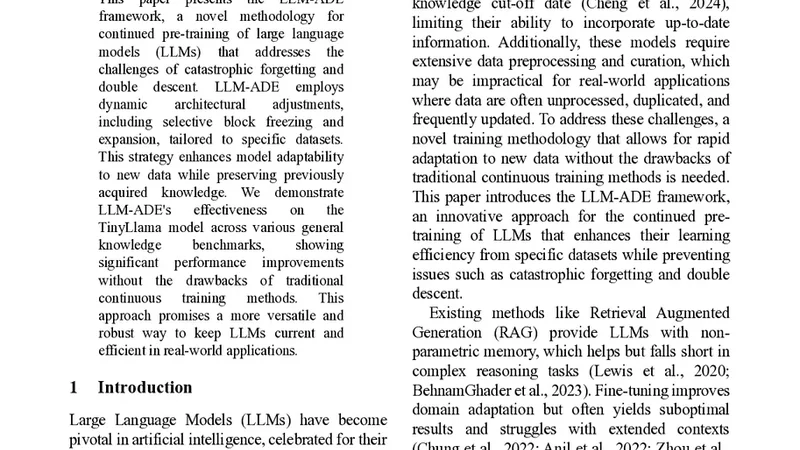

When Life gives you LLMs, make LLM-ADE: Large Language Models with Adaptive Data Engineering

Use of Ground Penetrating Radar to Map the Tree Roots

Time-Varying Coronary Artery Deformation: A Dynamic Skinning Framework for Surgical Training

Three-dimensional crustal deformation analysis using physics-informed deep learning

The Verification-Value Paradox: A Normative Critique of Gen AI in Legal Practice

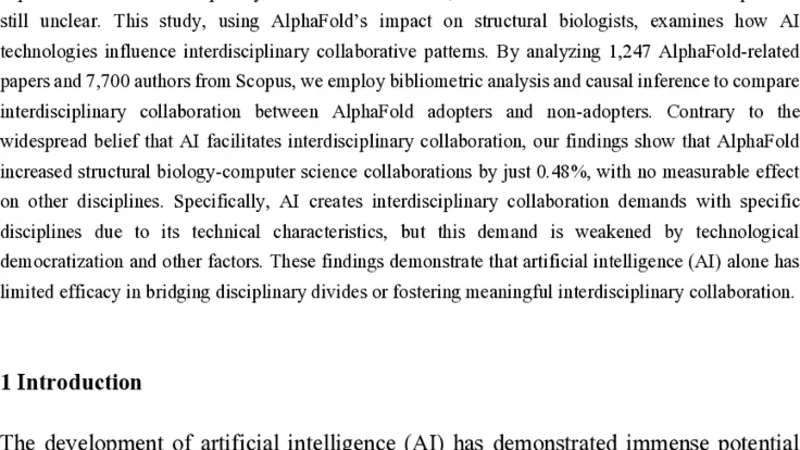

The Role of AI in Facilitating Interdisciplinary Collaboration: Evidence from AlphaFold

The Multiverse: a Philosophical Introduction

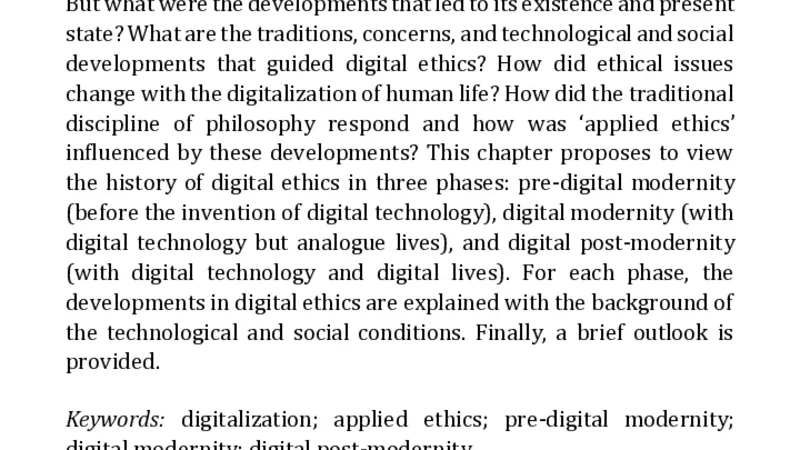

The history of digital ethics

High Energy Astrophysics (652)

Constraints on cosmic-ray efficiency in the supernova remnant RCW 86 using multi-wavelength observations

Mass of highly magnetized white dwarfs exceeding the Chandrasekhar limit: An analytical view

Anisotropic inverse Compton scattering of photons from the circumstellar disc in PSR B1259-63

Fully General Relativistic Simulations of Core-Collapse Supernovae with An Approximate Neutrino Transport

Origin of the X-ray disc-reflection steep radial emissivity

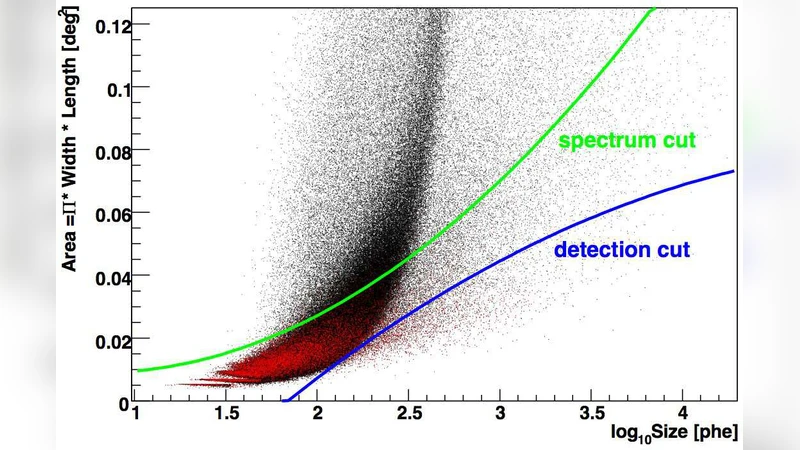

High zenith angle observations of PKS 2155-304 with the MAGIC-I telescope

Hybrid Stars in an SU(3) Parity Doublet Model

Bending of electromagnetic wave in an ultra-strong magnetic field

Astrophysical point source search with the ANTARES neutrino telescope

Information Theory (120)

Transforming Monitoring Structures with Resilient Encoders. Application to Repeated Games

Extending Monte Carlo Methods to Factor Graphs with Negative and Complex Factors

Construction of LDGM lattices

Adaptive Context Tree Weighting

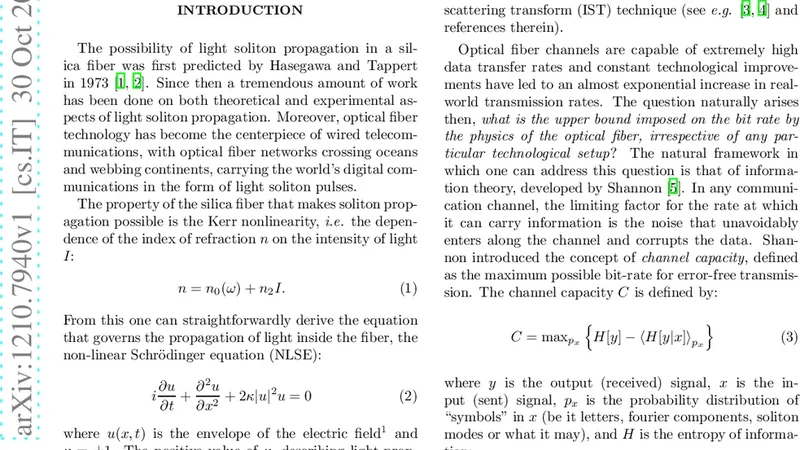

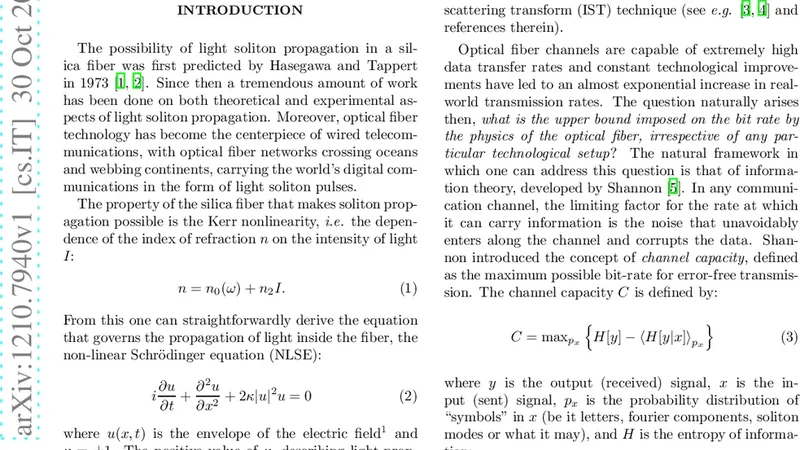

Transmission of information via the non-linear Scroedinger equation: The random Gaussian input case

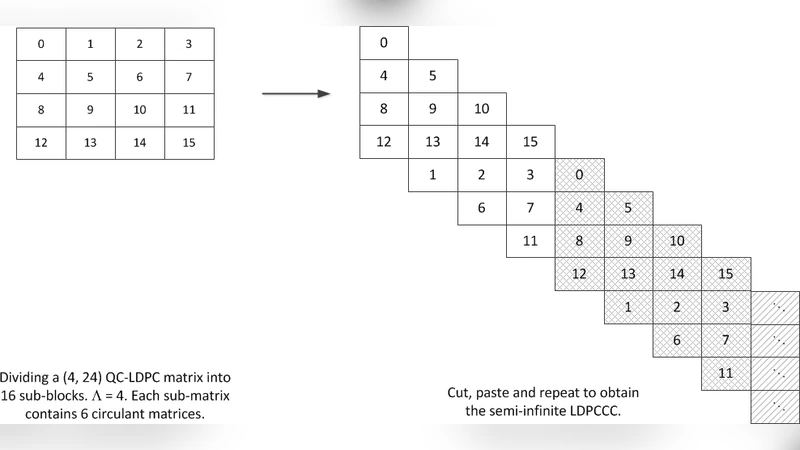

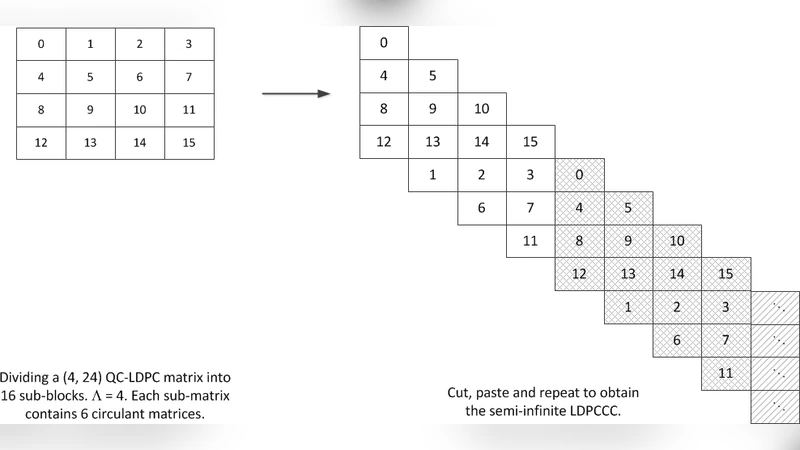

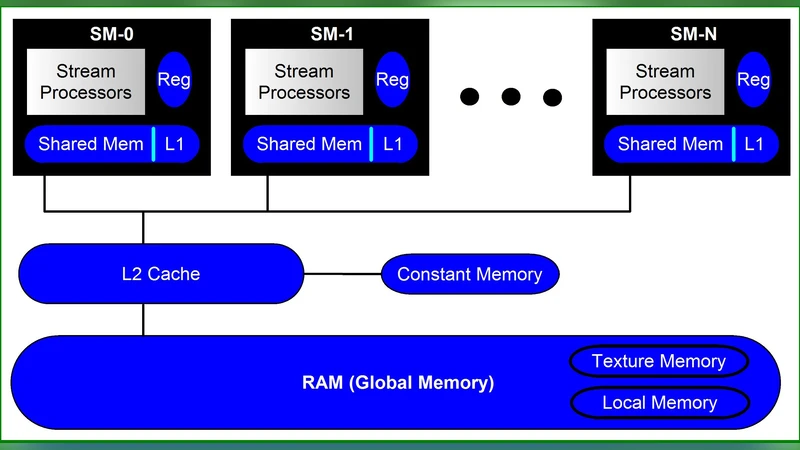

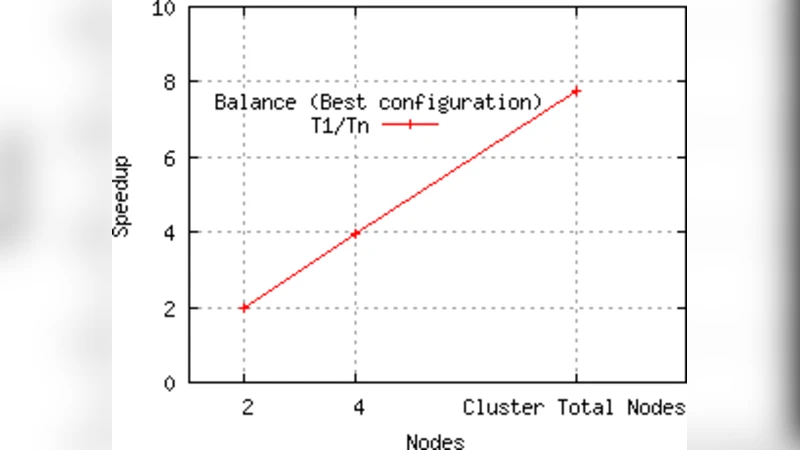

Implementation Of Decoders for LDPC Block Codes and LDPC Convolutional Codes Based on GPUs

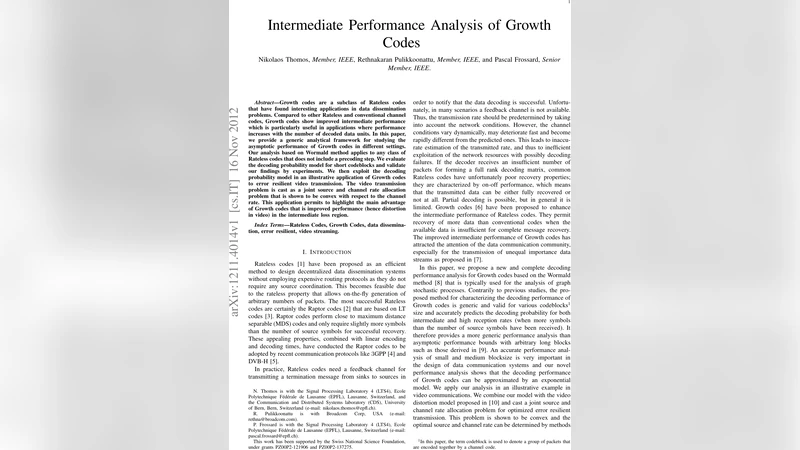

Intermediate Performance Analysis of Growth Codes

Convolutional Compressed Sensing Using Deterministic Sequences

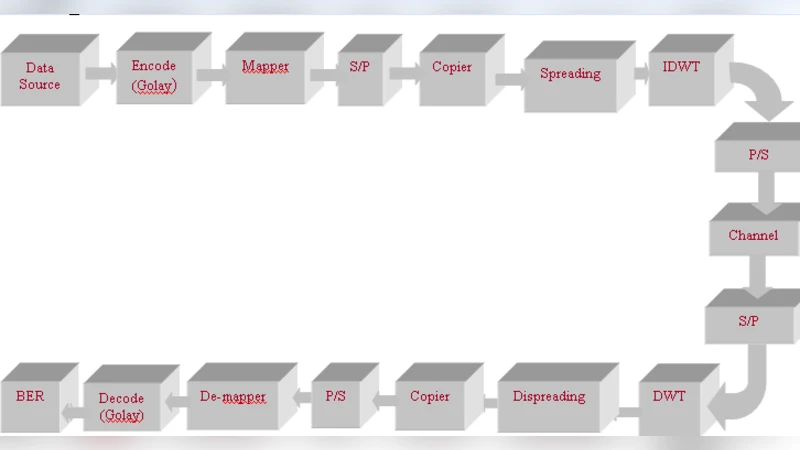

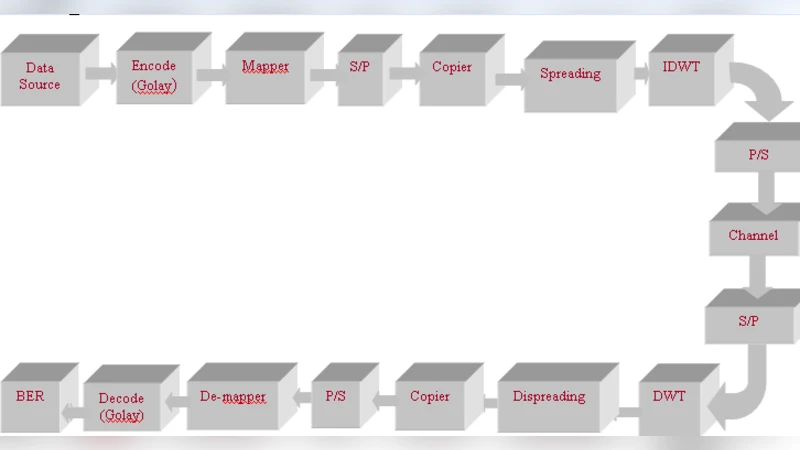

Impact of Different Spreading Codes Using FEC on DWT Based MC-CDMA System

Cosmology (165)

Mass of highly magnetized white dwarfs exceeding the Chandrasekhar limit: An analytical view

Origin of the X-ray disc-reflection steep radial emissivity

High zenith angle observations of PKS 2155-304 with the MAGIC-I telescope

MOJAVE: Monitoring of Jets in Active Galactic Nuclei with VLBA Experiments. VIII. Faraday rotation in parsec-scale AGN jets

Polarization of synchrotron emission from relativistic reconfinement shocks with ordered magnetic fields

Two-Phase ICM in the Central Region of the Rich Cluster of Galaxies Abell 1795: A Joint Chandra, XMM-Newton, and Suzaku View

A new connection between the opening angle and the large-scale morphology of extragalactic radio sources

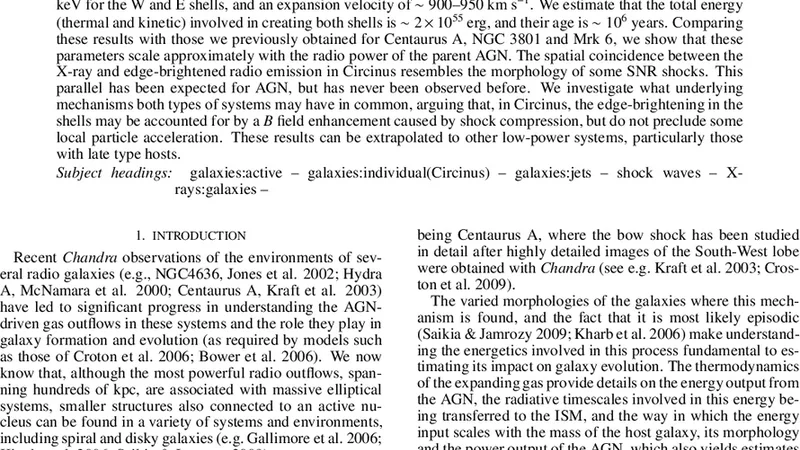

Shocks, Seyferts and the SNR connection: a Chandra observation of the Circinus galaxy

Determination of neutrino mass hierarchy by 21 cm line and CMB B-mode polarization observations

Computational Physics (389)

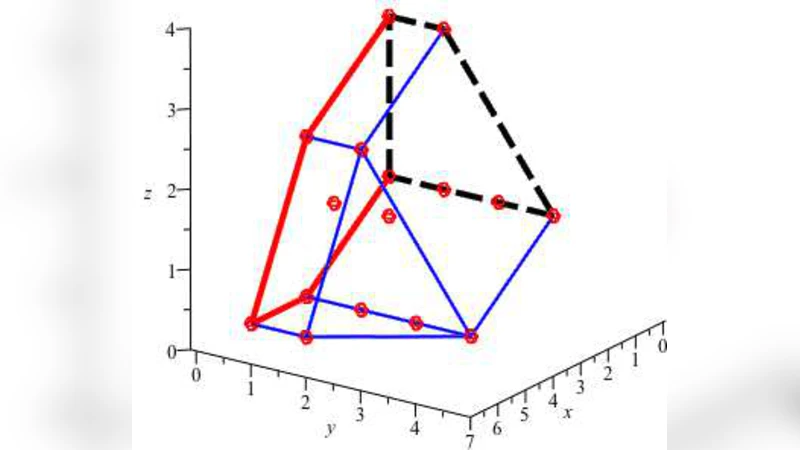

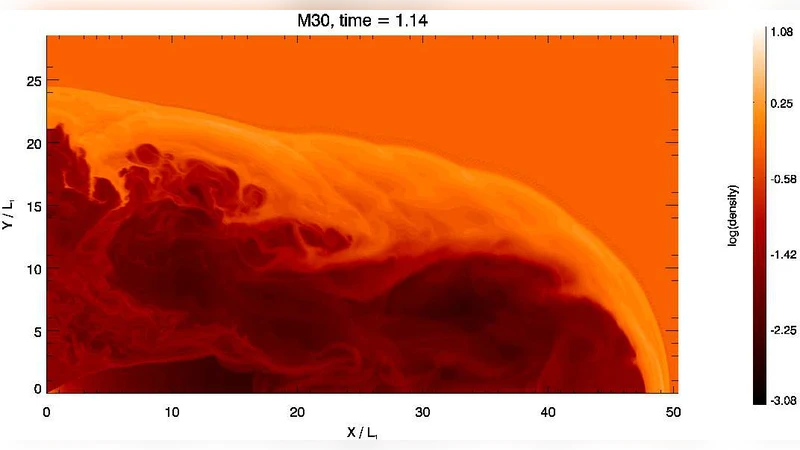

A Space-time Smooth Artificial Viscosity Method For Nonlinear Conservation Laws

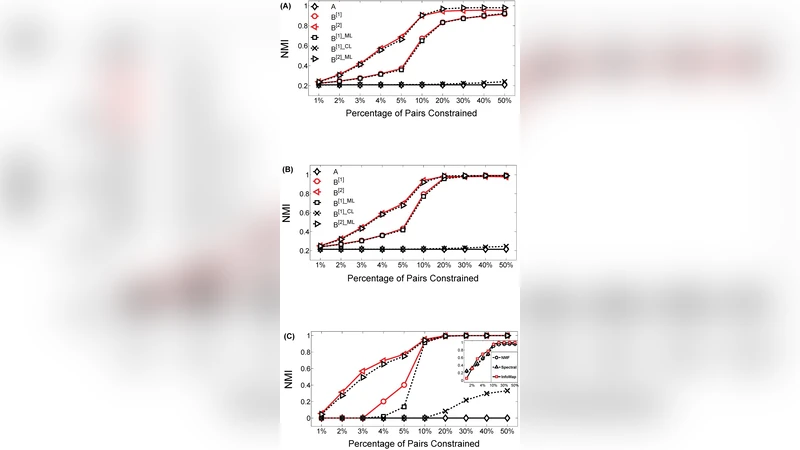

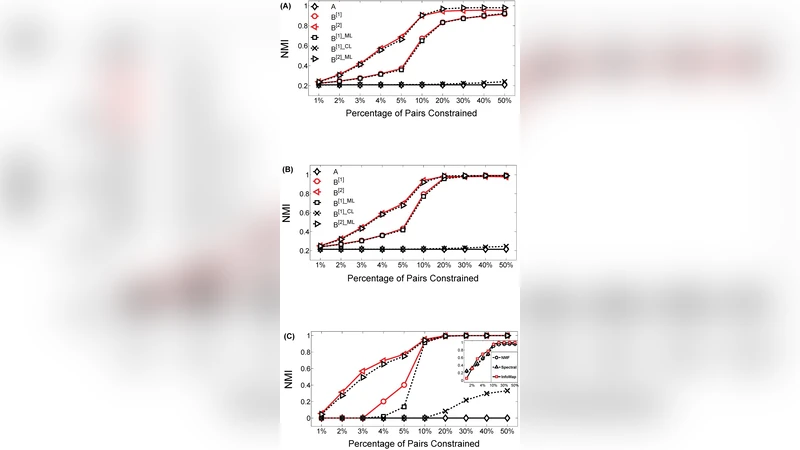

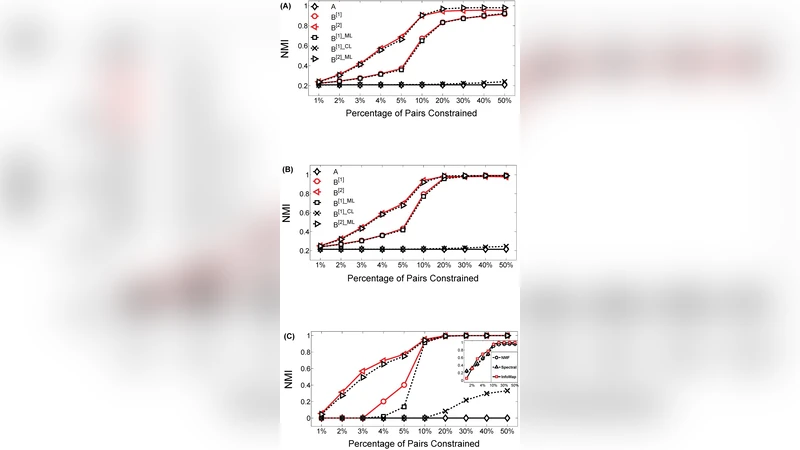

Enhanced Community Structure Detection in Complex Networks with Partial Background Information

Derivation of effective macroscopic Stokes-Cahn-Hilliard equations for periodic immiscible flows in porous media

Asymptotic Derivation and Numerical Investigation of Time-Dependent Simplified Pn Equations

The effect of the frozen and pinned surface approximations on the spatial distribution of incompressible and compressible strips in quantum Hall regime

Overspill avalanching in a dense reservoir network

NLSEmagic: Nonlinear Schrödinger Equation Multidimensional Matlab-based GPU-accelerated Integrators using Compact High-order Schemes

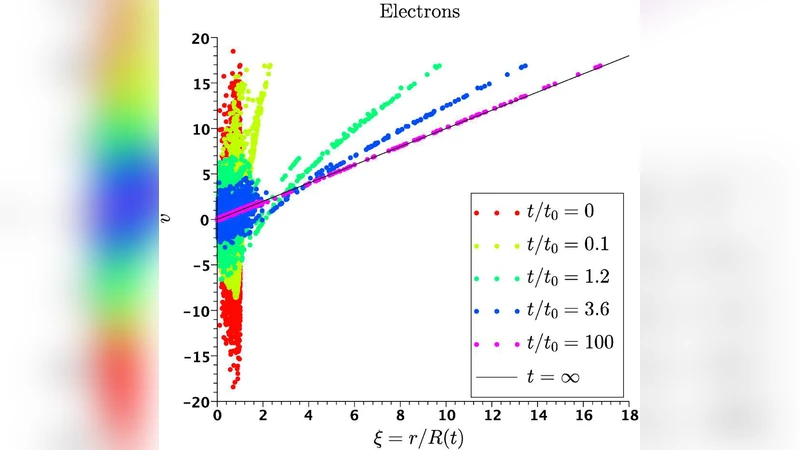

On the unconstrained expansion of a spherical plasma cloud turning collisionless: case of a cloud generated by a nanometer dust grain impact on an uncharged target in space

Fracturing highly disordered materials

Data Structures and Algorithms (342)

Deciding Monotone Duality and Identifying Frequent Itemsets in Quadratic Logspace

Rerouting shortest paths in planar graphs

Relationships in Large-Scale Graph Computing

Counting inequivalent monotone Boolean functions

Algorithms and Complexity Results for Exact Bayesian Structure Learning

Search Algorithms for Conceptual Graph Databases

The Diffusion of Networking Technologies

Upper bounds for the formula size of the majority function

Optimal Rectangle Packing: An Absolute Placement Approach

Nonlinear Systems (126)

Liouville-Arnold integrability for scattering under cone potentials

4-dimensional Frobenius manifolds and Painleve VI

A tunable macroscopic quantum system based on two fractional vortices

IST of KPII equation for perturbed multisoliton solutions

Modelling of light driven CO2 concentration gradient and photosynthetic carbon assimilation flux distribution at the chloroplast level

N-order bright and dark rogue waves in a Resonant erbium-doped Fibre system

Regularization of the Kepler problem on the Sphere

Transmission of information via the non-linear Scroedinger equation: The random Gaussian input case

Hyperkahler manifolds and nonabelian Hodge theory of (irregular) curves

Artificial Intelligence (686)

Role-Dynamics: Fast Mining of Large Dynamic Networks

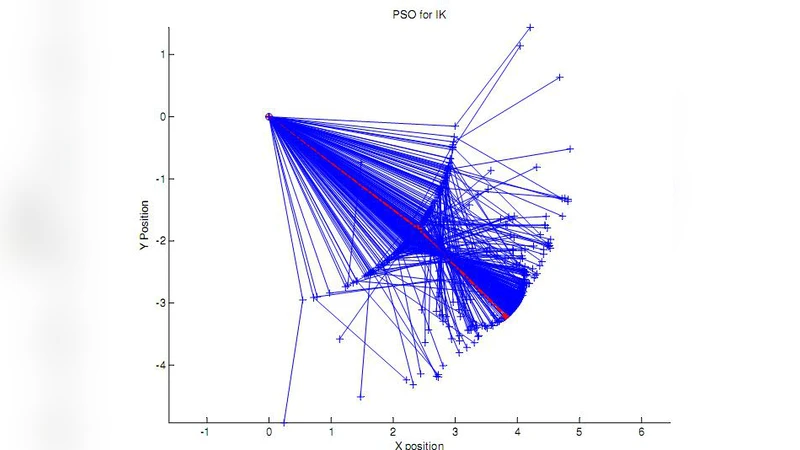

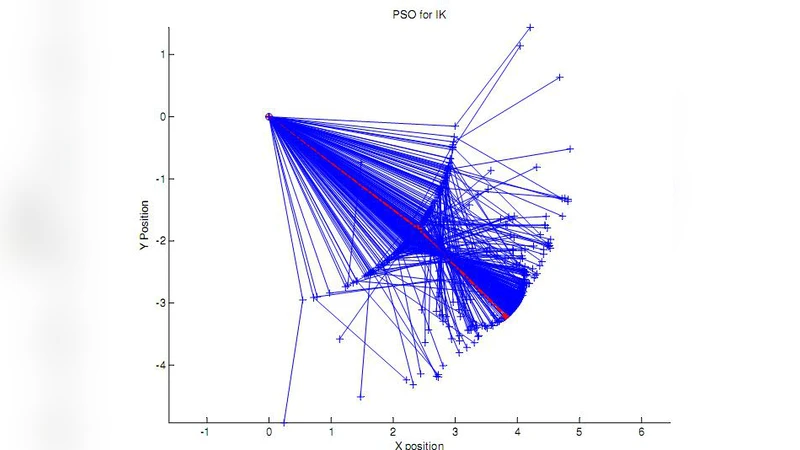

IK-PSO, PSO Inverse Kinematics Solver with Application to Biped Gait Generation

Deciding Monotone Duality and Identifying Frequent Itemsets in Quadratic Logspace

Distributed Power Allocation with SINR Constraints Using Trial and Error Learning

Critical behavior in a cross-situational lexicon learning scenario

Distribution of the search of evolutionary product unit neural networks for classification

Distributional Framework for Emergent Knowledge Acquisition and its Application to Automated Document Annotation

Modelling and simulation of complex systems: an approach based on multi-level agents

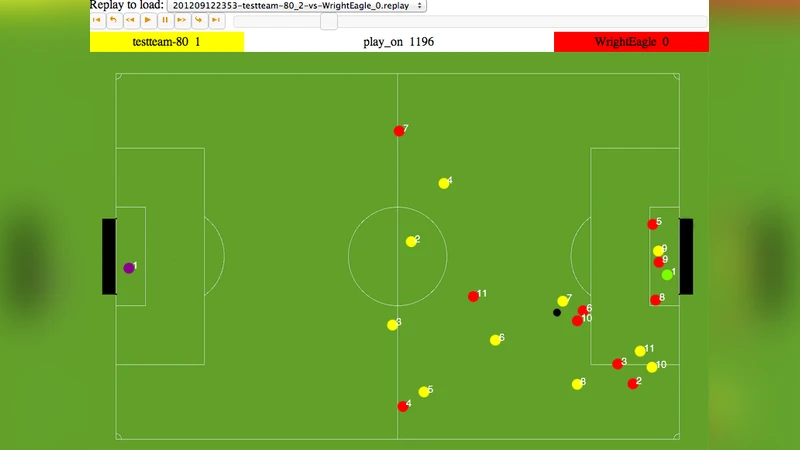

Gliders2012: Development and Competition Results

Networking (162)

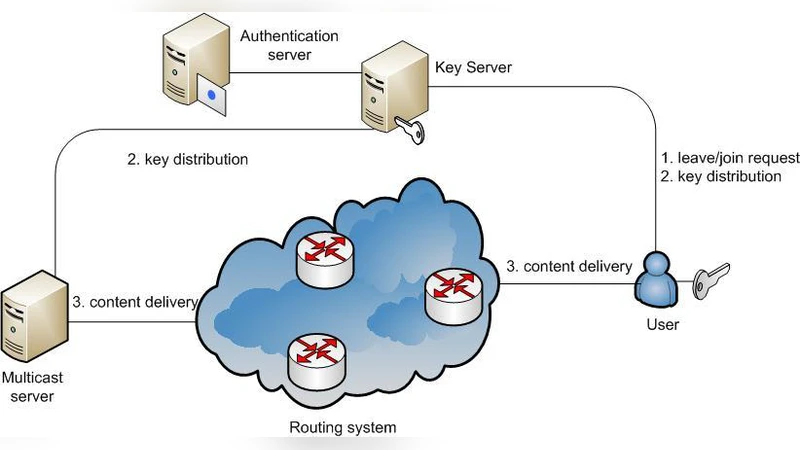

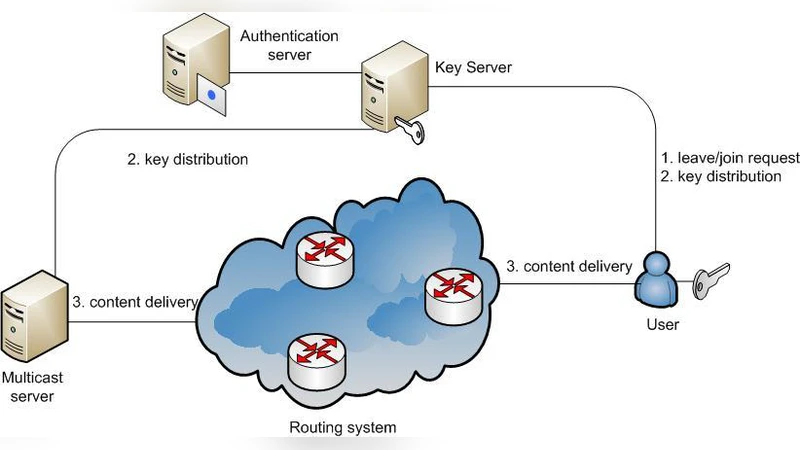

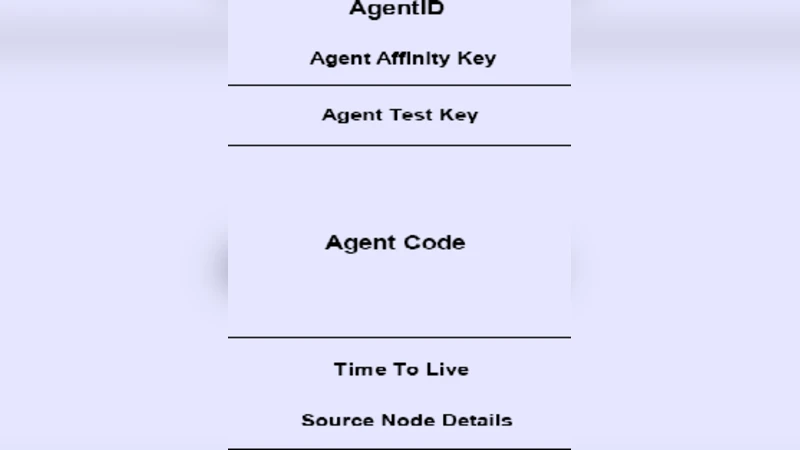

Efficient Group Key Management Schemes for Multicast Dynamic Communication Systems

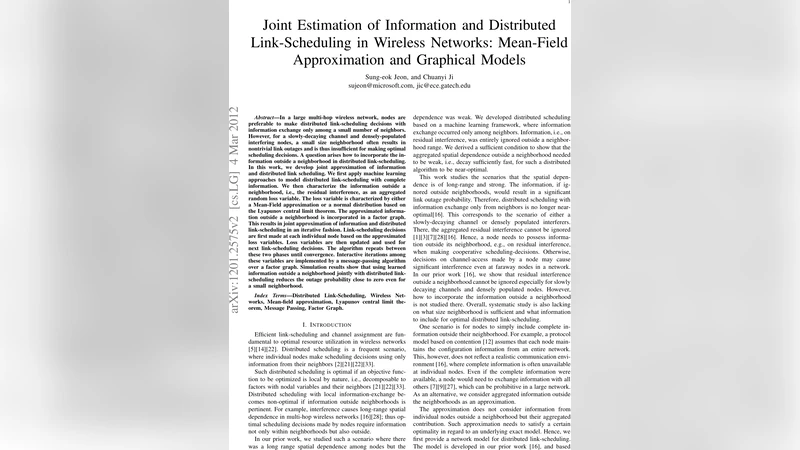

Joint Approximation of Information and Distributed Link-Scheduling Decisions in Wireless Networks

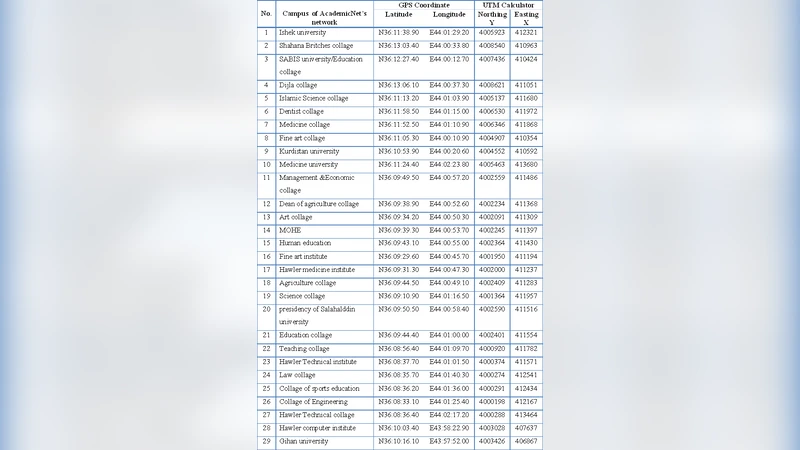

New Technique for Proposing Networks Topology using GPS and GIS

The Diffusion of Networking Technologies

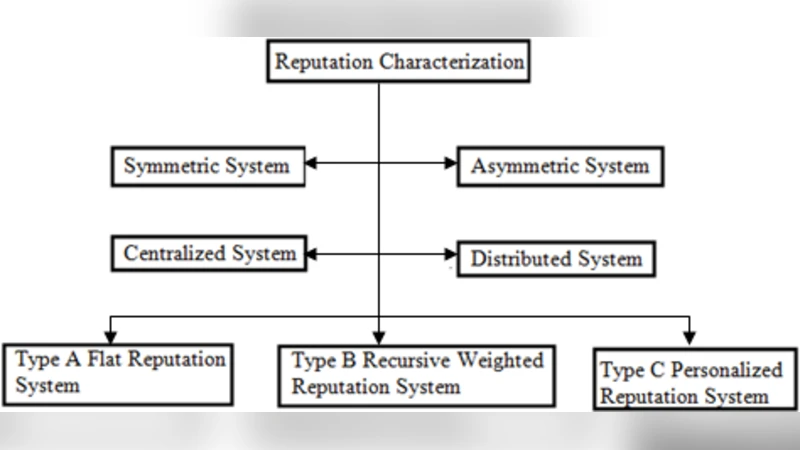

Referencing Tool for Reputation and Trust in Wireless Sensor Networks

DQSB: A Reliable Broadcast Protocol Based on Distributed Quasi-Synchronized Mechanism for Low Duty-Cycled Wireless Sensor Networks

Bottom-up Broadband Initiatives in the Commons for Europe Project

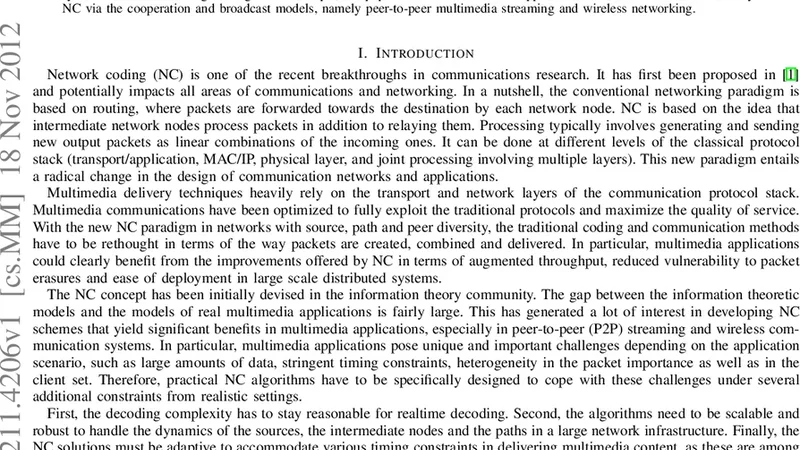

Network Coding Meets Multimedia: a Review

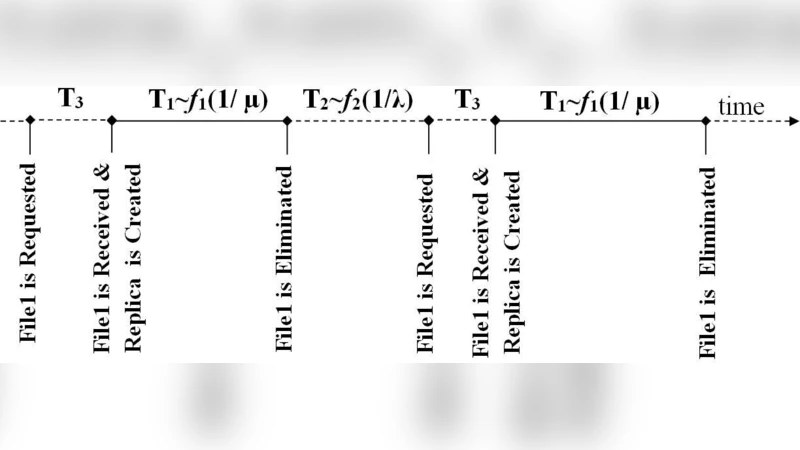

Scaling Laws of the Throughput Capacity and Latency in Information-Centric Networks

Other Categories

A Space-time Smooth Artificial Viscosity Method For Nonlinear Conservation Laws

Enhanced Community Structure Detection in Complex Networks with Partial Background Information

Mass of highly magnetized white dwarfs exceeding the Chandrasekhar limit: An analytical view

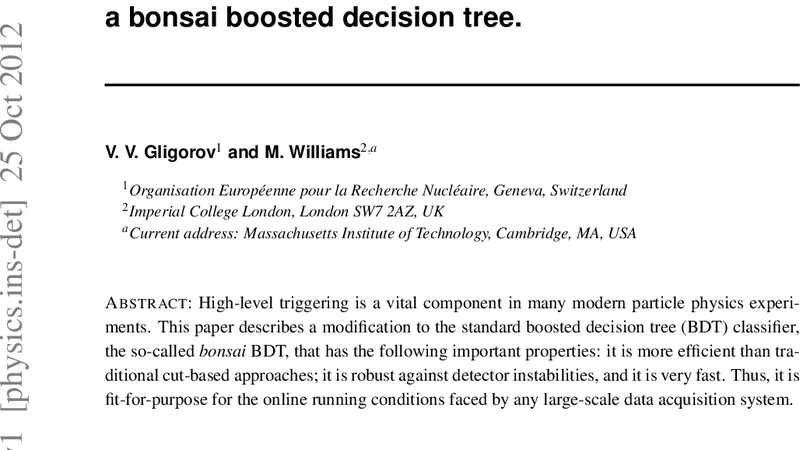

Efficient, reliable and fast high-level triggering using a bonsai boosted decision tree

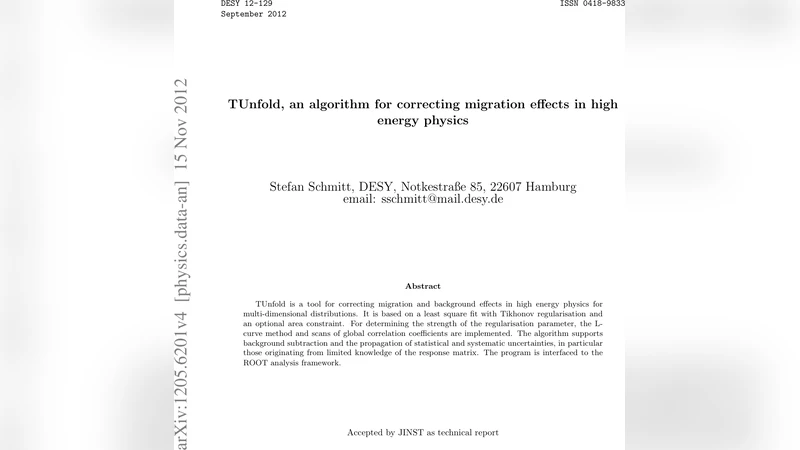

TUnfold: an algorithm for correcting migration effects in high energy physics

The Evolutionary Robustness of Forgiveness and Cooperation

Role-Dynamics: Fast Mining of Large Dynamic Networks

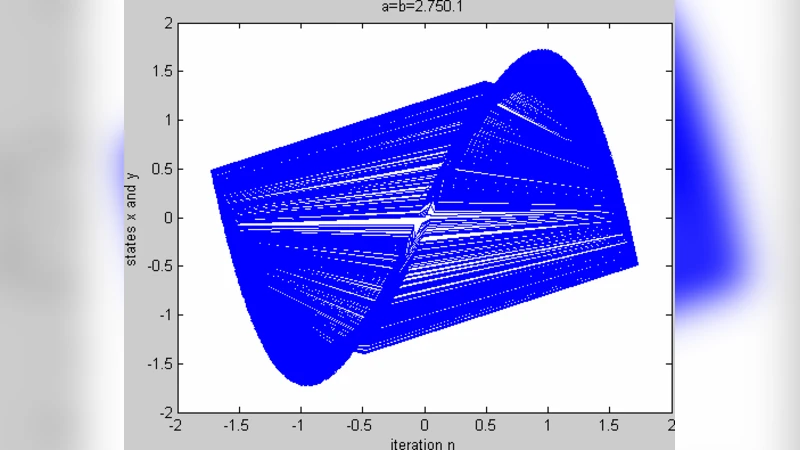

Message Embedded cipher using 2-D chaotic map

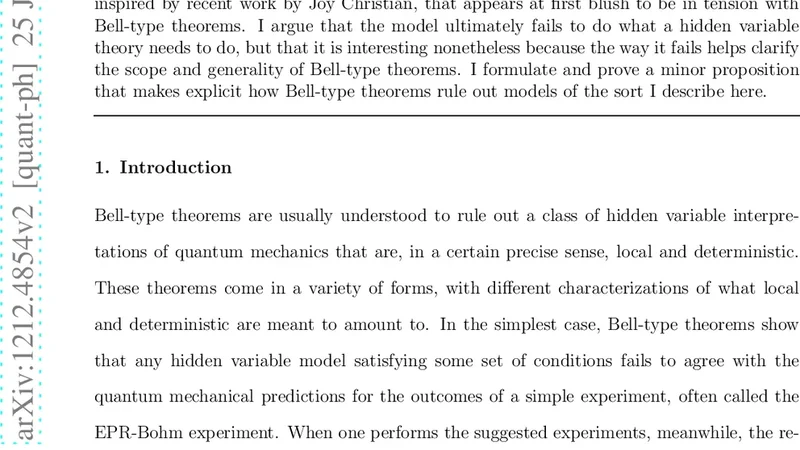

The Scope and Generality of Bell's Theorem

Bio Inspired Approach to Secure Routing in MANETs

IK-PSO, PSO Inverse Kinematics Solver with Application to Biped Gait Generation

Concrete Semantics of Programs with Non-Deterministic and Random Inputs

Irregular transcription dynamics for rapid production of high-fidelity transcripts

An efficient strategy to suppress epidemic explosion in heterogeneous metapopulation networks

Deciding Monotone Duality and Identifying Frequent Itemsets in Quadratic Logspace

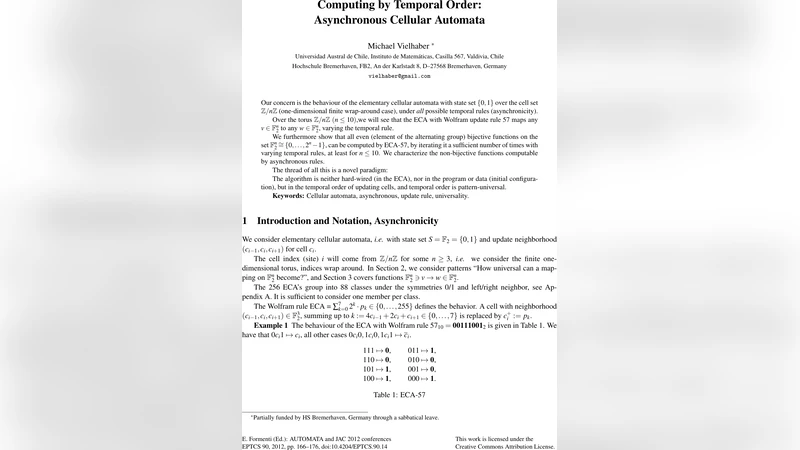

Computing by Temporal Order: Asynchronous Cellular Automata

Timed Test Case Generation Using Labeled Prioritized Time Petri Nets

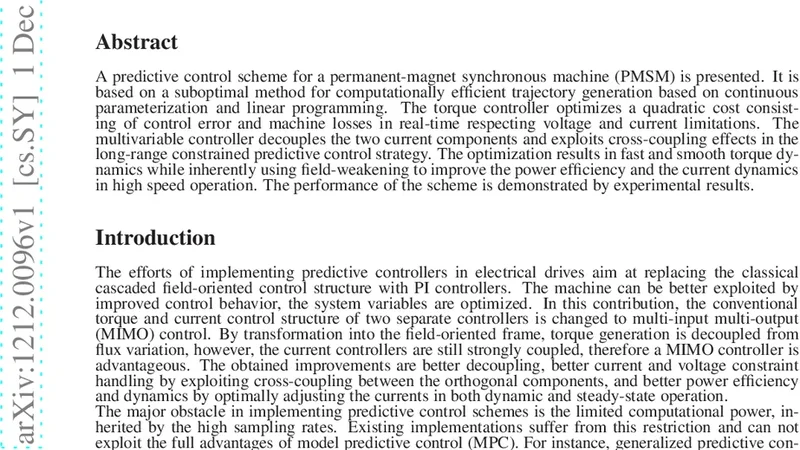

Predictive Control of a Permanent Magnet Synchronous Machine based on Real-Time Dynamic Optimization

Exploring mutexes, the Oracle RDBMS retrial spinlocks