Digital Twin AI Opportunities and Challenges from Large Language Models to World Models

📝 Original Paper Info

- Title: Digital Twin AI Opportunities and Challenges from Large Language Models to World Models

- ArXiv ID: 2601.01321

- Date: 2026-01-04

- Authors: Rong Zhou, Dongping Chen, Zihan Jia, Yao Su, Yixin Liu, Yiwen Lu, Dongwei Shi, Yue Huang, Tianyang Xu, Yi Pan, Xinliang Li, Yohannes Abate, Qingyu Chen, Zhengzhong Tu, Yu Yang, Yu Zhang, Qingsong Wen, Gengchen Mai, Sunyang Fu, Jiachen Li, Xuyu Wang, Ziran Wang, Jing Huang, Tianming Liu, Yong Chen, Lichao Sun, Lifang He

📝 Abstract

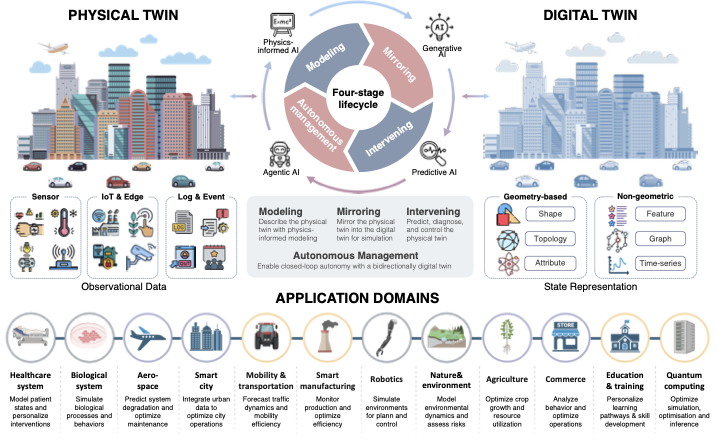

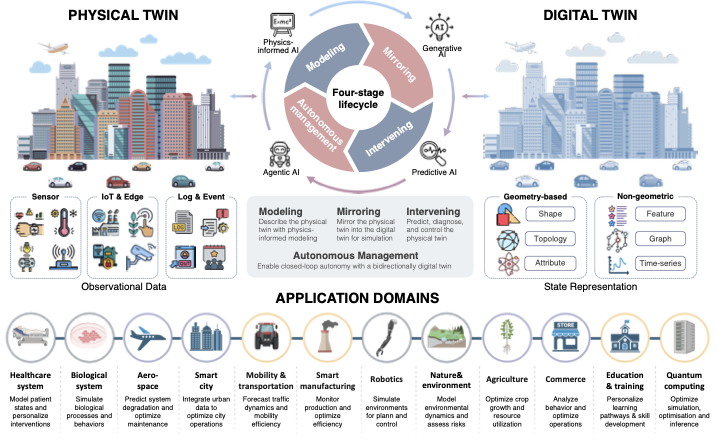

Digital twins, as precise digital representations of physical systems, have evolved from passive simulation tools into intelligent and autonomous entities through the integration of artificial intelligence technologies. By synthesizing existing technologies and practices, this paper distills a unified four-stage framework that systematically characterizes how AI methodologies are embedded across the digital twin lifecycle: (1) modeling the physical twin through physics-based and physics-informed AI approaches, (2) mirroring the physical system into a digital twin with real-time synchronization, (3) intervening in the physical twin through predictive modeling, anomaly detection, and optimization strategies, and (4) achieving autonomous management through large language models, foundation models, and intelligent agents. We analyze the synergy between physics-based modeling and data-driven learning, highlighting the shift from traditional numerical solvers to physics-informed and foundation models for physical systems. Furthermore, we examine how generative AI technologies, including large language models and generative world models, transform digital twins into proactive and self-improving cognitive systems capable of reasoning, communication, and creative scenario generation. Through a cross-domain review spanning eleven application domains, including healthcare, aerospace, smart manufacturing, robotics, and smart cities, we identify common challenges related to scalability, explainability, and trustworthiness, and outline directions for responsible AI-driven digital twin systems.

💡 Summary & Analysis

1. **Concept and Integration of Digital Twins with AI**:
   - Digital twins are precise replicas of physical systems, enhanced by AI integration.
   - For example, automotive manufacturers can significantly reduce development time and costs using digital twins.

2. **Modeling Physical Systems**:
   - Physics-based modeling is essential for understanding complex systems but becomes even more effective when combined with AI.
   - Digital twins synchronize with real-world systems to analyze and predict in real time.

3. **Impact of AI on Digital Twins**:
   - AI technologies enable automation and optimization of digital twins.
   - Large Language Models (LLMs) and generative AI transform digital twins from simple simulation tools into advanced cognitive systems capable of reasoning and imagination.

📄 Full Paper Content (ArXiv Source)


Introduction

“What I can’t create, I don’t understand.”

– Richard Feynman

Digital twins (DTs), as precise digital representations of physical twins (real-world entities or systems), are designed to maintain a bidirectional connection with their real-world counterparts, enabling state synchronization for monitoring, prediction, optimization, and decision support. Beyond mere replication, digital twins embody a paradigm shift from static digital mirrors to dynamic, continuously learning reflections of reality. Owing to the predictive analytics, dynamic system simulation, and operational optimization they provide, digital twins have been widely adopted in healthcare, biology, urban planning and management, manufacturing, and science. As NVIDIA founder and CEO Jensen Huang stated in a keynote at the Berlin Summit for the Earth Virtualization Engines initiative, AI and accelerated computing will revolutionize our understanding of complex systems. His remark points to a new era in which digital twins evolve from analytical tools into intelligent agents that learn, predict, and act upon the physical world.

Technically, a digital twin operates by integrating comprehensive sensor data from a studied object. For a wind turbine, for example, sensors are strategically placed to monitor crucial performance metrics such as energy output, temperature, and weather conditions. These data are continuously transmitted to a processing system, which applies them to a virtual replica of the physical object. Using this up-to-date digital model, various simulations can be conducted to analyze performance issues and devise potential improvements. The ultimate goal is to extract actionable insights from the simulations and apply them to the real-world object to improve its efficiency and functionality. A more familiar example of a digital twin is Google Maps, which fuses satellite imagery, GPS data, and real-time traffic to maintain a constantly updated mirror of the physical world. This continuous feedback loop between sensing, modeling, and adaptation forms the conceptual foundation of AI-driven digital twins: systems that not only reflect the world but also learn from it to guide real-world actions.
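The sense, update, and simulate loop described above can be sketched in a few lines. The example below is a minimal, hypothetical wind-turbine twin, assuming the textbook relation $`P = \tfrac{1}{2}\rho A C_p v^3`$ with constant standard air density. The class name, the single calibrated parameter (the power coefficient $`C_p`$), and the least-squares update rule are illustrative choices, not the paper's method, and the model ignores cut-in, rated, and cut-out wind speeds.

```python
import math
from dataclasses import dataclass, field

AIR_DENSITY = 1.225  # kg/m^3, standard sea-level air (assumed constant)


@dataclass
class WindTurbineTwin:
    """Hypothetical virtual replica: P = 0.5 * rho * A * Cp * v^3."""
    rotor_radius_m: float
    power_coefficient: float = 0.4  # initial guess for Cp, refined from data
    history: list = field(default_factory=list)

    def available_power_w(self, wind_speed: float) -> float:
        # Kinetic power of the wind passing through the swept rotor area.
        area = math.pi * self.rotor_radius_m ** 2
        return 0.5 * AIR_DENSITY * area * wind_speed ** 3

    def ingest(self, wind_speed: float, measured_power_w: float) -> None:
        # Mirror step: store the sensor reading and recalibrate Cp by
        # least squares through the origin, Cp = sum(P * Pa) / sum(Pa^2).
        self.history.append((wind_speed, measured_power_w))
        num = sum(p * self.available_power_w(v) for v, p in self.history)
        den = sum(self.available_power_w(v) ** 2 for v, _ in self.history)
        if den > 0:
            self.power_coefficient = num / den

    def simulate(self, wind_speed: float) -> float:
        # What-if step: predict output under a hypothetical wind condition.
        return self.power_coefficient * self.available_power_w(wind_speed)


twin = WindTurbineTwin(rotor_radius_m=40.0)
for v in (5.0, 7.0, 9.0):
    # Synthetic readings from a turbine whose true Cp is 0.35.
    twin.ingest(v, 0.35 * twin.available_power_w(v))
print(round(twin.power_coefficient, 3))
print(round(twin.simulate(8.0) / 1e3, 1), "kW")
```

Calibrating a single physically meaningful parameter keeps the replica interpretable; a learned model could replace `available_power_w` once more data and operating regimes are available.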

Over the years, the concept of digital twins has evolved significantly, increasingly integrating artificial intelligence (AI) breakthroughs to transform how we simulate and predict the behavior of physical systems. Digital twins and machine learning (ML) are closely intertwined, jointly enhancing predictive maintenance and decision-making across industries. Early systems relied on traditional ML algorithms to support predictive maintenance and fault detection. However, as data volumes and system complexity grew, deep learning (DL) emerged as the cognitive core of digital twins, enabling them to extract intricate spatiotemporal patterns and emulate complex dynamics. Architectures such as convolutional, recurrent, and graph neural networks have allowed digital twins to move from modeling observed behavior to reasoning about unobserved mechanisms. This integration marks a fundamental transition: AI is no longer merely a component within digital twins but the intelligence that animates them.


With the advent of large-scale AI models and foundation architectures, the synergy between AI and digital twins has entered an unprecedented phase. Recent breakthroughs, from LLM-based autonomous agents to world models, demonstrate how AI can emulate, reason about, and even imagine complex physical systems. For instance, an LLM-based multi-agent framework has been used to automate the parametrization of process simulations in digital twins. Moreover, NVIDIA Cosmos provides a world foundation model that generates photorealistic synthetic environments for digital twin simulations in robotics and autonomous systems.

The convergence of AI and digital twins promises not only to enhance the fidelity and responsiveness of these virtual models but also to redefine the boundary between simulation and intelligence. Augmented by adaptive learning and generative reasoning, AI-driven digital twins can anticipate faults before they occur, personalize interventions, and autonomously manage complex systems. Such capabilities herald a future in which digital twins become trustworthy, explainable, and human-aligned partners in science, industry, and healthcare.

Given this transformation, there is an urgent need to consolidate knowledge across the rapidly diversifying landscape of AI-powered digital twins. This paper provides a comprehensive, AI-centered overview of digital twin technologies. We begin by tracing the history of digital twins to establish the conceptual foundation. We then present a four-stage lifecycle that organizes how AI empowers digital twins: modeling the physical twin through physics-based methods and data integration, mirroring it into executable simulators, intervening through prediction, anomaly detection, and optimization, and ultimately achieving autonomous management via large language models and intelligent agents. Given the diverse applications of digital twins, we will examine how AI technology enhances their implementation across different domains such as healthcare, biological systems, and industry. Lastly, we will discuss the existing challenges and issues in using AI technology for digital twins and offer insightful recommendations for future research directions.

Major Contributions

To the best of our knowledge, this paper provides the first AI-centered conceptual synthesis of digital twins as evolving intelligent systems. Unlike prior domain-specific reviews, we present a unified framework that connects the physical, digital, and cognitive layers of this emerging paradigm. The contributions of this paper are summarized as follows.

- We conceptualize digital twins as evolving AI systems, distilling a four-stage lifecycle: describing the physical twin, mirroring the physical twin into the digital twin, intervening in the physical twin, and autonomously managing the physical twin. This layered perspective reveals how AI continuously enhances the fidelity, intelligence, and autonomy of digital twins.

- We provide an in-depth analysis of the integration between physics-based modeling and data-driven learning, highlighting the transition from traditional numerical methods to physics-informed neural networks, neural operators, and foundation models for physical systems. This synthesis clarifies how physical principles and learning algorithms can jointly improve interpretability, generalization, and reliability in digital twin modeling.

- We analyze the rapid development of generative AI, including large language models, diffusion models, and world simulators, and examine their role in enabling reasoning, communication, and imagination within digital twins. These technologies transform digital twins from passive simulation tools into proactive, self-improving cognitive systems capable of autonomous understanding and creative scenario generation.

- Through an extensive review of eleven application domains, we identify both universal challenges, such as scalability, explainability, and trustworthiness, and domain-specific requirements in areas including healthcare, aerospace, energy, and education. These observations reveal how AI-driven digital twins are evolving toward more intelligent, interoperable, and ethically responsible ecosystems, highlighting key directions for future exploration and interdisciplinary collaboration.

Organization

To guide readers through this interdisciplinary synthesis, the remainder of this paper is organized into seven main sections. Section 2, History of Digital Twins, reviews the conceptual origins and technological evolution of digital twin systems. Sections 3–6 form the methodological core of this work, presenting a progressive framework that models, mirrors, intervenes in, and autonomously manages the physical world through its digital counterpart. Specifically, Section 3, Modeling the Physical Twin, describes how physical systems are represented through physics-based and data-driven modeling. Section 4, Mirroring the Physical Twin into the Digital Twin, explains how these models are instantiated and visualized within virtual simulators. Section 5, Intervening in the Physical Twin via the Digital Twin, focuses on predictive modeling, anomaly detection, and optimization techniques that enable human-in-the-loop decision-making. Section 6, Towards Autonomous Management of the Digital Twin, advances this paradigm toward AI-driven autonomy, highlighting large language models, foundation models, and intelligent agents as enablers of self-managing digital twins. Section 7, Applications, demonstrates how these methodological principles are applied across diverse domains such as healthcare, aerospace, smart manufacturing, and robotics, illustrating the broad impact of digital twin technologies on real-world systems. Finally, Section 8, Open Challenges and Future Directions, discusses key open problems and outlines future research directions for building scalable, trustworthy, and autonomous digital twin systems.

History of Digital Twins

The concept of the digital twin was formally introduced in 2002 by Michael Grieves during a presentation at the University of Michigan. The presentation proposed a product lifecycle management center that integrated real and virtual spaces, together with the data flows between them, to enhance efficiency and innovation in product development and management. While the terminology around digital twins has evolved over the years, the fundamental idea of linking a physical twin with its digital counterpart has remained constant.

Interestingly, the practice of digital twinning dates back to the 1960s, long before the term was coined. NASA was among the early adopters, using basic forms of digital twins for space missions. One notable example is the Apollo 13 mission, where simulations based on digital twin concepts played a critical role in bringing the crew safely back to Earth. These early applications demonstrated the potential of digital twins to enhance design, maintenance, and operational efficiency across various industries.

Companies such as Rolls-Royce have been pioneers in adopting digital twin technology, using digital twins to customize repair processes for engine parts and to automate and optimize maintenance based on the specific geometries of these components. Similarly, in the aerospace industry, Boeing employed digital twins for the 787 Dreamliner’s battery systems to improve safety and manage risks more effectively. Airbus has also embraced this technology, using digital twins of its A350 XWB aircraft for real-time performance monitoring, which has led to substantial improvements in fuel efficiency and reductions in emissions.

Recent advances in digital twin technology continue to drive significant innovation across multiple industries. In manufacturing, healthcare, construction, automotive, and urban planning, digital twins are becoming indispensable tools. For instance, Tesla has harnessed digital twins to accelerate vehicle development, while the Mayo Clinic has used them to advance personalized medicine. Additionally, architects and city planners are leveraging digital twins to enhance project management and urban development, integrating AI and IoT to optimize resource use and improve outcomes.

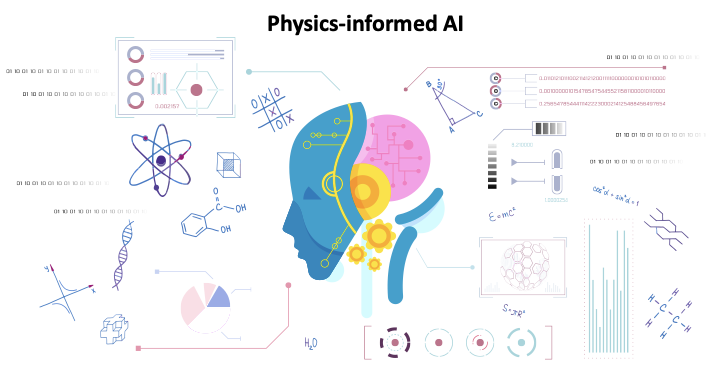

Modeling the Physical Twin

In today’s technological landscape, the integration of artificial intelligence (AI) with digital twins is gaining widespread attention and driving diverse applications. AI technologies have the potential to enhance the intelligence and autonomy of digital twins. For example, compared with traditional physics-based methods, physics-informed AI systems can improve digital twins in several ways, such as automating the modeling process and improving computational efficiency. Furthermore, by learning from both sensor data of physical systems and simulation data, AI can provide more effective and efficient prediction and fault detection. In addition, over the past two years, generative AI and large language models (LLMs) have profoundly impacted digital twins, particularly in simulation-related tasks. Ongoing advances in AI continue to push the boundaries of what digital twins can achieve, heralding a new era of smart, interconnected systems across industries.

Physical System Modeling

Physics knowledge has long been a foundation of traditional digital twins, providing essential tools for understanding and predicting complex systems through mathematical representations of physical laws. Physics-informed AI addresses a range of limitations inherent in traditional physics-based methods by combining AI techniques with physical knowledge. In this section, we review physics-based methods used in digital twins, highlight their limitations, and summarize new insights from recent physics-informed AI models.


Fundamental Physics-Based Methods

Before the advent of AI, researchers predominantly relied on physics-based methods for simulation, prediction, analysis, and control in digital twins, with computational outcomes derived from numerical methods. In this section, we introduce several important components of this paradigm: constructing partial differential equations (PDEs), solving these PDEs with numerical methods, and quantifying the uncertainty of the results.

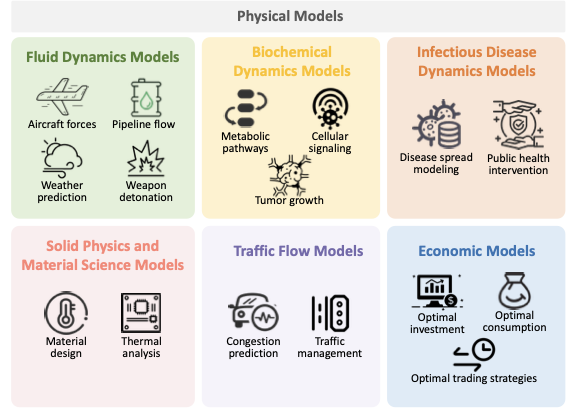

Partial Differential Equations (PDEs). Computational physics has long been a foundational discipline for building traditional digital twin simulations, focusing primarily on numerical solutions of PDEs. For instance, constructing simulators for fluid phenomena often requires solving the Navier-Stokes equations. These equations have been used extensively in simulators for aerospace, aircraft, weather, and oil pipelines, all of which involve fluid dynamics. Similarly, in solid mechanics and materials science, heat equations are used to simulate heat conduction, and plasticity equations are used to simulate stress variations within materials. These simulations are then applied to create virtual twins for architecture, manufacturing, and other industries. In addition, reaction-diffusion equations are commonly used to model the diffusion of substances or signals, such as in biochemical reactions and tumor growth. These equations play a crucial role in digital twin simulations, especially in the health industry and for biological entities. Beyond natural phenomena, differential equations are also commonly used to model social phenomena. For instance, the spread of infectious diseases is often described by the Susceptible-Infectious-Recovered (SIR) model, a system of ordinary differential equations whose spatial extensions take the form of reaction-diffusion PDEs; traffic flow models are employed to represent traffic conditions and predict congestion. In economics and finance, investment strategies and option pricing are frequently described using the Hamilton-Jacobi-Bellman equation and the Black-Scholes equation, respectively.
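For reference, the standard textbook forms of three of the PDEs named above are written out below. The symbols are introduced here for illustration and are not taken from the paper: $`u`$ is the modeled field, $`\alpha`$ the thermal diffusivity, $`D`$ a diffusion coefficient, and $`f(u)`$ a reaction term (a logistic choice $`f(u) = \rho u (1 - u)`$ is common in tumor-growth models). In the Black-Scholes equation, $`V(S, t)`$ is the option value, $`S`$ the price of the underlying asset, $`\sigma`$ its volatility, and $`r`$ the risk-free interest rate.

\begin{equation}
\frac{\partial u}{\partial t} = \alpha \nabla^2 u \qquad \text{(heat equation)}
\end{equation}

\begin{equation}
\frac{\partial u}{\partial t} = D \nabla^2 u + f(u) \qquad \text{(reaction-diffusion equation)}
\end{equation}

\begin{equation}
\frac{\partial V}{\partial t} + \frac{1}{2}\sigma^2 S^2 \frac{\partial^2 V}{\partial S^2} + r S \frac{\partial V}{\partial S} - r V = 0 \qquad \text{(Black-Scholes equation)}
\end{equation}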

Numerical Methods. To obtain accurate numerical solutions of the equations in computational physics, a discretization step is necessary: the domain is divided into a discrete grid, and the solution is approximated at the grid points. Based on this strategy, several numerical methods have been developed, each tailored to specific simulation challenges. The finite difference method is simple to implement, approximating derivatives at grid points by differences of function values; however, it often lacks precision and efficiency. Spectral methods, in contrast, represent the solution globally using basis functions, offering very high resolution (i.e., spectral accuracy). Despite their high precision, spectral methods are generally suitable only for relatively regular domains, which limits their applicability to many downstream tasks. The finite element method (FEM) divides the domain into small elements, such as triangles or tetrahedra, and approximates the solution within each element using low-order polynomials. Owing to its flexible meshing strategy, FEM can adapt to a wide range of scenarios and complex real-world geometries. A notable example of mesh-based discretization in large-scale simulation is the ICOsahedral Nonhydrostatic (ICON) model, which starts from an icosahedron with 20 triangular faces and refines it into a global triangular grid on which the numerical solution is computed (its dynamical core uses finite-volume and finite-difference discretizations on this grid rather than classical FEM). The ICON model demonstrates the power of such discretizations by converting the set of PDEs that describe atmospheric conditions into algebraic equations and solving them on a supercomputer. This approach has been integrated into NVIDIA’s Earth-2 system.
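To make the discretization idea concrete, the sketch below applies the finite difference method to the 1D heat equation $`u_t = \alpha u_{xx}`$ on $`[0, 1]`$ with zero boundary values, using the explicit forward-time, centered-space (FTCS) scheme. The test problem, the initial condition $`\sin(\pi x)`$, and the step-size rule are illustrative assumptions; the known exact solution $`e^{-\alpha \pi^2 t} \sin(\pi x)`$ lets us measure the discretization error.

```python
import math


def heat_ftcs(alpha, nx, t_end, cfl=0.4):
    """Solve u_t = alpha * u_xx on [0, 1], u(0,t) = u(1,t) = 0, u(x,0) = sin(pi x),
    with the explicit FTCS finite-difference scheme on nx grid points."""
    dx = 1.0 / (nx - 1)
    # The explicit scheme is stable only if r = alpha * dt / dx^2 <= 0.5.
    dt = cfl * dx * dx / alpha
    steps = max(1, math.ceil(t_end / dt))
    dt = t_end / steps  # shrink dt slightly so the last step lands exactly on t_end
    r = alpha * dt / (dx * dx)
    x = [i * dx for i in range(nx)]
    u = [math.sin(math.pi * xi) for xi in x]
    for _ in range(steps):
        # (u[i+1] - 2u[i] + u[i-1]) / dx^2 approximates u_xx at interior points.
        interior = [u[i] + r * (u[i + 1] - 2.0 * u[i] + u[i - 1]) for i in range(1, nx - 1)]
        u = [0.0] + interior + [0.0]  # Dirichlet boundary conditions
    return x, u


x, u = heat_ftcs(alpha=1.0, nx=51, t_end=0.1)
exact = [math.exp(-math.pi ** 2 * 0.1) * math.sin(math.pi * xi) for xi in x]
print("max error:", max(abs(a - b) for a, b in zip(u, exact)))
```

The stability limit on $`r`$ is the main practical drawback of explicit schemes: halving $`\Delta x`$ forces a fourfold smaller time step, which is one reason implicit schemes, FEM, and learned surrogates are attractive for large digital twin simulations.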

Uncertainty Quantification. Simulation in digital twins aims to achieve a precise one-to-one correspondence between a physical system and its virtual representation, which underscores the importance of quantifying the uncertainties inherent in both physical measurements and computational models. The primary techniques for quantifying uncertainty and its propagation in simulators include Monte Carlo methods, Bayesian inference, and sensitivity analysis. Monte Carlo methods randomly sample input parameters to build a distribution of possible outcomes, from which output uncertainties can be estimated. They are particularly effective for complex and high-dimensional problems and can be further enhanced with variance reduction techniques, such as importance sampling and stratified sampling, that focus on the most critical parts of the input space. Bayesian inference, on the other hand, uses Bayes’ theorem to update the probability distribution of model parameters based on prior knowledge and new data. This approach provides a systematic framework for incorporating uncertainty into model predictions, allowing the model to be refined as more data become available. Techniques such as Markov chain Monte Carlo (MCMC) are crucial in this context, as they make Bayesian inference practical by approximating posterior distributions in high-dimensional spaces. Finally, sensitivity analysis evaluates how variations in input parameters affect model outputs and identifies the parameters that most strongly influence the results. Global sensitivity analysis methods, such as Sobol indices, offer a comprehensive view of how input uncertainties propagate through the model, highlighting the most influential parameters and guiding efforts to reduce uncertainty.
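The Monte Carlo and variance-based sensitivity ideas above can be illustrated on a toy model. The sketch below assumes a hypothetical linear model $`y = 2x_1 + x_2`$ with independent inputs $`x_1, x_2 \sim U(0, 1)`$ standing in for an expensive simulator. It propagates input uncertainty by plain Monte Carlo sampling and estimates first-order Sobol indices with a pick-freeze estimator of the Saltelli form $`S_i \approx \frac{1}{N}\sum_j f(B)_j \big(f(A_B^{(i)})_j - f(A)_j\big) / \operatorname{Var}(Y)`$. For this model the exact indices are $`S_1 = 0.8`$ and $`S_2 = 0.2`$, which makes the estimates easy to check.

```python
import random
import statistics


def toy_model(x1, x2):
    # Hypothetical simulator output; stands in for an expensive digital twin run.
    return 2.0 * x1 + 1.0 * x2


def monte_carlo_propagation(model, n, seed=0):
    """Sample uncertain inputs, run the model, and summarize the output distribution."""
    rng = random.Random(seed)
    ys = [model(rng.random(), rng.random()) for _ in range(n)]
    return statistics.fmean(ys), statistics.pstdev(ys)


def sobol_first_order(model, n, dim=2, seed=0):
    """Pick-freeze Monte Carlo estimate of first-order Sobol indices."""
    rng = random.Random(seed)
    A = [[rng.random() for _ in range(dim)] for _ in range(n)]
    B = [[rng.random() for _ in range(dim)] for _ in range(n)]
    fA = [model(*a) for a in A]
    fB = [model(*b) for b in B]
    var_y = statistics.pvariance(fA + fB)
    indices = []
    for i in range(dim):
        # A_B^(i): rows of A with only column i replaced by the matching column of B.
        fABi = [model(*(b[k] if k == i else a[k] for k in range(dim))) for a, b in zip(A, B)]
        s_i = statistics.fmean(fb * (fab - fa) for fb, fab, fa in zip(fB, fABi, fA)) / var_y
        indices.append(s_i)
    return indices


mean, std = monte_carlo_propagation(toy_model, n=50_000)
print(f"mean={mean:.3f}, std={std:.3f}")  # analytic values: 1.5 and sqrt(5/12)
print([round(s, 2) for s in sobol_first_order(toy_model, n=50_000)])  # analytic: [0.8, 0.2]
```

Each index costs $`N`$ extra model runs, so in practice the model is usually a cheap surrogate of the full simulator.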

Physics-Informed AI Models

In recent years, the rapid advancement of AI technologies has introduced innovative methodologies to address modeling and computation challenges in traditional physics-based methods. One approach uses AI to explicitly extract the underlying PDEs from data for subsequent modeling. Another embeds some or all of the known physical knowledge directly into AI models. Additionally, AI-assisted computation can reduce computational costs by enhancing numerical computation steps with AI techniques. Together, these strategies streamline modeling and computation and improve their efficiency.

PDE Discovery for Modeling. In traditional digital twin modeling, a significant challenge arises when certain physical laws are unclear or only partially understood, making it difficult to establish accurate mathematical models. This situation is particularly common in complex systems, such as turbulence, multiphase flows, and materials science. To address these challenges, researchers have begun to use AI techniques to learn the underlying physical knowledge and models directly from data. One of the early and highly influential works is by Brunton et al., who introduced the Sparse Identification of Nonlinear Dynamics (SINDy) method, which discovers governing equations from time-series data. The algorithm rests on the assumption that physical laws are often simple, so the governing equations involve only a few terms; that is, their coefficients over a library of candidate functions are sparse. By lifting the state into a high-dimensional library of candidate nonlinear functions (an idea related to Koopman operator theory), SINDy transforms the problem of finding low-dimensional governing equations into a sparse linear regression problem. Subsequent studies have extended SINDy to a wider range of scenarios, including the discovery of PDEs, handling noisy data, handling multiscale physics, and jointly processing control inputs. In particular, recent research has begun to use SINDy to construct simulators for digital twins in application domains such as manufacturing, chemical engineering, and other industrial scenarios. More recently, researchers have continued to advance PDE discovery from data using deep learning. Early influential works followed two parallel trajectories: numerical-method-based approaches such as PDE-Net, and symbolic-regression-based approaches. Later studies, such as PDE-Net 2.0, combined numerical methods with symbolic approaches. Additionally, a series of works has combined deep learning with SINDy to broaden its applicability and extend it to higher-dimensional problems.
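The core of SINDy can be sketched with sequentially thresholded least squares (STLSQ), the sparse regression procedure used in the original SINDy work: regress estimated derivatives onto a library of candidate functions, zero out small coefficients, and refit on the surviving terms. The sketch below is a minimal, dependency-free illustration under stated assumptions: a one-dimensional system, a polynomial library $`[1, x, x^2, x^3]`$, synthetic data from the logistic equation $`\dot{x} = x - x^2`$, central-difference derivative estimates, and a threshold of 0.1. A practical implementation would rely on a numerical linear algebra library such as NumPy rather than the hand-written normal-equation solver below.

```python
import math


def lstsq(theta, y):
    """Least squares via the normal equations and Gaussian elimination (small problems only)."""
    p = len(theta[0])
    # Augmented normal-equation system [Theta^T Theta | Theta^T y].
    m = [[sum(r[i] * r[j] for r in theta) for j in range(p)]
         + [sum(r[i] * yi for r, yi in zip(theta, y))] for i in range(p)]
    for col in range(p):
        pivot = max(range(col, p), key=lambda row: abs(m[row][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, p):
            factor = m[r][col] / m[col][col]
            for c in range(col, p + 1):
                m[r][c] -= factor * m[col][c]
    coef = [0.0] * p
    for i in reversed(range(p)):
        coef[i] = (m[i][p] - sum(m[i][j] * coef[j] for j in range(i + 1, p))) / m[i][i]
    return coef


def stlsq(theta, y, threshold=0.1, max_iter=10):
    """Sequentially thresholded least squares: fit, prune small terms, refit."""
    p = len(theta[0])
    active = list(range(p))
    coef = [0.0] * p
    for _ in range(max_iter):
        sol = lstsq([[row[j] for j in active] for row in theta], y)
        coef = [0.0] * p
        for j, value in zip(active, sol):
            coef[j] = value
        keep = [j for j in active if abs(coef[j]) >= threshold]
        if keep == active:
            break
        active = keep
    return coef


# Synthetic data from the logistic equation dx/dt = x - x^2 with x(0) = 0.1.
dt = 0.01
xs = [1.0 / (1.0 + 9.0 * math.exp(-k * dt)) for k in range(1001)]
# Central differences estimate dx/dt at interior time points.
dxdt = [(xs[k + 1] - xs[k - 1]) / (2 * dt) for k in range(1, len(xs) - 1)]
library = [[1.0, x, x ** 2, x ** 3] for x in xs[1:-1]]
print([round(c, 3) for c in stlsq(library, dxdt)])  # coefficients of [1, x, x^2, x^3]
```

The threshold encodes the sparsity assumption: it must sit above the noise-induced coefficients but below the smallest true coefficient, which is why noisy data motivated the robust SINDy extensions mentioned above.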

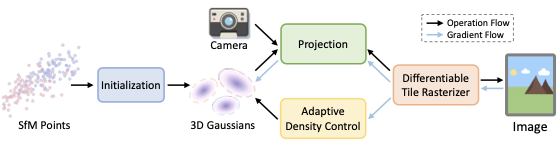

Solving PDEs for Simulation. Besides using AI to explicitly uncover the underlying physical knowledge and mathematical models from data, another approach embeds some or all of the known PDE-based physical knowledge into AI models, combining the flexibility of data-driven methods with the interpretability of physical models to solve PDEs. The best-known work in this direction is Physics-Informed Neural Networks (PINNs). By incorporating the PDEs directly into the loss function as penalty terms, PINNs use physical information as a soft constraint on the neural network outputs.

\begin{equation}

\mathcal{L}_{\text{PINN}} = \lambda_{\text{data}} \mathcal{L}_{\text{data}} + \lambda_{\text{PDE}} \mathcal{L}_{\text{PDE}}

\end{equation}
where $`\lambda_{\text{data}}`$ and $`\lambda_{\text{PDE}}`$ are weights that balance the contributions of the two terms. The PDE loss term often consists of two parts: one penalizes the residual of the governing equations, and the other penalizes violations of the boundary conditions.

\begin{equation}

\mathcal{L}_{\text{PDE}}=\mathcal{L}_{\text{physics}}+\mathcal{L}_{\text{boundary}}=\frac{1}{M} \sum_{j=1}^{M} \left( \mathcal{N}[u](x_j, t_j) \right)^2+\frac{1}{P} \sum_{k=1}^{P} \left( \mathcal{B}[u](x_k, t_k) - g(x_k, t_k) \right)^2

\end{equation}
where $`\mathcal{N}[u](x_j, t_j)`$ is the residual of the PDE at the $`M`$ collocation points $`(x_j, t_j)`$, and $`\mathcal{B}[u] = g`$ is the boundary condition, enforced at $`P`$ boundary points $`(x_k, t_k)`$. Subsequently, researchers developed PINN variants that improve performance and applicability from different perspectives: Variational PINNs introduced a variational formulation to improve training stability and accuracy, Conservative PINNs enforced the conservation of physical quantities, Adaptive PINNs employed adaptive activation functions to improve learning efficiency, and Probabilistic PINNs incorporated probabilistic models to quantify predictive uncertainty. PINNs and their variants have been applied in a wide range of simulation domains, such as fluid dynamics, structural mechanics, and biomedical engineering.
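As a minimal sketch of how the loss terms combine, the code below evaluates $`\mathcal{L}_{\text{PINN}}`$ for a candidate solution of the 1D heat equation $`u_t = \alpha u_{xx}`$, so that $`\mathcal{N}[u] = u_t - \alpha u_{xx}`$, with boundary condition $`u(0, t) = u(1, t) = 0`$. Several simplifications are assumptions made for illustration: the candidate is a plain Python function rather than a neural network, derivatives are taken by central finite differences instead of automatic differentiation, and the observations are synthetic samples of the exact solution. Training an actual PINN would minimize this loss over network parameters with an autodiff framework.

```python
import math
import random


def pinn_loss(u, alpha, colloc, boundary, data, lam_data=1.0, lam_pde=1.0, h=1e-3):
    """L_PINN = lam_data * L_data + lam_pde * (L_physics + L_boundary) for u_t = alpha * u_xx."""

    def residual(x, t):
        # N[u](x, t) = u_t - alpha * u_xx, approximated by central finite differences.
        u_t = (u(x, t + h) - u(x, t - h)) / (2 * h)
        u_xx = (u(x + h, t) - 2 * u(x, t) + u(x - h, t)) / (h * h)
        return u_t - alpha * u_xx

    l_physics = sum(residual(x, t) ** 2 for x, t in colloc) / len(colloc)
    l_boundary = sum((u(x, t) - g) ** 2 for x, t, g in boundary) / len(boundary)
    l_data = sum((u(x, t) - obs) ** 2 for x, t, obs in data) / len(data)
    return lam_data * l_data + lam_pde * (l_physics + l_boundary)


ALPHA = 1.0


def exact(x, t):
    # Known solution of the heat equation with these boundary conditions.
    return math.exp(-ALPHA * math.pi ** 2 * t) * math.sin(math.pi * x)


def wrong(x, t):
    # Satisfies the boundary conditions but decays at the wrong rate.
    return math.exp(-t) * math.sin(math.pi * x)


rng = random.Random(0)
colloc = [(rng.random(), rng.random()) for _ in range(200)]                       # M = 200
boundary = [(side, rng.random(), 0.0) for side in (0.0, 1.0) for _ in range(20)]  # P = 40, g = 0
data = [(x, t, exact(x, t)) for x, t in colloc[:20]]                              # synthetic observations

print(pinn_loss(exact, ALPHA, colloc, boundary, data))
print(pinn_loss(wrong, ALPHA, colloc, boundary, data))
```

The candidate that ignores the PDE's decay rate still satisfies the boundary term, so it is the physics residual that exposes it; this is exactly the role of $`\mathcal{L}_{\text{physics}}`$.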

Neural operators have recently emerged as another class of methods for embedding physical knowledge. The earliest work is DeepONet, which leveraged the universal approximation theorem for operators to learn mappings between function spaces (for example, from an initial condition or source term to the corresponding PDE solution) rather than a single solution of a PDE. This approach sparked the concept of neural operators. The Fourier Neural Operator (FNO) further improved performance by parameterizing integral kernels in Fourier space and truncating high-frequency modes across multiple Fourier layers. An advanced development in this line is FourCastNet, which is used for weather forecasting and has been integrated into NVIDIA’s Earth-2 system. Other FNO-based variants include the Adaptive FNO (AFNO), which serves as FourCastNet’s backbone, and Multiwavelet Fourier Feature Operators (MWFF). Both neural operators and PINNs concentrate most of their computational effort in the training phase. Once trained, these physics-informed AI models can generate predictions at remarkable speeds, often orders of magnitude faster than conventional numerical solvers. This shift in the computational paradigm offers significant advantages in scenarios requiring repeated simulations or real-time predictions. For instance, Lu et al. demonstrated that their physics-informed DeepONet could solve partial differential equations up to 1000 times faster than traditional numerical methods. Similarly, Hennigh et al. showed that AI-based turbulence models could accelerate CFD simulations by up to two orders of magnitude. This dramatic reduction in inference time not only enables rapid what-if analyses and design optimization but also opens new possibilities for real-time control and decision-making in complex systems. However, training these AI models can be computationally intensive and may require significant amounts of data or carefully designed loss functions that incorporate physical constraints.
Nevertheless, the potential for rapid and accurate predictions makes physics-informed AI models an increasingly attractive option for a wide range of digital twin tasks.
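The Fourier-layer idea behind the FNO can be sketched in a few lines: transform the input to the frequency domain, keep only the lowest modes multiplied by (learnable) complex weights, and transform back. The sketch below is a simplified, single-channel illustration under stated assumptions: it uses a naive $`O(n^2)`$ discrete Fourier transform instead of an FFT library, fixes the weights by hand instead of learning them, and omits the pointwise linear path and nonlinearity that a full FNO layer adds. With all retained weights set to 1, the layer acts as a low-pass filter, which makes the mode truncation easy to see.

```python
import cmath
import math


def dft(x):
    n = len(x)
    return [sum(x[k] * cmath.exp(-2j * math.pi * f * k / n) for k in range(n)) for f in range(n)]


def idft(X):
    n = len(X)
    return [sum(X[f] * cmath.exp(2j * math.pi * f * k / n) for f in range(n)) / n for k in range(n)]


def spectral_conv_1d(v, weights):
    """FNO-style spectral convolution on a real signal: keep modes 0..len(weights)-1,
    scale each by a weight, and zero out all higher frequencies.
    Assumes len(weights) < len(v) / 2 and a real-valued weights[0]."""
    n = len(v)
    V = dft(v)
    out = [0j] * n
    for f, w in enumerate(weights):
        out[f] = w * V[f]
        if f > 0:
            # Mirror onto the matching negative frequency so the output stays real.
            out[n - f] = w.conjugate() * V[n - f]
    return [z.real for z in idft(out)]


n = 64
signal = [math.sin(2 * math.pi * 2 * k / n) + 0.5 * math.sin(2 * math.pi * 20 * k / n) for k in range(n)]
filtered = spectral_conv_1d(signal, weights=[1.0] * 8)  # keep modes 0..7 only
low = [math.sin(2 * math.pi * 2 * k / n) for k in range(n)]
print(max(abs(a - b) for a, b in zip(filtered, low)))  # the mode-20 component is removed
```

Because the weights act on a fixed number of Fourier modes rather than on grid points, the same layer can be applied to inputs sampled at different resolutions, which is one reason neural operators suit digital twin surrogates.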

Observational Data Integration

A key challenge in modeling the physical twin is ensuring that real-world observations can be effectively incorporated into the model. This process unfolds in two steps. Section 3.2.1 addresses acquisition and alignment, where heterogeneous data from sensors, IoT devices, or logs are cleaned, synchronized, and transformed into consistent observational evidence. Section 3.2.2 then focuses on data assimilation, the stage where these prepared observations are combined with the model to update its states and parameters. In other words, acquisition and alignment ensure that the data are trustworthy and comparable, while assimilation ensures that the model itself adapts to the incoming evidence. Together, they form the methodological bridge that keeps the physical twin closely tied to the evolving reality.

Acquisition and Alignment

The construction of digital twins begins with the acquisition of observational data, which forms the bridge between physical systems and computational models. In practice, data originate from diverse sources, and their heterogeneity introduces challenges of noise, missing values, inconsistent sampling, and semantic mismatches. Acquisition and alignment methods aim to transform these disparate inputs into clean, reliable, and interoperable sequences that can serve as evidence for downstream modeling and assimilation.

Sensor Data. The most direct form of observational data is produced by physical sensors that measure variables such as temperature, pressure, vibration, voltage, location, audio, or video. Raw sensor signals are rarely usable without processing, as they are often affected by noise, baseline drift, or intermittent dropout. Signal processing techniques such as low-pass filtering and detrending are standard approaches for mitigating these issues. Anomaly detection is also necessary to identify faulty measurements or unexpected spikes that would otherwise corrupt the dataset; a comprehensive survey of statistical and machine learning methods for anomaly detection is provided by Hodge and Austin. Missing values caused by sensor dropout are typically reconstructed through interpolation or imputation methods, ranging from classical statistical approaches to probabilistic models. Together, these preprocessing steps convert raw sensor feeds into stable sequences suitable for integration.
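The preprocessing steps above can be sketched as three small, composable functions. The sketch is illustrative rather than a reference implementation: it assumes missing samples are marked as `None` on a uniform sampling grid, uses linear interpolation for imputation (holding the nearest valid value at the edges), removes a least-squares linear trend to correct baseline drift, and applies a first-order exponential moving average as the low-pass filter, with the smoothing factor `alpha` as an assumed tuning parameter.

```python
def interpolate_missing(samples):
    """Fill None gaps by linear interpolation; hold the nearest valid value at the edges."""
    valid = [i for i, v in enumerate(samples) if v is not None]
    if not valid:
        raise ValueError("no valid samples to interpolate from")
    out = list(samples)
    for i, v in enumerate(samples):
        if v is not None:
            continue
        left = max((j for j in valid if j < i), default=None)
        right = min((j for j in valid if j > i), default=None)
        if left is None:
            out[i] = samples[right]
        elif right is None:
            out[i] = samples[left]
        else:
            w = (i - left) / (right - left)
            out[i] = samples[left] + w * (samples[right] - samples[left])
    return out


def detrend_linear(samples):
    """Subtract the least-squares straight line (removes baseline drift); needs >= 2 samples."""
    n = len(samples)
    t_mean = (n - 1) / 2
    x_mean = sum(samples) / n
    sxx = sum((t - t_mean) ** 2 for t in range(n))
    slope = sum((t - t_mean) * (x - x_mean) for t, x in enumerate(samples)) / sxx
    return [x - (x_mean + slope * (t - t_mean)) for t, x in enumerate(samples)]


def ema_lowpass(samples, alpha):
    """First-order IIR low-pass filter: y[k] = alpha * x[k] + (1 - alpha) * y[k-1]."""
    out, y = [], samples[0]
    for x in samples:
        y = alpha * x + (1 - alpha) * y
        out.append(y)
    return out


raw = [20.1, 20.4, None, 21.0, 21.2, None, None, 22.3, 22.1, 22.8]  # hypothetical readings
clean = ema_lowpass(detrend_linear(interpolate_missing(raw)), alpha=0.3)
print([round(v, 2) for v in clean])
```

Imputation runs first because both detrending and filtering assume a complete, uniformly sampled sequence.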

IoT and Edge Data. Beyond dedicated sensors, observational data are increasingly collected through the Internet of Things (IoT), which refers to distributed devices connected via wireless communication networks such as Wi-Fi, Bluetooth, Zigbee, and LTE/5G. IoT infrastructures generate vast quantities of heterogeneous data, ranging from environmental readings to user interactions. Device communication usually relies on lightweight protocols such as MQTT or CoAP, which are designed for constrained environments. However, large-scale IoT deployments raise challenges including latency, packet loss, and inconsistent device configurations. To mitigate these problems, edge computing has emerged as a complementary paradigm: instead of sending all raw data to the cloud, part of the computation is performed on gateways or embedded processors close to the data source. This strategy reduces bandwidth usage and response time while allowing local preprocessing such as compression or anomaly detection. More recently, the integration of AI models into edge devices has enabled real-time feature extraction and adaptive decision support, a direction often referred to as edge intelligence.
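The bandwidth-saving role of edge preprocessing can be illustrated with a small sketch: instead of forwarding every raw reading, a gateway summarizes a window and flags outliers locally, then sends only the compact summary. The function names, payload fields, device identifier, and the z-score threshold of 3 are hypothetical choices. In a real deployment the payload would be published over a protocol such as MQTT or CoAP, which is not shown here.

```python
import json
import statistics


def summarize_window(device_id, readings, z_threshold=3.0):
    """Compress a window of raw readings into summary statistics plus outlier indices."""
    mean = statistics.fmean(readings)
    std = statistics.pstdev(readings)
    anomalies = [i for i, r in enumerate(readings) if std > 0 and abs(r - mean) / std > z_threshold]
    return {
        "device": device_id,
        "n": len(readings),
        "mean": round(mean, 3),
        "std": round(std, 3),
        "min": min(readings),
        "max": max(readings),
        "anomalies": anomalies,
    }


def to_payload(summary):
    # Compact JSON encoding of the summary; only this would leave the edge gateway.
    return json.dumps(summary, separators=(",", ":")).encode("utf-8")


readings = [20.0 + 0.01 * (k % 5) for k in range(99)] + [50.0]  # hypothetical spike at the end
summary = summarize_window("turbine-07/temp", readings)
raw_size = len(json.dumps(readings).encode("utf-8"))
print(summary["anomalies"], raw_size, "->", len(to_payload(summary)), "bytes")
```

A z-score test is the simplest possible local detector; a lightweight learned model could take its place under the edge intelligence paradigm without changing the summarize-then-send structure.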

Log and Event Data. In addition to continuous signals, digital twins incorporate discrete logs and event records that capture system-level behavior. Logs may include textual records from control systems, operational software, or user interactions, whereas event data typically represent discrete occurrences such as failures or maintenance actions. Unlike sensor and IoT data, logs are semi-structured or unstructured and require dedicated parsing methods. Log parsing frameworks such as Drain convert free-text records into structured templates suitable for analysis. A recurring challenge is inconsistent timestamps across distributed systems (for example, differing time zones or clock skew between machines), which necessitates synchronization and normalization. Once standardized, logs and events can be serialized and aligned with continuous data streams, enabling their integration into digital twin workflows. These data types highlight the importance of sequence modeling, anomaly detection, and temporal reasoning in AI-based integration approaches.
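The sketch below conveys the idea behind template-based log parsing in a deliberately simplified form. Variable tokens (IP addresses, hexadecimal IDs, numbers) are masked so that messages with the same constant text collapse into one template, and timestamps carrying UTC offsets are normalized to UTC before ordering. This is not the Drain algorithm, which additionally uses a fixed-depth parse tree and token-similarity thresholds; the masking rules and the log line format are assumptions for illustration.

```python
import re
from collections import defaultdict
from datetime import datetime, timezone

# Hypothetical masking rules for variable fields; real deployments tune these per system.
MASKS = [
    (re.compile(r"\b\d{1,3}(?:\.\d{1,3}){3}\b"), "<IP>"),
    (re.compile(r"\b0x[0-9a-fA-F]+\b"), "<HEX>"),
    (re.compile(r"\b\d+(?:\.\d+)?\b"), "<NUM>"),
]


def to_template(message: str) -> str:
    """Replace variable tokens with placeholders, leaving the constant text."""
    for pattern, token in MASKS:
        message = pattern.sub(token, message)
    return message


def parse_line(line: str):
    """Split '<ISO-8601 timestamp with offset> <message>' and normalize the time to UTC."""
    stamp, message = line.split(" ", 1)
    ts = datetime.fromisoformat(stamp).astimezone(timezone.utc)
    return ts, to_template(message), message


logs = [
    "2024-05-01T10:00:03+02:00 Pump 7 pressure 3.2 bar exceeds limit",
    "2024-05-01T08:00:05+00:00 Pump 9 pressure 4.1 bar exceeds limit",
    "2024-05-01T08:00:07+00:00 Connection from 10.0.0.12 closed",
]

events = sorted(parse_line(line) for line in logs)  # chronological after UTC normalization
groups = defaultdict(list)
for ts, template, raw in events:
    groups[template].append(ts)
```

Note that the first log line, stamped 10:00 local time, is actually the earliest event once its +02:00 offset is applied, which is exactly the kind of inconsistency normalization must resolve.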

Alignment of Heterogeneous Data. Regardless of source, acquired data streams must be aligned to ensure interoperability. Temporal alignment is the first requirement: sensors may operate at different sampling rates, IoT devices may report intermittently, and logs may record discrete events. Synchronization protocols such as the Network Time Protocol (NTP) establish a common reference clock for distributed devices. When perfect synchronization is not possible, interpolation and resampling methods are applied to harmonize signals on a shared timeline. Sliding-window aggregation can further reconcile high-frequency sensor data with lower-frequency records. Beyond time, semantic and unit normalization is necessary to prevent conflicts across heterogeneous datasets. Ontology-based mapping and dictionary-driven label alignment are commonly used for harmonizing variable names, while unit conversion ensures comparability of physical quantities such as temperature or flow rate. Together, these procedures transform heterogeneous sources into coherent datasets ready for integration with digital twin models.
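A minimal sketch of temporal and unit alignment, assuming two irregular streams given as sorted (time in seconds, value) pairs, one reporting temperature in degrees Fahrenheit and the other in Celsius. Both are expressed in Celsius, linearly interpolated onto a shared 1 s grid, and then downsampled with non-overlapping window means. The stream contents, grid spacing, and helper names are illustrative.

```python
from bisect import bisect_left
from typing import List, Tuple

Series = List[Tuple[float, float]]


def fahrenheit_to_celsius(f: float) -> float:
    return (f - 32.0) * 5.0 / 9.0


def resample_linear(series: Series, grid: List[float]) -> List[float]:
    """Linearly interpolate a sorted (t, v) series onto `grid`; clamp outside its range."""
    times = [t for t, _ in series]
    values = [v for _, v in series]
    out = []
    for g in grid:
        i = bisect_left(times, g)
        if i == 0:
            out.append(values[0])
        elif i == len(times):
            out.append(values[-1])
        else:
            t0, t1 = times[i - 1], times[i]
            w = (g - t0) / (t1 - t0)
            out.append((1 - w) * values[i - 1] + w * values[i])
    return out


def window_mean(values: List[float], size: int) -> List[float]:
    """Non-overlapping window averages (drops an incomplete trailing window)."""
    return [sum(values[i:i + size]) / size for i in range(0, len(values) - size + 1, size)]


sensor_a_f = [(0.0, 68.0), (1.5, 69.8), (4.0, 71.6)]   # degrees F, irregular timestamps
sensor_b_c = [(0.5, 20.5), (2.0, 21.0), (3.5, 21.5)]   # degrees C
sensor_a_c = [(t, fahrenheit_to_celsius(v)) for t, v in sensor_a_f]  # unit normalization

grid = [float(t) for t in range(0, 5)]                  # shared 1 s timeline
aligned = {
    "a": resample_linear(sensor_a_c, grid),
    "b": resample_linear(sensor_b_c, grid),
}
coarse_a = window_mean(aligned["a"], size=2)            # 2 s aggregation
```

Clamping at the edges is a simplifying choice; a production pipeline might instead mark out-of-range grid points as missing rather than extrapolate.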

Acquisition and alignment provide the methodological foundation for digital twin data integration. Sensor data, IoT and edge platforms, and log or event systems all contribute valuable but heterogeneous evidence. Through preprocessing, synchronization, and semantic normalization, raw observations are transformed into harmonized sequences that preserve fidelity while achieving interoperability. These steps also highlight opportunities for AI researchers, such as anomaly detection for noisy signals, lightweight models for edge-level feature extraction, and semantic alignment driven by representation learning.

Data Assimilation

Once observational data have been collected and aligned, the next step is to integrate them with the model in order to estimate the true system state and calibrate unknown parameters. This process, known as data assimilation (DA), provides a principled framework for merging model predictions with observational evidence. In the context of digital twins, DA is critical: without it, the model would drift away from reality due to imperfect initial conditions, incomplete parameterization, or unmodeled disturbances; with DA, the model remains dynamically consistent with the physical twin. Put simply, acquisition and alignment make the data usable, while assimilation makes the model responsive to the data. Formally, DA can be seen as a Bayesian estimation problem, where the model forecast provides a prior, the observations provide a likelihood, and the assimilation step produces a posterior estimate of the system state. Beyond updating the state, DA also delivers estimates of uncertainty, which are essential for downstream tasks such as prediction, optimization, and decision-making. Over the past decades, DA methods have evolved along three major lines: sequential approaches, variational approaches, and more recently, hybrid and learning-based approaches.
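To make this Bayesian view concrete, consider the simplest case: a scalar state with a Gaussian forecast (prior) $`x \sim \mathcal{N}(x_b, \sigma_b^2)`$ and a direct observation $`y = x + \varepsilon`$ with $`\varepsilon \sim \mathcal{N}(0, \sigma_o^2)`$. The symbols $`x_b`$, $`\sigma_b^2`$, $`\sigma_o^2`$, and $`K`$ are introduced here for illustration only. Multiplying prior and likelihood yields a Gaussian posterior (the analysis):

\begin{equation}

p(x \mid y) \propto \exp\!\left(-\frac{(x-x_b)^2}{2\sigma_b^2}\right)\exp\!\left(-\frac{(y-x)^2}{2\sigma_o^2}\right) \;\Longrightarrow\; x_a = x_b + K\,(y - x_b), \quad \sigma_a^2 = (1-K)\,\sigma_b^2, \quad K = \frac{\sigma_b^2}{\sigma_b^2+\sigma_o^2}.

\end{equation}For example, with $`x_b = 20`$, $`\sigma_b^2 = 4`$, $`y = 22`$, and $`\sigma_o^2 = 1`$, the weight is $`K = 0.8`$, so $`x_a = 21.6`$ and $`\sigma_a^2 = 0.8`$: the analysis moves toward the more certain source, and its variance is smaller than that of either the forecast or the observation.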

Sequential Data Assimilation. Sequential methods update the system state step by step as new data arrive. The classical example is the Kalman Filter (KF), which is the optimal (minimum mean-squared-error) estimator for linear systems with Gaussian noise. To handle nonlinear dynamics, the Extended Kalman Filter (EKF) approximates the system by local linearization, while the Unscented Kalman Filter (UKF) employs deterministic sampling (sigma points) to capture nonlinear effects more robustly. For large-scale, high-dimensional systems such as geophysical models, the Ensemble Kalman Filter (EnKF) has become a method of choice. By representing error statistics with an ensemble of model trajectories, EnKF provides a scalable solution that is widely used in weather forecasting and ocean modeling. Sequential methods are attractive for digital twins because they can process data streams in real time, although ensemble variants may suffer from sampling errors or loss of ensemble variance in very high-dimensional settings.
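The sketch below contrasts a scalar Kalman filter with a stochastic (perturbed-observation) EnKF on a toy problem: a random-walk state observed directly with noise. The model, noise variances, ensemble size, seed, and function names are assumptions for illustration. Because the toy problem is linear and Gaussian, the EnKF estimate should stay close to the Kalman filter, with the gap shrinking as the ensemble grows.

```python
import random
import statistics


def kf_step(x, p, y, q, r, a=1.0):
    """One forecast/analysis cycle of a scalar Kalman filter for
    x_k = a * x_{k-1} + w (var q) and y_k = x_k + v (var r)."""
    x_f, p_f = a * x, a * a * p + q            # forecast (prior)
    k = p_f / (p_f + r)                         # Kalman gain
    return x_f + k * (y - x_f), (1 - k) * p_f   # analysis (posterior)


def enkf_step(ensemble, y, q, r, rng, a=1.0):
    """Stochastic EnKF cycle: propagate each member with model noise, then update it
    against a perturbed observation using a gain built from the ensemble variance."""
    forecast = [a * m + rng.gauss(0.0, q ** 0.5) for m in ensemble]
    p_f = statistics.variance(forecast)         # sample variance replaces the KF p_f
    k = p_f / (p_f + r)
    return [m + k * (y + rng.gauss(0.0, r ** 0.5) - m) for m in forecast]


rng = random.Random(0)
q, r = 0.05, 0.5                                # model and observation noise variances
truth, x_kf, p_kf = 0.0, 0.0, 1.0
ensemble = [rng.gauss(0.0, 1.0) for _ in range(200)]
for _ in range(50):
    truth += rng.gauss(0.0, q ** 0.5)           # hidden random-walk state
    y = truth + rng.gauss(0.0, r ** 0.5)        # noisy observation
    x_kf, p_kf = kf_step(x_kf, p_kf, y, q, r)
    ensemble = enkf_step(ensemble, y, q, r, rng)

x_enkf = statistics.mean(ensemble)
print(f"truth={truth:.3f}  KF={x_kf:.3f} (var {p_kf:.3f})  EnKF={x_enkf:.3f}")
```

The EnKF never forms an explicit covariance matrix, which is what lets it scale to high-dimensional states; with few members, however, the sample variance becomes noisy, which is the sampling error mentioned above.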

Variational Data Assimilation. Variational approaches cast DA as an optimization problem. The objective is to minimize a cost function that balances model fidelity with observational fit over a given time window. In 3D-Var, the optimization is performed at a single analysis time, combining the background forecast with new observations. In 4D-Var, the assimilation extends over a temporal window, allowing the system dynamics to constrain the analysis. These methods exploit all available observations within the assimilation window and yield dynamically consistent state trajectories. They are particularly effective for large-scale applications such as numerical weather prediction. However, their reliance on adjoint models and high-dimensional optimization makes them computationally demanding, which may limit their direct applicability in some digital twin scenarios.
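In its standard form, the 3D-Var cost is $`J(\mathbf{x}) = \tfrac12(\mathbf{x}-\mathbf{x}_b)^\top \mathbf{B}^{-1}(\mathbf{x}-\mathbf{x}_b) + \tfrac12(\mathbf{y}-H(\mathbf{x}))^\top \mathbf{R}^{-1}(\mathbf{y}-H(\mathbf{x}))`$, where $`\mathbf{B}`$ and $`\mathbf{R}`$ are the background and observation error covariances and $`H`$ is the observation operator. The sketch below minimizes a scalar version by plain gradient descent; the linear operator $`H(x) = 2x`$, the numerical values, the step size, and the iteration count are illustrative assumptions, and operational systems use quasi-Newton solvers with adjoint-computed gradients instead.

```python
def three_d_var(x_b, b, y, r, h, h_prime, lr=0.05, iters=500):
    """Minimize the scalar 3D-Var cost
        J(x) = (x - x_b)^2 / (2b) + (y - h(x))^2 / (2r)
    by gradient descent, using dJ/dx = (x - x_b)/b - h'(x) (y - h(x))/r."""
    x = x_b                                   # first guess: the background state
    for _ in range(iters):
        grad = (x - x_b) / b - h_prime(x) * (y - h(x)) / r
        x -= lr * grad
    return x


# Illustrative values; H(x) = 2x is a linear observation operator.
x_b, b, y, r, H = 1.0, 0.5, 3.0, 0.25, 2.0
x_var = three_d_var(x_b, b, y, r, h=lambda x: H * x, h_prime=lambda x: H)

# For a linear H, the 3D-Var minimizer coincides with the Kalman analysis.
K = b * H / (H * b * H + r)
x_kalman = x_b + K * (y - H * x_b)
print(f"3D-Var: {x_var:.6f}  Kalman analysis: {x_kalman:.6f}")
```

The agreement illustrates that, for linear operators and Gaussian errors, the variational and sequential views solve the same estimation problem. 4D-Var adds an observation term for every time in the window, each evaluated on a state obtained by running the model forward, and computing its gradient is where the adjoint model becomes necessary.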

Hybrid and Learning-Based Approaches. Recent developments have sought to combine the strengths of sequential and variational schemes with machine learning. One active direction is to learn or approximate components of the assimilation pipeline. For example, neural networks can serve as surrogate observation operators, mapping model state variables to observation space when the true operator is highly nonlinear or computationally expensive. Another line of work integrates machine learning to improve error covariance modeling, a long-standing challenge in EnKF and variational schemes. The concept of Neural Data Assimilation has also emerged, where deep learning architectures are trained to emulate the update step directly, providing a data-driven approximation of Bayesian inference. Furthermore, differentiable programming frameworks have enabled the formulation of differentiable DA, where the assimilation process itself is embedded into a computation graph, facilitating end-to-end learning of model and assimilation parameters. PINNs, although already introduced in Section 3.1.2, can also be incorporated into assimilation frameworks as constraints that guide state estimation. These hybrid methods highlight a promising synergy: traditional DA offers a principled statistical framework, while AI methods bring flexibility, scalability, and the ability to exploit large datasets.

Data assimilation represents the methodological bridge between data and models in digital twins. Sequential methods emphasize real-time, step-by-step updating, variational methods leverage optimization over temporal windows, and hybrid methods incorporate machine learning to overcome long-standing limitations. Together, they ensure that the digital twin is not merely a simulation of the physical twin but is continuously synchronized with reality. In doing so, data assimilation transforms the digital twin into a dynamic mirror of its physical counterpart, capable of supporting reliable prediction, optimization, and decision-making in complex environments.

Mirroring the Physical Twin into the Digital Twin

Learning a data distribution from real observations is a fundamental challenge in generative AI. Digital twins differ in their representation dimensions and therefore require different modeling frameworks. This section first reviews fundamental generative modeling approaches and then covers recent state-of-the-art methods built on them for learning 2D and 3D digital twins, including dynamic modeling.


Variational Autoencoders (VAEs). VAEs learn an encoder-decoder pair that maps input data into a continuous latent space, using Gaussian distributions for both the approximate posterior and the prior. The encoder is trained to infer a distribution over the latent variable $`\mathbf{z}`$ that stays close to the prior, and the decoder is trained to reconstruct the original image from $`\mathbf{z}`$. In the encoder, a Gaussian proxy distribution $`q_\phi(\mathbf{z}\mid\mathbf{x})`$ parameterized by a neural network $`\phi`$ approximates the intractable posterior $`p_\theta(\mathbf{z}\mid\mathbf{x})`$. Concretely, the encoder predicts the mean and standard deviation of $`\mathbf{z}`$ from the input image $`\mathbf{x}`$, i.e., $`\mu_\phi(\mathbf{x})`$ and $`\sigma_\phi(\mathbf{x})`$, and the encoded distribution is regularized toward a pre-defined prior $`p(\mathbf{z})=\mathcal{N}(\mathbf{z}; \mathbf{0}, \mathbf{I})`$. In the decoder, assuming the images follow a Gaussian distribution, we train $`p_\theta(\mathbf{x}\mid\mathbf{z})`$ to reconstruct the original image from the latent variable $`\mathbf{z}`$. Both objectives are achieved by maximizing the evidence lower bound (ELBO), defined as

\begin{align}

\mathcal{L}(\boldsymbol{\phi}, \boldsymbol{\theta}; \mathbf{x})

&= \mathbb{E}_{q_{\boldsymbol{\phi}}(\mathbf{z} \mid \mathbf{x})}\left[ \log p_{\boldsymbol{\theta}}(\mathbf{x},\mathbf{z}) - \log q_{\boldsymbol{\phi}}(\mathbf{z}\mid\mathbf{x}) \right], \\

&= \mathbb{E}_{q_{\boldsymbol{\phi}}(\mathbf{z} \mid \mathbf{x})}\left[\log p_{\boldsymbol{\theta}}(\mathbf{x} \mid \mathbf{z})\right] - \mathbb{D}_{\mathrm{KL}}\left(q_{\boldsymbol{\phi}}(\mathbf{z} \mid \mathbf{x}) \| p(\mathbf{z})\right),

\label{eq:elbo}

\end{align}which satisfies $`\mathcal{L}(\phi, \theta; \mathbf{x}) \leq \log p_\theta(\mathbf{x})`$. Using the reparameterization trick, $`\mathbf{z} = \mu_\phi(\mathbf{x}) + \sigma_\phi(\mathbf{x}) \odot \boldsymbol{\epsilon}`$ with $`\boldsymbol{\epsilon} \sim \mathcal{N}(\mathbf{0}, \mathbf{I})`$, the objective in Eq. [eq:elbo] becomes differentiable with respect to both $`\phi`$ and $`\theta`$, so the encoder and decoder can be trained end to end with gradient-based optimization. At inference time, we sample latent variables from $`p(\mathbf{z})`$ and feed them into the decoder to generate new images.
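For a diagonal Gaussian encoder and the standard normal prior, the KL term of the ELBO has the closed form $`\tfrac12\sum_j(\mu_j^2+\sigma_j^2-1-\log\sigma_j^2)`$, while the reconstruction term is estimated with reparameterized samples. The sketch below computes a single-sample ELBO estimate for one data point. The encoder outputs are fixed numbers, the `toy_decoder` is a hypothetical linear map standing in for a neural network, and the likelihood is assumed to be a unit-variance Gaussian; it illustrates the computation only, not training.

```python
import math
import random
from typing import List


def gaussian_kl_to_standard_normal(mu: List[float], log_var: List[float]) -> float:
    """Closed-form KL( N(mu, diag(exp(log_var))) || N(0, I) )."""
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv for m, lv in zip(mu, log_var))


def reparameterize(mu: List[float], log_var: List[float], rng: random.Random) -> List[float]:
    """z = mu + sigma * eps with eps ~ N(0, 1): the randomness lives in eps,
    so z is a differentiable function of (mu, log_var)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


def gaussian_log_likelihood(x: List[float], x_hat: List[float], var: float = 1.0) -> float:
    """log N(x; x_hat, var * I), playing the role of log p_theta(x | z)."""
    return sum(-0.5 * (math.log(2 * math.pi * var) + (a - b) ** 2 / var)
               for a, b in zip(x, x_hat))


def toy_decoder(z: List[float]) -> List[float]:
    """Hypothetical decoder: a fixed linear map from a 2-D latent to a 3-D observation."""
    return [z[0], z[1], z[0] + z[1]]


rng = random.Random(0)
x = [0.5, -1.0, -0.4]
mu, log_var = [0.4, -0.9], [-1.0, -1.2]   # encoder outputs (assumed, not learned here)
z = reparameterize(mu, log_var, rng)
elbo = gaussian_log_likelihood(x, toy_decoder(z)) - gaussian_kl_to_standard_normal(mu, log_var)
print(f"single-sample ELBO estimate: {elbo:.3f}")
```

Predicting `log_var` rather than the variance itself is a common design choice because it keeps the variance positive without constraining the network output.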

Normalizing Flows. Normalizing flows are a powerful class of generative models that enable flexible and tractable density estimation by transforming a simple probability distribution into a more complex one through a series of invertible transformations. The core idea of normalizing flows is to start with a simple distribution, typically a multivariate Gaussian, and apply a sequence of bijective (invertible and differentiable) mappings to transform this simple distribution into one that matches the target data distribution. Specifically, let $`\mathbf{z}_0 \sim p_{\mathbf{z}_0}(\mathbf{z}_0)`$ represent a random variable drawn from a simple base distribution $`p_{\mathbf{z}_0}`$, such as a standard normal distribution. A normalizing flow applies a sequence of invertible transformations $`f_i: \mathbb{R}^d \rightarrow \mathbb{R}^d`$ for $`i = 1, 2, \dots, K`$, resulting in the transformed variable $`\mathbf{z}_K = f_K \circ f_{K-1} \circ \dots \circ f_1 (\mathbf{z}_0)`$. The probability density function of $`\mathbf{z}_K`$ under the transformation is given by the change of variables formula:

\begin{equation}

\label{eq:normalizing_flow}

p_{\mathbf{z}_K}(\mathbf{z}_K) = p_{\mathbf{z}_0}(\mathbf{z}_0) \left| \det \left( \frac{\partial f^{-1}}{\partial \mathbf{z}_K} \right) \right| = p_{\mathbf{z}_0}(\mathbf{z}_0) \prod_{i=1}^{K} \left| \det \left( \frac{\partial f_i}{\partial \mathbf{z}_{i-1}} \right) \right|^{-1},

\end{equation}where $`f = f_K \circ \dots \circ f_1`$ denotes the full composition and $`\det \left( \frac{\partial f_i}{\partial \mathbf{z}_{i-1}} \right)`$ is the determinant of the Jacobian matrix of the $`i`$-th transformation. This formulation allows for exact likelihood computation, making normalizing flows highly effective for density estimation.
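The sketch below applies the change-of-variables formula to a toy flow of $`K = 3`$ affine bijections $`f_i(z) = a_i z + b_i`$ in one dimension, where each Jacobian determinant is simply $`a_i`$. The layer parameters are arbitrary illustrative values; practical flows use learned and far more expressive bijections, but the log-density bookkeeping is the same. Because affine maps of a Gaussian stay Gaussian, the result can be checked against a closed form.

```python
import math

# Toy flow: K affine bijections f_i(z) = a_i * z + b_i (a_i != 0); illustrative parameters.
LAYERS = [(2.0, 1.0), (-0.5, 0.3), (1.5, -2.0)]


def standard_normal_logpdf(z: float) -> float:
    return -0.5 * (math.log(2 * math.pi) + z * z)


def forward(z0: float) -> float:
    """Sampling direction: z_K = f_K o ... o f_1 (z_0)."""
    z = z0
    for a, b in LAYERS:
        z = a * z + b
    return z


def log_density(z_k: float) -> float:
    """log p(z_K) = log p(z_0) - sum_i log|det df_i/dz_{i-1}|, inverting layer by layer."""
    z, log_det = z_k, 0.0
    for a, b in reversed(LAYERS):
        z = (z - b) / a               # apply f_i^{-1}
        log_det += math.log(abs(a))   # |det Jacobian| of f_i is |a_i| in 1-D
    return standard_normal_logpdf(z) - log_det


# Closed-form check: z_K is Gaussian with mean m and standard deviation s.
m, s = 0.0, 1.0
for a, b in LAYERS:
    m, s = a * m + b, abs(a) * s
x = 0.7
closed_form = -0.5 * (math.log(2 * math.pi * s * s) + ((x - m) / s) ** 2)
print(log_density(x), closed_form)
```

Density evaluation runs the flow in the inverse direction and sampling runs it forward, which is why both directions must be cheap for a flow to be practical in both roles.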

While normalizing flows offer several advantages, such as exact likelihood estimation and invertibility, they also have limitations. One of the primary challenges is the trade-off between the expressiveness of the transformations and the computational cost of evaluating the Jacobian determinants. Simple transformations allow efficient computation but may lack the flexibility to model complex distributions, whereas more complex transformations can capture intricate structure in the data at the cost of increased computation. To address this trade-off, recent work such as Neural Spline Flows and Residual Flows seeks to enhance the expressiveness of normalizing flows while keeping computation tractable.

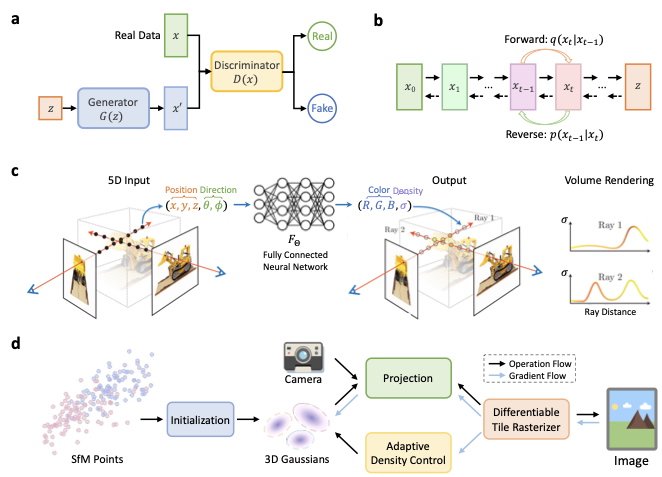

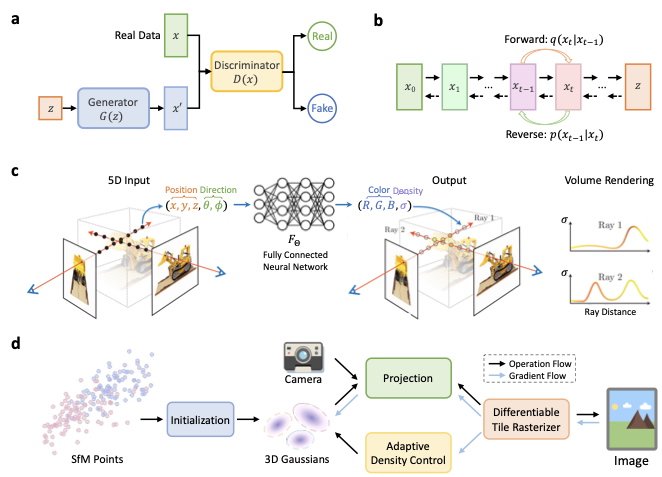

Generative Adversarial Networks (GANs). GANs are a powerful framework (as shown in Figure 3) for learning data distributions, consisting of two main components: a generator ($`G`$) and a discriminator ($`D`$). Both are typically implemented as differentiable neural networks: the generator maps latent noise $`\mathbf{z}`$ to the data space, and the discriminator maps a data sample to the probability that it is real. The optimization of GANs can be formulated as a min-max game between the generator and discriminator, with the following objective:

\begin{equation}

\min_G \max_D \mathbb{E}_{\mathbf{x} \sim p_{\text{data}}(\mathbf{x})}[\log D(\mathbf{x})] + \mathbb{E}_{\mathbf{z} \sim p_{\mathbf{z}}(\mathbf{z})}[\log (1 - D(G(\mathbf{z})))].

\end{equation}The generator’s goal is to create new examples that closely resemble the real data distribution, while the discriminator aims to accurately distinguish between real and generated examples. Ideally, training reaches a Nash equilibrium at which the generator has captured the true data distribution and the discriminator can no longer tell real from generated samples. In practice, however, GANs often suffer from training instability, partly because the supports of the real and generated distributions may not overlap. One approach to mitigate this problem is to inject noise into the discriminator’s input, thereby widening the support of both distributions. Wang et al. (2022) propose an adaptive noise injection scheme based on a diffusion model to stabilize GAN training. Because GANs generate samples in a single forward pass, they can be an efficient alternative to diffusion models, which are often more capable but require iterative, time-consuming multi-step denoising during inference.
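For a fixed generator with distribution $`p_g`$, the inner maximization has the closed-form solution $`D^*(\mathbf{x}) = p_{\text{data}}(\mathbf{x}) / (p_{\text{data}}(\mathbf{x}) + p_g(\mathbf{x}))`$. Substituting it back yields $`-\log 4 + 2\,\mathrm{JSD}(p_{\text{data}} \,\|\, p_g)`$, which is minimized exactly when $`p_g = p_{\text{data}}`$; this is the analysis given in the original GAN paper. The sketch below checks the identity numerically on small discrete distributions chosen for illustration.

```python
import math
from typing import List


def optimal_discriminator(p_data: List[float], p_g: List[float]) -> List[float]:
    """D*(x) = p_data(x) / (p_data(x) + p_g(x)) on a discrete support."""
    return [pd / (pd + pg) for pd, pg in zip(p_data, p_g)]


def gan_value(p_data: List[float], p_g: List[float], d: List[float]) -> float:
    """V(D, G) = E_data[log D(x)] + E_{p_g}[log(1 - D(x))] over a discrete support."""
    real = sum(pd * math.log(dx) for pd, dx in zip(p_data, d) if pd > 0)
    fake = sum(pg * math.log(1 - dx) for pg, dx in zip(p_g, d) if pg > 0)
    return real + fake


def jsd(p: List[float], q: List[float]) -> float:
    """Jensen-Shannon divergence (natural log) between two discrete distributions."""
    mix = [(a + b) / 2 for a, b in zip(p, q)]

    def kl(u, v):
        return sum(a * math.log(a / b) for a, b in zip(u, v) if a > 0)

    return 0.5 * kl(p, mix) + 0.5 * kl(q, mix)


p_data = [0.1, 0.4, 0.5]
for p_g in ([0.3, 0.3, 0.4], list(p_data)):
    d_star = optimal_discriminator(p_data, p_g)
    value = gan_value(p_data, p_g, d_star)
    bound = -math.log(4) + 2 * jsd(p_data, p_g)
    print(f"p_g={p_g}: V(D*, G)={value:.4f}, -log 4 + 2 JSD={bound:.4f}")
```

When $`p_g = p_{\text{data}}`$, the optimal discriminator outputs 0.5 everywhere and the value reaches its minimum of $`-\log 4`$, matching the equilibrium described above.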

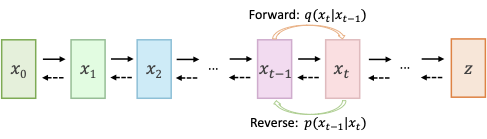

Denoising Diffusion Probabilistic Models (DDPMs). DDPMs use two Markov chains: a forward chain that adds noise and a reverse chain that removes it. The forward chain transforms data into a simple prior distribution, while the reverse chain, parameterized by neural networks, inverts this process. Given data $`\mathbf{x}_0 \sim q(\mathbf{x}_0)`$, the forward process generates a sequence $`\mathbf{x}_1, \mathbf{x}_2, \dots, \mathbf{x}_T`$ with transition kernel $`q(\mathbf{x}_t\mid\mathbf{x}_{t-1})`$, and the joint distribution is the product of these transitions. The transition kernel is typically a Gaussian perturbation, $`q(\mathbf{x}_t\mid\mathbf{x}_{t-1}) = \mathcal{N}(\mathbf{x}_t; \sqrt{1-\beta_t}\, \mathbf{x}_{t-1}, \beta_t \mathbf{I})`$, where $`\beta_t \in (0,1)`$ is a variance-schedule hyperparameter. This choice allows analytical marginalization, $`q(\mathbf{x}_t\mid\mathbf{x}_0) = \mathcal{N}(\mathbf{x}_t; \sqrt{\bar{\alpha}_t}\, \mathbf{x}_0, (1-\bar{\alpha}_t) \mathbf{I})`$, with $`\alpha_t = 1 - \beta_t`$ and $`\bar{\alpha}_t = \prod_{s=1}^{t} \alpha_s`$. The reverse process uses a learnable transition kernel:

\begin{equation}

p_\theta(\mathbf{x}_{t-1}\mid\mathbf{x}_t) = \mathcal{N}(\mathbf{x}_{t-1}; \mu_{\theta}(\mathbf{x}_t, t), \Sigma_{\theta}(\mathbf{x}_t, t)),

\end{equation}where $`\theta`$ denotes the model parameters. The model is trained to match the time reversal of the forward process by minimizing a variational bound composed of KL divergences; with a noise-prediction parameterization, this reduces to the simplified loss:

\begin{equation}

\ell^{\text{simple}}_t(\theta)=\mathbb{E}_{\mathbf{x}_0, \epsilon}\left\|\epsilon_\theta\left(\sqrt{\bar{\alpha}_t}\,\mathbf{x}_0+\sqrt{1-\bar{\alpha}_t}\,\epsilon,\, t\right)-\epsilon\right\|_2^2.

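\end{equation}Here $`\epsilon \sim \mathcal{N}(\mathbf{0}, \mathbf{I})`$ is the noise used to produce $`\mathbf{x}_t`$ from $`\mathbf{x}_0`$. Sampling starts from $`\mathbf{x}_T \sim \mathcal{N}(\mathbf{0}, \mathbf{I})`$ and proceeds step by step using the learned noise prediction: $`\mathbf{x}_{t-1} = \frac{1}{\sqrt{\alpha_t}}\left(\mathbf{x}_t - \frac{\beta_t}{\sqrt{1-\bar{\alpha}_t}}\,\epsilon_\theta(\mathbf{x}_t, t)\right) + \sigma_t \mathbf{z}`$, where $`\mathbf{z} \sim \mathcal{N}(\mathbf{0}, \mathbf{I})`$ (with $`\mathbf{z} = \mathbf{0}`$ at the final step) and $`\sigma_t^2`$ is commonly set to $`\beta_t`$.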
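A scalar sketch of the quantities above, assuming the linear $`\beta_t`$ schedule from $`10^{-4}`$ to $`0.02`$ over $`T = 1000`$ steps used in the original DDPM work and $`\sigma_t^2 = \beta_t`$. The `eps_model` function is a hypothetical stand-in for the trained network $`\epsilon_\theta`$: it is the exact noise predictor for a toy data distribution concentrated at the single point $`x_0 = 1`$, which lets the reverse chain run end to end without any training.

```python
import math
import random

T = 1000
betas = [1e-4 + (0.02 - 1e-4) * i / (T - 1) for i in range(T)]  # beta_1 ... beta_T
alphas = [1.0 - b for b in betas]
alpha_bars = []
running = 1.0
for a in alphas:                    # alpha_bar_t = prod_{s=1}^{t} alpha_s
    running *= a
    alpha_bars.append(running)

X0 = 1.0  # toy data distribution: a point mass at 1.0 (assumption)


def q_sample(x0: float, t: int, eps: float) -> float:
    """Closed-form forward marginal (t is 1-based): sqrt(abar_t) x0 + sqrt(1 - abar_t) eps."""
    ab = alpha_bars[t - 1]
    return math.sqrt(ab) * x0 + math.sqrt(1.0 - ab) * eps


def eps_model(x_t: float, t: int) -> float:
    """Hypothetical stand-in for epsilon_theta: the exact noise for the point-mass data."""
    ab = alpha_bars[t - 1]
    return (x_t - math.sqrt(ab) * X0) / math.sqrt(1.0 - ab)


def simple_loss(x0: float, t: int, rng: random.Random) -> float:
    """One Monte Carlo sample of ||eps_theta(x_t, t) - eps||^2."""
    eps = rng.gauss(0.0, 1.0)
    return (eps_model(q_sample(x0, t, eps), t) - eps) ** 2


def p_sample_loop(rng: random.Random) -> float:
    """Ancestral sampling from x_T ~ N(0, 1) down to x_0 with sigma_t^2 = beta_t."""
    x = rng.gauss(0.0, 1.0)
    for t in range(T, 0, -1):
        beta, alpha, ab = betas[t - 1], alphas[t - 1], alpha_bars[t - 1]
        mean = (x - beta / math.sqrt(1.0 - ab) * eps_model(x, t)) / math.sqrt(alpha)
        z = rng.gauss(0.0, 1.0) if t > 1 else 0.0   # no noise on the final step
        x = mean + math.sqrt(beta) * z
    return x


rng = random.Random(0)
loss = simple_loss(X0, 500, rng)
sample = p_sample_loop(rng)
print(f"alpha_bar_T={alpha_bars[-1]:.2e}  loss={loss:.2e}  sample={sample:.6f}")
```

The tiny $`\bar{\alpha}_T`$ confirms that the forward chain nearly erases the data, so starting the reverse chain from pure Gaussian noise is justified. With a real network, `eps_model` would be learned by minimizing `simple_loss` over random $`t`$ and data samples.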

Sampling efficiency was enhanced through techniques like DDIM, which enables faster sampling by constructing non-Markovian diffusion processes. The EDM framework further improved efficiency and quality by refining the design space. Score-based models provided a unified view of diffusion models through stochastic differential equations. For discrete data, D3PMs extended diffusion models to discrete state spaces. Practical applications were advanced by works like GLIDE and Stable Diffusion, which enabled high-quality text-to-image generation. Classifier guidance and cross-attention control further improved conditional generation and editing.

Radiance Field Modeling with Implicit and Explicit Methods. A radiance field provides a three-dimensional light distribution model that describes the interaction of light with surfaces and materials within an environment. It can be mathematically expressed as a function $`L \!:\! \mathbb{R}^5 \!\mapsto\! \mathbb{R}^+`$, where $`L(x, y, z, \theta, \phi)`$ denotes the mapping of a spatial point $`(x, y, z)`$ and a direction given by spherical coordinates $`(\theta, \phi)`$ to a non-negative radiance value. Radiance fields are typically represented in two forms, implicit or explicit, each offering distinct advantages for scene depiction and rendering. An implicit radiance field models the light distribution within a scene indirectly, without defining the scene’s geometry explicitly. In the context of deep learning, this often involves employing neural networks to learn a continuous representation of the volumetric scene. A notable example is NeRF, where a neural network, often a multi-layer perceptron (MLP), maps spatial coordinates $`(x,y,z)`$ and viewing directions $`(\theta, \phi)`$ to corresponding color and density values. The radiance at any point is computed on demand by querying the MLP rather than being stored directly. This approach provides a compact and differentiable representation of complex scenes but typically requires significant computational resources during rendering due to the need for volumetric ray marching. Conversely, an explicit radiance field directly encodes the light distribution using discrete spatial structures such as voxel grids or point sets. Each element of this structure stores the radiance data for its specific spatial location, enabling faster and more direct radiance retrieval, albeit at the expense of increased memory demands and reduced resolution.
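Volumetric ray marching is what makes implicit rendering costly: every pixel requires many field queries along its ray. The sketch below implements the standard discrete volume-rendering quadrature used by NeRF, $`\hat{C}=\sum_i T_i\,(1-e^{-\sigma_i\delta_i})\,\mathbf{c}_i`$ with transmittance $`T_i=\exp(-\sum_{j<i}\sigma_j\delta_j)`$. The `field` function is a hypothetical analytic stand-in for the MLP (a dense red sphere), and the ray, bounds, and uniform midpoint sampling are illustrative; NeRF itself uses stratified and hierarchical sampling.

```python
import math
from typing import Callable, Tuple

Vec3 = Tuple[float, float, float]


def field(p: Vec3, view_dir: Vec3) -> Tuple[float, Vec3]:
    """Hypothetical stand-in for the NeRF MLP: (density, RGB) at point p.
    A dense red sphere of radius 1 at the origin; view_dir is unused here."""
    inside = math.sqrt(sum(c * c for c in p)) < 1.0
    return (10.0 if inside else 0.0), (1.0, 0.0, 0.0)


def render_ray(origin: Vec3, direction: Vec3, near: float, far: float, n_samples: int,
               radiance: Callable = field) -> Tuple[Vec3, float]:
    """C = sum_i T_i (1 - exp(-sigma_i delta_i)) c_i with T_i = exp(-sum_{j<i} sigma_j delta_j)."""
    delta = (far - near) / n_samples
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0
    for i in range(n_samples):
        t = near + (i + 0.5) * delta                       # midpoint of each segment
        p = tuple(o + t * d for o, d in zip(origin, direction))
        sigma, rgb = radiance(p, direction)
        alpha = 1.0 - math.exp(-sigma * delta)             # opacity of this segment
        weight = transmittance * alpha
        color = [c + weight * ch for c, ch in zip(color, rgb)]
        transmittance *= 1.0 - alpha                       # light surviving this segment
    return tuple(color), 1.0 - transmittance               # pixel color, accumulated opacity


hit_color, hit_opacity = render_ray((0.0, 0.0, -3.0), (0.0, 0.0, 1.0), 0.0, 6.0, 128)
miss_color, miss_opacity = render_ray((0.0, 2.0, -3.0), (0.0, 0.0, 1.0), 0.0, 6.0, 128)
```

Every operation in the loop is differentiable with respect to density and color, which is what allows the field to be trained from posed images alone.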

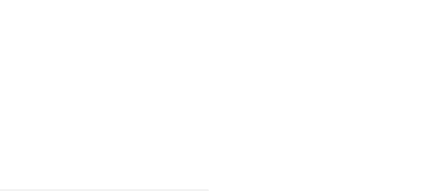

3D Gaussian Splatting (3DGS). 3DGS integrates the benefits of both implicit and explicit radiance fields through the use of adjustable 3D Gaussians. This method, optimized by multi-view image supervision, provides an efficient and flexible representation capable of accurately depicting scenes. It merges neural network-based optimization with structured data storage, targeting real-time, high-quality rendering with efficient training, especially for intricate and high-resolution scenes. The 3DGS model is described as:

\begin{equation}

L_{\text{3DGS}}(x, y, z, \theta, \phi) = \sum_{i} G(x, y, z, \bm{\mu}_i, \bm{\Sigma}_i) \cdot c_i(\theta, \phi),

\end{equation}where $`G`$ denotes the Gaussian function defined by the mean $`\bm{\mu}_i`$ and covariance $`\bm{\Sigma}_i`$, and $`c_i`$ denotes the view-dependent color of the $`i`$-th Gaussian. Specifically, learning 3DGS involves two main processes, as depicted in Figure 3:

- Rendering: The rendering process in 3DGS differs significantly from the volumetric ray marching used in implicit methods like NeRF. Instead, it employs a splatting technique that projects 3D Gaussians onto the 2D image plane. This process involves several key steps. First, in the frustum culling step, Gaussians outside the camera’s view are excluded from rendering. Then, in the splatting step, 3D Gaussians are projected into 2D space using the viewing transformation and the Jacobian of the affine approximation to the projective transformation. Finally, the color of each pixel is computed by alpha compositing, which blends the colors of the overlapping, depth-sorted Gaussians according to their opacities.

- Optimization: To achieve real-time rendering, 3DGS relies on several efficiency techniques, including tiles (patches) for parallel processing and fast sorting of Gaussians by depth and tile ID. Learning in 3DGS optimizes the properties of each Gaussian (position, opacity, covariance, and color) and controls the density of Gaussians in the scene. The optimization is guided by a loss function combining L1 and D-SSIM terms:

\begin{equation}

\mathcal{L} = (1-\lambda)\mathcal{L}_1 + \lambda\mathcal{L}_{\text{D-SSIM}},

\end{equation}where $`\lambda`$ is a weighting factor. The density of Gaussians is controlled through densification and pruning. Densification clones or splits Gaussians based on the magnitude of their positional gradients, while pruning removes unnecessary or ineffective Gaussians, such as those with very low opacity.
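To make the compositing step concrete, the sketch below blends already-projected 2D Gaussians at a single pixel, front to back in depth order, as $`C=\sum_i \mathbf{c}_i\alpha_i\prod_{j<i}(1-\alpha_j)`$ with $`\alpha_i = o_i \exp(-\tfrac12\mathbf{d}^\top\boldsymbol{\Sigma}_i'^{-1}\mathbf{d})`$. Here $`o_i`$ is the learned opacity, $`\boldsymbol{\Sigma}_i'`$ the projected 2D covariance, and $`\mathbf{d}`$ the pixel offset from the projected mean. Projection, tiling, and spherical-harmonic color evaluation are omitted; the `Splat2D` container, splat parameters, alpha clamp, skip threshold, and early-termination threshold are illustrative assumptions.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Splat2D:
    """A 3D Gaussian after projection to the image plane (hypothetical container)."""
    mean: Tuple[float, float]          # projected center in pixels
    cov: Tuple[float, float, float]    # 2D covariance entries (s_xx, s_xy, s_yy)
    depth: float                       # view-space depth used for sorting
    opacity: float                     # learned opacity o_i in [0, 1]
    color: Tuple[float, float, float]  # RGB (view-dependent in 3DGS; fixed here)


def splat_alpha(s: Splat2D, px: float, py: float) -> float:
    dx, dy = px - s.mean[0], py - s.mean[1]
    a, b, c = s.cov
    det = a * c - b * b
    # Quadratic form d^T Sigma^{-1} d using the closed-form inverse of a 2x2 matrix.
    q = (c * dx * dx - 2 * b * dx * dy + a * dy * dy) / det
    return min(0.99, s.opacity * math.exp(-0.5 * q))


def composite_pixel(splats: List[Splat2D], px: float, py: float) -> Tuple[float, float, float]:
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0
    for s in sorted(splats, key=lambda g: g.depth):   # front-to-back order
        alpha = splat_alpha(s, px, py)
        if alpha < 1.0 / 255.0:                       # negligible contribution
            continue
        color = [acc + transmittance * alpha * ch for acc, ch in zip(color, s.color)]
        transmittance *= 1.0 - alpha
        if transmittance < 1e-4:                      # stop once the pixel is opaque
            break
    return tuple(color)


splats = [
    Splat2D(mean=(10.0, 10.0), cov=(4.0, 0.0, 4.0), depth=5.0, opacity=0.8, color=(0.0, 0.0, 1.0)),
    Splat2D(mean=(10.0, 10.0), cov=(9.0, 1.0, 9.0), depth=2.0, opacity=0.5, color=(1.0, 0.0, 0.0)),
]
pixel = composite_pixel(splats, 10.0, 10.0)
```

Even though the blue splat is listed first, the red one is nearer and is composited first, so only the light it lets through (half) reaches the blue splat behind it.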

Several related advances have shaped radiance field representation and rendering around 3D Gaussian Splatting. Plenoxels, which predates 3DGS, introduced a neural-network-free approach to photorealistic view synthesis using a sparse 3D grid with spherical harmonics. Building on 3DGS, Dynamic 3D Gaussians extended the representation to dynamic scenes, enabling six-degree-of-freedom tracking and novel-view synthesis; Animatable and Relightable Gaussians focused on high-fidelity human avatar modeling from RGB videos; and 3DGS-Avatar achieved real-time rendering of animatable human avatars. On the NeRF side, Floaters No More addressed background collapse in NeRF acquisition. These advancements highlight the ongoing evolution and diversification of techniques for 3D scene representation and rendering.

Simulator Building

Foundational Modeling and State Representation

A digital twin simulator must first decide how to represent the physical system it seeks to emulate. In some cases, the simulator requires an explicit geometric description to reproduce spatially governed behaviors such as deformation, fluid flow, or molecular interactions. In other cases, geometry is unnecessary, and the system can be modeled through relational or temporal dependencies that capture how components interact or evolve over time. These two complementary perspectives lead to two types of state representation: geometry-based, which encodes the physical structure of the system, and non-geometric (abstract), which focuses on the data-driven or relational aspects of system behavior.

Geometry-Based State Representation. In geometry-based representations, the physical system is modeled through explicit spatial constructs that define its shape, structure, and material properties. These representations form the backbone of digital twins in domains where spatial configuration directly governs system dynamics and behavior, such as engineering design, manufacturing, and biomedical modeling. They answer fundamental questions about the physical world: What does the object look like? How are its components connected? What are its physical properties?

Shape and Geometry. “What is the shape of an object?” This question defines the geometric configuration of the physical entity in space. Shape representation establishes the foundation for all subsequent modeling, determining how the object occupies and interacts with its environment. Common formulations include meshes used in finite element and finite volume methods (FEM/FVM), which discretize continuous domains for numerical analysis; point clouds capturing dense surface samples for 3D reconstruction and inspection; voxel or grid representations used in volumetric modeling and medical imaging; and parametric models such as CAD and Building Information Models (BIM) that encode both geometry and semantics. Neural fields, including Neural Radiance Fields (NeRF), neural Signed Distance Fields (SDF), and occupancy networks, further generalize geometry into continuous implicit functions, offering differentiable and compact scene representations. Together, these formulations provide the means to reconstruct, visualize, and simulate the spatial state of the physical world.

Topology and Connectivity. “How are its components connected?” Beyond local geometry, topology describes the relationships and connectivity that define structural integrity and motion constraints. In mechanical systems, mesh connectivity specifies adjacency relations between elements and supports stress or deformation analysis. In articulated systems such as robotic manipulators, kinematic chains describe hierarchical dependencies and degrees of freedom among joints and links. Assembly graphs further encode how individual parts interface or move relative to one another, enabling simulation of multi-body dynamics and structural coupling. Accurate topological modeling ensures that the digital twin preserves the structural logic of the physical system, allowing analyses such as load propagation, collision detection, and deformation tracking under physical constraints.
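As a small illustration of how a kinematic chain encodes hierarchical dependencies, the sketch below computes forward kinematics for a planar serial arm. Each joint angle is expressed relative to its parent link, so the pose of every link depends on all joints before it in the chain. The link lengths and angles are illustrative; real robot twins typically load this structure from a description format such as URDF and work in 3D with full rigid-body transforms.

```python
import math
from typing import List, Tuple


def forward_kinematics(link_lengths: List[float],
                       joint_angles: List[float]) -> List[Tuple[float, float]]:
    """Positions of each joint and of the end effector for a planar serial chain.

    Angles are relative to the parent link, so the absolute heading of link i is
    the cumulative sum of joint angles 0..i (the hierarchical dependency).
    """
    x, y, heading = 0.0, 0.0, 0.0
    points = [(x, y)]                       # base joint fixed at the origin
    for length, angle in zip(link_lengths, joint_angles):
        heading += angle
        x += length * math.cos(heading)
        y += length * math.sin(heading)
        points.append((x, y))
    return points


# Two links of 1.0 m and 0.5 m; shoulder at +90 degrees, elbow bent back by -90 degrees.
pts = forward_kinematics([1.0, 0.5], [math.pi / 2, -math.pi / 2])
print([(round(px, 3), round(py, 3)) for px, py in pts])
```

Changing only the shoulder angle moves both the elbow and the end effector, while changing the elbow angle leaves the elbow position untouched; this asymmetry is the parent-child structure that the twin must preserve.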

Physical Attributes. “What are its physical properties?” Geometry and topology alone cannot determine the system’s behavior without describing its intrinsic material and boundary properties. These attributes define how the physical entity responds to external forces, heat, or other environmental stimuli. Key descriptors include material properties such as density, elasticity, and viscosity; boundary conditions specifying loads, fixed supports, or fluid interfaces; thermal parameters including conductivity and specific heat; and initial conditions that define the starting state of fields such as temperature or velocity. Together, these quantities enable accurate numerical simulation and predictive modeling through governing physical equations. The inclusion of such physical attributes transforms geometric models from static visualizations into dynamic computational entities that mirror the real-world system’s mechanical, thermal, or electromagnetic responses.
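The sketch below shows how the attributes listed above enter a simulation. An explicit finite-difference solver for 1D heat conduction, $`\rho c_p\,\partial T/\partial t = k\,\partial^2 T/\partial x^2`$, takes density, specific heat, and conductivity as material properties, fixed end temperatures as (Dirichlet) boundary conditions, and a uniform initial temperature field. The material values (roughly those of aluminum), rod length, grid size, and duration are assumptions for illustration.

```python
from typing import List


def simulate_rod(k: float, rho: float, cp: float, length: float, n: int,
                 t_left: float, t_right: float, t_init: float,
                 duration: float) -> List[float]:
    """Explicit FTCS scheme for rho*cp*dT/dt = k*d2T/dx2 with fixed end temperatures."""
    diffusivity = k / (rho * cp)                 # thermal diffusivity [m^2/s]
    dx = length / (n - 1)
    dt = 0.4 * dx * dx / diffusivity             # satisfies stability: diffusivity*dt/dx^2 <= 0.5
    r = diffusivity * dt / (dx * dx)
    temp = [t_init] * n                          # initial condition
    temp[0], temp[-1] = t_left, t_right          # boundary conditions
    for _ in range(int(duration / dt)):
        interior = [temp[i] + r * (temp[i + 1] - 2 * temp[i] + temp[i - 1])
                    for i in range(1, n - 1)]
        temp = [t_left] + interior + [t_right]
    return temp


# Illustrative aluminum-like rod: 0.1 m long, ends held at 100 C and 20 C, initially at 20 C.
profile = simulate_rod(k=237.0, rho=2700.0, cp=900.0, length=0.1, n=21,
                       t_left=100.0, t_right=20.0, t_init=20.0, duration=600.0)
print([round(v, 1) for v in profile[::5]])
```

After ten minutes the rod has reached its linear steady-state profile; with a lower conductivity or a higher heat capacity, the same geometry would take longer to get there, which is precisely why these attributes must accompany the geometric model.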

Non-Geometric State Representation. Not all simulators require explicit geometry to capture system behavior. In many digital twins, the key dynamics arise from relationships, temporal patterns, or statistical dependencies rather than spatial form. Non-Geometric State Representations therefore describe systems through symbolic, relational, or feature-based structures, enabling modeling of processes where geometry is unavailable, irrelevant, or computationally unnecessary. Such representations are widely used in cyber-physical, social, and biological systems to support large-scale reasoning and prediction.

Feature-based Representation. Feature-based representations encode complex system states into compact numerical vectors, allowing efficient computation and integration across modalities. These embeddings can be extracted from text, images, and sensor measurements, or learned through self-supervised and multimodal models. Large-scale foundation models have further generalized this concept by learning unified latent spaces capable of representing diverse system behaviors and attributes. Within digital twin simulators, embeddings provide scalable interfaces for high-dimensional inference, supporting fast querying, cross-domain adaptation, and intelligent decision-making.

Time-Series Representation. Time-series representations describe how system states evolve over time. They are central to digital twins that monitor and forecast behaviors from continuous data streams, such as sensor signals, physiological measurements, or environmental variables. Recurrent, convolutional, and attention-based models, including RNNs, TCNs, and Transformers, have shown strong ability to capture both short- and long-term dependencies. In healthcare twins, these representations enable dynamic patient monitoring and disease progression modeling; in industrial twins, they support predictive maintenance and anomaly detection based on telemetry data.

Graph-based Representation. Graph-based representations model a system as a collection of entities and their interactions, providing a natural framework for describing relational dynamics. This formulation has proven effective for networked infrastructures such as transportation systems, supply chains, and energy grids, as well as for semantic and biomedical knowledge graphs. Graph Neural Networks (GNNs) extend these ideas by learning representations over structured dependencies, enabling simulation of flow, fault propagation, and system-level optimization.

Non-Geometric State Representations thus complement geometry-based models by focusing on relationships and dynamics rather than spatial fidelity. Together, they define two foundational paradigms for simulator construction: one grounded in physical form, the other in data and interaction. Modern digital twins often integrate both perspectives. For example, structural components can be modeled geometrically, while control, communication, or biological processes are represented through graphs or embeddings. This hybrid approach enables simulators to capture both the physical dynamics and semantic interactions of complex systems.

Behavior and Process Simulation

State-Space Simulation. State-space simulation represents one major direction for building simulators. The goal is to learn system behavior directly from data, treating simulation as function approximation that maps inputs to outputs or as sequence prediction that forecasts temporal evolution. Rather than solving governing equations explicitly, these models infer the underlying dynamics from observational evidence, allowing digital twins to emulate complex processes efficiently when analytical formulations are unavailable or computationally prohibitive.

Feedforward models such as MLPs, CNNs, and ResNets learn steady-state mappings between design or control variables and resulting performance indicators. They are often used as differentiable surrogates for engineering optimization, for instance, predicting aerodynamic lift from airfoil geometry, estimating heat dissipation from material parameters, or forecasting drug release rate from formulation properties. By replacing expensive finite-element simulations, such neural surrogates enable rapid design iterations and sensitivity analysis. When temporal dependencies dominate, recurrent and attention-based networks, including LSTMs, GRUs, and Transformers, capture the dynamic evolution of system states. These models treat simulation as sequence forecasting, learning how a process unfolds over time. In industrial digital twins, they are applied to predict sensor trajectories for fault detection; in healthcare, they model disease progression using patient time-series data; in transportation, they forecast multi-step traffic flow and congestion propagation across urban networks.

For systems with explicit spatial or relational structure, GNNs extend this paradigm by learning interactions among interconnected components. Each node represents a physical or logical entity, and edges describe dependencies such as force transmission or resource exchange. This allows GNN-based simulators to reproduce mesh deformation in mechanical systems, voltage propagation in power grids, and flow redistribution in transportation networks. Through message passing, GNNs enable digital twins to model how local perturbations collectively shape global dynamics. Beyond discrete representations, neural operators such as DeepONet and the Fourier Neural Operator (FNO) generalize state-space learning to functional mappings. Instead of approximating a single trajectory, they learn solution operators of partial differential equations, directly mapping boundary conditions or source terms to entire solution fields. Once trained, these operators provide fast, high-fidelity surrogates for simulating fluid flow, heat transfer, and material deformation, with reported speedups of up to several orders of magnitude over traditional numerical solvers.
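A minimal, hand-weighted sketch of message passing on a small graph: in each round, every node sums messages from its neighbors and updates its state. In a learned GNN simulator, the message and update functions would be trained neural networks; here they are fixed linear rules (an assumption), chosen so that repeated rounds behave like a local perturbation, such as a load imbalance, spreading through the network. The graph, coupling weight, and number of rounds are illustrative.

```python
from typing import Dict, List

Graph = Dict[str, List[str]]


def message(sender_state: float, receiver_state: float) -> float:
    """Hypothetical message function: flow proportional to the state difference."""
    return 0.2 * (sender_state - receiver_state)


def update(state: float, aggregated: float) -> float:
    """Hypothetical update function: add the summed incoming messages."""
    return state + aggregated


def message_passing_step(graph: Graph, states: Dict[str, float]) -> Dict[str, float]:
    """One synchronous round: every node aggregates (sums) messages from its neighbors."""
    new_states = {}
    for node, neighbors in graph.items():
        aggregated = sum(message(states[nb], states[node]) for nb in neighbors)
        new_states[node] = update(states[node], aggregated)
    return new_states


# Undirected network A-B-C-D with a branch B-E (adjacency lists list both directions).
graph = {"A": ["B"], "B": ["A", "C", "E"], "C": ["B", "D"], "D": ["C"], "E": ["B"]}
states = {"A": 1.0, "B": 0.0, "C": 0.0, "D": 0.0, "E": 0.0}  # perturbation injected at A
history = [states]
for _ in range(3):
    states = message_passing_step(graph, states)
    history.append(states)
```

Each round extends the perturbation by exactly one hop (D, three hops from A, first changes in round three), which is why the number of message-passing layers bounds how far information propagates in a single GNN forward pass.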

In summary, state-space simulation focuses on learning how systems respond and evolve, either by approximating steady mappings or by predicting temporal sequences. From predicting equipment failure to optimizing engineering designs and accelerating physical simulations, this approach forms a central computational pathway for building intelligent digital twins that learn, adapt, and generalize across physical domains.

Visual World Simulation. Recent advances in generative AI have introduced a new paradigm for simulation by directly generating realistic visual observations of the world rather than explicitly modeling its internal states. In this view, predicting future frames or synthesizing dynamic scenes becomes equivalent to simulating the evolution of the physical environment. Such world simulators enable embodied agents, including robots and autonomous vehicles, to learn, plan, and interact within controllable, data-driven virtual worlds without relying solely on costly physical experiments.

Video diffusion models have emerged as one of the most powerful frameworks for generative simulation. Leveraging large-scale video datasets and diffusion-based architectures, these models can synthesize high-fidelity, temporally coherent videos that approximate real-world physics and dynamics. For instance, VideoComposer introduces a compositional video synthesis framework conditioned on text, spatial layout, and temporal cues, allowing precise control over generated motion and scene composition. DynamiCrafter extends this capability by animating still images through motion priors learned from text-to-video diffusion models, effectively turning static scenes into dynamic simulations. Most notably, Sora demonstrates the potential of text-to-video diffusion models as general-purpose world simulators, generating photorealistic videos with largely plausible physical dynamics from natural language descriptions.

While realism is critical, controllability remains essential for simulation-driven learning. Several recent studies have focused on integrating structured control into video generation models to produce task-relevant, interactive environments. Seer introduces a frame-sequential text decomposer that translates global instructions into temporally aligned sub-instructions, enhancing fine-grained control over the resulting video trajectories. Video Adapter provides a lightweight adaptation mechanism for large pre-trained video diffusion models, enabling efficient domain customization without full fine-tuning. At a larger scale, the Cosmos World Foundation Model Platform offers an integrated framework for constructing controllable video-based world models, including video curation pipelines, tokenizers, and pre-trained foundation simulators for physical AI applications such as robotics and autonomous driving.

Beyond pure video generation, interactive world models like Genie and its successor Genie 2 extend generative simulation into embodied, action-controllable 3D environments. These systems can generate playable, open-ended virtual worlds conditioned on text, sketches, or other multimodal prompts, enabling autonomous agents to learn and act within dynamic visual environments.

Video generation as world simulation thus represents a paradigm shift from explicit physics-based modeling to observation-driven synthesis. By learning the visual and temporal structure of reality, such models offer controllable, scalable, and photorealistic environments for training, testing, and reasoning. Within digital twin systems, they bridge simulation and perception, allowing virtual agents to both observe and interact with realistic representations of the physical world.

Simulator Visualization

Scene Modeling

In digital twins, scene modeling supports visualization by defining how the simulated world appears in three-dimensional space. Once the simulator engine has been built to model system behavior, visualization focuses on reconstructing or synthesizing the world’s visible structure; in other words, scene modeling determines what the twin “looks like.” It represents the spatial configuration and visual appearance of real or virtual environments, increasingly through radiance field representations that describe how light is emitted and absorbed at each point in space. With advances in neural rendering, data-driven methods can now recover highly detailed and spatially consistent 3D and even 4D scenes directly from multi-view images or sensor data. This section introduces two main directions in this field: large-scale static scene reconstruction and dynamic scene modeling.
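In NeRF-style radiance fields, a pixel color is obtained by numerically integrating density and color along the camera ray: each sample contributes its color weighted by its opacity times the transmittance of everything in front of it. The sketch below implements only that quadrature, assuming densities and colors are already given as arrays; in NeRF they would come from an MLP queried at sampled positions and view directions, which is omitted here, and `render_ray` is a hypothetical name.

```python
import numpy as np


def render_ray(sigmas, colors, t_edges):
    """NeRF-style quadrature of the volume rendering integral along one ray.

    sigmas: (N,) densities, colors: (N, 3) RGB values at the N samples,
    t_edges: (N + 1,) boundaries of the ray segments.
    """
    deltas = np.diff(t_edges)                   # segment lengths delta_i
    alphas = 1.0 - np.exp(-sigmas * deltas)     # probability the ray terminates in segment i
    # Transmittance T_i = exp(-sum_{j<i} sigma_j * delta_j): light surviving to segment i
    optical_depth = np.concatenate([[0.0], np.cumsum(sigmas * deltas)[:-1]])
    transmittance = np.exp(-optical_depth)
    weights = transmittance * alphas
    return weights @ colors, weights            # pixel color and per-sample weights


t_edges = np.linspace(0.0, 4.0, 65)
sigmas = np.full(64, 0.5)                       # homogeneous fog
colors = np.tile([1.0, 0.0, 0.0], (64, 1))      # uniformly red medium
rgb, weights = render_ray(sigmas, colors, t_edges)
```

The weights telescope to $`1 - \exp(-\sum_i \sigma_i \delta_i)`$, so for this homogeneous medium they sum to $`1 - e^{-2} \approx 0.865`$. Since every operation is differentiable, a photometric loss on rendered pixels can be back-propagated to the field that produced `sigmas` and `colors`.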

Static Scene Reconstruction. Scaling neural rendering techniques to large urban environments has been a significant focus of recent research. These methods aim to capture the complexity of city-scale scenes while maintaining high visual fidelity and efficient rendering. Block-NeRF introduces a variant of NeRF that can represent large-scale environments by decomposing the scene into individually trained NeRFs. This approach decouples rendering time from scene size, enabling rendering to scale to arbitrarily large environments. Urban Radiance Fields extends NeRF to handle asynchronously captured lidar data and to address exposure variation between captured images, producing state-of-the-art 3D surface reconstructions and high-quality novel views for street scenes. TensoRF proposes modeling the radiance field as a 4D tensor and introduces vector-matrix decomposition to achieve fast reconstruction with better rendering quality and smaller model size compared to NeRF. NeRF in the Wild addresses the challenges of unconstrained photo collections, enabling accurate reconstructions from internet photos of famous landmarks. More recent works have focused on further improving the scalability and quality of large-scale scene reconstruction. K-Planes introduces a white-box model for radiance fields using planes to represent $`d`$-dimensional scenes, providing a seamless way to go from static to dynamic scenes. BungeeNeRF achieves level-of-detail rendering across drastically varied scales, addressing the challenges of extreme multi-scale scene rendering. Global-guided Focal Neural Radiance Field proposes a two-stage architecture to achieve high-fidelity rendering of large-scale scenes while maintaining scene-wide consistency. CityGaussian employs a novel divide-and-conquer training approach and a Level-of-Detail strategy for efficient large-scale 3D Gaussian Splatting training and rendering.
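The memory savings behind TensoRF's vector-matrix (VM) decomposition can be seen by rebuilding a 3D density grid as a sum of outer products between one-axis vectors and two-axis matrices. The sketch below is a simplified illustration under stated assumptions: it reconstructs only a density grid from random factors, whereas TensoRF also factorizes appearance features, queries the factors by interpolation, and decodes color with a small network. The function names are hypothetical.

```python
import numpy as np


def vm_density_grid(vecs, mats):
    """Dense density grid from vector-matrix (VM) components.

    vecs = (v_x, v_y, v_z) with shapes (R, X), (R, Y), (R, Z);
    mats = (M_yz, M_xz, M_xy) with shapes (R, Y, Z), (R, X, Z), (R, X, Y).
    """
    v_x, v_y, v_z = vecs
    m_yz, m_xz, m_xy = mats
    return (np.einsum("rx,ryz->xyz", v_x, m_yz)
            + np.einsum("ry,rxz->xyz", v_y, m_xz)
            + np.einsum("rz,rxy->xyz", v_z, m_xy))


def vm_param_count(nx, ny, nz, rank):
    """Stored values for the VM factors versus nx * ny * nz for a dense grid."""
    return rank * (nx + ny + nz + ny * nz + nx * nz + nx * ny)


rng = np.random.default_rng(0)
R, X, Y, Z = 2, 4, 5, 6
vecs = (rng.normal(size=(R, X)), rng.normal(size=(R, Y)), rng.normal(size=(R, Z)))
mats = (rng.normal(size=(R, Y, Z)), rng.normal(size=(R, X, Z)), rng.normal(size=(R, X, Y)))
grid = vm_density_grid(vecs, mats)  # (4, 5, 6)
```

For a $`128^3`$ grid with rank 8, `vm_param_count` gives 396,288 stored values against 2,097,152 for the dense grid, which illustrates why factorized fields yield smaller models.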

Dynamic Scene Modeling. Extending static representations to model dynamic scenes with moving objects has been another important direction in neural rendering research. These methods aim to capture both the spatial and temporal aspects of complex real-world environments. 4D Gaussian Splatting approximates the underlying spatio-temporal 4D volume of a dynamic scene by optimizing a collection of 4D primitives, enabling real-time rendering of complex dynamic scenes. Scalable Urban Dynamic Scenes (SUDS) introduces a factorized scene representation using separate hash table data structures to efficiently encode static, dynamic, and far-field radiance fields. Street Gaussians proposes a novel pipeline for modeling dynamic urban street scenes, using a combination of static and dynamic 3D Gaussians with optimizable tracked poses for moving objects. Deformable 3D Gaussians introduces a method that reconstructs scenes using 3D Gaussians and learns them in canonical space with a deformation field to model monocular dynamic scenes. DynMF presents a compact and efficient representation that decomposes a dynamic scene into a few neural trajectories, allowing for real-time view synthesis of complex dynamic scene motions. Multi-Level Neural Scene Graphs proposes a novel, decomposable radiance field approach for dynamic urban environments, using a multi-level neural scene graph representation that scales to thousands of images with hundreds of fast-moving objects.
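Several of these dynamic methods share one idea: keep primitives in a canonical space and move them with a compact motion model. The sketch below illustrates the low-rank variant associated with trajectory-basis approaches such as DynMF, where every point's displacement is a per-point mix of a few shared trajectories. As a simplifying assumption, the basis trajectories are fixed sinusoids along random directions rather than learned neural trajectories; `basis_trajectories` and `animate` are hypothetical names.

```python
import numpy as np


def basis_trajectories(t, directions):
    """K shared 3D basis trajectories sampled at times t in [0, 1].

    Hypothetical choice: basis k moves along directions[k] with amplitude sin((k + 1) * pi * t).
    Returns shape (T, K, 3).
    """
    k = np.arange(1, directions.shape[0] + 1)
    amplitude = np.sin(np.pi * np.outer(t, k))               # (T, K)
    return amplitude[:, :, None] * directions[None, :, :]


def animate(canonical, coeffs, basis):
    """Point positions over time: canonical positions plus a per-point mix of shared bases.

    canonical: (N, 3); coeffs: (N, K); basis: (T, K, 3). Returns (T, N, 3).
    """
    displacement = np.einsum("nk,tkd->tnd", coeffs, basis)
    return canonical[None, :, :] + displacement


rng = np.random.default_rng(0)
N, K = 1000, 4
canonical = rng.normal(size=(N, 3))        # e.g. Gaussian centers in canonical space
coeffs = rng.normal(size=(N, K))           # per-point motion coefficients
directions = rng.normal(size=(K, 3))
t = np.linspace(0.0, 1.0, 30)
positions = animate(canonical, coeffs, basis_trajectories(t, directions))
```

Stacking all displacements into an $`N \times 3T`$ matrix gives rank at most $`K`$, so the motion of 1,000 points over 30 frames is described by $`N \cdot K`$ coefficients plus $`K`$ shared trajectories.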

Interactive Visualization and Interfaces

While scene modeling establishes the structural and visual substrate of the virtual environment, interactive visualization determines how humans and AI agents see, explore, and make sense of that environment. This layer transforms simulated or reconstructed worlds into perceivable, analyzable views, supporting situational awareness, hypothesis testing, and collaborative understanding through intuitive visual interfaces.

Immersive and Real-Time Visualization. Advances in rendering pipelines and GPU acceleration now allow digital twins to achieve photorealistic, real-time visualization of large-scale environments. Neural rendering techniques such as 3D Gaussian Splatting make it possible to maintain interactive frame rates without sacrificing fidelity, while compact multiresolution hash encodings such as Instant-NGP further improve efficiency for view synthesis. Immersive visualization systems, including AR/VR headsets and CAVE displays, bring depth perception and spatial presence to users, enabling intuitive exploration through gestures, motion tracking, or gaze-based control. Such immersive systems enhance human perception and understanding in domains such as surgical simulation, smart city management, and industrial training.
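Part of what keeps Gaussian Splatting interactive is that each pixel is shaded by a simple front-to-back alpha composite over depth-sorted splats, with cheap skipping and early termination. The sketch below composites one pixel from Gaussians that are assumed to be already projected to screen space (means and 2D covariances given); the GPU tiling and sorting of the real pipeline are omitted, and the thresholds `alpha_min`, `alpha_max`, and `t_min` are illustrative assumptions rather than values quoted from any implementation.

```python
import numpy as np


def composite_pixel(pixel, means2d, covs2d, opacities, colors, depths,
                    alpha_min=1.0 / 255.0, alpha_max=0.99, t_min=1e-4):
    """Front-to-back alpha compositing of screen-space 2D Gaussians at one pixel.

    Thresholds are illustrative: skip near-transparent splats, clamp opacity,
    and stop once the remaining transmittance is negligible.
    """
    rgb = np.zeros(3)
    transmittance = 1.0
    for i in np.argsort(depths):                     # nearest splat first
        d = pixel - means2d[i]
        falloff = np.exp(-0.5 * d @ np.linalg.solve(covs2d[i], d))
        alpha = min(alpha_max, opacities[i] * falloff)
        if alpha < alpha_min:
            continue
        rgb += transmittance * alpha * colors[i]
        transmittance *= 1.0 - alpha
        if transmittance < t_min:                    # early termination
            break
    return rgb, transmittance


pixel = np.array([0.0, 0.0])
means2d = np.zeros((3, 2))
means2d[2] = [50.0, 50.0]                            # far from the pixel: skipped
covs2d = np.tile(np.eye(2), (3, 1, 1))
opacities = np.array([0.5, 0.5, 0.9])
colors = np.array([[0.0, 1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]])
depths = np.array([2.0, 1.0, 0.5])                   # red splat (index 1) is in front of green
rgb, remaining = composite_pixel(pixel, means2d, covs2d, opacities, colors, depths)
```

Here the red splat covers half of the pixel and the green splat behind it receives half of the remaining light, giving `rgb` of `[0.5, 0.25, 0.0]` with transmittance 0.25 left over.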

Interactive Dashboards and Visual Analytics. Beyond immersion, digital twins rely on interactive dashboards and visual analytics tools to organize and interpret simulation results and live sensor data. These interfaces integrate predictive models, streaming information, and diagnostic views into a unified visual layer that supports real-time monitoring and reasoning. In practice, they appear as 3D monitoring dashboards for smart factories, preoperative visualization systems for surgical planning, or operational control centers that display traffic flow and energy distribution for situational analysis. Recent cloud-based visualization frameworks further allow distributed teams to collaboratively explore, annotate, and interpret digital twin environments through synchronized visual interfaces.

Intervening in the Physical Twin via the Digital Twin

Predicting Physical Behavior

Prediction modeling is a fundamental aspect of digital twin systems, allowing for the forecasting of future conditions and behaviors based on current and historical data. In digital twin systems, which create virtual replicas of physical entities, predictive models can forecast various scenarios such as equipment malfunctions, performance degradation, and system anomalies. These predictions are essential for optimizing performance, preventing unexpected issues, and ensuring the efficient operation of physical assets. Leveraging AI techniques such as machine learning and deep learning, digital twins can analyze extensive data to predict trends, detect anomalies, and make decisions in real time. This section covers two parts: 1) the fundamentals of prediction modeling in digital twin systems; 2) the predictive tasks these models support.
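One common way a twin's predictions turn into anomaly detection is residual monitoring: compare each live measurement with the twin's prediction and flag residuals that fall far outside what was seen during nominal operation. The sketch below uses a simple z-score rule calibrated on a nominal run; the sinusoidal "twin prediction", the noise level, the injected fault, and the threshold `k=3.0` are all hypothetical choices for illustration.

```python
import numpy as np


def fit_residual_stats(measured, predicted):
    """Calibrate on nominal operation: mean and spread of twin residuals."""
    residual = measured - predicted
    return residual.mean(), residual.std()


def flag_anomalies(measured, predicted, mu, sd, k=3.0):
    """Flag samples whose residual lies more than k standard deviations from nominal."""
    z = (measured - predicted - mu) / sd
    return np.abs(z) > k


rng = np.random.default_rng(7)
t = np.linspace(0.0, 10.0, 200)
twin_prediction = np.sin(t)                          # hypothetical twin forecast of a sensor

nominal = twin_prediction + rng.normal(0.0, 0.1, size=t.size)
mu, sd = fit_residual_stats(nominal, twin_prediction)

live = twin_prediction + rng.normal(0.0, 0.1, size=t.size)
live[50] += 2.0                                      # injected fault
flags = flag_anomalies(live, twin_prediction, mu, sd)
```

Because the threshold is set relative to the twin's own nominal error, a more accurate twin directly translates into tighter bounds and earlier detection.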

Prediction Modeling Fundamentals

Prediction modeling is a cornerstone of digital twin systems, providing the capability to forecast future states and behaviors of physical entities based on current and historical data. This capability is critical for optimizing operations, planning maintenance, and improving the overall performance and reliability of the system. This section introduces the concept of prediction modeling, provides a high-level mathematical definition, and presents relevant examples within digital twin systems.

Definition of Prediction Modeling. Prediction modeling in a digital twin system involves forecasting future states $`\mathbf{x}_{t+T} \in \mathcal{X}`$ using observed data $`\mathbf{X}_t \subseteq \mathcal{X}`$ and a prediction function $`\mathcal{F}`$. Formally, given a set of observed states $`\mathbf{X}_t`$ up to the current time $`t`$, the goal is to estimate the future state $`\hat{\mathbf{x}}_{t+T}`$ at a future time $`t+T`$ as $`\hat{\mathbf{x}}_{t+T} = \mathcal{F}(\mathbf{X}_t, T)`$, where $`\mathbf{X}_t = \{\mathbf{x}_{t_1}, \mathbf{x}_{t_2}, \ldots, \mathbf{x}_t\}`$ represents the historical data up to the current time $`t`$, and $`T`$ is the prediction horizon, representing the time interval into the future for which the prediction is made. The state space $`\mathcal{X}`$ includes all possible states of the system, encompassing various sensor readings, operational conditions, and performance metrics. The observed data $`\mathbf{X}_t`$ are the recorded states that serve as input to $`\mathcal{F}`$; historical windows paired with their realized future states form the training data. The function $`\mathcal{F}`$ employs machine learning, deep learning, or statistical methods to predict future states based on historical and current data. To optimize the predictive function $`\mathcal{F}`$, the objective is to minimize a loss function $`\mathcal{L}`$ that captures the difference between the predicted state $`\hat{\mathbf{x}}_{t+T}`$ and the actual future state $`\mathbf{x}_{t+T}`$ as:

$$\mathcal{L} = \mathbb{E}\left[\ell\left(\mathbf{x}_{t+T}, \hat{\mathbf{x}}_{t+T}\right)\right],$$

where $`\ell`$ is a task-specific loss function (e.g., the squared error $`\ell(\mathbf{x}, \hat{\mathbf{x}}) = \lVert \mathbf{x} - \hat{\mathbf{x}} \rVert_2^2`$ in regression tasks, whose expectation gives the mean squared error).
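To make the definition concrete, the sketch below instantiates $`\mathcal{F}(\mathbf{X}_t, T)`$ as a linear autoregressive model over the last `p` observations, fitted separately for a fixed horizon `T` by least squares, which minimizes the empirical squared-error version of $`\mathcal{L}`$. This is a deliberately simple stand-in for the machine learning and deep learning models discussed in the text, with a scalar state for readability; the sinusoidal "sensor signal" and the function names are hypothetical.

```python
import numpy as np


def make_windows(series, p, horizon):
    """Pairs (last p observations up to time t, value at time t + horizon)."""
    inputs, targets = [], []
    for end in range(p, len(series) - horizon + 1):
        inputs.append(series[end - p:end])           # X_t: window ending at index end - 1
        targets.append(series[end - 1 + horizon])    # x_{t+T}
    return np.array(inputs), np.array(targets)


def fit_direct_forecaster(series, p, horizon):
    """Least-squares fit of a linear F for one fixed horizon (minimizes the squared-error loss)."""
    inputs, targets = make_windows(series, p, horizon)
    coef, *_ = np.linalg.lstsq(inputs, targets, rcond=None)
    return coef


def forecast(history, coef):
    """Estimate x_{t+T} from the most recent len(coef) observations."""
    return history[-len(coef):] @ coef


def mse(actual, predicted):
    return np.mean((np.asarray(actual) - np.asarray(predicted)) ** 2)


series = np.sin(0.3 * np.arange(300))                # hypothetical scalar sensor signal
p, horizon = 4, 5
coef = fit_direct_forecaster(series[:200], p, horizon)

test_inputs, test_targets = make_windows(series[200:], p, horizon)
test_loss = mse(test_targets, test_inputs @ coef)
next_value = forecast(series, coef)                  # x_{t+T} beyond the end of the data
```

A pure sinusoid obeys an exact linear recurrence, so this toy forecaster reaches near-zero held-out loss; real sensor data would call for noise-aware models and a validation split chosen to respect time order.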

Predictive Tasks

In digital twin systems, prediction models play a crucial role in maintaining the reliability, efficiency, and performance of both physical and cyber components. By leveraging advanced AI techniques, these models enable continuous monitoring and analysis of complex data, identifying patterns and forecasting potential issues before they manifest. The primary types of prediction in digital twin systems can be broadly categorized into two main areas: 1) Real-time Decision Making; 2) Predictive Maintenance. The following sub-sections will delve into these categories, outlining the key methods and tasks to highlight their importance and effectiveness in digital twin systems.