Predicting river flow in places without streamflow records is challenging because basins respond differently to climate, terrain, vegetation, and soils. Traditional basin attributes describe some of these differences, but they cannot fully represent the complexity of natural environments. This study examines whether AlphaEarth Foundation embeddings, which are learned from large collections of satellite images rather than designed by experts, offer a more informative way to describe basin characteristics. These embeddings summarize patterns in vegetation, land surface properties, and long-term environmental dynamics. We find that models using them achieve higher accuracy when predicting flows in basins not used for training, suggesting that they capture key physical differences more effectively than traditional attributes. We further investigate how selecting appropriate donor basins influences prediction in ungauged regions. Similarity based on the embeddings helps identify basins with comparable environmental and hydrological behavior, improving performance, whereas adding many dissimilar basins can reduce accuracy. The results show that satellite-informed environmental representations can strengthen hydrological forecasting and support the development of models that adapt more easily to different landscapes.

Accurate prediction in ungauged basins remains one of the most persistent challenges in hydrology. The Prediction in Ungauged Basins (PUB) initiative launched in 2003 reframed this issue as a decade-long community effort to improve process understanding, quantify uncertainties, and develop models capable of transferring information across basins with sparse or nonexistent observations (Hrachowitz et al., 2013;Sivapalan et al., 2003). A long-standing strategy for PUB is regionalization, in which model parameters or training data for an ungauged target basin are borrowed from gauged donor basins. This process relies fundamentally on the hypothesis that hydrological behaviors can be transferred between locations that share high similarity in their physical and climatic characteristics (Pool et al., 2021). Although foundational studies have demonstrated the potential of these donor-selection schemes, they also show that prediction performance is sensitive to how similarity is defined and implemented (Oudin et al., 2008).

Deep learning has reshaped the landscape of regional rainfall-runoff modeling, as Long Short-Term Memory (LSTM) models (Hochreiter and Schmidhuber, 1997) trained on large collections of basins have demonstrated strong information sharing and generalization capacity, achieving impressive performance even in ungauged settings (Fang et al., 2022;Kratzert et al., 2019a). This success has led to the prevailing view that multi-basin learning should supplant single-basin training (Kratzert et al., 2024). However, the tendency to continually enlarge the training dataset introduces an important limitation. Simply expanding the training set does not always yield better predictions for every catchment. Evidence from studies covering more than 3,000 U.S. basins indicates that pooling all basins into a single training set can be suboptimal, largely because of the substantial hydrological heterogeneity among nonreference basins affected by human activities (Ouyang et al., 2021). Critically, even within the carefully curated CAMELS dataset (Addor et al., 2017), a benchmark collection of reference basins with minimal human impact, training a regional deep learning model on all available data without proper screening can degrade performance at the local scale (Nai et al., 2024;Yu et al., 2024). Thus, identifying a small yet informative set of training basins remains an underexplored but highly influential lever for improving PUB performance.

The challenge of selecting these informative donors has traditionally relied on distance-based similarity analysis using readily quantifiable catchment descriptors (He et al., 2011). These hand-crafted attributes spanning physiographic, climatic, and geological factors, are typically evaluated individually or combined into composite metrics such as standardized Euclidean distance. However, conventional descriptors alone are often inadequate (Tarasova et al., 2024). Hydrological processes can vary sharply over space, meaning that spatial proximity only loosely reflects functional similarity. Furthermore, these static attributes are often too sparse to capture the complex, dynamic nature of catchment behavior, resulting in distance metrics that do not map effectively onto true hydrological similarity.

The integration of deep learning has introduced sophisticated approaches to overcome the limitations of traditional, hand-crafted similarity measures. Early studies demonstrated that regional LSTM models could implicitly exploit static attributes to infer streamflow generation mechanisms (Kratzert et al., 2019b). Recent developments take a more explicit approach by deriving improved similarity rules directly from data. One line of work defines similarity through the classification of streamflow generation mechanisms based on hydrological signatures, thereby grouping catchments with more homogeneous behavior (Yu et al., 2024). Another, more data-driven direction introduces dynamic basin-affinity metrics that quantify how the learning gradient from one basin affects the loss function of a target basin. This allows for the automatic identification of training subsets that positively contribute to the target model while filtering out “mutual noise” (Nai et al., 2024).

These emerging task-specific similarity definitions represent a significant advance. However, they define similarity primarily through the lens of the rainfall-runoff model itself (e.g., via gradients or behavioral signatures). This raises a fundamental question: can we find a task-agnostic similarity definition that is as rich and data-driven as these learned metrics, yet as physically grounded as traditional catchment descriptors? The core limitation of conventional catchment descriptors lies not in the concept of using physical attributes, but in their execution: the attributes were sparse, hand-crafted, and failed to capture the complex, integrated spatio-temporal dynamics of the land surface, such as vegetation phenology, surface moisture patterns, and land-cover heterogeneity. This specific challenge, capturing the integrated physical state of the land surface in a dense, generalpurpose representation, is precisely the promise offered by large-scale Earth Observation (EO) Foundation Models (FMs) (Lacoste et al., 2023). While the deep learning approaches previously discussed are powerful, they are fundamentally constrained by their supervised training on rainfall-runoff tasks within a limited set of gauged basins. Consequently, their learned relationships remain inherently local to that specific dataset and task, potentially limiting generalization to novel hydrological regimes. FMs offer a paradigm shift. These self-supervised models are pre-trained on massive archives of unlabeled, multi-modal geospatial data, scaling from terabytes to even petabytes, covering the entire globe (Mai et al., 2024). Critically, FMs are not trained on direct streamflow labels; instead, they learn the global intrinsic patterns and relationships within the Earth system data itself (e.g., phenology, surface moisture, land cover changes) (Kraabel et al., 2025). Through this self-supervised, global pre-training, FMs distill complex environmental signals into general-purpose “embeddings” (Lacoste et al., 2021) that describe each location with a rich, transferable representation unavailable to supervised, task-specific models. Notable examples include SatMAE (Cong et al., 2022), which leverages masked autoencoders (MAE) (He et al., 2022) to learn representations from temporal and multi-spectral satellite imagery, and Prithvi-EO-2.0 (Szwarcman et al., 2025), a multi-temporal Geospatial Foundation Model (GFM) trained on millions of samples from Harmonized Landsat and Sentinel-2 (HLS) data. These GFMs provide highdimensional vectors, such as the 256-dimensional SatCLIP location embeddings (Klemmer et al., 2025), that implicitly encode a location’s visual and environmental characteristics, like climate zones or land use patterns, enabling the identification of functionally similar locations even when they are geographically distant.

A prominent, publicly accessible model that operationalizes this concept is Google DeepMind’s AlphaEarth Foundations (AEF) (Brown et al., 2025). Hosted on Earth Engine, AEF provides publicly accessible, 64-dimensional satellite embeddings (annual, 2017-2024) that serve as analysis-ready representations. While these embeddings lack explicit hydrologic semantics, they offer a dense, rich context of the environmental state. Consequently, AEF presents a novel and potentially powerful alternative to traditional hand-crafted attributes for defining basin similarity, opening a new avenue to enhance streamflow prediction in ungauged basins.

This study first aims to evaluate the intrinsic value of AEF embeddings by assessing their performance in direct streamflow prediction under both in-sample (IS) and out-of-sample (OOS) conditions (Heudorfer et al., 2025). By directly integrating these embeddings into a deep learning model for streamflow prediction, we can quantify their inherent ability to capture and represent complex hydrological information, thereby demonstrating their fundamental utility and predictive power as a data source. Following this, we address the PUB setting by fixing a single target basin and constructing its training set exclusively from other basins chosen by a similarity rule. We evaluate three operational definitions of similarity: (i) cosine similarity computed from catchment attributes; (ii) cosine similarity on a model-learned fusion embedding obtained from the pre-LSTM fully connected layer that ingests hydrometeorological time series together with catchment attributes; and (iii) cosine similarity computed from AEF satellite embeddings. Using a large-sample benchmark (e.g., CAMELS) to standardize inputs and evaluation, we ask: which similarity yields better ungauged predictions? Crucially, this investigation includes a scaling analysis to examine how predictive performance evolves as the number of donor basins increases. This allows us to test whether the high-resolution environmental information embedded in foundation model representations supports more data-efficient learning and enables better generalization from smaller but more coherent donor sets compared with larger, more heterogeneous collections. Finally, we investigate the physical structure of the AEF embedding space to better understand the hydrological factors that shape these data-driven representations.

The remainder of the paper is organized as follows. Section 2 introduces the datasets (hydrometeorological time series, catchment attributes, and AEF embeddings), the model architecture and similarity computations, and the evaluation metrics. Section 3 details the experimental design for PUB, including target selection, donor selection under the three similarity definitions, training protocols, and ablation settings. Section 4 presents comparative analysis, focusing on predictive skill and the sensitivity of performance to training set size. Section 5 discusses the physical interpretability of the embeddings and the critical trade-off between discriminative precision and cross-regime generalization. Finally, Section 6 concludes the study and outlines directions for future work.

We used all 671 catchments from the CAMELS dataset as experimental data. For dynamic input data we used precipitation, daylength, shortwave radiation, minimum/maximum temperature, vapor pressure and potential evapotranspiration from the daymet (Thornton et al., 2016) data set in the CAMELS dataset. These features were subsequently fed into two model variants utilized in this study. One model variant utilized a set of 17 physiographic catchment attributes derived from the CAMELS dataset. This feature set is consistent with that employed by Feng, et al. (2020). Given the exhaustive description of these features provided in that prior work, we refrain from further discussion here. Another model variant utilized a 64dimensional embedding vector sourced from the Satellite Embedding dataset generated by the AEF.

AEF derives its representations from a vast archive of global satellite imagery, utilizing sensors such as Sentinel-1/2 and Landsat to cover the Earth’s land surface. Rather than training on raw time series indiscriminately, the model employs a globally balanced sampling grid. This strategy ensures that the learned features meaningfully reflect the diversity of Earth’s biomes, climates, and land-surface conditions. Through unified training pipelines, AEF condenses these multi-temporal and multi-spectral inputs into compact embedding vectors. These representations capture both fine spatial details and broader environmental patterns, effectively summarizing complex land-surface dynamics, including vegetation phenology and surface texture, into a low-dimensional format.

The final output consists of 64-dimensional embedding vectors generated at a 10-meter spatial resolution, spanning the eight-year period from 2017 to 2024. We acknowledge the temporal mismatch between this window and the standard CAMELS period . However, we treat these embeddings as static attributes based on the assumption that the fundamental physiographic characteristics they encode, including topography and dominant vegetation patterns, remain sufficiently stable over this timeframe. Furthermore, since the hydrological model training and testing phases predate the satellite observations, this setup inherently precludes any risk of data leakage. For each study basin, we obtained a single representative vector by averaging the pixel-level embeddings across both spatial and temporal dimensions.

For a foundation model, a general usage is to use its output as the input for downstream applications (the foundation model itself is the upstream foundation) (Szwarcman et al., 2025). Hence, a simple usage is to replace the raw features used in the original training set by the 64-dimensional AEF embedding vectors. These vectors are then concatenated with the corresponding ground-truth labels and fed into the model for training. During the testing phase, the embedding vectors extracted from the test set are input to the trained model to facilitate the prediction of target labels. This approach effectively utilizes the highly compact and semantically rich representations learned by AEF as a foundational feature set, significantly simplifying the input feature engineering for various downstream geospatial tasks.

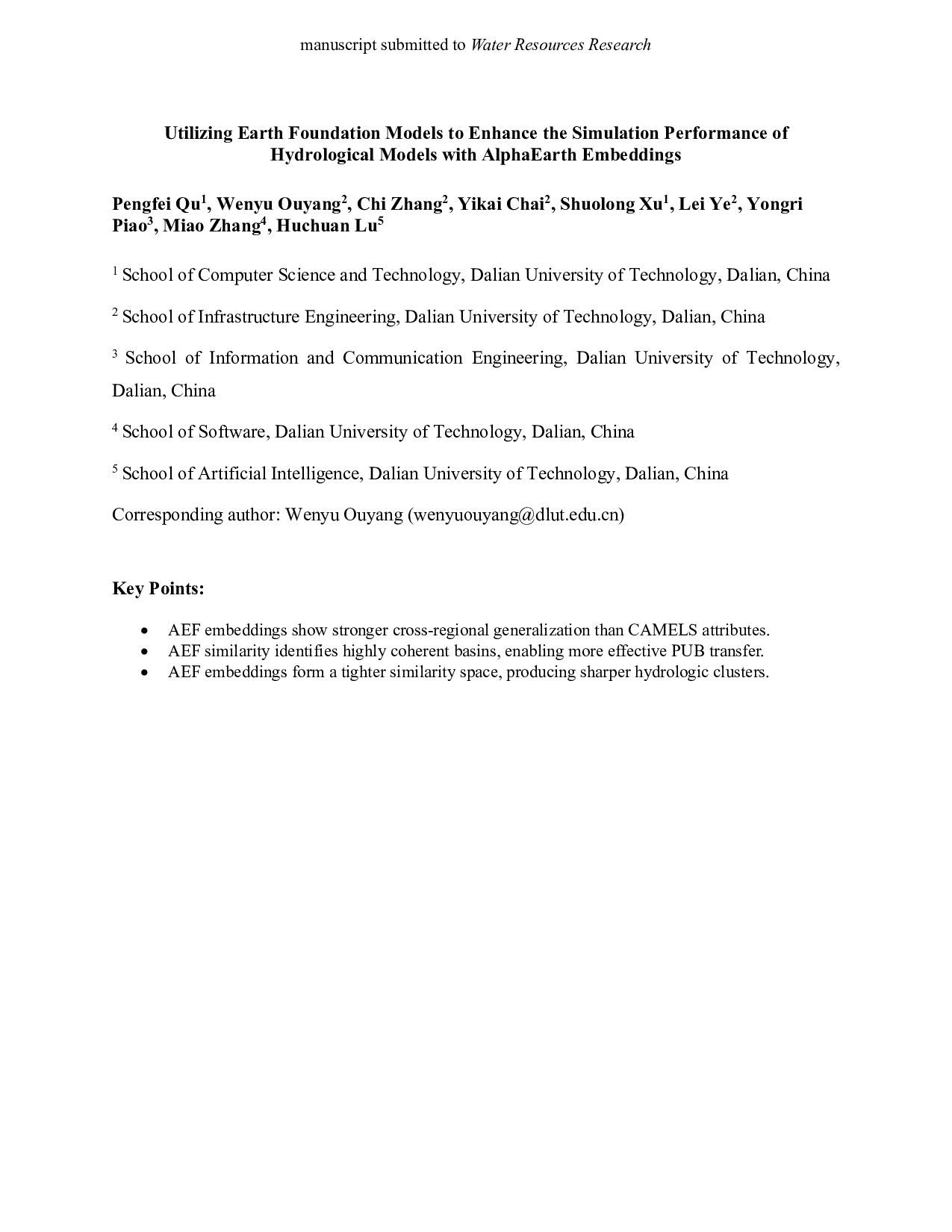

The LSTM network is a type of recurrent neural network that incorporates dedicated memory cells capable of storing information over extended time periods. Information flow within the LSTM is controlled by a specific configuration of operations known as gates. These memory cells are, in essence, analogous to the state vector within a traditional dynamical systems model, positioning LSTMs as a potentially ideal candidate for modeling complex dynamical systems. Compared to other recurrent neural network architectures, LSTMs mitigate the issues of exploding and vanishing gradients, which enables them to effectively learn long-term dependencies between input and output features. All experiments in this study utilized a simple LSTM model and multilayer perceptron that serves as a feature transformation component (Figure 1). The MLP is composed of fully connected layers with nonlinear activation functions, enabling it to bring heterogeneous inputs into a more compact and informative latent representation. This preprocessing step helps combine static basin characteristics with dynamic meteorological inputs before they enter the LSTM.

As illustrated in Figure 1, the model first integrates static basin descriptors including CAMELS attributes or AEF embeddings with dynamic meteorological inputs. These two types of information play complementary roles. The static attributes provide watershed characteristics that remain constant, whereas the dynamic features capture day-to-day climatic variations that drive hydrological responses. After concatenation, the combined input vector is passed through a multilayer perceptron that maps the heterogeneous inputs into a shared latent feature space. The transformed sequence is then processed by the LSTM, which extracts temporal dependencies essential for representing runoff-generation processes. Finally, a feedforward neural network converts the LSTM outputs into streamflow predictions. This architecture allows static basin properties to modulate how meteorological forcings are interpreted, thereby enabling the model to learn basin-specific hydrologic behavior while retaining a uniform network structure across all basins.

To improve the accuracy of predictions in ungauged basins (PUB), we opted to train the LSTM model using a set of basins that resemble the target catchment. Basin similarity was evaluated using cosine similarity computed under three different representations.

(1) Attribute similarity. Construct a standardized attribute vector for each basin and compute pairwise cosine similarity.

(2) Fusion embedding similarity. We trained a complete LSTM rainfall-runoff model in which the catchment attributes are first transformed through a fully connected layer. The resulting attribute embedding is then concatenated with the hydrometeorological time series at each time step and passed into the LSTM.

After training, we feed the catchment attributes into the network and extract the post-fully connected layer representation, which serves as a fixed-length embedding for each basin. Cosine similarity between these embeddings is then used to quantify basin similarity.

(3) AEF embedding similarity. Use the AEF embedding vector from 2.2 and compute cosine similarity.

Formally, for a representation 𝑧 ! of basin 𝑖, the similarity to basin 𝑗 is

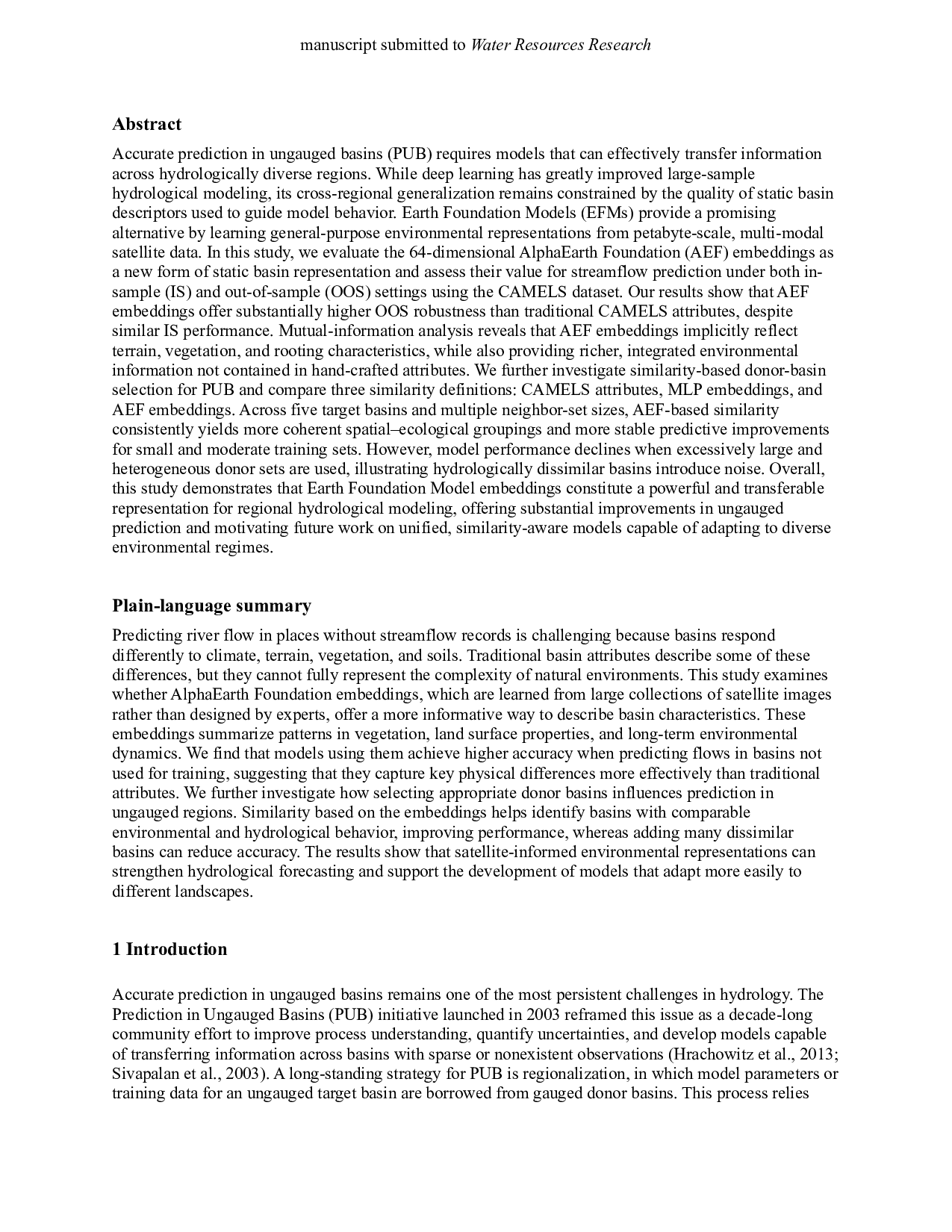

We z-score attributes across the candidate donor set; for fusion embeddings and AEF, we apply perdimension standardization before similarity computation. The data processing workflow is shown in Figure 2.

Experiment A

We first examine whether AEF can improve simulation performance relative to conventional static basin attributes, thereby assessing whether AEF capture additional information that is beneficial for streamflow prediction. We used a simple LSTM model to train with AEF embedding and attributes. The model is a 1D (input) to 1D (output) dynamical systems model, where the inputs are meteorological time series data spatially aggregated over the catchment, and the output is a streamflow time series at the corresponding gauge location. The model is trained using data from multiple catchments simultaneously. It consists of a single LSTM layer with 128 hidden states, followed by a 40% dropout layer and a linear output layer. The batch size was 256. For prediction, the best epoch’s model state was used. Training period was January 1980-December 2004, testing period was January 2010-December 2014, sequence length was 365 days.

We ran two different variants thereof. We trained two distinct models, each incorporating different sets of watershed attributes (the CAMELS attributes and AEF Embeddings) which were concatenated with dynamic meteorological inputs.

Each of these model variants were run in-sample (IS, i.e. temporal out-of-sample but spatial in-sample) and out-of-sample (OOS, i.e. temporal as well as spatial out-of-sample). Practically, IS was implemented by using the train period of 531 catchments for training and the test period of 531 for prediction. OOS was implemented by a 5-fold cross validation, where in each fold, training happened on the train period of 80% (N) of the catchments, and prediction on the test period of the other 20% (N) of catchments.

To evaluate model performance, the Nash-Sutcliffe Efficiency (NSE, Nash and Sutcliffe, 1970) and the manuscript submitted to Water Resources Research Kling-Gupta Efficiency (KGE, Gupta et al., 2009) were calculated during the test period. To account for uncertainty arising from stochastic model weight initialization, each model configuration was trained and evaluated using five different random seeds. The computation of NSE and KGE was further subjected to a bootstrap procedure to derive robust aggregate test scores. Specifically, for each catchment, the test-period predictions from all five seed realizations were pooled into a single ensemble. From this ensemble, 80% of the samples were repeatedly drawn with replacement, yielding 100 bootstrap replicates. For each replicate, NSE and KGE were recalculated, resulting in 100 bootstrapped score estimates per catchment.

To compare the score distributions of the different model configurations, we employed two-sided Kolmogorov-Smirnov (KS) tests, assuming as the null hypothesis that the two distributions are identical (Hodges, 1958). It was conducted using the respective implementations in the SciPy library (Virtanen et al., 2020). In addition, to quantify the extent of shared information between the static CAMELS attributes and AEF, we computed pairwise mutual information scores using the scikit-learn toolkit (Pedregosa et al., n.d.).

To further investigate the degree to which AEF embeddings encode complementary or redundant information relative to the traditional CAMELS attributes, we conducted a mutual information (MI) analysis between the two sets of static descriptors. Mutual information is an information-theoretic measure that quantifies the amount of shared information between two random variables and reflects the strength of their statistical dependence regardless of whether the relationship is linear or nonlinear. In this study, each CAMELS attribute and each AEF embedding dimension was treated as an independent random variable, and all possible pairs of attributes and embedding dimensions were compared to construct a complete mutual information matrix. The MI values allow us to determine whether specific AEF dimensions share substantial structure with known physiographic, climatic, or geological attributes or instead capture information that is not well represented in existing CAMELS descriptors.

For two discrete variables 𝑋 X and 𝑌 Y, mutual information is formally defined as

where 𝑝(𝑥, 𝑦) is the empirical joint probability and 𝑝(𝑥) and 𝑝(𝑦) are the corresponding marginal distributions. All variables were standardized to ensure comparability across features and to reduce the effects of scale differences.

The resulting MI matrix serves as a diagnostic tool for evaluating information redundancy between the two descriptor sets. High MI values reveal embedding dimensions that closely align with known watershed characteristics, whereas consistently low MI values suggest that AEF representations encode distinct and potentially hydrologically meaningful patterns not captured by traditional CAMELS attributes. This analysis complements the simulation experiments by offering a quantitative assessment of how much novel information AEF embeddings contribute beyond existing static attributes.

The purpose of Experiment B is to examine whether choosing basins that share similar hydrological characteristics, instead of relying on the full set of available basins, can lead to improved prediction performance in ungauged regions. A further aim is to understand whether different definitions of basin similarity influence how well the model generalizes as the size of the training set gradually increases. Through this experiment, we evaluate how the selection of hydrologically comparable basins contributes to maintaining process consistency and to reducing the noise that may arise when basins with markedly different hydrological behavior are included in training.

To conduct this analysis, we rank all basins in the CAMELS dataset with respect to a given target basin using three similarity measures defined in Section 2.3.

Based on these rankings, we construct seven training sets by selecting the top k most similar basins, where k ∈ {100, 200, 300, 400, 500, 600}, as well as a baseline using all 670 basins except the target basin. Each training set is used to train the same LSTM model employed in Experiment A, and performance is evaluated on the target basin using NSE. This design allows us to systematically study how prediction skill scales as the training set expands from strongly similar basins to increasingly dissimilar ones.

To ensure the experiment remains computationally feasible while still covering diverse hydrological regimes, we selected five basins from distinct geographic and ecological regions in CAMELS. These basins span a wide range of climatic and physiographic conditions, enabling us to evaluate whether similaritybased training-set selection consistently improves PUB performance across contrasting hydrological environments. Performing the full scaling analysis on all 671 basins would be computationally prohibitive; therefore, these five representative basins offer a balanced and tractable compromise between computational cost and experimental breadth.

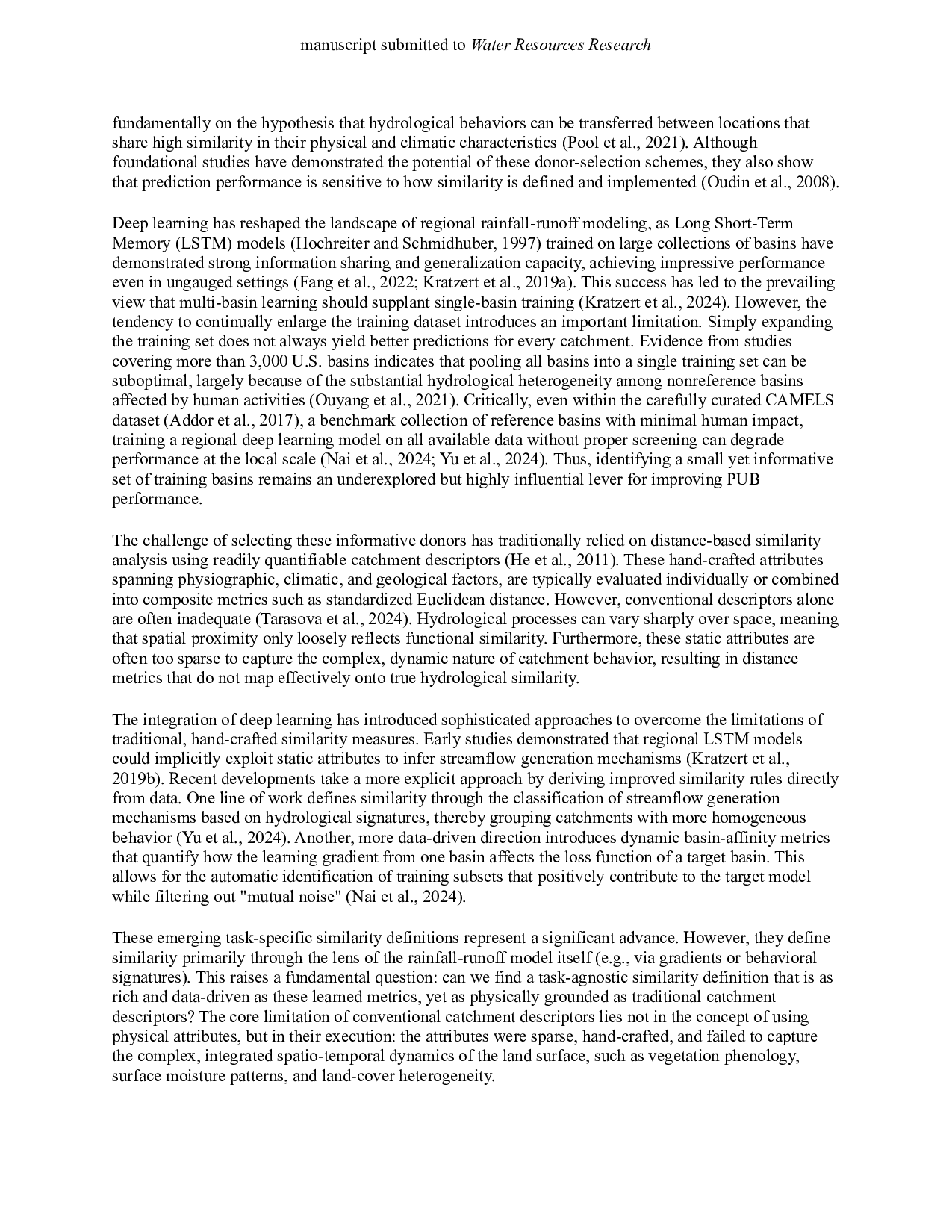

Figure 4 compares the NSE performance of two model variants-one using CAMELS attributes and the other using AEF embeddings-under both in-sample (IS) and out-of-sample (OOS) conditions (KGE results shown in Figure S1). Under the IS scenario, the two models exhibit nearly indistinguishable performance distributions (KS = 0.0177, p-value = 0.0779). In the OOS scenario, however, a modest yet statistically significant divergence in their performance distributions emerges (KS = 0.0863, p-value = 6.07×10⁻⁹). Although both models experience a performance decline when moving from the IS to OOS, the reduction is substantially larger for the CAMELS-attribute-based model. This indicates that the generalization capacity derived from conventional basin attributes is highly limited. In contrast, the AEF-embedding-based model exhibits a considerably smaller degradation, suggesting that the embedding features provide a more transferable representation of basin characteristics and support improved predictive generalization under distributional shifts. Most importantly, the AEF-embedding-based model outperforms the CAMELSattribute-based model. This suggests that AEF encapsulates richer and more informative representations of basin characteristics, enabling the model to extract underlying relationships that are more relevant for streamflow simulation than those captured by traditional static attributes.

Further evidence is provided by the mutual information analysis between the CAMELS attributes and the AEF embedding (Figure 5). The results show that several CAMELS terrain and vegetation attributes exhibit substantial shared information with the AEF space, including elevation, slope, LAI metrics, and dominant land cover. This pattern is expected given that topography and vegetation can be directly inferred from remote-sensing imagery, and root depth is strongly linked to vegetation type and thus partly recoverable through indirect cues.

At the same time, the CAMELS attribute set itself omits many aspects of land-surface and ecohydrological dynamics that are present in modern satellite observations. Thus, some of the information in AEF embeddings is not explicitly represented in CAMELS. This asymmetry could explain why AEF simultaneously provides complementary information beyond the CAMELS descriptors and leads to a better OOS result. The relative robustness of the AEF model raises questions about how deep learning models leverage physiographic attributes. Our results echo concerns raised in recent literature that static attributes may serve partially as identifiers for basins in large-sample learning systems, enabling the model to memorize basinspecific behaviors in-sample rather than learning generalized hydrologic structure (Li et al., 2022). Conversely, being derived from large-scale, self-supervised learning across millions of satellite-grid samples, AEF appears to encode broader environmental regularities. These patterns are known to be strongly linked to hydrologic functioning but are difficult to hand-engineer into catchment descriptors.

While the AEF embeddings contain environmental signals aligned with a subset of CAMELS attributes, a considerable number of attributes display very low mutual information with AEF, suggesting that they may be weakly observable from remote sensing or represent hydrologic processes that the embeddings do not completely encode. These attributes may represent noisy variables, redundant information already captured by meteorological inputs, or aspects of subsurface processes that are invisible to surface imagery, suggesting better embeddings exist when encoding global physiographical attributes.

In summary, these results demonstrate that AEF embeddings provide a richer and more transferable representation of basin characteristics than traditional CAMELS attributes, raising an important question: how can such representations be most effectively used to improve cross-basin generalization?

Building on the findings in Section 4.1 that AEF provide a more informative basis for PUB than the CAMELS attributes, we further investigate whether training on hydrologically similar basins can yield improved predictive performance, and whether a smaller but carefully curated training set can achieve performance comparable to, or better than, a larger but noisier training pool.

To understand the mechanism driving potential performance differences, we first examine the topological structure of the similarity spaces defined by the three methods (Figure 6). A striking contrast emerges between the approaches. The attribute and MLP-embedding matrices (Figs. 6a-b) exhibit diffuse, fragmented patterns, indicating that these metrics capture only coarse relationships where “nearest manuscript submitted to Water Resources Research neighbors” may still possess considerable heterogeneity. In distinct contrast, the AEF similarity (Fig. 6c) reveals a globally continuous and dense structure: notably, the baseline similarity remains relatively high even among “dissimilar” basins, suggesting that the foundation model captures a fundamental environmental coherence across the entire domain. Within this globally coherent space, strong blockdiagonal clusters emerge, organizing basins into distinct, highly homogeneous regimes. Crucially, the high global coherence combined with sharp local clustering sets a clear expectation for the scaling experiment: the high “neighbor purity” within these AEF blocks suggests that highly relevant hydrological information can be retrieved from even a small number of donors, whereas the noisier attribute space likely requires aggregating a larger pool of basins to stabilize predictions. To visualize these patterns at the level of a single basin, Figure 7 presents stripe plots for the target basin 01013500. Each vertical stripe represents one candidate basin, and the color encodes its similarity to the target under each method. Under CAMELS attributes and MLP-embeddings, stripes fluctuate irregularly, showing scattered pockets of high similarity interspersed with low-similarity basins. This reflects their diffuse and noisy similarity space. Under AEF embeddings, however, we observe a tighter, more coherent band of high similarity, with fewer abrupt fluctuations. This indicates that AEF identifies a more compact and hydrologically consistent set of neighbors. The trends observed in NSE across different similarity definitions highlight the critical role of similarityguided training-set construction in PUB performance. When the training set is small (e.g., the top 100-200 most similar basins), AEF-based similarity consistently achieves superior performance for several basins. This indicates that the similarity space defined by AEF helps the model learn more effective cross-basin patterns early in training, even under limited-sample conditions. In contrast, random donor selection performs the worst across all settings, reinforcing the necessity of similarity-aware basin selection in PUB scenarios.

As the training set expands to moderate sizes (300-500 basins), the performance of all methods improves, though with varying degrees of gain. This divergence suggests that each similarity measure induces a distinct basin-selection paradigm that shapes the model’s generalization pathway: for some methods, enlarging the training set introduces additional “useful” basins that strengthen learning, whereas others tend to incorporate more noisy or hydrologically dissimilar basins, limiting the benefits of increased data availability.

Although the three approaches differ in their training configurations, with the attribute-based and fusionembedding methods using 24-dimensional input vectors, while the AEF-embedding method employs 71-dimensional inputs, the final performance differences are negligible when 670 basins are included in the training set. This suggests that with sufficiently large training samples, the models are capable of adequately capturing the dominant hydrological signals, thereby reducing the sensitivity to differences in input dimensionality. Consequently, under large-sample training conditions, the effect of varying input dimensions on overall results can be considered marginal. Taken together, Sections 4.1 and 4.2 show that AEF embeddings not only provide a more expressive representation of basin characteristics than traditional static attributes but also interact in important ways with how donor basins are selected for training. Reliable cross-regional prediction therefore depends not only on having informative representations, but also on constructing training sets that balance informativeness and heterogeneity when transferring information across basins.

A central goal of this study was to evaluate whether Earth foundation model embeddings, specifically the 64-dimensional AEF embeddings, can meaningfully enhance generalization in rainfall-runoff simulation. Our results support this potential and demonstrate that AEF-based similarity is highly effective at identifying relevant donor basins to maintain robust predictive skill for prediction in ungauged basins (PUB). Yet, the reliance on these implicit, high-dimensional representations derived purely from global satellite observation necessitates a deeper examination of their physical interpretability compared to explicit catchment descriptors. Furthermore, the very nature of this high information density warrants discussion regarding its influence on model utility when extrapolating to dissimilar basins, particularly whether the precision of these embeddings aids or hinders performance across distinct hydrological regimes.

We firstly investigate the physical interpretability of the embeddings, examining the extent to which the learned latent structures correspond to essential physiographic attributes. Specifically, we cluster the 671 CAMELS-US basins based on their descriptor representations-either the original static CAMELS attributes or the AEF embeddings-and assign each basin to a discrete group (“C0-C8”, etc.). Each cluster can be viewed as a data-driven hydrological “regime”, in which basins share similar feature-space characteristics.

To determine the appropriate number of clusters for each representation, we employ the Silhouette Score as an automated model selection criterion. Concretely, we apply k-means clustering across a range of cluster numbers (e.g., 𝐾 = 2 to 15) and compute the Silhouette Score for each configuration. The Silhouette Score jointly evaluates within-cluster cohesion and between-cluster separation, where values closer to 1 indicate clearer and more stable cluster structure, and values near 0 suggest weakly defined or ambiguous partitioning. By selecting the value of 𝐾 that maximizes the Silhouette Score, we obtain the clustering configuration that best reflects the intrinsic structure of each representation.

Applying this automated procedure yields 12 clusters for both the CAMELS attributes and the MLPembeddings, whereas the AEF embeddings form a more compact structure with only 9 clusters (Figure 9). The spatial distributions of these clusters illustrate how each type of descriptor partitions the CAMELS-US basins into distinct hydrologic and physiographic regimes. The clusters produced from CAMELS attributes and those derived from the MLP embeddings show broadly similar spatial patterns and share comparable regional boundaries. This outcome reflects the tendency of the MLP embeddings, although learned within a rainfall-runoff model, to preserve much of the information embedded in the original static attributes. In contrast, the clusters produced by the AEF embeddings show smoother, more coherent spatial organization, suggesting that the foundation model internally captures large-scale environmental gradients that are not explicitly represented by hand-crafted basin descriptors. To further evaluate whether the AEF-derived clusters reflect meaningful hydrologic structure, Figure 10 summarizes the distributions of key CAMELS static attributes across the nine clusters (“C0-C8”). The differences among clusters are clear and systematic. Terrain-related variables such as catchment area, mean slope, and mean elevation vary substantially, with some clusters representing high-elevation, steep basins manuscript submitted to Water Resources Research typical of mountain regions that respond rapidly to rainfall, while others group flatter, low-lying basins where hydrologic processes unfold more slowly. Vegetation and land-cover characteristics also show strong separation. Some clusters include basins with dense forest cover and high leaf-area indices, whereas others are associated with sparsely vegetated or mixed land-cover settings. Soil and subsurface properties, including rooting depth, soil depth, porosity, conductivity, and water-holding capacity, reveal similarly meaningful distinctions, indicating that certain clusters capture shallow, coarse-textured mountain soils, while others correspond to deeper and more porous soils typical of alluvial plains. Geological attributes reinforce this pattern, as clusters tend to group basins with similar lithologic classes, porosity, and permeability. Together, these attribute patterns suggest that the AEF embeddings organize basins into groups with coherent physical and hydrological characteristics and that the learned representation captures key environmental controls on basin behavior.

The broader structure shown in Figure 10 also illustrates that the AEF embeddings encode much more than simple visual similarity. They arrange basins along environmental gradients that correspond closely to dominant runoff-generation mechanisms. Although the embeddings were obtained through self-supervised learning on satellite imagery without hydrological labels, they nonetheless capture coordinated patterns across topography, vegetation, soils, and geology. These results indicate that the embeddings have strong physical interpretability. They align with major physiographic attributes more clearly than both the CAMELS attributes and the MLP embeddings, and they group basins in ways that reflect meaningful environmental processes. Such coherence provides a solid conceptual basis for applying AEF representations in PUB experiments and motivates further investigation into their spatial structure in future work.

To test the ability or limits of model transferability across distinct hydrological regimes, we conducted a manuscript submitted to Water Resources Research cluster-based cross-validation experiment (distinct from standard geographic PUR -prediction in ungauged regions). Instead of using fixed geographical regions like ecoregions (Feng et al., 2021;Omernik and Griffith, 2014), we defined “regimes” dynamically by clustering basins based on their feature spaces (CAMELS attributes vs. AEF embeddings). For each scheme, we withheld one entire cluster as the test target and trained on the remaining clusters, thereby forcing the model to generalize to a group of basins that are mathematically “dissimilar” to the training set. For each representation, we applied the Silhouette Score to determine the optimal number of clusters, resulting in 12 clusters for CAMELS attributes and 9 clusters for AEF embeddings. We then performed a leave-one-cluster-out experiment: one entire cluster was withheld as the test group, and the model was trained on all remaining clusters. This setup forces the model to extrapolate to a group of basins that are, by construction, mathematically dissimilar from those in the training set.

Figure 11 summarizes the aggregated performance across all regimes (all 671 basins). A clear divergence in cross-regime generalization performance emerges that clusters formed using AEF consistently led to weaker results compared with those derived from CAMELS attributes. a clear divergence emerges: the model informed by AEF clusters consistently yields weaker generalization performance compared to the one based on CAMELS attributes. This result, while seemingly counterintuitive given AEF’s success in donor selection (Section 4.2), highlights a critical “granularity-generalization” trade-off.

The AEF feature space is highly informative and discriminative. It captures nuanced differences in land surface characteristics (e.g., vegetation texture, specific land-use patterns) that distinguish basins with high precision. Consequently, when an entire AEF cluster is withheld, the model is deprived of a specific, fine-grained environmental signal that it has likely never seen in the training set. The model struggles to bridge the gap between the distinct “regimes” defined by AEF because the feature space is too strict and it penalizes dissimilarity heavily. In contrast, CAMELS attributes directly characterize key hydrological determinants, yielding clusters that are generally more homogeneous in their hydrological responses. The resulting clusters are less distinct, meaning that the “training” clusters likely still contain basins that broadly resemble the “test” cluster in terms of hydrological regime. This “blurriness” paradoxically aids broad generalization: the model learns a generic, average behavior that transfers more easily, even if it lacks precision. It is also worth noting that the CAMELS attributes produce more clusters than AEF, resulting in smaller clusters and thus larger training sets for each cross-regime experiment. Hence, despite this larger inclusion of potentially dissimilar basins, the CAMELS-based results outperform those based on AEF. This comparison further highlights that the AEF embeddings provide a much more complete and expressive characterization of basin properties than the static CAMELS attributes. This finding has broader implications for the pursuit of a unified hydrological model. The fact that performance degrades significantly when introducing dissimilar basins (particularly in the high-fidelity AEF space) suggests that, given current data constraints, a single global model may struggle to optimally represent the full diversity of hydrological behaviors. The reliance on surface-based remote sensing (as in AEF) captures high-resolution landscape details but misses critical information such as subsurface heterogeneity, creating a “context gap” that prevents the model from unifying distinct regimes. Therefore, our results suggest that under the current data paradigm, a segmented modeling strategy that trains specialized models on carefully selected, hydrologically similar subsets remain more effective than the brute-force pooling of diverse datasets. Moving towards a truly unified model will likely require more than just embeddings from geospatial foundation models; it calls for increasing data completeness, such as integrating more information from diverse data sources (Kraabel et al., 2025) and variables (Ouyang et al., 2025) to help the model separate general physical patterns from unique local characteristics.

This study systematically validated the superiority of AlphaEarth Foundation (AEF) embeddings as a new form of static basin representation for large-sample hydrological modeling and further examined their potential in prediction in ungauged basins (PUB). The results show that AEF embeddings provide much stronger cross-regional generalization than traditional CAMELS attributes. Although both types of static features performed similarly in-sample, with median NSE values of 0.708 for the AEF embeddings and 0.706 for the model using CAMELS attributes, their behavior differed noticeably once they were evaluated in spatial out-of-sample settings. For these more challenging conditions, the median NSE of the AEFembeddings rose to 0.612, while the model relying on CAMELS attributes reached only 0.553. Consequently, models incorporating AEF embeddings were more robust and yielded higher predictive accuracy across basins. Mutual-information analysis further confirmed that the embeddings not only capture deep environmental signals related to topography, vegetation, and other fundamental controls on hydrologic response, but also encoding integrated environmental information not explicitly present within CAMELS attributes. These findings strongly indicate that Earth Foundation Models provide physically meaningful and transferable basin representations.

In the PUB experiments, we quantitatively evaluated the improvement in cross-basin predictive performance achieved through similarity-based training basin selection strategies, focusing on comparing the efficacy of various similarity metrics. The results demonstrate a significant advantage and robustness for the AEF embedding similarity metric. Under small training set sizes (𝑘 ≤ 300), the AEF embedding method successfully identified hydrologically more consistent training subsets, providing the most stable predictive performance enhancement compared to CAMELS attribute similarity and fused embedding similarity. For instance, the NSE values achieved by AEF peaked at 0.75 at 𝑘 = 200 in Basins 1013500 and 14138900, substantially surpassing other baseline methods. However, the mean performance trend plot confirms that as the training set size continuously increases, the marginal benefit of performance improvement diminishes and even degrades. Although AEF maintained the highest average NSE of approximately 0.6 within the 𝑘 = 300 to 𝑘 = 500 range, the inclusion of functionally dissimilar basins due to excessive training set expansion introduced noise, ultimately attenuating the benefits of similaritydriven selection. This finding suggests that the optimization of cross-basin performance stems from a multiplicative synergy between high-quality environmental representation and similarity-driven data filtering, where AEF embeddings provide an efficient mechanism for capturing physical basin similarity, and the similarity selection mechanism determines the model’s capacity to effectively leverage this information.

Our experiments also highlight the critical role of data scale and heterogeneity. Under the current CAMELS-US scale (600-670 basins), expanding the training set by adding dissimilar basins introduces strong heterogeneity-driven noise that outweighs the benefits of additional data, leading to performance deterioration. Crucially, this study highlights a central trade-off in current deep learning hydrology, the tension between discriminative precision and broad generalization. Our cross-regime experiments showed that while AEF’s high information density makes it an excellent “discriminator” for finding nearest neighbors, its sensitivity to fine-grained environmental details can hinder generalization when the model is forced to extrapolate to entirely new regimes. This suggests that current “entity-aware” or regional models still struggle to decouple underlying physical laws from the specific static contexts in which they were trained. Whether global-scale datasets covering North America, Europe, East Asia, Australia, and beyond might ultimately offset this effect and enable models to learn truly transferable hydrologic patterns remains an open question requiring further investigation. Therefore, a key direction for future work is therefore to develop a single unified hydrological model capable of adapting to any basin. Such a model would dynamically activate different parameter substructures via mechanisms such as conditional computation, dynamic routing, or mixture-of-experts to automatically identify and utilize the most relevant hydrological processes for each ungauged basin, without retraining.

Overall, this study highlights the substantial potential of Earth Foundation Models for regionalized hydrological modeling and provides a foundational step toward constructing transferable, cross-ecoregion basin representations. More importantly, it underscores the need for deeper inquiry into how deep learning models represent hydrologic processes, utilize information, and generalize across basins. We hope that the results presented here contribute to renewed interest in the central scientific question of whether deep learning can truly learn hydrologic functional behavior, and that the insights gained will help advance PUB theory and methodology into a new stage of development.

This content is AI-processed based on open access ArXiv data.