Wittgenstein s Family Resemblance Clustering Algorithm

📝 Original Paper Info

- Title: Wittgenstein s Family Resemblance Clustering Algorithm- ArXiv ID: 2601.01127

- Date: 2026-01-03

- Authors: Golbahar Amanpour, Benyamin Ghojogh

📝 Abstract

This paper, introducing a novel method in philomatics, draws on Wittgenstein's concept of family resemblance from analytic philosophy to develop a clustering algorithm for machine learning. According to Wittgenstein's Philosophical Investigations (1953), family resemblance holds that members of a concept or category are connected by overlapping similarities rather than a single defining property. Consequently, a family of entities forms a chain of items sharing overlapping traits. This philosophical idea naturally lends itself to a graph-based approach in machine learning. Accordingly, we propose the Wittgenstein's Family Resemblance (WFR) clustering algorithm and its kernel variant, kernel WFR. This algorithm computes resemblance scores between neighboring data instances, and after thresholding these scores, a resemblance graph is constructed. The connected components of this graph define the resulting clusters. Simulations on benchmark datasets demonstrate that WFR is an effective nonlinear clustering algorithm that does not require prior knowledge of the number of clusters or assumptions about their shapes.💡 Summary & Analysis

1. **Applying Wittgenstein's Family Resemblance to Machine Learning**: This research introduces a novel clustering approach based on philosophical insight, treating data points as "family members" and representing their similarities through graphs, thus understanding complex data structures. 2. **WFR Clustering Algorithm**: The WFR algorithm, developed from the family resemblance concept, calculates pairwise resemblances among data points to form clusters naturally without assuming specific shapes or predefined cluster numbers. This offers more flexibility compared to traditional clustering methods. 3. **Experimental Validation**: The effectiveness of the WFR algorithm in capturing complex and nonlinear cluster structures is demonstrated through various toy benchmark datasets.Sci-Tube Style Script

Level 1: Beginner

When analyzing data, we often need to group similar items together. This paper introduces a new method inspired by philosophical ideas. Wittgenstein’s concept of “family resemblance” explains how certain items can be perceived as similar; this is applied in machine learning to represent the similarities between data points through graphs and group them accordingly.

Level 2: Intermediate

Wittgenstein described relationships between concepts using the term “family resemblance.” This study develops a clustering algorithm, WFR, based on that concept. It represents similarities among data points as a graph and naturally forms clusters from this representation. This method is particularly effective in dealing with complex nonlinear structures.

Level 3: Advanced

The WFR algorithm represents pairwise resemblances between data points as graphs to form clusters naturally without assuming specific shapes or predefined numbers of clusters, offering greater flexibility than traditional methods. Experimental results demonstrate its effectiveness in capturing complex and nonlinear cluster structures across various toy benchmark datasets.

📄 Full Paper Content (ArXiv Source)

Introduction

This paper introduces a novel approach in philomatics1 that draws inspiration from Wittgenstein’s concept of family resemblance in analytic philosophy to develop a clustering algorithm for machine learning. In 1953, in his book Philosophical Investigations, Ludwig Wittgenstein proposed that members of a concept or category are related through overlapping similarities rather than by a single defining property . According to this view, a family of entities forms a network or chain of interrelated items, where each member resembles some, but not necessarily all, other members. This insight has been widely applied in analytic philosophy to explain the structure of concepts in language, ethics, art, and science, where traditional essentialist definitions fail.

The notion of family resemblance naturally suggests a graph-based perspective for clustering in machine learning. In a dataset, individual data points can be viewed as “members” of a conceptual family, and their pairwise resemblances (or similarities) correspond to the overlapping traits emphasized by Wittgenstein. By representing these resemblances as the edges in a graph, clusters can emerge as connected groups of points, analogous to overlapping networks of resemblance in philosophical concepts.

Motivated by this analogy, we propose the Wittgenstein’s Family Resemblance (WFR) clustering algorithm. WFR begins by computing resemblance scores between neighboring data instances based on resemblance function. A threshold is then applied to these scores to construct a resemblance graph, where edges indicate strong resemblance between points. The connected components of this graph naturally define clusters, without requiring prior knowledge of the number of clusters or assumptions about cluster shapes.

We evaluate the performance of WFR on a variety of toy benchmark datasets. Experimental results demonstrate that the algorithm effectively captures complex, nonlinear cluster structures. These results highlight the potential of WFR as a flexible, philosophically motivated approach to unsupervised learning, bridging insights from analytic philosophy and modern machine learning .

This paper is organized as follows. Section 2 provides the background on Wittgenstein’s family resemblance in analytic philosophy. The proposed WFR clustering is presented in Section 3. Section 4 discusses the time and space complexities of the proposed algorithm. Simulations in Section 5 justify the effectiveness of the proposed algorithm. Finally, Section 6 concludes the paper with a possible future direction for this algorithm.

Background on Wittgenstein’s Family Resemblance in Analytic Philosophy

Introduction to Ludwig Wittgenstein’s Philosophy

Biography of Ludwig Wittgenstein

Ludwig Wittgenstein (1889–1951) was an Austrian-British philosopher who made foundational contributions to logic, the philosophy of language, and the philosophy of mind. He studied engineering in Berlin and then moved to Cambridge to study under Bertrand Russell. Later, he became a professor of philosophy at the University of Cambridge. His work profoundly influenced analytic philosophy in the 20th century .

Early Wittgenstein (1911–1929)

The early Wittgenstein, active primarily between 1911 and 1929, is exemplified by his work Tractatus Logico-Philosophicus, published in 1921 . In the Tractatus, he develops a “picture theory” of language, according to which propositions represent the logical structure of reality and determine what can meaningfully be said. The work aims to draw sharp boundaries for language and dissolve philosophical problems by showing them as nonsensical. This book is highly structured, with a hierarchical, numbered system of propositions reminiscent of Spinoza’s Ethics , and it focuses on the logical form of language and its relation to the world .

Later Wittgenstein (1930–1951)

After a period away from philosophy, Wittgenstein returned and revised many of his earlier positions. His later philosophy, mainly from 1930 until his death in 1951, is most clearly presented in Philosophical Investigations, published posthumously in 1953 . In this book, he rejects his primary idea of a single universal logical structure and emphasizes that meaning arises from the use of language in context, introducing the notions of “language-games” and “forms of life.” Philosophy, in the later Wittgenstein, is therapeutic: it clarifies the actual use of language to resolve confusion rather than building a systematic theory .

Key Differences of Early and Later Wittgenstein

The main differences between early and later Wittgenstein can be summarized as follows:

-

View of Language: Early Wittgenstein sees language as picturing reality; later Wittgenstein sees language as diverse practices embedded in life.

-

Philosophical Aim: Early work seeks a unified theory of meaning and limits of language; later work rejects grand theories and focuses on clarifying confusion in actual language use.

-

Method and Style: The Tractatus is formal and logical, whereas Investigations is fragmentary and conversational.

-

Russell’s View: Bertrand Russell explicitly admired Wittgenstein’s early philosophy but strongly criticized his later philosophy .

The Notion of Family Resemblance

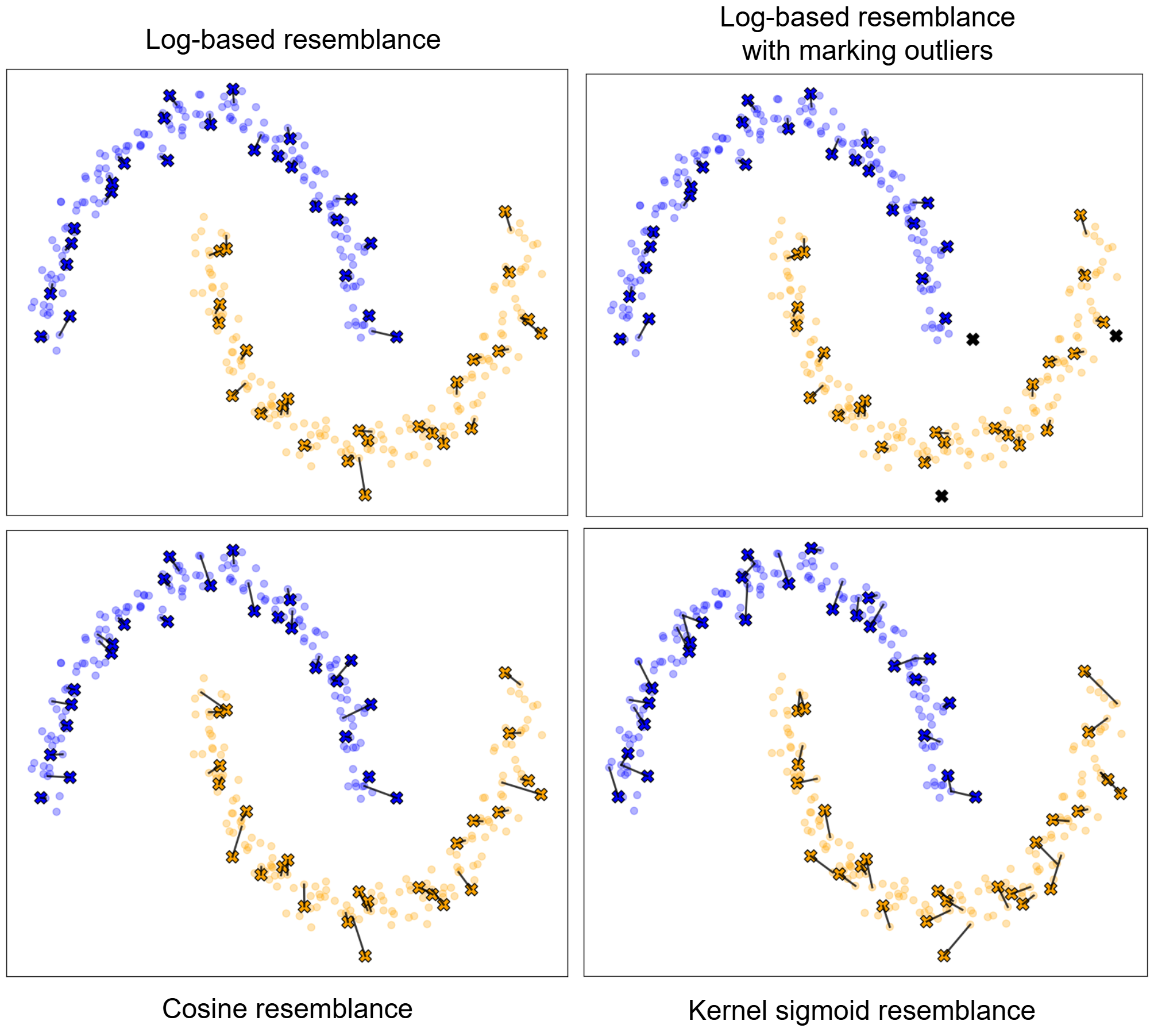

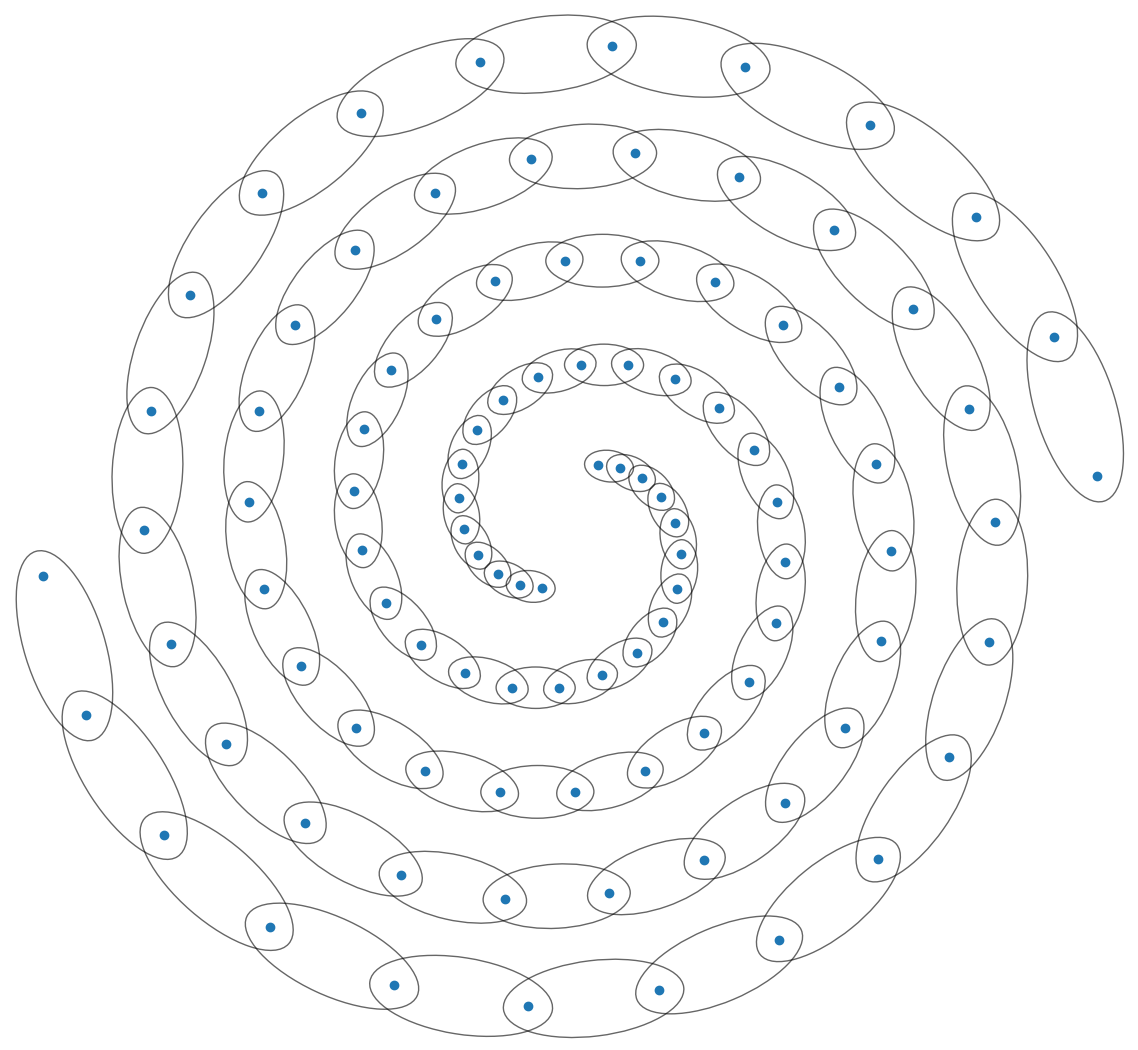

In Philosophical Investigations , §§66–712, the later Wittgenstein introduced the notion of family resemblance. To illustrate this idea, consider three entities, labeled 1, 2, and 3, as shown in Fig. 1. Suppose entity 1 possesses attributes (or so-called traits) $`a`$ and $`b`$, entity 2 possesses attributes $`b`$ and $`c`$, and entity 3 possesses attributes $`c`$ and $`d`$. Although entities 1 and 3 share no attributes directly, all three may nonetheless be regarded as belonging to the same family. This is because entity 1 shares an attribute with entity 2, and entity 2 shares an attribute with entity 3, forming a chain of overlapping similarities. Thus, resemblance is established not through a single common feature shared by all entities, but through a network or tree-like structure of partial overlaps.

Use of Family Resemblance in Analytic Philosophy

Wittgenstein’s notion of family resemblance is widely used in analytic philosophy to analyze concepts that resist definition in terms of necessary and sufficient conditions. Instead of a single shared essence, such concepts are unified by overlapping similarities. In the following, we review some of the use cases of family resemblance in analytic philosophy.

Ordinary Language Concepts

Family resemblance is invoked when analyzing ordinary language concepts whose extensions are heterogeneous. Wittgenstein’s canonical example is the concept of a game . Board games, card games, athletic competitions, and solitary amusements share no single common feature. Instead, they exhibit overlapping similarities such as rule-following, competition, skill, or entertainment.

Philosophy of Language

In analytic philosophy of language, family resemblance is used to support the thesis that meaning is grounded in use rather than in strict definitions . Words such as “language”, “sentence”, or “meaning” do not admit sharp boundaries but are understood through patterns of use across contexts; thus, they can be understood by family resemblance .

Philosophy of Science

Family resemblance has been invoked in discussions of scientific classification, particularly in cases where categories resist essentialist definition . A prominent example arises in the classification of biological species. According to essentialist accounts of natural kinds, each genuine kind—such as a biological species or a chemical element—is characterized by an underlying essence, with membership determined by necessary and sufficient conditions . However, empirical findings in biology reveal substantial variation among organisms within a species: no single genetic, morphological, or ecological trait is shared by all and only its members, and species boundaries are often indeterminate, as illustrated by phenomena such as ring species, hybridization, and asexual reproduction . In light of this, proponents of a family resemblance approach argue that species are unified not by a common essence but by overlapping clusters of traits, with different members sharing different subsets of these properties .

Aesthetics and Philosophy of Art

In analytic aesthetics, family resemblance is used to reject definitional theories of art. Paintings, musical works, performances, and conceptual art forms share overlapping similarities—such as expression, intention, and cultural role—without a single defining property .

Ethics and Moral Philosophy

Family resemblance has been used in ethics and moral philosophy. Moral concepts such as virtue, responsibility, or vice (opposite of virtue) are often treated as family resemblance concepts. For example, Courage, honesty, generosity, and kindness resemble one another without sharing a common essence . This supports anti-reductive approaches to moral theory .

Philosophy of Mind

In philosophy of mind and cognitive science, family resemblance is used to analyze mental categories such as emotions or intelligence. Emotions like fear, anger, joy, and shame lack a single shared physiological or intentional structure, instead forming a cluster of related phenomena .

Wittgenstein’s Family Resemblance Clustering Algorithm

We propose the Wittgenstein’s Family Resemblance (WFR) clustering algorithm, which is inspired by family resemblance in philosophy. The details of this algorithm are explained in the following.

Main Idea

The central idea of WFR clustering is to compute pairwise resemblances between neighboring data instances. After applying a threshold to these resemblance values, instances with sufficiently high resemblance are linked, forming chains of similarity. These chains collectively define a family, which constitutes a cluster. This behavior is illustrated in Fig. 2 where two nonlinear clusters are found as two chains of similarities.

Resemblance Functions

For forming the clusters, i.e., families of resemblance, a notion of resemblance should be defined to calculate the resemblance (or similarities) of the neighboring data instances. Various resemblance functions can be used as long as they are increasing functions with respect to similarities.

The resemblance function is a map:

\begin{equation}

\begin{aligned}

&r: \mathcal{X} \times \mathcal{X} \rightarrow \mathbb{R}, \\

&r: \ensuremath\boldsymbol{x}_1, \ensuremath\boldsymbol{x}_2 \mapsto r(\ensuremath\boldsymbol{x}_1, \ensuremath\boldsymbol{x}_2),

\end{aligned}

\end{equation}where $`\mathcal{X}`$ is the space of data and $`\ensuremath\boldsymbol{x}_1`$ and $`\ensuremath\boldsymbol{x}_2`$ are two data instances. The $`r(\ensuremath\boldsymbol{x}_1, \ensuremath\boldsymbol{x}_2) \in \mathbb{R}`$ denotes the resemblance score of data instances $`\ensuremath\boldsymbol{x}_1 \in \mathbb{R}^d`$ and $`\ensuremath\boldsymbol{x}_2 \in \mathbb{R}^d`$. Some example resemblance functions, for calculating the resemblance scores, are introduced in the following.

Log-based Resemblance Function

The log-based resemblance function is:

\begin{align}

r(\ensuremath\boldsymbol{x}_1, \ensuremath\boldsymbol{x}_2) := \frac{1}{1 + \log\!\big(\|\ensuremath\boldsymbol{x}_1 - \ensuremath\boldsymbol{x}_2\|_2 + 1 + \epsilon\big)},

\end{align}where $`\|.\|_2`$ denotes the $`\ell_2`$ norm and $`\epsilon`$ is a small positive number for numerical stability. This resemblance score is in range $`[0, 1]`$ where dissimilar and equal data instances have resemblances $`0`$ and $`1`$, respectively.

Cosine Resemblance Function

The cosine resemblance function is:

\begin{align}

r(\ensuremath\boldsymbol{x}_1, \ensuremath\boldsymbol{x}_2) := \cos(\ensuremath\boldsymbol{x}_1, \ensuremath\boldsymbol{x}_2) = \frac{\ensuremath\boldsymbol{x}_1^\top \ensuremath\boldsymbol{x}_2}{\|\ensuremath\boldsymbol{x}_1\|_2\, \|\ensuremath\boldsymbol{x}_2\|_2},

\end{align}which is the inner product of normalized data instances. This resemblance score is in range $`[-1, 1]`$ where dissimilar and equal data instances have resemblances $`-1`$ and $`1`$, respectively. The cosine function calculates the resemblance in terms of angles between data instances.

Kernel Resemblance Function

Kernel functions can be used for resemblance (or similarity) measurements. The kernel resemblance function is:

\begin{align}

r(\ensuremath\boldsymbol{x}_1, \ensuremath\boldsymbol{x}_2) := k(\ensuremath\boldsymbol{x}_1, \ensuremath\boldsymbol{x}_2) = \phi(\ensuremath\boldsymbol{x}_1)^\top \phi(\ensuremath\boldsymbol{x}_2),

\end{align}where $`k(.,.)`$ is the kernel function and $`\phi(.)`$ is the pulling function from the input space to the Reproducing Kernel Hilbert Space (RKHS) .

Any kernel function, such as Radial Basis Function (RBF) and sigmoid kernels, can be used for the kernel resemblance. If the kernel resemblance function is used in WFR clustering, the algorithm can be named kernel WFR clustering algorithm.

Thresholding Resemblances and Search for Family Resemblances

Nearest Neighbors Graph

We first find the $`k`$-Nearest Neighbors ($`k`$NN) of the training data instances. Thus, the neighbors of each data instance is found and a $`k`$NN graph is formed. Different algorithms can be used for finding the $`k`$NN graph . Some of the possible algorithms to use are brute-force (exhaustive) nearest neighbor, KD-Tree nearest neighbor , and Ball Tree nearest neighbor , which are implemented in Scikit-learn library .

Resemblance Calculation and Thresholding

Using the resemblance function, we calculate the resemblance of each data instance with its $`k`$-NN. This creates a resemblance graph, represented by a resemblance matrix $`\ensuremath\boldsymbol{R} \in \mathbb{R}^{n \times n}`$ where $`n`$ denotes the number of training data instances. If the $`i`$-th instance has the $`j`$-th instance as its neighbor, the $`(i,j)`$-th element of the resemblance matrix is:

\begin{align}

\label{equation_resemblance_matrix}

\ensuremath\boldsymbol{R}_{ij} :=

\begin{cases}

r(\ensuremath\boldsymbol{x}_i, \ensuremath\boldsymbol{x}_j) & \mbox{if } \ensuremath\boldsymbol{x}_j \in k\text{NN}(\ensuremath\boldsymbol{x}_i) \\

0 & \mbox{Otherwise,}

\end{cases}

\end{align}where $`\ensuremath\boldsymbol{R}_{ij}`$ denotes the $`(i,j)`$-th element of the matrix $`\ensuremath\boldsymbol{R}`$, and the resemblance scores can optionally be transformed to be non-negative.

The resemblance matrix is normalized to be between zero and one:

\begin{align}

\widehat{\ensuremath\boldsymbol{R}} := \frac{1}{r_{\text{max}} - r_{\text{min}}} (\ensuremath\boldsymbol{R} - r_{\text{min}}),

\end{align}where $`\text{min}`$ and $`\text{max}`$ are the minimum and maximum resemblances in the resemblance matrix.

Then, a threshold $`\tau \in [0, 1]`$ is applied to the normalized resemblances to wipe out the weak resemblances below the threshold. We define the (possibly asymmetric) adjacency matrix $`\ensuremath\boldsymbol{A} \in \{0,1\}^{n \times n}`$ as:

\begin{equation}

\ensuremath\boldsymbol{A}_{ij} :=

\begin{cases}

1, & \text{if } \widehat{\ensuremath\boldsymbol{R}}_{ij} \geq \tau \\

0, & \text{otherwise}.

\end{cases}

\end{equation}Since $`\ensuremath\boldsymbol{A}`$ may be asymmetric, because of using $`k`$NN with $`k < n`$), we enforce symmetry by defining the final adjacency matrix:

\begin{equation}

\widetilde{\ensuremath\boldsymbol{A}} = \ensuremath\boldsymbol{A} \lor \ensuremath\boldsymbol{A}^\top,

\end{equation}where $`\lor`$ denotes the elementwise logical OR. Equivalently, this can be written entrywise as:

\begin{equation}

\widetilde{\ensuremath\boldsymbol{A}}_{ij} = \max\{\ensuremath\boldsymbol{A}_{ij}, \ensuremath\boldsymbol{A}_{ji}\}.

\end{equation}Note that we use logical OR rather than mutual kNN to avoid disconnecting chains of resemblance.

To ensure the presence of self-loops, we set the diagonal entries of the adjacency matrix to one:

\begin{equation}

\widetilde{\ensuremath\boldsymbol{A}}_{ii} = 1, \quad \forall i \in \{ 1,\dots,n \}.

\end{equation}Search in the Adjacency Graph

The adjacency matrix $`\widetilde{\ensuremath\boldsymbol{A}}`$ induces an adjacency graph in which adjacent data instances are connected. Graph search algorithms , such as Depth-First Search (DFS) or Breadth-First Search (BFS) , can be employed to identify the connected components of this graph. Each connected component corresponds to a family, or cluster, in which data instances are related through a chain of resemblance. Binary connectivity captures the existence of family resemblance chains rather than their strength.

Optional Outlier Marking

Outlier detection can be optionally incorporated into WFR clustering by treating data instances belonging to small clusters as outliers with label $`-1`$. One criterion for identifying small clusters is to label clusters whose size is below a fixed ratio (e.g., $`0.05`$) of the maximum cluster size. Alternatively, a statistical approach can be adopted by fitting a normal distribution to the cluster sizes and designating clusters with sizes smaller than the mean minus a specified multiple of the standard deviation as small clusters.

Automatic Thresholding

Clustering is inherently an ill-defined problem, as the perceived number of clusters can vary depending on the observer’s perspective. For instance, one person may identify two clusters in a dataset, while another may perceive three clusters by examining finer separations. Consequently, all clustering algorithms involve at least one hyperparameter that determines or influences the number of clusters. Some algorithms, such as K-means , explicitly require the number of clusters as input, whereas others, such as DBSCAN , include hyperparameters that indirectly affect the number of clusters.

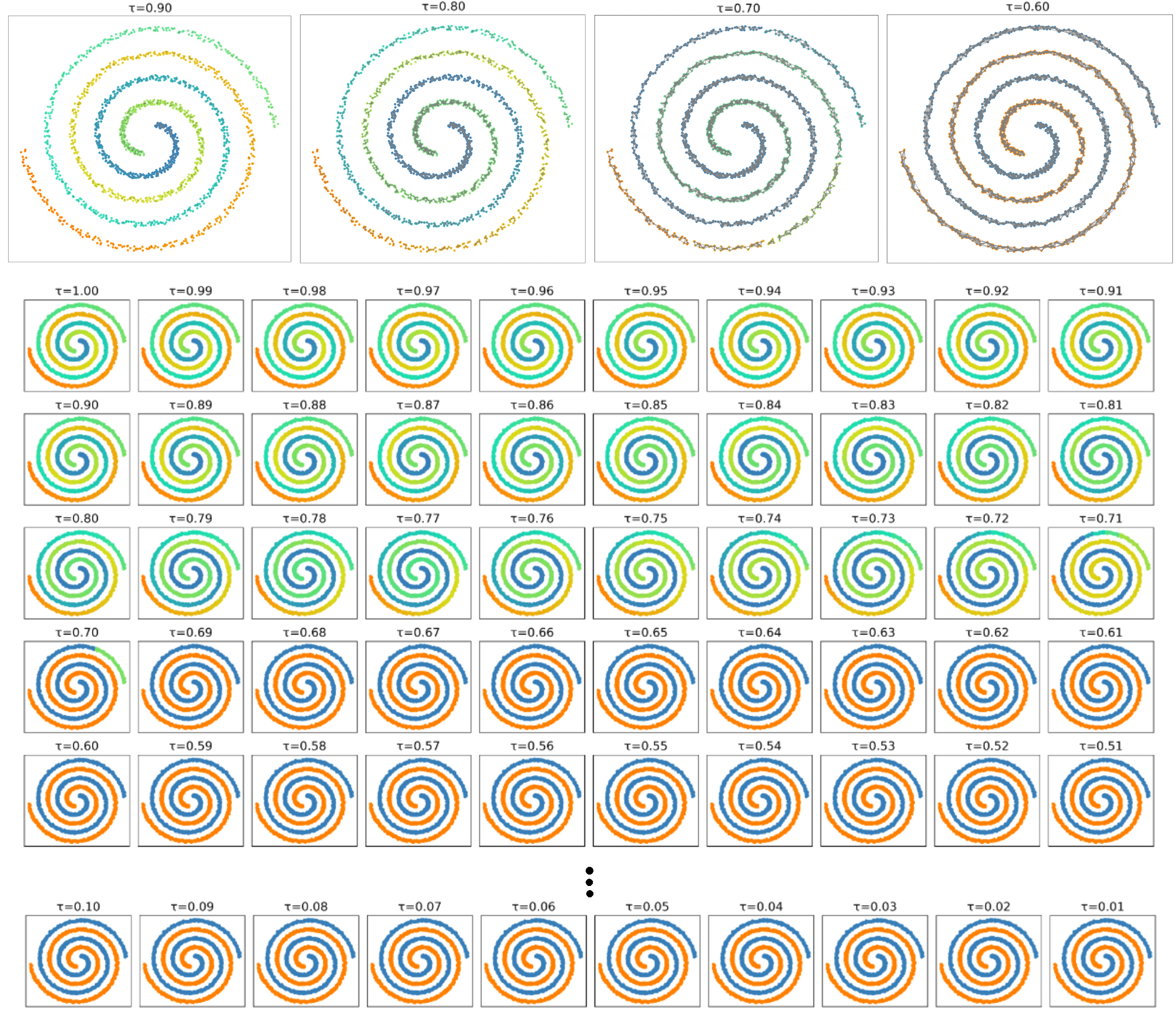

Similarly, WFR incorporates the threshold $`\tau`$ as its key hyperparameter, which controls the number of resulting clusters. Higher values of $`\tau`$ impose stricter thresholding, leading to a larger number of clusters. As illustrated in Fig. 3 (top), setting $`\tau=0.90`$ produces many clusters, while $`\tau=0.80`$ yields fewer. At $`\tau=0.70`$, the number of clusters decreases to six due to existing gaps between some data instances, and finally at $`\tau=0.60`$, the clustering correctly identifies two clusters.

However, thresholding in the WFR clustering algorithm can be performed automatically, albeit at the cost of increased computational time during the clustering phase3. This can be achieved by defining a grid of candidate thresholds within the range $`[0, 1]`$, using a fixed step size (e.g., $`0.01`$). For each candidate threshold, clusters are determined according to the procedure described previously. Subsequently, the following evaluation scores are computed.

The graph-based cluster separation score is defined as

\begin{align}

\label{equation_s1}

s_1 = 1 - \frac{ \displaystyle \sum_{i=1}^{n} \sum_{j \in \mathcal{N}_k(i)} \frac{ \mathbb{I}[\ell_i \neq \ell_j] }{ d_{ij} + \varepsilon } }{ \displaystyle \sum_{i=1}^{n} \sum_{j \in \mathcal{N}_k(i)} \frac{1}{d_{ij} + \varepsilon} },

\end{align}where $`\ell_i \in \{1, \dots, c\}`$ is the cluster label of $`\ensuremath\boldsymbol{x}_i`$, $`\mathcal{N}_k(i)`$ is the set of $`k`$ nearest neighbors of $`\ensuremath\boldsymbol{x}_i`$, $`d_{ij} = \|\ensuremath\boldsymbol{x}_i - \ensuremath\boldsymbol{x}_j\|_2`$ is the Euclidean distance between $`\ensuremath\boldsymbol{x}_i`$ and $`\ensuremath\boldsymbol{x}_j`$, $`\varepsilon > 0`$ is a small constant for numerical stability, and $`\mathbb{I}[\ell_i \neq \ell_j]`$ is the indicator function that equals 1 if $`\ensuremath\boldsymbol{x}_i`$ and $`\ensuremath\boldsymbol{x}_j`$ are in different clusters, and 0 otherwise.

The cluster size score is defined as:

\begin{equation}

\label{equation_s2}

\begin{aligned}

s_2 = &\left( \frac{1}{c} \sum_{j=1}^{c} \min \Big( \frac{f_j}{f_{\min}}, 1 \Big) \right) \times \\

&~~~~~~~~~~~ \exp\!\Big(\! - \alpha \, \mathrm{Var}(f_1, \dots, f_c) \Big),

\end{aligned}

\end{equation}where $`c`$ is the number of clusters, $`n_j`$ is the number of points in cluster $`j`$, $`n`$ is the total number of points, $`f_j = n_j / n`$ is the fraction of points in cluster $`j`$, $`f_{\min}`$ is the minimum acceptable cluster fraction (e.g., $`0.05`$), $`\alpha \ge 1`$ (e.g., $`2.0`$) is the imbalance penalty strength, and $`\mathrm{Var}(f_1, \dots, f_c)`$ is the variance of cluster fractions across all clusters.

The score $`s_1`$ increases when clusters are well-separated, whereas $`s_2`$ increases when cluster sizes are not excessively small. Both scores lie within the range $`[0, 1]`$. The optimal threshold $`\tau`$ for WFR clustering is the one that maximizes the sum of these scores:

\begin{align}

\tau := \arg\max_{\tau}\, (s_1 + s_2).

\end{align}Note that it is also possible to use alternative clustering evaluation scores, such as Silhouette score or Davies–Bouldin index .

Figure 3 (bottom) illustrates the grid search for the optimal threshold $`\tau`$ on the two-spirals dataset. Beginning the search at $`\tau=1`$ produces an excessive number of clusters. As the threshold is decreased, the number of clusters is gradually reduced. For thresholds $`\tau \leq 0.69`$, the combined score $`s_1 + s_2`$ improves, and the algorithm correctly identifies the two underlying clusters.

Test Phase (Out-of-sample Clustering)

In the test phase for out-of-sample clustering, the $`k`$ Nearest Neighbors ($`k`$NN) of each test data instance are first identified among the training data instances. The resemblance scores between each test data instance and its $`k`$NN training instances are then computed. This process yields the test resemblance graph, represented by the test resemblance matrix $`\ensuremath\boldsymbol{R}' \in \mathbb{R}^{n_t \times n}`$, where $`n`$ and $`n_t`$ denote the numbers of training and test samples, respectively. Let $`\ensuremath\boldsymbol{x}'_i`$ denote the $`i`$-th test data instance and $`\ensuremath\boldsymbol{x}_j`$ denote the $`j`$-th training data instance. The $`(i,j)`$-th entry of the test resemblance matrix is defined as:

\begin{align}

\label{equation_test_resemblance_matrix}

\ensuremath\boldsymbol{R}'_{ij} :=

\begin{cases}

r(\ensuremath\boldsymbol{x}'_i, \ensuremath\boldsymbol{x}_j), & \text{if } \ensuremath\boldsymbol{x}_j \in k\text{NN}(\ensuremath\boldsymbol{x}'_i), \\

0, & \text{otherwise}.

\end{cases}

\end{align}The test resemblance matrix is normalized using the minimum and maximum values of the training resemblance matrix:

\begin{align}

\widehat{\ensuremath\boldsymbol{R}}' := \frac{1}{r_{\text{max}} - r_{\text{min}}} (\ensuremath\boldsymbol{R}' - r_{\text{min}}).

\end{align}This ensures consistency between training and test resemblance scales. Any entries of the normalized matrix that fall below zero or exceed one are clipped to zero and one, respectively.

For each test data instance, if the maximum normalized resemblance to its $`k`$NN exceeds the threshold $`\tau`$ (or the optimal threshold obtained via automatic thresholding), the cluster label of the training data instance with the highest resemblance is assigned to the test instance:

\begin{equation}

\label{equation_test_label}

\ell'_{i} :=

\begin{cases}

\ell_j, & \text{if } \max_j \widehat{\ensuremath\boldsymbol{R}}'_{ij} \geq \tau \\

-1, & \text{otherwise},

\end{cases}

\end{equation}where $`\ell'_i`$ denotes the cluster label of the $`i`$-th test data instance and $`\ell_j`$ denotes the cluster label of the $`j`$-th training data instance. As shown in Eq. ([equation_test_label]), if the maximum normalized resemblance falls below the threshold, the test instance is considered as an outlier and assigned the label $`-1`$.

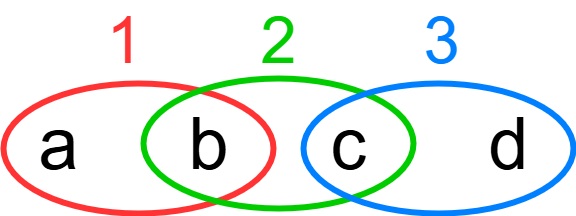

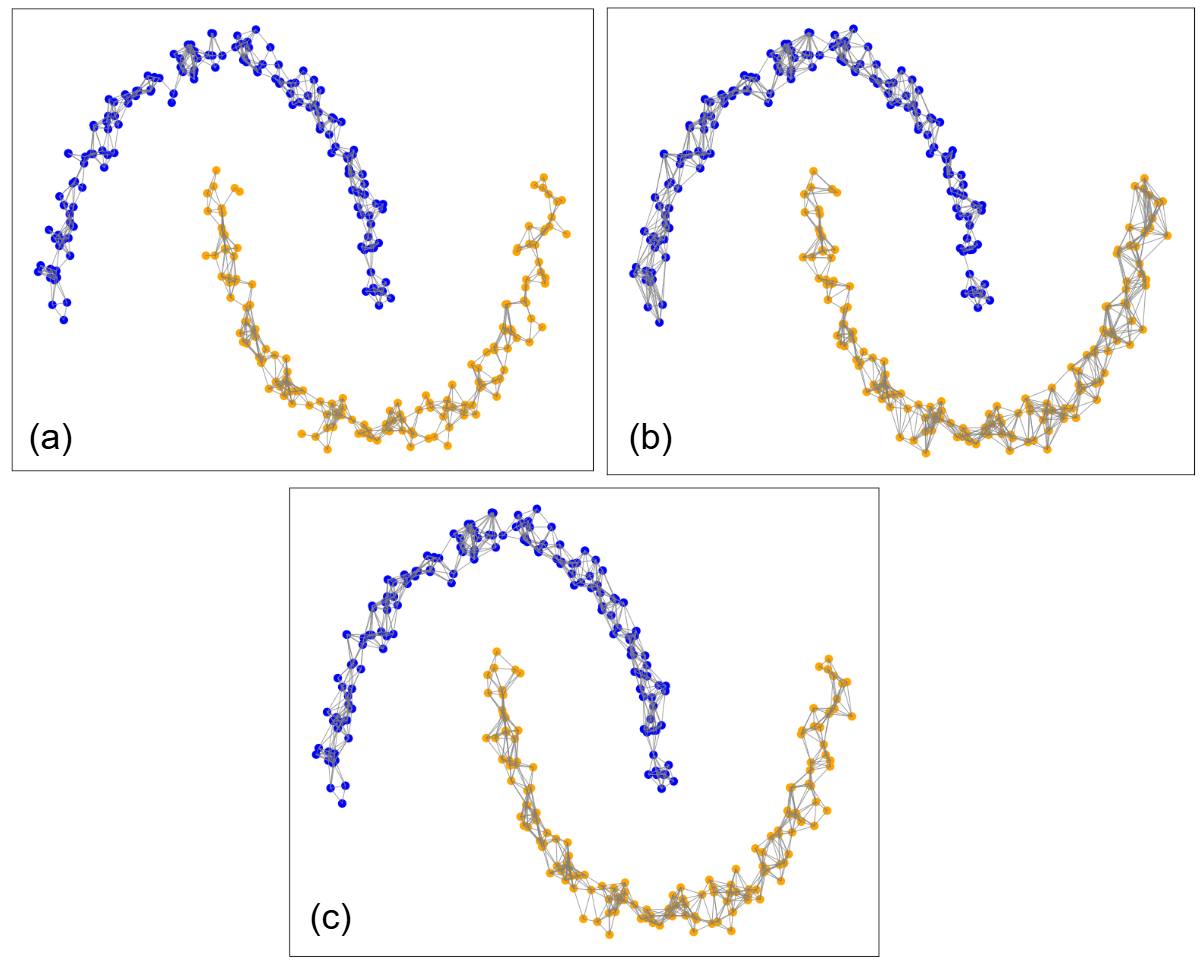

The test phase of WFR clustering, using log-based, cosine, and sigmoid kernel resemblance functions, is illustrated in Fig. 4. In this figure, test data instances are marked by crosses, with colors indicating their assigned cluster labels. The black lines connect each test instance to its neighboring training instance with the highest resemblance score.

Algorithms for Training and Test Phases

The algorithms for training and test phases of the proposed WFR clustering are shown in Algorithms [algorithm_WFR_training] and [algorithm_WFR_test], respectively.

In training, as in Algorithm [algorithm_WFR_training], the $`k`$NN graph of training data is calculated. Then, the resemblance matrix is computed and normalized. If automatic thresholding is used, the best threshold is detected by a grid search. If automatic thresholding is used, the hyperparameter $`\tau`$ is used as the threshold. Finally, the adjacency graph is calculated and a DFS or BFS search is performed in the adjacency graph.

In the test phase, as in Algorithm [algorithm_WFR_test], the $`k`$NN graph of test data is computed among the training data. Then, the test resemblance matrix is calculated and normalized using the minimum and maximum of the training resemblance matrix. Finally, the label of each test data instance is determined if its maximum resemblance with a neighbor training data instance is above the threshold. Otherwise, it is an outlier.

Input: training dataset $`\{\ensuremath\boldsymbol{x}_i\}_{i=1}^n`$. Output: cluster labels $`\{\ell_i\}_{i=1}^n`$. Calculate the $`k`$NN graph of training data. $`\ensuremath\boldsymbol{R}_{ij} = \begin{cases} r(\ensuremath\boldsymbol{x}_i, \ensuremath\boldsymbol{x}_j) & \mbox{if } \ensuremath\boldsymbol{x}_j \in k\text{NN}(\ensuremath\boldsymbol{x}_i) \\ 0 & \mbox{Otherwise} \end{cases}`$ $`\widehat{\ensuremath\boldsymbol{R}} = \frac{1}{r_{\text{max}} - r_{\text{min}}} (\ensuremath\boldsymbol{R} - r_{\text{min}})`$ $`\{\ell_i\}_{i=1}^n \gets \text{Search}(\widehat{\ensuremath\boldsymbol{R}}, \tau, \text{outlier\_marking})`$ $`\{\ell_i\}_{i=1}^n`$

Input: test dataset $`\{\ensuremath\boldsymbol{x}'_i\}_{i=1}^{n_t}`$. Output: cluster labels $`\{\ell'_i\}_{i=1}^{n_t}`$. Calculate the $`k`$NN graph of test data among training data. $`\ensuremath\boldsymbol{R}'_{ij} := \begin{cases} r(\ensuremath\boldsymbol{x}'_i, \ensuremath\boldsymbol{x}_j), & \text{if } \ensuremath\boldsymbol{x}_j \in k\text{NN}(\ensuremath\boldsymbol{x}'_i) \\ 0, & \text{otherwise} \end{cases}`$ $`\widehat{\ensuremath\boldsymbol{R}}' := \frac{1}{r_{\text{max}} - r_{\text{min}}} (\ensuremath\boldsymbol{R}' - r_{\text{min}})`$ $`\ell'_{i} := \begin{cases} \ell_j, & \text{if } \max_j \widehat{\ensuremath\boldsymbol{R}}'_{ij} \geq \tau \\ -1, & \text{otherwise} \end{cases}`$ $`\{\ell'_i\}_{i=1}^{n_t}`$

Time and Space Complexities

In this section, we analyze the time and space complexities of the proposed WFR clustering algorithm for both the training and test phases. Let $`n`$ denote the number of training samples, $`n_t`$ the number of test samples, $`d`$ the data dimensionality, and $`k`$ the number of nearest neighbors.

The Complexity of Training Phase

$`k`$NN Graph Construction:

The first step of the training phase is constructing the $`k`$-Nearest Neighbors ($`k`$NN) graph for the training data. Using a brute-force search, this step requires $`\mathcal{O}(n^2 d)`$ time. When spatial indexing structures such as KD-trees or Ball trees are applicable, the expected time complexity reduces to $`\mathcal{O}(n \log n \cdot d)`$ for low- to moderate-dimensional data. The space complexity for storing the kNN graph is $`\mathcal{O}(nk)`$.

Resemblance Computation and Normalization:

Resemblance scores are computed only between each data instance and its $`k`$ nearest neighbors. Therefore, resemblance computation requires $`\mathcal{O}(nk \cdot d)`$ time, assuming that evaluating the resemblance function is linear in terms of the dimension $`d`$. The resemblance matrix is sparse and requires $`\mathcal{O}(nk)`$ memory. Normalization of the resemblance values requires a single pass over the nonzero entries and thus has time complexity $`\mathcal{O}(nk)`$.

Thresholding and Graph Construction:

Applying the threshold to the resemblance matrix and enforcing symmetry both require $`\mathcal{O}(nk)`$ time and space, as only $`k`$ neighbors per node are considered. The resulting adjacency graph remains sparse.

Graph Search for Connected Components:

Identifying connected components using DFS or BFS has time complexity $`\mathcal{O}(n + |E|)`$, where $`|E| = \mathcal{O}(nk)`$ is the number of edges in the adjacency graph. Thus, this step requires $`\mathcal{O}(nk)`$ time and $`\mathcal{O}(n)`$ additional space for bookkeeping.

Automatic Thresholding (Optional):

If automatic thresholding is employed using a grid search over $`T`$ candidate threshold values, the graph construction and search steps are repeated $`T`$ times. In this case, the total training time complexity becomes:

\begin{align}

\mathcal{O}\big(n^2 d + (T \cdot nk) \big),

\end{align}for brute-force kNN, or:

\begin{align}

\mathcal{O}\big((n \log n \cdot d) + (T \cdot nk) \big),

\end{align}when using efficient nearest neighbor search. The space complexity remains $`\mathcal{O}(nk)`$. Note that $`T`$ is a fixed number so it can be ignored in the complexity analysis.

The Complexity of Test Phase

In the test phase, each test data instance finds its $`k`$ nearest neighbors among the training data. Using brute-force search, this requires $`\mathcal{O}(n_t n d)`$ time, which can be reduced to $`\mathcal{O}(n_t \log n \cdot d)`$ using tree-based nearest neighbor methods. Computing resemblance scores between test instances and their neighbors requires $`\mathcal{O}(n_t k d)`$ time and $`\mathcal{O}(n_t k)`$ space.

Assigning cluster labels based on the maximum resemblance score for each test instance is a linear operation with time complexity $`\mathcal{O}(n_t k)`$ and negligible additional memory overhead. Therefore, the overall test-time complexity is dominated by nearest neighbor search.

| Algorithm | Time Complexity | Space Complexity |

|---|---|---|

| K-means | $`\mathcal{O}(n c d I)`$ | $`\mathcal{O}(nd)`$ |

| Gaussian Mixture Models (EM) | $`\mathcal{O}(n c d I)`$ | $`\mathcal{O}(nd)`$ |

| Affinity Propagation | $`\mathcal{O}(n^2 I)`$ | $`\mathcal{O}(n^2)`$ |

| Mean Shift | $`\mathcal{O}(n^2 I)`$ | $`\mathcal{O}(n)`$ |

| Spectral Clustering | $`\mathcal{O}(n^3)`$ | $`\mathcal{O}(n^2)`$ |

| Agglomerative Clustering (Hierarchical) | $`\mathcal{O}(n^2 \log n)`$ | $`\mathcal{O}(n^2)`$ |

| Ward Clustering | $`\mathcal{O}(n^2)`$ | $`\mathcal{O}(n^2)`$ |

| DBSCAN | $`\mathcal{O}(n \log n)`$ | $`\mathcal{O}(n)`$ |

| OPTICS | $`\mathcal{O}(n \log n)`$ | $`\mathcal{O}(n)`$ |

| HDBSCAN | $`\mathcal{O}(n \log n)`$ | $`\mathcal{O}(n)`$ |

| BIRCH | $`\mathcal{O}(n)`$ | $`\mathcal{O}(n)`$ |

| WFR (ours) | $`\mathcal{O}(n \log n \cdot d + nk)`$ | $`\mathcal{O}(nk)`$ |

Comparison with Baseline Clustering Algorithms

Table [table_complexity_comparison] compares the computational complexity of WFR clustering with several well-known clustering algorithms. As shown in this table, many classical clustering algorithms incur quadratic or cubic time and space complexity, which limits their scalability to large datasets. Spectral clustering , affinity propagation , and hierarchical methods require $`\mathcal{O}(n^2)`$ memory, while spectral clustering additionally requires $`\mathcal{O}(n^3)`$ time due to eigen-decomposition.

Density-based methods such as DBSCAN , OPTICS , and HDBSCAN achieve near-linear time complexity under suitable indexing assumptions, but rely on density estimation and distance thresholds that may be sensitive to data distribution and scaling. Centroid-based methods such as K-means and Gaussian mixture models scale well but require the number of clusters to be specified in advance and struggle with nonconvex cluster shapes.

The proposed WFR clustering operates on a sparse kNN resemblance graph and avoids dense similarity matrices. Its time and space complexities scale linearly with respect to the number of edges in the graph, $`\mathcal{O}(nk)`$, making it comparable to density-based methods in scalability while retaining flexibility in similarity definition and supporting kernel-based and non-metric resemblances. Furthermore, unlike many baseline methods, WFR naturally supports out-of-sample clustering.

Simulations

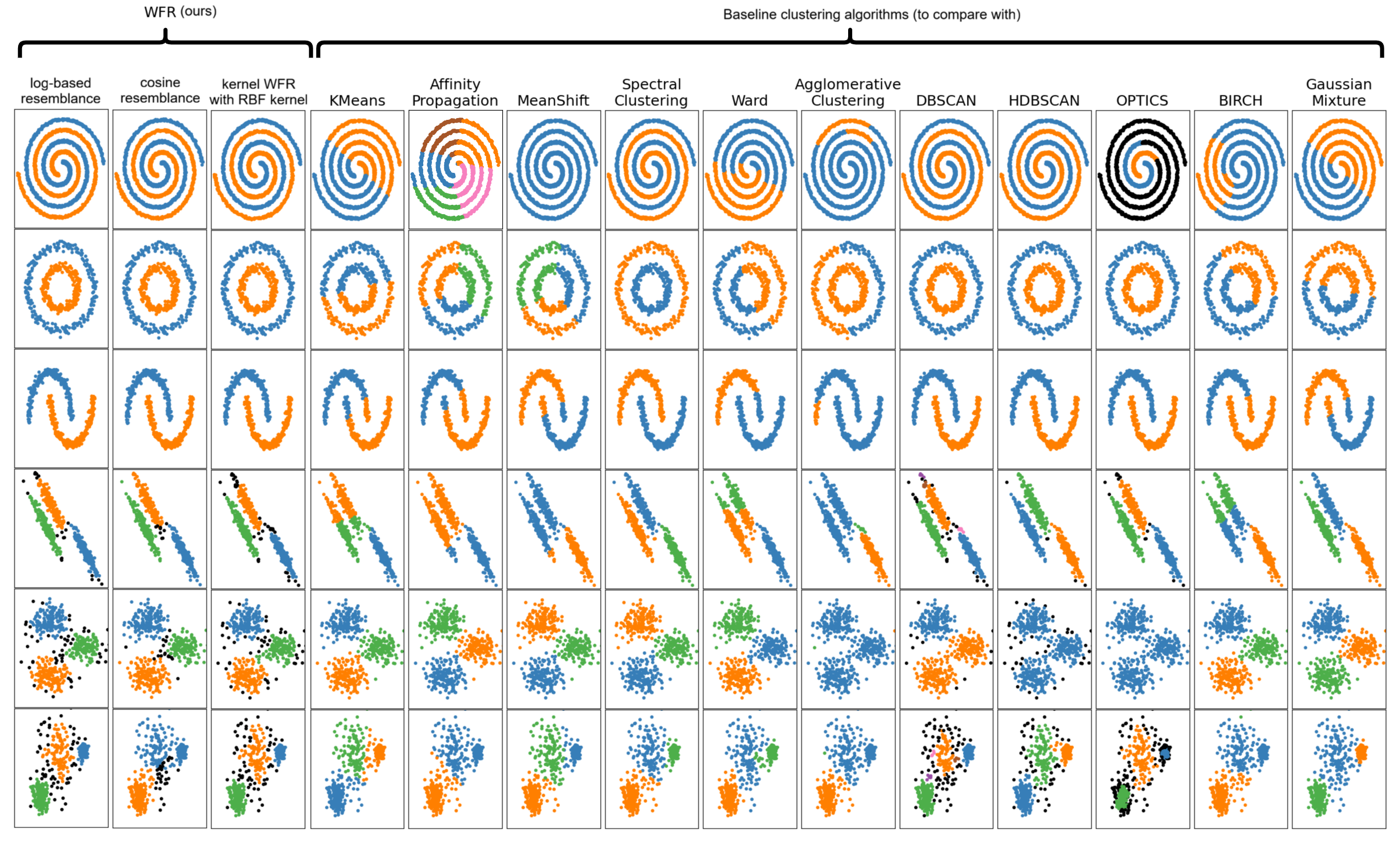

Comparison of Resemblance Graphs for Different Resemblance Functions

Figure 5 illustrates the adjacency graphs produced by the WFR algorithm using log-based, cosine, and RBF kernel resemblance functions on the two-moons dataset. In this figure, the gray lines represent the thresholded resemblances between training data instances. As the figure shows, different resemblance functions induce slightly different adjacency graphs, which in turn lead to minor variations in the resulting clustering.

Comparison with Other Clustering Algorithms

In this section, we compare our proposed WFR clustering algorithm with well-known, effective clustering algorithms, including K-means , affinity propagation , mean shift , spectral clustering , Ward , agglomerative clustering (hierarchical clustering) , DBSCAN , HDBSCAN , OPTICS , BIRCH , and gaussian mixture models using Expectation Maximization (EM) . For the baseline clustering methods, we use their optimized libraries available in Scikit-learn . A Scikit-learn–compatible implementation of the proposed WFR algorithm is available in the following GitHub repository: https://github.com/bghojogh/WFR_Clustering .

Figure 6 illustrates the clustering results on several benchmark datasets with diverse cluster shapes, obtained using different clustering algorithms. The benchmark datasets include two-spirals, two-circles, two-moons, and three additional benchmarks, each consisting of three Gaussian distributions with varying shapes. As shown in the figure, the proposed WFR clustering method, employing various resemblance functions—namely log-based, cosine, and RBF kernel functions—performs effectively in identifying the correct cluster structures while accurately detecting outliers. In contrast, some baseline clustering algorithms fail to recover the true clusters, particularly in highly nonlinear scenarios. Unlike DBSCAN, WFR does not rely on density estimation or metric balls and supports arbitrary resemblance functions, including kernel-based similarities. This shows the effectiveness of the proposed WFR and kernel WFR clustering algorithms in detecting true clusters in both linear and nonlinear scenarios.

Conclusion and Future Directions

This paper proposed a novel clustering algorithm, termed Wittgenstein’s Family Resemblance (WFR), along with its kernel-based variant. The algorithm is inspired by the concept of family resemblance introduced by Ludwig Wittgenstein in analytical philosophy and represents an instance of philomatics, wherein philosophical ideas are leveraged to develop mathematical methods.

In WFR, a nearest-neighbor graph is constructed, and resemblance (similarity) scores are computed between neighboring data instances. After applying a thresholding step, data instances that are connected through chains of resemblance are assigned to the same cluster. In its current formulation, the WFR algorithm employs a binary resemblance graph, where edges indicate only the presence or absence of resemblance.

Future work may extend WFR to weighted resemblance graphs, enabling the incorporation of resemblance strength while preserving the notion of family resemblance chains. This extension would yield a weighted adjacency graph with thresholded resemblance values, allowing for more nuanced clustering behavior.

Acknowledgement

The authors were unaware of the related work in during the development of the WFR algorithm and the preparation of the manuscript. While related in spirit, their method differs from WFR clustering in several aspects. Interested readers may consult for an alternative family-resemblance-based clustering approach. Connections between family resemblance and machine learning have also been discussed in informal venues . Family resemblance has further been explored in the context of fuzzy sets .

📊 논문 시각자료 (Figures)

A Note of Gratitude

The copyright of this content belongs to the respective researchers. We deeply appreciate their hard work and contribution to the advancement of human civilization.-

Philomatics is a combination of philosophy and mathematics (or machine learning which is a field of mathematics). For more information, refer to . ↩︎

-

It is a standard scholarly shorthand, especially in philosophy and law. It means from section 66 through section 71 (inclusive). Note that, in philosophical manuscripts, § and §§ refer to Section and Sections, respectively. ↩︎

-

This procedure increases computational cost linearly with the number of candidate thresholds. ↩︎