Melody-Preserving Timbre Transfer with Latent Diffusion Guidance

📝 Original Paper Info

- Title: Diffusion Timbre Transfer Via Mutual Information Guided Inpainting

- ArXiv ID: 2601.01294

- Date: 2026-01-03

- Authors: Ching Ho Lee, Javier Nistal, Stefan Lattner, Marco Pasini, George Fazekas

📝 Abstract

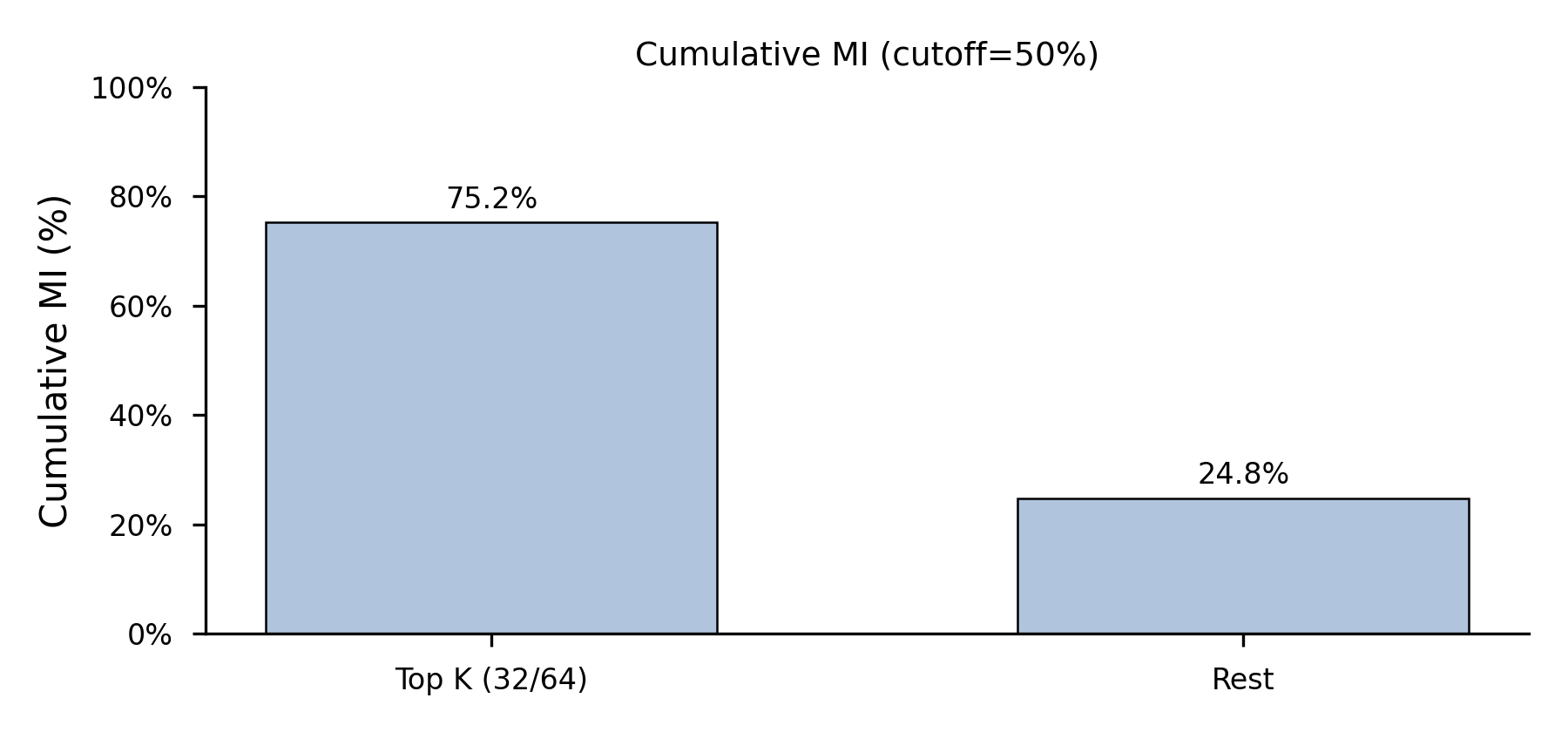

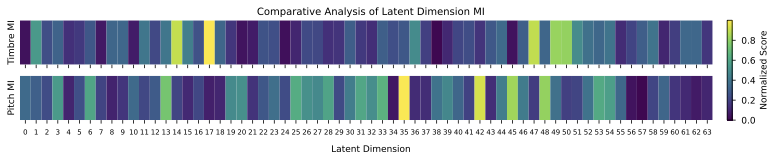

We study timbre transfer as an inference-time editing problem for music audio. Starting from a strong pre-trained latent diffusion model, we introduce a lightweight procedure that requires no additional training: (i) a dimension-wise noise injection that targets latent channels most informative of instrument identity, and (ii) an early-step clamping mechanism that re-imposes the input's melodic and rhythmic structure during reverse diffusion. The method operates directly on audio latents and is compatible with text/audio conditioning (e.g., CLAP). We discuss design choices, analyze trade-offs between timbral change and structural preservation, and show that simple inference-time controls can meaningfully steer pre-trained models for style-transfer use cases.

💡 Summary & Analysis
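The two inference-time controls named in the abstract, dimension-wise noise injection and early-step clamping, can be illustrated with a toy NumPy sketch. This is not the authors' implementation: the function names, the per-channel `scores`, the noise level `sigma`, the `clamp_steps` cutoff, and the `toy_denoise` stand-in for a pre-trained diffusion model are all hypothetical, and the choice to clamp by overwriting the untargeted channels with the source latent is an assumption made for illustration.

```python
import numpy as np

def top_k_channels(scores, k):
    """Return the indices of the k highest-scoring latent channels, sorted."""
    return np.sort(np.argsort(scores)[-k:])

def inject_noise(latent, channels, sigma, rng):
    """Noise only the selected channels of a (channels, frames) latent."""
    noised = latent.copy()
    eps = rng.standard_normal((len(channels), latent.shape[1]))
    # Variance-preserving mix of signal and Gaussian noise (an assumed form).
    noised[channels] = np.sqrt(1.0 - sigma**2) * latent[channels] + sigma * eps
    return noised

def clamp_early(current, source, channels, step, clamp_steps):
    """During the first `clamp_steps` reverse steps, reset every channel
    outside `channels` to the source latent; afterwards do nothing."""
    if step >= clamp_steps:
        return current
    keep = np.setdiff1d(np.arange(current.shape[0]), channels)
    clamped = current.copy()
    clamped[keep] = source[keep]
    return clamped

def toy_denoise(z):
    """Hypothetical stand-in for one reverse step of a pre-trained model."""
    return 0.9 * z

rng = np.random.default_rng(0)
source = rng.standard_normal((8, 16))           # toy latent: 8 channels, 16 frames
scores = np.array([0.1, 0.9, 0.2, 0.8, 0.05, 0.3, 0.7, 0.15])  # made-up values
timbre = top_k_channels(scores, k=3)            # channels treated as timbre-related
z = inject_noise(source, timbre, sigma=0.8, rng=rng)
for step in range(10):
    z = toy_denoise(z)
    z = clamp_early(z, source, timbre, step, clamp_steps=4)
```

In this sketch, a larger `sigma` or `k` gives the model more freedom to change the noised channels, while a larger `clamp_steps` holds the remaining channels to the input for longer, which mirrors the trade-off between timbral change and structural preservation that the abstract mentions.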

1. **Contribution 1**: A deep understanding of how Convolutional Neural Networks (CNN) achieve high accuracy in image classification.

2. **Contribution 2**: Analysis of how well existing networks adapt to new datasets through Transfer Learning.

3. **Contribution 3**: Systematic comparison between the performance of custom models and pre-trained models.

Simple Explanation with Metaphors

- Beginner Level: CNNs act like lenses in finding features within images.

- Intermediate Level: Transfer Learning is akin to applying knowledge from one language to learn another.

- Advanced Level: Custom models are like professional cameras, while pre-trained models resemble point-and-shoot cameras.

Sci-Tube Style Script

- Beginner: Understand how CNNs analyze images! Just as a lens captures small details, CNNs detect features within images.

- Intermediate: Transfer Learning means reusing learned information in new contexts. This is similar to applying knowledge of one language when learning another.

- Advanced: Custom models are like high-performance cameras optimized for specific tasks, while pre-trained models are general-purpose point-and-shoot cameras.

📄 Full Paper Content (ArXiv Source)

📊 Paper Figures