MethConvTransformer: Early AD Detection Through DNA Methylation

📝 Original Paper Info

- Title: MethConvTransformer: A Deep Learning Framework for Cross-Tissue Alzheimer's Disease Detection

- arXiv ID: 2601.00143

- Date: 2026-01-01

- Authors: Gang Qu, Guanghao Li, Zhongming Zhao

📝 Abstract

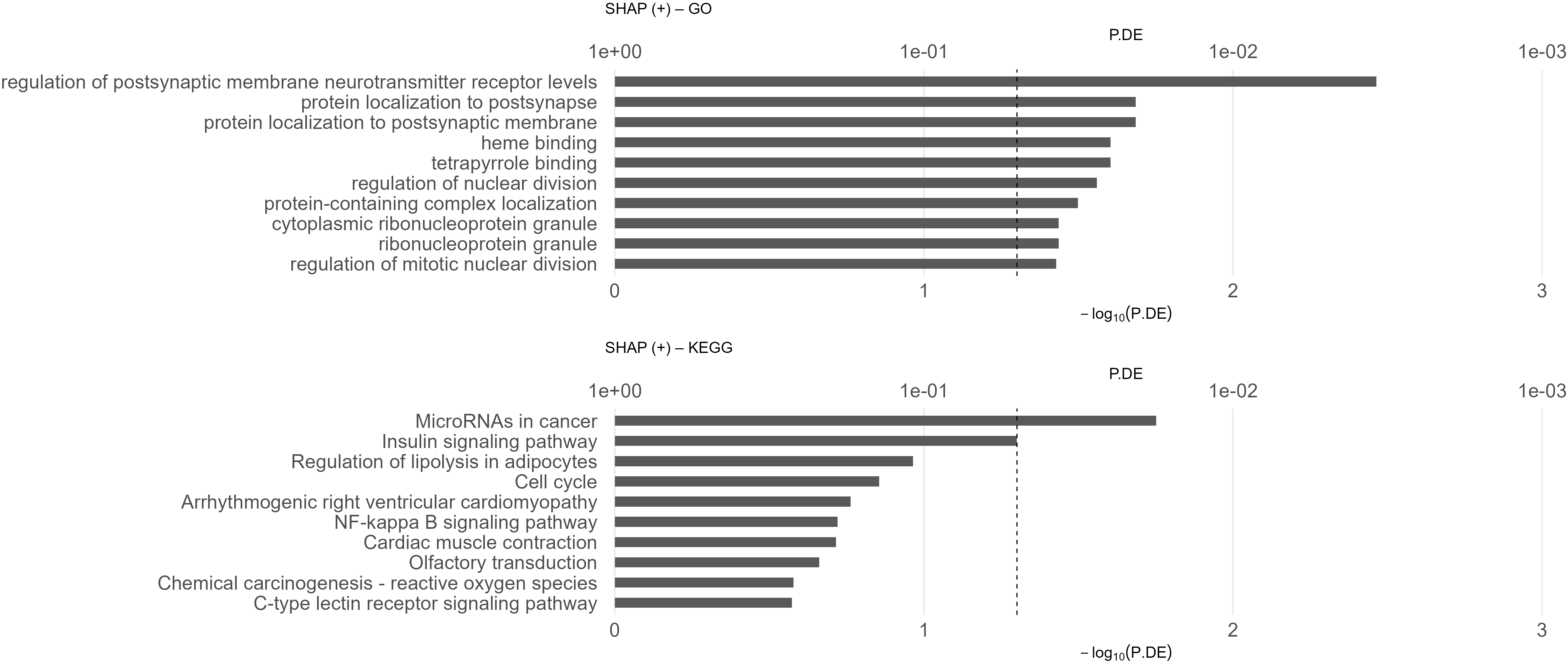

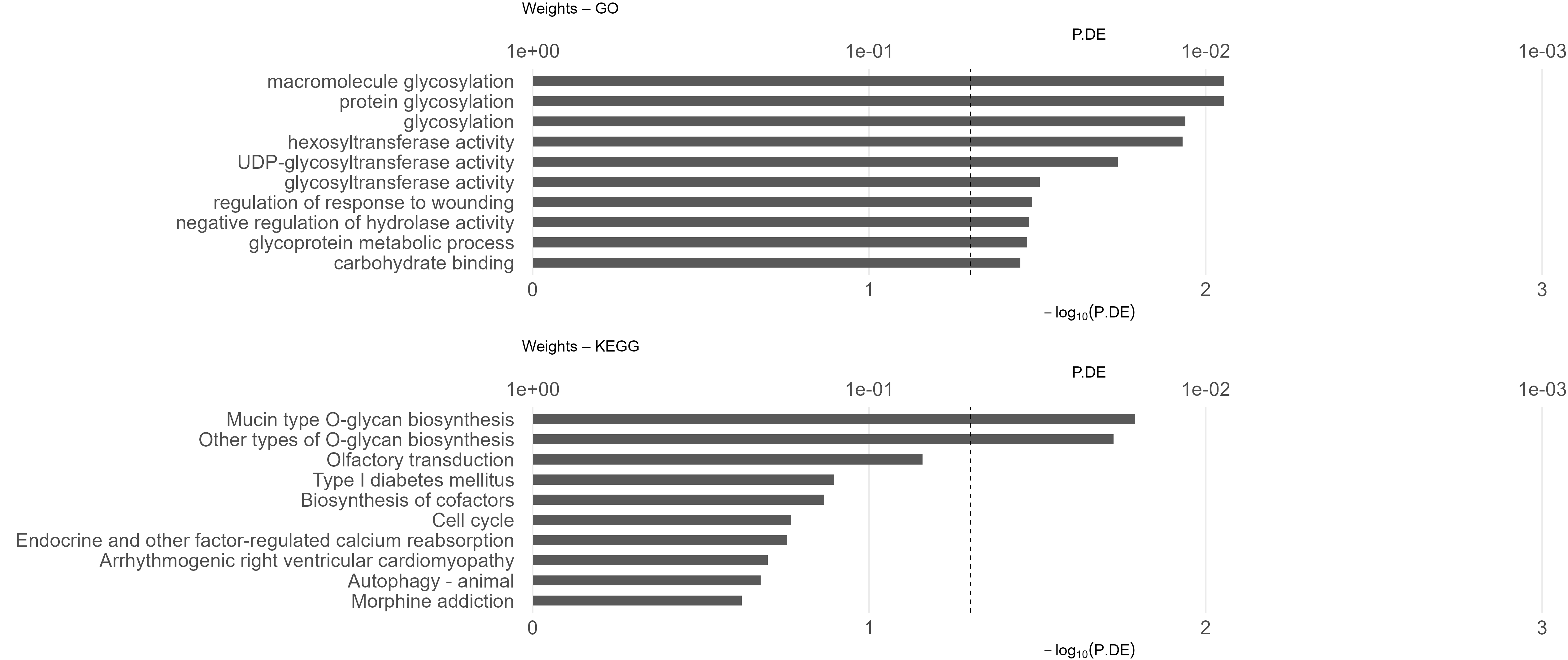

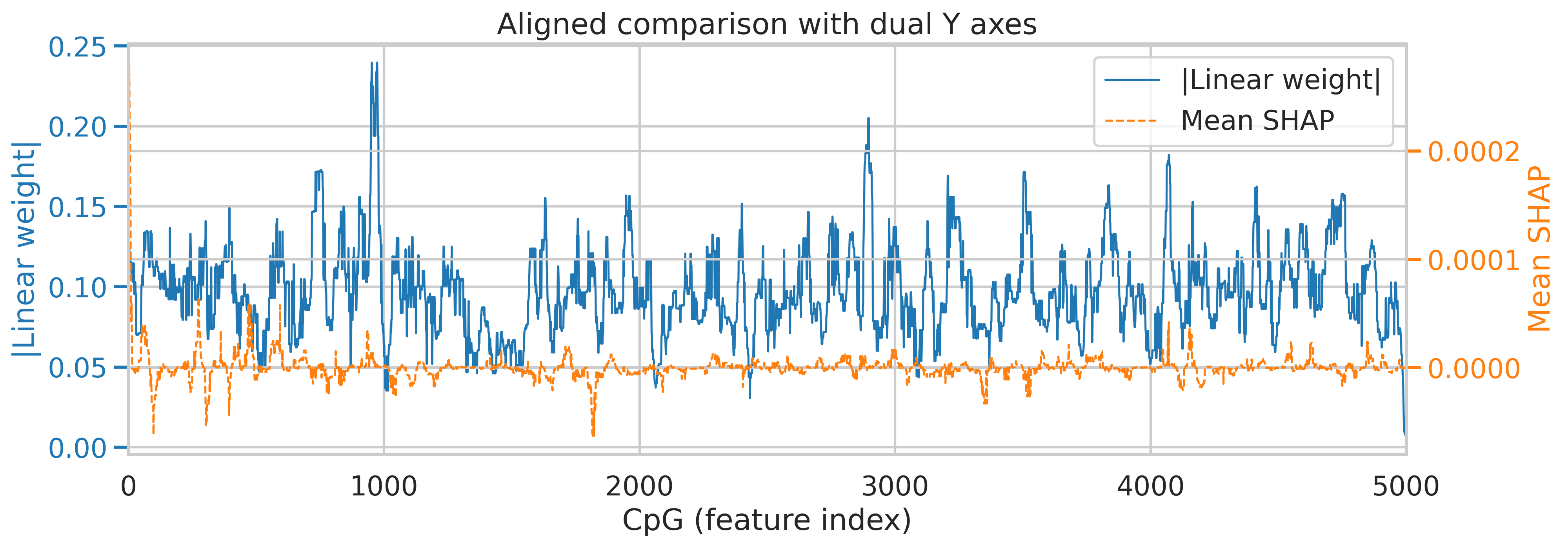

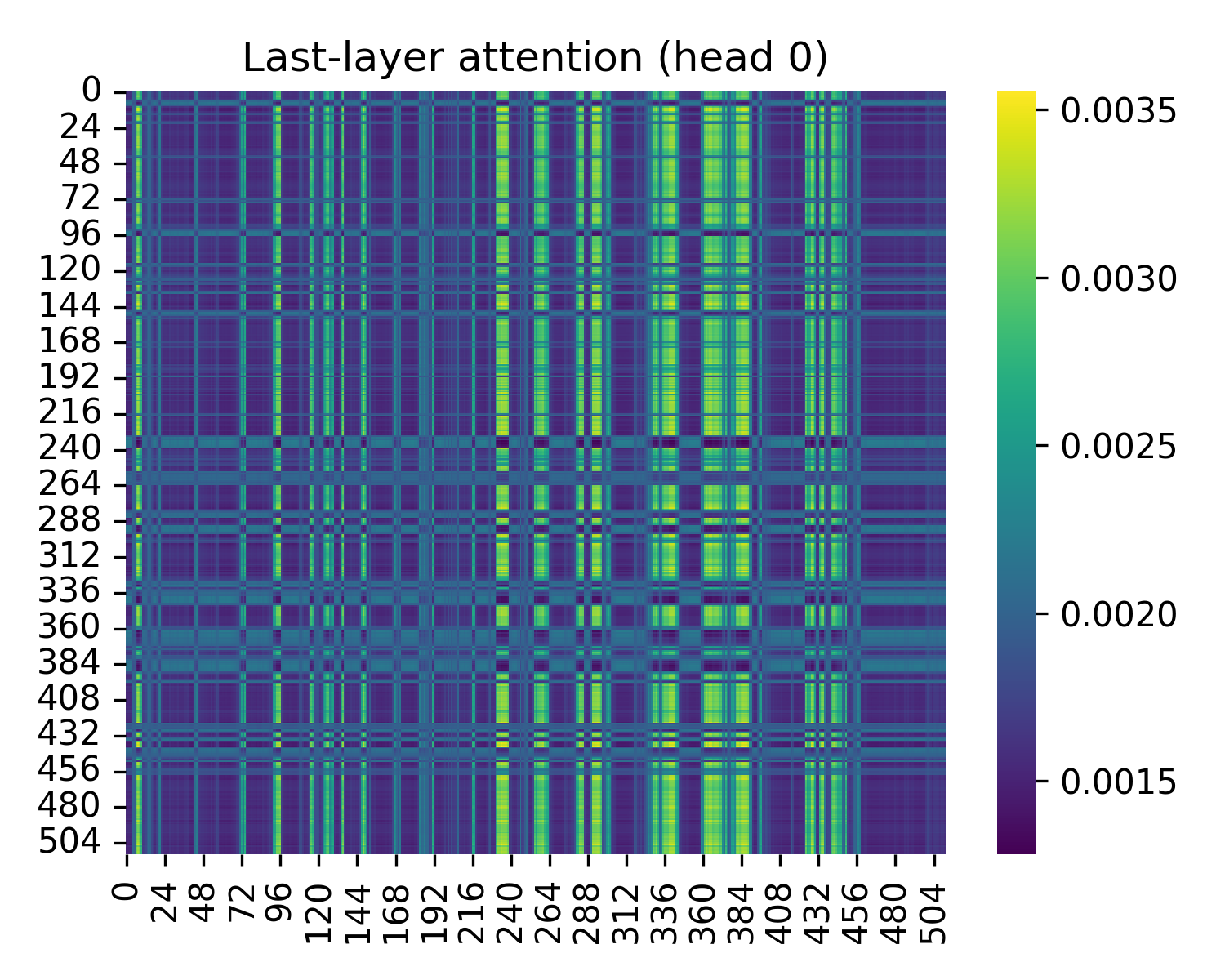

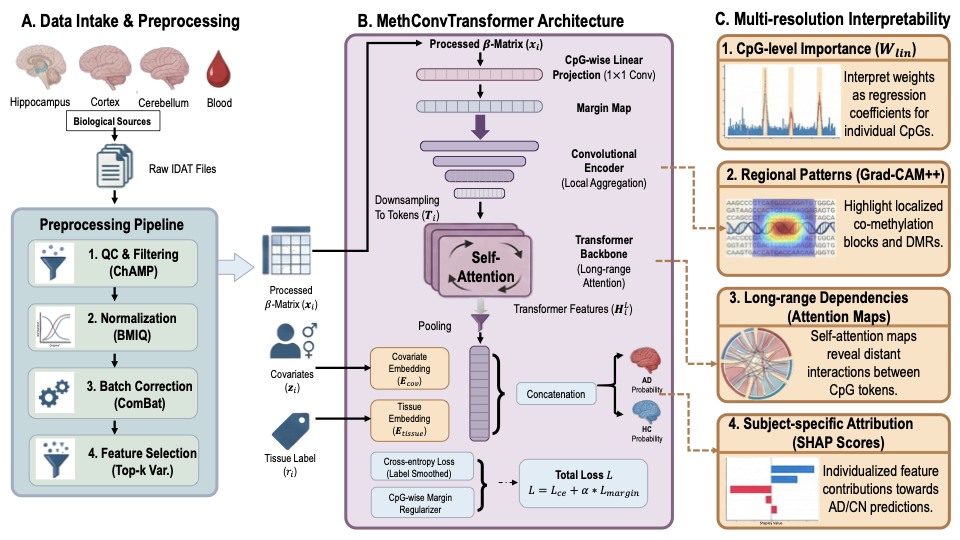

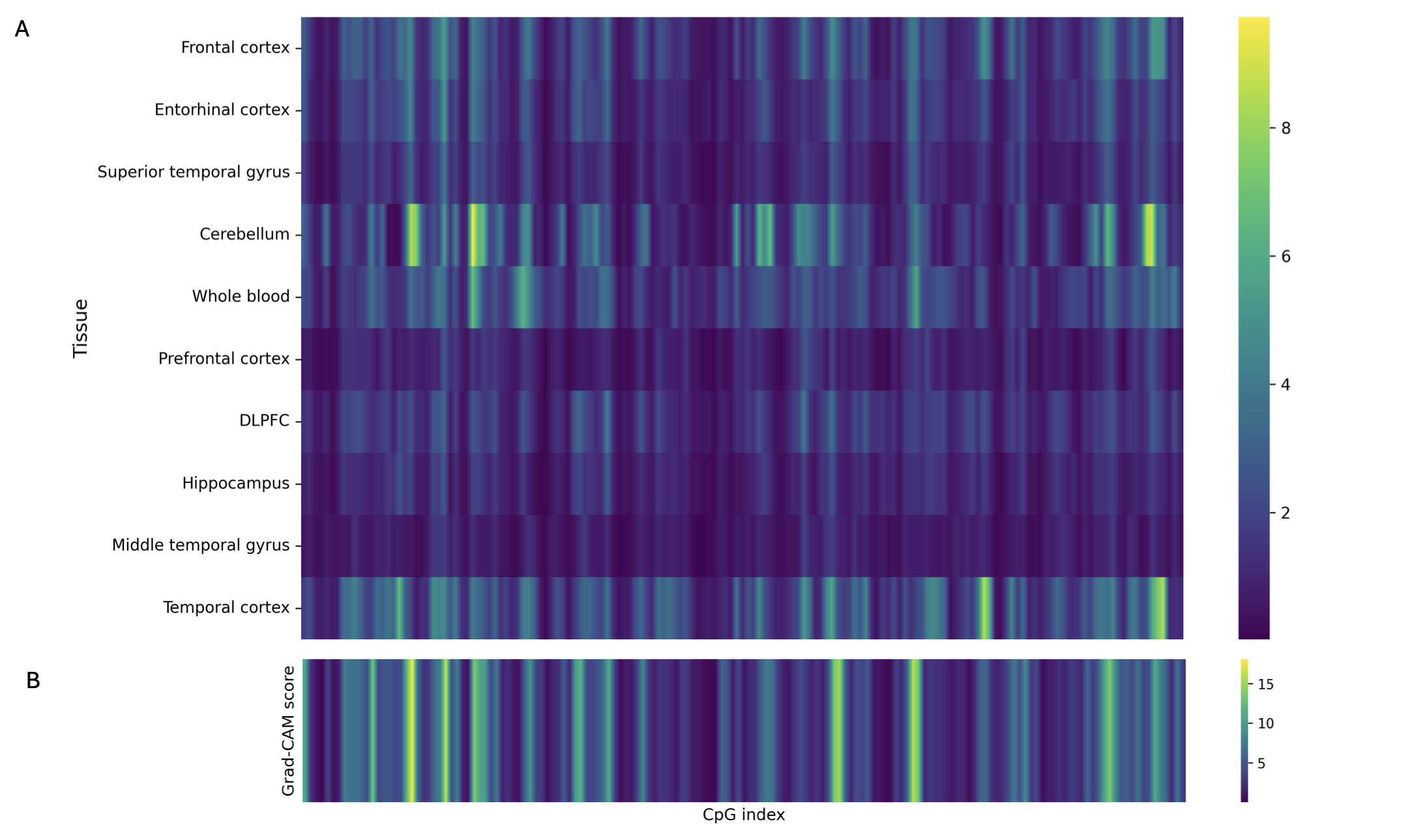

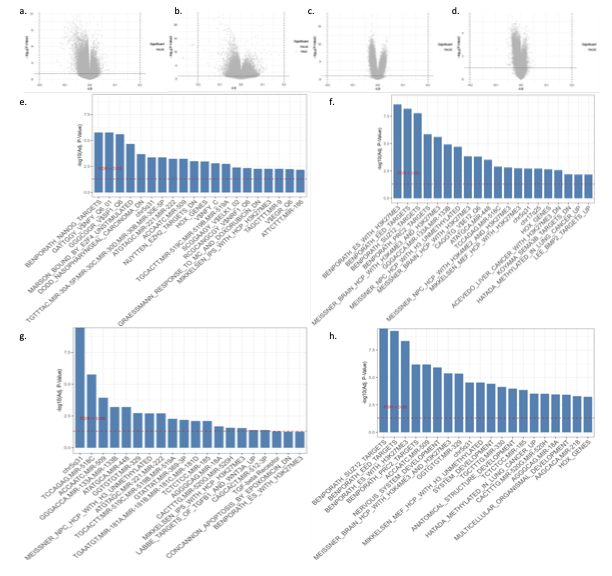

Alzheimer's disease (AD) is a multifactorial neurodegenerative disorder characterized by progressive cognitive decline and widespread epigenetic dysregulation in the brain. DNA methylation, as a stable yet dynamic epigenetic modification, holds promise as a noninvasive biomarker for early AD detection. However, methylation signatures vary substantially across tissues and studies, limiting reproducibility and translational utility. To address these challenges, we develop MethConvTransformer, a transformer-based deep learning framework that integrates DNA methylation profiles from both brain and peripheral tissues to enable biomarker discovery. The model couples a CpG-wise linear projection with convolutional and self-attention layers to capture local and long-range dependencies among CpG sites, while incorporating subject-level covariates and tissue embeddings to disentangle shared and region-specific methylation effects. In experiments across six GEO datasets and an independent ADNI validation cohort, our model consistently outperforms conventional machine-learning baselines, achieving superior discrimination and generalization. Moreover, interpretability analyses using linear projection, SHAP, and Grad-CAM++ reveal biologically meaningful methylation patterns aligned with AD-associated pathways, including immune receptor signaling, glycosylation, lipid metabolism, and endomembrane (ER/Golgi) organization. Together, these results indicate that MethConvTransformer delivers robust, cross-tissue epigenetic biomarkers for AD while providing multi-resolution interpretability, thereby advancing reproducible methylation-based diagnostics and offering testable hypotheses on disease mechanisms.

💡 Summary & Analysis
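The abstract describes the architecture only at a high level, so what follows is a toy forward pass in plain Python built on our own assumptions, not the authors' code. We read the "CpG-wise linear projection" as a separate weight and bias vector per CpG site, use a depthwise 1D convolution for local context and a single self-attention head for long-range dependencies, add the tissue embedding to every token, and append covariates before a logistic head. The class `TinyMethConvTransformer`, its layer sizes, and the random initialisation are hypothetical. The weights are untrained, so the output probability serves only as a shape check. `projection_importance` loosely illustrates reading CpG relevance off the projection weights. SHAP and Grad-CAM++ are not reproduced.

```python
import math
import random


def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    top = max(xs)
    exps = [math.exp(x - top) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid(z):
    """Logistic function that avoids overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


class TinyMethConvTransformer:
    """Hypothetical toy model: CpG-wise projection -> conv -> self-attention -> head."""

    def __init__(self, n_cpg, d=4, n_tissues=2, n_cov=2, kernel=3, seed=0):
        rng = random.Random(seed)

        def mat(rows, cols):
            return [[rng.gauss(0.0, 0.5) for _ in range(cols)] for _ in range(rows)]

        self.d, self.kernel = d, kernel
        # CpG-wise linear projection: each CpG site gets its own d-dim weight and bias.
        self.proj_w, self.proj_b = mat(n_cpg, d), mat(n_cpg, d)
        # One embedding per tissue, added to every CpG token (assumed additive).
        self.tissue_emb = mat(n_tissues, d)
        # Depthwise 1D convolution: one kernel of length `kernel` per channel.
        self.conv = mat(d, kernel)
        # Query/key/value projections for a single self-attention head.
        self.wq, self.wk, self.wv = mat(d, d), mat(d, d), mat(d, d)
        # Logistic head over pooled CpG features concatenated with covariates.
        self.head_w = mat(1, d + n_cov)[0]
        self.head_b = 0.0
        self.last_attention = None

    def forward(self, beta, tissue, covariates):
        """Return a toy P(AD) for one subject's beta values, tissue index and covariates."""
        d, k, n = self.d, self.kernel, len(beta)
        # 1) Project each CpG's scalar beta value to a d-dim token, add the tissue embedding.
        emb = self.tissue_emb[tissue]
        tokens = [[w * x + b + t for w, b, t in zip(ws, bs, emb)]
                  for x, ws, bs in zip(beta, self.proj_w, self.proj_b)]
        # 2) Depthwise convolution along the CpG axis (zero padding, ReLU): local context.
        pad = k // 2
        local = [[max(0.0, sum(self.conv[c][j] * tokens[i + j - pad][c]
                               for j in range(k) if 0 <= i + j - pad < n))
                  for c in range(d)] for i in range(n)]
        # 3) Scaled dot-product self-attention over all CpG tokens: long-range context.
        q = [matvec(self.wq, t) for t in local]
        keys = [matvec(self.wk, t) for t in local]
        vals = [matvec(self.wv, t) for t in local]
        attn = [softmax([sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d) for kj in keys])
                for qi in q]
        self.last_attention = attn
        mixed = [[sum(a * v[c] for a, v in zip(row, vals)) for c in range(d)] for row in attn]
        # 4) Mean-pool over CpGs, append subject covariates, apply the logistic head.
        pooled = [sum(t[c] for t in mixed) / n for c in range(d)]
        feats = pooled + list(covariates)
        return sigmoid(sum(w * f for w, f in zip(self.head_w, feats)) + self.head_b)

    def projection_importance(self):
        """Rank CpG indices by the L2 norm of their projection weights (a crude proxy)."""
        norms = [math.sqrt(sum(w * w for w in ws)) for ws in self.proj_w]
        return sorted(range(len(norms)), key=lambda j: norms[j], reverse=True)


model = TinyMethConvTransformer(n_cpg=6)
beta = [0.12, 0.85, 0.40, 0.67, 0.05, 0.91]  # made-up beta values for six CpGs
p = model.forward(beta, tissue=1, covariates=[0.7, 1.0])  # e.g. scaled age, sex
print(f"toy P(AD) = {p:.3f}; CpG ranking = {model.projection_importance()}")
```

The convolution only mixes neighbouring CpGs, while every attention row spans all CpGs. This split is one simple way to realise the "local and long-range dependencies" the abstract mentions.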

1. **Traditional Training vs Custom Model Development** Standard training methods are well understood and effective, but they show their limits when faced with new challenges. It is like attempting a novel task with outdated tools: sometimes building a new tool yields better results.

2. **Transfer Learning: Leveraging Existing Knowledge** Transfer learning applies a model already trained on one problem to a new, related problem. It is like putting knowledge learned from books to use in the real world, and it has proven highly effective in computer vision tasks.

3. **Pre-trained Models: Saving Time and Resources** Pre-trained models are models that have already been trained on large datasets. Starting from them saves researchers significant time and computational resources, much as cooking with pre-prepared ingredients is faster and easier.
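To make the transfer-learning and pre-trained-model ideas concrete, here is a minimal pure-Python sketch of one common variant, feature extraction. A feature extractor, whose weights we assume came from earlier pre-training, stays frozen, and only a new logistic-regression head is fitted on the new task. `PRETRAINED_W`, `extract_features`, `train_head`, `predict`, and the toy samples are hypothetical illustrations and are not part of MethConvTransformer. The other common variant, fine-tuning, would also update the extractor's weights.

```python
import math

# Hypothetical "pre-trained" extractor weights: assumed learned elsewhere, kept frozen here.
PRETRAINED_W = [[0.9, -0.4, 0.1], [0.2, 0.8, -0.5]]


def extract_features(x):
    """Frozen feature extractor: a fixed linear map followed by tanh."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in PRETRAINED_W]


def sigmoid(z):
    """Logistic function that avoids overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_head(samples, labels, epochs=500, lr=0.5):
    """Fit only a new logistic-regression head on top of the frozen features."""
    feats = [extract_features(x) for x in samples]  # computed once: extractor never changes
    w = [0.0] * len(feats[0])
    b = 0.0
    n = len(feats)
    for _ in range(epochs):
        gw = [0.0] * len(w)
        gb = 0.0
        for f, y in zip(feats, labels):
            # Gradient of the log-loss with respect to the logit is (prediction - label).
            err = sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b) - y
            for i, fi in enumerate(f):
                gw[i] += err * fi
            gb += err
        w = [wi - lr * g / n for wi, g in zip(w, gw)]
        b -= lr * gb / n
    return w, b


def predict(w, b, x):
    """Probability of the positive class for a raw input x."""
    return sigmoid(sum(wi * fi for wi, fi in zip(w, extract_features(x))) + b)


samples = [[1.0, 0.0, 0.0], [0.8, 0.1, 0.0], [-1.0, 0.0, 0.0], [-0.8, -0.1, 0.0]]  # synthetic toy data
labels = [1, 1, 0, 0]
w, b = train_head(samples, labels)
print(predict(w, b, [0.9, 0.0, 0.0]))
```

Because the extractor's features are computed once and never updated, training touches only a handful of head parameters. That is where the time and compute savings come from.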

📄 Full Paper Content (arXiv Source)

📊 Paper Figures