LLM Showdown: RAG vs. SFT vs. Dual-Agent in Code Security

📝 Original Paper Info

- Title: An Empirical Evaluation of LLM-Based Approaches for Code Vulnerability Detection: RAG, SFT, and Dual-Agent Systems

- ArXiv ID: 2601.00254

- Date: 2026-01-01

- Authors: Md Hasan Saju, Maher Muhtadi, Akramul Azim

📝 Abstract

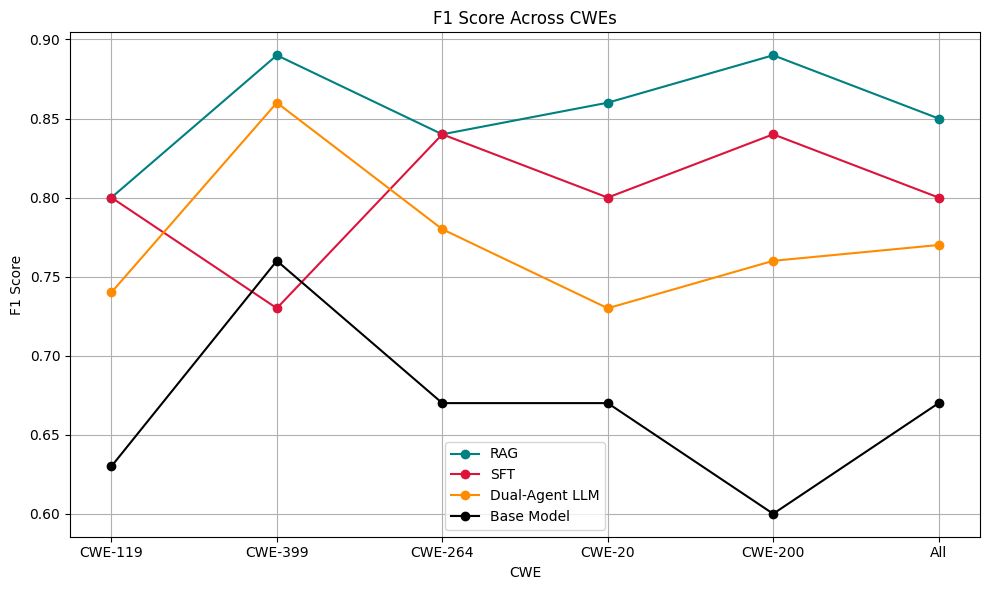

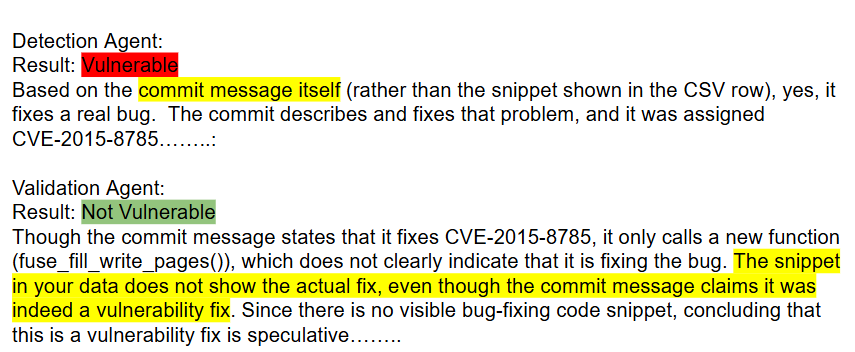

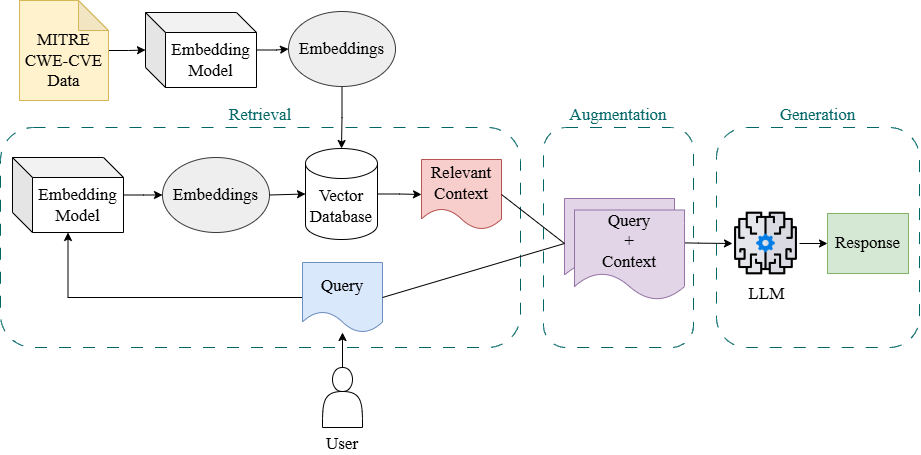

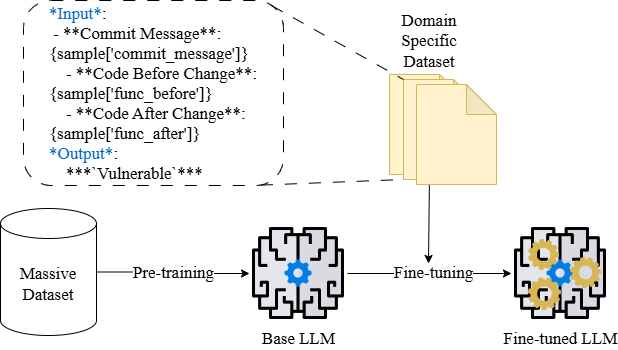

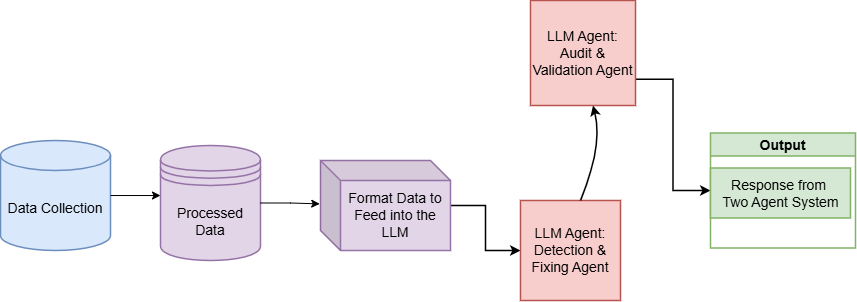

The rapid advancement of Large Language Models (LLMs) presents new opportunities for automated software vulnerability detection, a crucial task in securing modern codebases. This paper presents a comparative study of the effectiveness of LLM-based techniques for detecting software vulnerabilities. The study evaluates three approaches against a baseline LLM: Retrieval-Augmented Generation (RAG), Supervised Fine-Tuning (SFT), and a Dual-Agent LLM framework. A curated dataset was compiled from Big-Vul and real-world code repositories from GitHub, focusing on five critical Common Weakness Enumeration (CWE) categories: CWE-119, CWE-399, CWE-264, CWE-20, and CWE-200. Our RAG approach, which integrated external domain knowledge from the internet and the MITRE CWE database, achieved the highest overall accuracy (0.86) and F1 score (0.85), highlighting the value of contextual augmentation. Our SFT approach, implemented using parameter-efficient QLoRA adapters, also demonstrated strong performance. Our Dual-Agent system, an architecture in which a secondary agent audits and refines the output of the first, showed promise in improving reasoning transparency and error mitigation, with reduced resource overhead. These results emphasize that incorporating a domain expertise mechanism significantly strengthens the practical applicability of LLMs in real-world vulnerability detection tasks.

💡 Summary & Analysis

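1. A head-to-head comparison of three LLM-based techniques (RAG, SFT, and a Dual-Agent framework) against a baseline LLM for detecting software vulnerabilities.
2. A curated dataset drawn from Big-Vul and real-world GitHub repositories, covering five CWE categories: CWE-119, CWE-399, CWE-264, CWE-20, and CWE-200.
3. Evidence that domain knowledge matters: RAG with MITRE CWE and web context scored highest (accuracy 0.86, F1 0.85), QLoRA-based SFT was also strong, and the Dual-Agent design improved reasoning transparency and error mitigation with reduced resource overhead.

Simple Explanation and Metaphors: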

- Level 1: RAG is like a security reviewer who consults the MITRE CWE catalog before judging a piece of code, instead of relying on memory alone.

- Level 2: SFT with QLoRA is like giving that reviewer a short specialist course: only small adapter weights are trained on vulnerability examples while the quantized base model stays frozen, which keeps training affordable.

- Level 3: The Dual-Agent system is like having a second reviewer check the first reviewer's verdict, catching mistakes and making the reasoning easier to follow.
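The abstract describes a Dual-Agent system in which a second agent audits and refines the first agent's output, and reports results as accuracy and F1. Below is a minimal Python sketch of that flow under stated assumptions, not the authors' implementation: agents are modeled as plain prompt-to-text callables (`Agent`), the task is treated as binary (vulnerable vs. safe), the fixed `CWE_NOTES` table of MITRE CWE names stands in for retrieved context, and `stub_detector`/`stub_auditor` are hypothetical toy agents in place of real LLM calls. For brevity the sketch folds CWE context into the first agent's prompt; in the paper, RAG and the Dual-Agent system are evaluated as separate approaches.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical agent type: any function mapping a prompt to a model reply.
# In practice this would wrap an LLM call; here it is left abstract.
Agent = Callable[[str], str]

# Assumption: a fixed table of MITRE CWE names for the five studied categories,
# standing in for context retrieved from the MITRE CWE database and the web.
CWE_NOTES: Dict[str, str] = {
    "CWE-119": "Improper Restriction of Operations within the Bounds of a Memory Buffer",
    "CWE-399": "Resource Management Errors",
    "CWE-264": "Permissions, Privileges, and Access Controls",
    "CWE-20": "Improper Input Validation",
    "CWE-200": "Exposure of Sensitive Information to an Unauthorized Actor",
}


def dual_agent_detect(code: str, detector: Agent, auditor: Agent) -> bool:
    """Return True if the auditor's final verdict is VULNERABLE (binary task assumed)."""
    context = "\n".join(f"{cwe}: {name}" for cwe, name in CWE_NOTES.items())
    # Agent 1: context-augmented first-pass analysis.
    first = detector(
        f"Reference weaknesses:\n{context}\n\n"
        f"Answer VULNERABLE or SAFE, then explain.\nCode:\n{code}"
    )
    # Agent 2: audits the first verdict and issues the final answer.
    final = auditor(
        f"Audit this analysis; answer VULNERABLE or SAFE.\n"
        f"Code:\n{code}\nAnalysis:\n{first}"
    )
    return final.strip().upper().startswith("VULNERABLE")


def accuracy_f1(y_true: List[bool], y_pred: List[bool]) -> Tuple[float, float]:
    """Accuracy and F1 with 'vulnerable' (True) as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t and p for t, p in pairs)
    fp = sum(p and not t for t, p in pairs)
    fn = sum(t and not p for t, p in pairs)
    acc = sum(t == p for t, p in pairs) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return acc, f1


# Hypothetical toy agents for demonstration only (not real LLMs).
def stub_detector(prompt: str) -> str:
    # Over-eager first pass: flags any *cpy( call, including bounded strncpy.
    return "VULNERABLE: possible unbounded copy" if "cpy(" in prompt else "SAFE"


def stub_auditor(prompt: str) -> str:
    # Confirms a VULNERABLE verdict only when the code really calls strcpy.
    code_part, analysis = prompt.split("\nAnalysis:\n", 1)
    confirmed = analysis.upper().startswith("VULNERABLE") and "strcpy(" in code_part
    return "VULNERABLE" if confirmed else "SAFE"


if __name__ == "__main__":
    samples = [
        "char b[8]; strcpy(b, input);",
        "char b[8]; strncpy(b, input, sizeof b - 1);",
        "int x = 1;",
    ]
    labels = [True, False, False]
    preds = [dual_agent_detect(c, stub_detector, stub_auditor) for c in samples]
    print(preds, accuracy_f1(labels, preds))
```

In this toy run the detector also flags the bounded `strncpy` call, and the auditor overrides that false positive, which mirrors the error-mitigation role the abstract attributes to the second agent. A real auditor would be an LLM reasoning over the first agent's explanation rather than a string check.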

📄 Full Paper Content (ArXiv Source)

📊 Paper Figures