Big AI is accelerating the metacrisis: What can we do?

📝 Original Paper Info

- Title: Big AI is accelerating the metacrisis: What can we do?

- ArXiv ID: 2512.24863

- Date: 2025-12-31

- Authors: Steven Bird

📝 Abstract

The world is in the grip of ecological, meaning, and language crises which are converging into a metacrisis. Big AI is accelerating them all. Language engineers are playing a central role, persisting with a scalability story that is failing humanity, supplying critical talent to plutocrats and kleptocrats, and creating new technologies as if the whole endeavour was value-free. We urgently need to explore alternatives, applying our collective intelligence to design a life-affirming future for NLP that is centered on human flourishing on a living planet.

💡 Summary & Analysis

1. **Key Contributions**:

- Analyzes the three crises (ecological, meaning, language) in which LLMs are implicated, and how those crises interact.

- Argues for establishing protected spaces in academia to discuss problems with LLMs, balancing technological progress with social responsibility.

- Emphasizes that language technology can play a crucial role in sustaining human life on Earth, and proposes that language engineers should be aware of the political and value-laden nature of their work.

Simple Explanation with Metaphors:

- The paper describes large language models as “demons destroying forests,” emphasizing the need for protected areas to mitigate these negative impacts.

- It argues for a safe space to discuss LLM issues, likening it to a ‘room where doctors debate patient health in hospitals.’


Sci-Tube Style Script:

- “Hello everyone, today we’re looking at how large language models (LLMs) impact human society and the Earth’s ecosystem. First, LLMs put significant environmental stress by operating data centers. Second, they undermine social meanings, leading to a crisis of truth and meaning. Finally, LLMs severely affect minority language communities, necessitating more equitable and responsible approaches.”

📄 Full Paper Content (ArXiv Source)

Large Language Models and Generative AI have become “a source of great fascination” leading to “wildly hyperbolic theories of the virtual realm”, a new so-called “Intelligent Age defined by advancements in knowledge, health, culture and societal welfare”. This AI Gold Rush has become a “silent nuclear holocaust in our information ecosystem”.

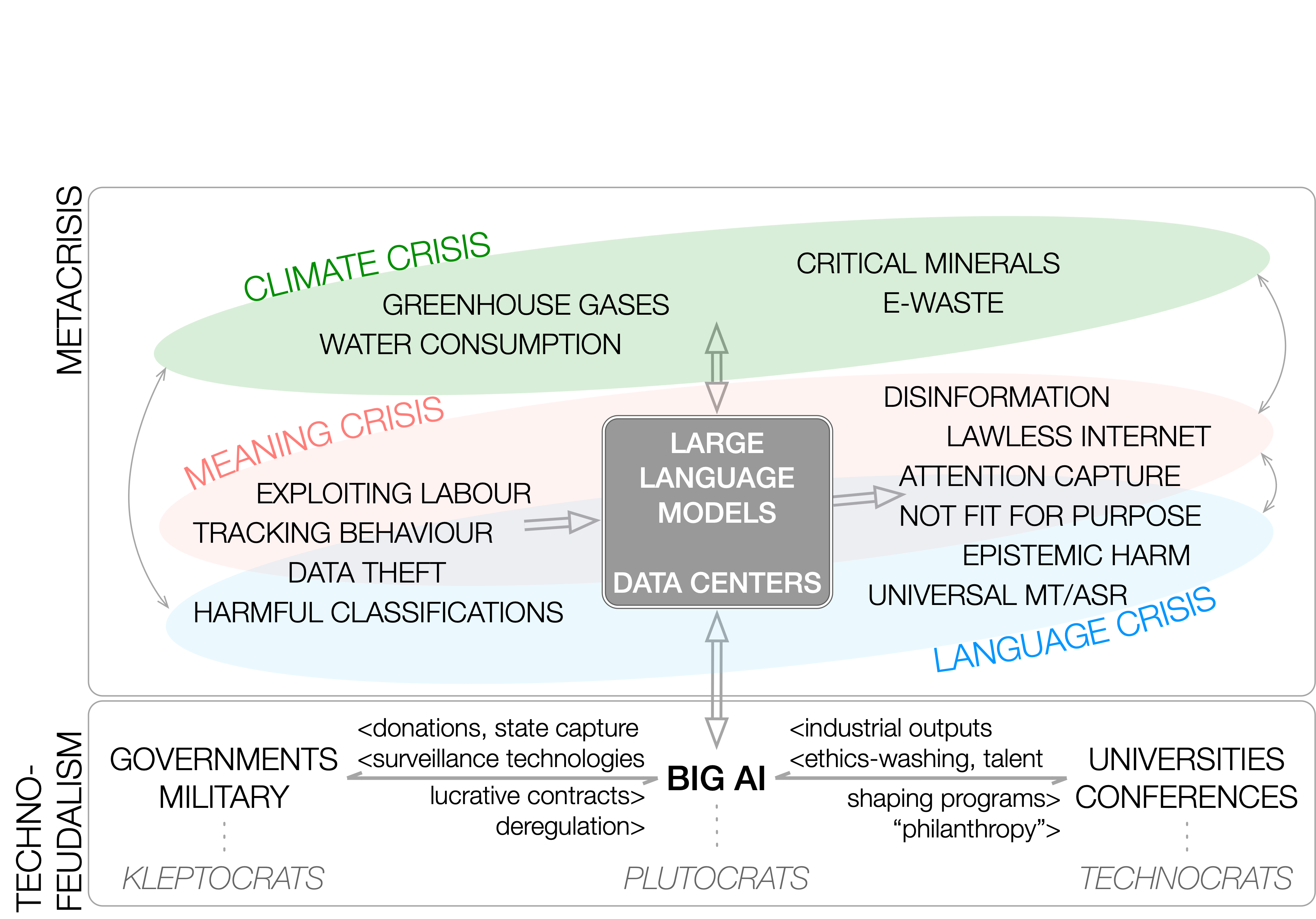

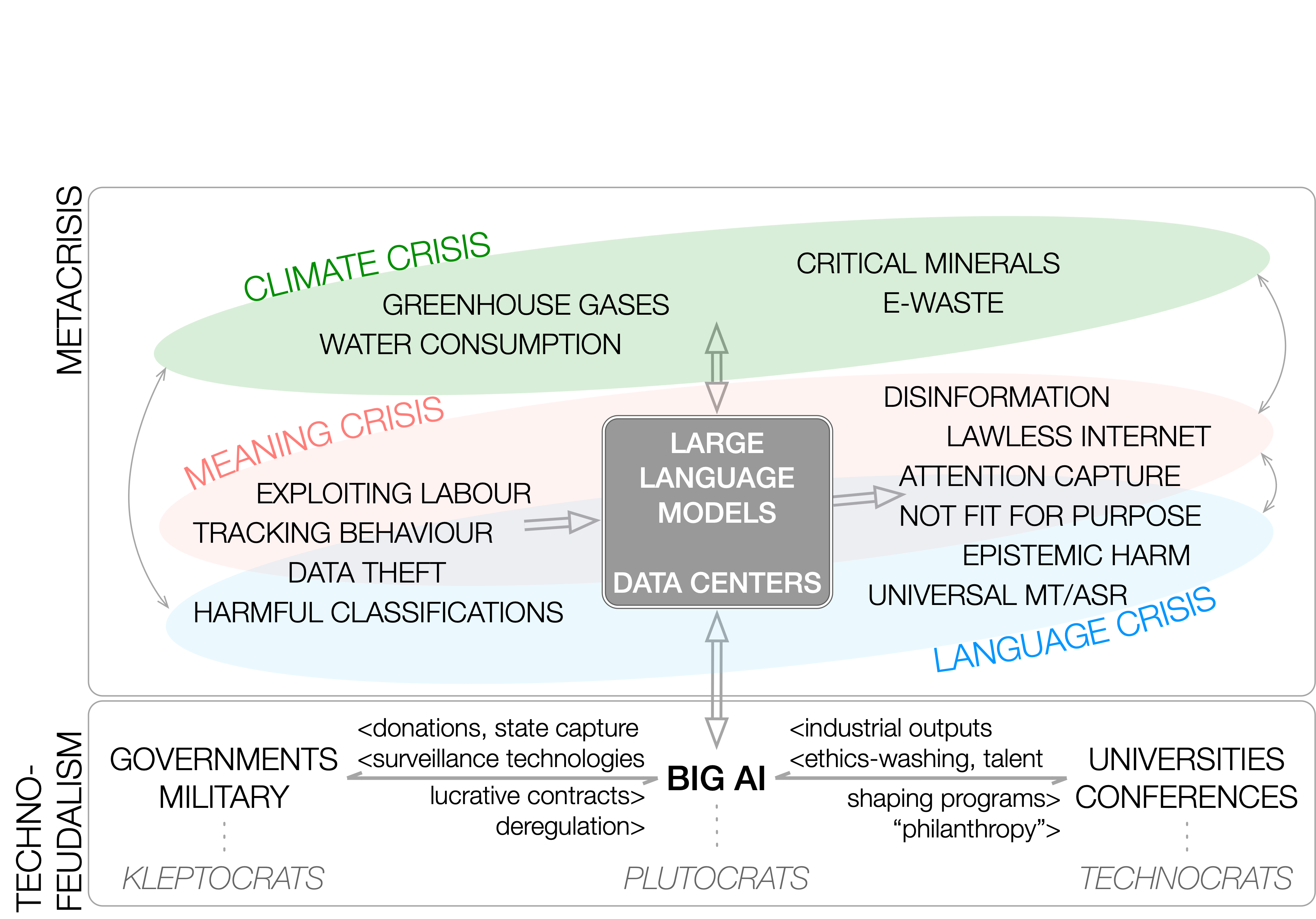

“Big AI” – the corporations, the state capture, the “various kinds of automation sold as AI” – is escalating global crises which are now reaching tipping point. Six of nine planetary boundaries have been breached. There is a real prospect of ecosystem, economic, and geopolitical collapse. Big AI is now fuelling this system while stoking itself. Big AI is accelerating the metacrisis (cf. Fig. 1).

Our professional body, the Association for Computational Linguistics (ACL), is possibly the largest publisher of LLM research. Authors warrant that their work complies with the ACL Code of Ethics, “understanding that the public good is the paramount consideration”. How are we to reconcile our professional obligation with the harms caused by the technologies we are creating?

To speak out is to take the ACL Code seriously. Yet earlier versions of this article were three times rejected from ACL venues: Unempirical! Courts controversy! Underestimates GenAI! A political pamphlet! No facts were contested. It seems that the problem is not with truth but with truth-telling. Kind people advised me not to criticise but to participate, saying that this AI-driven future is inevitable.

If nothing else, we agree that language is key, only not language as sequence data but language as humanity’s greatest technology for sustaining our common life. AI scales, not through huge LLMs run in Big AI’s polluting data centres, but through amplifying the social, leveraging the exponential possibilities offered by human networks.

None of the above is to blame individuals. “We are all complicit. We’ve allowed the ‘market’ to define what we value so that the redefined common good seems to depend on profligate lifestyles that enrich the sellers while impoverishing the soul and the earth”. Brilliant language engineers have been recruited into structures that convert public goods like corpora into private goods like LLMs, while extracting knowledge and behaviour through deception, exploitation, and theft. How do we operate instead as professionals, dedicated to the public good as paramount?

Cascading Crises

LLMs are implicated in three crises

When it comes to LLMs, three crises are particularly significant thanks to the way language constitutes our common life: our stewardship of the planet; the wellbeing of our communities; and the diversity of our cultures. We consider each in turn.

Ecological crisis:

The world is experiencing a cascade of crises encompassing climate, pollution, and biodiversity, linked to heatwaves, flash floods, drought and wildfires. To this, Big AI’s data centres add excessive greenhouse gas emissions, water usage, e-waste, and demand for critical minerals. Societal collapse is a plausible outcome due to: diminishing returns from adding complexity to social structures; the possibility of environmental perturbations that surpass the limits of adaptation; and the way risks propagate through complex systems.

Meaning crisis:

Big AI’s attention economy is linked to social media addiction. LLMs perpetuate harmful classifications. LLMs undermine critical thinking, knowledge diversity, and democracy. LLMs generate fake news, education, and healthcare, lacking access to truth or social norms. The result is a crisis of truth and meaning.

Language crisis:

Minoritised speech communities experience economic and cultural alienation, displacement, and genocide leading to language extinction. The problems are sociopolitical and not fixed by language technologies, with their epistemic harms. Claims for the universal applicability of technologies like speech recognition and machine translation neglect the realities that: (a) most of the world’s population is multilingual, already using a few dozen contact languages for information access and economic participation; (b) the 90% of the world’s languages outside the most populous are generally non-bounded, non-homogeneous, non-written, and non-standardised.

LLMs amplify crisis interactions

Ecological crisis $`\leftrightarrow`$ meaning crisis:

The ecological crisis feeds the meaning crisis when LLM content uses eco-anxiety to capture attention, and doomscrolling numbs eco-anxiety. In the reverse direction, LLM content on social media narcotises dysfunction and apathy, making it harder for communities to unite in the face of the ecological crisis.

Meaning crisis $`\leftrightarrow`$ language crisis:

The meaning crisis feeds the language crisis when the avalanche of attention-grabbing LLM content from dominant languages crowds out local languages, and when attention capture leads to non-participation in local lifeworlds. In the reverse direction, language loss undermines the place of elders, disrupts knowledge transmission, and harms wellbeing and cognition. The data free-for-all violates Indigenous (and human) sovereignty, stoking both crises.

Language crisis $`\leftrightarrow`$ ecological crisis:

The language crisis feeds the ecological crisis when it undermines the capacity of Indigenous communities to take care of their storied ancestral lands which are rich in species diversity; and when it accelerates the loss of medicinal knowledge as a resource for human wellbeing. In the reverse direction, mining and climate disasters intensified by data centres displace people from their lands, while climate change and pandemics decimate linguistic communities, and loss of ecological diversity undermines cultures which depend on plant and animal species.

The metacrisis:

The world’s crises are interconnected systems, drawing together in what has been called the polycrisis or the metacrisis, “a complex system of interrelated, varied, and multi-layered crises”. As shown, Big AI, its LLMs, and data centres are all implicated. On top of this, Big AI accelerates itself through the phenomenon of AI hype.

In short, Big AI is accelerating the metacrisis.

A Sober Assessment

Big AI will not govern itself

Big AI’s interest in ethics functions to minimise regulatory oversight, shaping governments and academia in “a relational outcome of entangled dynamics between design decisions, norms, and power” (cf. Figure 1). Big AI purports to “solve” the ethical problems of AI with more AI, thanks to “quantitative notions of fairness [that] funnel our thinking into narrow silos”, leading to the devastating absence of the rule of law in the virtual world.

Politically-motivated “philanthropy” and ethics washing function to maintain a deregulated space, in what is known as the “mirage of algorithmic governance”, a “façade that justifies deregulation, self-regulation or market driven governance”. Big AI puts moneymaking before public safety. “The idea that economic growth and the pursuit of profit should be tempered in the interests of individual well-being and less unequal societies is anathema to those in the vanguard of economic libertarianism”.

There are many initiatives to regulate Big AI, but “fully characterizing the social and environmental impacts of Gen-AI is complex and hinders targeted regulations”. “It will be far from straightforward to implement [AI ethics frameworks] in practice to constrain the behaviour of those with disproportionate power to shape AI development and governance”. This is no reason not to try.

The benefits do not justify the harms

In a consequentialist moment we might be tempted to dismiss the manifold harms of Big AI (§2) in view of the grandiose promises: from “eliminating poverty to establishing sustainable cities and communities and providing quality education for all”. While waiting for those benefits, we can still consider the quality of the science.

Sequence models are far removed from natural language. Much work is superficial and fashion-driven, with SOTA-chasing and endless “tables with numbers”. Bias is generally understood “as though it is a bug to be fixed rather than a feature of classification itself”. The actors are “deafeningly male and white and technoheroic”, valorising technical novelty over all else. AI isn’t working, or even helpful, for most people on the planet.

Only a minority of researchers can access SOTA tools, and they must use exponentially more resources for only linear performance gains. Review processes allow industry “research” to leverage the prestige of conference publication into reputational benefits for private companies. In spite of its manifold harms (§2), LLM research receives scholarly recognition. Big AI is free to “police its own use of artificial intelligence [leading to] the creation of a prominent conference on ‘Fairness, Accountability, and Transparency’ [sponsored by] Google, Facebook, and Microsoft”. This is the Big Tobacco playbook all over again.

The scalability story is a myth

Data centres cannot keep growing on a planet facing climate catastrophe. AI safety is inherently not scalable, and so we see a futile quest where guardrails are piled on top of monitoring systems on top of mitigations in a perpetual game of Whac-a-Mole. Big AI’s dirty secret is the annotation sweatshops located in the “hidden outposts of AI”. “The myth of AI as affordable and efficient depends on layers of exploitation, including the extraction of mass unpaid labor to fine-tune the AI systems of the richest companies on earth”.

Despair is not an option

It is tempting to despair. The long-awaited traction of NLP wasn’t meant to be like this. Yet “restoration is a powerful antidote to despair. Restoration offers concrete means by which humans can once again enter into positive, creative relationship with the more-than-human world, meeting responsibilities that are simultaneously material and spiritual. It’s not enough to grieve. It’s not enough to just stop doing bad things”.

What Can We Do?

1. Public good as the paramount consideration.

The ACL Code applies to the conduct of its members, not just our publications. It is not enough to assert that “someone’s going to do it anyway” (the myth of technological inevitability), or that “my contribution is but a small cog in a large machine” (the problem of many hands), or that “my work is connecting the world for good” (the myth that universal technology artefacts solve social problems).

Faced with the metacrisis (§2), the great challenge of our time is to “rebuild our societies, starting from what’s right in front of us: our areas of influence”. This is fundamentally a communal activity: “All of our flourishing is mutual”.

2. Protect NLP/ACL from corporate capture.

We need to address the inequity that exists when people with Big AI sponsorship have a disproportionate opportunity to make SOTA contributions. We also need to recognise that the public good principle of the ACL is not aligned with actors who “understand AI as a commercial product that should be kept as a closely guarded secret, and used to make profits for private companies”.

There is a prima facie conflict of interest when Big AI sponsors our professional bodies and when Big AI employees serve as office-bearers. Whether real or perceived, such conflicts must be declared and managed, as we navigate the “internal battle between those in charge of the business, which [needs] to be on the right side of power, and the independent editorial hierarchy, which [needs] to be responsible to the people”.

3. Cultivate actual natural language processing.

The ACL has the liberty to re-assert the scope of computational linguistics in its calls for papers, centering the phenomenon of natural human language, and to use evaluation “as a force to drive change”.

4. Establish protected spaces for critical NLP.

Review processes should ensure that scholarly contributions are not rejected simply for challenging the status quo. This includes research on power dynamics and injustices, which may encounter friction from reviewers who want to guard the space for ideologically safe contributions.

5. Articulate a vision for life-sustaining research.

What is our vision for language technology in the context of human flourishing on a living planet? New conference themes, workshops, and journal special issues are a start. However, I believe we need to promote new framings, methods, and evaluations, e.g.: the principles of data feminism; the 10 Point Plan to Address the Information Crisis; community-centric approaches; the Ethics of Care; decolonising methods; Bender’s proposals for resisting dehumanisation; and Sen’s Capability Approach. Language engineers need to be made aware of the political and value-laden nature of their work.

6. Leadership with public statements & policies.

In view of the harms of LLMs and the imperative to understand the public good as paramount, the ACL could provide informational statements and develop policy positions. This would serve ACL Ends “to represent computational linguistics to foundations and government agencies worldwide” and “to provide information on computational linguistics to the general public”.

7. Fundamental shift in perception and values.

Taking action is difficult unless we are “nurtured by deeply held values and ways of seeing ourselves and the world”. Only then can we stop seeing social problems as opportunities for universal technologies, resist technofeudalism and the “AI Arms Race”, replace our quest for efficiency with “values such as autonomy, creativity, ethics, slowness, carefulness”, supplant extractivist thinking with abundant intelligences, and embrace natural language as humanity’s greatest technology for enacting our agency and sustaining our common life.

References

Mohamed Abdalla and Moustafa Abdalla. 2021. The Grey Hoodie Project: Big Tobacco, Big Tech, and the threat on academic integrity . In Proceedings of the 2021 AAAI/ACM Conference on AI, Ethics, and Society, pages 287–297. ACM.

Mohamed Abdalla, Jan Philip Wahle, Terry Ruas, Aurélie Névéol, Fanny Ducel, Saif Mohammad, and Karen Fort. 2023. The elephant in the room: Analyzing the presence of Big Tech in natural language processing research . In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics, pages 13141–13160. ACL.

ACL. 2017. ACL policy on political statements and actions. https://aclweb.org/adminwiki/index.php/ACL_Policy_on_Political_Statements_and_Actions , Accessed 20251229.

ACL. 2020. Code of Ethics. https://www.aclweb.org/portal/content/acl-code-ethics , Accessed 20250204.

Will Aitken, Mohamed Abdalla, Karen Rudie, and Catherine Stinson. 2024. Collaboration or corporate capture? quantifying NLP’s reliance on industry artifacts and contributions . In Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics, pages 3433–3448. ACL.

Linda Martín Alcoff. 2022. Extractivist epistemologies. Tapuya: Latin American Science, Technology and Society, 5:2127231.

Ramón Alvarado. 2023. AI as an epistemic technology . Science and Engineering Ethics, 29(5):32.

Julia Webster Ayuso. 2025. The languages lost to climate change. Noema Magazine. https://www.noemamag.com/the-languages-lost-to-climate-change/ , Accessed 20250217.

Noman Bashir, Priya Donti, James Cuff, Sydney Sroka, Marija Ilic, Vivienne Sze, Christina Delimitrou, and Elsa Olivetti. 2024. The climate and sustainability implications of Generative AI. An MIT Exploration of Generative AI. https://mit-genai.pubpub.org/pub/8ulgrckc , Accessed 20250518.

Jo Bates, Monika Fratczak, Helen Kennedy, Itzelle Medina Perea, and Erinma Ochu. 2025. Feeding the machine: Practitioner experiences of efforts to overcome AI’s data dilemma . Big Data and Society, 12(4):20539517251396092.

Quinn Beato. 2024. The Paradox of Climate Doomerism. Ph.D. thesis, University of Colorado Boulder.

Emily M Bender. 2024. Resisting dehumanization in the Age of “AI” . Current Directions in Psychological Science, 33:114–120.

Emily M Bender, Timnit Gebru, Angelina McMillan-Major, and Shmargaret Shmitchell. 2021. On the dangers of stochastic parrots: Can language models be too big? In Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency, pages 610–623. ACM.

Emily M. Bender and Alex Hanna. 2025. The AI Con: How To Fight Big Tech’s Hype and Create the Future We Want. Penguin.

Emily M. Bender and Alexander Koller. 2020. Climbing towards NLU: On meaning, form, and understanding in the Age of Data . In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, pages 5185–5198. ACL.

Marianne Bertrand, Matilde Bombardini, Raymond Fisman, and Francesco Trebbi. 2020. Tax-exempt lobbying: Corporate philanthropy as a tool for political influence . American Economic Review, 110(7):2065–2102.

Vikram R Bhargava and Manuel Velasquez. 2021. Ethics of the attention economy: The problem of social media addiction . Business Ethics Quarterly, 31:321–359.

Roy Bhaskar, Sean Esbjörn-Hargens, Nicholas Hedlund, and Mervyn Hartwig. 2016. Metatheory for the Twenty-First Century: Critical Realism and Integral Theory in Dialogue . Routledge.

Elettra Bietti. 2021. From ethics washing to ethics bashing: A moral philosophy view on tech ethics . Journal of Social Computing, 2:266–283.

Steven Bird. 2020. Decolonising speech and language technology. In Proceedings of the 28th International Conference on Computational Linguistics, pages 3504–3519. ICCL.

Steven Bird. 2024. Must NLP be extractive? In Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics, pages 14915–14929. ACL.

Steven Bird and Dean Yibarbuk. 2024. Centering the speech community . In Proceedings of the 18th Conference of the European Association for Computational Linguistics, pages 826–839. ACL.

Abeba Birhane, Pratyusha Kalluri, Dallas Card, William Agnew, Ravit Dotan, and Michelle Bao. 2022. The values encoded in machine learning research . In Proceedings of the 2022 ACM Conference on Fairness, Accountability, and Transparency, pages 173–184. ACM.

Rishi Bommasani. 2023. Evaluation for change . In Findings of the Association for Computational Linguistics, pages 8227–8239. ACL.

Faith Boninger and T Philip Nichols. 2025. Fit for purpose? how today’s commercial digital platforms subvert key goals of public education. http://nepc.colorado.edu/publication/digital-platforms , Accessed 20250930.

Mikael Brunila. 2025. Cosine capital: Large language models and the embedding of all things . Big Data and Society, 12(4):20539517251386055.

Jenna Burrell. 2024. Automated decision-making as domination . First Monday, 29(4).

Grzegorz Chrupała. 2023. Putting natural in natural language processing . In Findings of the Association for Computational Linguistics, pages 7820–7827. ACL.

Kenneth Ward Church and Valia Kordoni. 2022. Emerging trends: SOTA-chasing . Natural Language Engineering, 28:249–269.

Mark Coeckelbergh. 2025. LLMs, truth, and democracy: An overview of risks. Science and Engineering Ethics, 31(1):4.

Jonathan Cohn. 2020. In a different code: Artificial intelligence and the ethics of care . The International Review of Information Ethics, 28:1–7.

A Feder Cooper, Emanuel Moss, Benjamin Laufer, and Helen Nissenbaum. 2022. Accountability in an algorithmic society: relationality, responsibility, and robustness in machine learning . In Proceedings of the 2022 ACM Conference on Fairness, Accountability, and Transparency, pages 864–876. ACM.

Ned Cooper, Courtney Heldreth, and Ben Hutchinson. 2024. “It’s how you do things that matters”: Attending to process to better serve indigenous communities with language technologies . In Proceedings of the 18th Conference of the European Chapter of the Association for Computational Linguistics, pages 204–211. ACL.

Eric Corbett, Remi Denton, and Sheena Erete. 2023. Power and public participation in AI . In Proceedings of the 3rd ACM Conference on Equity and Access in Algorithms, Mechanisms, and Optimization, pages 1–13. ACM.

Cynthia Coyne, Greg Williams, and Kamaljit K. Sangha. 2022. Assessing the value of ecosystem services from an indigenous estate: Warddeken Indigenous Protected Area, Australia . Frontiers in Environmental Science, 10:845178.

Kate Crawford. 2021. The Atlas of AI: Power, Politics, and the Planetary Costs of Artificial Intelligence . Yale University Press.

David Crystal. 2002. Language Death . Cambridge University Press.

Hermann H. Dieter. 2005. Protection of the world’s linguistic and ecological diversity: Two sides of the same coin . In Bernd Hamm and Russell Smandych, editors, Cultural Imperialism: Essays on the Political Economy of Cultural Domination, pages 233–243. University of Toronto Press.

Catherine D’Ignazio and Lauren F Klein. 2023. Data feminism. MIT Press.

Ravit Dotan and Smitha Milli. 2020. Value-laden disciplinary shifts in machine learning . In Proceedings of the 2020 Conference on Fairness, Accountability, and Transparency. ACM.

Rayane El Masri and Aaron Snoswell. 2025. Towards attuned AI: Integrating care ethics in large language model development and alignment. In Third Workshop on Socially Responsible Language Modelling Research, Conference on Language Modelling.

Catalina Goanta, Nikolaos Aletras, Ilias Chalkidis, Sofia Ranchordás, and Gerasimos Spanakis. 2023. Regulation and NLP (RegNLP): Taming large language models . In Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing, pages 8712–8724. ACL.

AC Grayling. 2025. For The People. Oneworld Publications.

Shane Greenstein. 2023. The AI Gold Rush . IEEE Micro, 43(06):126–128.

John Hajek and Yvette Slaughter. 2014. Challenging the Monolingual Mindset . Multilingual Matters.

Paula Helm, Gábor Bella, Gertraud Koch, and Fausto Giunchiglia. 2024. Diversity and language technology: How language modeling bias causes epistemic injustice . Ethics and Information Technology, 26(1):8.

Kathleen Heugh. 2017. Displacement and language . In Suresh Canagarajah, editor, The Routledge Handbook of Migration and Language, pages 187–206. Routledge.

Michael Townsen Hicks, James Humphries, and Joe Slater. 2024. ChatGPT is bullshit . Ethics and Information Technology, 26(2):1–10.

Luke Kemp. 2025. Goliath’s Curse: The History and Future of Societal Collapse. Knopf.

Robin Wall Kimmerer. 2013. Braiding Sweetgrass: Indigenous Wisdom, Scientific Knowledge and the Teachings of Plants. Penguin.

Keith Kirkpatrick. 2023. The carbon footprint of artificial intelligence . Communications of the ACM, 66(8):17–19.

Tamara Kneese and Meg Young. 2024. Carbon emissions in the tailpipe of generative AI . Harvard Data Science Review, 5.

Konstantinos Kogkalidis and Stergios Chatzikyriakidis. 2025. On tables with numbers, with numbers . In Proceedings of the 1st Workshop on Language Models for Underserved Communities, pages 104–115. ACL.

Philipp Krämer, Ulrike Vogl, and Leena Kolehmainen. 2022. What is “language making”? International Journal of the Sociology of Language, 274:1–27.

Kevin LaGrandeur. 2024. The consequences of AI hype . AI and Ethics, 4:653–656.

Michael Lawrence, Thomas Homer-Dixon, Scott Janzwood, Johan Rockström, Ortwin Renn, and Jonathan F. Donges. 2024. Global polycrisis: The causal mechanisms of crisis entanglement. Global Sustainability, 7:e6.

Hao-Ping Lee, Advait Sarkar, Lev Tankelevitch, Ian Drosos, Sean Rintel, Richard Banks, and Nicholas Wilson. 2025. The impact of generative AI on critical thinking: Self-reported reductions in cognitive effort and confidence effects from a survey of knowledge workers . In Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems, pages 1–22.

Timothy Lenton, Laurie Laybourn, David Armstrong McKay, Sina Loriani, Jesse Abrams, Steven Lade, Jonathan Donges, Manjana Milkoreit, Steven Smith, Emma Bailey, and others. 2023. Global Tipping Points Report 2023: Summary Report. University of Exeter.

Jason Edward Lewis, Hēmi Whaanga, and Ceyda Yolgörmez. 2024. Abundant intelligences: Placing AI within Indigenous knowledge frameworks . AI and Society.

Carmen Zamorano Llena, Jonas Stier, and Billy Gray. 2024. The future of (meta)crisis: From anxiety and the culture of fear to hope, solidarity, and the culture of resilience? In Carmen Zamorano Llena, Jonas Stier, and Billy Gray, editors, Crisis and the Culture of Fear and Anxiety in Contemporary Europe, pages 224–231. Routledge.

Paola Lopez. 2024. More than the sum of its parts: Susceptibility to algorithmic disadvantage as a conceptual framework . In Proceedings of the 2024 ACM Conference on Fairness, Accountability, and Transparency, pages 909–919. ACM.

Dylan Scott Low, Isaac Mcneill, and Michael James Day. 2022. Endangered languages: A sociocognitive approach to language death, identity loss, and preservation in the age of artificial intelligence . Sustainable Multilingualism, 21:1–25.

Joanna Macy and Molly Young Brown. 2014. Coming Back to Life: The Guide to the Work that Reconnects. New Society Publishers.

Luisa Maffi. 2005. Linguistic, cultural, and biological diversity . Annual Review of Anthropology, 34:599–617.

Keoni Mahelona, Gianna Leoni, Suzanne Duncan, and Miles Thompson. 2023. OpenAI’s Whisper is another case study in colonisation. Papa Reo. https://blog.papareo.nz/whisper-is-another-case-study-in-colonisation/ , Accessed 20250215.

Alva Markelius, Connor Wright, Joahna Kuiper, Natalie Delille, and Yu-Ting Kuo. 2024. The mechanisms of AI hype and its planetary and social costs . AI and Ethics, 4:727–742.

Nina Markl. 2022. Mind the data gap(s): Investigating power in speech and language datasets . In Proceedings of the Second Workshop on Language Technology for Equality, Diversity and Inclusion, pages 1–12. ACL.

Nina Markl, Lauren Hall-Lew, and Catherine Lai. 2024. Language technologies as if people mattered: Centering communities in language technology development. In Proceedings of the 2024 Joint International Conference on Computational Linguistics, Language Resources and Evaluation, pages 10085–99.

Samuel Mateus. 2020. Narcotizing dysfunction . In The SAGE International Encyclopedia of Mass Media and Society. SAGE Publications.

McKinsey. 2024. AI for Social Good: Improving Lives and Protecting the Planet. McKinsey and Company. https://www.mckinsey.com/capabilities/quantumblack/our-insights/ai-for-social-good , Accessed 20250215.

Benjamin Mitchell and William Fleischman. 2020. Responsibility in the age of irresponsible speech. In Mario Arias-Oliva, Jorge Pelegrín-Borondo, Kiyoshi Murata, and Ana María Lara Palma, editors, Societal Challenges in the Smart Society, pages 587–592. Universidad de La Rioja.

Shira Mitchell, Eric Potash, Solon Barocas, Alexander D’Amour, and Kristian Lum. 2021. Algorithmic fairness: Choices, assumptions, and definitions . Annual Review of Statistics and Its Application, 8:141–163.

Shakir Mohamed, Marie-Therese Png, and William Isaac. 2020. Decolonial AI: Decolonial theory as sociotechnical foresight in artificial intelligence . Philosophy and Technology, pages 1–26.

Jared Moore, Declan Grabb, William Agnew, Kevin Klyman, Stevie Chancellor, Desmond C. Ong, and Nick Haber. 2025. Expressing stigma and inappropriate responses prevents LLMs from safely replacing mental health providers. In Proceedings of the 2025 ACM Conference on Fairness, Accountability, and Transparency, pages 599–627. ACM.

Edgar Morin and Anne Brigitte Kern. 1999. Homeland Earth: A Manifesto for the New Millennium. Hampton Press.

James Muldoon, Mark Graham, and Callum Cant. 2024. Feeding the Machine: The Hidden Human Labor Powering AI. Bloomsbury.

Rodrigo Ochigame. 2022. The invention of ‘ethical AI’: How Big Tech manipulates academia to avoid regulation. In Thao Phan, Jake Goldenfein, and Declan Kuch, editors, Economies of Virtue: The Circulation of ‘Ethics’ in AI, pages 49–56. Amsterdam: Institute of Network Cultures. Originally appeared in The Intercept (December 2019).

Seán S ÓhÉigeartaigh, Jess Whittlestone, Yang Liu, Yi Zeng, and Zhe Liu. 2020. Overcoming barriers to cross-cultural cooperation in AI ethics and governance . Philosophy and Technology, 33:571–593.

Emmanouil Papagiannidis, Patrick Mikalef, and Kieran Conboy. 2025. Responsible artificial intelligence governance: A review and research framework . Journal of Strategic Information Systems, 34(2):101885.

Helen Pearson. 2024. The rise of eco-anxiety: Scientists wake up to the mental-health toll of climate change . Nature, 628(8007):256–258.

Bernard Perley. 2012. Zombie linguistics: Experts, endangered languages and the curse of undead voices . Anthropological Forum, 22:133–149.

Andrew J. Peterson. 2025. AI and the problem of knowledge collapse . AI and Society, pages 1–21.

Thao Phan, Jake Goldenfein, Monique Mann, and Declan Kuch. 2022. Economies of virtue: The circulation of ‘ethics’ in Big Tech . Science as Culture, 31:121–135.

Maria Ressa. 2022. How to Stand Up to a Dictator. Penguin.

Katherine Richardson, Will Steffen, Wolfgang Lucht, Jørgen Bendtsen, Sarah E Cornell, Jonathan F Donges, Markus Drüke, Ingo Fetzer, Govindasamy Bala, Werner Von Bloh, and others. 2023. Earth beyond six of nine planetary boundaries . Science Advances, 9(37):eadh2458.

Laura Schelenz and Maria Pawelec. 2022. Information and communication technologies for development (ICT4D) critique . Information Technology for Development, 28:165–188.

Chris Schmitz, Jonathan Rystrøm, and Jan Batzner. 2025. Oversight structures for agentic AI in public-sector organizations . In Proceedings of the 1st Workshop for Research on Agent Language Models, pages 298–308. ACL.

Lane Schwartz. 2022. Primum Non Nocere: Before working with Indigenous data, the ACL must confront ongoing colonialism . In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics, pages 724–731.

Roy Schwartz, Jesse Dodge, Noah A. Smith, and Oren Etzioni. 2020. Green AI. Communications of the ACM, 63(12):54–63.

Peter Seele and Mario Schultz. 2022. From greenwashing to machinewashing: A model and future directions derived from reasoning by analogy. Journal of Business Ethics, 178:1063–1089.

Giustina Selvelli. 2024. Endangered languages, endangered environments: Reflections on an integrated approach towards current issues of ecocultural diversity loss. In Andrej Lukšič and Sultana Jovanovska, editors, The Public, the Private and the Commons: Challenges of a Just Green Transition, pages 141–152. National and University Library, Ljubljana.

Amartya Sen. 1999. Development as Freedom. Oxford University Press.

Renee Shelby, Shalaleh Rismani, Kathryn Henne, AJung Moon, Negar Rostamzadeh, Paul Nicholas, N’Mah Yilla-Akbari, Jess Gallegos, Andrew Smart, Emilio Garcia, and Gurleen Virk. 2023. Sociotechnical harms of algorithmic systems: Scoping a taxonomy for harm reduction. In Proceedings of the 2023 AAAI/ACM Conference on AI, Ethics, and Society, pages 723–741.

Natalie Sherman and Madeline Halpert. 2025. Bezos, Zuckerberg, Pichai attend Trump’s inauguration. BBC News. https://www.bbc.com/news/articles/cvgpqeq82rvo, Accessed 20250518.

Tom Slee. 2020. The incompatible incentives of private-sector AI. In Markus D. Dubber, Frank Pasquale, and Sunit Das, editors, The Oxford Handbook of Ethics of AI, pages 106–123. Oxford University Press.

Linda Tuhiwai Smith. 2012. Decolonizing Methodologies, 2nd edition. Zed Books.

Ramesh Srinivasan. 2017. Whose Global Village?: Rethinking how Technology Shapes Our World. NYU Press.

Shashank Srivastava. 2025. Large language models threaten language’s epistemic and communicative foundations. In Proceedings of the 2025 Conference on Empirical Methods in Natural Language Processing, pages 28650–28664. ACL.

Swati Srivastava. 2023. Algorithmic governance and the international politics of Big Tech. Perspectives on Politics, 21:989–1000.

Daniel Steel, Charly Phillips, Amanda Giang, and Kian Mintz-Woo. 2024. A forward-looking approach to climate change and the risk of societal collapse. Futures, 158:103361.

UNEP. 2021. Making Peace with Nature: A Scientific Blueprint to Tackle the Climate, Biodiversity and Pollution Emergencies. United Nations Environment Programme. https://www.unep.org/resources/making-peace-nature, Accessed 20250204.

UNEP. 2024. Navigating New Horizons: A global foresight report on planetary health and human wellbeing. United Nations Environment Programme. https://www.unep.org/resources/global-foresight-report, Accessed 20240204.

Yanis Varoufakis. 2024. Technofeudalism: What Killed Capitalism. Melville House.

Maggie Walter and Michele Suina. 2019. Indigenous data, indigenous methodologies and indigenous data sovereignty. International Journal of Social Research Methodology, 22:233–243.

Robert Weissman, Michael Tanglis, Eileen O’Grady, Jon Golinger, Rick Claypool, and Alan Zibel. 2025. Banquet of greed: Trump ballroom donors feast on federal funds and favors. https://www.citizen.org/article/banquet-of-greed-trump-ballroom-donors-feast-on-federal-funds-and-favors/, Accessed 20251124.

Didier Wernli, Lucas Böttcher, Flore Vanackere, Yuliya Kaspiarovich, Maria Masood, and Nicolas Levrat. 2023. Understanding and governing global systemic crises in the 21st century: a complexity perspective. Global Policy, 14:207–228.

Douglas Whalen, Margaret Moss, and Daryl Baldwin. 2016. Healing through language: Positive physical health effects of indigenous language use. F1000Research, 5.

World Economic Forum. 2025. As World Economic Forum Annual Meeting 2025 opens, leaders call for renewed global collaboration in the Intelligent Age. https://www.weforum.org/press/2025/01/as-world-economic-forum-annual-meeting-2025-opens-leaders-call-for-renewed-global-collaboration-in-the-intelligent-age/, Accessed 20250212.

Rui-Jie Yew and Brian Judge. 2025. Anti-regulatory AI: How “AI safety” is leveraged against regulatory oversight. In Proceedings of the 5th ACM Conference on Equity and Access in Algorithms, Mechanisms, and Optimization, pages 16–27. ACM.

Meg Young, Michael Katell, and PM Krafft. 2022. Confronting power and corporate capture at the FAccT conference. In Proceedings of the 2022 ACM Conference on Fairness, Accountability, and Transparency, pages 1375–1386. ACM.

Shoshana Zuboff. 2022. Surveillance capitalism or democracy? The death match of institutional orders and the politics of knowledge in our information civilization. Organization Theory, 3(3):1–79.

📊 Figures