Hypergraph Memory Boosts Multi-step RAG's Long-Context Reasoning

📝 Original Paper Info

- Title: Improving Multi-step RAG with Hypergraph-based Memory for Long-Context Complex Relational Modeling

- ArXiv ID: 2512.23959

- Date: 2025-12-30

- Authors: Chulun Zhou, Chunkang Zhang, Guoxin Yu, Fandong Meng, Jie Zhou, Wai Lam, Mo Yu

📝 Abstract

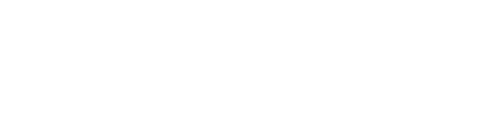

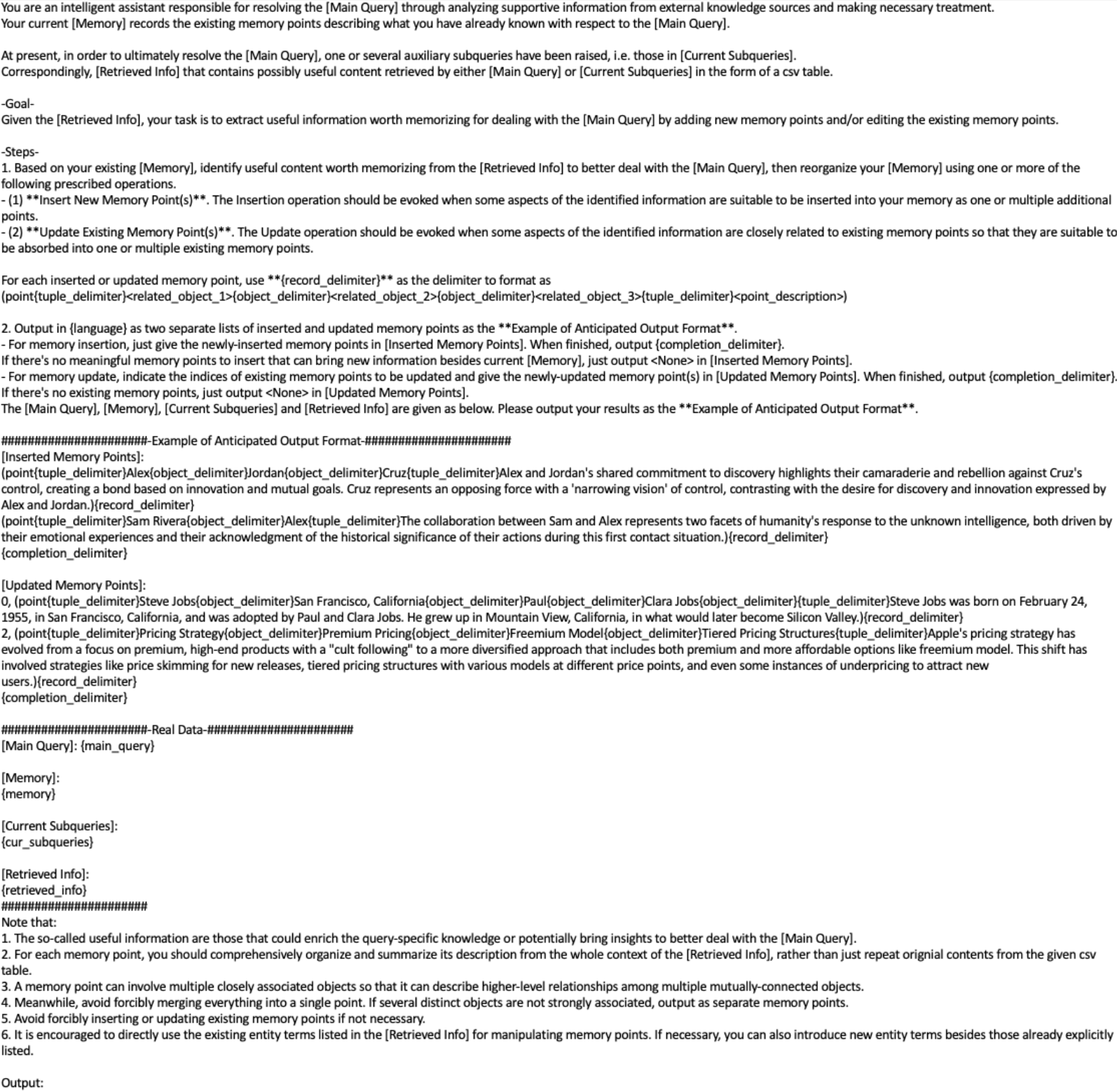

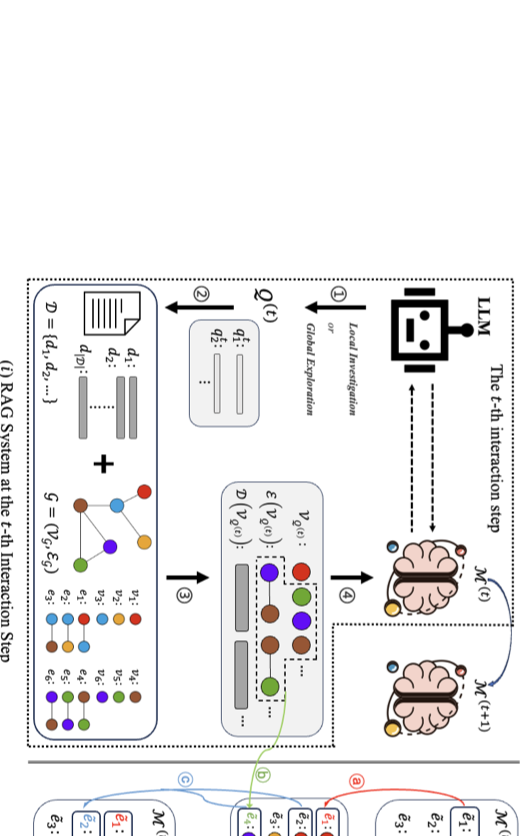

Multi-step retrieval-augmented generation (RAG) has become a widely adopted strategy for enhancing large language models (LLMs) on tasks that demand global comprehension and intensive reasoning. Many RAG systems incorporate a working memory module to consolidate retrieved information. However, existing memory designs function primarily as passive storage that accumulates isolated facts for the purpose of condensing the lengthy inputs and generating new sub-queries through deduction. This static nature overlooks the crucial high-order correlations among primitive facts, the compositions of which can often provide stronger guidance for subsequent steps. Therefore, their representational strength and impact on multi-step reasoning and knowledge evolution are limited, resulting in fragmented reasoning and weak global sense-making capacity in extended contexts. We introduce HGMem, a hypergraph-based memory mechanism that extends the concept of memory beyond simple storage into a dynamic, expressive structure for complex reasoning and global understanding. In our approach, memory is represented as a hypergraph whose hyperedges correspond to distinct memory units, enabling the progressive formation of higher-order interactions within memory. This mechanism connects facts and thoughts around the focal problem, evolving into an integrated and situated knowledge structure that provides strong propositions for deeper reasoning in subsequent steps. We evaluate HGMem on several challenging datasets designed for global sense-making. Extensive experiments and in-depth analyses show that our method consistently improves multi-step RAG and substantially outperforms strong baseline systems across diverse tasks.

💡 Summary & Analysis

1. **Limits of Passive Memory**: Existing working-memory modules in multi-step RAG mostly accumulate isolated facts in order to condense long inputs and generate new sub-queries. Because they overlook high-order correlations among those facts, reasoning becomes fragmented and global sense-making is weak in extended contexts. It's akin to keeping a pile of loose notes with nothing linking them together.

2. **Hypergraph-based Memory (HGMem)**: The paper represents memory as a hypergraph whose hyperedges correspond to distinct memory units. Higher-order interactions form progressively as facts and thoughts are connected around the focal problem, giving later reasoning steps stronger propositions to build on.

3. **Consistent Gains**: On several challenging datasets designed for global sense-making, the authors report that HGMem consistently improves multi-step RAG and substantially outperforms strong baseline systems.

📄 Full Paper Content (ArXiv Source)
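The abstract describes memory as a hypergraph whose hyperedges are distinct memory units. As a rough illustration only, and not the authors' implementation, the idea might be sketched as below. The class name `HypergraphMemory`, its methods, the merge rule (taking the union of two hyperedges), and the example facts are all assumptions made up for this sketch.

```python
from dataclasses import dataclass, field


@dataclass
class HypergraphMemory:
    """Toy hypergraph memory: each hyperedge is one memory unit over several entities.

    Hypothetical structure for illustration; not taken from the paper's code.
    """
    # hyperedge id -> (frozenset of entity names, free-text content of the unit)
    hyperedges: dict = field(default_factory=dict)
    _next_id: int = 0

    def add_unit(self, entities, text):
        """Store a memory unit as a hyperedge connecting the given entities."""
        edge_id = self._next_id
        self.hyperedges[edge_id] = (frozenset(entities), text)
        self._next_id += 1
        return edge_id

    def units_about(self, entity):
        """Return ids of all memory units whose hyperedge contains the entity."""
        return [eid for eid, (ents, _) in self.hyperedges.items() if entity in ents]

    def merge_units(self, id_a, id_b, text):
        """Assumed merge rule: a new, higher-order unit over the union of two hyperedges."""
        ents_a, _ = self.hyperedges[id_a]
        ents_b, _ = self.hyperedges[id_b]
        return self.add_unit(ents_a | ents_b, text)


# Usage with made-up facts: two primitive units, then one composed unit.
memory = HypergraphMemory()
a = memory.add_unit({"Alice", "Acme"}, "Alice founded Acme.")
b = memory.add_unit({"Acme", "Berlin"}, "Acme is headquartered in Berlin.")
c = memory.merge_units(a, b, "Alice founded a Berlin-based company.")
```

Unlike an ordinary graph edge, a hyperedge can span any number of entities, which is what lets a single memory unit capture a relation among more than two facts at once.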

📊 Paper Figures