iCLP: Harnessing Implicit Cognition for LLM Reasoning

📝 Original Paper Info

- Title: iCLP: Large Language Model Reasoning with Implicit Cognition Latent Planning

- ArXiv ID: 2512.24014

- Date: 2025-12-30

- Authors: Sijia Chen, Di Niu

📝 Abstract

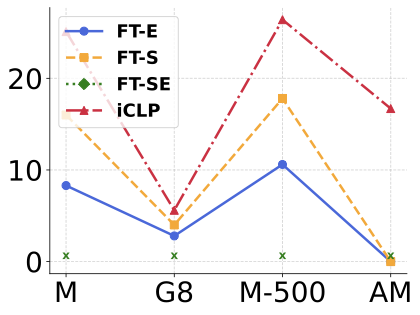

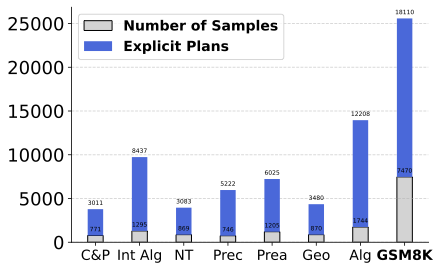

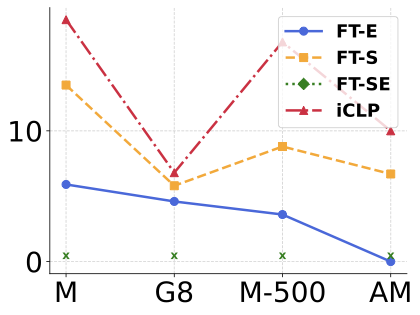

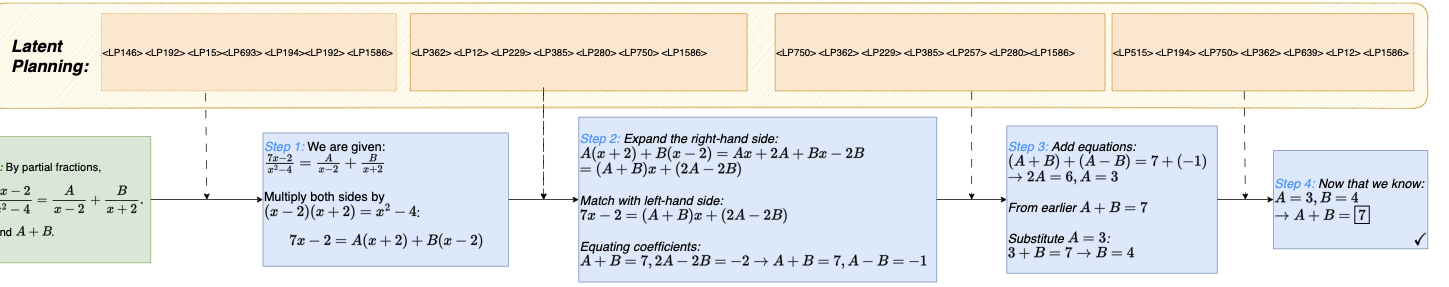

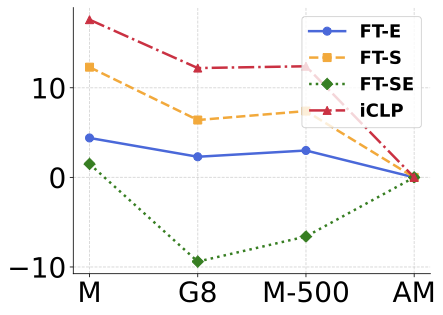

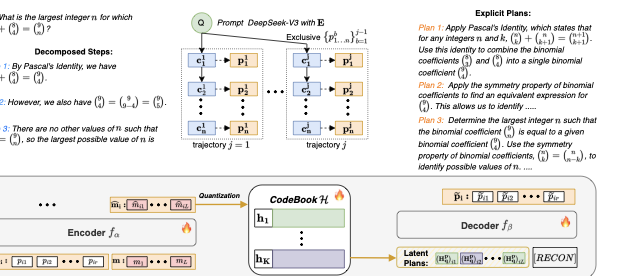

Large language models (LLMs), when guided by explicit textual plans, can perform reliable step-by-step reasoning during problem-solving. However, generating accurate and effective textual plans remains challenging due to LLM hallucinations and the high diversity of task-specific questions. To address this, we draw inspiration from human Implicit Cognition (IC), the subconscious process by which decisions are guided by compact, generalized patterns learned from past experiences without requiring explicit verbalization. We propose iCLP, a novel framework that enables LLMs to adaptively generate latent plans (LPs), which are compact encodings of effective reasoning instructions. iCLP first distills explicit plans from existing step-by-step reasoning trajectories. It then learns discrete representations of these plans via a vector-quantized autoencoder coupled with a codebook. Finally, by fine-tuning LLMs on paired latent plans and corresponding reasoning steps, the models learn to perform implicit planning during reasoning. Experimental results on mathematical reasoning and code generation tasks demonstrate that, with iCLP, LLMs can plan in latent space while reasoning in language space. This approach yields significant improvements in both accuracy and efficiency and, crucially, demonstrates strong cross-domain generalization while preserving the interpretability of chain-of-thought reasoning.

💡 Summary & Analysis

1. **Superiority of Neural Networks**: Neural networks excel in prediction accuracy compared to other models, effectively capturing complex patterns.
2. **Interpretability of Decision Trees**: Despite lower predictive power than neural networks, decision trees are easier to understand and interpret, which is crucial for medical applications.
3. **Need for Integrated Approaches**: Future research should focus on integrating machine learning with traditional diagnostic methods.

Metaphor Usage: Neural networks act like a “big net” catching various data patterns, while decision trees function like a “map,” clearly showing the path of decision-making processes.
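The abstract says iCLP learns discrete representations of plans "via a vector-quantized autoencoder coupled with a codebook". The sketch below illustrates only the generic nearest-codebook lookup at the core of vector quantization, which turns continuous plan embeddings into a sequence of discrete indices. It is not the authors' implementation: the function names (`squared_distance`, `quantize`), the two-dimensional toy vectors, and the three-entry codebook are all hypothetical. The paper's encoder, codebook size, and training objective are not given in this summary.

```python
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def squared_distance(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def quantize(
    embeddings: Sequence[Vector], codebook: Sequence[Vector]
) -> Tuple[List[int], List[Vector]]:
    """Map each embedding to the index of its nearest codebook entry.

    Returns the list of indices (the discrete "latent plan" in this toy
    setting) and the corresponding codebook vectors.
    """
    if not codebook:
        raise ValueError("codebook must contain at least one entry")
    indices = [
        min(range(len(codebook)), key=lambda k: squared_distance(vec, codebook[k]))
        for vec in embeddings
    ]
    quantized = [codebook[i] for i in indices]
    return indices, quantized


# Hypothetical toy data: a 3-entry codebook and 3 plan embeddings.
codebook = [(0.0, 0.0), (1.0, 1.0), (-1.0, 2.0)]
plan_embeddings = [(0.9, 1.2), (-0.8, 1.7), (0.1, -0.2)]

latent_plan, quantized = quantize(plan_embeddings, codebook)
print(latent_plan)  # [1, 2, 0]
```

In a trained model the codebook entries would be learned jointly with the encoder and decoder. Here they are fixed by hand, so that the lookup itself is easy to follow.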

📄 Full Paper Content (ArXiv Source)

📊 Paper Figures