AHA: Counterfactual Negatives for Grounding Audio-Language Models

📝 Original Paper Info

- Title: AHA: Aligning Large Audio-Language Models for Reasoning Hallucinations via Counterfactual Hard Negatives

- ArXiv ID: 2512.24052

- Date: 2025-12-30

- Authors: Yanxi Chen, Wenhui Zhu, Xiwen Chen, Zhipeng Wang, Xin Li, Peijie Qiu, Hao Wang, Xuanzhao Dong, Yujian Xiong, Anderson Schneider, Yuriy Nevmyvaka, Yalin Wang

📝 Abstract

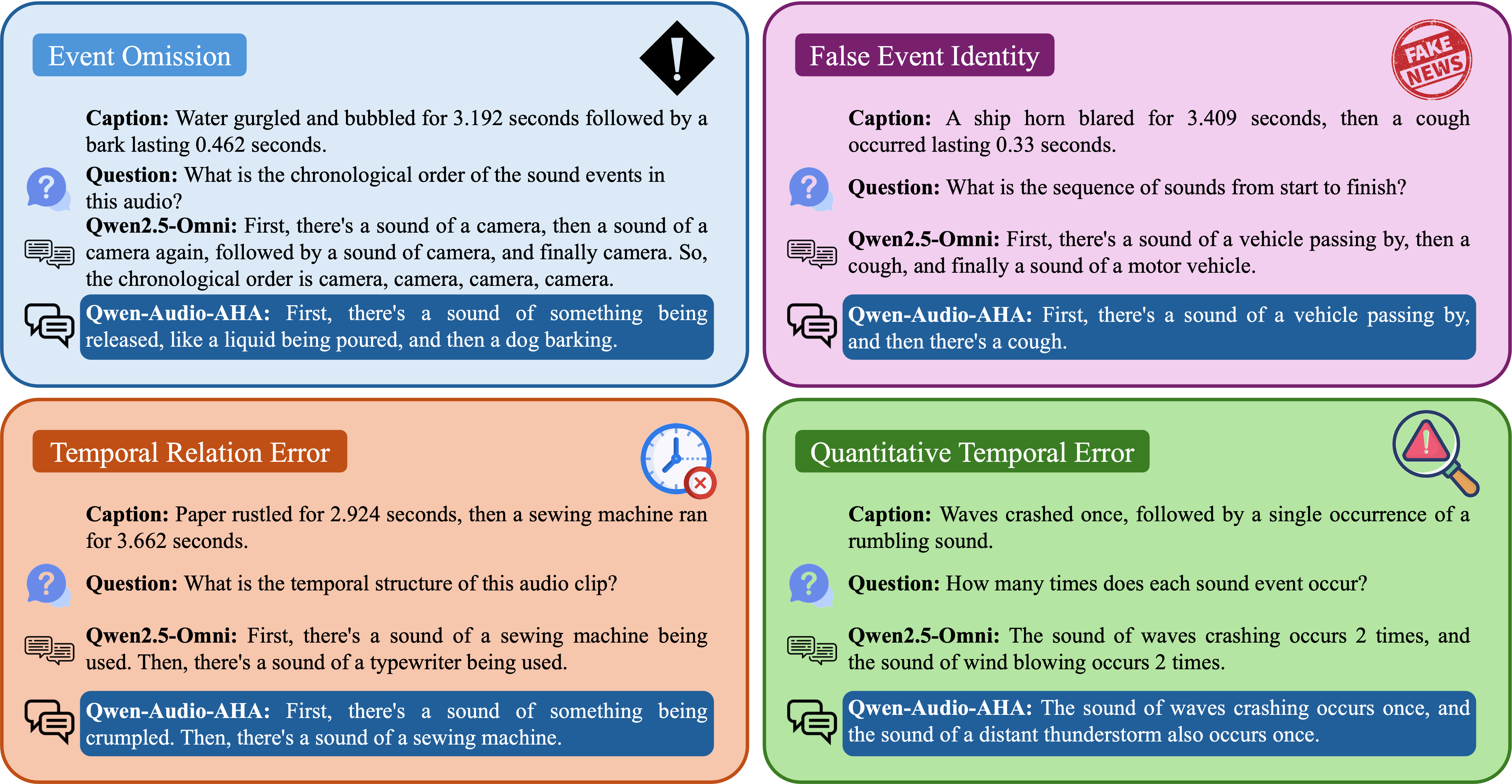

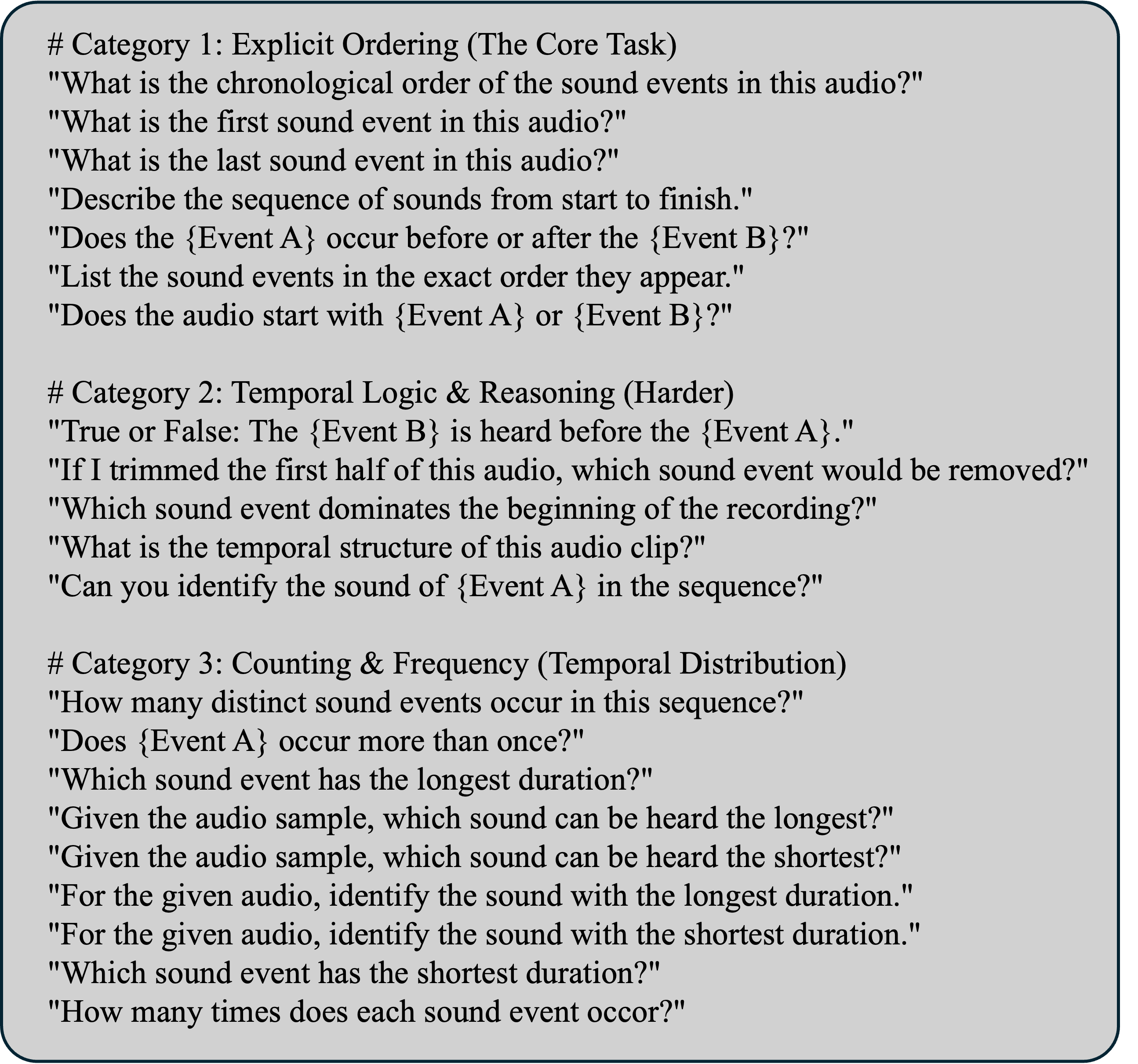

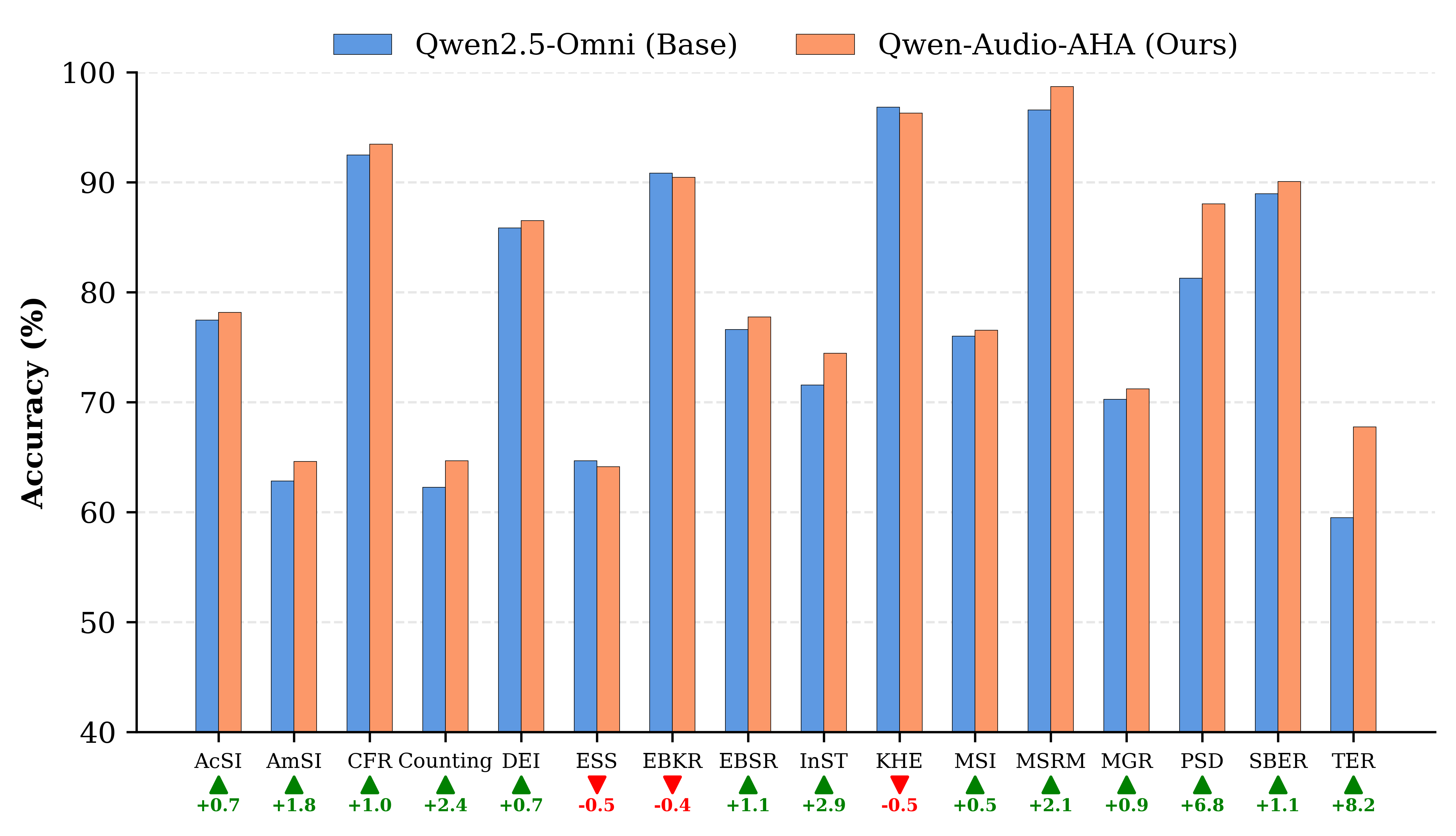

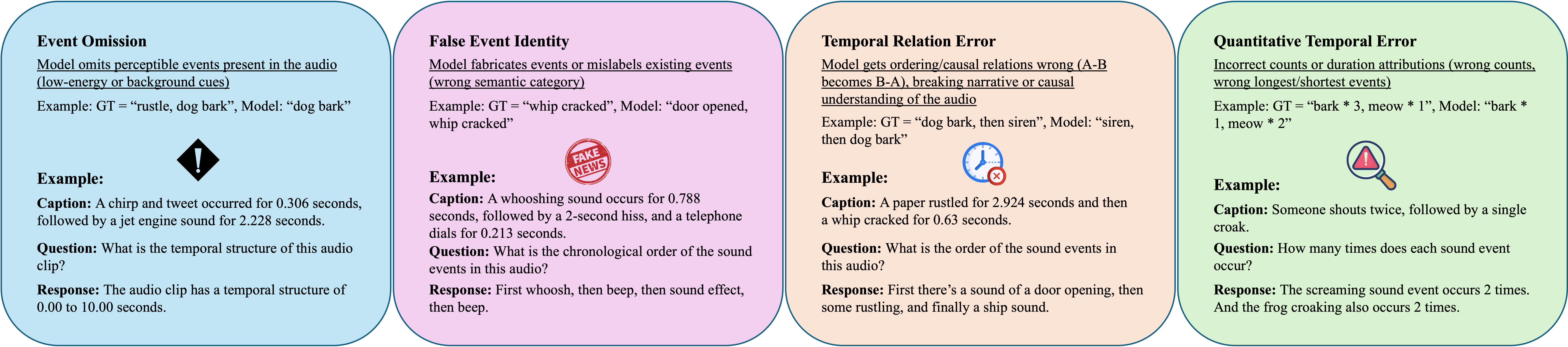

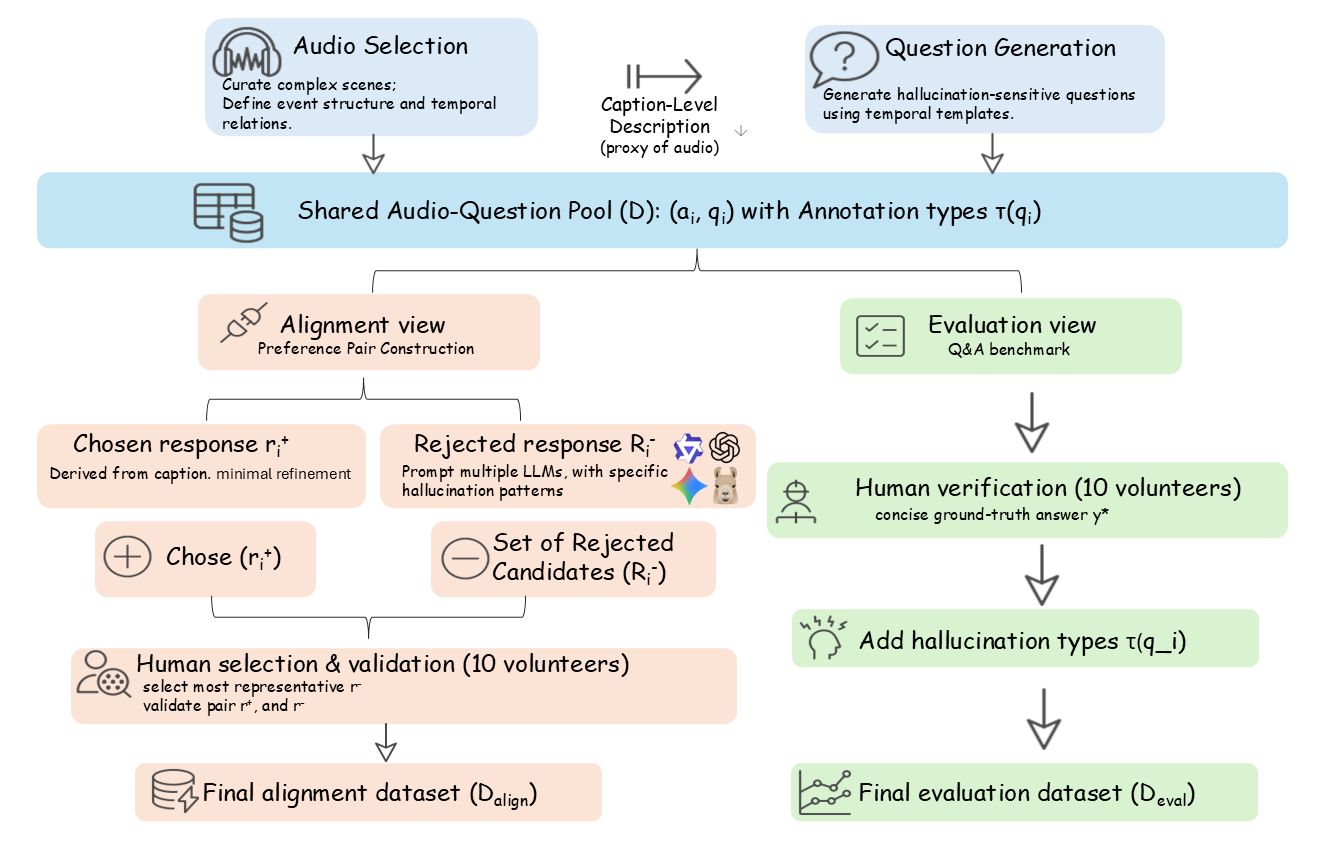

Although Large Audio-Language Models (LALMs) deliver state-of-the-art (SOTA) performance, they frequently suffer from hallucinations, i.e., generating text that is not grounded in the audio input. We analyze these grounding failures and identify a distinct taxonomy: Event Omission, False Event Identity, Temporal Relation Error, and Quantitative Temporal Error. To address them, we introduce the AHA (Audio Hallucination Alignment) framework. By leveraging counterfactual hard negative mining, our pipeline constructs a high-quality preference dataset that forces models to distinguish strict acoustic evidence from linguistically plausible fabrications. Additionally, we establish AHA-Eval, a diagnostic benchmark designed to rigorously test these fine-grained temporal reasoning capabilities. We apply this data to align Qwen2.5-Omni. The resulting model, Qwen-Audio-AHA, achieves a 13.7% improvement on AHA-Eval. Crucially, this benefit generalizes beyond our diagnostic set: our model shows substantial gains on public benchmarks, including 1.3% on MMAU-Test and 1.6% on MMAR, outperforming the latest SOTA methods. The model and dataset are open-sourced at https://github.com/LLM-VLM-GSL/AHA.

💡 Summary & Analysis
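The abstract names four error types but does not spell out the mining procedure. The Python sketch below is one hypothetical way to turn a ground-truth event annotation into counterfactual hard negatives, one per taxonomy category, and to pair each with the grounded caption as a chosen/rejected preference example. The `Event` annotation format, the `caption` template, the `DISTRACTORS` table, and the 2x duration stretch are all illustrative assumptions, not the AHA pipeline itself.

```python
import random
from dataclasses import dataclass, replace
from typing import Dict, List


# Hypothetical annotation format (an assumption, not the AHA data schema).
@dataclass(frozen=True)
class Event:
    label: str
    start: float  # onset in seconds
    end: float    # offset in seconds


def caption(events: List[Event]) -> str:
    """Render events in the given list order, each with its duration."""
    return ", then ".join(f"{e.label} ({e.end - e.start:.1f}s)" for e in events)


# Hypothetical distractors: plausible-sounding substitutes that are NOT in the audio.
DISTRACTORS: Dict[str, str] = {
    "dog barking": "fox howling",
    "door slam": "thunder clap",
    "car horn": "bicycle bell",
    "speech": "singing",
}


def counterfactuals(events: List[Event], rng: random.Random) -> Dict[str, str]:
    """Return one rejected caption per taxonomy category.

    `events` is the ground-truth annotation sorted by onset, with at least 2 events.
    """
    i = rng.randrange(len(events))
    target = events[i]
    out: Dict[str, str] = {}
    # Event Omission: drop one event that is actually present.
    out["event_omission"] = caption(events[:i] + events[i + 1:])
    # False Event Identity: relabel one event as a sound that is absent
    # (falls back to a generic label if no distractor is listed).
    fake = replace(target, label=DISTRACTORS.get(target.label, "unidentified sound"))
    out["false_event_identity"] = caption(events[:i] + [fake] + events[i + 1:])
    # Temporal Relation Error: report two adjacent events in the wrong order.
    j = rng.randrange(len(events) - 1)
    swapped = list(events)
    swapped[j], swapped[j + 1] = swapped[j + 1], swapped[j]
    out["temporal_relation_error"] = caption(swapped)
    # Quantitative Temporal Error: double one event's duration.
    stretched = replace(target, end=target.start + 2 * (target.end - target.start))
    out["quantitative_temporal_error"] = caption(events[:i] + [stretched] + events[i + 1:])
    return out


def preference_pairs(events: List[Event], prompt: str, rng: random.Random) -> List[Dict[str, str]]:
    """Pair the grounded caption (chosen) with each counterfactual (rejected)."""
    chosen = caption(events)
    return [
        {"prompt": prompt, "chosen": chosen, "rejected": rejected, "error_type": kind}
        for kind, rejected in counterfactuals(events, rng).items()
        if rejected != chosen  # skip degenerate negatives identical to the truth
    ]


if __name__ == "__main__":
    events = [Event("dog barking", 0.0, 1.5), Event("door slam", 2.0, 2.5), Event("speech", 3.0, 6.0)]
    for pair in preference_pairs(events, "Describe the sounds in order.", random.Random(0)):
        print(pair["error_type"], "->", pair["rejected"])
```

Each rejected caption differs from the grounded one in a single controlled way, which is what makes it a hard negative: it stays linguistically plausible while contradicting the acoustic evidence. Pairs whose rejected caption happens to equal the chosen one (for example, swapping two identical adjacent events) are filtered out.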

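1. **Contribution 1**: A taxonomy of LALM grounding failures with four error types: Event Omission, False Event Identity, Temporal Relation Error, and Quantitative Temporal Error.
   - **Metaphor**: Like an unreliable witness who misses an event, names the wrong one, gets the order wrong, or misjudges how long it lasted.
2. **Contribution 2**: The AHA framework, which uses counterfactual hard negative mining to build a preference dataset that teaches models to separate strict acoustic evidence from linguistically plausible fabrications.
   - **Metaphor**: Like training a proofreader on near-miss errors rather than obvious typos, so that only careful checking against the source catches them.
3. **Contribution 3**: AHA-Eval, a diagnostic benchmark for fine-grained temporal reasoning, together with Qwen-Audio-AHA, which improves by 13.7% on AHA-Eval and also gains on public benchmarks (1.3% on MMAU-Test, 1.6% on MMAR).
   - **Metaphor**: Like a targeted exam that exposes specific weaknesses, where the skills practiced for it also raise scores on general exams.

📄 Full Paper Content (ArXiv Source)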
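The abstract says the preference data is used to align Qwen2.5-Omni but does not name the training objective. Direct Preference Optimization (DPO) is one widely used objective for chosen/rejected pairs, so the sketch below assumes DPO purely for illustration; AHA may use a different objective. It computes the per-pair loss from summed sequence log-probabilities under the policy and a frozen reference model; the function names and the default `beta` are illustrative choices.

```python
import math
from typing import Sequence


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,
) -> float:
    """Assumed DPO objective for one (chosen, rejected) pair.

    loss = -log sigmoid(beta * [(pi_c - ref_c) - (pi_r - ref_r)])
    """
    margin = beta * (
        (policy_chosen_logp - ref_chosen_logp) - (policy_rejected_logp - ref_rejected_logp)
    )
    # -log(sigmoid(m)) == log(1 + exp(-m)) == softplus(-m)
    return softplus(-margin)


def mean_dpo_loss(rows: Sequence[Sequence[float]], beta: float = 0.1) -> float:
    """Average the per-pair loss over a batch of 4-tuples of log-probs."""
    return sum(dpo_loss(*row, beta=beta) for row in rows) / len(rows)
```

The loss drops below log 2 only when the policy raises the likelihood of the grounded caption relative to the counterfactual, measured against the reference model. With hard negatives that differ in a single event or timestamp, that margin can only grow if the model attends to exactly that detail.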

📊 Paper Figures