DRL-TH Smart Navigation with Temporal Graphs and Fusion

📝 Original Paper Info

- Title: DRL-TH Jointly Utilizing Temporal Graph Attention and Hierarchical Fusion for UGV Navigation in Crowded Environments

- ArXiv ID: 2512.24284

- Date: 2025-12-30

- Authors: Ruitong Li, Lin Zhang, Yuenan Zhao, Chengxin Liu, Ran Song, Wei Zhang

📝 Abstract

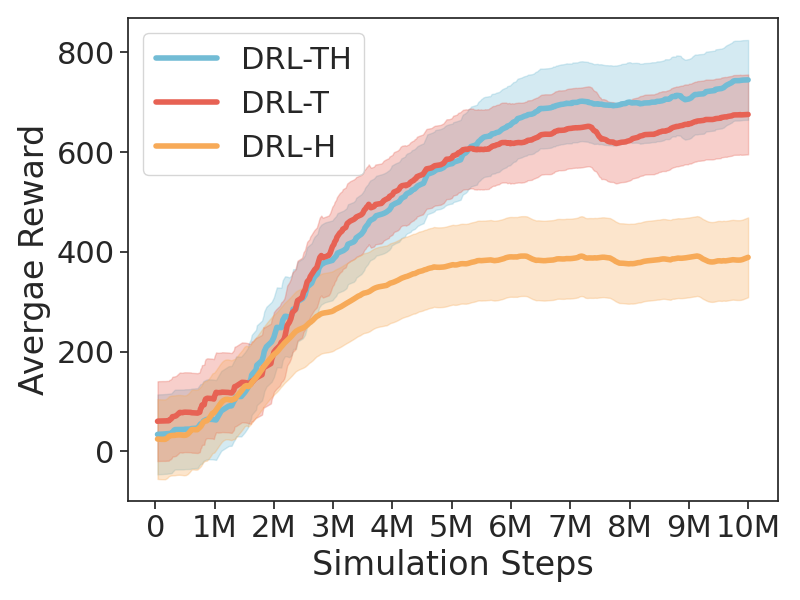

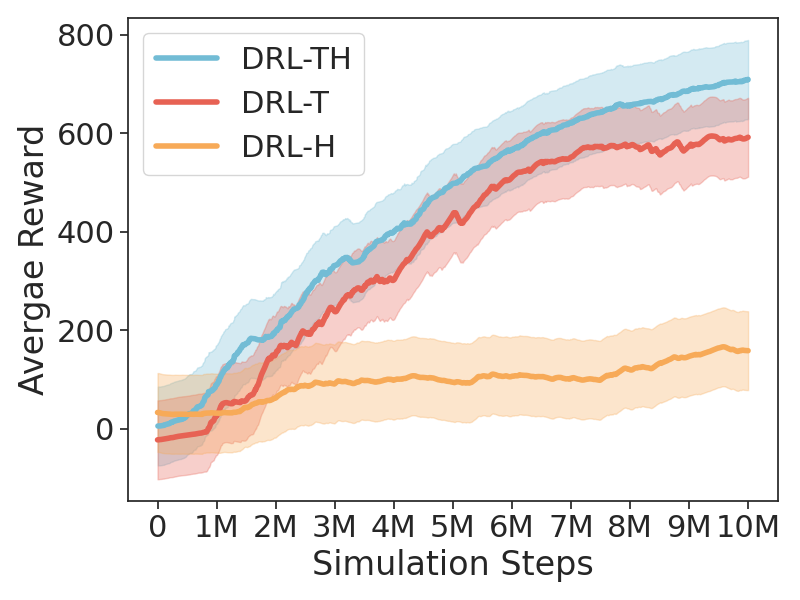

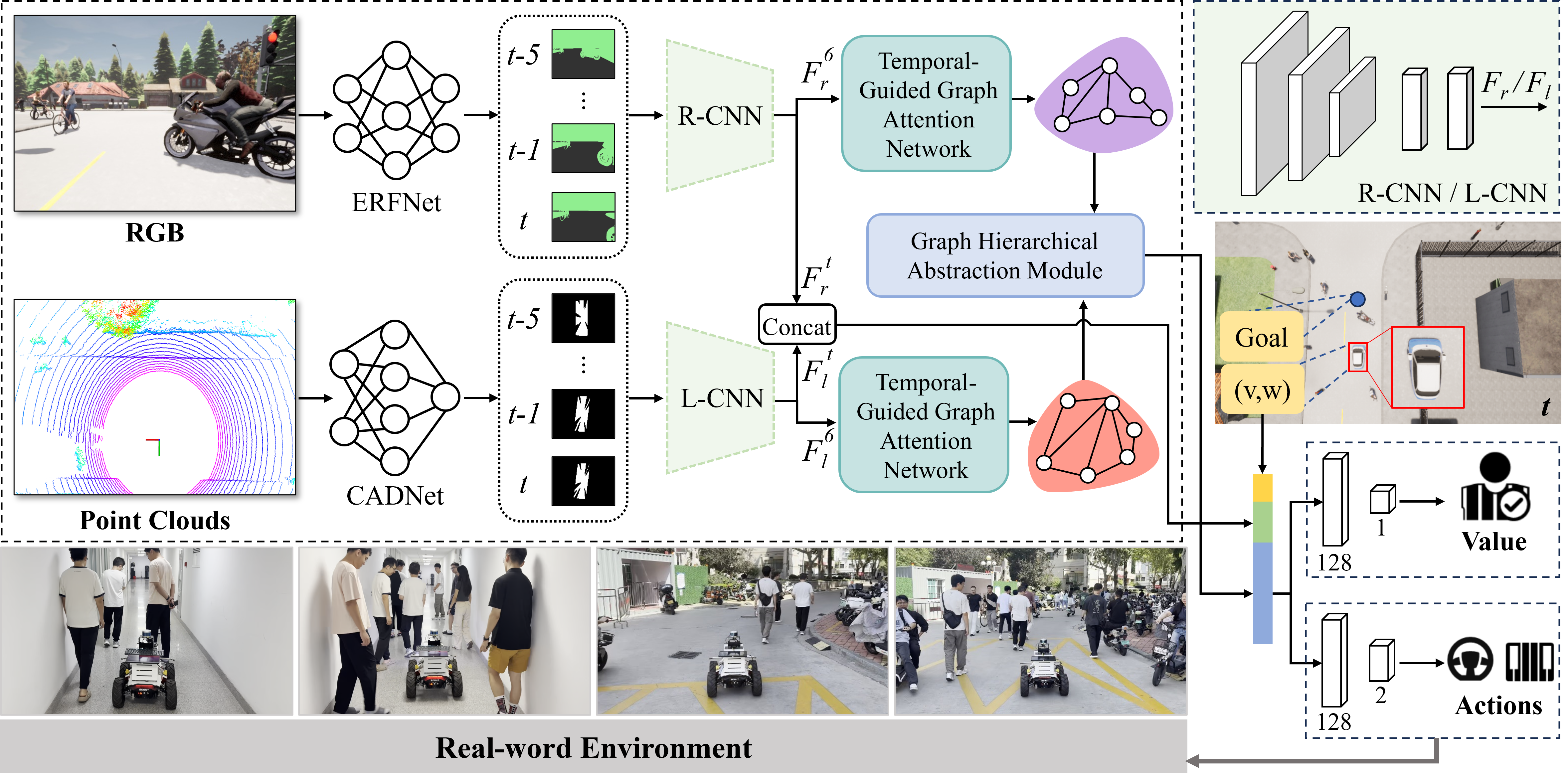

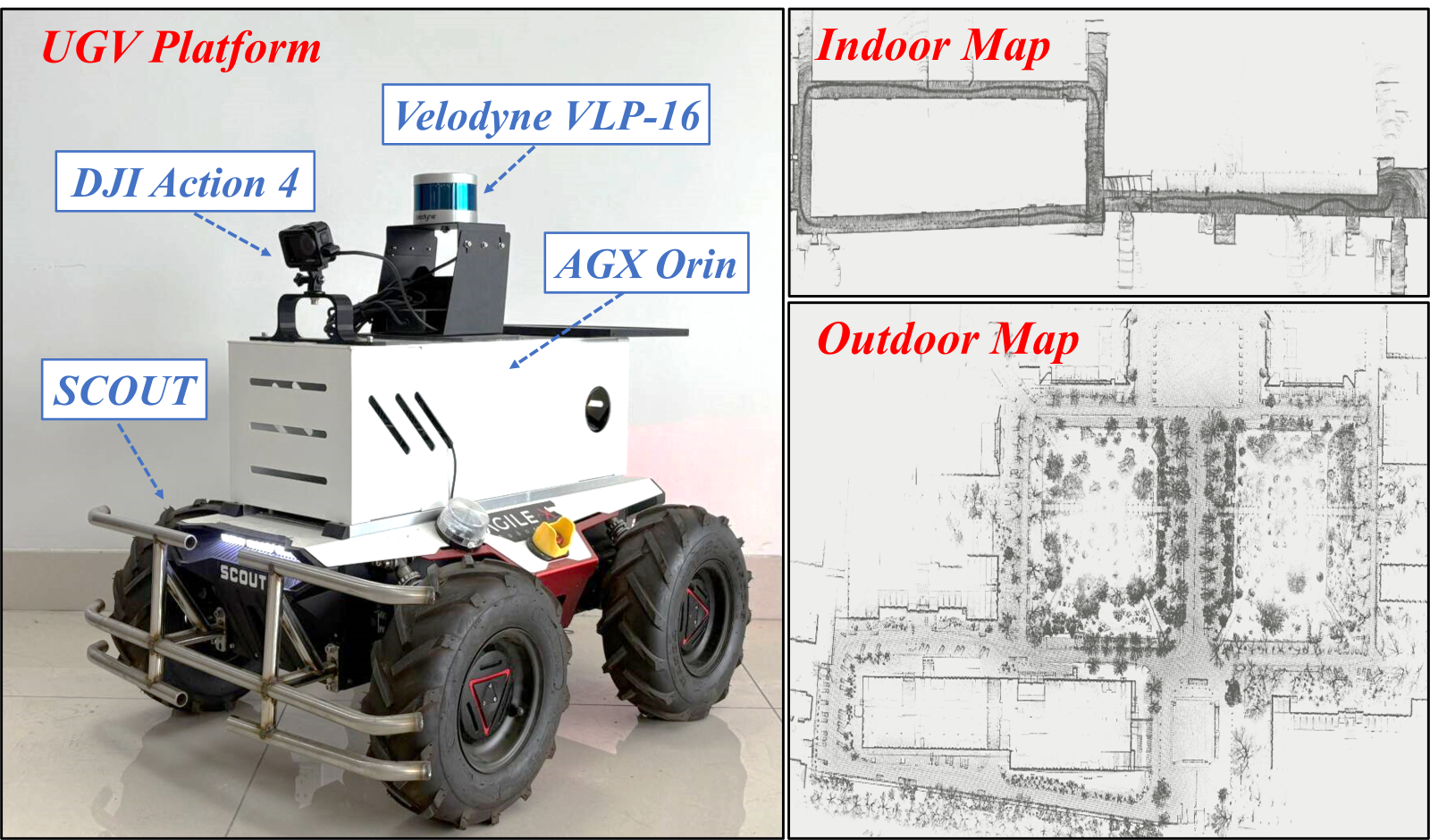

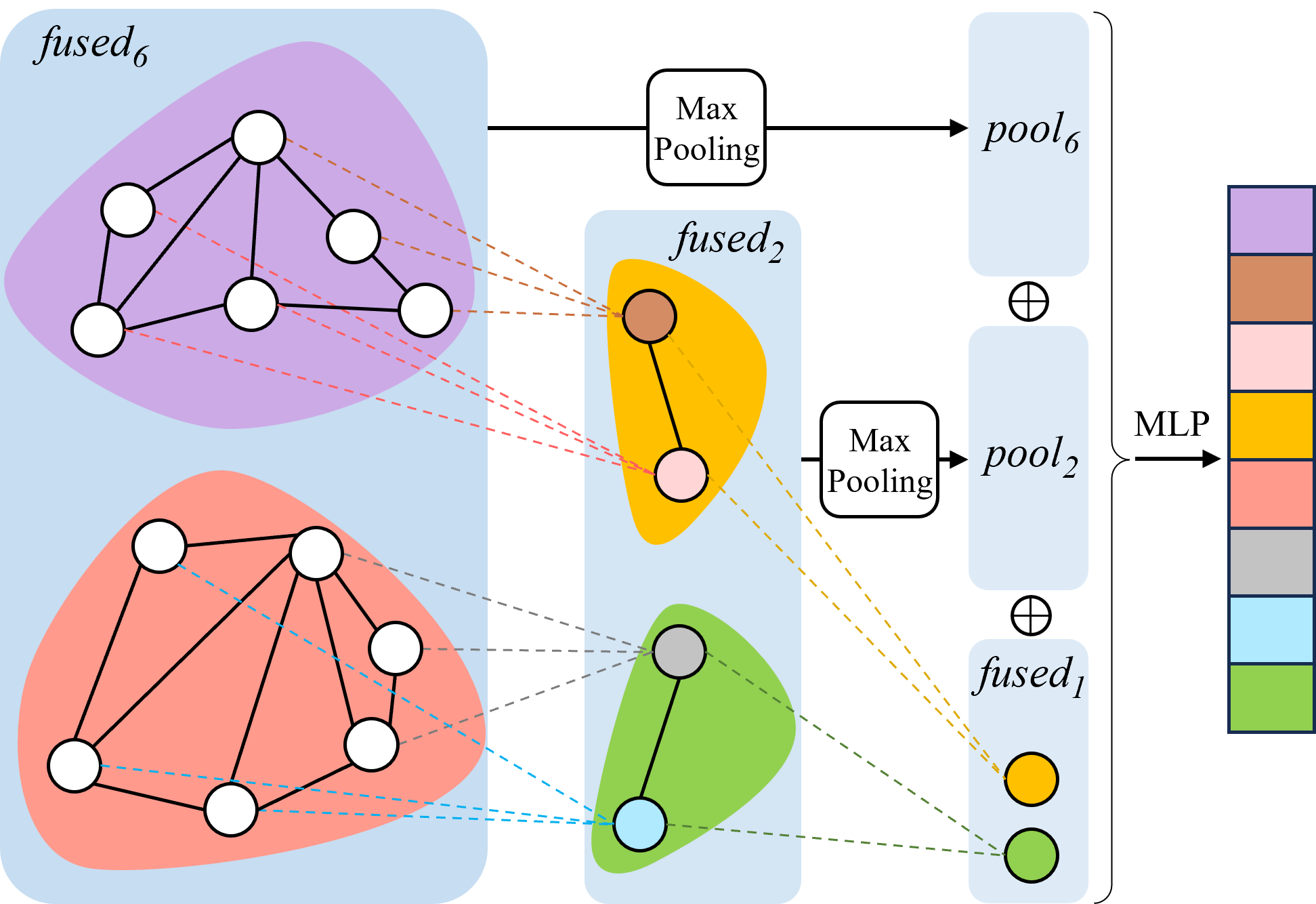

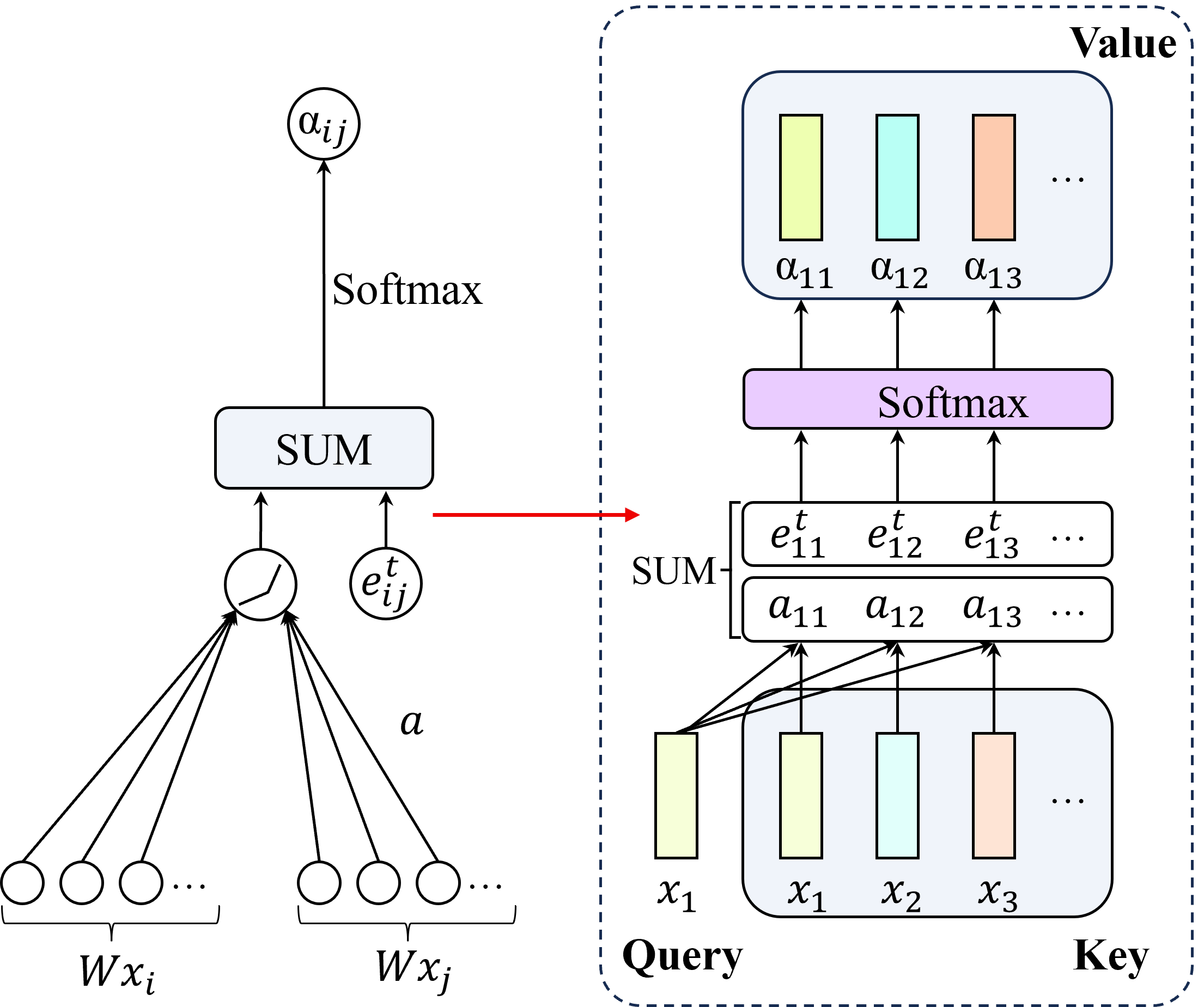

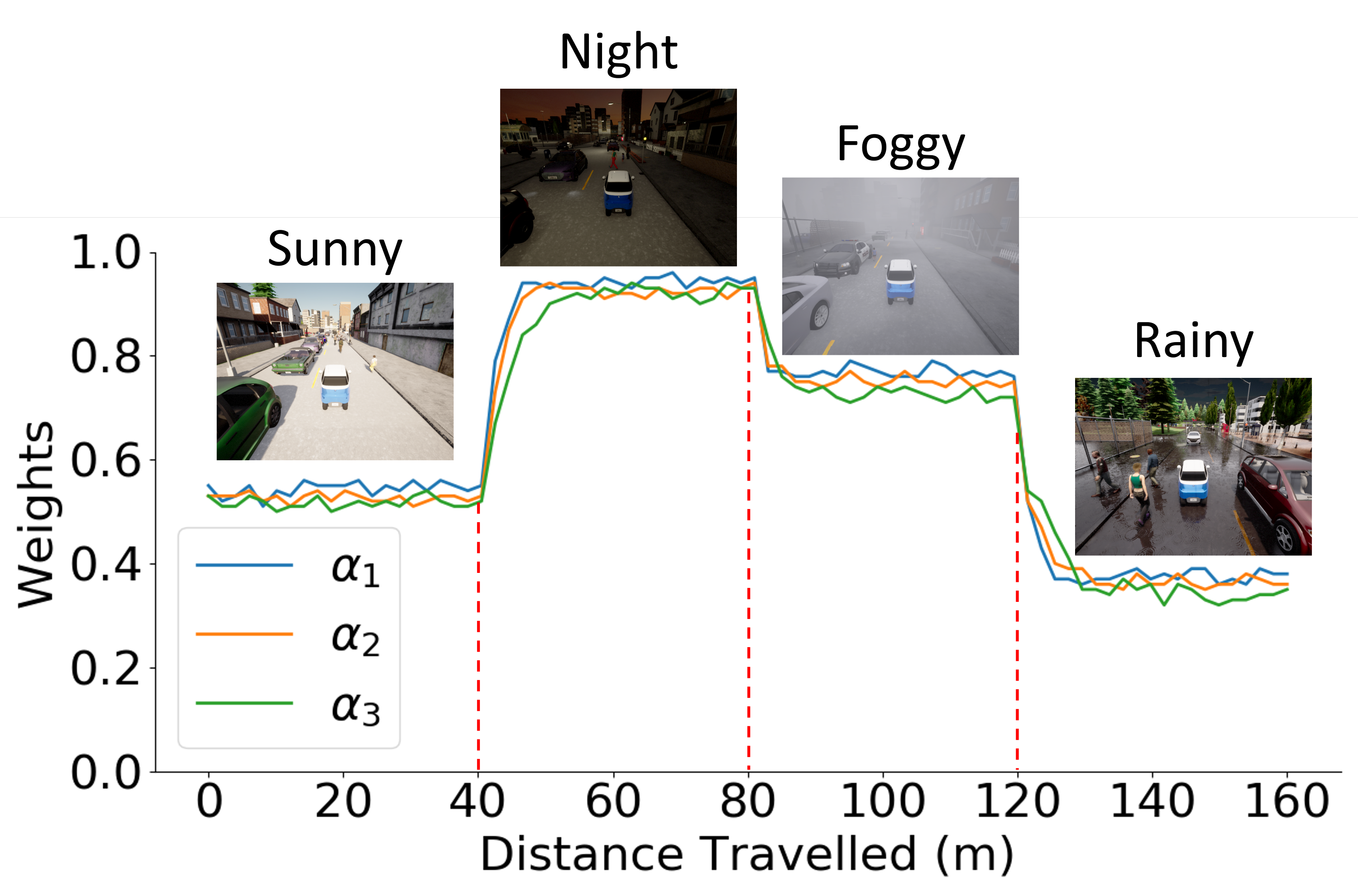

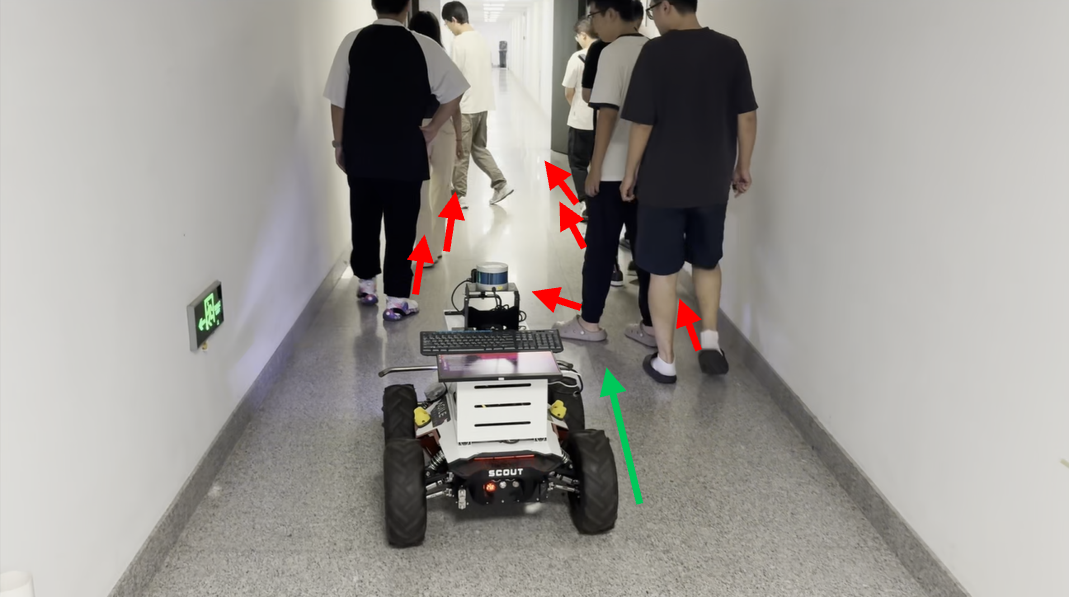

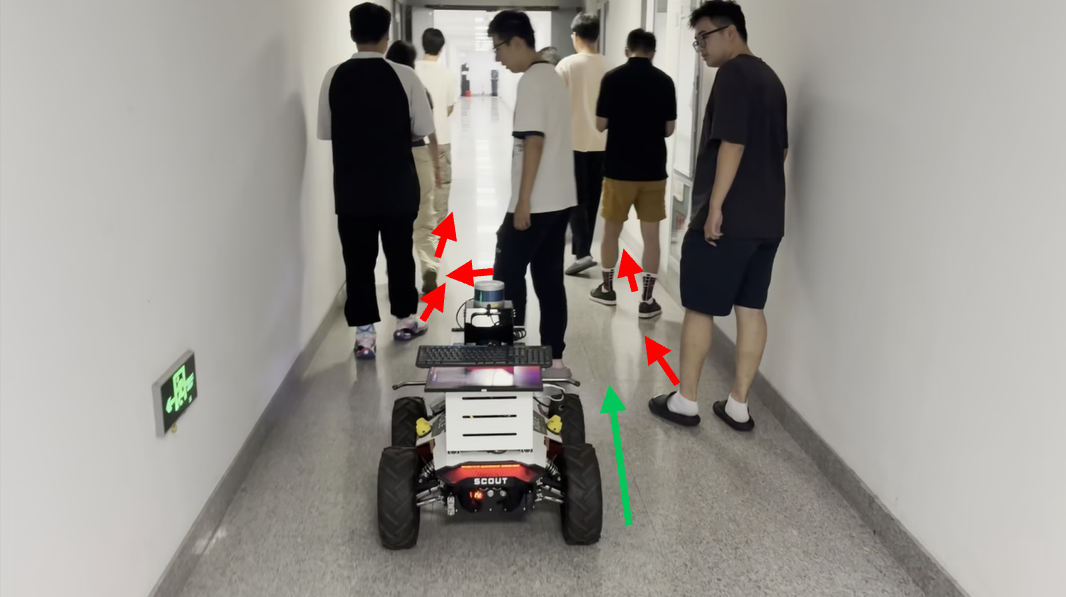

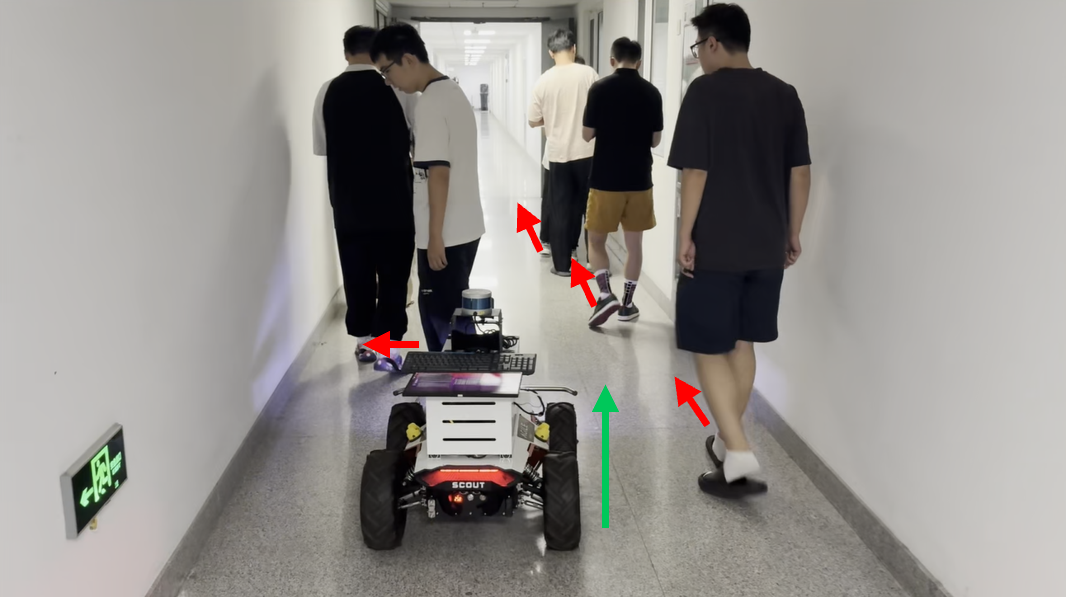

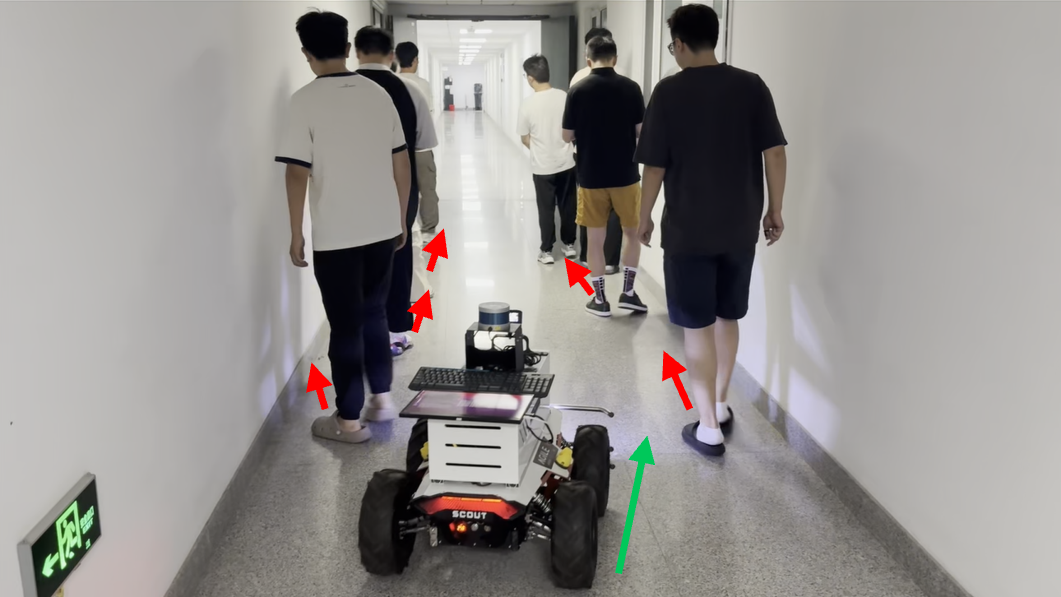

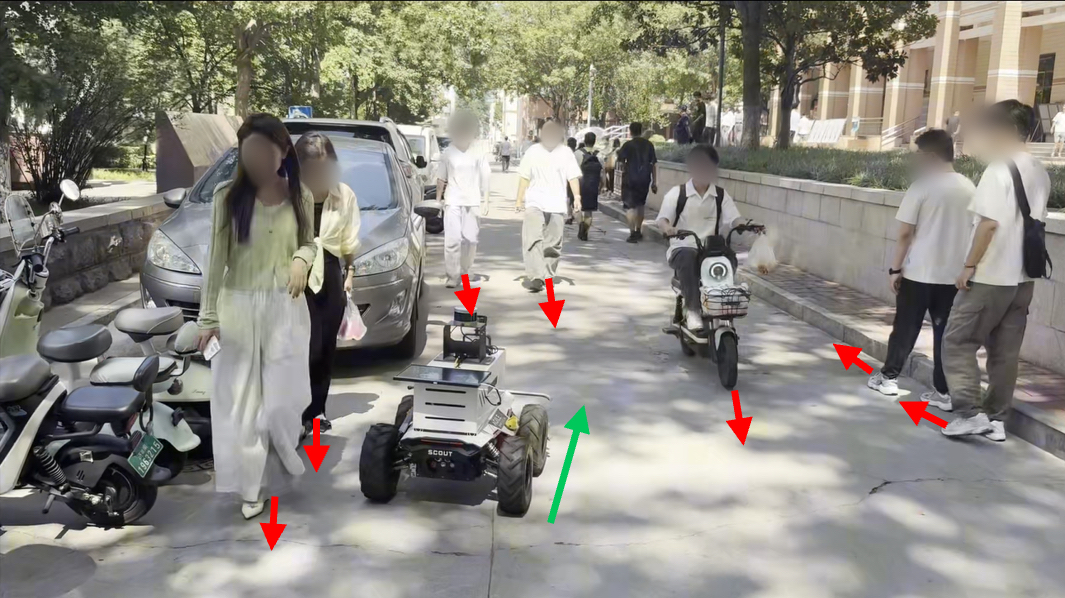

Deep reinforcement learning (DRL) methods have demonstrated potential for autonomous navigation and obstacle avoidance of unmanned ground vehicles (UGVs) in crowded environments. Most existing approaches rely on single-frame observations and employ simple concatenation for multi-modal fusion, which limits their ability to capture temporal context and hinders dynamic adaptability. To address these challenges, we propose a DRL-based navigation framework, DRL-TH, which leverages temporal graph attention and hierarchical graph pooling to integrate historical observations and adaptively fuse multi-modal information. Specifically, we introduce a temporal-guided graph attention network (TG-GAT) that incorporates temporal weights into attention scores to capture correlations between consecutive frames, thereby enabling the implicit estimation of scene evolution. In addition, we design a graph hierarchical abstraction module (GHAM) that applies hierarchical pooling and learnable weighted fusion to dynamically integrate RGB and LiDAR features, achieving balanced representation across multiple scales. Extensive experiments demonstrate that DRL-TH outperforms existing methods in various crowded environments. We also implemented the DRL-TH control policy on a real UGV and showed that it performed well in real-world scenarios.

💡 Summary & Analysis
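As a reading aid, two ideas from the abstract can be illustrated with a toy sketch: folding a temporal weight into attention scores, and fusing two modalities with learnable weights. This is not the authors' implementation. The abstract gives no formulas, so the exponential recency weight, the `decay` parameter, and the function names `temporal_attention` and `fuse` are all assumptions made for illustration, and the graph structure and hierarchical pooling of TG-GAT and GHAM are omitted entirely.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def temporal_attention(scores, decay=0.5):
    """Attention weights over T frames, ordered oldest first.

    Assumption (not from the paper): each raw score is biased by the log of
    a recency weight exp(-decay * age), so older frames are down-weighted
    before the softmax.
    """
    last = len(scores) - 1
    biased = [s - decay * (last - t) for t, s in enumerate(scores)]
    return softmax(biased)


def fuse(rgb, lidar, logits=(0.0, 0.0)):
    """Weighted sum of two equal-length feature vectors.

    `logits` stands in for learnable parameters; a softmax turns them into
    fusion weights that sum to 1, instead of plain concatenation.
    """
    w_rgb, w_lidar = softmax(list(logits))
    return [w_rgb * r + w_lidar * l for r, l in zip(rgb, lidar)]


# Equal raw scores: the recency bias alone favors the newest frame.
weights = temporal_attention([1.0, 1.0, 1.0])

# Equal logits: each modality contributes half.
fused = fuse([1.0, 3.0], [3.0, 1.0])
```

With equal raw scores, `weights` increases toward the most recent frame and sums to 1. With equal logits, `fused` is the element-wise average of the two inputs. In a trained model the scores and logits would be produced by learned layers rather than fixed by hand.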

1. **Temporal Context via TG-GAT:** Instead of relying on a single frame, DRL-TH uses a temporal-guided graph attention network (TG-GAT) that incorporates temporal weights into attention scores to capture correlations between consecutive frames, which lets the policy implicitly estimate how the scene is evolving. Think of riding a bicycle through a crowd: watching people over several moments tells you where they are heading, while a single snapshot does not.
2. **Adaptive Multi-Modal Fusion via GHAM:** Rather than simply concatenating sensor features, the graph hierarchical abstraction module (GHAM) applies hierarchical pooling and learnable weighted fusion to integrate RGB and LiDAR features across multiple scales. It is akin to a rider who leans more on sight or on hearing depending on the conditions.
3. **Experimental Validation:** The abstract reports that DRL-TH outperforms existing methods in various crowded environments, and that the control policy was also deployed on a real UGV, where it performed well in real-world scenarios.

📄 Full Paper Content (ArXiv Source)

📊 Paper Figures