Virtual-Eyes: Enhancing LDCT Quality for Lung Cancer AI Detection

📝 Original Paper Info

- Title: Virtual-Eyes: Quantitative Validation of a Lung CT Quality-Control Pipeline for Foundation-Model Cancer Risk Prediction

- ArXiv ID: 2512.24294

- Date: 2025-12-30

- Authors: Md. Enamul Hoq, Linda Larson-Prior, Fred Prior

📝 Abstract

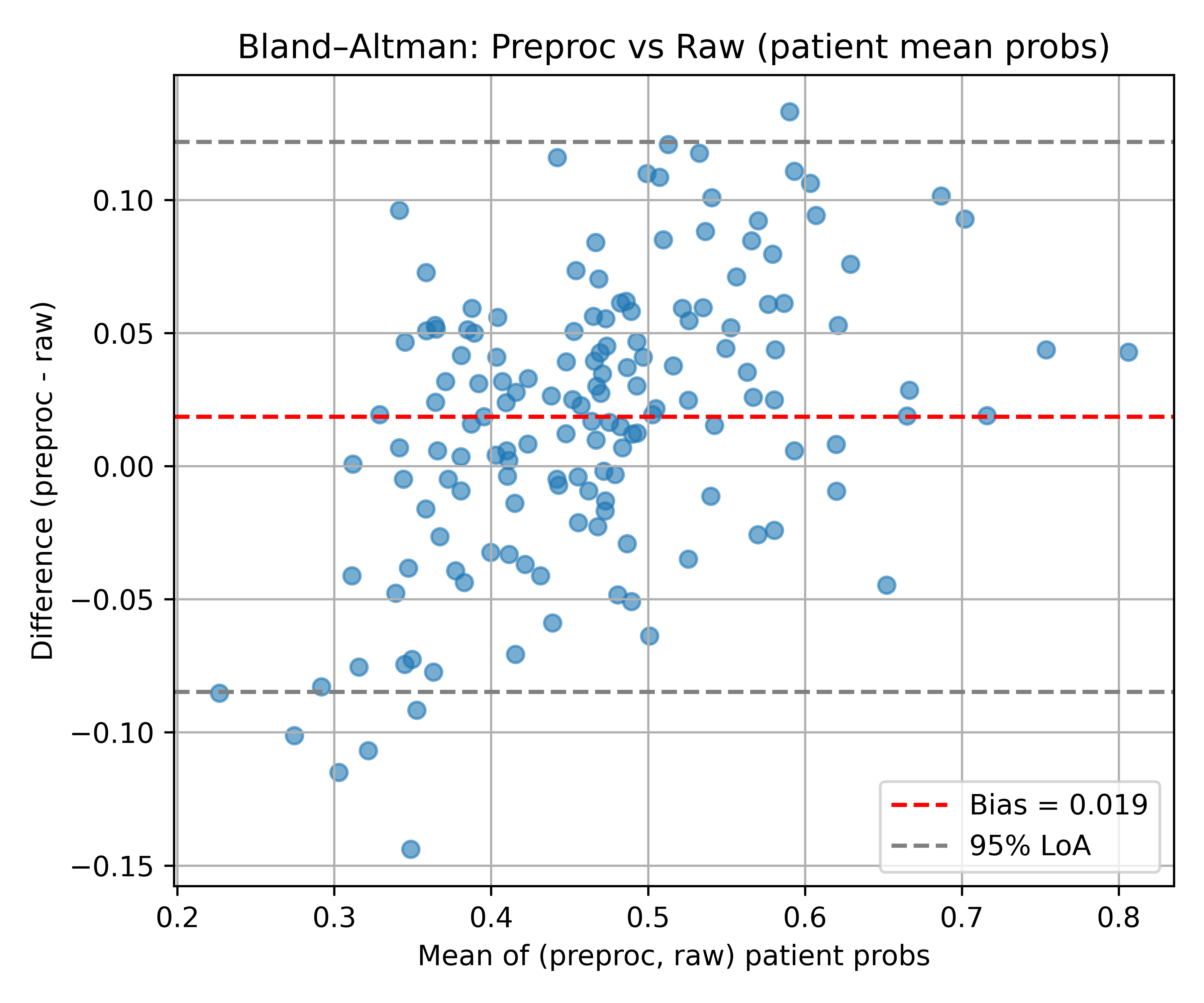

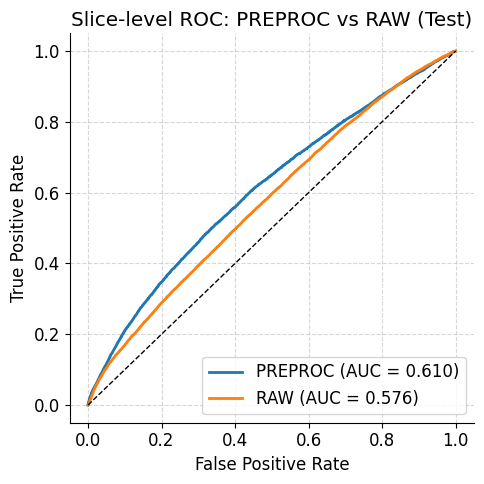

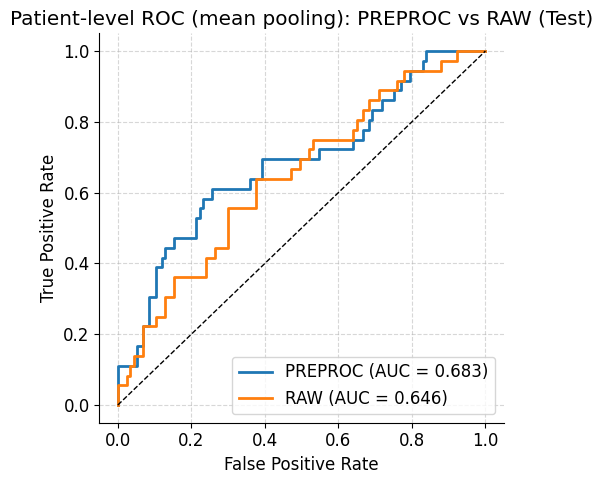

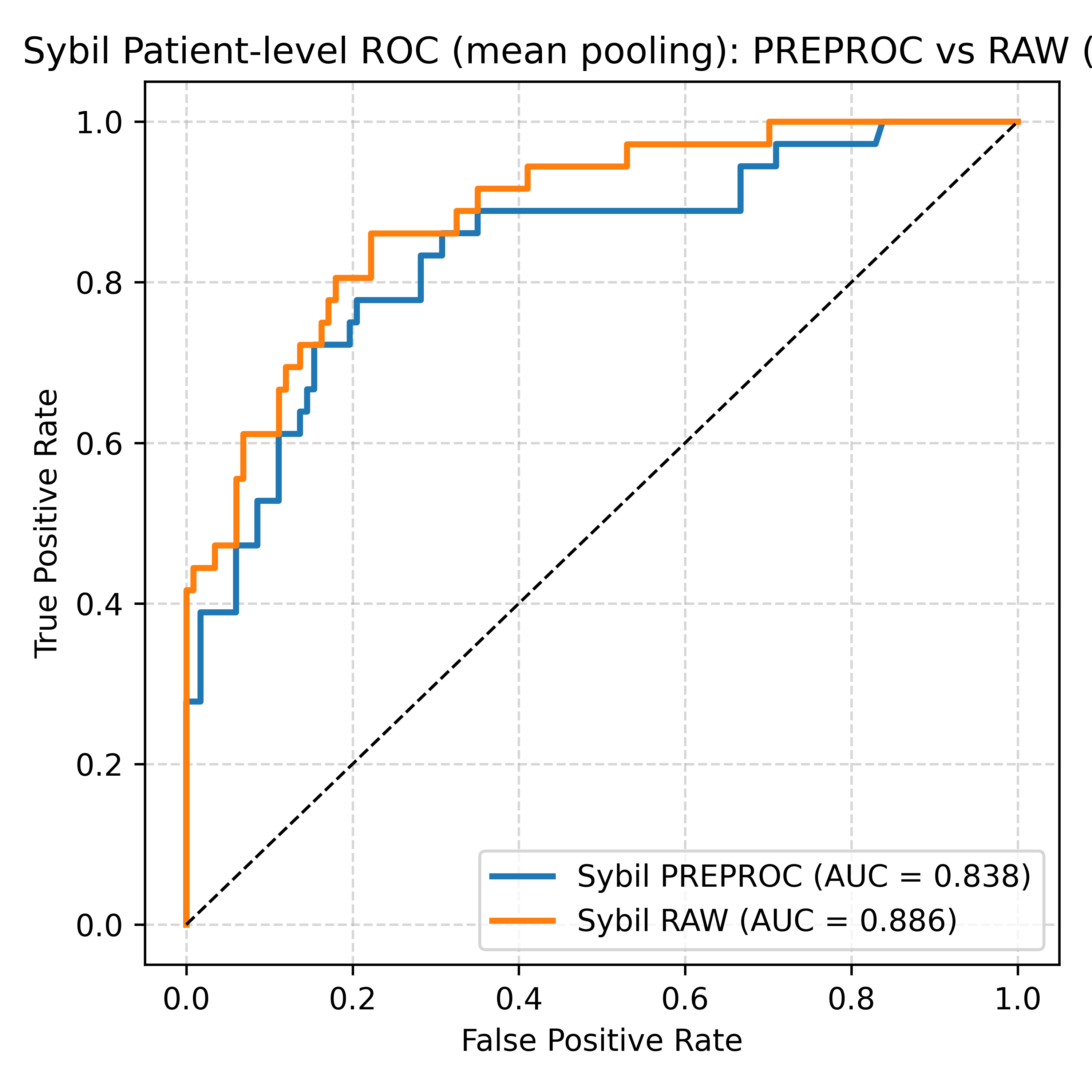

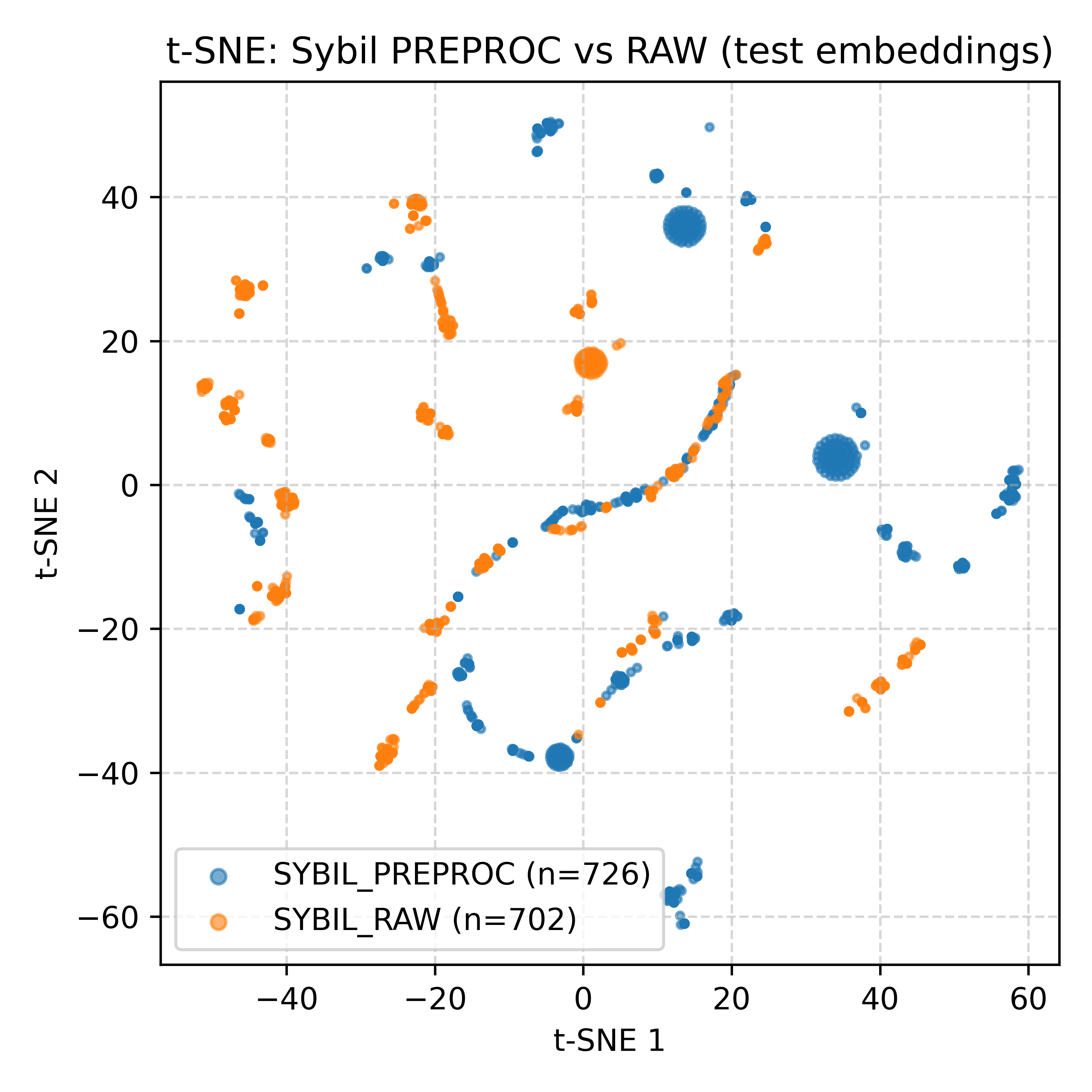

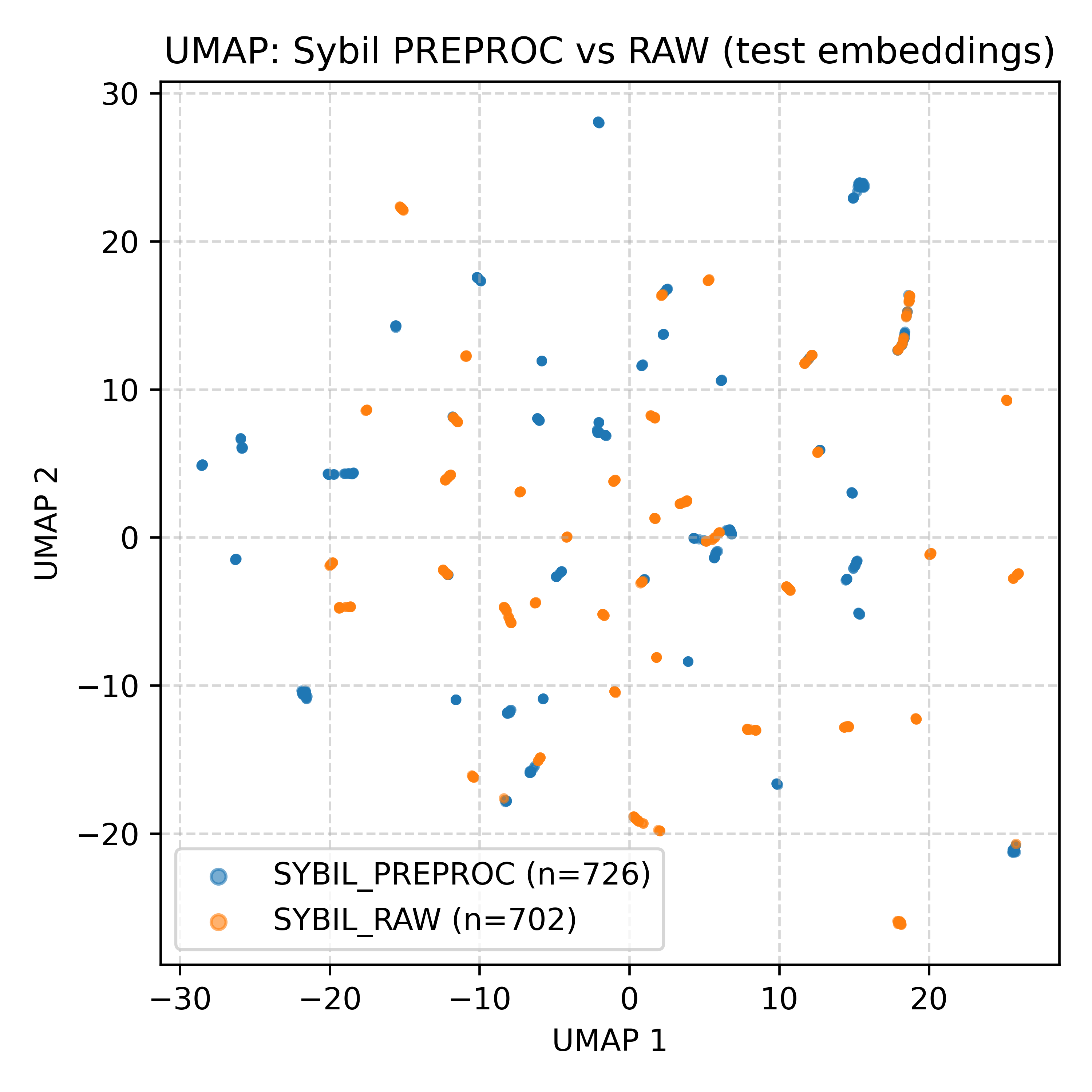

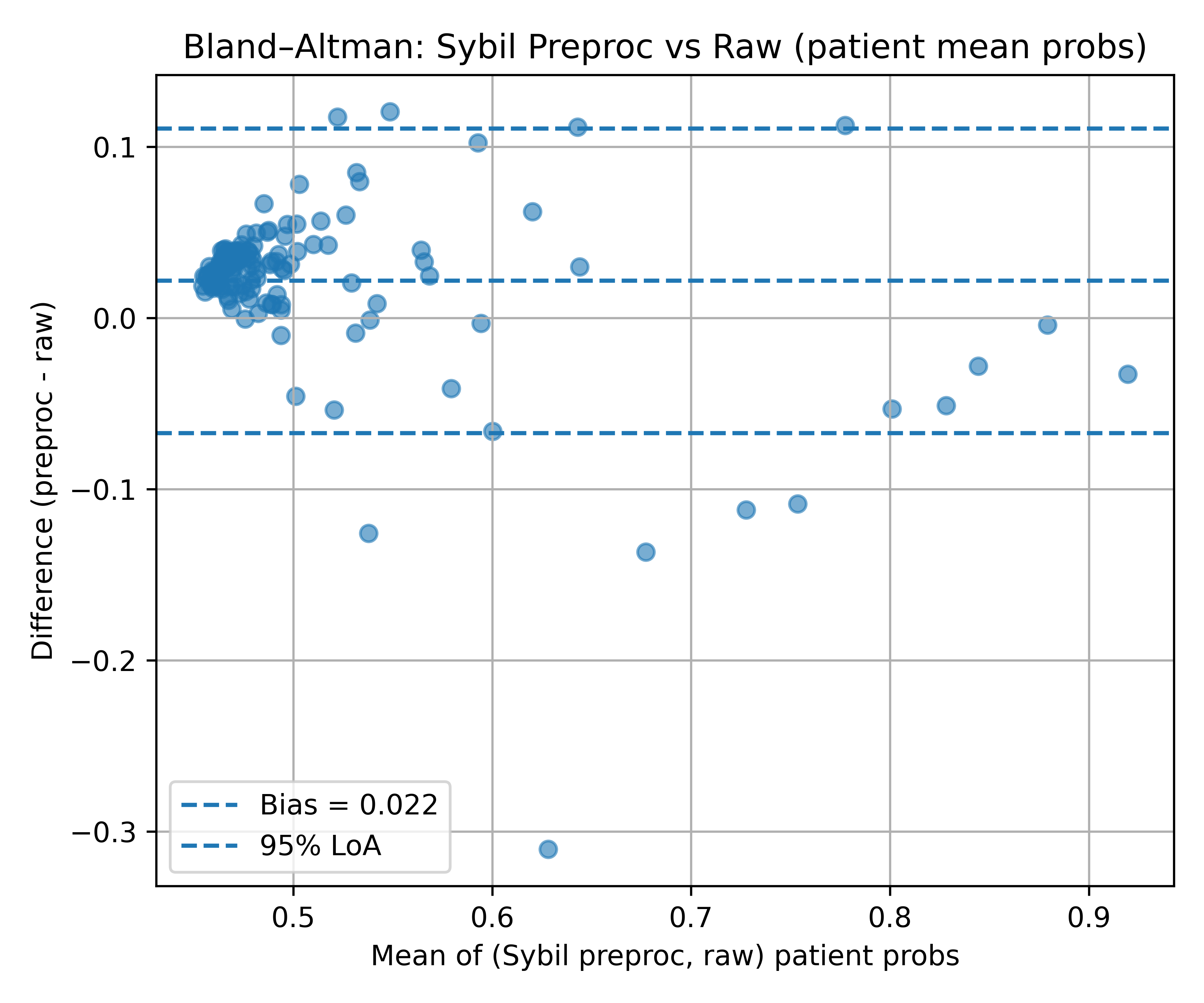

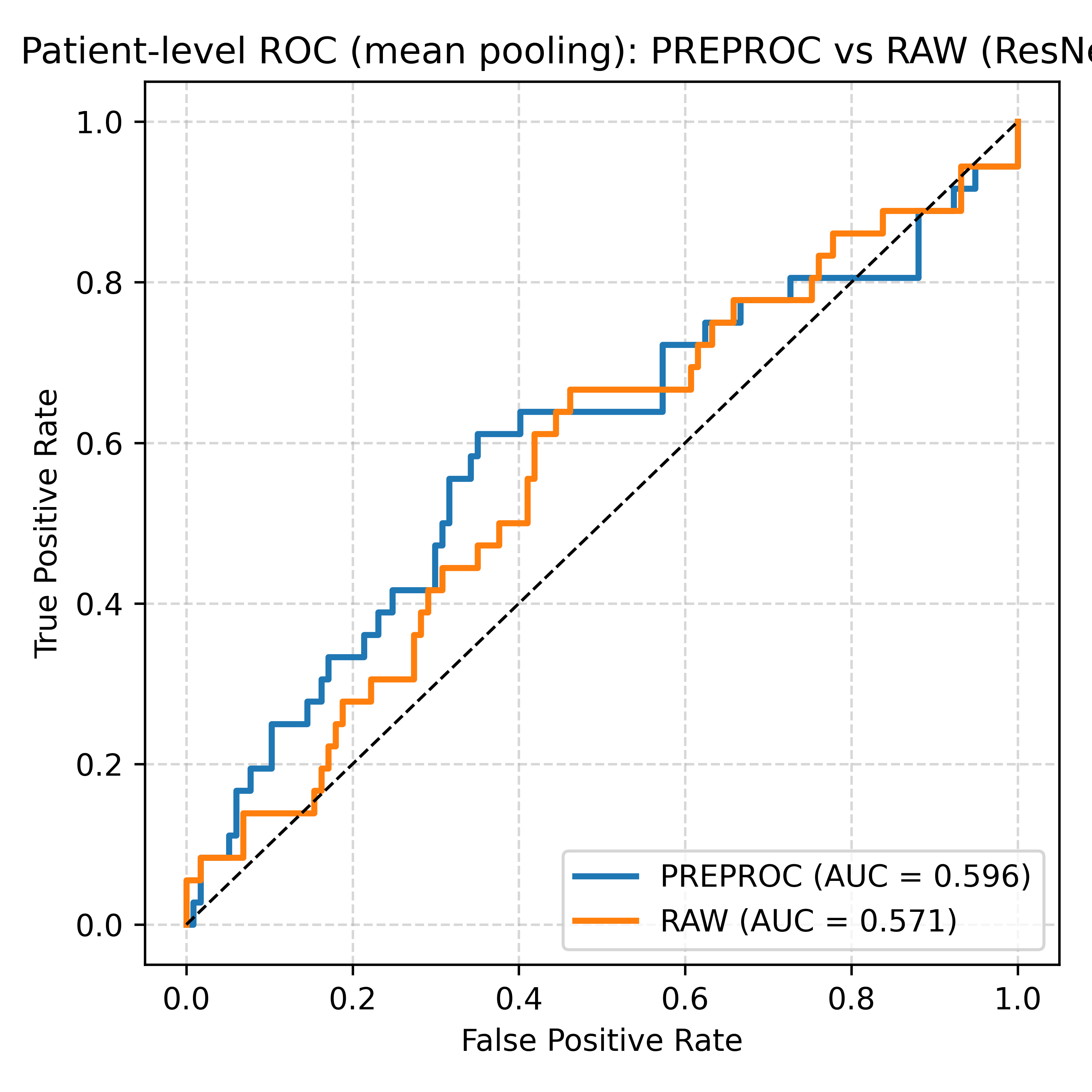

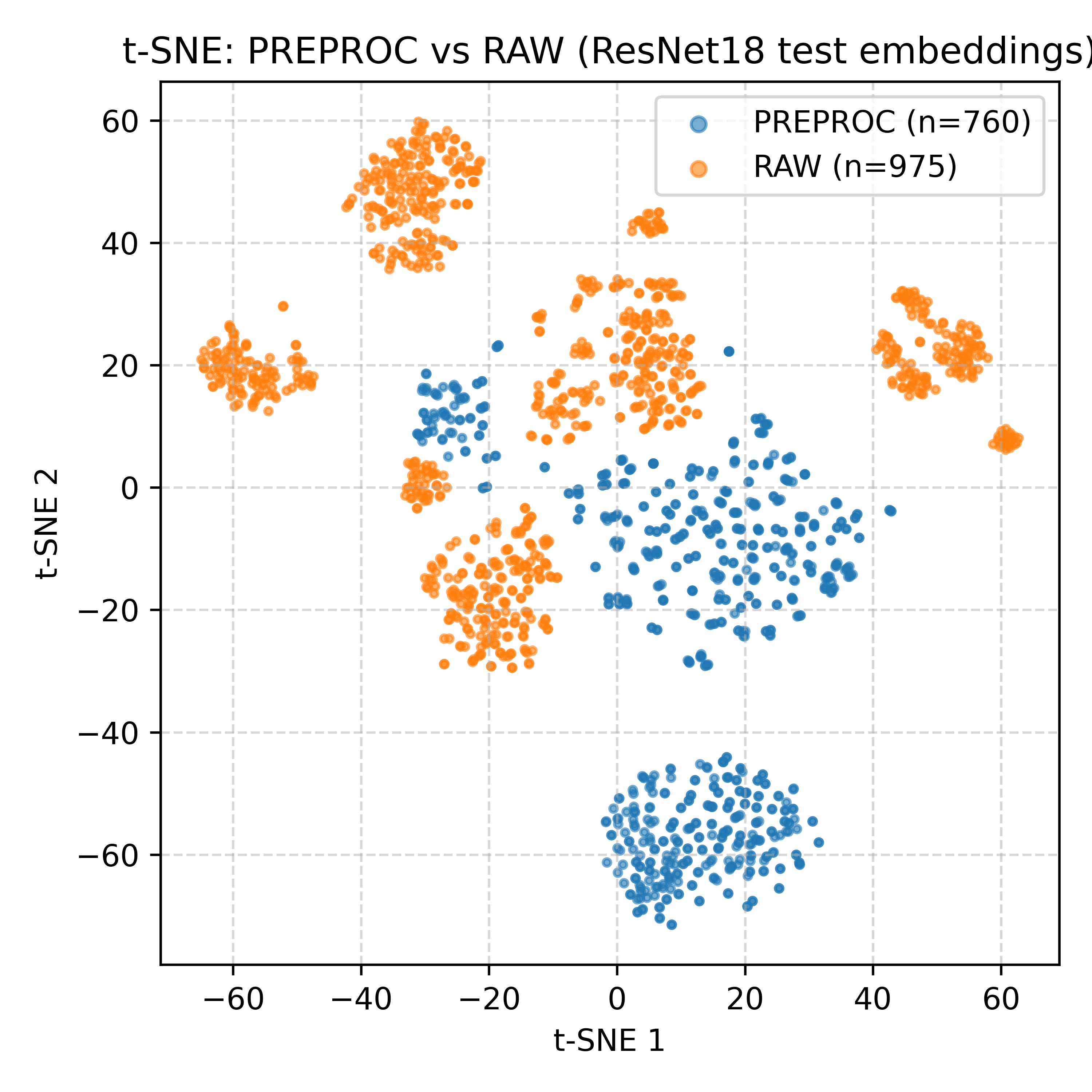

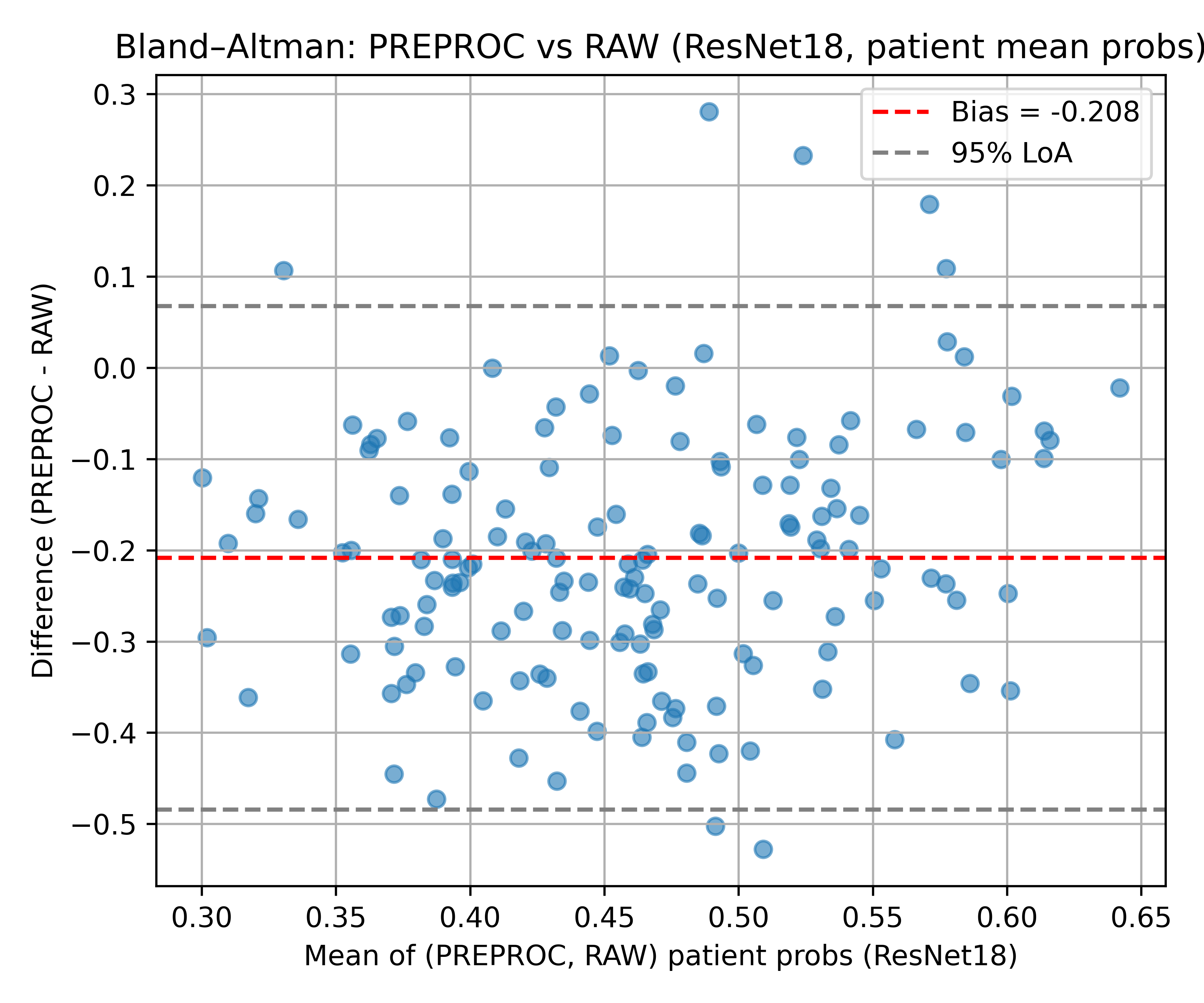

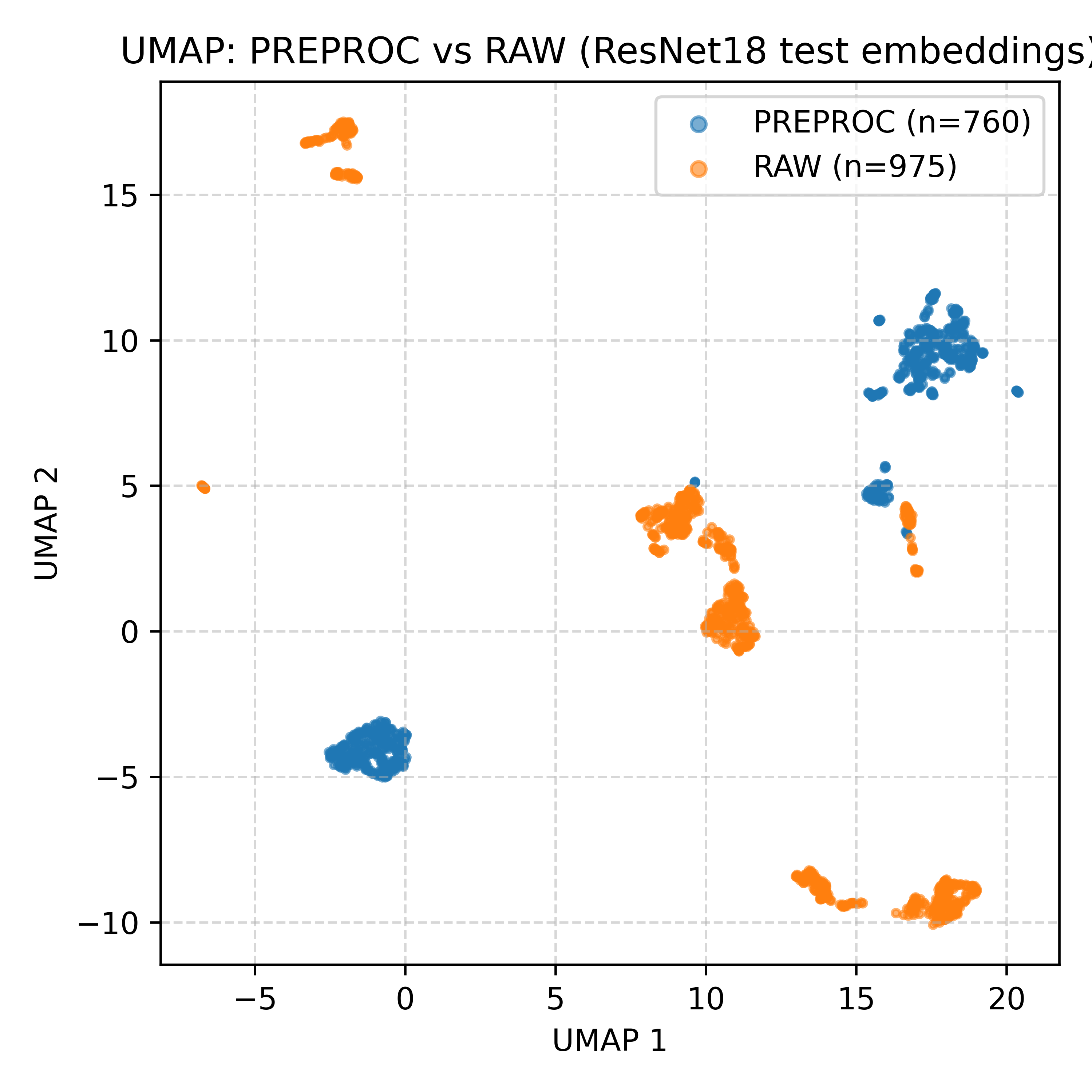

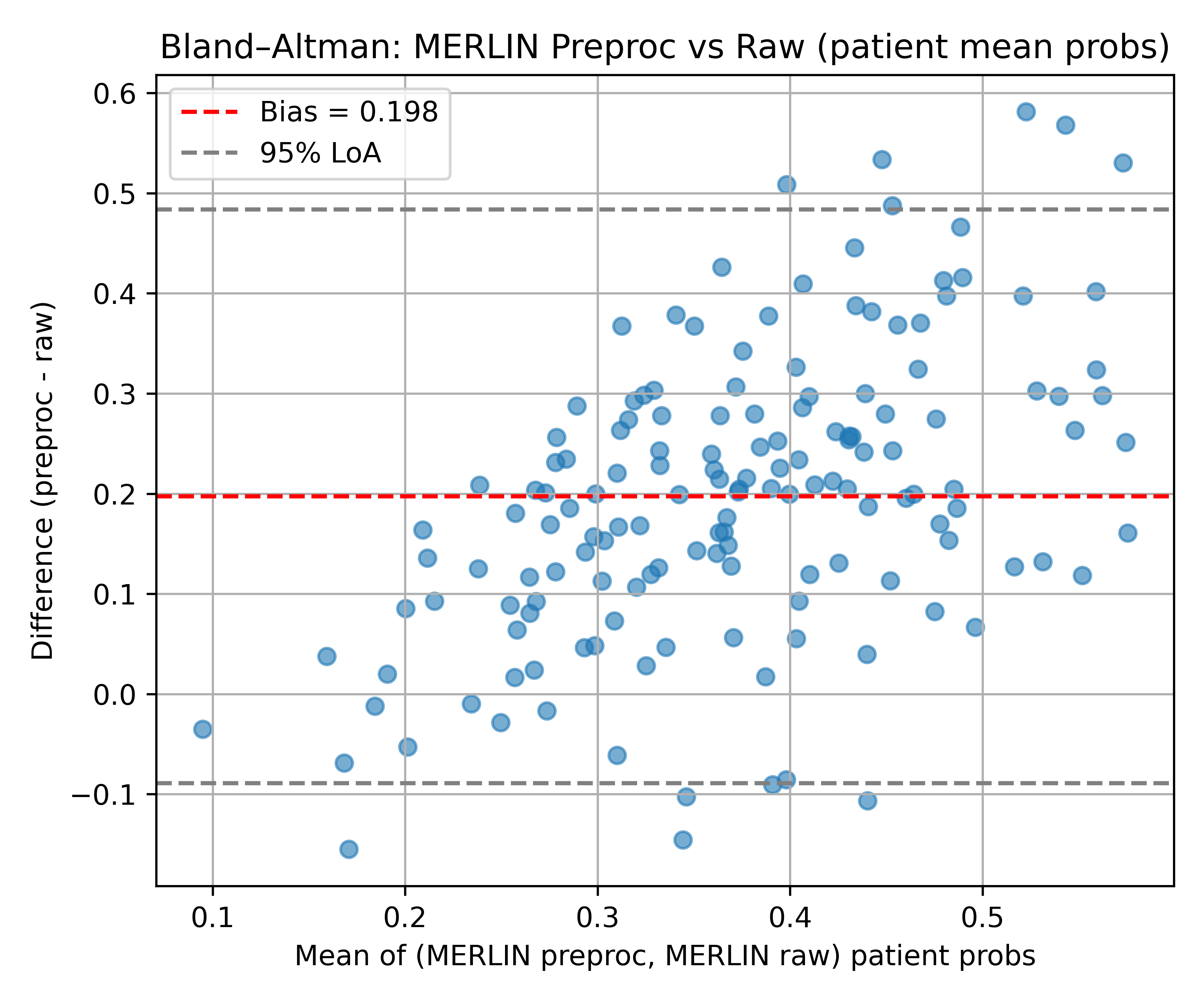

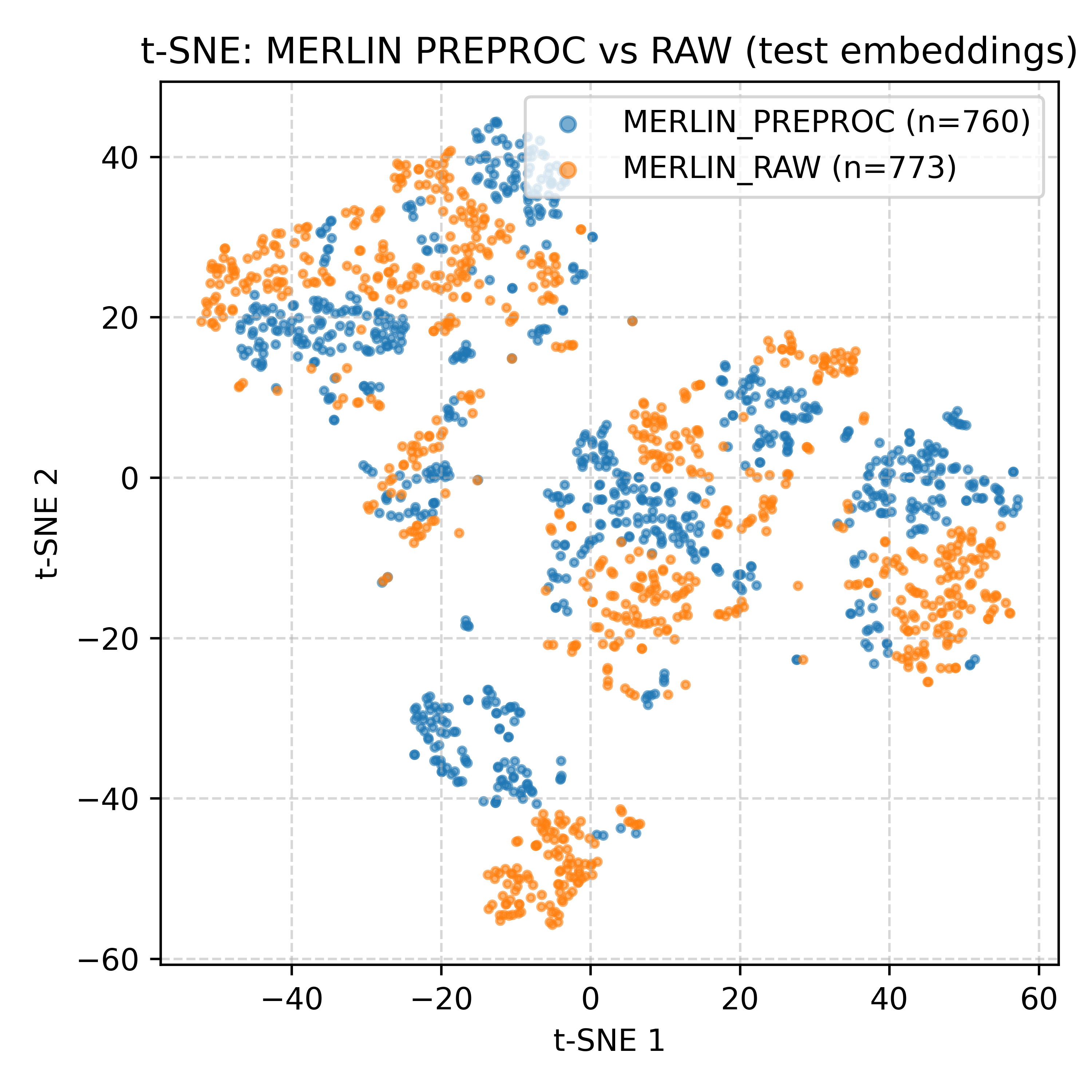

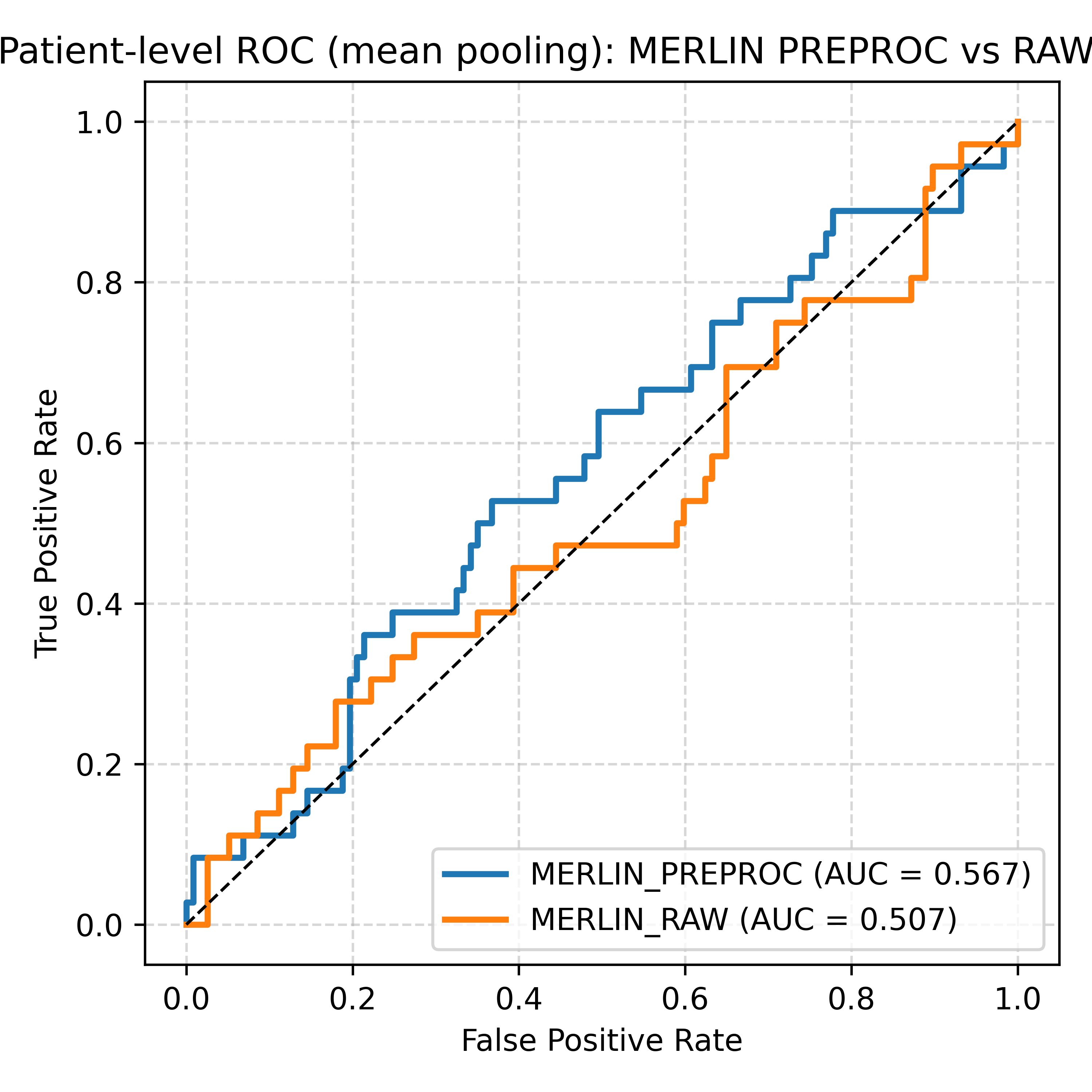

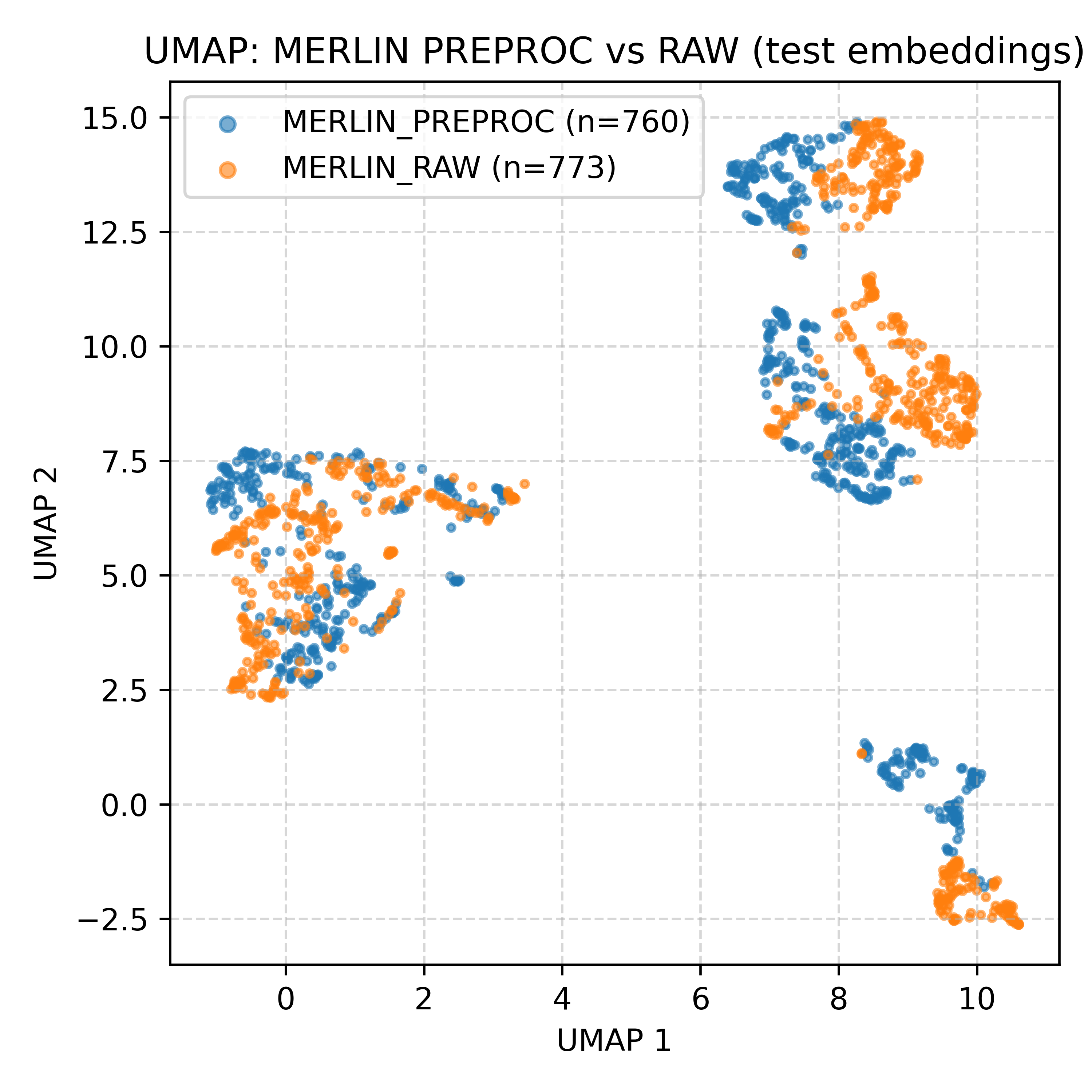

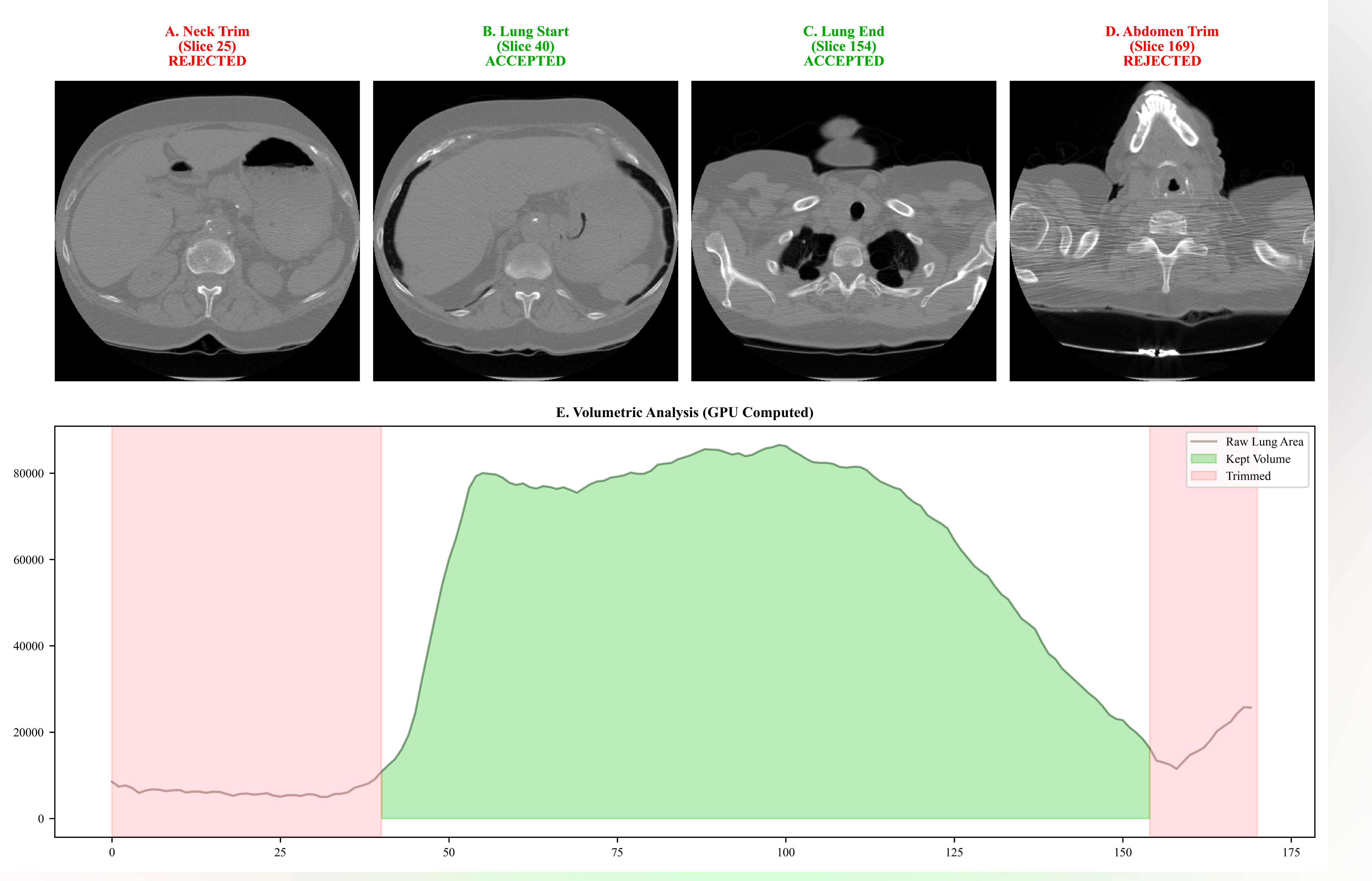

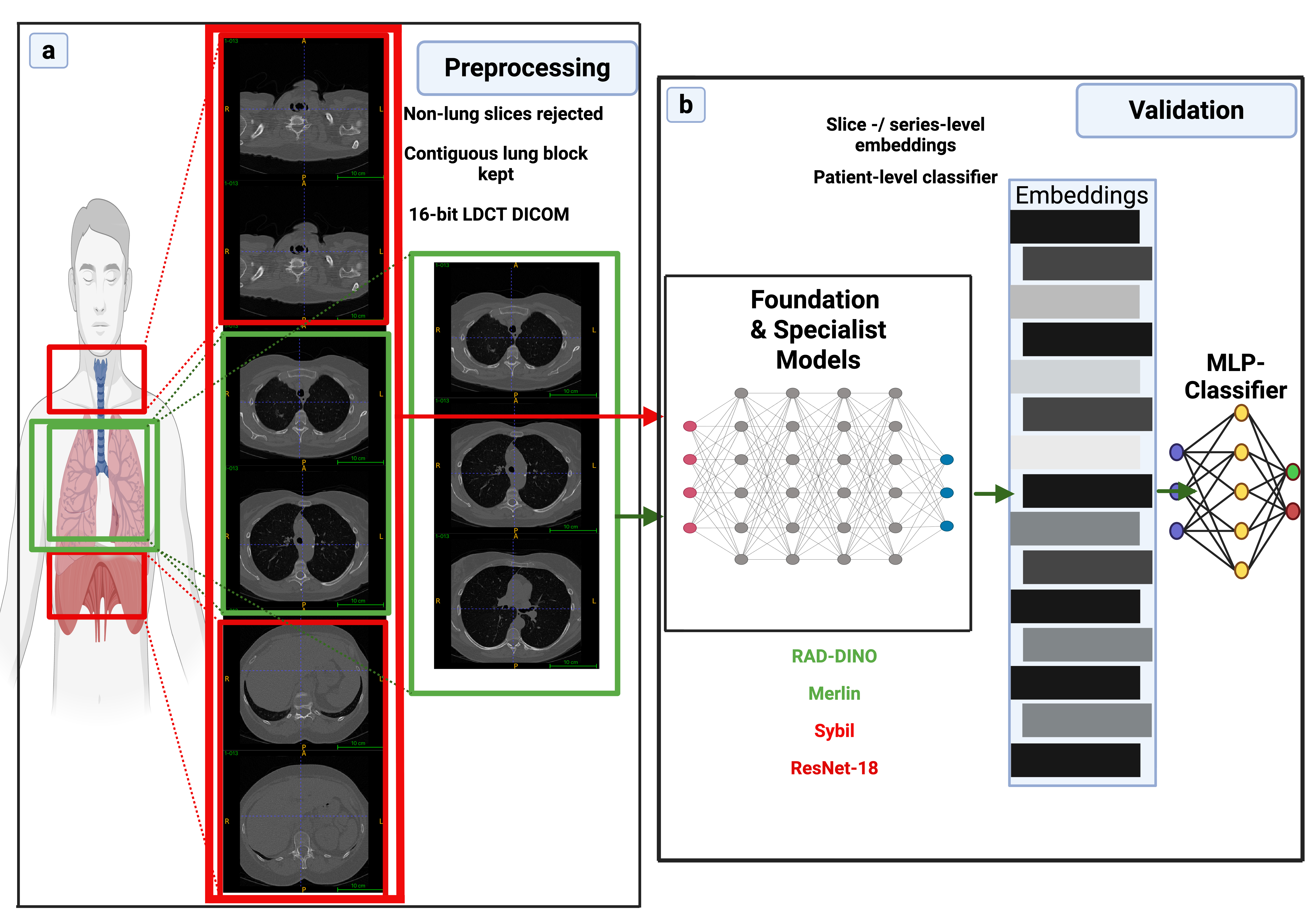

Robust preprocessing is rarely quantified in deep-learning pipelines for low-dose CT (LDCT) lung cancer screening. We develop and validate Virtual-Eyes, a clinically motivated 16-bit CT quality-control pipeline, and measure its differential impact on generalist foundation models versus specialist models. Virtual-Eyes enforces strict 512x512 in-plane resolution, rejects short or non-diagnostic series, and extracts a contiguous lung block using Hounsfield-unit filtering and bilateral lung-coverage scoring while preserving the native 16-bit grid. Using 765 NLST patients (182 cancer, 583 non-cancer), we compute slice-level embeddings from RAD-DINO and Merlin with frozen encoders and train leakage-free patient-level MLP heads; we also evaluate Sybil and a 2D ResNet-18 baseline under Raw versus Virtual-Eyes inputs without backbone retraining. Virtual-Eyes improves RAD-DINO slice-level AUC from 0.576 to 0.610 and patient-level AUC from 0.646 to 0.683 (mean pooling) and from 0.619 to 0.735 (max pooling), with improved calibration (Brier score 0.188 to 0.112). In contrast, Sybil and ResNet-18 degrade under Virtual-Eyes (Sybil AUC 0.886 to 0.837; ResNet-18 AUC 0.571 to 0.596) with evidence of context dependence and shortcut learning, and Merlin shows limited transferability (AUC approximately 0.507 to 0.567) regardless of preprocessing. These results demonstrate that anatomically targeted QC can stabilize and improve generalist foundation-model workflows but may disrupt specialist models adapted to raw clinical context.

💡 Summary & Analysis
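The abstract describes the Virtual-Eyes QC steps only at a high level. Below is a minimal NumPy sketch of that kind of logic, assuming the series is already loaded as an int16 array of shape (slices, rows, cols) in Hounsfield units. Every threshold (`MIN_SLICES`, `LUNG_HU_RANGE`, `MIN_SIDE_COVERAGE`), the left/right-half coverage score, and all function names are hypothetical choices for illustration, not the authors' implementation. The pooling helper additionally assumes that mean and max pooling are applied to slice embeddings before the patient-level head, which the abstract does not state explicitly.

```python
from typing import Optional

import numpy as np

# Hypothetical parameters: the abstract does not give the paper's actual values.
IN_PLANE_SHAPE = (512, 512)
MIN_SLICES = 60               # assumed minimum series length
LUNG_HU_RANGE = (-950, -400)  # assumed HU window for aerated lung; the lower bound
                              # crudely excludes air outside the body (~-1000 HU)
MIN_SIDE_COVERAGE = 0.05      # assumed minimum lung-pixel fraction per side


def bilateral_coverage(slice_hu: np.ndarray) -> float:
    """Score a slice by the smaller of its left/right lung-pixel fractions."""
    lo, hi = LUNG_HU_RANGE
    lung = (slice_hu >= lo) & (slice_hu <= hi)
    mid = slice_hu.shape[1] // 2
    left = lung[:, :mid].mean()
    right = lung[:, mid:].mean()
    # Taking the minimum only rewards slices where BOTH sides show lung.
    return float(min(left, right))


def select_lung_block(volume: np.ndarray) -> Optional[np.ndarray]:
    """Return the longest contiguous run of bilateral-lung slices, or None to reject."""
    if volume.ndim != 3 or volume.shape[1:] != IN_PLANE_SHAPE:
        return None  # enforce 512x512 in-plane resolution
    if volume.shape[0] < MIN_SLICES:
        return None  # reject short series
    keep = np.array([bilateral_coverage(s) >= MIN_SIDE_COVERAGE for s in volume])
    # Scan for the longest run of qualifying slices; the trailing False closes a final run.
    best_start, best_len, run_start = 0, 0, None
    for i, k in enumerate(np.append(keep, False)):
        if k and run_start is None:
            run_start = i
        elif not k and run_start is not None:
            if i - run_start > best_len:
                best_start, best_len = run_start, i - run_start
            run_start = None
    if best_len == 0:
        return None  # no slice shows both lungs: treat as non-diagnostic
    # Slicing returns a view, so the native int16 grid is kept without resampling.
    return volume[best_start:best_start + best_len]


def pool_embeddings(slice_embeddings: np.ndarray, mode: str = "mean") -> np.ndarray:
    """Collapse (num_slices, dim) slice embeddings into one patient-level vector."""
    if mode == "mean":
        return slice_embeddings.mean(axis=0)
    if mode == "max":
        return slice_embeddings.max(axis=0)  # element-wise max over slices
    raise ValueError(f"unknown pooling mode: {mode}")


def brier_score(y_true, y_prob) -> float:
    """Mean squared error between predicted probabilities and 0/1 labels."""
    y_true = np.asarray(y_true, dtype=float)
    y_prob = np.asarray(y_prob, dtype=float)
    return float(np.mean((y_prob - y_true) ** 2))
```

Splitting each slice at the midline is only a rough stand-in for bilateral lung-coverage scoring; a production pipeline would more likely segment each lung and use a body mask rather than a fixed lower HU bound to exclude external air. Max pooling lets a few strongly abnormal slices dominate the patient vector, while mean pooling dilutes them across the whole block, which is one plausible reason the two pooling modes can respond differently to QC.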

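1. **A Quantified QC Pipeline**: Virtual-Eyes is a 16-bit CT quality-control pipeline that enforces 512×512 in-plane resolution, rejects short or non-diagnostic series, and keeps a contiguous block of slices selected by Hounsfield-unit filtering and bilateral lung-coverage scoring, all without leaving the native 16-bit grid. It is similar to a radiologist confirming that a scan is complete and actually covers both lungs before reading it.
2. **Generalist Foundation Models Benefit**: With frozen RAD-DINO encoders and leakage-free patient-level MLP heads trained on 765 NLST patients, Virtual-Eyes raises slice-level AUC from 0.576 to 0.610 and patient-level AUC from 0.646 to 0.683 (mean pooling) and from 0.619 to 0.735 (max pooling), while improving calibration (Brier score 0.188 to 0.112).
3. **Specialist Models Can Be Disrupted**: Sybil's AUC falls from 0.886 to 0.837 under Virtual-Eyes inputs, and the authors report evidence of context dependence and shortcut learning in Sybil and the ResNet-18 baseline, suggesting these models draw on context that the lung-block crop removes. Merlin transfers poorly (AUC roughly 0.507 to 0.567) regardless of preprocessing. The practical lesson is that QC should be validated per model rather than assumed to help universally.

📄 Full Paper Content (ArXiv Source)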

📊 Paper Figures