Semantic Safeguards: Navigating Maritime Autonomy with Vision-Language Models

📝 Original Paper Info

- Title: Foundation models on the bridge: Semantic hazard detection and safety maneuvers for maritime autonomy with vision-language models

- ArXiv ID: 2512.24470

- Date: 2025-12-30

- Authors: Kim Alexander Christensen, Andreas Gudahl Tufte, Alexey Gusev, Rohan Sinha, Milan Ganai, Ole Andreas Alsos, Marco Pavone, Martin Steinert

📝 Abstract

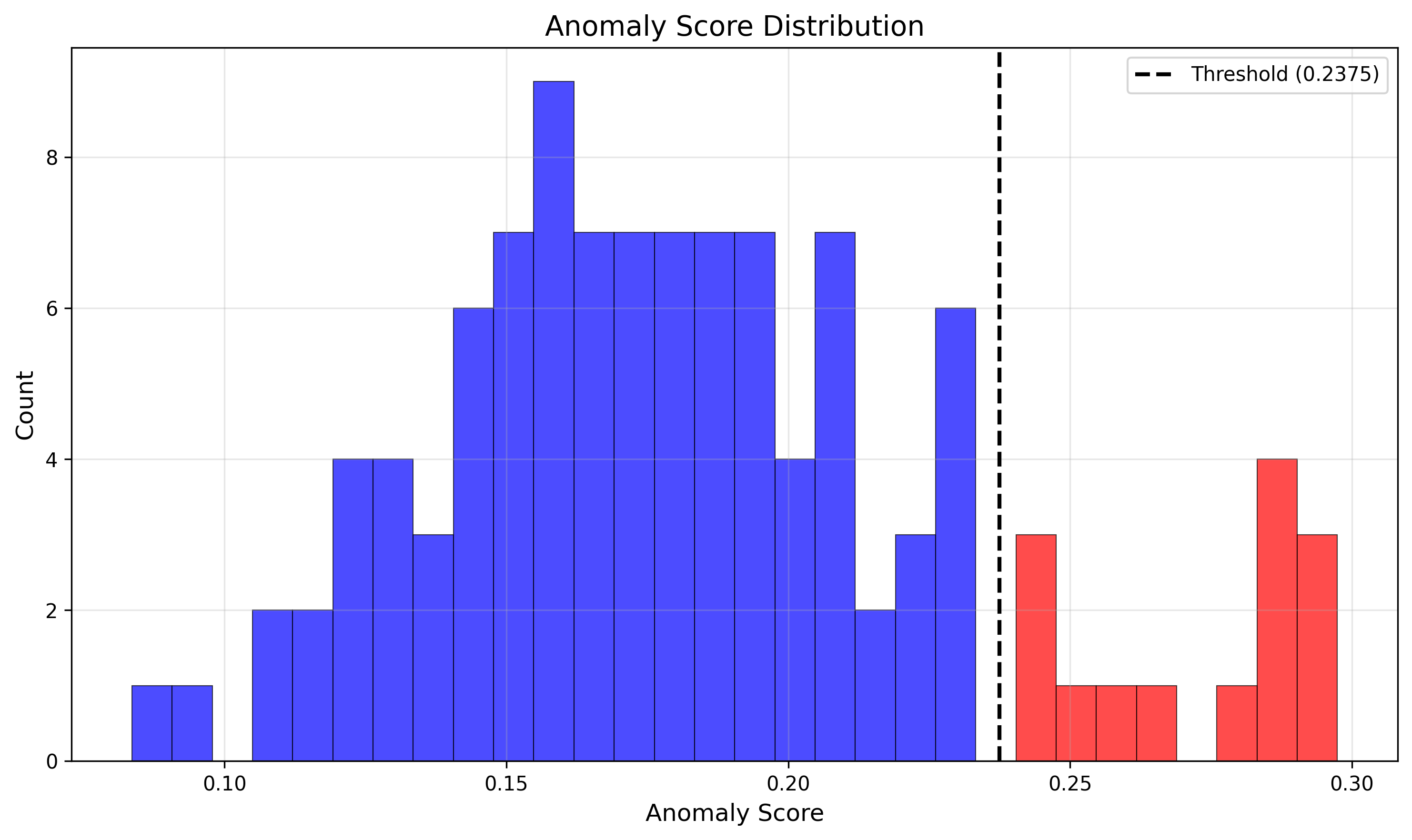

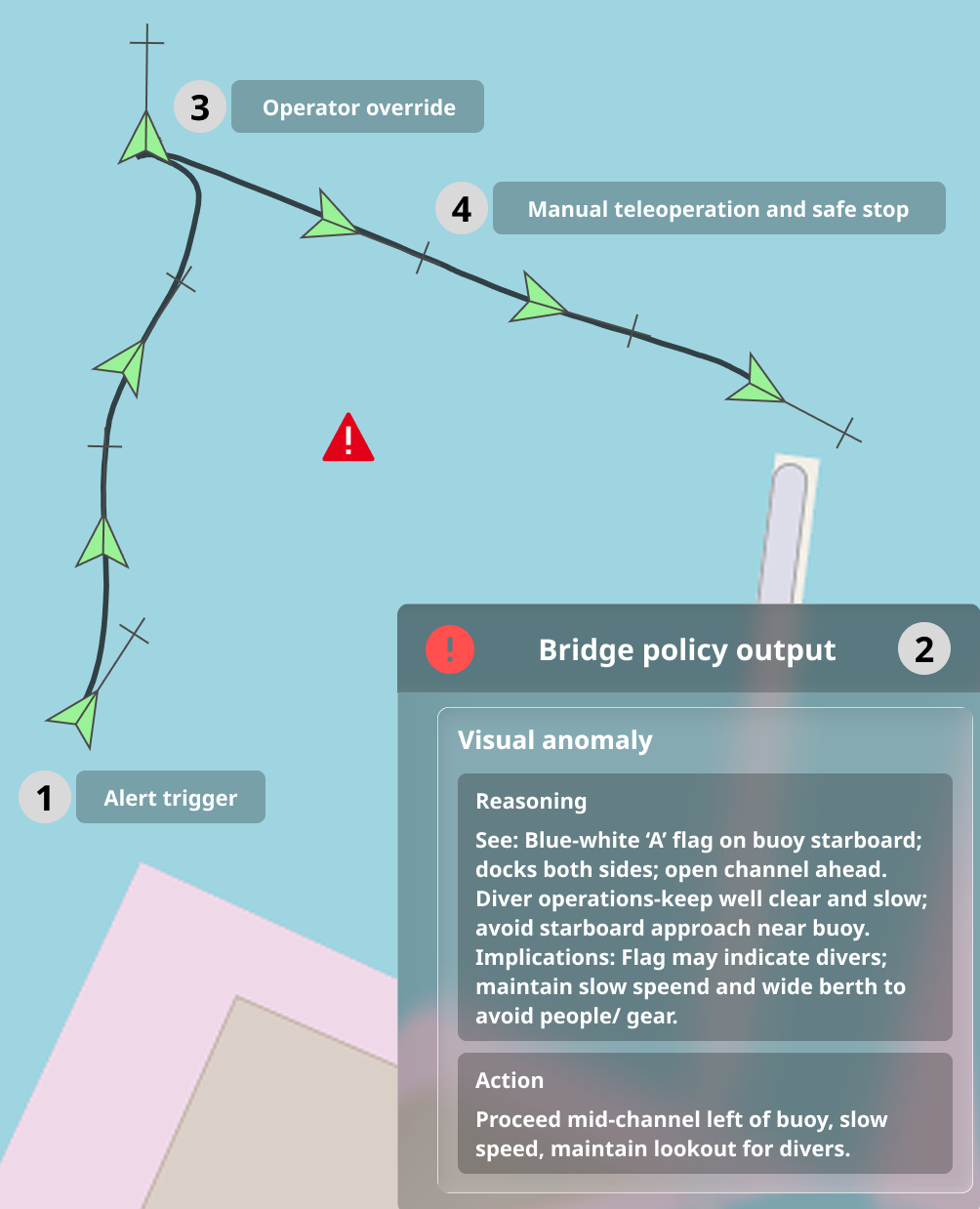

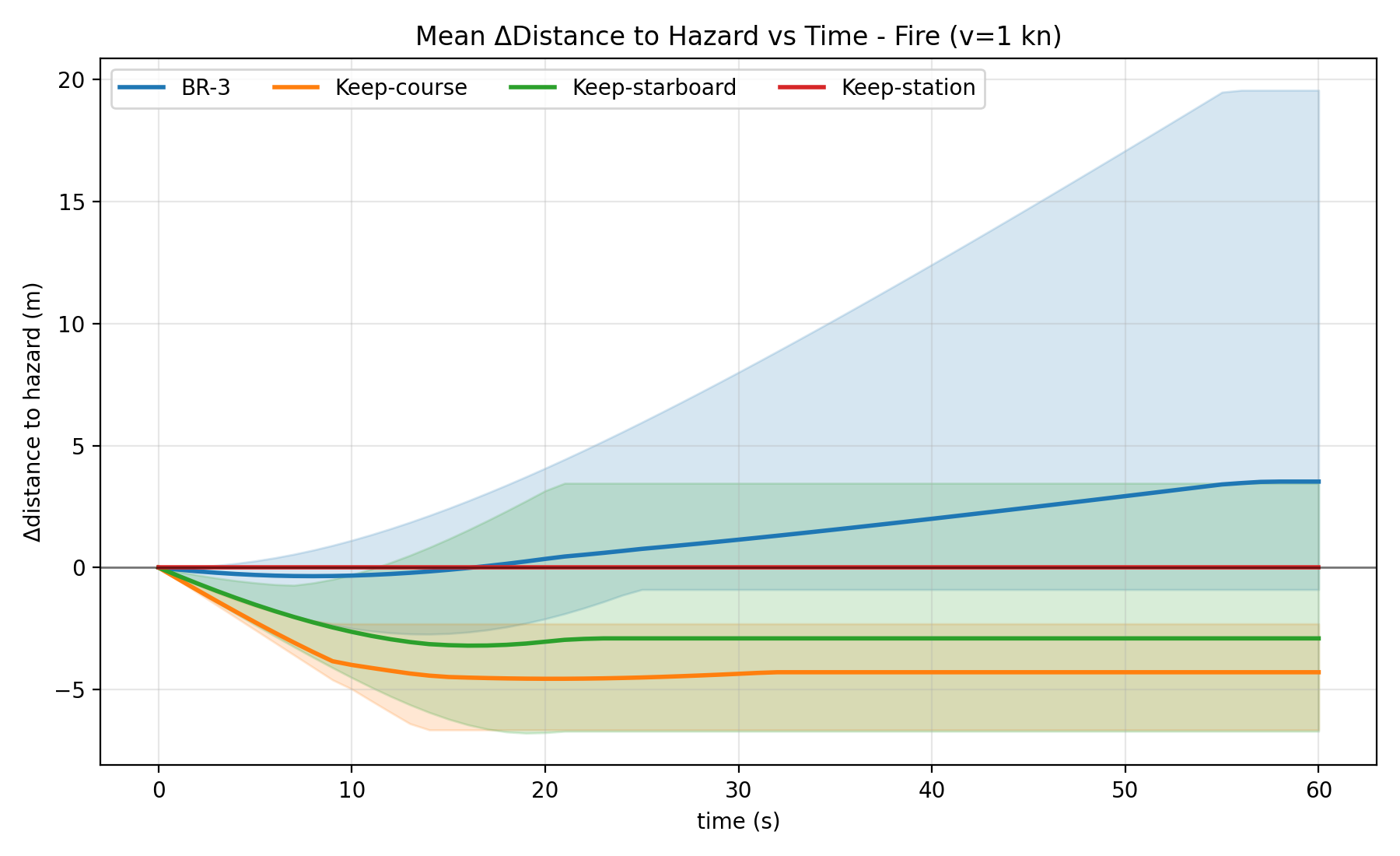

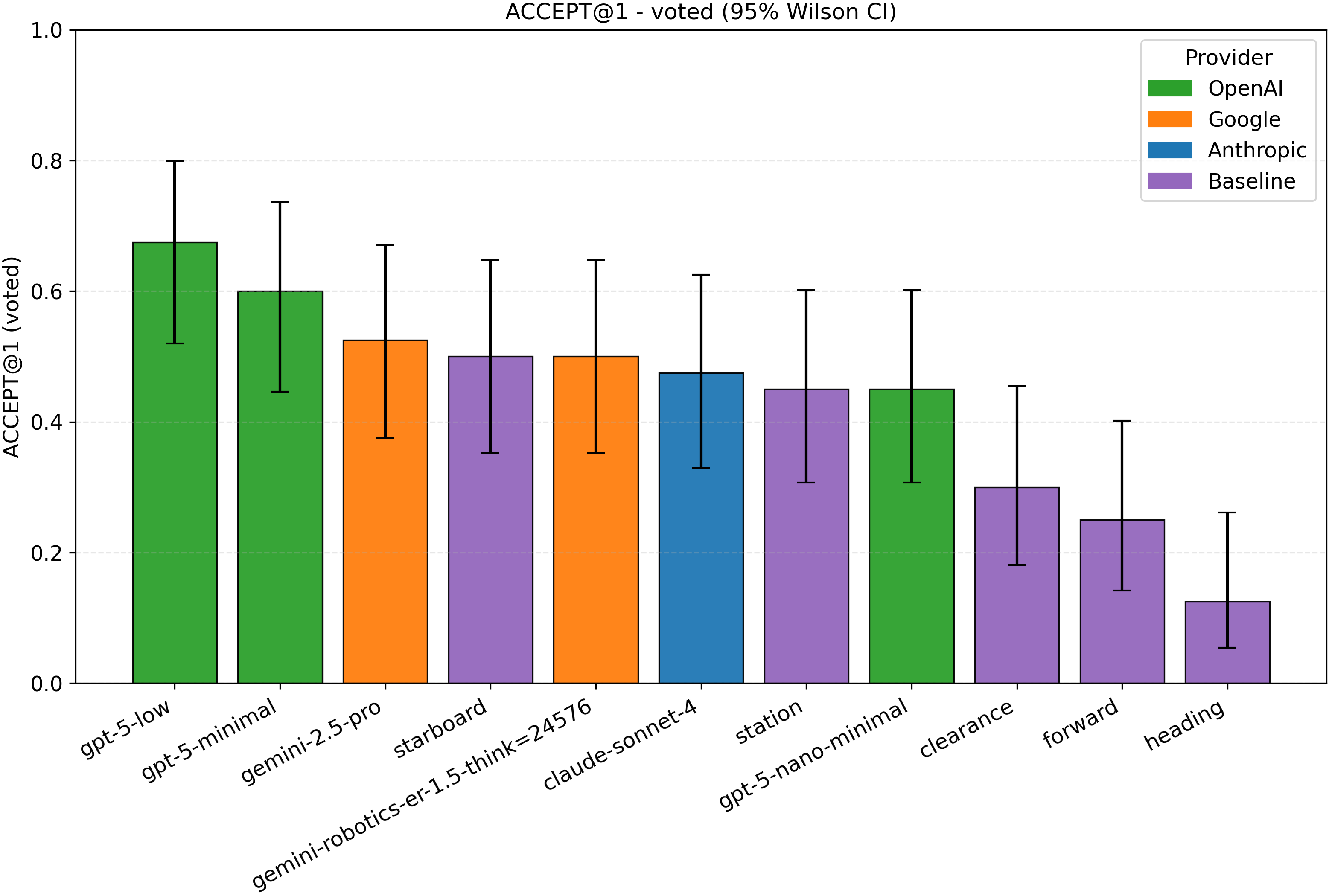

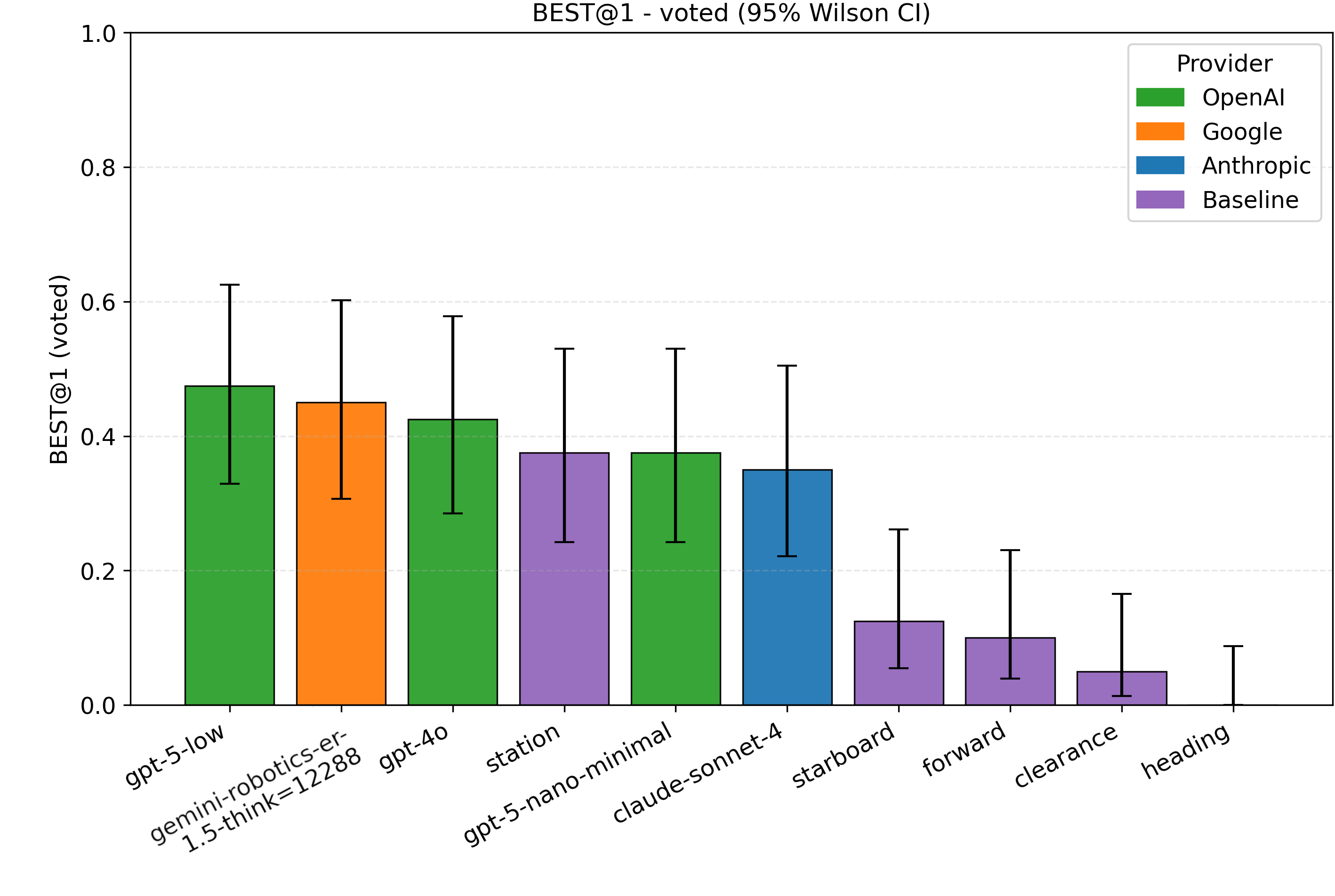

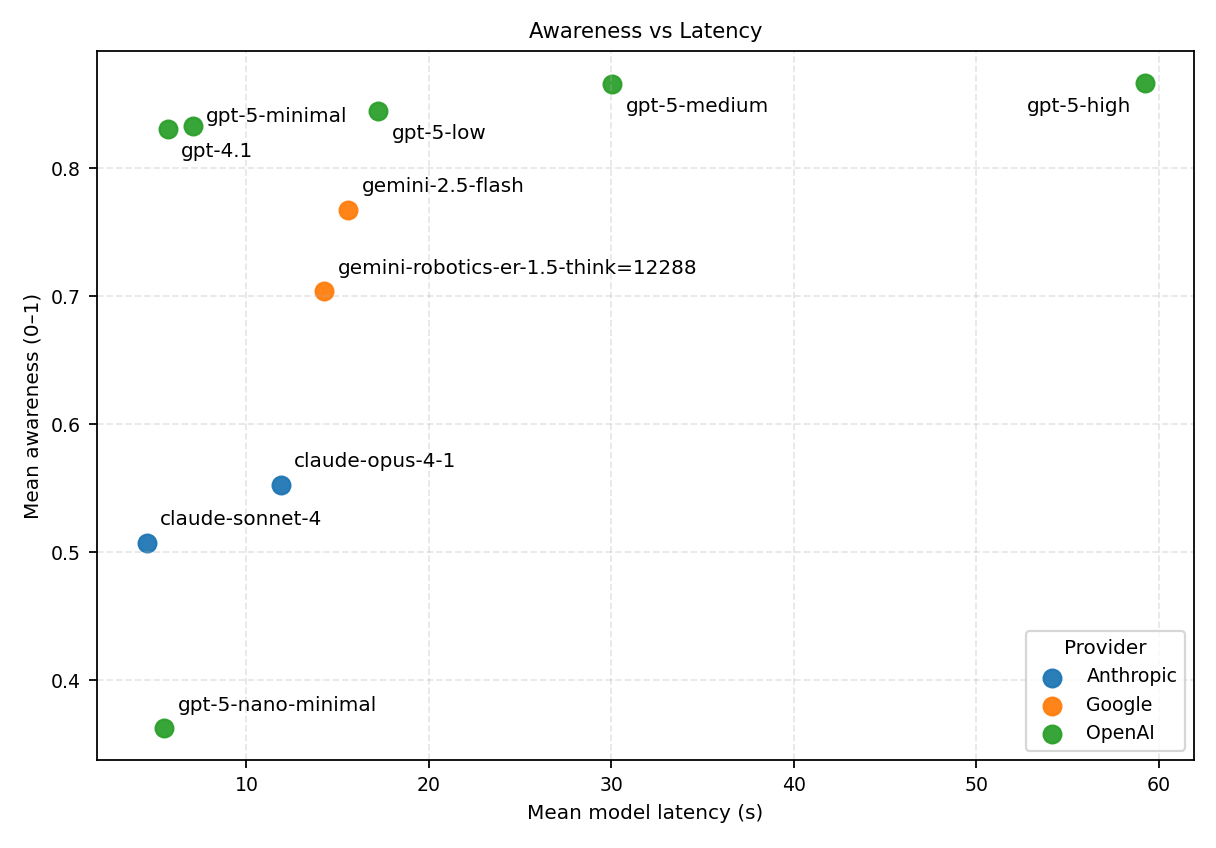

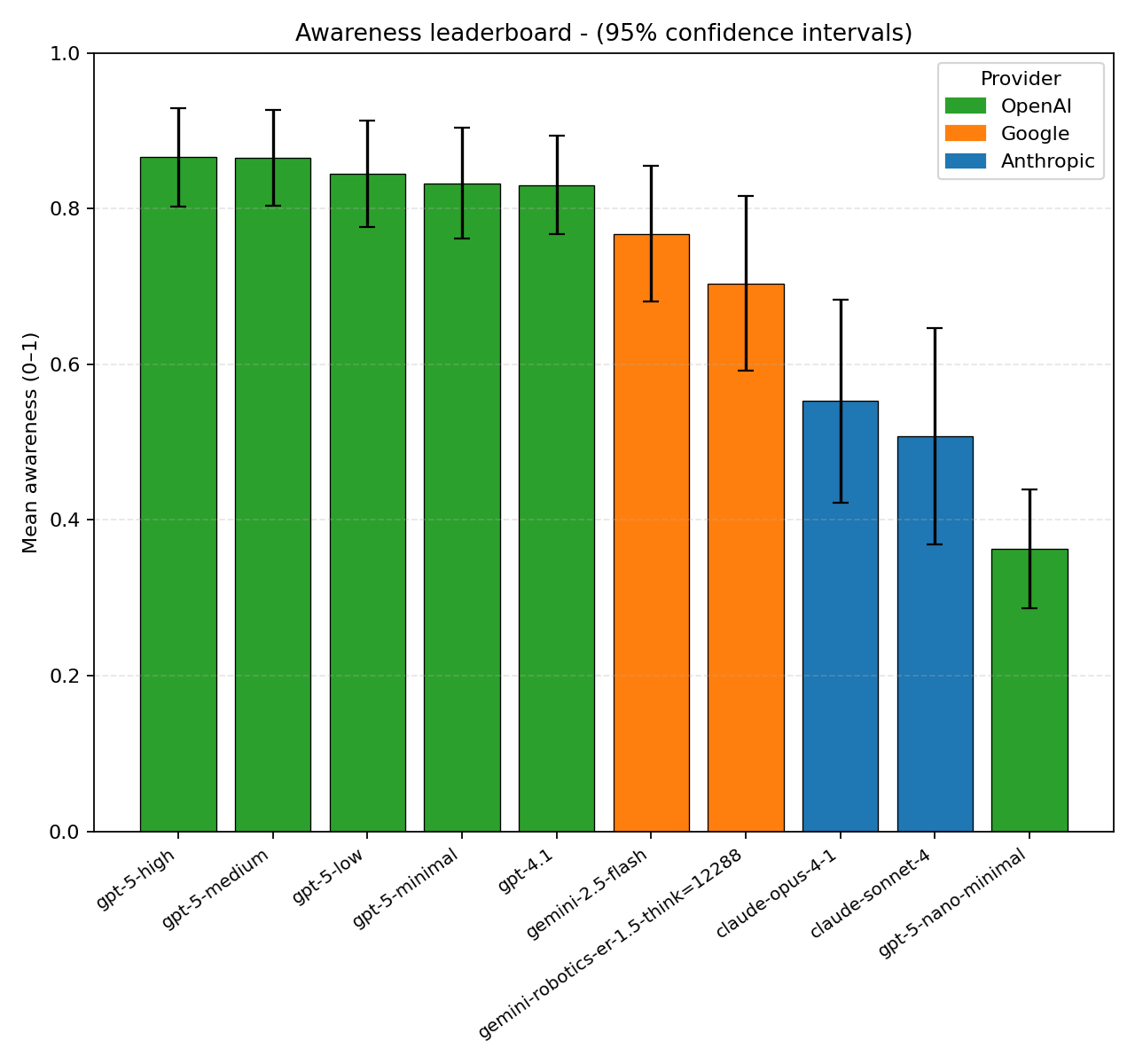

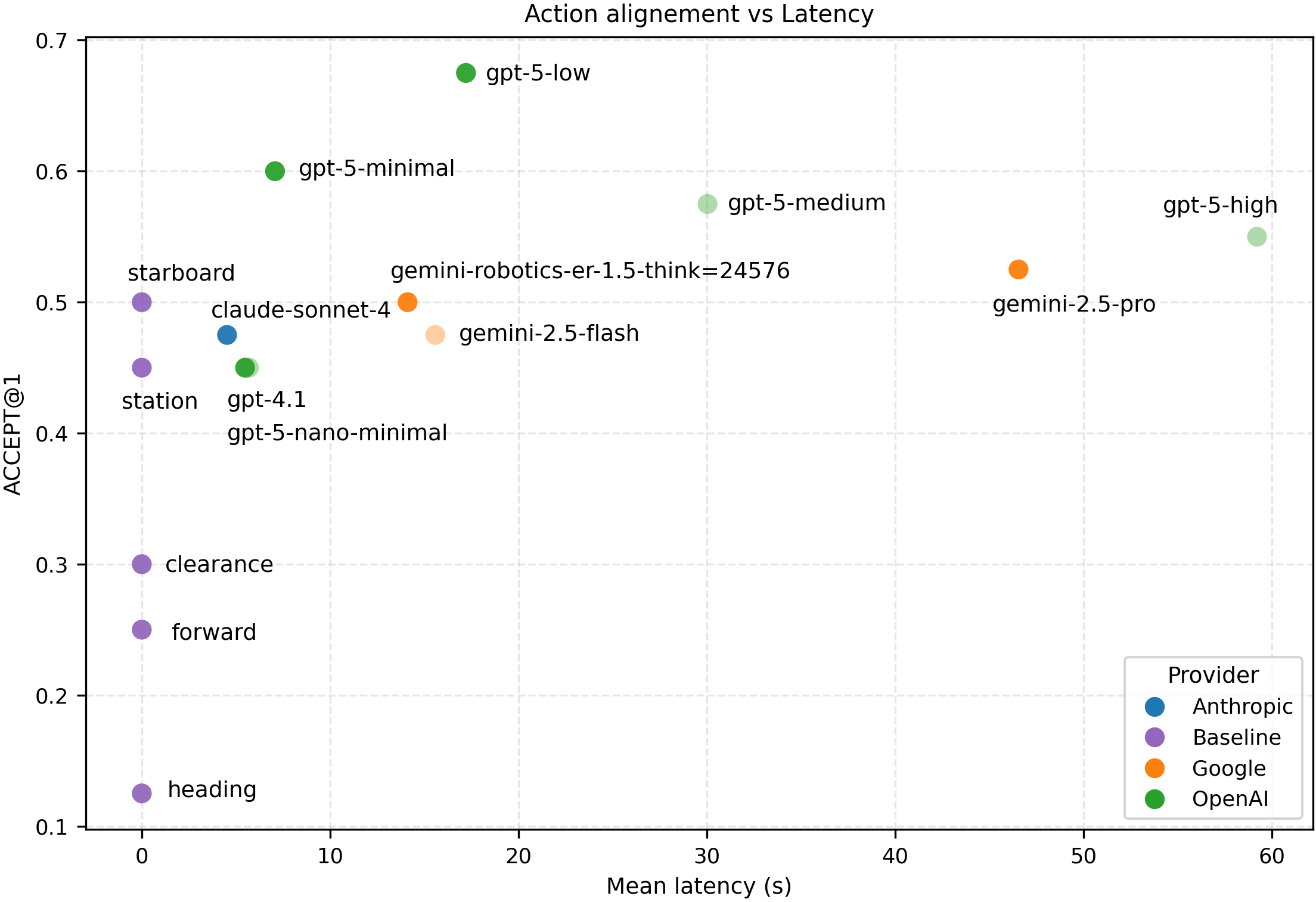

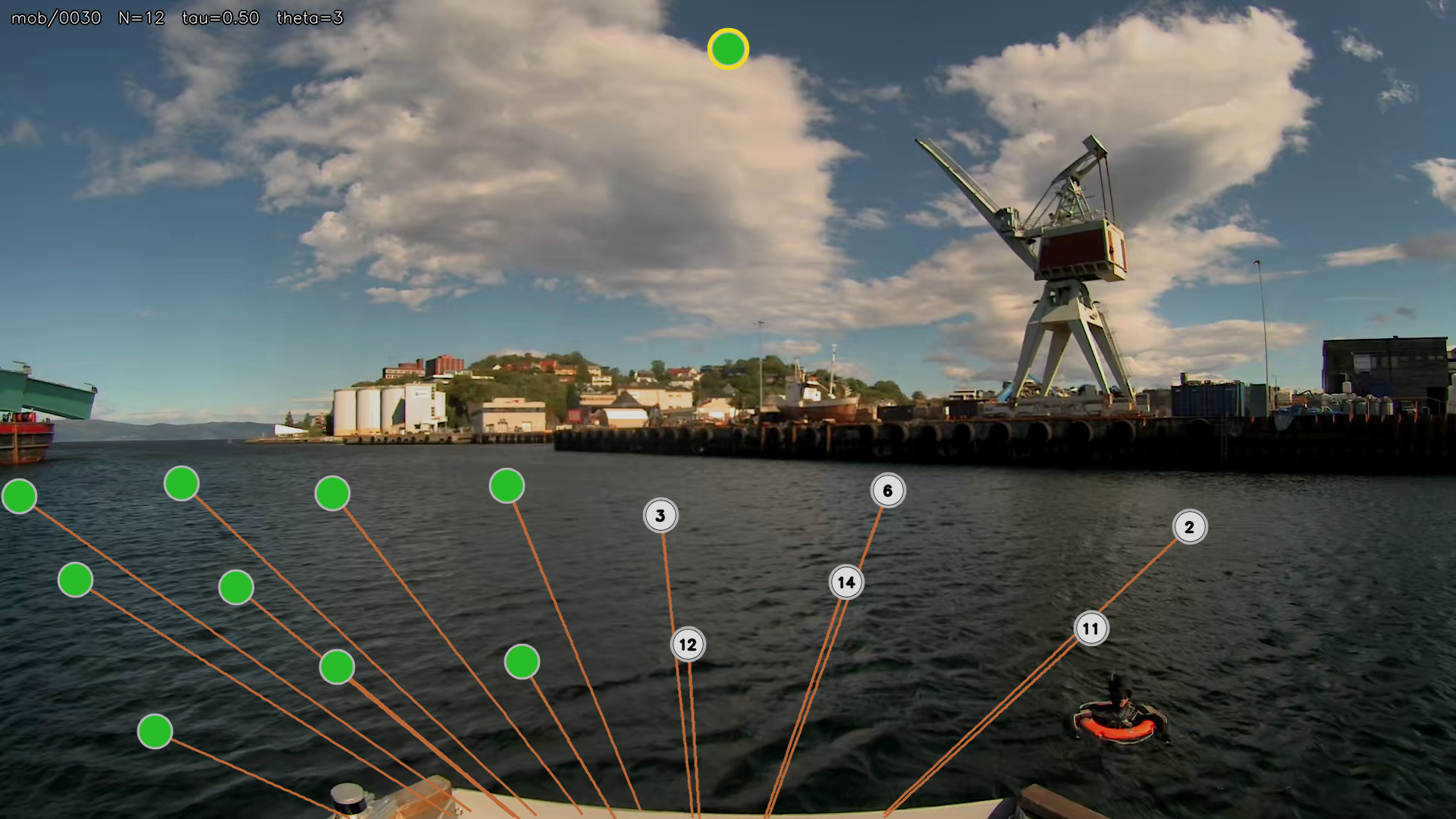

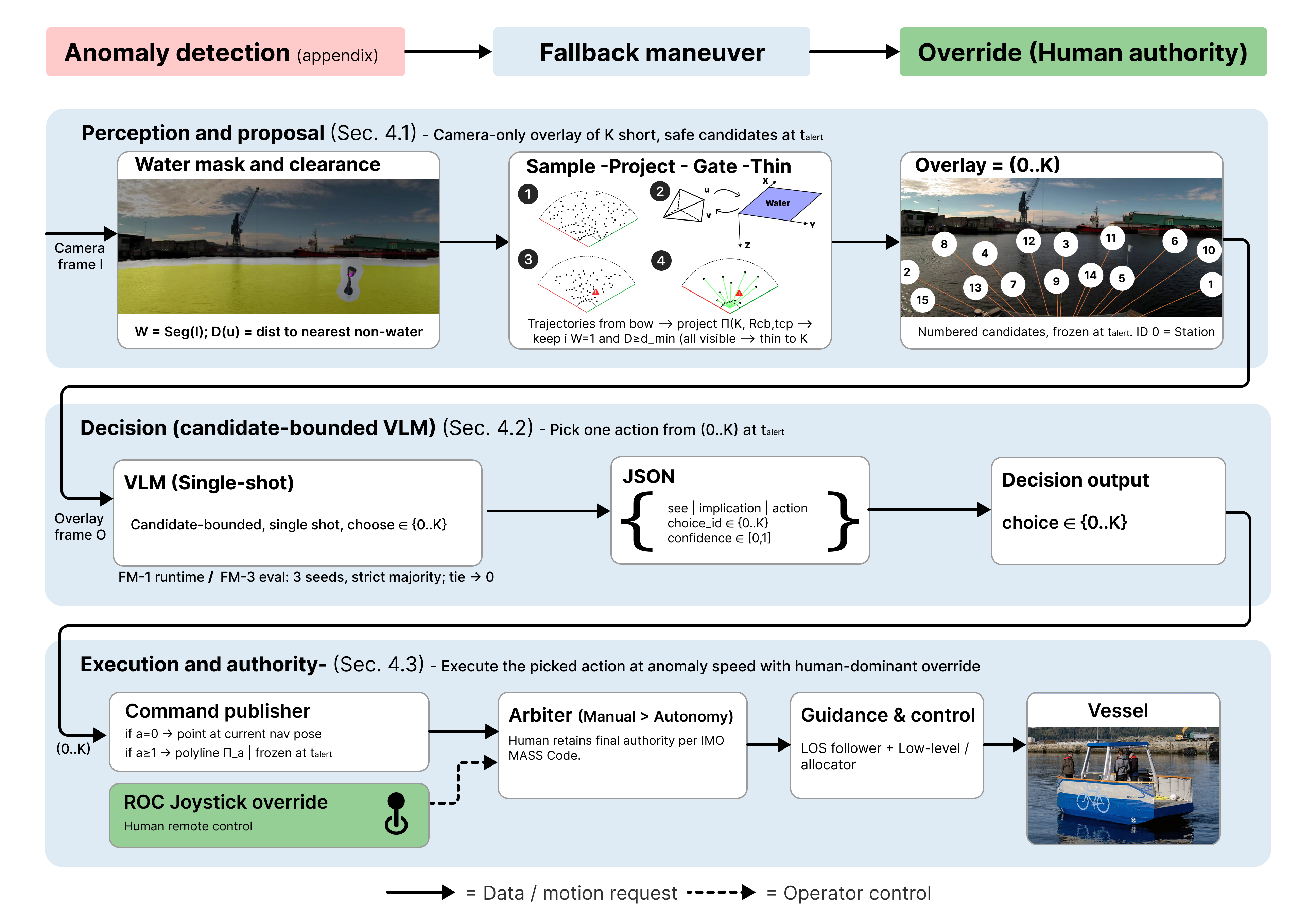

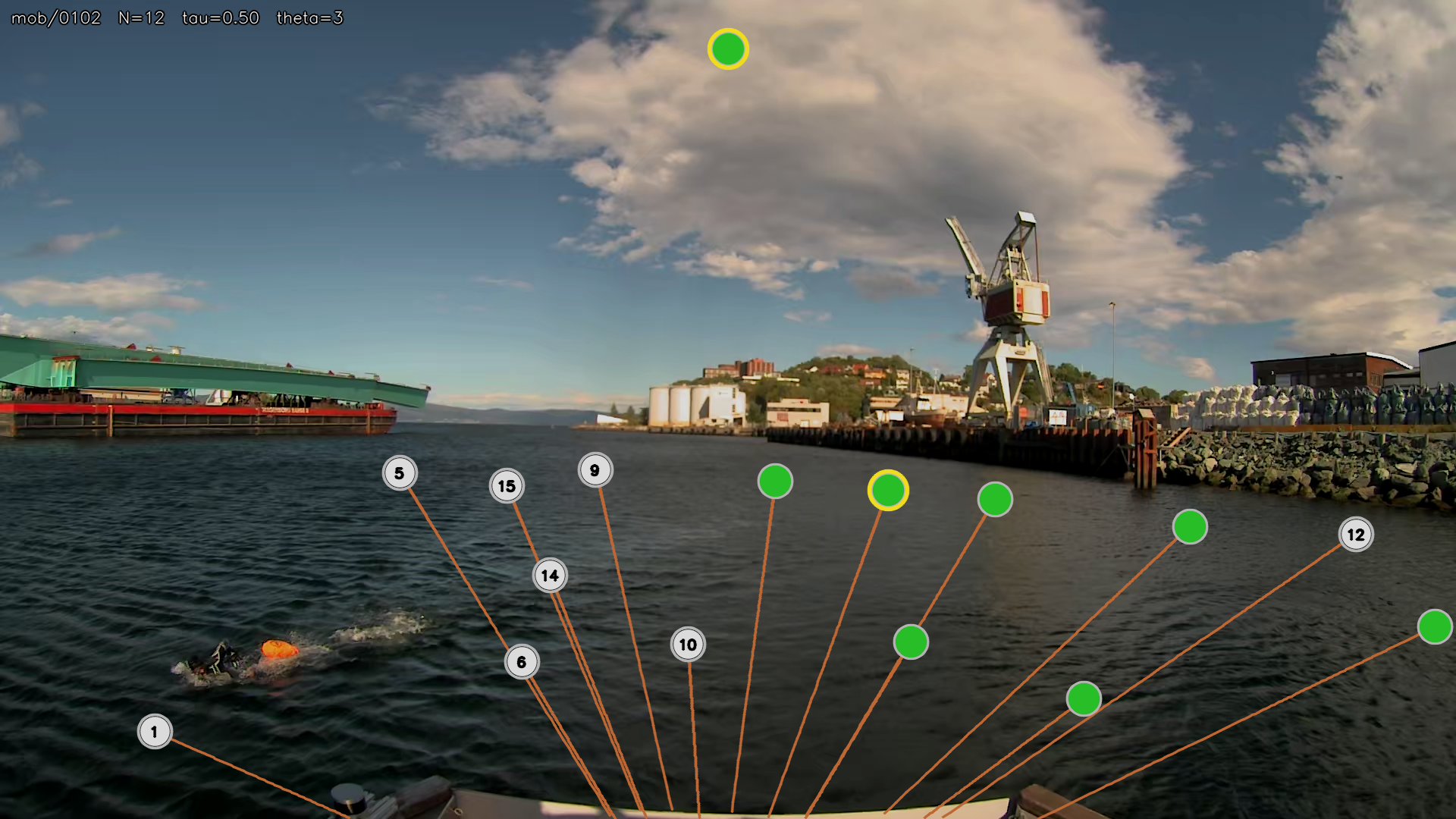

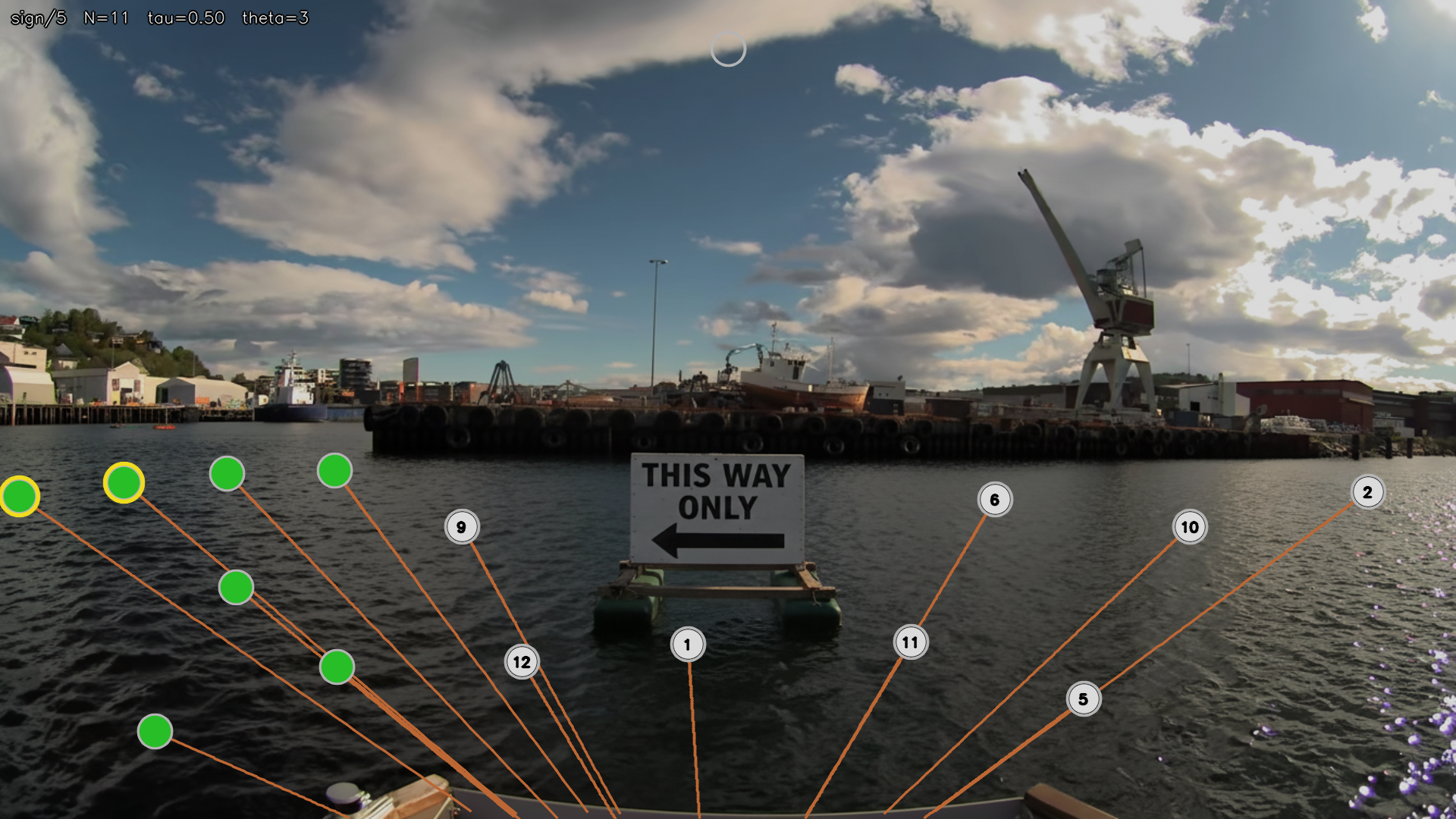

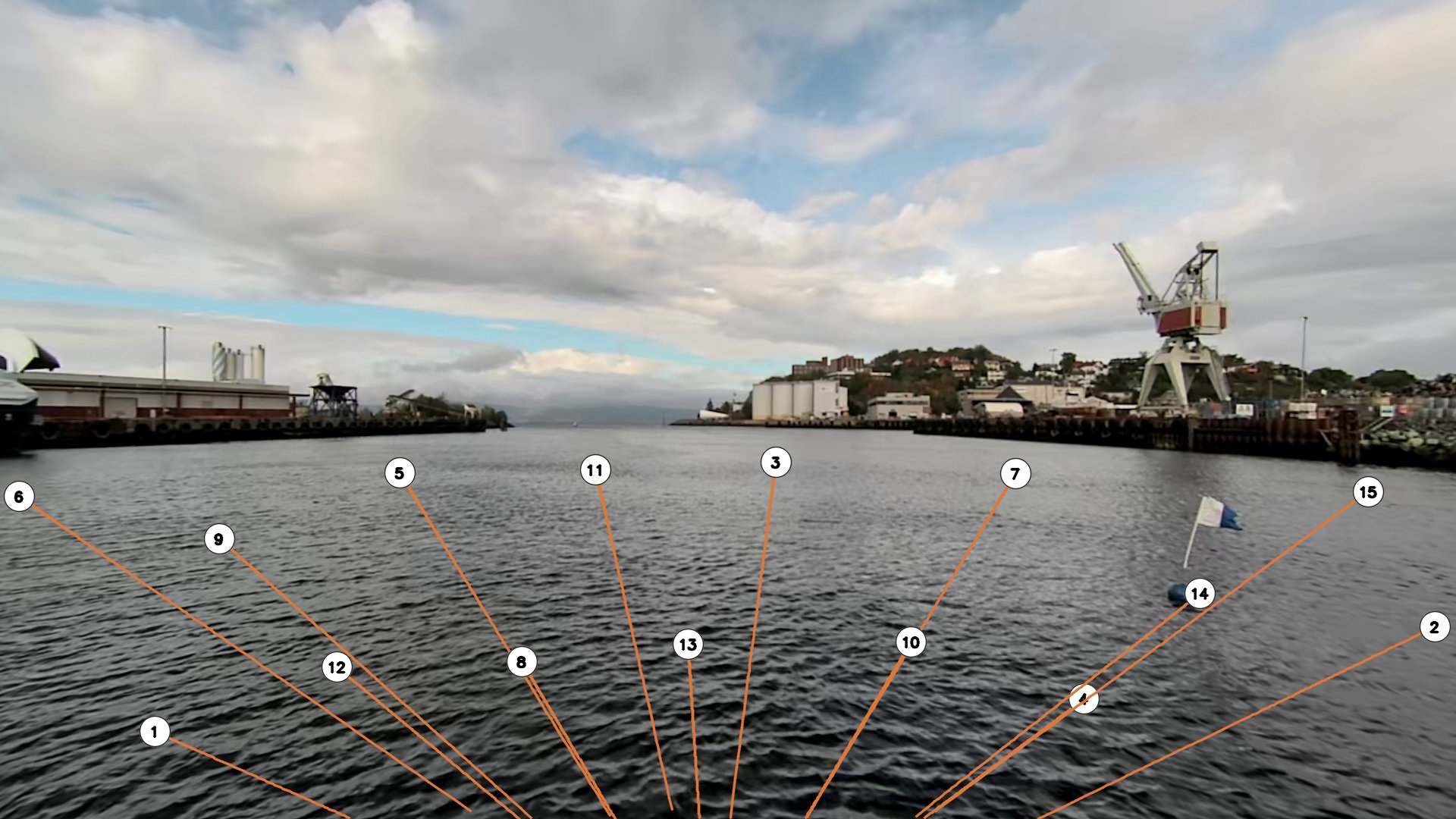

The draft IMO MASS Code requires autonomous and remotely supervised maritime vessels to detect departures from their operational design domain, enter a predefined fallback that notifies the operator, permit immediate human override, and avoid changing the voyage plan without approval. Meeting these obligations in the alert-to-takeover gap calls for a short-horizon, human-overridable fallback maneuver. Classical maritime autonomy stacks struggle when the correct action depends on meaning (e.g., diver-down flag means people in the water, fire close by means hazard). We argue (i) that vision-language models (VLMs) provide semantic awareness for such out-of-distribution situations, and (ii) that a fast-slow anomaly pipeline with a short-horizon, human-overridable fallback maneuver makes this practical in the handover window. We introduce Semantic Lookout, a camera-only, candidate-constrained VLM fallback maneuver selector that selects one cautious action (or station-keeping) from water-valid, world-anchored trajectories under continuous human authority. On 40 harbor scenes we measure per-call scene understanding and latency, alignment with human consensus (model majority-of-three voting), short-horizon risk-relief on fire hazard scenes, and an on-water alert → fallback maneuver → operator handover. Sub-10 s models retain most of the awareness of slower state-of-the-art models. The fallback maneuver selector outperforms geometry-only baselines and increases standoff distance on fire scenes. A field run verifies end-to-end operation. These results support VLMs as semantic fallback maneuver selectors compatible with the draft IMO MASS Code, within practical latency budgets, and motivate future work on domain-adapted, hybrid autonomy that pairs foundation-model semantics with multi-sensor bird's-eye-view perception and short-horizon replanning. Website: kimachristensen.github.io/bridge_policy

💡 Summary & Analysis

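1. **Problem:** The draft IMO MASS Code requires autonomous and remotely supervised vessels to detect when they leave their operational design domain, enter a predefined fallback that alerts the operator, and allow immediate human override. Classical autonomy stacks struggle when the right action depends on meaning, such as a diver-down flag signaling people in the water or a nearby fire signaling a hazard. Think of it like a driver-assist system that can follow the lane but cannot understand that a raised hand at a crossing means "stop."
2. **Semantic Lookout:** The paper introduces a camera-only, candidate-constrained VLM fallback maneuver selector. Instead of issuing free-form commands, it picks one cautious action (or station-keeping) from a set of water-valid, world-anchored candidate trajectories, while the human operator keeps authority throughout. Imagine a lookout who cannot take the helm but can point to one of several pre-checked safe options.
3. **Experimental Validation:** On 40 harbor scenes, models that respond in under 10 s retain most of the scene awareness of slower state-of-the-art models; the selector outperforms geometry-only baselines and increases standoff distance on fire scenes; and an on-water field run confirms the full alert → fallback maneuver → operator handover loop, much like a sea trial that checks a system end to end on real water.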
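The candidate-constrained selection idea can be illustrated with a minimal Python sketch. This is not the authors' implementation: the `Candidate` type, `select_fallback`, the `water_mask` callable, the `STATION_KEEP` label, and the rule of holding position when three VLM calls produce no strict majority are all hypothetical assumptions (the abstract mentions majority-of-three voting as an evaluation device, and reusing it as a selection rule here is our choice). The sketch shows the shape of the idea: the VLM may only choose among pre-filtered, water-valid trajectories, an operator command always wins, and station-keeping is the default when answers disagree or point to an invalid path.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass
from typing import Callable, Optional, Sequence

# Hypothetical label for the always-available "hold position" action.
STATION_KEEP = "station_keep"


@dataclass(frozen=True)
class Candidate:
    """Hypothetical candidate maneuver: world-anchored (x, y) waypoints in metres."""
    name: str
    waypoints: tuple[tuple[float, float], ...]


def is_water_valid(c: Candidate, water_mask: Callable[[float, float], bool]) -> bool:
    # Simplification: only the waypoints are checked, not the segments between them.
    return all(water_mask(x, y) for x, y in c.waypoints)


def select_fallback(
    candidates: Sequence[Candidate],
    water_mask: Callable[[float, float], bool],
    vlm_votes: Sequence[str],
    operator_override: Optional[str] = None,
) -> str:
    """Pick one fallback maneuver name from water-valid candidates (assumed rule)."""
    # The human operator keeps authority: an override always wins.
    if operator_override is not None:
        return operator_override
    allowed = {c.name for c in candidates if is_water_valid(c, water_mask)}
    allowed.add(STATION_KEEP)
    # Ignore votes for maneuvers that were never offered or are not water-valid.
    counts = Counter(v for v in vlm_votes if v in allowed)
    if not counts:
        return STATION_KEEP
    name, n = counts.most_common(1)[0]
    # Require a strict majority of all calls (e.g. 2 of 3); otherwise hold position.
    if 2 * n <= len(vlm_votes):
        return STATION_KEEP
    return name


# Toy harbor: everything is water except a pier at 0 <= x <= 10, 20 <= y <= 30.
def water(x: float, y: float) -> bool:
    return not (0 <= x <= 10 and 20 <= y <= 30)


cands = [
    Candidate("turn_port", ((0.0, 0.0), (-10.0, 15.0))),
    Candidate("ahead_slow", ((0.0, 0.0), (5.0, 25.0))),  # ends on the pier
]

print(select_fallback(cands, water, ["turn_port", "turn_port", "ahead_slow"]))  # turn_port
print(select_fallback(cands, water, ["ahead_slow", "ahead_slow", "turn_port"]))  # station_keep
print(select_fallback(cands, water, ["turn_port"] * 3, operator_override="manual"))  # manual
```

Restricting the model to a menu of pre-validated candidates keeps it from proposing a maneuver that runs the vessel onto a pier, even if the model misreads the scene. The waypoint-only water check is a deliberate simplification; a practical system would validate each full path segment.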

📄 Full Paper Content (ArXiv Source)

📊 Paper Figures