Peer-Review Inspired LLM Ensemble Scoring and Selecting Excellence

📝 Original Paper Info

- Title: Scoring, Reasoning, and Selecting the Best! Ensembling Large Language Models via a Peer-Review Process

- ArXiv ID: 2512.23213

- Date: 2025-12-29

- Authors: Zhijun Chen, Zeyu Ji, Qianren Mao, Junhang Cheng, Bangjie Qin, Hao Wu, Zhuoran Li, Jingzheng Li, Kai Sun, Zizhe Wang, Yikun Ban, Zhu Sun, Xiangyang Ji, Hailong Sun

📝 Abstract

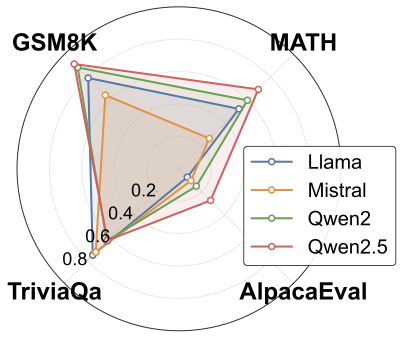

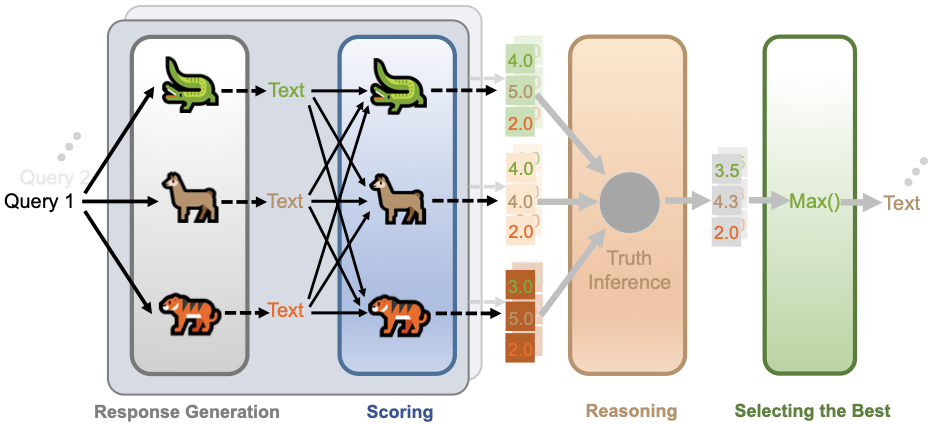

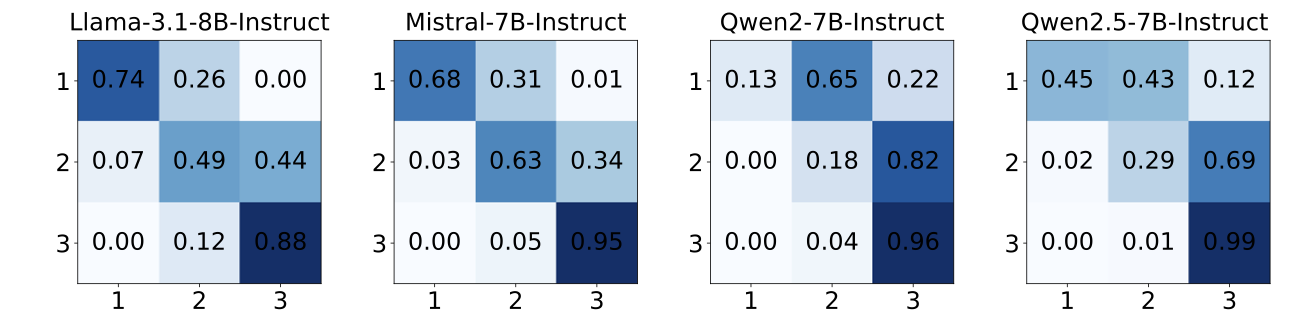

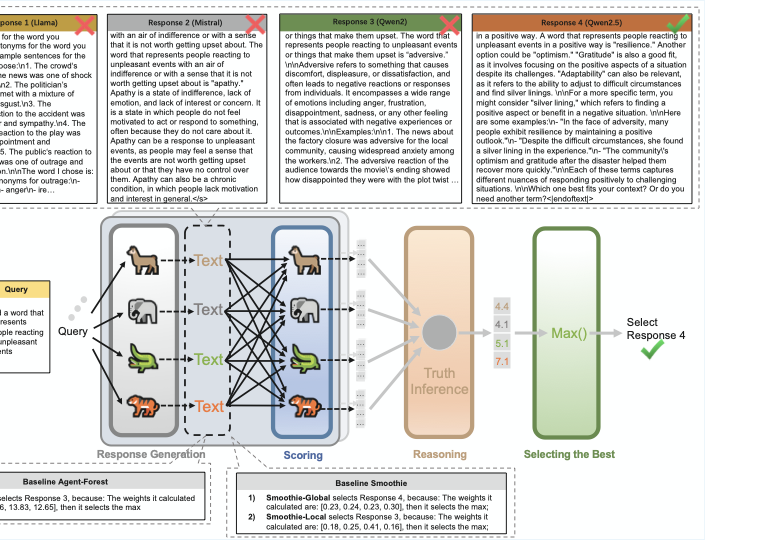

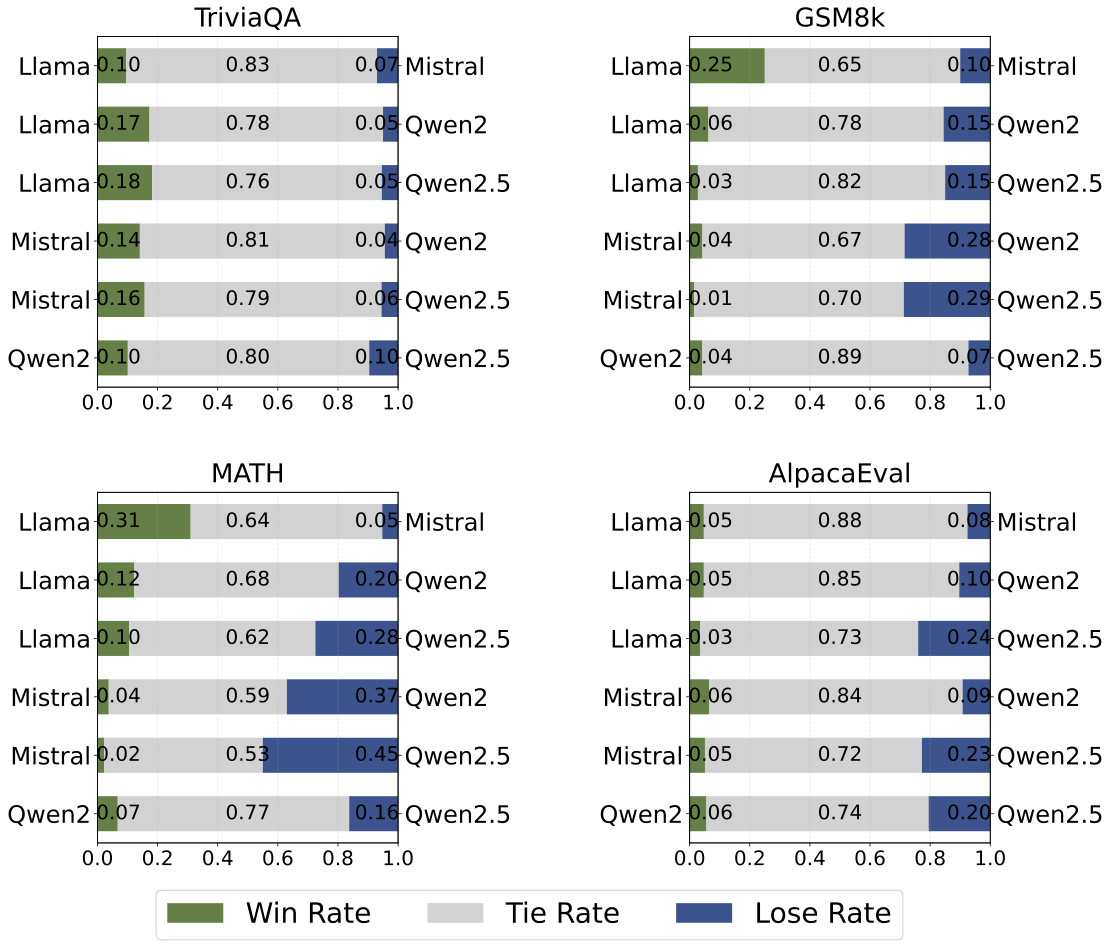

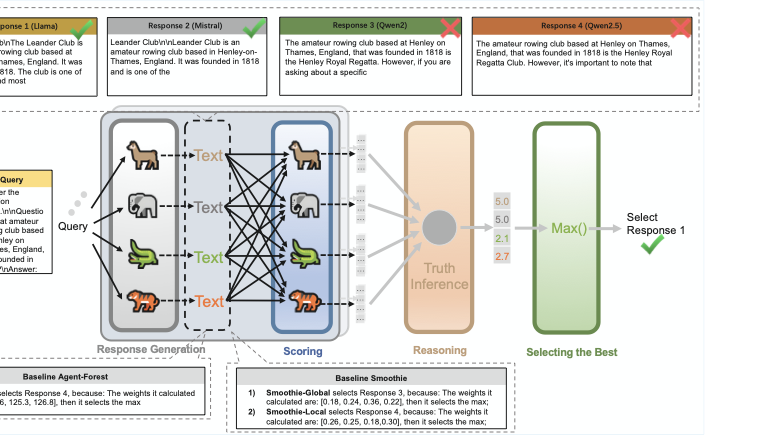

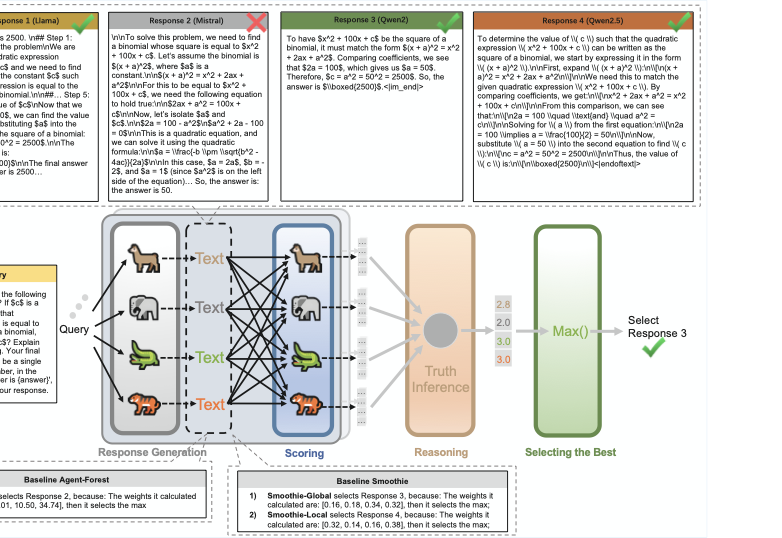

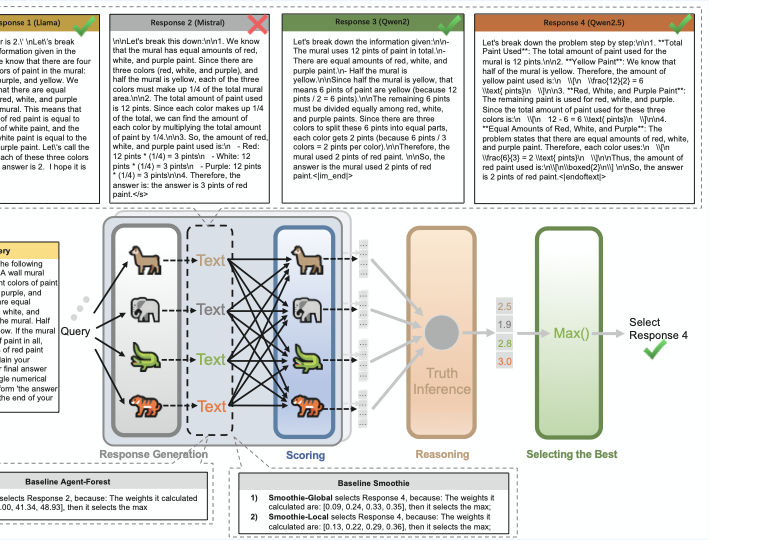

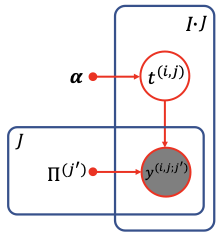

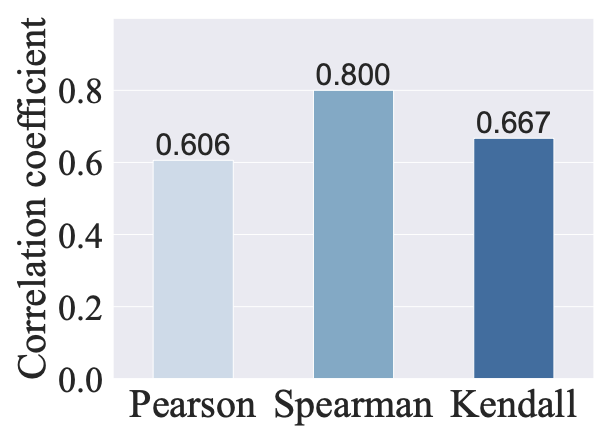

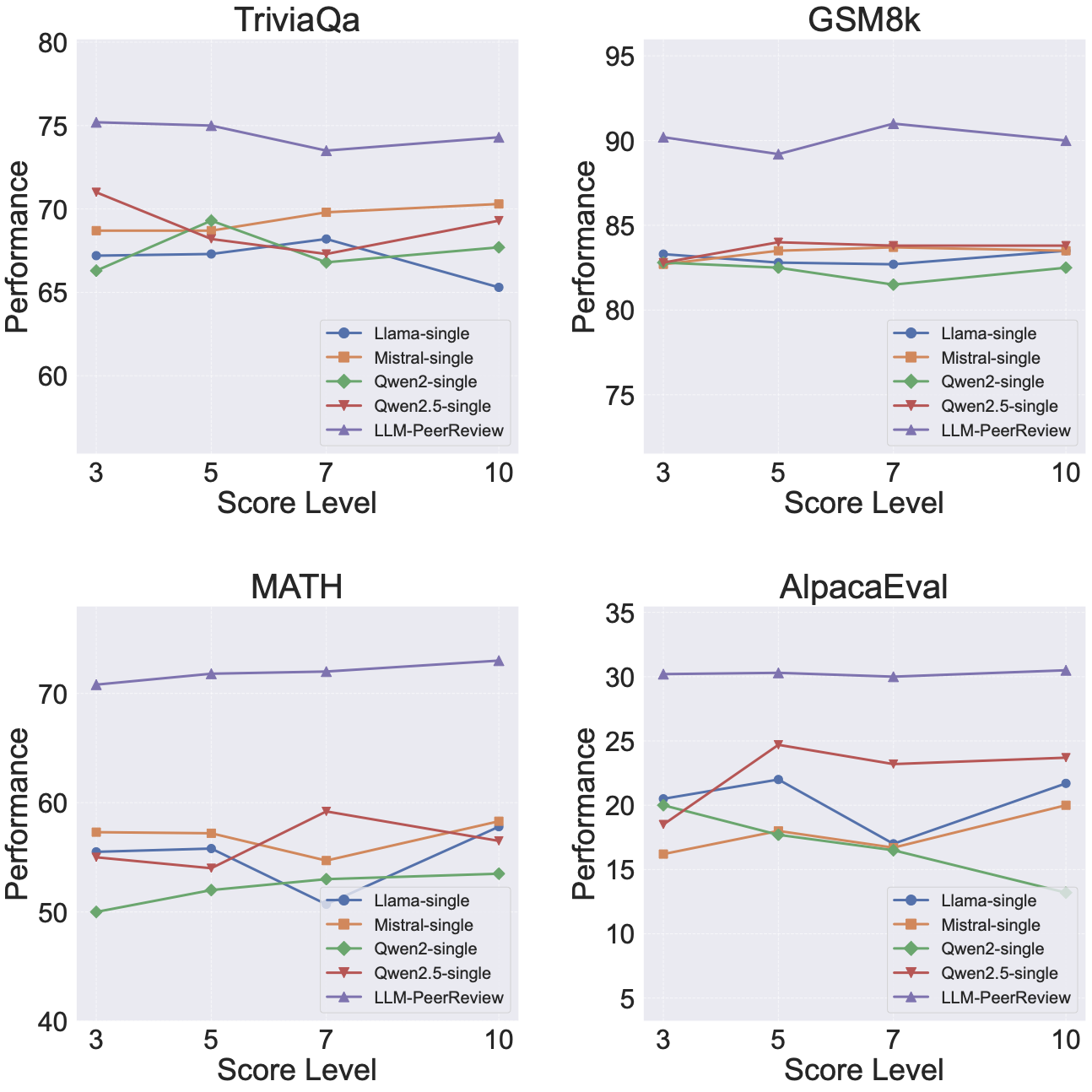

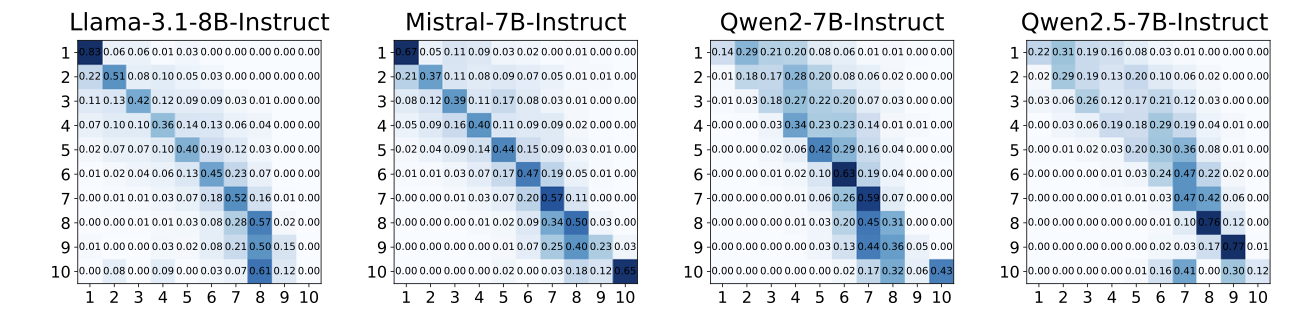

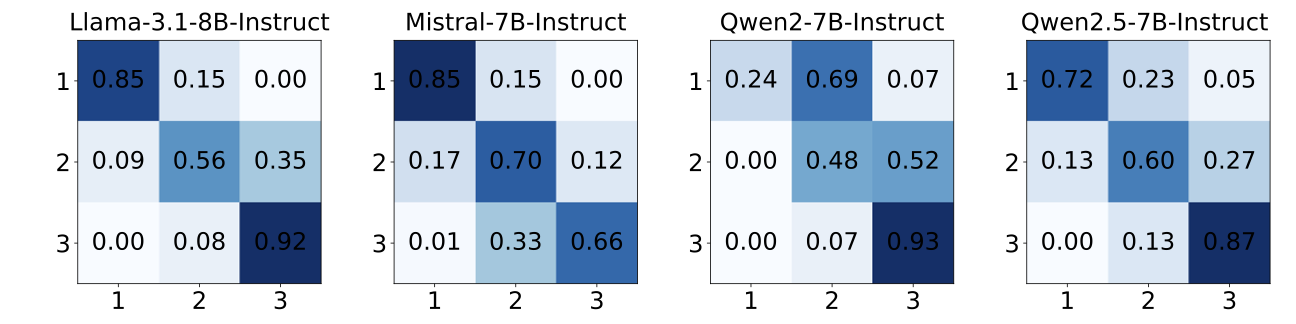

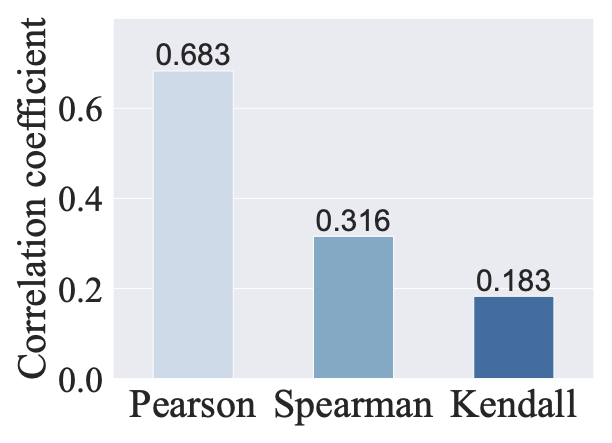

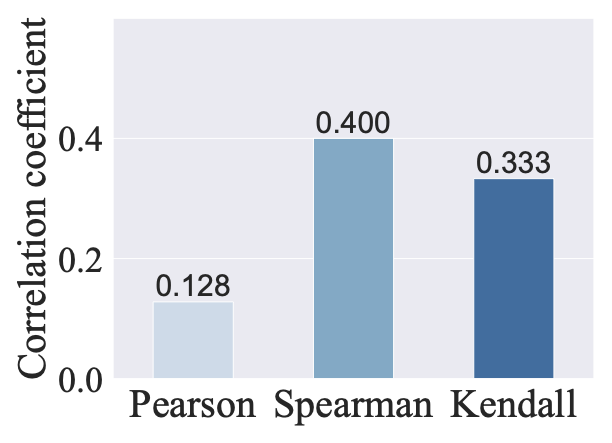

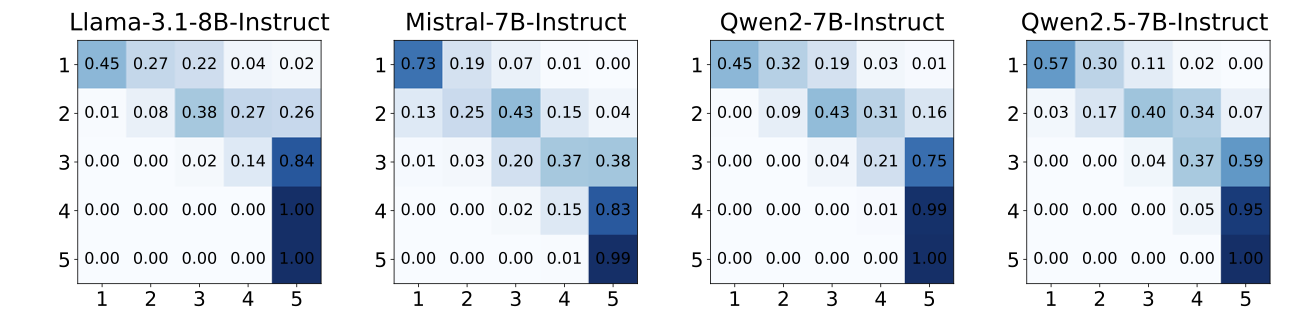

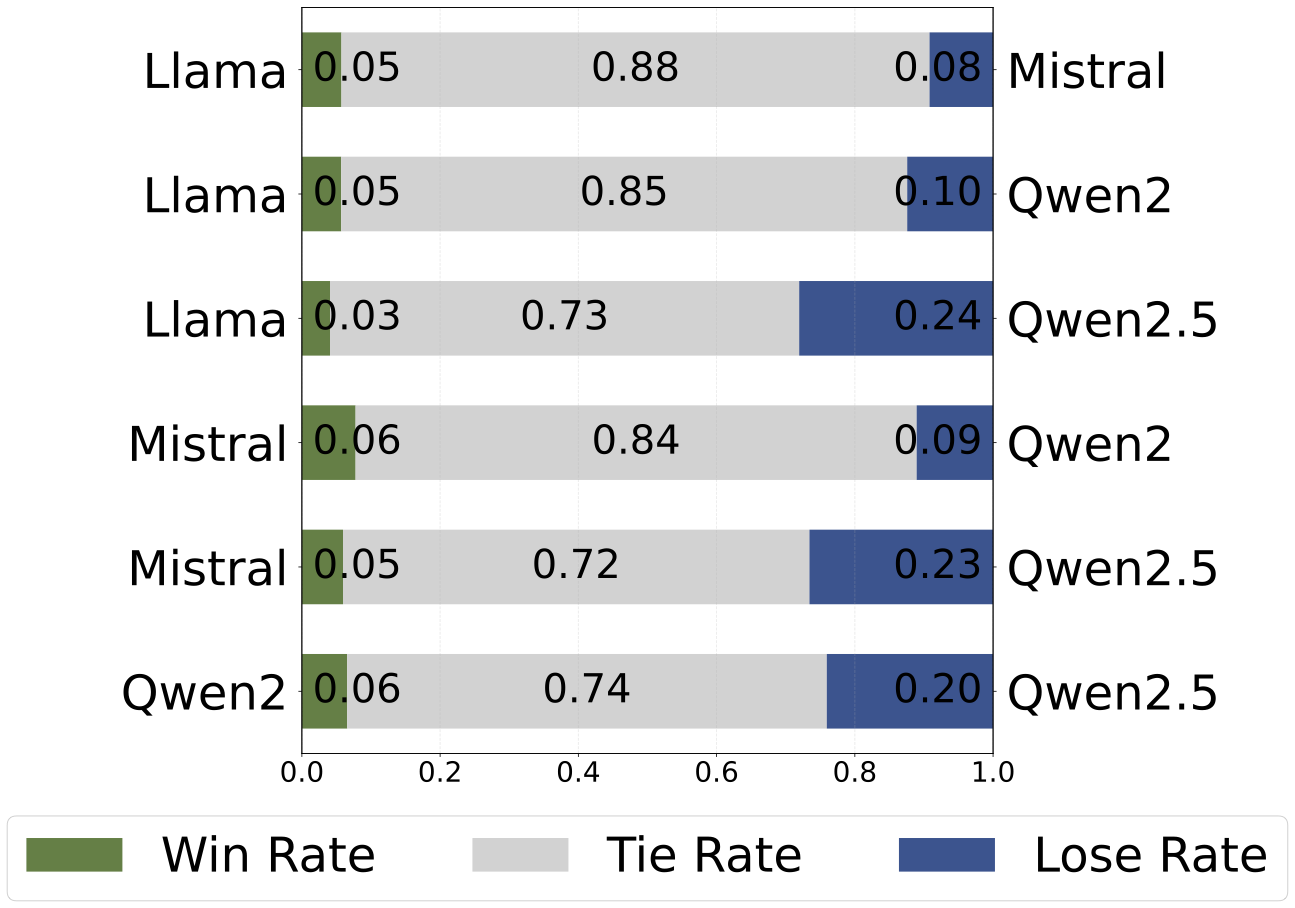

We propose LLM-PeerReview, an unsupervised LLM ensemble method that selects the best response from multiple LLM-generated candidates for each query, harnessing the collective wisdom of multiple models with diverse strengths. LLM-PeerReview is built on a novel, peer-review-inspired framework that offers a clear and interpretable mechanism while remaining fully unsupervised for flexible adaptability and generalization. Specifically, it operates in three stages. For scoring, we use the emerging LLM-as-a-Judge technique to evaluate each response by reusing the multiple LLMs at hand. For reasoning, we apply either a principled graphical-model-based truth inference algorithm or a straightforward averaging strategy to aggregate the multiple scores into a final score for each response. Finally, the highest-scoring response is selected as the ensemble output. LLM-PeerReview is conceptually simple and empirically powerful. The two variants of the proposed approach obtain strong results across four datasets, including outperforming the recent advanced model Smoothie-Global by 6.9 and 7.3 percentage points, respectively.

💡 Summary & Analysis
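The three stages described in the abstract (scoring, reasoning, selecting) can be illustrated with a minimal Python sketch. It covers only the straightforward averaging variant; the graphical-model truth inference variant is not shown. All names here (`Judge`, `peer_review_select`, the toy judges) are hypothetical and do not come from the paper, and the judges are plain functions standing in for the LLM-as-a-Judge calls.

```python
from statistics import mean
from typing import Callable, List, Sequence, Tuple

# Hypothetical judge signature: (query, response) -> numeric score.
# In the paper's setting each judge would be an LLM prompted to grade a response.
Judge = Callable[[str, str], float]


def peer_review_select(
    query: str, responses: Sequence[str], judges: Sequence[Judge]
) -> Tuple[int, List[float]]:
    """Return the index of the top-scoring response and all aggregated scores."""
    if not responses or not judges:
        raise ValueError("need at least one response and one judge")
    # Stage 1 (scoring): every judge scores every candidate response.
    score_matrix = [[judge(query, r) for judge in judges] for r in responses]
    # Stage 2 (reasoning): aggregate each response's scores by simple averaging.
    final_scores = [mean(row) for row in score_matrix]
    # Stage 3 (selecting): pick the response with the highest aggregated score.
    best = max(range(len(responses)), key=final_scores.__getitem__)
    return best, final_scores


# Toy stand-in judges, for illustration only.
def length_judge(query: str, response: str) -> float:
    # Rewards longer answers, capped at 5 points.
    return float(min(len(response.split()), 5))


def keyword_judge(query: str, response: str) -> float:
    # Rewards answers that mention the expected keyword.
    return 5.0 if "Paris" in response else 1.0


candidates = ["Paris", "The capital of France is Paris.", "It is Lyon."]
best_index, scores = peer_review_select(
    "What is the capital of France?", candidates, [length_judge, keyword_judge]
)
```

In this toy run the second candidate is selected because it has the highest average across both judges. Averaging treats every judge as equally reliable; weighting judges by estimated reliability is presumably what the truth inference variant addresses.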

1. **Contribution 1: Peer-Review-Inspired Ensemble Framework** - The paper proposes LLM-PeerReview, an unsupervised LLM ensemble method that selects the best response from multiple LLM-generated candidates for each query through a clear and interpretable scoring, reasoning, and selecting process.
2. **Contribution 2: Two Score-Aggregation Variants** - Scores produced by multiple LLM judges are aggregated either with a principled graphical-model-based truth inference algorithm or with a straightforward averaging strategy, yielding two variants of the method.
3. **Contribution 3: Strong Empirical Results** - Both variants obtain strong results across four datasets, outperforming the recent Smoothie-Global model by 6.9 and 7.3 percentage points, respectively.

📄 Full Paper Content (ArXiv Source)

📊 Paper Figures