Holi-DETR: Contextual Holistic Fashion Detection Transformer

📝 Original Paper Info

- Title: Holi-DETR: Holistic Fashion Item Detection Leveraging Contextual Information

- ArXiv ID: 2512.23221

- Date: 2025-12-29

- Authors: Youngchae Kwon, Jinyoung Choi, Injung Kim

📝 Abstract

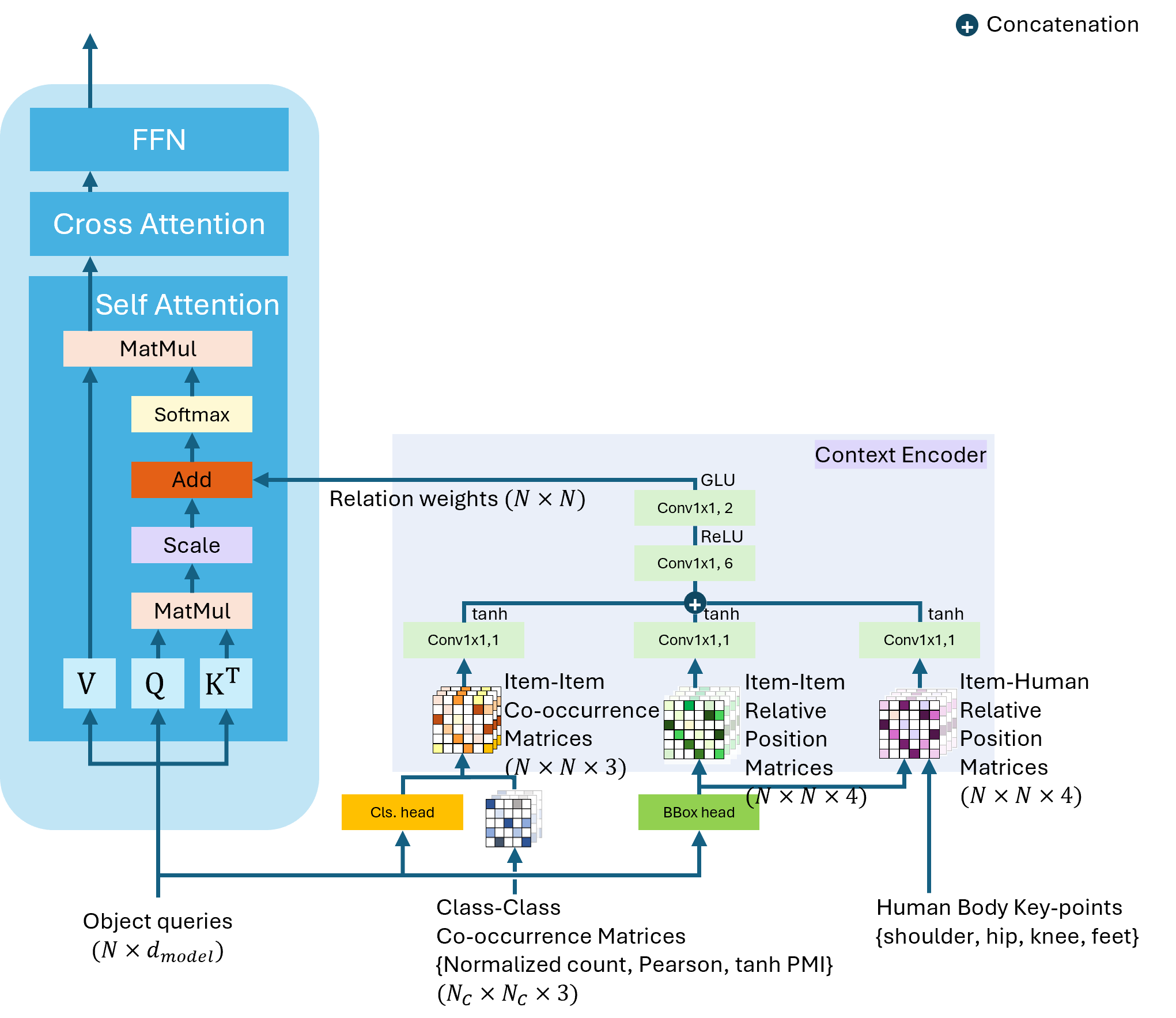

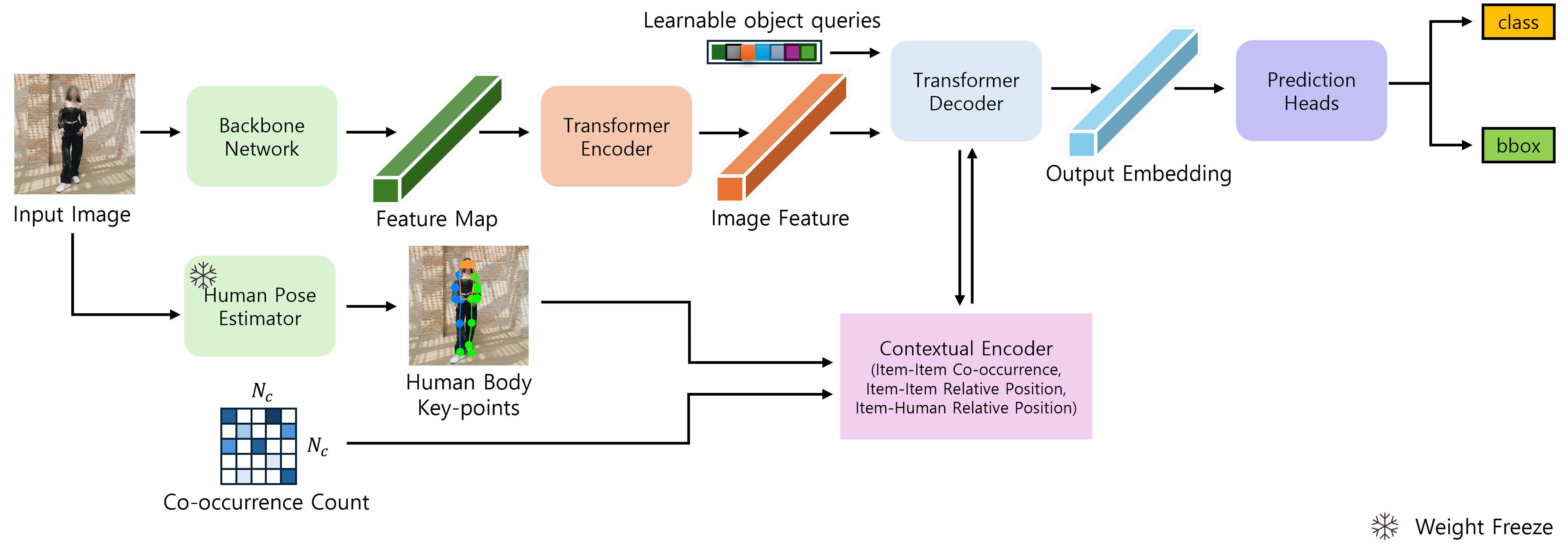

Fashion item detection is challenging due to the ambiguities introduced by the highly diverse appearances of fashion items and the similarities among item subcategories. To address this challenge, we propose a novel Holistic Detection Transformer (Holi-DETR) that detects fashion items in outfit images holistically, by leveraging contextual information. Fashion items often have meaningful relationships as they are combined to create specific styles. Unlike conventional detectors that detect each item independently, Holi-DETR detects multiple items while reducing ambiguities by leveraging three distinct types of contextual information: (1) the co-occurrence relationship between fashion items, (2) the relative position and size based on inter-item spatial arrangements, and (3) the spatial relationships between items and human body key-points. To this end, we propose a novel architecture that integrates these three types of heterogeneous contextual information into the Detection Transformer (DETR) and its subsequent models. In experiments, the proposed methods improved the performance of the vanilla DETR and the more recently developed Co-DETR by 3.6 percentage points (pp) and 1.1 pp, respectively, in terms of average precision (AP).

💡 Summary & Analysis
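The three kinds of context listed in the abstract can be made concrete with simple statistical and geometric features. The sketch below is a minimal, stdlib-only Python illustration under stated assumptions, not Holi-DETR's implementation. It assumes a hypothetical annotation format (a list of `(category, box)` pairs per image, with boxes as `(x_min, y_min, x_max, y_max)`), models co-occurrence as the conditional probability P(b | a) estimated from image-level counts, encodes relative position and size as center offsets normalized by a reference box plus log size ratios, and encodes item/body relations as normalized offsets from a box center to named key-points. All function names and the toy data are hypothetical. The paper integrates its context into the DETR architecture itself, which this sketch does not attempt to reproduce.

```python
from math import log

# Hypothetical annotation format (an assumption, not the paper's):
# each image is a list of (category, box) pairs, box = (x_min, y_min, x_max, y_max).


def center_size(box):
    """Convert (x_min, y_min, x_max, y_max) to (cx, cy, w, h); reject empty boxes."""
    x0, y0, x1, y1 = box
    w, h = x1 - x0, y1 - y0
    if w <= 0 or h <= 0:
        raise ValueError(f"degenerate box: {box}")
    return (x0 + x1) / 2, (y0 + y1) / 2, w, h


def cooccurrence_prob(images, categories):
    """(1) Co-occurrence: estimate P(b present | a present) from image-level counts.
    Every category appearing in `images` must be listed in `categories`."""
    count = {c: 0 for c in categories}
    pair = {(a, b): 0 for a in categories for b in categories if a != b}
    for items in images:
        present = {cat for cat, _ in items}  # count each category once per image
        for a in present:
            count[a] += 1
            for b in present:
                if a != b:
                    pair[(a, b)] += 1
    return {(a, b): (n / count[a] if count[a] else 0.0) for (a, b), n in pair.items()}


def relative_box(ref_box, other_box):
    """(2) Relative position and size of other_box with respect to ref_box:
    center offsets normalized by the reference size, plus log width/height ratios."""
    xr, yr, wr, hr = center_size(ref_box)
    xo, yo, wo, ho = center_size(other_box)
    return ((xo - xr) / wr, (yo - yr) / hr, log(wo / wr), log(ho / hr))


def keypoint_offsets(box, keypoints):
    """(3) Item/body relation: offset from the box center to each named key-point,
    normalized by the box width and height."""
    cx, cy, w, h = center_size(box)
    return {name: ((kx - cx) / w, (ky - cy) / h) for name, (kx, ky) in keypoints.items()}


if __name__ == "__main__":
    # Toy data for illustration only.
    images = [
        [("shirt", (10, 10, 50, 60)), ("pants", (12, 60, 48, 120))],
        [("shirt", (0, 0, 40, 50)), ("skirt", (5, 50, 35, 90))],
        [("dress", (0, 0, 40, 100))],
    ]
    cats = ["shirt", "pants", "skirt", "dress"]
    p = cooccurrence_prob(images, cats)
    print(p[("shirt", "pants")])  # 0.5: pants appear in 1 of the 2 images with a shirt
    print(p[("pants", "shirt")])  # 1.0: every image with pants also has a shirt
    print(relative_box((10, 10, 50, 60), (12, 60, 48, 120)))
    print(keypoint_offsets((12, 60, 48, 120), {"left_hip": (20, 60), "right_hip": (40, 60)}))
```

The conditional form is asymmetric (in the toy data, P(shirt | pants) = 1.0 while P(pants | shirt) = 0.5), which is one reason to prefer it over a raw pair count; the abstract does not specify which co-occurrence statistic Holi-DETR actually uses.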

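1. **3 Key Contributions**:
   - Proposes Holi-DETR, which detects the fashion items in an outfit image holistically instead of detecting each item independently.
   - Integrates three heterogeneous types of contextual information into DETR and its successors: co-occurrence between fashion items, relative position and size from inter-item spatial arrangements, and spatial relationships between items and human body key-points.
   - Improves AP over vanilla DETR by 3.6 pp and over Co-DETR by 1.1 pp.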

Simple Explanation with Metaphors:

- Conventional detectors inspect each garment in isolation; Holi-DETR works more like a stylist who judges each piece in the context of the whole outfit.

- Body key-points act like anchors on a mannequin: knowing where the hips or ankles are narrows down what an item near them is likely to be.

Sci-Tube Style Script:

- “Fashion items rarely come alone! By reading the whole outfit (which items appear together, where they sit relative to each other, and where they sit on the body), Holi-DETR lifts vanilla DETR's AP by 3.6 percentage points.”

📄 Full Paper Content (ArXiv Source)

📊 Paper Figures