PINNs Revolutionize Electromagnetic Wave Modeling

📝 Original Paper Info

- Title: PINNs for Electromagnetic Wave Propagation

- ArXiv ID: 2512.23396

- Date: 2025-12-29

- Authors: Nilufer K. Bulut

📝 Abstract

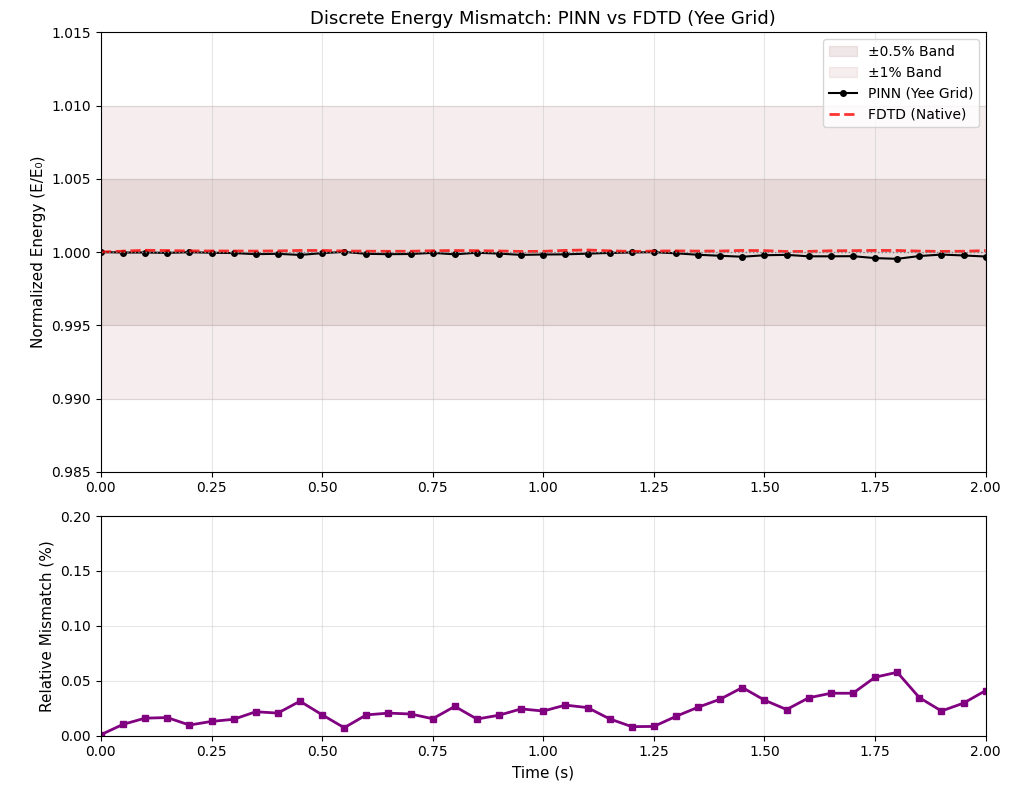

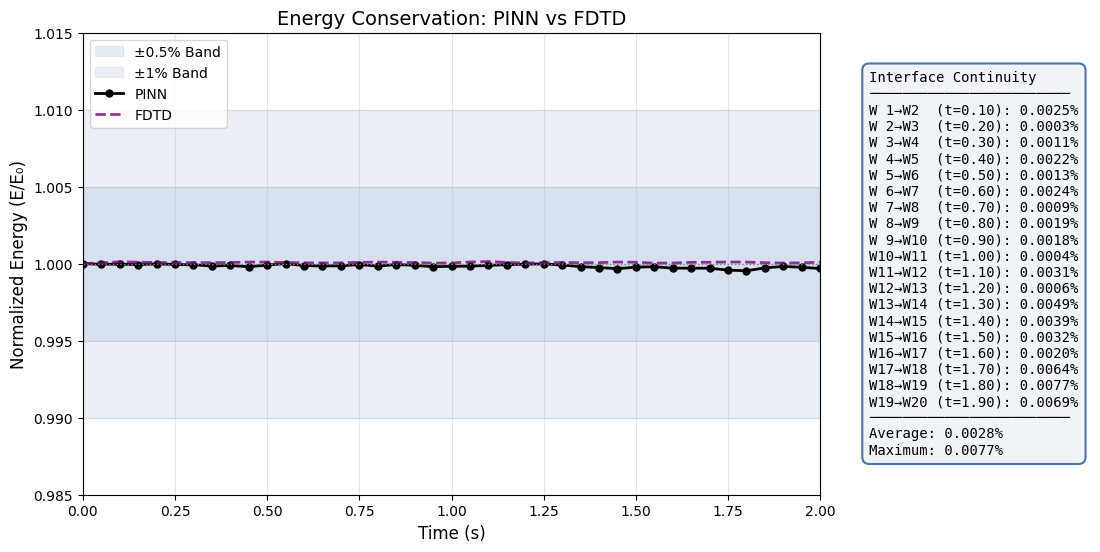

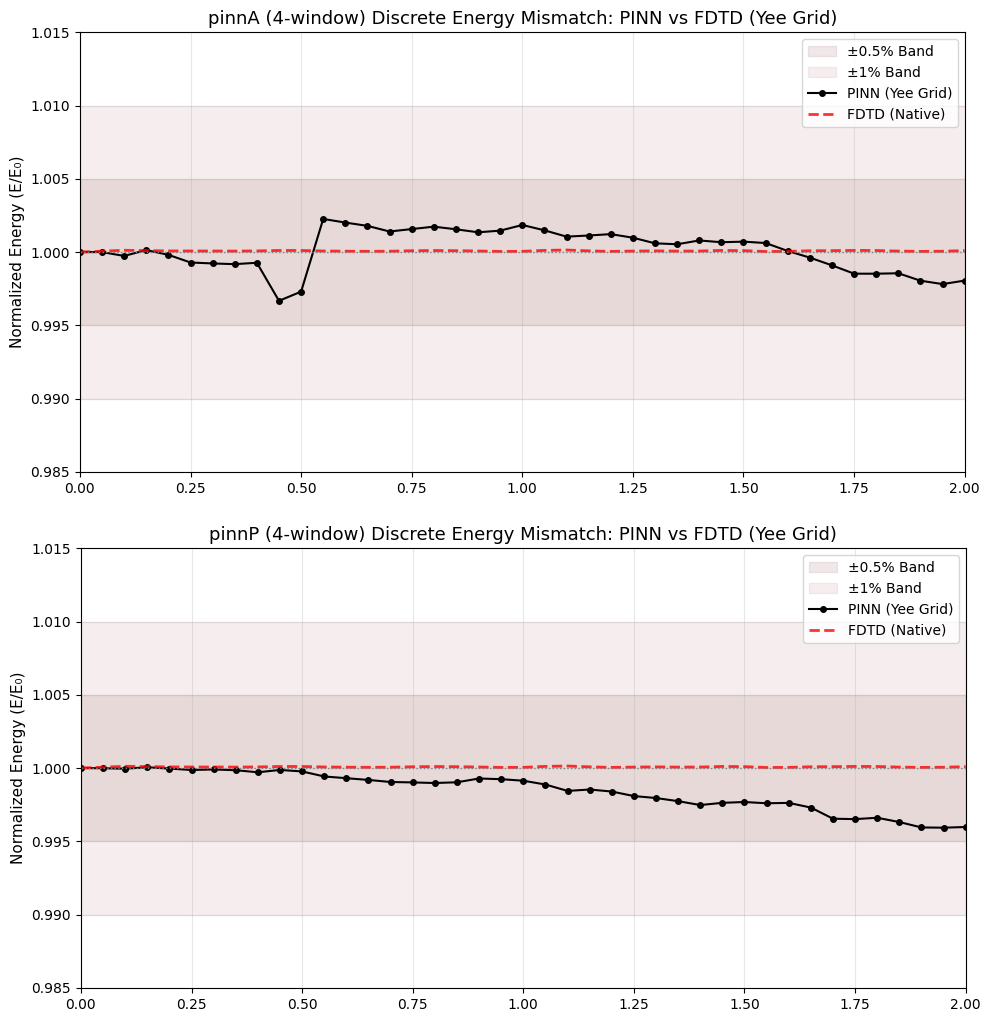

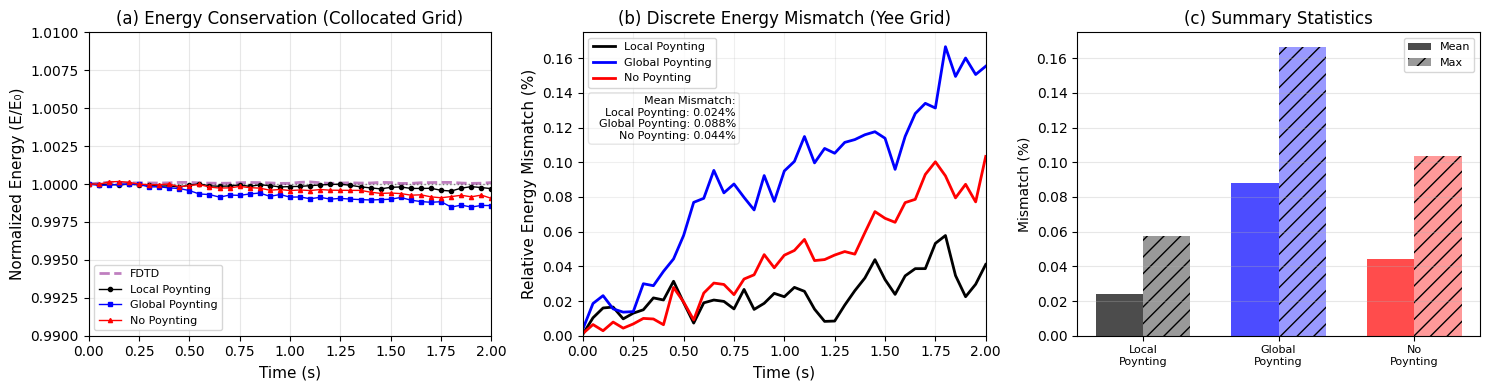

Physics-Informed Neural Networks (PINNs) are a methodology for solving physical systems by embedding PDE constraints directly into the neural network training process. In electromagnetism, well-established methods such as FDTD and FEM already exist, so a new methodology must offer clear advantages to be accepted. Despite their mesh-free nature and applicability to inverse problems, PINNs can fall short of FDTD solutions in accuracy and energy metrics. This study demonstrates that hybrid training strategies can bring PINNs closer to FDTD-level accuracy and energy consistency, and presents a hybrid methodology that addresses common challenges in wave propagation scenarios. The causality collapse problem in time-dependent PINN training is addressed via time marching and causality-aware weighting. To mitigate the discontinuities introduced by time marching, a two-stage interface continuity loss is applied. To suppress loss accumulation, which manifests as cumulative energy drift in electromagnetic waves, a local Poynting-based regularizer has been developed. The resulting PINN model achieves high field accuracy, with an average NRMSE of 0.09% and an average $L^2$ error of 1.01% over time. Energy conservation is achieved on the PINN side with a relative energy mismatch of only 0.024% in the 2D PEC cavity scenario. Training uses no labeled field data, only physics-based residual losses; FDTD is used solely for post-training evaluation. The results demonstrate that PINNs can achieve results competitive with FDTD on canonical electromagnetic examples and are a viable alternative.

💡 Summary & Analysis
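The causality-aware weighting mentioned in the abstract can be illustrated with a minimal Python sketch. It assumes one common form of causal weighting: the residual loss of each time slice is scaled by $\exp(-\epsilon \sum_{k<i} L_k)$, the exponential of the accumulated losses of all earlier slices. The abstract does not give the paper's exact formula, and the names `causal_weights`, `causal_residual_loss`, and `epsilon` are hypothetical.

```python
import math
from typing import List, Sequence


def causal_weights(slice_losses: Sequence[float], epsilon: float = 1.0) -> List[float]:
    """Weight of time slice i is exp(-epsilon * sum of the losses of slices 0..i-1).

    The first slice always gets weight 1.0. Later slices stay down-weighted
    until the residuals at earlier times have been driven down. In an autodiff
    framework these weights would be treated as constants (no gradient flows
    through them).
    """
    weights: List[float] = []
    cumulative = 0.0
    for loss in slice_losses:
        weights.append(math.exp(-epsilon * cumulative))
        cumulative += loss
    return weights


def causal_residual_loss(slice_losses: Sequence[float], epsilon: float = 1.0) -> float:
    """Mean of the per-slice residual losses, each scaled by its causal weight."""
    weights = causal_weights(slice_losses, epsilon)
    return sum(w * loss for w, loss in zip(weights, slice_losses)) / len(slice_losses)


if __name__ == "__main__":
    # Large early-time residuals suppress the weights of later slices.
    print(causal_weights([2.0, 1.0, 0.5, 0.1]))
    # Once early residuals are small, later slices are effectively switched on.
    print(causal_weights([0.01, 0.01, 0.01, 0.01]))
```

With $\epsilon = 1$ and slice losses of 2.0, 1.0, 0.5, and 0.1, the weights are $1, e^{-2}, e^{-3}, e^{-3.5}$. The optimizer therefore concentrates on early times until their residuals fall.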
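Time marching and an interface continuity penalty can be sketched in the same spirit. This sketch is built on assumptions. Each time window is modeled by its own callable, and the continuity term is a plain mean-squared mismatch of all field components at the shared boundary time. The abstract calls the paper's loss two-stage but does not describe the stages, so only a single value-matching term is shown. The names `time_windows`, `interface_continuity_loss`, and `FieldModel` are hypothetical.

```python
from typing import Callable, List, Sequence, Tuple

# A field model maps (x, y, t) to a tuple of field components, e.g. (Ez, Hx, Hy).
FieldModel = Callable[[float, float, float], Tuple[float, ...]]


def time_windows(t_end: float, n_windows: int) -> List[Tuple[float, float]]:
    """Split [0, t_end] into n_windows consecutive windows of equal length."""
    dt = t_end / n_windows
    return [(k * dt, (k + 1) * dt) for k in range(n_windows)]


def interface_continuity_loss(
    prev_model: FieldModel,
    next_model: FieldModel,
    points: Sequence[Tuple[float, float]],
    t_interface: float,
) -> float:
    """Mean squared mismatch of every field component at the shared boundary time."""
    total = 0.0
    count = 0
    for x, y in points:
        prev_fields = prev_model(x, y, t_interface)
        next_fields = next_model(x, y, t_interface)
        for a, b in zip(prev_fields, next_fields):
            total += (a - b) ** 2
            count += 1
    return total / count


if __name__ == "__main__":
    windows = time_windows(1.0, 4)
    print(windows)  # four consecutive windows of length 0.25

    # Hypothetical models for windows 0 and 1 that disagree by 0.1 in one component.
    def prev_model(x: float, y: float, t: float) -> Tuple[float, float]:
        return (x + t, y)

    def next_model(x: float, y: float, t: float) -> Tuple[float, float]:
        return (x + t + 0.1, y)

    boundary = windows[0][1]
    pts = [(0.1 * i, 0.2) for i in range(5)]
    print(interface_continuity_loss(prev_model, next_model, pts, boundary))  # about 0.005
```

In a time-marching loop, the model for window k would typically be trained with this term added to its residual loss. The window k-1 model is held fixed and evaluated at the boundary time `windows[k][0]`.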
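Poynting's theorem motivates a local Poynting-based regularizer. For a lossless, source-free medium it states that $\partial u/\partial t + \nabla\cdot\mathbf{S} = 0$, where $u = \tfrac12(\varepsilon E^2 + \mu H^2)$ and $\mathbf{S} = \mathbf{E}\times\mathbf{H}$. The following sketch makes four assumptions:

- the 2D TMz polarization, with fields Ez, Hx, Hy;
- normalized units with $\varepsilon = \mu = 1$;
- central finite differences in place of the automatic differentiation a PINN would use;
- the exact TM11 mode of a unit-square PEC cavity as a test field.

It is not the paper's regularizer, whose exact form the abstract does not state. All function names are hypothetical.

```python
import math
from typing import Callable, Sequence, Tuple

# 2D TMz polarization: fields are (Ez, Hx, Hy). Normalized units, eps = mu = 1.
Fields = Tuple[float, float, float]
FieldFn = Callable[[float, float, float], Fields]


def tm11_mode(x: float, y: float, t: float) -> Fields:
    """Exact TM11 mode of a unit-square PEC cavity (eps = mu = 1, so c = 1)."""
    kx = ky = math.pi
    omega = math.sqrt(kx**2 + ky**2)
    ez = math.sin(kx * x) * math.sin(ky * y) * math.cos(omega * t)
    hx = -ky / omega * math.sin(kx * x) * math.cos(ky * y) * math.sin(omega * t)
    hy = kx / omega * math.cos(kx * x) * math.sin(ky * y) * math.sin(omega * t)
    return ez, hx, hy


def energy_density(f: Fields) -> float:
    """u = 1/2 (eps*Ez^2 + mu*(Hx^2 + Hy^2)) with eps = mu = 1."""
    ez, hx, hy = f
    return 0.5 * (ez**2 + hx**2 + hy**2)


def poynting_vector(f: Fields) -> Tuple[float, float]:
    """In-plane part of S = E x H for E = (0, 0, Ez), H = (Hx, Hy, 0)."""
    ez, hx, hy = f
    return -ez * hy, ez * hx


def poynting_residual(fields: FieldFn, x: float, y: float, t: float, h: float = 1e-4) -> float:
    """du/dt + dSx/dx + dSy/dy at (x, y, t), via central differences with step h."""
    du_dt = (energy_density(fields(x, y, t + h)) - energy_density(fields(x, y, t - h))) / (2 * h)
    dsx_dx = (poynting_vector(fields(x + h, y, t))[0] - poynting_vector(fields(x - h, y, t))[0]) / (2 * h)
    dsy_dy = (poynting_vector(fields(x, y + h, t))[1] - poynting_vector(fields(x, y - h, t))[1]) / (2 * h)
    return du_dt + dsx_dx + dsy_dy


def poynting_penalty(fields: FieldFn, points: Sequence[Tuple[float, float, float]]) -> float:
    """Mean squared local Poynting residual over collocation points (x, y, t)."""
    return sum(poynting_residual(fields, x, y, t) ** 2 for x, y, t in points) / len(points)


if __name__ == "__main__":
    pts = [(0.3, 0.2, 0.1), (0.7, 0.4, 0.5), (0.5, 0.9, 1.3)]
    print(poynting_penalty(tm11_mode, pts))  # tiny: only finite-difference error remains

    def perturbed(x: float, y: float, t: float) -> Fields:
        """Hypothetical bad prediction: Hx scaled by 10%, violating energy balance."""
        ez, hx, hy = tm11_mode(x, y, t)
        return ez, 1.1 * hx, hy

    print(poynting_residual(perturbed, 0.3, 0.2, 0.1))  # clearly nonzero
```

For the exact mode, the penalty reduces to finite-difference truncation error. Scaling Hx by 10% instead leaves a residual of about 0.06 at (x, y, t) = (0.3, 0.2, 0.1), which is the kind of local energy-balance violation such a term penalizes.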
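The accuracy metrics quoted in the abstract are NRMSE and relative $L^2$ error, both averaged over time. Each has several common definitions, and the abstract does not say which normalization the paper uses. This sketch computes NRMSE normalized by the range of the reference field and a discrete relative $L^2$ error. Both are computed per snapshot on flattened grids and then averaged over time. The function names are hypothetical.

```python
import math
from typing import Callable, Sequence

Metric = Callable[[Sequence[float], Sequence[float]], float]


def nrmse(pred: Sequence[float], ref: Sequence[float]) -> float:
    """Root-mean-square error divided by the range (max - min) of the reference."""
    rmse = math.sqrt(sum((p - r) ** 2 for p, r in zip(pred, ref)) / len(ref))
    return rmse / (max(ref) - min(ref))


def relative_l2(pred: Sequence[float], ref: Sequence[float]) -> float:
    """Discrete relative L2 error ||pred - ref||_2 / ||ref||_2."""
    num = math.sqrt(sum((p - r) ** 2 for p, r in zip(pred, ref)))
    den = math.sqrt(sum(r**2 for r in ref))
    return num / den


def time_averaged(
    metric: Metric,
    pred_snapshots: Sequence[Sequence[float]],
    ref_snapshots: Sequence[Sequence[float]],
) -> float:
    """Apply a per-snapshot metric at each stored time and average the results."""
    values = [metric(p, r) for p, r in zip(pred_snapshots, ref_snapshots)]
    return sum(values) / len(values)


if __name__ == "__main__":
    ref = [0.0, 1.0, 2.0, 3.0]
    pred = [0.1, 1.0, 2.0, 3.0]
    print(nrmse(pred, ref))        # roughly 0.0167 (= 0.05 / 3)
    print(relative_l2(pred, ref))  # roughly 0.0267 (= 0.1 / sqrt(14))
```

Range normalization makes NRMSE insensitive to a constant offset in the reference, whereas relative $L^2$ is scaled by the field's overall magnitude. The two can therefore differ noticeably for the same prediction.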
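A relative energy mismatch can be checked on a field whose energy is known. This sketch assumes the 2D TMz polarization in normalized units ($\varepsilon = \mu = 1$) and the exact TM11 mode of a unit-square PEC cavity. It computes $W(t) = \tfrac12\int (E_z^2 + H_x^2 + H_y^2)\,dA$ with a midpoint rule. The abstract does not say whether the paper's 0.024% figure is measured against the initial energy or against FDTD energy over time. The hypothetical `relative_energy_mismatch` therefore simply takes both values as arguments, and `total_energy` and `tm11_mode` are likewise illustrative names.

```python
import math
from typing import Callable, Tuple

# 2D TMz fields (Ez, Hx, Hy) in normalized units, eps = mu = 1.
Fields = Tuple[float, float, float]
FieldFn = Callable[[float, float, float], Fields]


def tm11_mode(x: float, y: float, t: float) -> Fields:
    """Exact TM11 mode of a unit-square PEC cavity (eps = mu = 1, so c = 1)."""
    kx = ky = math.pi
    omega = math.sqrt(kx**2 + ky**2)
    ez = math.sin(kx * x) * math.sin(ky * y) * math.cos(omega * t)
    hx = -ky / omega * math.sin(kx * x) * math.cos(ky * y) * math.sin(omega * t)
    hy = kx / omega * math.cos(kx * x) * math.sin(ky * y) * math.sin(omega * t)
    return ez, hx, hy


def total_energy(fields: FieldFn, t: float, n: int = 64) -> float:
    """W(t) = 1/2 * integral of (Ez^2 + Hx^2 + Hy^2) over the unit square (midpoint rule)."""
    h = 1.0 / n
    total = 0.0
    for i in range(n):
        for j in range(n):
            ez, hx, hy = fields((i + 0.5) * h, (j + 0.5) * h, t)
            total += ez**2 + hx**2 + hy**2
    return 0.5 * total * h * h


def relative_energy_mismatch(w: float, w_ref: float) -> float:
    """|W - W_ref| / |W_ref|."""
    return abs(w - w_ref) / abs(w_ref)


if __name__ == "__main__":
    w0 = total_energy(tm11_mode, 0.0)
    print(w0)  # 1/8 for this mode, up to rounding
    for t in (0.25, 0.5, 1.0):
        print(t, relative_energy_mismatch(total_energy(tm11_mode, t), w0))
```

PEC walls carry no Poynting flux, so W stays at 1/8 for this mode at every time. Any energy drift in a trained model would therefore show up directly as a nonzero mismatch.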

1. **Causality-aware time marching**: Time-dependent PINN training can suffer causality collapse, a failure mode in which the optimizer does not respect temporal causality. The paper trains over successive time windows and adds causality-aware weighting so that earlier times are resolved before later ones.

2. **Interface continuity**: Time marching introduces discontinuities at window boundaries, so a two-stage interface continuity loss is applied to keep the fields continuous from one window to the next.

3. **Energy consistency**: Loss accumulation shows up as cumulative energy drift in electromagnetic waves. A local Poynting-based regularizer suppresses this drift, and the PINN reaches a 0.024% relative energy mismatch in the 2D PEC cavity without any labeled field data; FDTD serves only for post-training evaluation.

📄 Full Paper Content (ArXiv Source)

📊 Paper Figures