Act2Goal: Bridging World Models to Long-Horizon Tasks

📝 Original Paper Info

- Title: Act2Goal: From World Model To General Goal-conditioned Policy

- ArXiv ID: 2512.23541

- Date: 2025-12-29

- Authors: Pengfei Zhou, Liliang Chen, Shengcong Chen, Di Chen, Wenzhi Zhao, Rongjun Jin, Guanghui Ren, Jianlan Luo

📝 Abstract

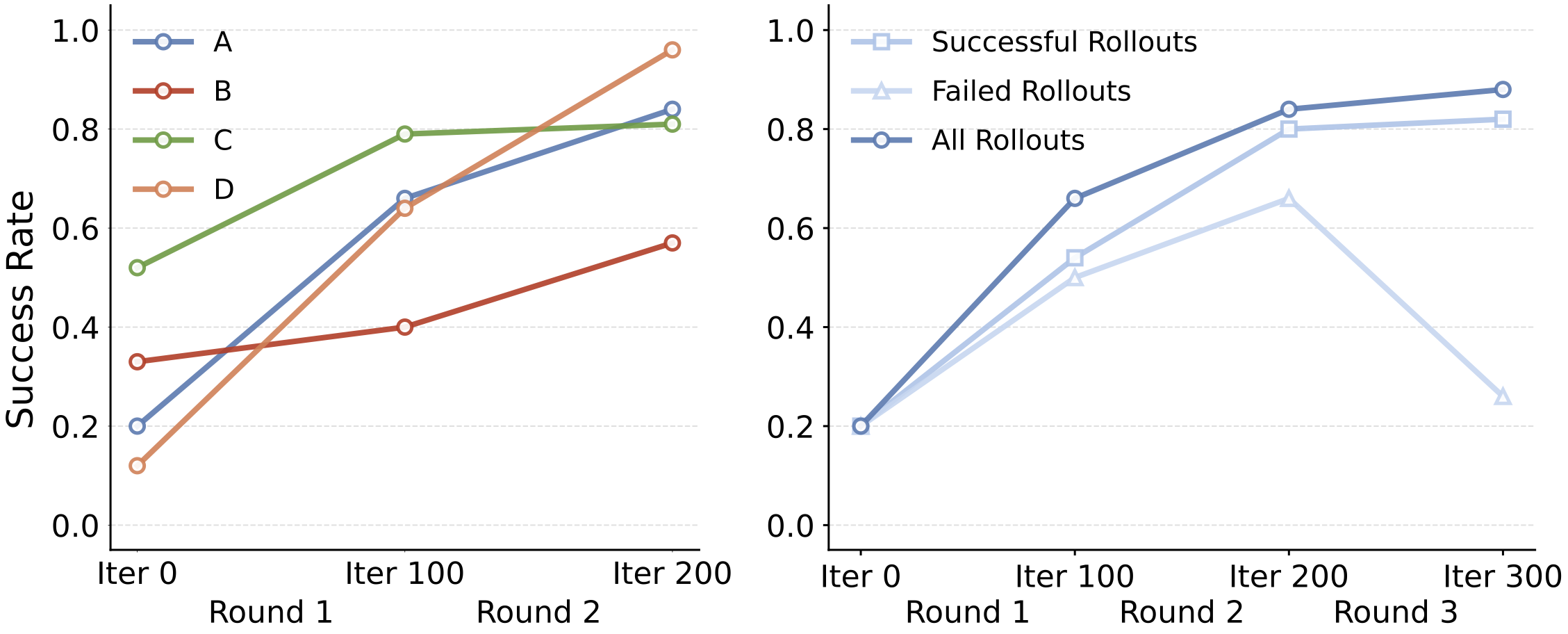

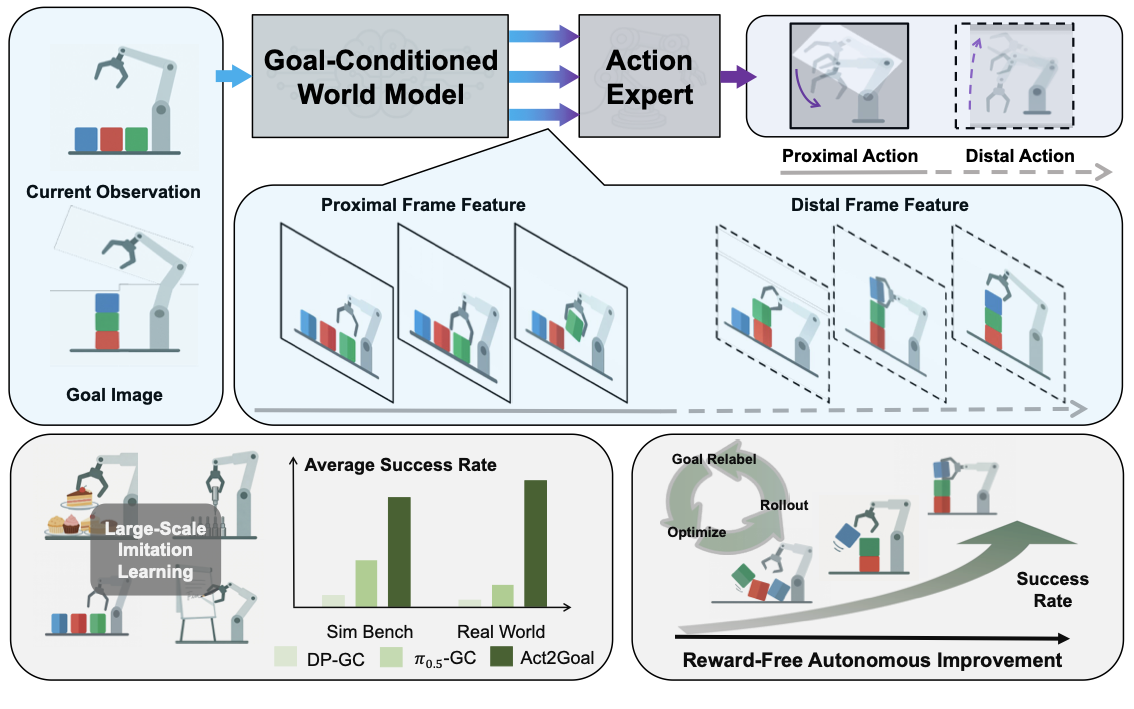

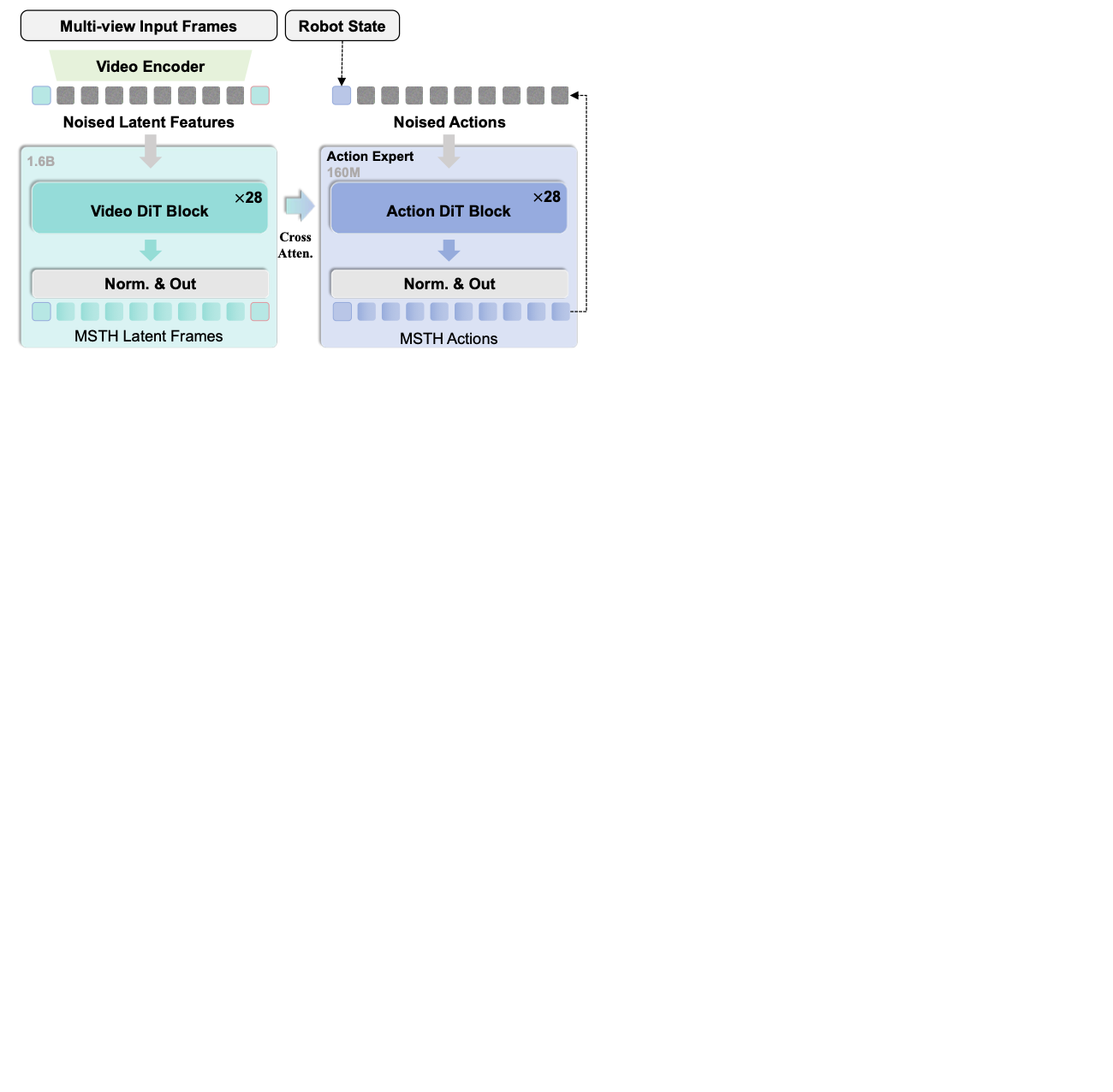

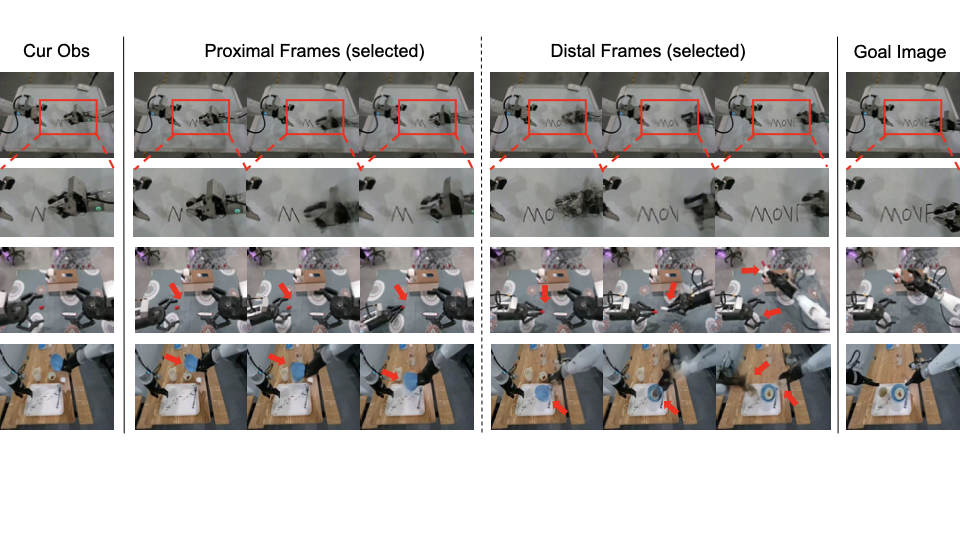

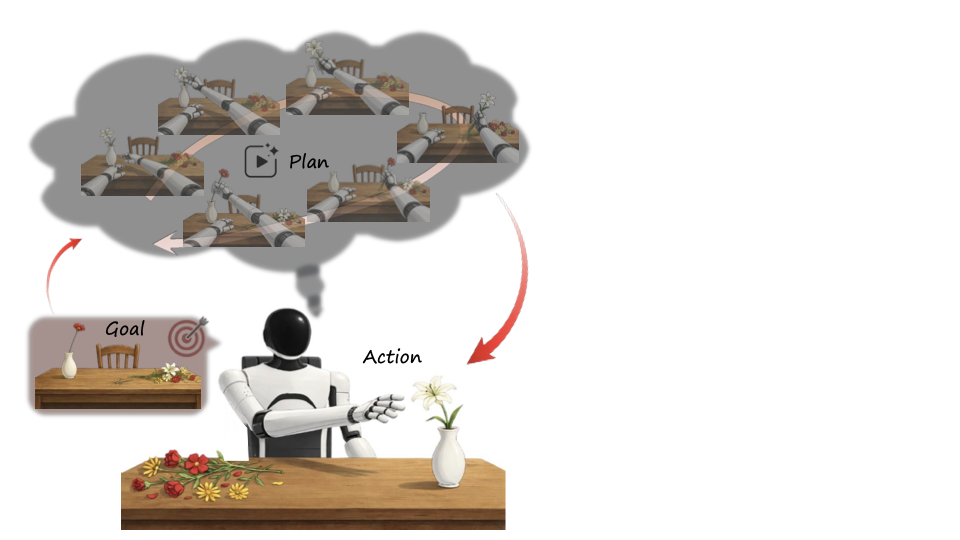

Specifying robotic manipulation tasks in a manner that is both expressive and precise remains a central challenge. While visual goals provide a compact and unambiguous task specification, existing goal-conditioned policies often struggle with long-horizon manipulation due to their reliance on single-step action prediction without explicit modeling of task progress. We propose Act2Goal, a general goal-conditioned manipulation policy that integrates a goal-conditioned visual world model with multi-scale temporal control. Given a current observation and a target visual goal, the world model generates a plausible sequence of intermediate visual states that captures long-horizon structure. To translate this visual plan into robust execution, we introduce Multi-Scale Temporal Hashing (MSTH), which decomposes the imagined trajectory into dense proximal frames for fine-grained closed-loop control and sparse distal frames that anchor global task consistency. The policy couples these representations with motor control through end-to-end cross-attention, enabling coherent long-horizon behavior while remaining reactive to local disturbances. Act2Goal achieves strong zero-shot generalization to novel objects, spatial layouts, and environments. We further enable reward-free online adaptation through hindsight goal relabeling with LoRA-based finetuning, allowing rapid autonomous improvement without external supervision. Real-robot experiments demonstrate that Act2Goal improves success rates from 30% to 90% on challenging out-of-distribution tasks within minutes of autonomous interaction, validating that goal-conditioned world models with multi-scale temporal control provide structured guidance necessary for robust long-horizon manipulation.

Project page: https://act2goal.github.io/

💡 Summary & Analysis

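1. **Visual Plan from a World Model**: Given the current observation and a target goal image, a goal-conditioned visual world model generates a plausible sequence of intermediate visual states that captures the long-horizon structure of the task.
2. **Multi-Scale Temporal Hashing (MSTH)**: The imagined trajectory is decomposed into dense proximal frames for fine-grained closed-loop control and sparse distal frames that anchor global task consistency. End-to-end cross-attention couples these representations with motor control.
3. **Reward-Free Online Adaptation**: Hindsight goal relabeling with LoRA-based finetuning lets the policy improve autonomously, raising real-robot success rates from 30% to 90% on challenging out-of-distribution tasks within minutes of interaction.

Simple Explanation:

- The robot is shown an image of the desired end result as its task specification.

- A world model imagines the intermediate images between the current scene and that goal, and the policy follows them: near-future frames in fine detail, far-future frames only sparsely.

- This lets the robot stay on track over long tasks while still reacting to local disturbances, and it can keep improving from its own attempts without external supervision.

Sci-Tube Style Script:

- “Today we’re looking at how a robot can carry out a long manipulation task from nothing more than a picture of the goal. Act2Goal first imagines the visual steps between the current scene and the goal, then tracks the nearest steps densely and the distant ones sparsely, so it stays reactive without losing sight of the overall task.”

3 Levels of Difficulty:

- Beginner: Learn how a goal image can specify a manipulation task for a robot.

- Intermediate: Explore how a goal-conditioned visual world model produces intermediate visual states and how MSTH splits them into dense proximal and sparse distal frames.

- Advanced: Dive deeper into reward-free online adaptation with hindsight goal relabeling and LoRA-based finetuning, and into zero-shot generalization to novel objects, spatial layouts, and environments.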
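
The abstract describes MSTH only at a high level: dense proximal frames near the current step and sparse distal frames further along the imagined trajectory. The sketch below shows one plausible way such a split over frame indices could look. It is not the authors' implementation: the function name `split_multiscale`, the parameters `num_proximal` and `distal_stride`, and the uniform-stride rule for distal frames are all assumptions made for illustration.

```python
from typing import List, Tuple


def split_multiscale(
    num_frames: int, num_proximal: int, distal_stride: int
) -> Tuple[List[int], List[int]]:
    """Split frame indices 0..num_frames-1 into a dense near-term set
    and a sparse far-term set.

    Hypothetical illustration only; the paper's actual MSTH scheme
    is not specified in the abstract.
    """
    if num_frames <= 0 or num_proximal < 0 or distal_stride <= 0:
        raise ValueError("num_frames and distal_stride must be positive, "
                         "num_proximal must be non-negative")

    # Dense part: every frame up to the proximal cut-off.
    cut = min(num_proximal, num_frames)
    proximal = list(range(cut))

    # Sparse part: one frame every `distal_stride` steps after the cut-off.
    distal = list(range(cut + distal_stride - 1, num_frames, distal_stride))

    # Assumption: always keep the last imagined frame, since it is the
    # one closest to the visual goal.
    if num_frames > cut and (not distal or distal[-1] != num_frames - 1):
        distal.append(num_frames - 1)

    return proximal, distal


if __name__ == "__main__":
    near, far = split_multiscale(num_frames=20, num_proximal=4, distal_stride=5)
    print("proximal:", near)
    print("distal:", far)
```

With 20 imagined frames, 4 proximal frames, and a stride of 5, the first four indices are kept densely while the remainder is thinned to a handful of anchors ending at the final frame. A policy could then attend to both index sets, which is the role the abstract assigns to cross-attention.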

📄 Full Paper Content (ArXiv Source)

📊 Paper Figures