Beyond Repetition: Enhancing Creativity in AI-Generated Problems

📝 Original Paper Info

- Title: Divergent-Convergent Thinking in Large Language Models for Creative Problem Generation

- ArXiv ID: 2512.23601

- Date: 2025-12-29

- Authors: Manh Hung Nguyen, Adish Singla

📝 Abstract

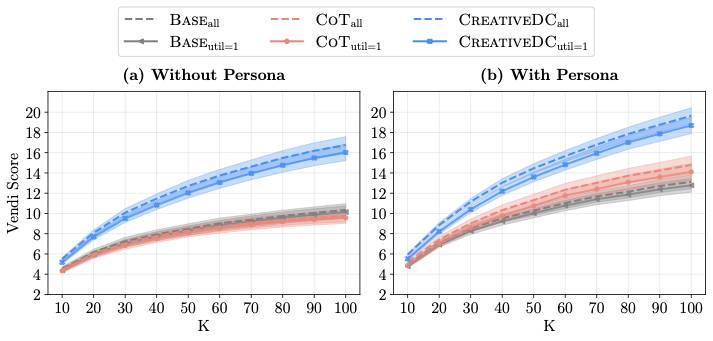

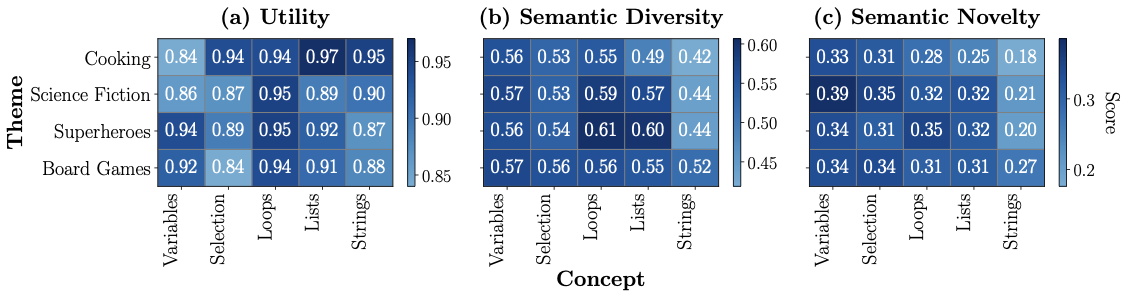

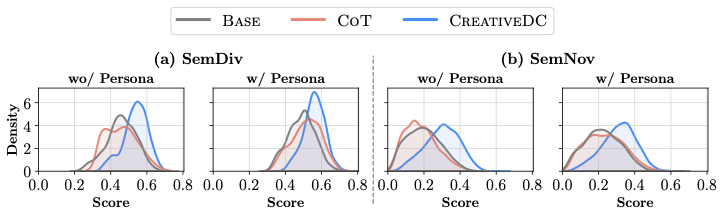

Large language models (LLMs) have significant potential for generating educational questions and problems, enabling educators to create large-scale learning materials. However, LLMs are fundamentally limited by the "Artificial Hivemind" effect, where they generate similar responses within the same model and produce homogeneous outputs across different models. As a consequence, students may be exposed to overly similar and repetitive LLM-generated problems, which harms diversity of thought. Drawing inspiration from Wallas's theory of creativity and Guilford's framework of divergent-convergent thinking, we propose CreativeDC, a two-phase prompting method that explicitly scaffolds the LLM's reasoning into distinct phases. By decoupling creative exploration from constraint satisfaction, our method enables LLMs to explore a broader space of ideas before committing to a final problem. We evaluate CreativeDC for creative problem generation using a comprehensive set of metrics that capture diversity, novelty, and utility. The results show that CreativeDC achieves significantly higher diversity and novelty compared to baselines while maintaining high utility. Moreover, scaling analysis shows that CreativeDC generates a larger effective number of distinct problems as more are sampled, increasing at a faster rate than baseline methods.

💡 Summary & Analysis

1. **Decoupling Exploration from Constraint Satisfaction** - The paper proposes CreativeDC, a two-phase prompting method inspired by Wallas's theory of creativity and Guilford's divergent-convergent thinking framework. The LLM explores a broad space of ideas before committing to a final problem.

2. **Evaluation on Diversity, Novelty, and Utility** - Compared to baselines, CreativeDC achieves significantly higher diversity and novelty while maintaining high utility.

3. **Scaling Behavior** - As more problems are sampled, the effective number of distinct problems grows faster for CreativeDC than for baseline methods.

1-Level: Easy to Understand

Think of a brainstorming session: you first collect many different ideas without judging them, then pick one and shape it to fit the assignment.

2-Level: A Bit Deeper

LLMs tend to repeat themselves, both within one model and across different models (the "Artificial Hivemind" effect), so students may see overly similar problems. CreativeDC counters this by separating a divergent phase of creative exploration from a convergent phase of constraint satisfaction.

3-Level: More Advanced Explanation

The quality of generated problems is assessed with metrics that capture diversity, novelty, and utility. The reported results indicate that scaffolding the LLM's reasoning into distinct phases raises diversity and novelty without sacrificing utility, and that the gain in the effective number of distinct problems widens as more problems are sampled.
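The two-phase idea described in the abstract can be illustrated with a minimal Python sketch. It is not the authors' implementation: the prompt wording, the function names (`divergent_prompt`, `convergent_prompt`, `creative_two_phase`), and the use of two separate model calls are all assumptions made for illustration. The `llm` argument is a hypothetical callable that maps a prompt string to a response string, so any client can be plugged in.

```python
from typing import Callable, List


def divergent_prompt(topic: str, n_ideas: int = 5) -> str:
    """Phase 1 (assumed wording): ask for varied ideas, not a finished problem."""
    return (
        f"Brainstorm {n_ideas} unusual and varied scenarios that could frame "
        f"a problem about: {topic}. Do not write the problem yet."
    )


def convergent_prompt(topic: str, ideas: str, constraints: List[str]) -> str:
    """Phase 2 (assumed wording): pick one idea and satisfy the constraints."""
    rules = "\n".join(f"- {c}" for c in constraints)
    return (
        f"Here are candidate ideas:\n{ideas}\n\n"
        f"Choose the most original one and write a single problem about "
        f"{topic} that satisfies all of these constraints:\n{rules}"
    )


def creative_two_phase(
    topic: str, constraints: List[str], llm: Callable[[str], str]
) -> str:
    """Run exploration first, then constraint satisfaction, as two calls."""
    ideas = llm(divergent_prompt(topic))  # divergent phase
    return llm(convergent_prompt(topic, ideas, constraints))  # convergent phase
```

Passing the model as a plain callable keeps the sketch independent of any particular API; the constraints are only introduced in the second call so that they cannot narrow the brainstorming step.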

📄 Full Paper Content (ArXiv Source)

📊 Paper Figures