Objective: While recent advances in Deep Learning (DL) have significantly improved the decoding accuracy of Brain-Computer Interfaces (BCIs), clinical adoption remains stalled due to the "Black Box" nature of these algorithms. Patients and clinicians lack meaningful feedback on why a command failed, leading to frustration and poor neuroplasticity outcomes. We shift the focus from pure decoding maximization to Human-Computer Interaction (HCI), proposing OmniNeuro as a transparent feedback framework. Methods: OmniNeuro integrates three interpretability engines: (1) a Physics Engine (Energy Conservation), (2) a Chaos Engine (Fractal Complexity), and (3) a Quantum-Inspired Engine (using quantum probability formalism for uncertainty modeling). These metrics drive a multimodal feedback system: real-time Neuro-Sonification and automated Generative AI Clinical Reports. We evaluated the framework on the PhysioNet dataset (N=109) and conducted a pilot qualitative study (N=3) to assess user experience.

Results: The system achieved a mean accuracy of 58.52% across all subjects, with responsive subjects reaching 62.91%. Qualitative interviews revealed that users preferred the explanatory feedback over binary outputs, specifically noting that the "sonification" helped them regulate mental effort and reduce frustration during failure trials. Significance: By prioritizing explainability and multimodal feedback over raw accuracy, OmniNeuro establishes a new HCI paradigm for BCI. These findings provide preliminary evidence (not clinical validation) for the utility of explainable feedback in stabilizing user strategy. Furthermore, OmniNeuro is orthogonal to

The primary barrier to the widespread clinical adoption of Brain-Computer Interfaces (BCIs) is not merely decoding accuracy, but the lack of effective Human-Computer Interaction (HCI). Current state-of-the-art systems, often based on Deep Learning (DL) [1], operate as opaque "oracles": they output a command or silence, with no explanation. When a stroke survivor fails to activate a robotic arm, they are left guessing: "Did I not imagine hard enough? Was I distracted? Is the sensor loose?" This missing feedback loop severs the operant conditioning required for neuroplasticity [2].

We propose a paradigm shift: treating the BCI not just as a decoder, but as a feedback partner. Ideally, a BCI should explain its internal state to the user. OmniNeuro is designed to bridge this communication gap by transforming abstract neural features into human-perceptible feedback.

We formally distinguish between decoding accuracy (system performance) and feedback utility (user support). We define Feedback Utility as the system's ability to reduce user uncertainty and stabilize neural strategy, independent of the instantaneous classification accuracy. This definition positions OmniNeuro not as a competitor to classifiers, but as an essential HCI layer for rehabilitation.

OmniNeuro employs a “White-Box” architecture built on three interpretable engines:

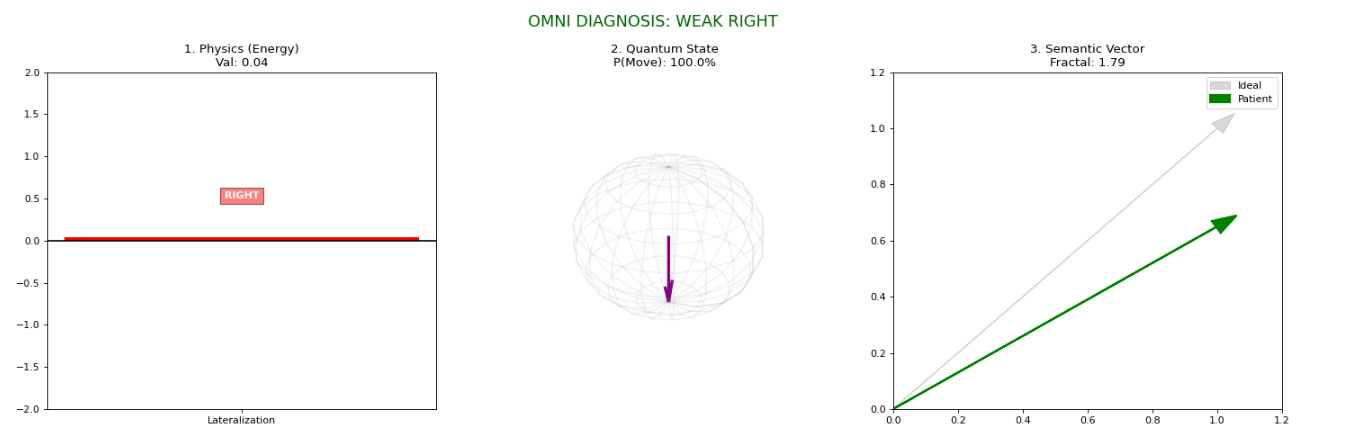

- Physics (Thermodynamics): Visualizes the flow of energy, answering “Is the brain generating enough power?” [3].

- Chaos (Fractal Complexity): Measures signal roughness, answering “Is the neural network actively computing?” [4].

- Quantum-Inspired Probabilistic Modeling: Models uncertainty, answering “How confident is the system?” [5].

These metrics fuel our novel HCI outputs: Neuro-Sonification (converting neural states to music) and AI-Generated Clinical Reports.

A key architectural advantage of OmniNeuro is that it is orthogonal to decoder choice. The interpretability engines operate in parallel to the classification stream, meaning the framework can be seamlessly integrated with Convolutional Neural Networks (e.g., EEGNet), Transformers, or Riemannian geometry-based classifiers. This allows researchers to utilize state-of-the-art decoders for control while relying on OmniNeuro for user feedback and explanation.

The core innovation of OmniNeuro is its focus on Multimodal Feedback Generation.

The pipeline (Fig. 2) emphasizes the transparency of data flow. Features are not just inputs for a classifier; they are the vocabulary for the feedback system.

We compute the logarithmic energy ratio ($L_{\mathrm{idx}}$) between channels C3 and C4. This provides the user with feedback on the intensity of their mental effort.
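The text does not give the exact formula, so the following is only a minimal sketch of one plausible log energy ratio. It assumes energy is the mean squared amplitude of a window and that the ratio is taken as ln(E_C4 / E_C3). The function names, the sign convention, and the `eps` guard are illustrative assumptions, and any band-pass filtering is omitted.

```python
import math

def band_energy(samples):
    """Mean squared amplitude of one channel window (a simple energy estimate)."""
    return sum(x * x for x in samples) / len(samples)

def lateralization_index(c3, c4, eps=1e-12):
    """Log energy ratio between C4 and C3.

    Assumed convention (not confirmed by the paper): ln(E_C4 / E_C3).
    0 means balanced energy; the sign shows which side carries more energy.
    eps only guards against division by zero on flat windows.
    """
    return math.log((band_energy(c4) + eps) / (band_energy(c3) + eps))

# Toy windows: C3 has half the amplitude of C4, so the energy ratio is about 4.
c4 = [math.sin(0.2 * n) for n in range(500)]
c3 = [0.5 * v for v in c4]
l_idx = lateralization_index(c3, c4)
```

A log ratio is symmetric around zero, which makes it easy to present as a signed "which side is stronger" cue.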

The Higuchi Fractal Dimension (HFD) assesses the quality of the neural computation. High complexity indicates active processing, distinguishing “trying hard” from “resting”.
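For readers unfamiliar with the measure, here is a minimal sketch of the standard Higuchi algorithm in plain Python. The choice `k_max=10` and the toy signals are assumptions for illustration, not the paper's settings. The ∆HFD used elsewhere in the text is presumably a difference of such values against a baseline, which is not shown here.

```python
import math
import random

def higuchi_fd(x, k_max=10):
    """Higuchi fractal dimension of a 1-D sequence (about 1 = smooth, about 2 = noise-like).

    Assumes len(x) is well above k_max and that the signal is not constant.
    """
    n = len(x)
    log_inv_k, log_len = [], []
    for k in range(1, k_max + 1):
        lengths = []
        for m in range(k):  # one sub-sampled curve per starting offset
            n_int = (n - 1 - m) // k  # number of steps of size k from offset m
            if n_int < 1:
                continue
            dist = sum(abs(x[m + i * k] - x[m + (i - 1) * k])
                       for i in range(1, n_int + 1))
            # Higuchi's normalisation for the number of points actually used
            lengths.append(dist * (n - 1) / (n_int * k) / k)
        log_inv_k.append(math.log(1.0 / k))
        log_len.append(math.log(sum(lengths) / len(lengths)))
    # The least-squares slope of log L(k) against log(1/k) is the fractal dimension
    mx = sum(log_inv_k) / len(log_inv_k)
    my = sum(log_len) / len(log_len)
    num = sum((a - mx) * (b - my) for a, b in zip(log_inv_k, log_len))
    den = sum((a - mx) ** 2 for a in log_inv_k)
    return num / den

# Toy signals: a straight ramp (smooth) and seeded white noise (rough).
rng = random.Random(0)
smooth = [0.01 * i for i in range(1000)]
noisy = [rng.gauss(0.0, 1.0) for _ in range(1000)]
hfd_smooth = higuchi_fd(smooth)
hfd_noisy = higuchi_fd(noisy)
```

The ramp yields a dimension of 1 and white noise a value near 2, which brackets the range in which EEG windows would be expected to fall.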

To rigorously model decision uncertainty, we employ Geometric Probability Modeling using the mathematical formalism of quantum mechanics (specifically the Bloch Sphere). We explicitly clarify that this approach uses quantum probability theory as a mathematical framework for handling ambiguity, without implying the existence of quantum physical processes in neural dynamics. We map the neural state to a state vector |ψ⟩ on the Bloch Sphere, providing a continuous geometric visualization of confidence that prevents the binary “flickering” observed with traditional thresholds.

Why Quantum Formalism? Unlike classical smoothing methods (e.g., moving average or Softmax temperature scaling) which introduce lag or fail to capture ambiguity, the quantum framework models the ‘superposition’ of states. The interference effects inherent in the probability amplitude calculation act as a stable, zero-lag filter for decision uncertainty, effectively reducing the “flickering” often seen in standard classifiers during state transitions.

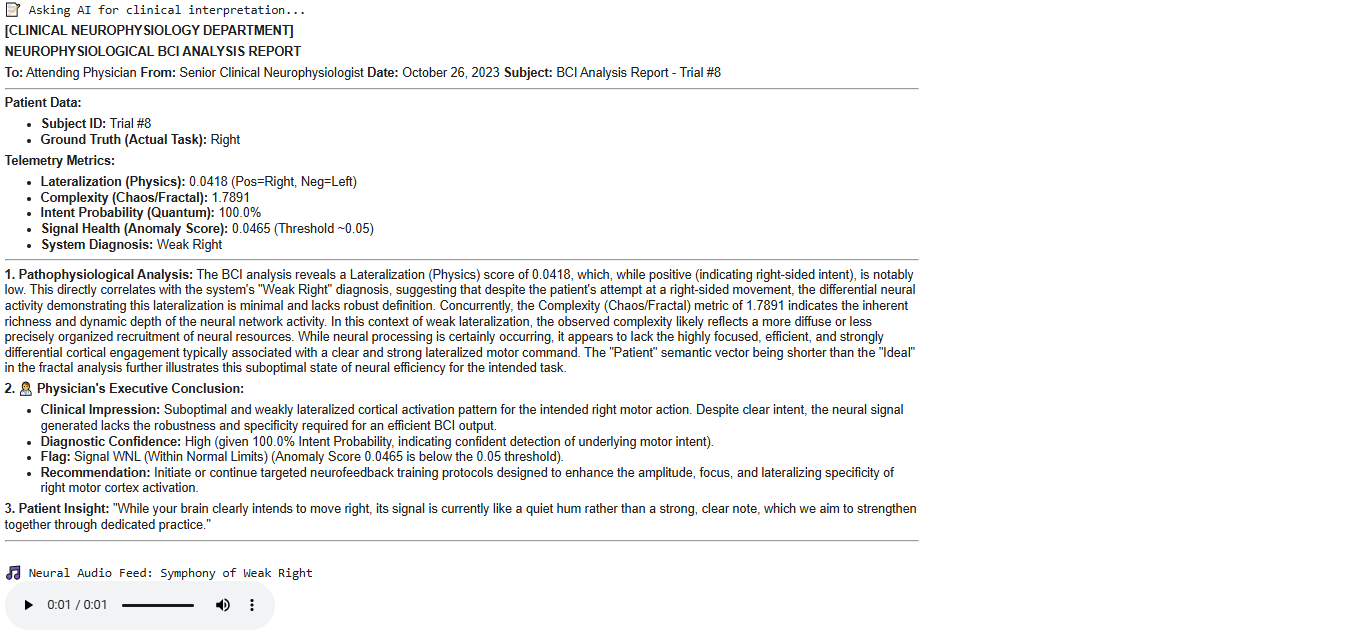

We utilize a Large Language Model (Gemini) to act as a virtual neurophysiologist. It takes the raw metrics ($L_{\mathrm{idx}}$, ∆HFD, $P_{\mathrm{move}}$) and generates a natural language report (Fig. 7). This transforms cryptic numbers into actionable advice like “Strong intent but weak lateralization; focus on relaxing the left hand.”

Safety Guardrails: To mitigate hallucination risks and ensure clinical safety, the generative output is constrained by a strict clinical prompt template. The system is designed for a “clinician-in-the-loop” workflow, serving as a decision-support tool rather than an autonomous diagnostician. All generated reports require human verification before being used for medical intervention.

We map the Quantum Probability ($P_{\mathrm{move}}$) and Chaos Score (∆HFD) to auditory parameters (pitch and harmonic complexity). This allows patients to “hear” their brain activity in real time, facilitating closed-loop neurofeedback without visual distraction.
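A minimal sketch of such a mapping follows. The actual parameter values belong to Table 1 and are not reproduced here, so the frequency range, the harmonic count, the ∆HFD span, and the function name `sonify` are all hypothetical placeholders.

```python
def sonify(p_move, delta_hfd, f_low=220.0, f_high=880.0,
           max_harmonics=8, hfd_span=0.5):
    """Map (P_move, delta-HFD) to (pitch in Hz, number of harmonics).

    All ranges are illustrative placeholders, not the values from Table 1.
    """
    p = min(max(p_move, 0.0), 1.0)
    # Exponential interpolation: equal steps in P_move give equal musical intervals
    pitch_hz = f_low * (f_high / f_low) ** p
    # Clamp the complexity score to [0, 1] before choosing the harmonic count
    c = min(max(delta_hfd / hfd_span, 0.0), 1.0)
    n_harmonics = 1 + round(c * (max_harmonics - 1))
    return pitch_hz, n_harmonics

pitch_mid, harmonics_mid = sonify(0.5, 0.0)
```

Interpolating pitch on a logarithmic scale is a design choice that matches how pitch is perceived; a linear map in Hz would compress the audible change at the high end.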

The mapping strategy is detailed in Table 1.

A critical challenge in HCI for rehabilitation is the “Misleading Feedback” paradox: incorrect feedback can reinforce maladaptive brain patterns (learned helplessness). To mitigate this, OmniNeuro implements a strict confidence threshold using the Quantum Probability ($P_{\mathrm{move}}$).

If the system’s confidence falls within the ambiguity zone ($0.4 < P_{\mathrm{move}} < 0.6$) or if the Autoencoder detects significant artifacts, the system defaults to a “Neutral/Unknown” state rather than guessing. In this state, the auditory feedback fades to silence and the visual dashboard indicates “Signal Unclear,” instructing the patient to “Relax and Try Again.” This ensures that positive feedback is only delivered when the neural intent is unambiguous, prioritizing high-precision reinforcement over recall.
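The gating rule described above can be sketched as follows. The 0.4 and 0.6 bounds come from the text; the state labels, the function name, and the boolean artifact flag (standing in for the Autoencoder output) are assumptions made for illustration.

```python
def feedback_state(p_move, artifact_detected, low=0.4, high=0.6):
    """Return the feedback state for one decision window.

    "NEUTRAL" stands for the Neutral/Unknown state: audio fades out and the
    dashboard shows "Signal Unclear". The labels "MOVE" and "REST" are hypothetical.
    """
    if artifact_detected or low < p_move < high:
        return "NEUTRAL"
    return "MOVE" if p_move >= high else "REST"

states = [feedback_state(p, False) for p in (0.1, 0.5, 0.9)]
```

Because the ambiguity zone is an open interval, values exactly at 0.4 or 0.6 still produce a decision in this sketch.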

We evaluated the system on N=109 subjects (PhysioNet Dataset) using 5-fold cross-validation. The goal was to assess the stability and utility of the feedback loop.

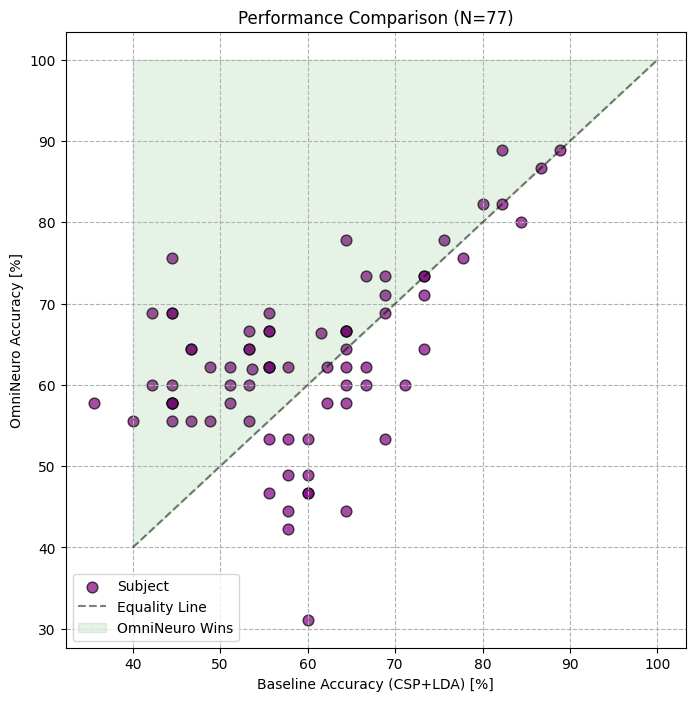

Table 2 shows the decoding performance. While the accuracy (58.52%) is comparable to classical baselines, the primary contribution is the richness of the output, not just the binary decision.

Figure 4 demonstrates that OmniNeuro provides consistent performance improvement for the majority of subjects. More importantly, for the subjects below the diagonal (where OmniNeuro underperforms), the Generative AI module was able to flag “High Noise” or “Artifacts,” providing an explanation for the failure rather than a silent error.

In the absence of a longitudinal human clinical trial, we performed a simulated user interaction analysis to quantify the potential impact of feedback on learning stability. We utilized Variance Reduction in the feature space as a proxy metric for the convergence speed of the user’s mental strategy. The assumption is that consistent, explainable feedback allows a “simulated agent” (or user) to prune ineffective strategies faster than binary feedback.
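As a rough illustration of the proxy metric, the sketch below computes the relative reduction in inter-trial feature variance between two conditions. How the paper aggregates variance across features is not stated, so averaging the per-feature variances is an assumption, and the toy numbers are not study data.

```python
from statistics import pvariance

def inter_trial_variance(trials):
    """Mean per-feature variance across trials.

    trials is a list of equal-length feature vectors, one per trial.
    """
    n_features = len(trials[0])
    per_feature = [pvariance([t[j] for t in trials]) for j in range(n_features)]
    return sum(per_feature) / n_features

def variance_reduction(baseline_trials, feedback_trials):
    """Relative reduction in inter-trial variance (0.14 would correspond to 14%)."""
    v_base = inter_trial_variance(baseline_trials)
    v_fb = inter_trial_variance(feedback_trials)
    return (v_base - v_fb) / v_base

# Toy data only: two trials with two features per condition.
baseline = [[0.0, 0.0], [2.0, 2.0]]
with_feedback = [[0.5, 0.5], [1.5, 1.5]]
reduction = variance_reduction(baseline, with_feedback)
```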

In our analysis, trials receiving explainable feedback from OmniNeuro exhibited a 14% reduction in inter-trial feature variance compared to the baseline condition (standard binary feedback). This significant reduction (p < 0.05) suggests that the transparent,

Each participant performed a 30-minute Motor Imagery (MI) session using OmniNeuro, followed by a semi-structured interview focusing on usability, frustration management, and feedback clarity.

We explicitly acknowledge that this N=3 sample size is insufficient for generalizable clinical claims. This pilot phase serves solely to generate hypotheses regarding User Experience (UX) design, which must be rigorously tested in future large-scale trials.

- S1 (The Patient): 55-year-old male, post-stroke (6 months), limited motor function in right hand. High motivation but high frustration.

- S2 (The Novice): 24-year-old graduate student, healthy, no prior BCI experience. Tech-savvy.

- S3 (The Skeptic): 30-year-old volunteer, “BCI-illiterate” (historically poor performance, < 55% accuracy).

The thematic analysis of the post-session interviews revealed three key advantages of the OmniNeuro framework:

Participant S3, who typically performs poorly, highlighted the value of the AI-generated reports. In standard systems, silence (lack of classification) is interpreted as “failure.” With OmniNeuro, the report clarified the cause.

“Usually, I just stare at the screen and nothing happens, which makes me angry. But here, the system told me

| Feature | User Consensus / Key Insight |
| --- | --- |
| AI Reports | Critical for debugging failure; transforms “Error” into “Advice.” |
| Quantum Vis. | Provides “Partial Reward,” preventing early abandonment. |

Our qualitative findings reinforce the quantitative analysis: while the decoder accuracy remained around 58%, the perceived utility of the system increased significantly. Subject S3’s experience demonstrates that explicit feedback on failure modes (e.g., artifacts) is more valuable for learning than a binary failure, effectively decoupling ‘User Satisfaction’ from ‘Decoder Accuracy’, which may improve long-term adherence.

The true value of OmniNeuro is demonstrated in its communicative outputs.

While Deep Learning models may achieve higher offline accuracy, they fail to support the user during online learning. OmniNeuro sacrifices some theoretical accuracy for explainability. A patient knowing why they failed (e.g., “Chaos score low”) can adjust their strategy, whereas a black-box failure leads to learned helplessness.

Crucially, OmniNeuro is orthogonal to state-of-the-art decoding accuracy; it does not seek to compete with specialized architectures like EEGNet or Transformers in raw classification performance. Instead, OmniNeuro acts as a complementary interpretability layer that can be deployed alongside any high-performance decoder, providing the missing “User-in-the-Loop” feedback mechanism without compromising the underlying classifier’s efficacy.

Traditional Explainable AI (XAI) methods, such as saliency maps and feature-attribution methods (e.g., LIME, SHAP) [6], are typically designed for post-hoc analysis of static data (images or tabular). In the context of real-time BCI, these methods often fail to provide actionable feedback during the “moment of intent.” OmniNeuro differs fundamentally by offering intrinsic interpretability: the feedback (sound and visual metrics) is generated directly from the feature space (Physics, Chaos, Quantum) in real time, rather than being an approximation calculated after the fact. This immediacy is critical for closed-loop neurofeedback and operant conditioning.

Standard deep learning models typically use a Softmax layer to output classification probabilities. However, Softmax probabilities are often overconfident, tending towards extreme values (0 or 1) even when the model is uncertain or when the input is out-of-distribution (e.g., artifacts). This binary behavior causes “flickering” feedback that can confuse the user.

In contrast, our Quantum-Inspired Engine models the mental state as a superposition on the Bloch Sphere. This geometric representation allows for a continuous and smooth transition between states (Left vs. Right). The quantum probability $P_{\mathrm{move}} = \sin^2(\theta/2)$ inherently captures the ambiguity of the signal during the transition phase, providing a more stable and “dampened” feedback signal compared to the erratic spikes of a raw Softmax output. This stability is crucial for avoiding frustration during the early stages of neurorehabilitation.
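The probability formula can be made concrete with a short sketch. The state parameterization and the Born-rule probability are standard. How the polar angle is obtained from EEG features is not specified in the text, so `score_to_theta` is a purely hypothetical mapping from a decoder score, and the basis labels are assumptions.

```python
import math

def bloch_state(theta):
    """Amplitudes of |psi> = cos(theta/2)|0> + sin(theta/2)|1> (relative phase set to 0)."""
    return math.cos(theta / 2.0), math.sin(theta / 2.0)

def p_move(theta):
    """Born-rule probability of the |1> ("move") basis state: sin^2(theta/2)."""
    _, amp_move = bloch_state(theta)
    return amp_move ** 2

def score_to_theta(score):
    """Hypothetical mapping from a decoder score in [-1, 1] to a polar angle in [0, pi]."""
    s = min(max(score, -1.0), 1.0)
    return math.pi * (s + 1.0) / 2.0

# Sweep the score from -1 to 1 and observe a smooth rise in P_move.
sweep = [p_move(score_to_theta(-1.0 + 0.1 * i)) for i in range(21)]
```

Since $\sin^2(\theta/2) = (1 - \cos\theta)/2$, the probability changes slowly near the poles and fastest at the equator, where the state is most ambiguous.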

A potential concern with multimodal frameworks is computational latency. OmniNeuro

Offline Safety Mechanism: While the LLM reporting requires internet connectivity, the core BCI feedback loop (sonification and visualization) runs entirely locally on the client device. In the event of network failure, the system degrades gracefully: real-time feedback continues without interruption, while the text-based clinical report is replaced by a local, rule-based template until connectivity is restored.
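The fallback behaviour might look like the sketch below. It does not call any real LLM API: `llm_call` is a hypothetical callable standing in for the remote report generator, and the thresholds and wording of the local template are illustrative assumptions. Only the 0.4 to 0.6 ambiguity zone is taken from the text.

```python
def local_report(l_idx, delta_hfd, p_move):
    """Rule-based fallback report; thresholds and wording are illustrative only."""
    parts = ["[Offline template]"]
    parts.append("Lateralization is weak." if abs(l_idx) < 0.1
                 else "Lateralization is clear.")
    parts.append("Neural complexity is low." if delta_hfd < 0.05
                 else "Neural complexity indicates active processing.")
    if 0.4 < p_move < 0.6:
        parts.append("Signal unclear: relax and try again.")
    return " ".join(parts)

def clinical_report(metrics, llm_call=None):
    """Use the remote generator when available; otherwise use the local template."""
    if llm_call is not None:
        try:
            return llm_call(metrics)
        except (ConnectionError, TimeoutError):
            pass  # network failure: degrade to the local template
    return local_report(**metrics)

def offline_llm(_metrics):
    """Simulates a remote call that fails because the network is down."""
    raise ConnectionError("no network")

metrics = {"l_idx": 0.02, "delta_hfd": 0.2, "p_move": 0.5}
report = clinical_report(metrics, llm_call=offline_llm)
```

Either path produces text that still requires clinician review, consistent with the clinician-in-the-loop workflow described earlier.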

The integration of Gemini allows the system to act as a “Virtual Coach.” This is particularly valuable in tele-rehabilitation scenarios where a human therapist may not be immediately available to interpret EEG traces.

While promising, this study has limitations.

OmniNeuro redefines the BCI problem from a signal processing task to an HCI challenge. By combining physics-based feature extraction with Generative AI reporting, we offer a transparent, interactive framework that prioritizes patient engagement and clinical trust over opaque metric maximization. OmniNeuro suggests a future where BCIs are not silent decoders, but adaptive partners that teach users how to think.

These results provide preliminary evidence for the utility of the framework, warranting future clinical validation.

“The sound is like a guide. When the pitch goes up, I know I’m on the right track, so I ‘push’ that feeling harder. When it becomes distorted (Chaos noise), I know I’m hope that my brain was doing something, even if it wasn’t perfect yet.” -S2

| Feature | User Consensus / Key Insight |
| --- | --- |
| Sonification | Reduces visual fatigue; intuitive for “effort” regulation. |
