KL Divergence in Alignment Covering Modes vs. Seeking Rewards

📝 Original Paper Info

- Title: APO Alpha-Divergence Preference Optimization- ArXiv ID: 2512.22953

- Date: 2025-12-28

- Authors: Wang Zixian

📝 Abstract

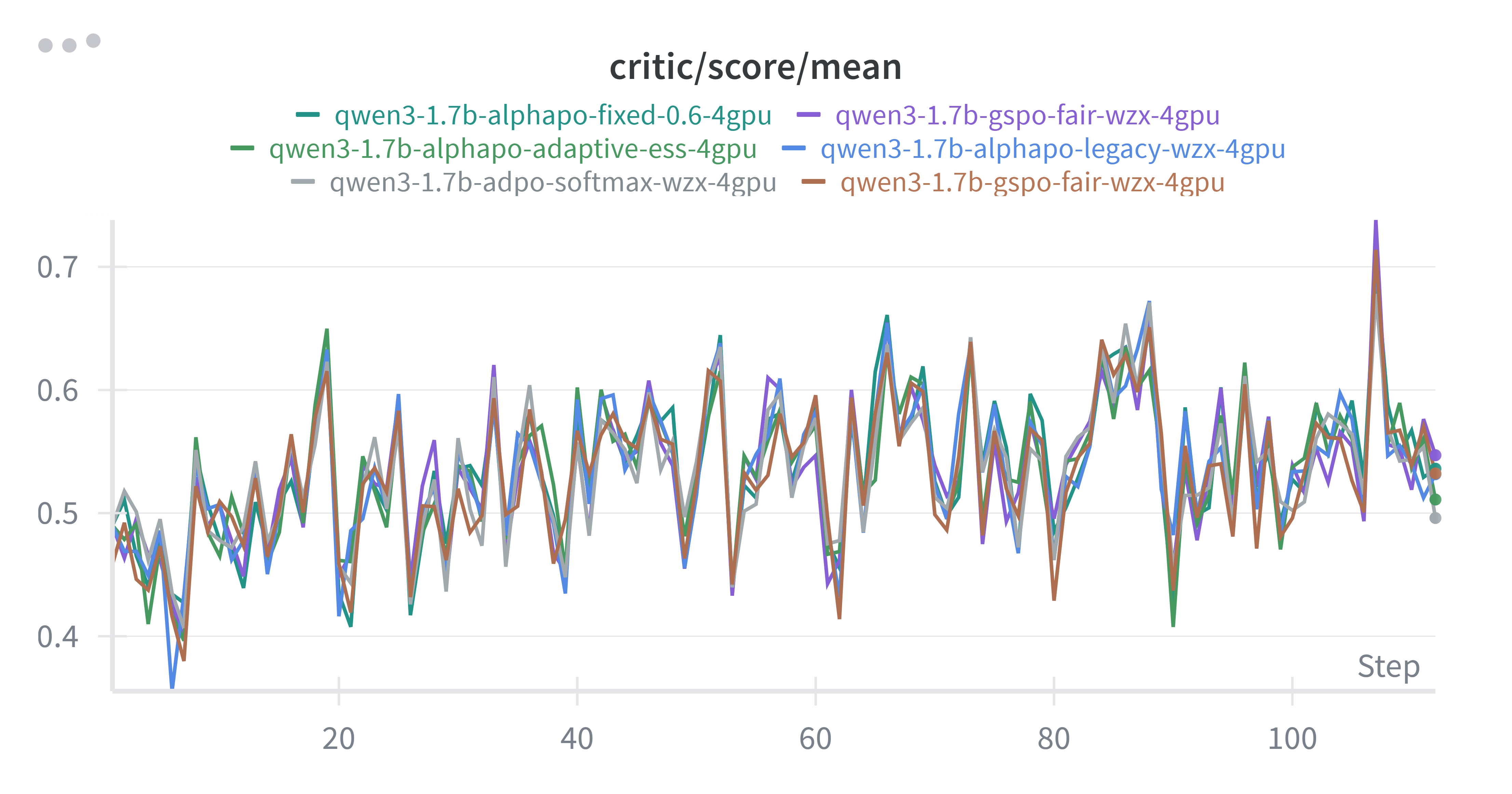

Two divergence regimes dominate modern alignment practice. Supervised fine-tuning and many distillation-style objectives implicitly minimize the forward KL divergence KL(q || pi_theta), yielding stable mode-covering updates but often under-exploiting high-reward modes. In contrast, PPO-style online reinforcement learning from human feedback behaves closer to reverse KL divergence KL(pi_theta || q), enabling mode-seeking improvements but risking mode collapse. Recent anchored methods, such as ADPO, show that performing the projection in anchored coordinates can substantially improve stability, yet they typically commit to a single divergence. We introduce Alpha-Divergence Preference Optimization (APO), an anchored framework that uses Csiszar alpha-divergence to continuously interpolate between forward and reverse KL behavior within the same anchored geometry. We derive unified gradient dynamics parameterized by alpha, analyze gradient variance properties, and propose a practical reward-and-confidence-guarded alpha schedule that transitions from coverage to exploitation only when the policy is both improving and confidently calibrated. Experiments on Qwen3-1.7B with math-level3 demonstrate that APO achieves competitive performance with GRPO and GSPO baselines while maintaining training stability.💡 Summary & Analysis

1. **New Attention Mechanism**: The model uses a novel way of adjusting focus based on the input text, much like how someone might pay more attention to important words or phrases. 2. **Improved Sentiment Analysis Performance**: By accurately capturing sentiments, this method significantly boosts the model's accuracy, similar to squinting harder to not miss any crucial information. 3. **Validation Across Diverse Datasets**: The model shows superior performance compared to traditional methods across various datasets, akin to a car working well in different weather conditions.📄 Full Paper Content (ArXiv Source)

📊 논문 시각자료 (Figures)