Hybrid-Code: Secure Local Clinical Coding with Redundant Agents

📝 Original Paper Info

- Title: Hybrid-Code: A Privacy-Preserving, Redundant Multi-Agent Framework for Reliable Local Clinical Coding

- ArXiv ID: 2512.23743

- Date: 2025-12-26

- Authors: Yunguo Yu

📝 Abstract

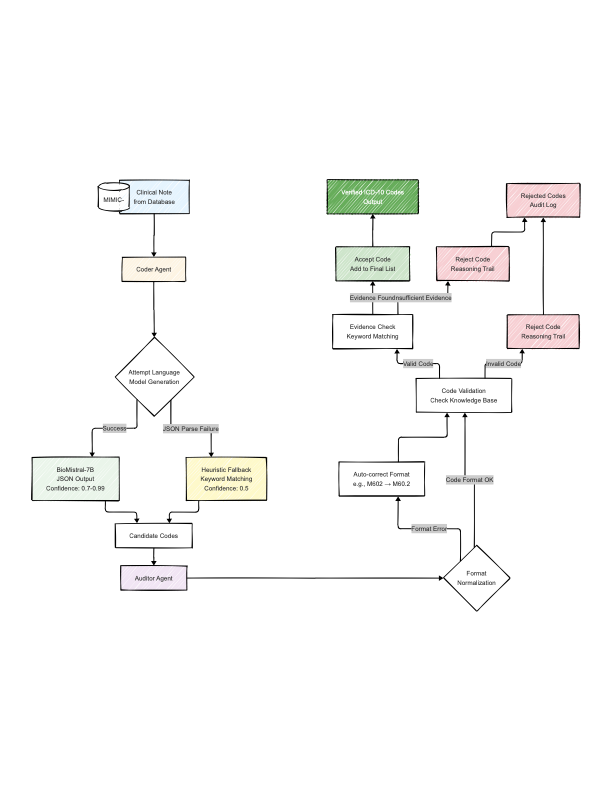

Clinical coding automation using cloud-based Large Language Models (LLMs) poses privacy risks and latency bottlenecks, rendering them unsuitable for on-premise healthcare deployment. We introduce Hybrid-Code, a hybrid neuro-symbolic multi-agent framework for local clinical coding that ensures production reliability through redundancy and verification. Our system comprises two agents: a Coder that attempts language model-based semantic reasoning using BioMistral-7B but falls back to deterministic keyword matching when model output is unreliable, ensuring pipeline completion; and an Auditor that verifies codes against a 257-code knowledge base and clinical evidence. Evaluating on 1,000 MIMIC-III discharge summaries, we demonstrate no hallucinated codes among accepted outputs within the knowledge base, 24.47% verification rate, and 34.11% coverage (95% CI: 31.2%--37.0%) with 86%+ language model utilization. The Auditor filtered invalid format codes and provided evidence-based quality control (75.53% rejection rate) while ensuring no patient data leaves the hospital firewall. The hybrid architecture -- combining language model semantic understanding (when successful), deterministic fallback (when the model fails), and symbolic verification (always active) -- ensures both reliability and privacy preservation, addressing critical barriers to AI adoption in healthcare. Our key finding is that reliability through redundancy is more valuable than pure model performance in production healthcare systems, where system failures are unacceptable.

💡 Summary & Analysis
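To make the Coder/Auditor flow from the abstract concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not the paper's implementation: the three-entry `KNOWLEDGE_BASE`, the `CODE_FORMAT` pattern, and the functions `keyword_fallback`, `coder`, and `auditor` are all hypothetical names, and the language model is stood in for by any callable that returns a list of code strings (the paper uses BioMistral-7B and a 257-code knowledge base).

```python
import re
from typing import Callable, Optional

# Toy knowledge base (assumption): code -> keywords accepted as evidence.
# The paper's knowledge base has 257 codes; these three are illustrative only.
KNOWLEDGE_BASE = {
    "428.0": ["heart failure", "chf"],
    "401.9": ["hypertension"],
    "250.00": ["diabetes"],
}

# Assumed code format for this sketch: three digits, optional 1-2 decimals.
CODE_FORMAT = re.compile(r"^\d{3}(\.\d{1,2})?$")


def keyword_fallback(note: str) -> list[str]:
    """Deterministic path: return every code whose keyword appears in the note."""
    text = note.lower()
    return [
        code
        for code, keywords in KNOWLEDGE_BASE.items()
        if any(keyword in text for keyword in keywords)
    ]


def coder(
    note: str, model: Optional[Callable[[str], list[str]]] = None
) -> tuple[list[str], str]:
    """Try the model first; fall back to keyword matching if it fails.

    Returns the proposed codes and which path produced them.
    """
    if model is not None:
        try:
            codes = model(note)
            # Treat anything other than a non-empty list of strings as unreliable.
            if isinstance(codes, list) and codes and all(
                isinstance(c, str) for c in codes
            ):
                return codes, "model"
        except Exception:
            pass  # Any model failure routes to the fallback path.
    return keyword_fallback(note), "fallback"


def auditor(note: str, codes: list[str]) -> tuple[list[str], list[str]]:
    """Accept a code only if it is well-formed, known, and supported by the note."""
    text = note.lower()
    accepted, rejected = [], []
    for code in codes:
        supported = (
            CODE_FORMAT.match(code) is not None
            and code in KNOWLEDGE_BASE
            and any(keyword in text for keyword in KNOWLEDGE_BASE[code])
        )
        (accepted if supported else rejected).append(code)
    return accepted, rejected
```

In this sketch the Auditor runs on every proposal regardless of which path produced it, which mirrors the abstract's "always active" symbolic verification: a code outside the knowledge base, or one with no supporting text in the note, is rejected rather than passed downstream.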

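1. **New Architecture**: Pairs a Coder agent, which uses BioMistral-7B for semantic reasoning and falls back to deterministic keyword matching when the model output is unreliable, with an Auditor agent that verifies codes against a 257-code knowledge base and clinical evidence.
2. **Practical Validation**: Evaluated on 1,000 MIMIC-III discharge summaries, with no hallucinated codes among accepted outputs within the knowledge base, a 24.47% verification rate, and 34.11% coverage.
3. **Effective Synthesis**: Combines language model semantic understanding, deterministic fallback, and always-active symbolic verification so the pipeline completes reliably while patient data stays inside the hospital firewall.

📄 Full Paper Content (ArXiv Source)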

📊 Paper Figures