Protein Secondary Structure Prediction Using Transformers

...

📝 Original Info

- Title: Protein Secondary Structure Prediction Using Transformers

- ArXiv ID: 2512.08613

- Date: 2025-12-09

- Authors: Manzi Kevin Maxime

📝 Abstract

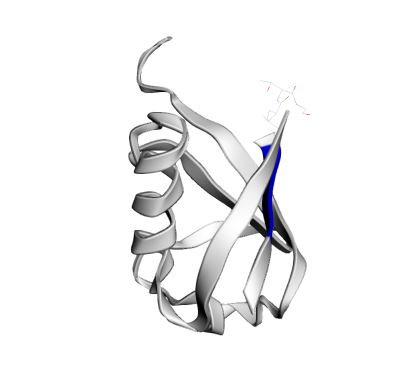

Predicting protein secondary structures, such as alpha helices, beta sheets, and coils, from amino acid sequences is critical for understanding protein function. This work presents a transformer-based model that applies attention mechanisms to protein sequence data to predict structural motifs. A sliding-window technique is used to augment the CB513 dataset. The transformer shows strong potential for generalizing across variable-length sequences and for capturing both local and long-range residue interactions.
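Sliding-window augmentation turns each labelled sequence into several overlapping fixed-length fragments, so one protein yields multiple training examples while every residue keeps its own label. The sketch below is a minimal illustration, not the paper's implementation. It assumes per-residue three-state (Q3) labels (H, E, C) aligned with each sequence; the helper name `sliding_windows`, the window size, the stride, and the padding symbols are all hypothetical choices.

```python
from typing import List, Tuple

# Hypothetical padding symbols; the paper does not state how padding is done.
PAD_RESIDUE = "X"
PAD_LABEL = "-"


def sliding_windows(
    sequence: str,
    labels: str,
    window: int = 8,
    stride: int = 4,
) -> List[Tuple[str, str]]:
    """Split one sequence and its per-residue labels into overlapping windows.

    `window` and `stride` are illustrative defaults, not values from the paper.
    Sequences shorter than `window` are right-padded to a single window; for
    longer sequences, a final window is added if the stride would otherwise
    leave the tail of the sequence uncovered.
    """
    if len(sequence) != len(labels):
        raise ValueError("sequence and labels must have the same length")
    if window <= 0 or stride <= 0:
        raise ValueError("window and stride must be positive")

    n = len(sequence)
    if n <= window:
        pad = window - n
        return [(sequence + PAD_RESIDUE * pad, labels + PAD_LABEL * pad)]

    # Start offsets spaced `stride` apart, each leaving room for a full window.
    starts = list(range(0, n - window + 1, stride))
    # Ensure the last residues fall inside at least one window.
    if starts[-1] != n - window:
        starts.append(n - window)
    return [(sequence[s:s + window], labels[s:s + window]) for s in starts]


if __name__ == "__main__":
    seq = "MKTAYIAKQRQISFVKSHFSRQ"  # toy 22-residue sequence, not from CB513
    q3 = "CCHHHHHHHHCCEEEEECCCCC"   # toy Q3 labels aligned with `seq`
    for frag, lab in sliding_windows(seq, q3, window=8, stride=4):
        print(frag, lab)
```

With a window of 8 and a stride of 4, the 22-residue toy sequence yields five overlapping fragments. The last one is shifted so that it ends exactly at the final residue. Smaller strides produce more (and more redundant) fragments. Because fragments from the same protein overlap, in practice the split into training and test sets should be made by protein before windowing, so that no fragment leaks across the split.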

This content is AI-processed based on open access ArXiv data.