Toward Faithful Retrieval-Augmented Generation with Sparse Autoencoders

📝 Original Info

- Title: Toward Faithful Retrieval-Augmented Generation with Sparse Autoencoders

- ArXiv ID: 2512.08892

- Date: 2025-12-09

- Authors: Guangzhi Xiong, Zhenghao He, Bohan Liu, Sanchit Sinha, Aidong Zhang

📝 Abstract

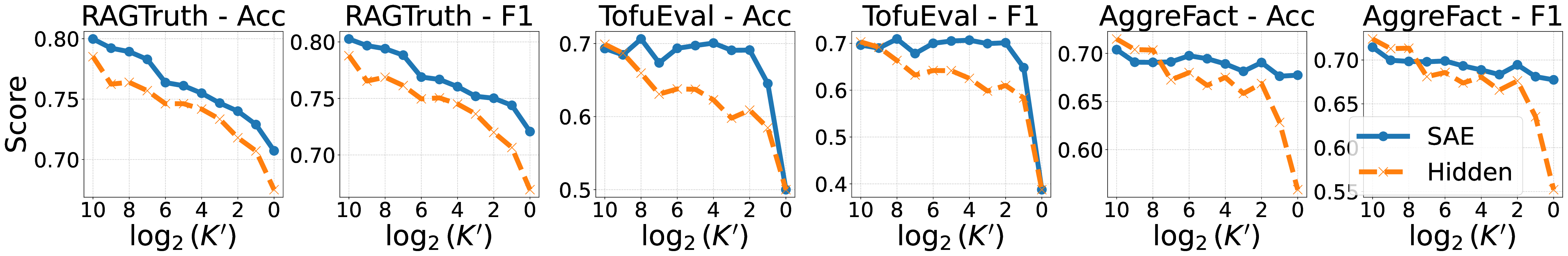

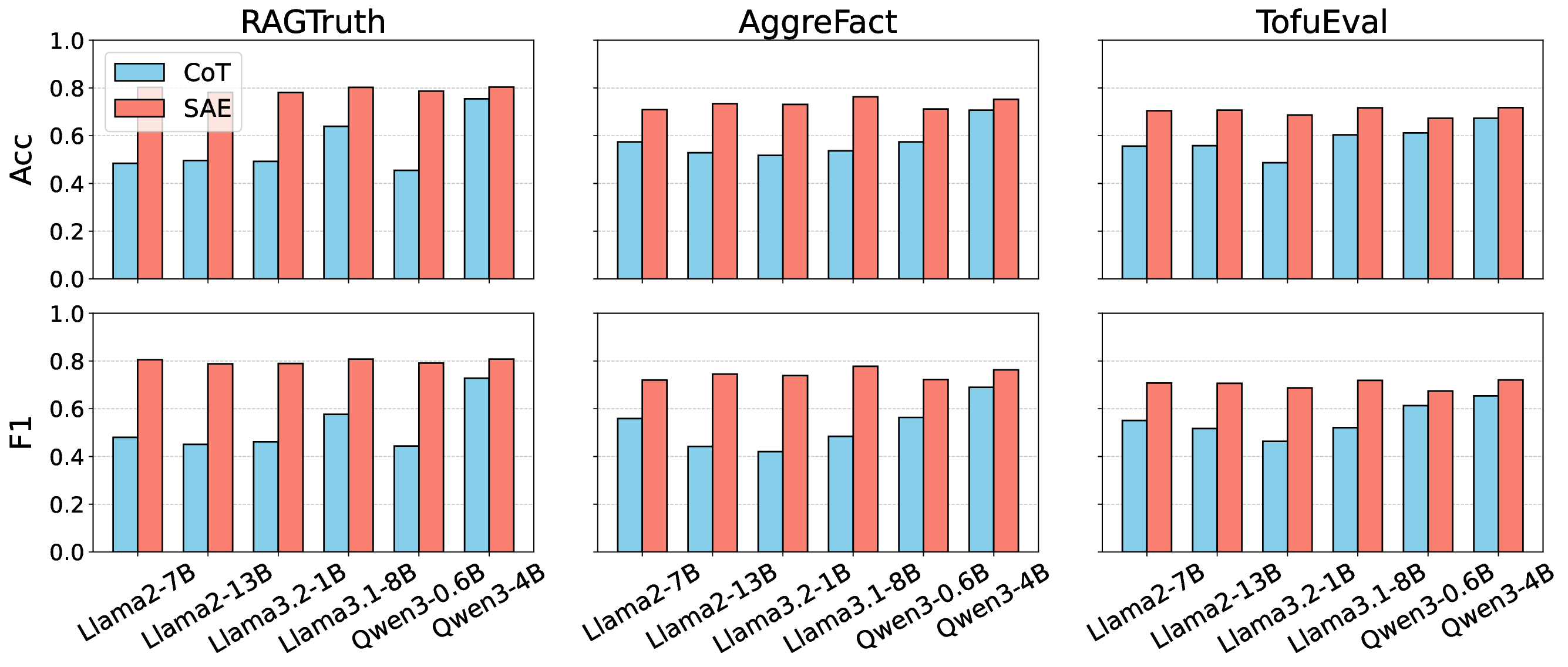

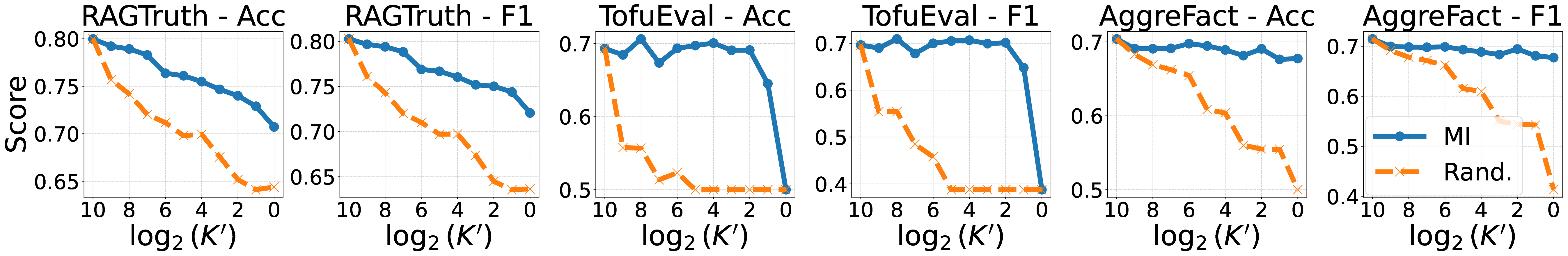

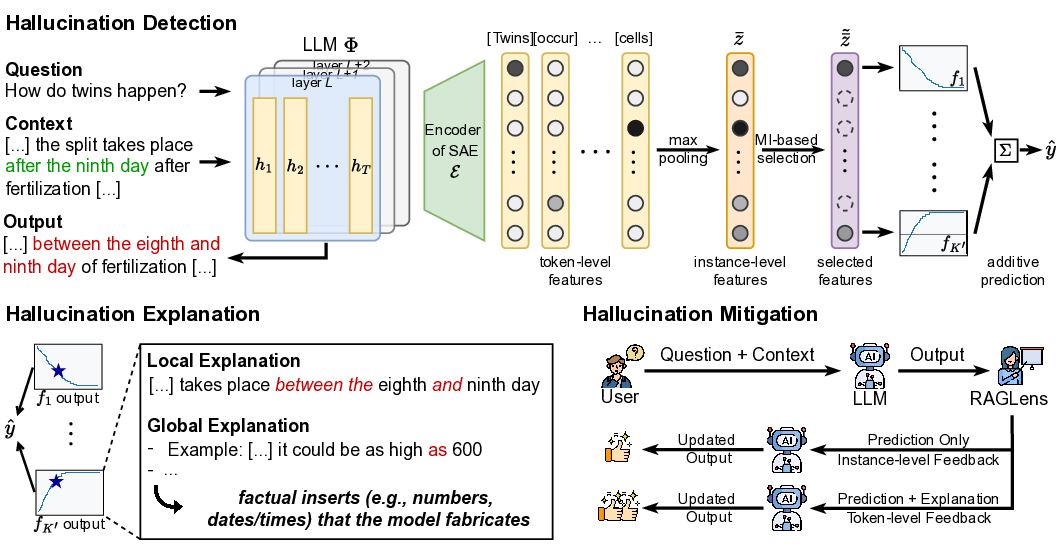

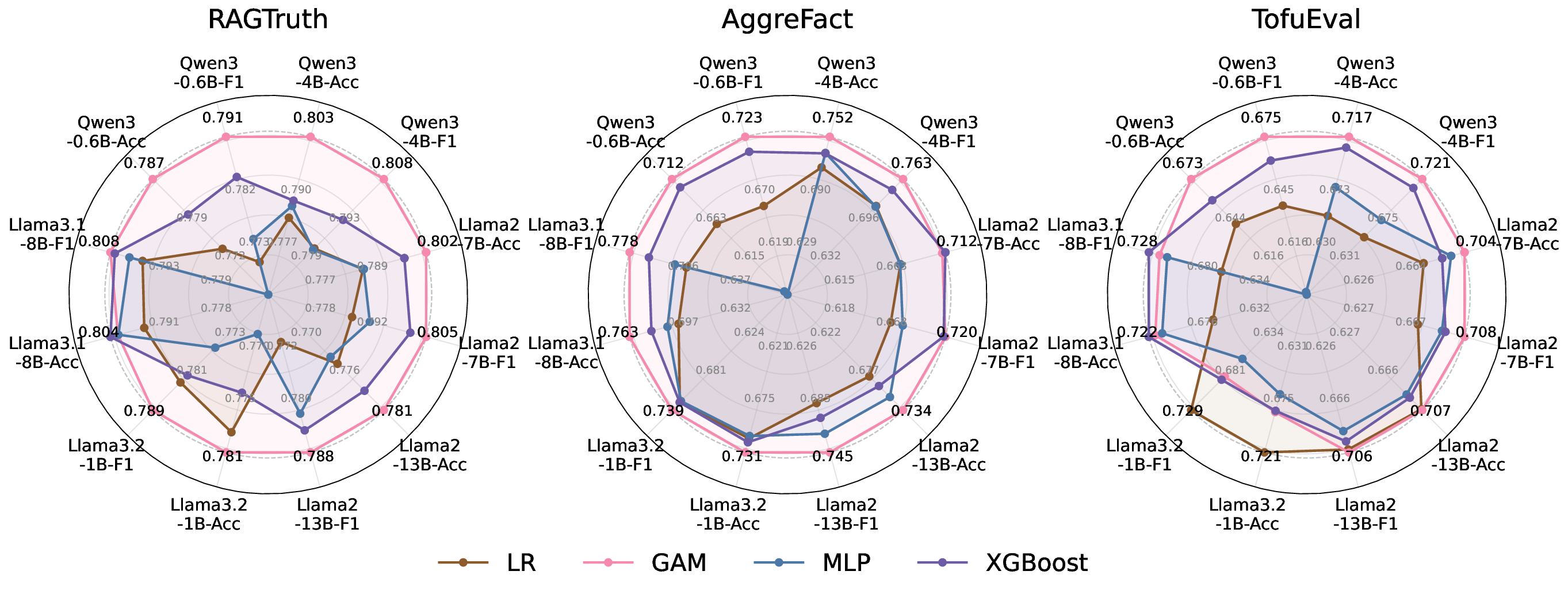

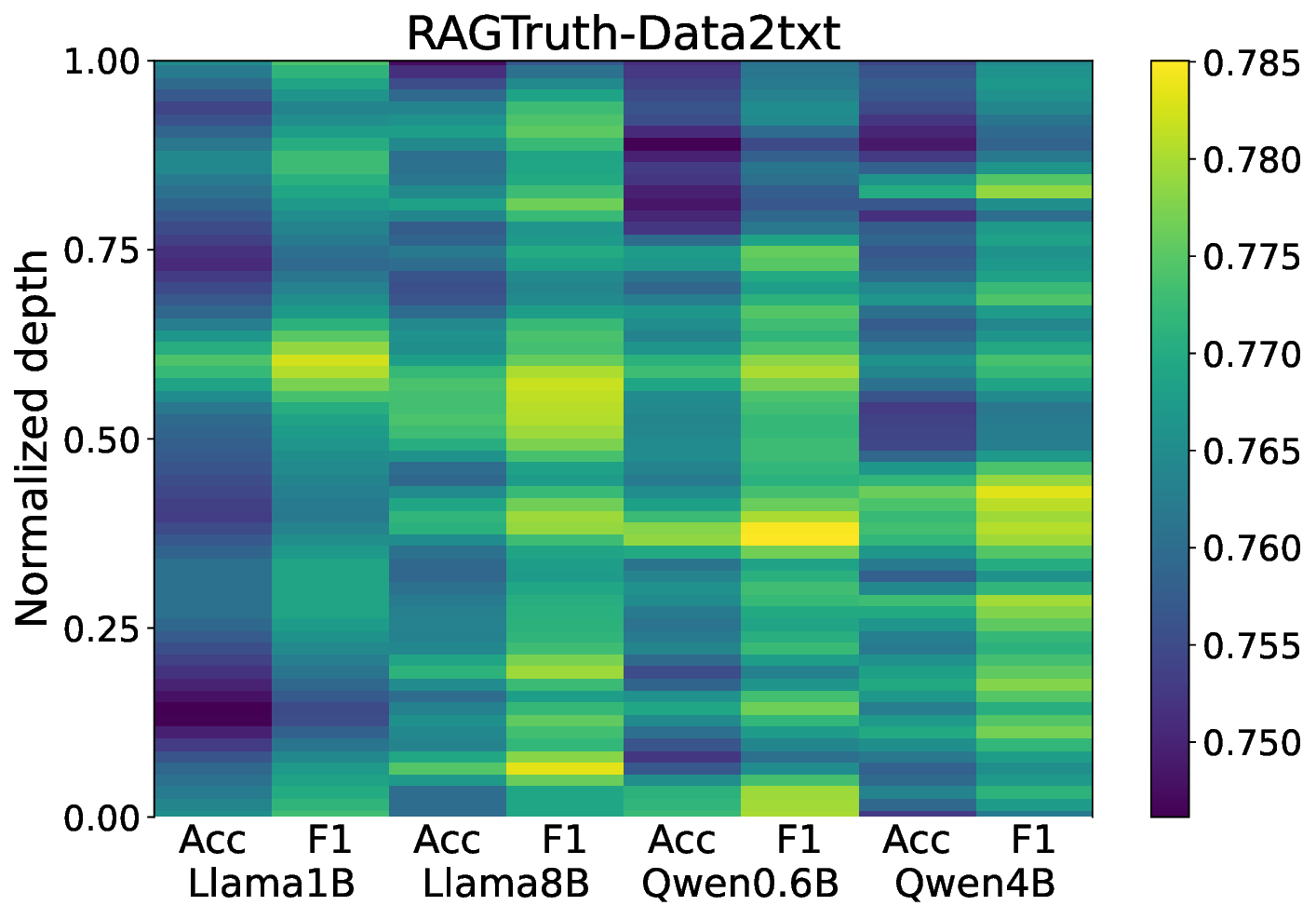

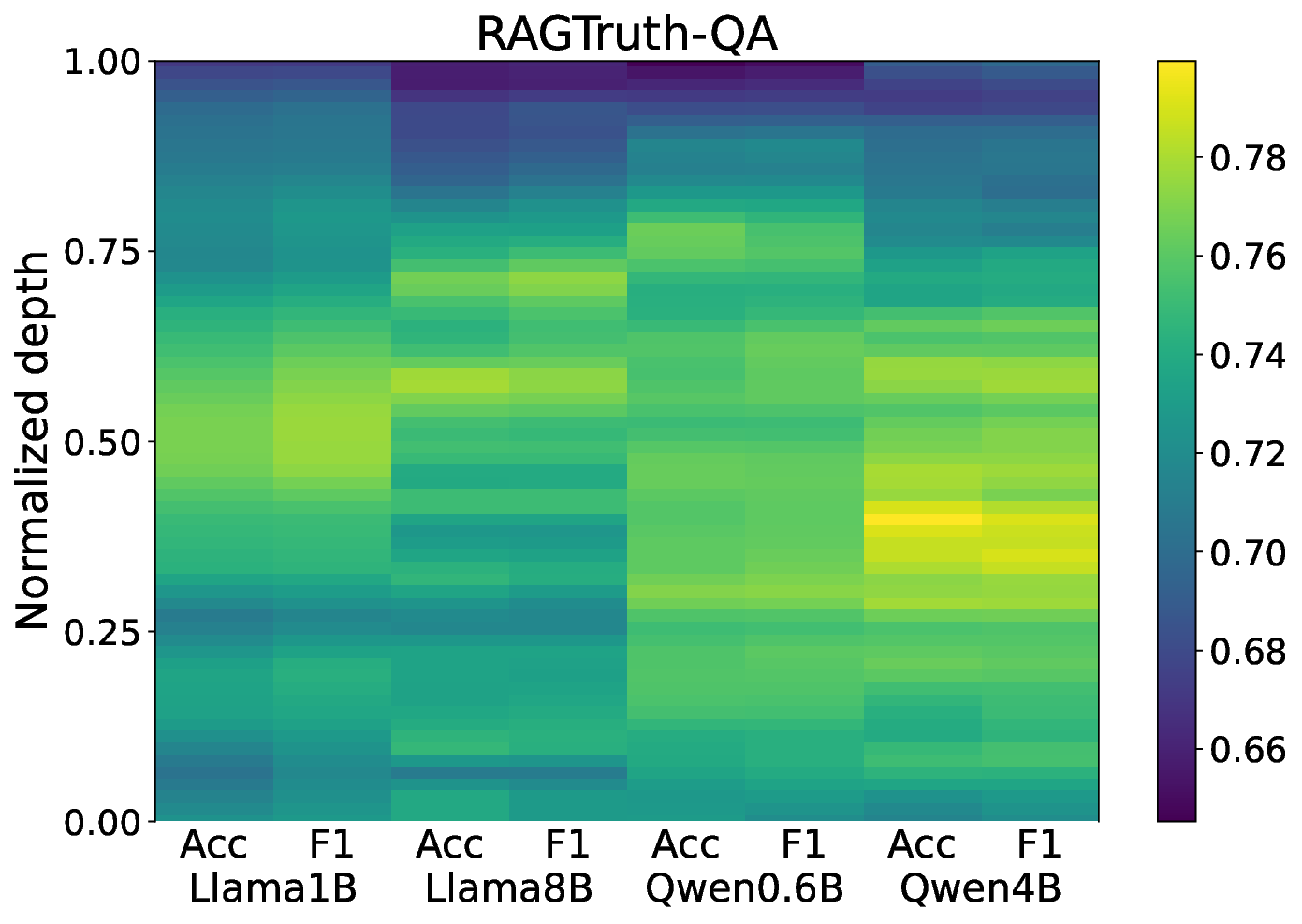

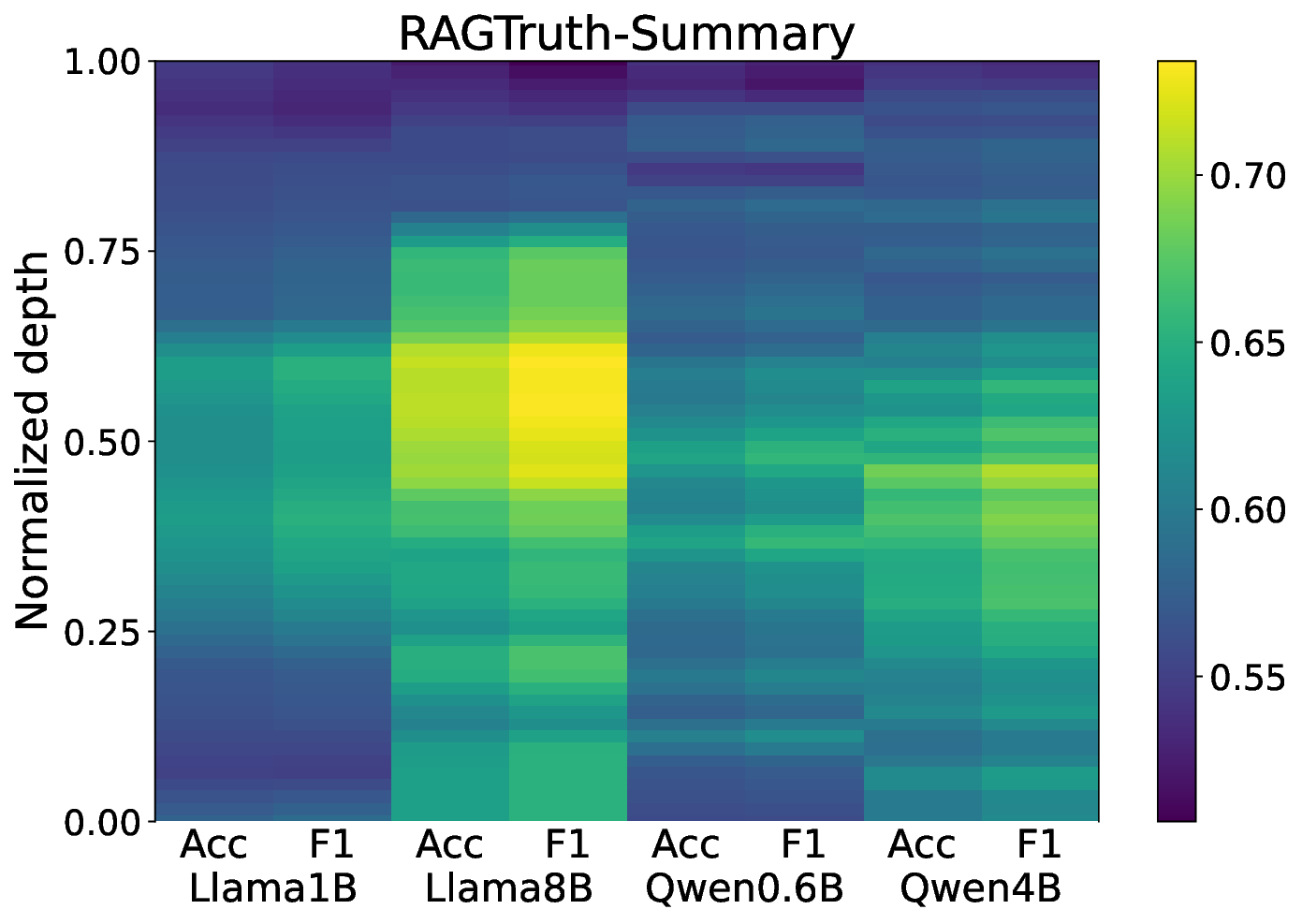

Retrieval-Augmented Generation (RAG) improves the factuality of large language models (LLMs) by grounding outputs in retrieved evidence, but faithfulness failures, where generations contradict or extend beyond the provided sources, remain a critical challenge. Existing hallucination detection methods for RAG often rely either on large-scale detector training, which requires substantial annotated data, or on querying external LLM judges, which leads to high inference costs. Although some approaches attempt to leverage internal representations of LLMs for hallucination detection, their accuracy remains limited. Motivated by recent advances in mechanistic interpretability, we employ sparse autoencoders (SAEs) to disentangle internal activation...
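For readers unfamiliar with sparse autoencoders, the following is a minimal sketch of the generic SAE forward pass: a dense activation vector is encoded into a wider, non-negative feature vector and then decoded back. It is not the authors' implementation. The abstract is truncated before it describes how the features are used, so the dimensions, the random weights, the bias value, and the function names `sae_encode` and `sae_decode` are all illustrative assumptions.

```python
import numpy as np


def sae_encode(h, W_enc, b_enc, b_dec):
    """Encode a dense activation vector into non-negative feature activations (ReLU)."""
    return np.maximum(0.0, (h - b_dec) @ W_enc + b_enc)


def sae_decode(f, W_dec, b_dec):
    """Reconstruct the dense activation vector from feature activations."""
    return f @ W_dec + b_dec


# Hypothetical sizes: real SAEs are trained on LLM hidden states and are far larger.
rng = np.random.default_rng(0)
d_model, d_features = 16, 64

# Untrained random weights, used only to show shapes and data flow.
W_enc = rng.normal(scale=0.1, size=(d_model, d_features))
W_dec = rng.normal(scale=0.1, size=(d_features, d_model))
b_enc = np.full(d_features, -0.2)  # a negative bias pushes more features to zero
b_dec = np.zeros(d_model)

h = rng.normal(size=d_model)            # stand-in for one internal LLM activation
f = sae_encode(h, W_enc, b_enc, b_dec)  # feature activations, all >= 0
h_hat = sae_decode(f, W_dec, b_dec)     # reconstruction of h

print("active features:", int(np.count_nonzero(f)), "of", d_features)
```

In a trained SAE, the weights are fit to minimize reconstruction error under a sparsity penalty, so that only a few features are active for any given input. With the random weights above, the reconstruction `h_hat` carries no meaning.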

This content is AI-processed based on open access ArXiv data.