No Labels, No Problem: Training Visual Reasoners with Multimodal Verifiers

Reading time: 2 minutes

...

📝 Original Info

- Title: No Labels, No Problem: Training Visual Reasoners with Multimodal Verifiers

- ArXiv ID: 2512.08889

- Date: 2025-12-09

- Authors: Damiano Marsili, Georgia Gkioxari

📝 Abstract

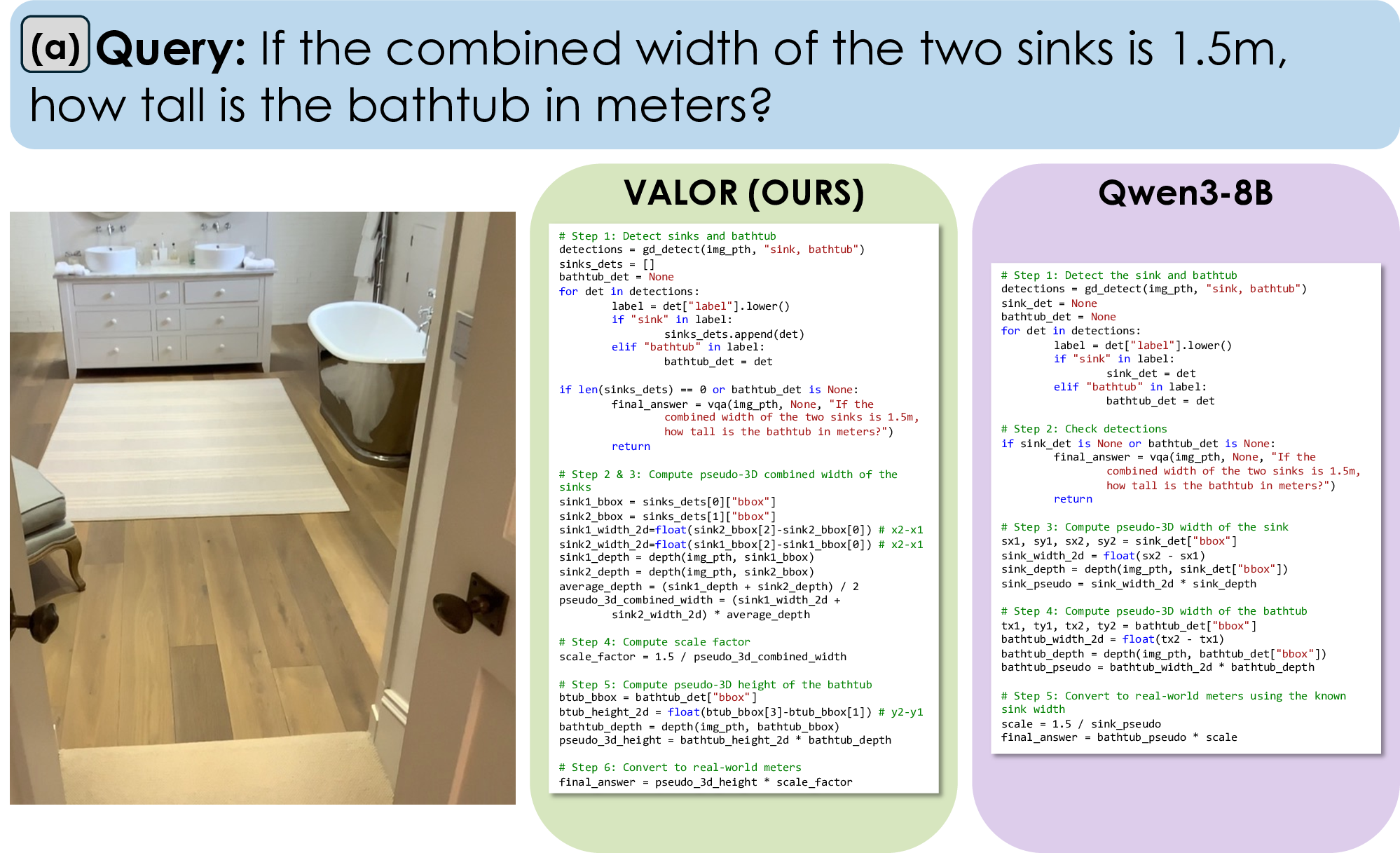

Visual reasoning is challenging, requiring both precise object grounding and an understanding of complex spatial relationships. Existing methods fall into two camps. Language-only chain-of-thought approaches demand large-scale (image, query, answer) supervision. Program-synthesis approaches use pretrained models and avoid training, but suffer from flawed logic and erroneous grounding. We propose an annotation-free training framework that improves both reasoning and grounding. Our framework uses AI-powered verifiers: an LLM verifier refines LLM reasoning via reinforcement learning, while a VLM verifier strengthens visual grounding through automated hard-negative mining, eliminating the need for ground-truth labels. This design com...
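The abstract does not give implementation details. What follows is a minimal Python sketch, built entirely on our own assumptions, of the two verifier roles it describes. The code is not the authors' method.

* A VLM verifier splits confident detector outputs into accepted positives and rejected hard negatives, without ground-truth boxes.
* An LLM verifier's accept/reject verdict becomes a scalar RL reward, in place of an answer label.

All names here are hypothetical stand-ins for a real object detector, VLM and LLM. That covers `Detection`, `mine_hard_negatives`, `verifier_rewards`, the `min_score` threshold and the toy verifiers.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels; hypothetical format


@dataclass
class Detection:
    """One candidate region from an off-the-shelf detector (hypothetical format)."""
    box: Box
    score: float  # detector confidence in [0, 1]


# Hypothetical VLM verifier: (image_id, box, query) -> True if the region matches the query.
RegionVerifier = Callable[[str, Box, str], bool]
# Hypothetical LLM verifier: reasoning trace -> True if the judge accepts it.
TraceVerifier = Callable[[str], bool]


def mine_hard_negatives(
    image_id: str,
    query: str,
    detections: List[Detection],
    verifier: RegionVerifier,
    min_score: float = 0.5,
) -> Tuple[List[Detection], List[Detection]]:
    """Split confident detections into verifier-accepted positives and rejected hard negatives.

    A hard negative here is a region the detector is confident about (score >= min_score)
    but the VLM verifier rejects. No ground-truth boxes are used anywhere.
    """
    positives: List[Detection] = []
    hard_negatives: List[Detection] = []
    for det in detections:
        if det.score < min_score:
            continue  # low-confidence detections are not "hard" cases, so skip them
        if verifier(image_id, det.box, query):
            positives.append(det)
        else:
            hard_negatives.append(det)
    return positives, hard_negatives


def verifier_rewards(traces: List[str], judge: TraceVerifier) -> List[float]:
    """Binary RL reward per sampled reasoning trace, taken from the LLM judge instead of a label."""
    return [1.0 if judge(trace) else 0.0 for trace in traces]


def toy_vlm(image_id: str, box: Box, query: str) -> bool:
    """Toy stand-in for a real VLM: accepts any box that starts in the left part of the image."""
    return box[0] < 100


def toy_llm(trace: str) -> bool:
    """Toy stand-in for a real LLM judge: accepts traces ending with a specific final answer."""
    return trace.strip().endswith("answer: 2")


if __name__ == "__main__":
    dets = [
        Detection((10, 10, 50, 50), 0.9),    # confident and accepted -> positive
        Detection((200, 10, 250, 50), 0.8),  # confident but rejected -> hard negative
        Detection((20, 20, 40, 40), 0.2),    # low confidence -> ignored
    ]
    pos, neg = mine_hard_negatives("img_0", "the mug left of the laptop", dets, toy_vlm)
    print(len(pos), len(neg))  # prints: 1 1
    print(verifier_rewards(["count mugs... answer: 2", "no final answer"], toy_llm))  # prints: [1.0, 0.0]
```

The verifiers are kept as plain callables so that a real VLM or LLM client could be swapped in without changing the mining or reward logic. This summary does not specify the paper's actual prompts, thresholds, or reinforcement-learning algorithm.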



This content is AI-processed based on open access ArXiv data.