Fed-SE: Federated Self-Evolution for Privacy-Constrained Multi-Environment LLM Agents

Reading time: 2 minutes

...

📝 Original Info

- Title: Fed-SE: Federated Self-Evolution for Privacy-Constrained Multi-Environment LLM Agents

- ArXiv ID: 2512.08870

- Date: 2025-12-09

- Authors: Xiang Chen, Yuling Shi, Qizhen Lan, Yuchao Qiu, Min Wang, Xiaodong Gu, Yanfu Yan

📝 Abstract

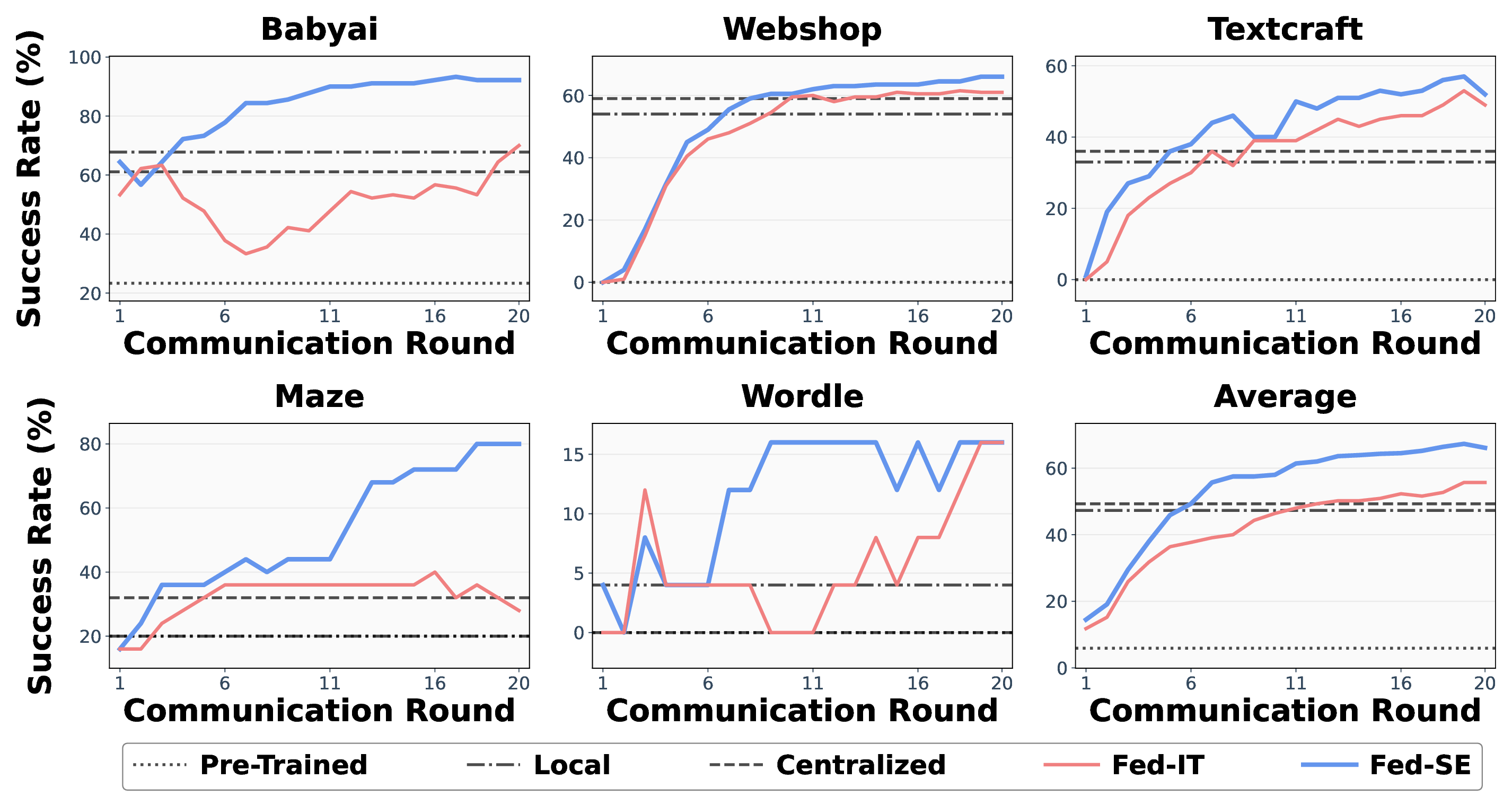

Large Language Model (LLM) agents are widely deployed in complex interactive tasks, yet privacy constraints often preclude centralized optimization and co-evolution across dynamic environments. Despite the demonstrated success of Federated Learning (FL) on static datasets, its effectiveness in open-ended, self-evolving agent systems remains largely unexplored. In such settings, applying standard FL directly is particularly challenging: heterogeneous tasks and sparse, trajectory-level reward signals cause severe gradient instability, which undermines global optimization. To bridge this gap, we propose Fed-SE, a Federated Self-Evolution framework for LLM agents that establishes a local evolution-global aggregation...
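For readers unfamiliar with the standard FL setting the abstract contrasts against, the following is a minimal sketch of size-weighted federated averaging, where each client trains locally and only parameters are sent to the server. It is a generic illustration, not the Fed-SE aggregation rule, which the truncated abstract does not specify. The names `federated_average`, `client_params`, and `client_sizes` are hypothetical.

```python
from typing import Dict, List

# Assumption: each client's model is a dict of parameter name -> flat list of floats.
Params = Dict[str, List[float]]


def federated_average(client_params: List[Params], client_sizes: List[int]) -> Params:
    """Average client parameters, weighting each client by its local sample count."""
    if not client_params or len(client_params) != len(client_sizes):
        raise ValueError("need one size per client and at least one client")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total sample count must be positive")

    averaged: Params = {}
    for name in client_params[0]:
        length = len(client_params[0][name])
        # Weighted mean of each coordinate across clients; raw data never leaves a client.
        averaged[name] = [
            sum(p[name][i] * n for p, n in zip(client_params, client_sizes)) / total
            for i in range(length)
        ]
    return averaged


# Hypothetical usage: two clients with different amounts of local data.
clients = [{"w": [1.0, 2.0]}, {"w": [3.0, 4.0]}]
sizes = [1, 3]
global_params = federated_average(clients, sizes)
```

Only parameters cross the client boundary in this sketch, which is what makes the approach compatible with the privacy constraint the abstract describes. Heterogeneous tasks can pull the averaged coordinates in conflicting directions, which is one plausible reading of the instability the abstract mentions.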

Reference

This content is AI-processed based on open access ArXiv data.