This study focuses on event detection in optical fibers, specifically classifying six events using the Phase-OTDR system. A novel approach is introduced to enhance Phase-OTDR data analysis by transforming 1D data into grayscale images through techniques such as Gramian Angular Difference Field, Gramian Angular Summation Field, and Recurrence Plot. These grayscale images are combined into a multi-channel RGB representation, enabling more robust and adaptable analysis using transfer learning models. The proposed methodology achieves high classification accuracies of 98.84% and 98.24% with the EfficientNetB0 and DenseNet121 models, respectively. A 5-fold cross-validation process confirms the reliability of these models, with test accuracy rates of 99.07% and 98.68%. Using a publicly available Phase-OTDR dataset, the study demonstrates an efficient approach to understanding optical fiber events while reducing dataset size and improving analysis efficiency. The results highlight the transformative potential of image-based analysis in interpreting complex fiber optic sensing data, offering significant advancements in the accuracy and reliability of fiber optic monitoring systems. The codes and the corresponding image-based dataset are made publicly available on GitHub to support further research: https://github.com/miralab-ai/Phase-OTDR-event-detection.

Event detection and classification involve identifying, analyzing, and categorizing patterns within event streams to provide insights into real-world occurrences. [1,2] This process finds applications across diverse fields, from emergency response and crisis management to monitoring trends and potential threats on social media platforms. [3,4,5,6] Modern sensor systems enhanced by deep learning techniques serve a wide spectrum of event detection applications. Deep learning models facilitate precise analysis in various domains: acoustic sensors enable noise source identification in urban environments, [7,8] image processing systems detect object movements and monitor traffic flow, [9,10,11] seismic sensors classify earthquakes based on magnitude and location, [12,13] and wearable devices detect medical events such as heart arrhythmias or epileptic seizures. [14,15,16] Phase-sensitive Optical Time-Domain Reflectometer (Phase-OTDR) represents a distributed sensing technology that monitors acoustic events along optical fiber. Unlike conventional OTDR systems, Phase-OTDR utilizes coherent light sources to measure phase information of backscattered lightwaves. Vibrations or disturbances cause regional changes in fiber length and refractive index, enabling spatially resolved event localization and characterization. This distributed sensing capability makes Phase-OTDR particularly valuable for real-time monitoring of large areas including pipelines, [17] railways, [18] and security systems. [19] The ability to track phase changes along an optical fiber as a function of position and time offers a wide range of event detection possibilities for Phase-OTDR. However, interpreting these phase traces is challenging due to inherent noise sources and the similar strain characteristics exhibited by the fiber for different events. Consequently, significant effort has been dedicated to accurately recognizing event types from phase traces. Historically, signal processing techniques such as wavelet transform, [20] Fourier analysis, [21] and statistical methods [22] were instrumental in this recognition process. Nevertheless, the field has evolved, with machine learning methods gaining prominence. These data-driven approaches excel at discerning complex patterns, leading to their increasing dominance and marking a significant shift in event detection methodologies.

Machine learning and deep learning have significantly advanced Phase-OTDR event detection and classification, with numerous studies exploring various models and preprocessing techniques. For instance, Cao et al. [23] utilized SVM and CNN with spatio-temporal matrix normalization, achieving 94.0% accuracy. Chen et al. [24] employed a Dendrite Net (DD) with VTTCCG, reaching 98.6% accuracy after normalization and spatio-temporal feature extraction. Gan et al. [25] applied transfer learning with VGGish and SVM, while Hu et al. [26] used AlexNet within a TSC framework, incorporating normalization and StyleGAN for data augmentation. Kamanga et al. [27] achieved 98.2% accuracy with a modified AlexNet using MFCC-DP and active learning. Li et al. [28] introduced a semi-supervised MT-ACNN-SA-BiLSTM model. Additionally, Sasi et al. [29] used LightGBM with feature selection, and Wang et al. [30] explored traditional machine learning models like SVM, RF, KNN, and NB. Recent advancements in 2025 include Duan et al. ’s [31] ISAT model, which uses a 1D CNN and a modified Transformer Encoder for high accuracy. Hu et al. [32] proposed SDENet with Gaussian noise addition and spatiotemporal maps. Li et al. [33] introduced DASFormer, a Transformer-based model for long sequence classification, achieving 99.6% accuracy. Luo et al. [34] utilized knowledge distillation with a 4-layer CNN and ResNet-34. Wang et al. [35] developed an IRMS-CNN with Contrastive Prototype Learning, and Cheng et al. [36] presented Φ-GLMAE, a Global-Local Masked Autoencoder. A comprehensive overview of these studies, including their preprocessing methods, architectures, dataset configurations, and reported accuracies, is provided in Table 5.

Despite significant progress in Phase-OTDR event classification, critical gaps persist. While some recent studies explore image-based transformations of 1D time series data, there is a notable lack of research focusing on combining multiple distinct image transformation techniques to create rich, multi-channel image representations. Existing approaches often rely on single transformation methods or basic deep learning architectures, overlooking the potential for integrating diverse feature perspectives. Furthermore, a comprehensive comparative analysis of how different image transformation methods, especially when combined, impact classification performance within the Phase-OTDR domain remains underexplored. Crucially, the potential for these advanced image-based transformations to enhance data management efficiency by significantly reducing the raw dataset size has not been thoroughly investigated as a primary benefit. Addressing these gaps is essential for developing more robust, efficient, and highly accurate Phase-OTDR event detection systems.

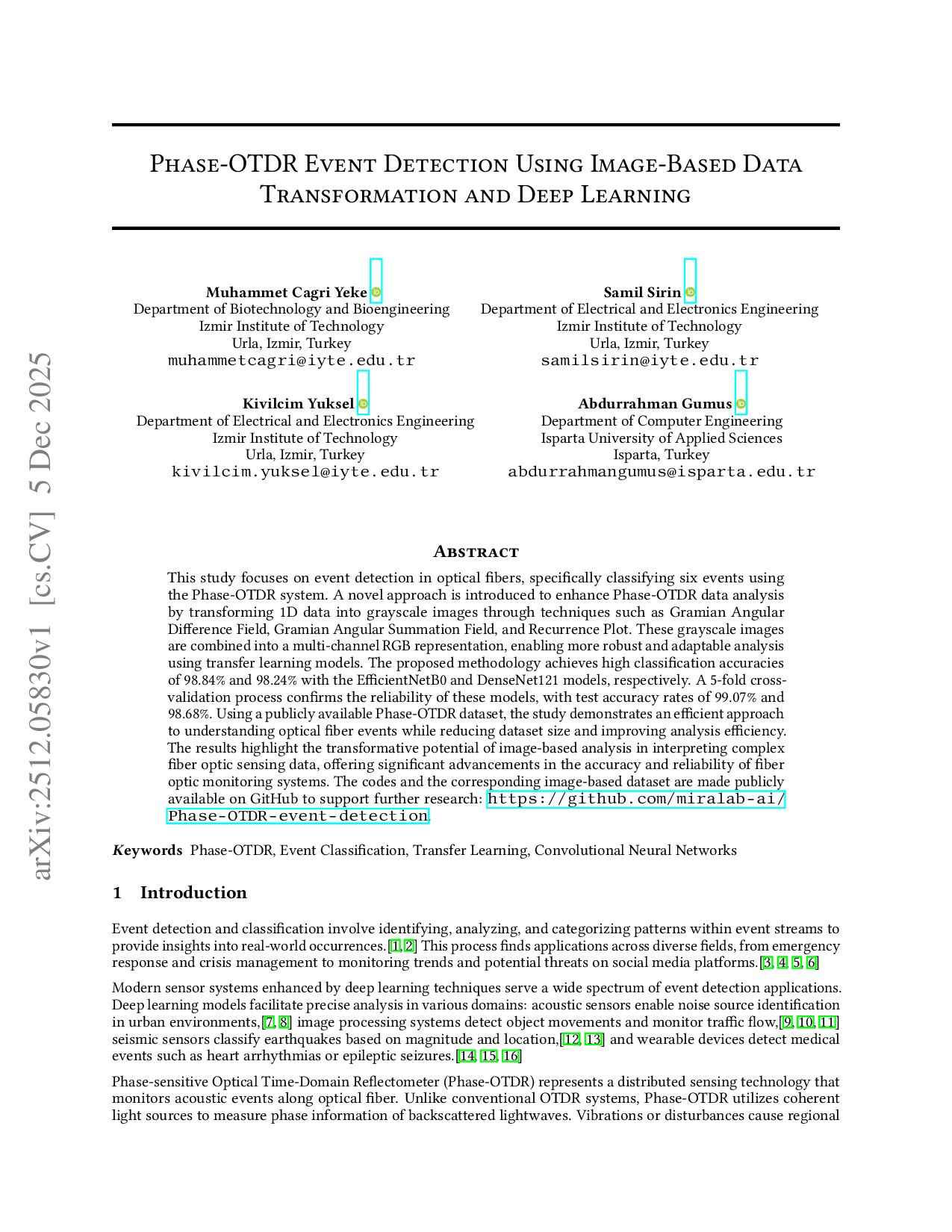

This study addresses the identified literature gaps by introducing a novel image-based transformation approach for enhanced Phase-OTDR data analysis. Our motivation stems from the need to overcome the limitations of traditional 1D signal processing and to leverage the capabilities of powerful pre-trained deep learning models fully. We propose a methodology that uniquely employs Gramian Angular Difference Field (GADF), Gramian Angular Summation Field (GASF), and Recurrence Plots (RP) to create multi-channel RGB image representations from 1D time series data. This innovative transformation facilitates advanced feature extraction, improved event discrimination capabilities, and offers the potential for more efficient data management. Figure 1 illustrates the overall framework of transforming 1D Phase-OTDR data into images and employing deep learning models for event classification.

This study presents a framework for event detection in optical fibers, addressing the limitations of traditional Phase-OTDR data analysis. Our primary contributions are multifaceted: • We introduce a methodology for converting one-dimensional Phase-OTDR data into two-dimensional grayscale images, forming the basis for an image-based analysis approach. This transformation enhances the visual representation of events, facilitating more effective feature extraction and pattern recognition. • A color allocation process is developed to integrate multiple grayscale images into a single multi-channel RGB representation. This approach enriches the data’s information density, providing a more comprehensive input for deep learning models. • The proposed image transformation and integration significantly reduce the dataset size while preserving critical information. This contributes to more efficient data handling and processing, which is crucial for real-time applications. • The research explores the efficacy of transfer learning for Phase-OTDR event classification using established CNN architectures, including 8 transfer learning models. This involves a comparative analysis of these different models, alongside an evaluation of fine-tuning versus feature extraction strategies, demonstrating a robust and adaptable approach.

This paper presents a novel image-based analysis approach for Phase-OTDR event classification using multi-channel data transformation and deep learning. The remainder is organized as follows: Section 2 describes the methodology including data collection, dataset details, image transformation techniques, and deep learning models. Section 3 presents results and performance analysis. Section 4 concludes the study.

This study presents a comprehensive methodology for Phase-OTDR event classification using image-based data transformation and transfer learning approaches. The methodology utilizes the publicly available dataset from Cao et al. [23] and applies novel image transformation techniques to convert 1D time series data into multi-channel representations suitable for deep learning analysis.

This study utilizes the Phase-OTDR dataset collected by Cao et al. [23] using their experimental setup. The original measurement system, as described by Cao et al., is illustrated in Figure 2. Light generated by the laser is amplified in an Erbium-Doped Fiber Amplifier (EDFA) and filtered to eliminate amplified spontaneous emission (ASE) noise. An Acousto Optic Modulator (AOM) driven by a function generator converts continuous light to 400 ns pulses with 40 m spatial resolution and 12.5 or 8 kHz repetition frequency. The sensing fiber comprises three sections: a 5-10 km leading fiber kept isolated from vibration in a soundproof box, and two 50 m armored sections where the second section is exposed to vibrational events. Rayleigh backscattered light is guided by the circulator to the photodetector for intensity detection and data acquisition.

The dataset comprises 15,612 samples collected by sending successive 10,000 pulses and detecting backscattered light intensity from the last 120 m fiber section. [23] Each measurement contains 12 intensity values of Rayleigh backscattered signal from 12 equidistant spatial points on the 120 m fiber section, forming a 12×10,000 intensity matrix per sample. During each measurement, one of six distinct disturbance events was applied on the second fiber section: background, digging, knocking, watering, shaking, and walking. Figure 3 presents sample measurement data for each event type. The dataset was originally divided into training and test sets with an 8:2 ratio. Event measurements are stored in .mat format files corresponding to each event type. Table 1 presents the distribution of samples across different event categories, showing the sample counts and corresponding labels used for classification. [37,38] Three mathematical transformation techniques are employed: Gramian Angular Difference Field (GADF), Gramian Angular Summation Field (GASF), and Recurrence Plot (RP). [39,40,41,42] This approach transforms 1D Phase-OTDR data into visually interpretable images using mathematical transformations, including GADF, GASF, and RP. This conversion simplifies the analysis of intricate 1D data by providing a visual representation of monitored events. Furthermore, by employing these three distinct methods, a multi-channel RGB image is constructed, where each channel corresponds to a different transformation. This multi-channel representation facilitates a comprehensive analysis by integrating diverse feature perspectives. Meaningful features are then extracted from these RGB image data using transfer learning model architectures, which enhances the classification accuracy of fiber optic sensor data. This image-based analysis is a key aspect of the methodology, designed to improve Phase-OTDR data analysis capabilities.

Each technique captures distinct temporal characteristics: GADF emphasizes temporal variations and differences between data points, GASF highlights cumulative patterns and temporal correlations, while RP reveals recurrent behaviors and periodic structures in the time series. These complementary representations provide comprehensive feature extraction from different mathematical perspectives. The transformation process converts data from 12 spatial points into individual 500×500 pixel images using each technique. These images are organized into a 3×4 grid format, creating 1500×2000 pixel grayscale representations. Each technique’s output is allocated to specific RGB channels (GADF→Red, GASF→Green, RP→Blue), producing multi-channel color images. Final images are downsampled to 224×224 pixels for compatibility with pre-trained deep learning architectures, as illustrated in Figure 4.

The Gramian Angular Field (GAF) is a powerful technique for analyzing time series data by converting it into a visual format that facilitates the exploration of temporal relationships. [43] This transformation involves representing the data in polar coordinates, where angular information encodes the relationships between data points, and radial values represent temporal data. Three key components of GAF are the Gram Matrix, GASF Matrix, and GADF Matrix.

The Gram Matrix, also known as the Gramian Matrix, is a fundamental mathematical construct in GAF. It is a matrix representation of the inner products of the data vectors. If we have a dataset with vectors, x 1 , x 2 , . . . , x n , the Gram Matrix G is constructed as follows:

Here, X T represents the transpose of the data matrix X. The Gram Matrix G summarizes the pairwise inner products between data points and serves as a crucial step in the GAF transformation.

GASF Matrix is derived from the Gram Matrix. It captures the cosine of the summation of angular values between data points. Each element of the GASF Matrix corresponds to a pair of data points and is calculated as:

where Θ i and Θ j are the angular values associated with two specific data points. The GASF Matrix emphasizes cumulative patterns and relationships between data points, providing insights into how they collectively evolve over time.

The GADF Matrix is another component of the GAF transformation. It is also derived from the Gram Matrix and calculates the cosine of the difference between angular values of data points. Each element of the GADF Matrix is calculated as:

The GADF Matrix highlights variations and deviations in temporal relationships between data points, shedding light on how data points differ from each other. Visual examples of GASF and GADF transformations for different sinusoidal signals are presented in Figure 5.

Recurrence Plot (RP) introduced by Eckmann and his colleagues in 1987 for visualizing and analyzing recurrent behaviors and structures in dynamic systems and time series data. [44] It has found widespread use, particularly in understanding complex systems and discovering specific behaviors. The fundamental mathematical basis of the RP involves measuring the similarity or proximity between two time points. If the distance between two points falls below a specified threshold value, the two points are considered close to each other. Mathematically, the distance d(i, j) between two time points i and j is calculated as follows:

Here, x(i) and x(j) represent the values of time series data at indices i and j, respectively.

This process is repeated for all data points in the time series, resulting in a matrix. This matrix forms the basis of the RP. The i-th row and j-th column of the matrix represent the distance between time points i and j. If this distance is less than a specified threshold (ϵ), the corresponding element in the matrix is set to 1; otherwise, it is set to 0. The mathematical representation of the RP can be expressed as:

Here, R i,j is an element of the matrix that indicates the similarity between the i-th and j-th time points. ϵ serves as a threshold, determining how close two points in time need to be. This matrix constitutes the RP.

CNNs are computational models designed for learning from image-based data. [45,46] These networks comprise two stages: feature extraction and classification. Convolutional and pooling layers handle feature extraction. Convolutional layers perform convolution operations using filters to process input data:

MaxPooling(x) = max i,j∈R

where x n,i represents the ith feature map, W n-1,k and b n,k are weights and biases of the kth filter, and y n-1,m is the mth feature map from the preceding layer. Pooling layers reduce feature map dimensions while preserving essential information. Fully connected layers use ReLU activation for feature processing and softmax for multi-class classification.

[47]

Transfer learning transfers knowledge acquired in one domain to other domains, reducing resource-intensive requirements such as large datasets and high computational power typically needed for training deep learning models. [48,49] It has wide applications across computer vision, natural language processing, and healthcare. [50,51,52] All pretrained models are initially loaded with ImageNet weights, and their final classification layers are replaced with 6 neurons corresponding to Phase-OTDR event categories.

Two implementation strategies are evaluated for layer configuration. When setting layers as “Trainable: True, " these layers retain their initial weights from pre-training but are fine-tuned using the new dataset. This increases the number of trainable parameters, leading to longer learning process and higher computational load. It is advantageous when the new dataset is relatively small and closely related to the original pre-training dataset. Conversely, selecting “Trainable: False” freezes these layers as feature extractors, preventing weight updates during training. This significantly reduces trainable parameters and expedites training but potentially constrains adaptability. This option is beneficial when the new dataset is significantly different or when the original dataset is vast compared to the new one.

The transfer learning architectures evaluated in this study are summarized in Table 2. Simple and effective, but requires high memory and computational power.

Fall Detection [54]. POF Weight Detection [55]. Optical Measurement Technology [56].

Deeper version of VGG-16 with 19 layers and approximately 144M parameters [53].

Higher accuracy but more computationally expensive.

FBG Tactile Recognition [57]. Fiber Performance Prediction [58].

Image-based Classification [59].

ResNet50 50-layer network with residual blocks and skip connections to address vanishing gradient problem. About 25.6 million parameters [60].

Enables very deep networks but can be overkill for small datasets.

Broken Wire Identification [61]. Weld Defect Recognition [62].

Vibration Recognition [63].

Uses parallel convolutional layers with varying kernel sizes for feature extraction. Approximately 23.5M parameters [64].

Efficient and accurate, but complex to implement.

Perimeter Security Detection [65].

Pathology Classification [66]. Fiber Security Detection [67].

Combines inception modules with residual connections. Approximately 55.8M parameters [68].

High accuracy but computationally expensive.

Photonic DNN Acceleration [69]. CLS Disease Detection [70]. Modulation Format Recognition [71].

Lightweight architecture with depthwise separable convolutions. Approximately 3.4M parameters [72].

Efficient for mobile and embedded devices, but less accurate for complex tasks.

Feature Extraction [73]. POF Motion Recognition [74]. Fiber Defect Detection [75].

Balances network depth, width, and resolution using compound scaling. Approximately 5.3M parameters [76].

Highly efficient but requires careful tuning.

Wavefront Detection [77]. Deep Learning Regression [78].

Wavefront Sensing [79].

Connects each layer to every other layer to improve feature reuse. Approximately 8M parameters [80].

Efficient and reduces overfitting, but memory intensive.

Vibration Pattern Recognition [81]. DAS Event Recognition [82]. Intrusion Signal Recognition [83].

Model validation was performed using two techniques: holdout validation and 5-fold cross-validation. Holdout validation divided the dataset into training (70%), test (15%), and validation (15%) subsets. In contrast, 5-fold crossvalidation split the data into five folds, with each fold serving as the test set once while the remaining four folds were used for training. This approach provided a more robust evaluation by averaging performance metrics across all folds. Performance metrics were selected to address the challenges of multi-class classification with potential class imbalances. Accuracy measured overall correctness, while sensitivity (recall) assessed the true positive detection rate. Precision quantified the reliability of positive predictions, and the F1 Score provided a harmonic mean of precision and recall. These validation techniques and performance metrics are illustrated in Figure 6, which provides insights into their application and importance for Phase-OTDR event classification.

This study investigates the effectiveness of an image-based deep learning approach for event detection in Phase-OTDR data. The inherent challenges in interpreting phase traces due to noise and similar strain characteristics for different events have motivated a shift towards image-based deep learning. Our approach transforms 1D Phase-OTDR data into multi-channel RGB images using mathematical transformations such as GADF, GASF, and RP, enabling the extraction of meaningful features via transfer learning models. This innovative methodology aims to significantly advance Phase-OTDR data analysis.

The training and validation outcomes for three transfer learning models-DenseNet121, EfficientNetB0, and Mo-bileNet-are presented in Figure 7. These results were obtained using holdout validation under two distinct configurations: with trainable layers set to “True” (fine-tuning) and “False” (feature extraction). As depicted in Figure 7.a, when trainable layers were enabled, all models demonstrated rapid learning and achieved high peak accuracies. For instance, DenseNet121’s training accuracy rapidly increased from an initial 58.94% to a peak of 99.54%, with validation accuracy similarly rising from 79.96% to 98.72%. EfficientNetB0 and MobileNet exhibited comparable performance trajectories, reaching peak training accuracies of 99.61% and 99.58%, and validation accuracies of 99.31% and 98.68%, respectively. The swift convergence from relatively lower initial accuracies to near-perfect peak values highlights the effectiveness of fine-tuning pre-trained models on our image-transformed Phase-OTDR dataset. This rapid improvement indicates that the models quickly adapted their pre-learned features to the specific patterns of our event data, demonstrating strong learning capabilities. Conversely, Figure 7.b illustrates the performance when trainable layers were frozen. While still achieving respectable accuracies, a noticeable reduction in peak performance and a slower learning trajectory were observed across all models. DenseNet121, for example, reached a peak training accuracy of 93.16% and validation accuracy of 94.48%. EfficientNetB0 achieved 96.58% training and 95.59% validation accuracy, while MobileNet reached 97.71% training and 88.91% validation accuracy. The difference in performance between the “True” and “False” configurations underscores the critical role of fine-tuning in adapting generic pre-trained features to the nuanced characteristics of Phase-OTDR event images, leading to superior classification performance. Figure 8 presents the confusion matrices for DenseNet121, EfficientNetB0, and MobileNet, illustrating their classification performance on the test dataset under holdout validation. A clear visual distinction is observed, with models operating in the fine-tuning mode (“Trainable: True”) demonstrating superior event classification compared to the feature extraction mode (“Trainable: False”). This indicates that allowing the models to adapt their pre-trained weights to the specific characteristics of Phase-OTDR event images significantly improves their ability to accurately distinguish between different events. The enhanced performance in fine-tuning mode is crucial for real-world Phase-OTDR applications, as it translates directly to more reliable detection of critical events like digging or walking, minimizing false alarms and missed detections. Table 3 provides a comprehensive overview of performance metrics for various deep learning models under both “Trainable: True” and “Trainable: False” configurations using holdout validation. When trainable parameters were enabled, models such as DenseNet121, EfficientNetB0, InceptionResNetV2, InceptionV3, MobileNet, and ResNet50 consistently achieved exceptional classification accuracies exceeding 97%, with EfficientNetB0 reaching over 99%. This high performance, particularly with fine-tuning, highlights the models’ strong adaptability to the transformed Phase-OTDR data. In contrast, freezing the trainable parameters (“Trainable: False”) resulted in a noticeable decline in performance across most models. Notably, InceptionResNetV2 experienced a substantial reduction in accuracy, while InceptionV3, MobileNet, and VGG19 also showed significant degradation. This performance disparity underscores that fine-tuning is essential for optimizing model performance on this specific dataset, allowing the models to learn and adapt features relevant to Phase-OTDR event detection rather than relying solely on generic pre-trained features.

The ability to achieve high accuracy is critical for practical applications, where precise and reliable event detection directly impacts system effectiveness and operational safety. The t-SNE visualizations in Figure 9 illustrate the feature space separation generated by DenseNet121, EfficientNetB0, and MobileNet for six distinct event classes: background, digging, knocking, watering, shaking, and walking. These visualizations qualitatively confirm the quantitative results by showing clear clustering of different event types. Specifically, the “Trainable: True” configuration consistently yields more distinct and well-separated clusters compared to the “Trainable: False” configuration. This improved separation in the feature space directly correlates with the enhanced classification performance observed in fine-tuning mode, indicating that the models learn more discriminative features when their layers are allowed to adapt. The ability to visually distinguish between event classes reinforces the effectiveness of our image-based approach and the fine-tuning strategy in capturing the underlying patterns of Phase-OTDR events. The 5-fold cross-validation analysis, depicted in Figure 10 and detailed in Table 4, provides a robust evaluation of model stability and reliability. Figure 10 illustrates the training and validation curves for DenseNet121, EfficientNetB0, and VGG16, highlighting consistent performance across different folds. The solid lines represent the mean performance metrics, while shaded areas indicate variations. Table 4 shows that in the “Trainable: True” configuration, DenseNet121, EfficientNetB0, and VGG16 achieved mean classification accuracies of approximately 98.68%, 99.07%, and 96.86%, respectively. These high mean accuracies, coupled with relatively low standard deviation values (e.g., 0.0012 for DenseNet121 accuracy), demonstrate the models’ exceptional stability and generalizability across different data subsets. This consistency across folds implies that the models are not overfitting to specific data partitions and can reliably perform on unseen data, which is a crucial aspect for real-world deployment of Phase-OTDR event detection systems. Conversely, the “Trainable: False” configuration resulted in slightly lower mean accuracies and marginally higher standard deviations, further emphasizing the benefits of fine-tuning for robust and consistent performance. Figure 11 further supports these findings through comparative boxplots, visually representing the variability and central tendencies of key performance metrics across both configurations. Our study introduces a novel image-based methodology for Phase-OTDR data analysis for event detection, leveraging multi-channel RGB images generated through GADF, GASF, and RP transformations. The fine-tuning approach consistently yielded superior results, with EfficientNetB0 achieving approximately 98.8% accuracy in holdout validation and 99.1% in 5-fold cross-validation, and DenseNet121 achieving 98.2% and 98.7% respectively. These results are highly competitive when compared to existing deep learning models specifically for Phase-OTDR event detection, as summarized in Table 5. This comprehensive table details various preprocessing methods, model architectures, dataset configurations, and reported accuracies from the literature. Our approach, represented by “Yeke et al. ( 2025)” in the table, demonstrates comparable or superior performance to many state-of-the-art methods, particularly considering the comprehensive image transformation framework and the use of diverse transfer learning models. For instance, our holdout accuracy of 98.84% and 5-fold CV accuracy of 99.07% for EfficientNetB0 are among the highest reported, showcasing the effectiveness of converting 1D Phase-OTDR data into image format for enhanced classification. This underscores the consistency and generalizability of our model, marking a substantial advancement in Phase-OTDR data analysis and holding significant potential across diverse application domains, including critical infrastructure monitoring and security.

This study successfully introduced a novel image-based classification approach that significantly advances Phase-OTDR event detection. Unlike traditional 1D signal processing or basic deep learning architectures, our methodology uniquely transforms 1D temporal traces into multi-channel RGB images using Gramian Angular Difference Field (GADF), Gramian Angular Summation Field (GASF), and Recurrence Plot (RP) techniques. This innovative transformation not only enhances the visual interpretability of complex Phase-OTDR data but also drastically improves data management efficiency by reducing the dataset size from 2.03 GB to 180 MB. Leveraging transfer learning with fine-tuning, our EfficientNetB0 and DenseNet121 models achieved outstanding classification accuracies of approximately 98.8% and 98.2% in holdout validation, and 99.1% and 98.7% in 5-fold cross-validation, respectively. This superior performance, particularly when compared to models used as mere feature extractors, underscores the critical role of adapting pre-trained networks to the nuanced characteristics of Phase-OTDR event images, thereby enabling more precise and reliable event classification. While this image-based approach marks a substantial advancement, a key limitation is its current reliance on a single, controlled dataset. Future research will focus on validating the generalizability of this methodology across diverse and larger Phase-OTDR datasets, exploring real-time processing implementations, and optimizing image transformation parameters for enhanced robustness in varied operational environments. This research, therefore, presents a compelling argument for the transformative impact of image-based analysis on Phase-OTDR data, paving the way for more accurate and efficient fiber optic monitoring systems in critical infrastructure and security applications.

Phase-OTDR Event Detection Using Image-Based Data Transformation and Deep Learning

This content is AI-processed based on open access ArXiv data.