STRIDE: A Systematic Framework for Selecting AI Modalities -- Agentic AI, AI Assistants, or LLM Calls

📝 Original Info

- Title: STRIDE: A Systematic Framework for Selecting AI Modalities – Agentic AI, AI Assistants, or LLM Calls

- ArXiv ID: 2512.02228

- Date: 2025-12-01

- Authors: Shubhi Asthana, Bing Zhang, Chad DeLuca, Ruchi Mahindru, Hima Patel

📝 Abstract

The rapid shift from stateless large language models (LLMs) to autonomous, goaldriven agents raises a central question: When is agentic AI truly necessary? While agents enable multi-step reasoning, persistent memory, and tool orchestration, deploying them indiscriminately leads to higher cost, complexity, and risk. We present STRIDE (Systematic Task Reasoning Intelligence Deployment Evaluator), a framework that provides principled recommendations for selecting between three modalities: (i) direct LLM calls, (ii) guided AI assistants, and (iii) fully autonomous agentic AI. STRIDE integrates structured task decomposition, dynamism attribution, and self-reflection requirement analysis to produce an Agentic Suitability Score, ensuring that full agentic autonomy is reserved for tasks with inherent dynamism or evolving context. Evaluated across 30 real-world tasks spanning SRE, compliance, and enterprise automation, STRIDE achieved 92% accuracy in modality selection, reduced unnecessary agent deployments by 45%, and cut resource costs by 37%. Expert validation over six months in SRE and compliance domains confirmed its practical utility, with domain specialists agreeing that STRIDE effectively distinguishes between tasks requiring simple LLM calls, guided assistants, or full agentic autonomy. This work reframes agent adoption as a necessity-driven design decision, ensuring autonomy is applied only when its benefits justify the costs.📄 Full Content

We distinguish three modalities: (i) LLM calls, providing single-turn inference without memory or tools, which is ideal for straightforward query-response scenarios; (ii) AI assistants, which handle guided multi-step workflows with short-term context and limited tool access that is suitable for structured processes requiring human oversight; and (iii) Agentic AI, which autonomously decomposes tasks, orchestrates tools, and adapts with minimal oversight, which is necessary for complex, dynamic environments requiring independent decision-making. Table 1 contrasts these modalities.

Current practice often overuses agentic AI, deploying autonomous systems even when simpler modalities would suffice. This tendency leads to unnecessary cost, complexity, and risk, particularly in enterprise contexts where reliability and governance are critical. A principled framework for deciding when agents are truly necessary has been missing, leaving design-time choices largely intuition-driven rather than evidence-based. While agentic AI unlocks transformative value in domains like SRE, compliance verification, and complex automation, deploying it indiscriminately carries risks:

• Overengineering: using agents for simple queries wastes compute and developer effort.

• Security & compliance risks: uncontrolled tool use and API calls may leak sensitive data.

• System instability: recursive loops and unbounded workflows degrade reliability.

We propose STRIDE, a novel framework for necessity assessment at design time: systematically deciding whether a given task should be solved with an LLM call, an AI assistant, or agentic AI. STRIDE analyzes task descriptions across four integrated analytical dimensions:

• Structured Task Decomposition: Tasks are decomposed into a directed acyclic graph (DAG) of subtasks, systematically breaking down objectives to reveal inherent complexity, interdependencies, and sequential reasoning requirements that distinguish simple queries from multi-step challenges. • Dynamic Reasoning and Tool-Interaction Scoring: STRIDE quantifies reasoning depth together with tool dependencies, external data access, and API requirements, identifying when sophisticated orchestration beyond basic language processing is necessary. • Dynamism Attribution Analysis: Using a True Dynamism Score (TDS), the framework attributes variability to models, tools, or workflow sources, clarifying when persistent memory and adaptive decision-making are required. • Self-Reflection Requirement Assessment: Assesses need for error recovery and metacognition, and integrates all factors into an Agentic Suitability Score (ASS) that guides the choice of LLM call, assistant, or agent.

This unified methodology ensures that AI solution selection is not an ad-hoc judgment call, but a structured, repeatable process that balances capability requirements with efficiency, cost, and risk management. Just as scaling laws have guided model development by quantifying performance as a function of parameters and data, we argue that analogous principles are needed for environmental and task scaling. Not every task requires autonomy: simple queries map to LLM calls, structured processes to guided assistants, and only dynamic, evolving workflows demand full agentic AI. STRIDE introduces such a structured scaling perspective for modality selection.

Strategic Integration and Impact: STRIDE acts as a “shift-left” decision tool-i.e., it moves critical choices from deployment time to the design phase-embedding modality selection into early workflows. This prevents over-engineering, avoids under-provisioning, and provides defensible criteria for balancing capability, efficiency, computational cost, and risk.

• We introduce STRIDE, the first design-time framework for AI modality selection, shifting decisions left in the pipeline. • We define a novel quantitative Agentic Suitability Score with dynamism attribution, balancing autonomy benefits against cost and risk. • We evaluate STRIDE on 30 real-world tasks across SRE Jha et al. [2025], compliance, and enterprise automation, demonstrating reduced agentic over-deployment by 45% while improving expert alignment by 27%.

Beyond efficiency, this framing directly supports responsible AI deployment. By preventing overengineering, STRIDE reduces unnecessary surface area for errors, governance failures, and hidden costs, while ensuring that truly complex tasks receive the level of autonomy they demand.

Recent advances have expanded AI from simple LLM calls to guided assistants and adaptive agentic systems. While assistants follow structured workflows, agents plan and make inference-time decisions in dynamic environments. This shift has driven research into task complexity, reasoning depth, and self-reflection, but few works address the design-time question of when agents are truly needed. [2025]; and tool-calling studies quantify volatility from nested or parallel use Masterman [2024], factors we incorporate in the True Dynamism Score. Reflection has been explored in ARTIST Plaat et al. [2025] and MTPO Wu et al. [2025]. We instead treat reflection as a necessity criterion rather than a performance add-on.

Industry and patents. Frameworks such as LlamaIndex, Google ADK, and CrewAI LlamaIndex [2025] enable modular workflows, while patents from Anthropic and OpenAI Zhang et al. [2024], AFP [2025] describe autonomous travel and compliance. STRIDE differs by focusing on design-time necessity assessment, embedding explainability and risk-awareness into early choices.

While prior work evaluates agent capabilities post-deployment, no framework automates modality selection at design time. STRIDE fills this gap with task complexity scoring, variability attribution, drift monitoring, and persona-specific recommendations, uniquely addressing the question of whether agents are needed at all and transforming solution selection into a structured, evidence-based discipline.

In this section, we present our end-to-end framework, STRIDE (Systematic Task Reasoning Intelligence Deployment Evaluator), for assessing whether a task requires the deployment of agentic AI, an AI assistant, or a stateless LLM call. STRIDE systematically evaluates task complexity, reasoning depth, tool dependencies, dynamism of task, and self-reflection requirements to provide a quantitative recommendation. Figure 1 illustrates the workflow

STRIDE analyzes task descriptions, inputs/outputs, and tool dependencies to recommend the appropriate AI modality. This process comprises producing an Agentic Suitability Score (ASS) for each subtask. This score is then aggregated to guide the final modality recommendation:

• Task Decomposition: Breaks tasks into a DAG of subtasks to expose dependencies.

• Reasoning & Tool Scoring: Quantifies reasoning depth, tool reliance, and API orchestration requirements. • Dynamism Analysis: Attributes variability across model, tool, and workflow sources using a True Dynamism Score (TDS) to determine whether adaptive agentic reasoning is needed. • Self-Reflection Assessment: Detects when iterative correction is required and integrates all factors into an Agentic Suitability Score (ASS) to give final recommendation.

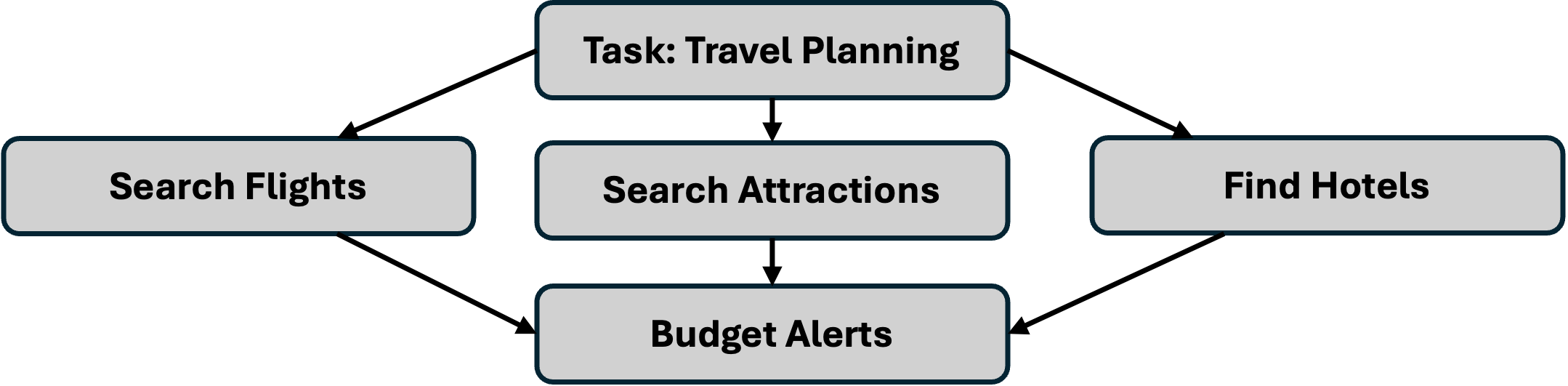

In this stage, STRIDE transforms free-form task descriptions into structured, actionable subtasks using a fine-tuned LLM with specialized prompting. The system identifies key action verbs (like “search,” “validate,” “analyze”) and target nouns (such as “flights,” “budget,” “data”) to create meaningful work units. To illustrate with a practical example, if the initial task is “Plan a 5-day travel itinerary”, the Task Decomposition phase would generate subtasks like “Search Flights”, “Find Hotels”, “Budget Planning”, and “Activity Research”.

The system automatically discovers relationships between subtasks through 1) Temporal Analysis:

Recognizing sequence requirements (“search flights before booking hotels”), 2) Data Flow Tracking: Identifying when one subtask’s output feeds into another (“Search Flights” results inform “Budget Alerts”), and 3) Semantic Role Labeling: Mapping precise input/output relationships.

STRIDE creates a directed acyclic graph (DAG) where each subtask node contains, 1) Historical Patterns: “Search Flights” appears as the starting point in 85% of travel planning tasks, 2) Tool Recommendations: Proven integrations for similar subtasks, and 3) Performance Insights: Success rates and optimization guidance from past executions. By converting ambiguous requests into precise, interconnected subtasks, STRIDE establishes the foundation for intelligent automation decisions. This structured approach ensures no critical dependencies are missed while enabling parallel execution where possible. Let T = {s 1 , s 2 , . . . , s n } represent the extracted subtasks, organized in graph G = (T, E) where edges E capture both ordering constraints and data dependencies between tasks.

For each subtask s i , STRIDE computes an Agentic Suitability Score (ASS) that objectively measures whether the subtask benefits from autonomous agent capabilities:

where:

• R(s) = Reasoning depth (0 = Shallow; simple lookup or direct response, 1 = Medium; requires comparison or basic inference, 2 = Deep; multi-step analysis or complex decisionmaking),

• T (s) = tool need (0 = None; no external tools required, 1 = Single; single tool integration, 2 = Multiple; multiple tool orchestration needed), • S(s) = state/memory requirement (0 = None; stateless operation, 1 = Ephemeral; single session, 2 = Persistent;), • ρ(s) = Risk Score (compliance violations, computational Overhead, infinite loop potential).

The weighting system (w r , w t , w s , w ρ ) adapts to different task domains: Reasoning-Heavy Tasks: w r ) prioritizes complex multi-step tasks (e.g., w r = 0.4 for itinerary planning) Tool-Intensive Workflows: (w t ) emphasizing tasks requiring multiple tools (e.g., w t = 0.3 for API-heavy workflows) Context-Dependent Operations: (w s ) accounting for persistent context needs (e.g., w s = 0.2 for multi-turn interactions) Risk-Sensitive Applications: (w ρ ), penalizing high-risk operations (e.g., w ρ = 0.1 for compliance tasks) STRIDE continuously refines these weights through grid search optimization on labeled historical task data, then refines via reinforcement learning from deployment outcomes and expert feedback integration for domain-specific calibration. This scoring mechanism prevents over-engineering simple tasks with complex agentic AI solutions, while ensuring that sophisticated problems receive appropriate autonomous capabilities. The result is precise resource allocation and optimal performance across diverse task types.

Variability alone does not justify implementing AI agents. For instance, a task like “Generate a random greeting message” may produce different outputs each time due to model stochasticity (model-induced variability), but it can be handled effectively by a stateless LLM with temperature adjustments-no agentic autonomy is required. STRIDE distinguishes:

• Model-induced variability, stems from AI model limitations, including prompt ambiguity (unclear prompts causing inconsistent outputs) and stochastic randomness (probabilistic models producing different results from identical inputs). This variability typically resolves through improved prompt engineering, temperature controls, or deterministic sampling rather than requiring agentic capabilities. • Tool-induced variability, arises from external dependencies, including API volatility (changing response formats, rate limits, downtime) and dynamic tool responses (varying data based on real-time conditions). These challenges typically require robust error handling, retry mechanisms, and adaptive response parsing rather than autonomous agent decision-making. • Workflow-induced variability, involves systemic execution complexity, including conditional branching (different inputs triggering varied decision trees) and environmental changes (system load, user context, data availability altering optimal paths). This category most strongly indicates agentic solution needs, as it requires dynamic decision-making and adaptive planning that benefit from autonomous reasoning capabilities.

By distinguishing sources of variability, STRIDE avoids over-engineering and activates agentic AI only when autonomous reasoning materially improves task outcomes.

The True Dynamism Score (TDS) isolates workflow-driven variability:

where W (s) is workflow variability, V (s) tool volatility, and M (s) model instability. A high TDS implies that autonomy and adaptivity are required.

Self-reflection is required when subtasks involve mid-execution decision points or validation of nondeterministic tools.

Mid-execution decision points occur when workflows cannot be fully predetermined and require dynamic evaluation during execution. AI Agents implement procedural mechanisms to incorporate tool responses mid-process, while Agentic AI introduces recursive task reallocation and cross-agent messaging for emergent decision-making Sapkota et al. [2025]. These situations arise when initial Compute W (s), V (s), M (s) and derive TDS(s) 5:

Evaluate C(s), N (s), V (s) to derive SR(s) 6: end for 7: Aggregate features into task profile x T 8: Return ŷ = arg max m f (x T ; K) conditions change unexpectedly, multi-step processes reveal information influencing subsequent actions, or quality checkpoints require evaluating whether intermediate outputs meet success criteria. The Reflexion framework demonstrates how agents reflect on task feedback and maintain reflective text in episodic memory to improve subsequent decision-making Shinn et al. [2023], with studies showing significant problem-solving performance improvements (p < 0.001) Renze and Guven [2024].

Validation of nondeterministic tools becomes critical when working with external systems producing variable outputs. LLM-powered systems present challenges where outputs are unpredictable, requiring custom validation frameworks. This includes API responses with different data structures, LLMgenerated content requiring accuracy evaluation, and web scraping tools exhibiting behavior changes due to evolving website structures. Neural network instability can lead to disparate results, requiring rigorous validation through adversarial robustness testing.

Without self-reflection, agents risk propagating errors, making incorrect assumptions about tool outputs, or failing to adapt when strategies prove insufficient. Self-reflection enables task coherence and reliability in dynamic environments. STRIDE encodes this as a decision rule:

where C(s) = conditional branches, N (s) = nondeterministic tools, V (s) = mid-execution validation, and θ = dynamism threshold. If SR(s) = 1, reflection hooks (e.g., error recovery, re-planning, ReAct) are triggered.

We evaluated STRIDE across 30 real-world tasks spanning SRE, enterprise automation, legal compliance, and customer support. The objective was to test whether STRIDE reliably distinguishes between LLM calls, assistants, and agents, minimizing over-engineering while ensuring accurate, cost-efficient design-time decisions. While modest in size, our task set emphasizes depth over breadth, demonstrating STRIDE’s value in real-world settings. Across all 30 tasks, STRIDE achieved 92% accuracy, reduced unnecessary agent deployments by 45%, and delivered 37% lower compute/API usage compared to always deploying agents. These results demonstrate that principled design-time selection yields tangible efficiency gains compared to intuition-driven deployment. We compared STRIDE against two baselines. The Naive Agent baseline always deployed agentic AI regardless of task complexity, providing an upper bound on cost but no efficiency. The Heuristic Threshold baseline deployed agents only when reasoning depth ≥ 2 and tool requirements ≥ 2, but often failed on borderline cases where task dynamism or reflection was the deciding factor. STRIDE consistently outperformed both approaches.

To ground these aggregate results, we highlight representative tasks where STRIDE discriminates between simple lookups, medium-complexity assistance, and fully autonomous agent workflows. These cases illustrate how STRIDE’s scoring pipeline translates into practical deployment recommendations. Human-in-the-loop validation also played a role: omitting expert feedback reduced alignment with domain judgments, demonstrating the value of incorporating expert calibration into design-time recommendations.

Beyond aggregate numbers, we tested robustness across domains. STRIDE achieved 95% accuracy in SRE, 91% in compliance, 89% in automation, and 93% in customer support (Figure 3). This consistency suggests that STRIDE generalizes well across heterogeneous real-world tasks without overfitting to any specific domain. Errors primarily arose in borderline scenarios, such as multidocument summarization, where dynamism was underestimated. Notably, STRIDE sometimes recommended assistants when experts preferred agents, but never the reverse-avoiding costly over-engineering mistakes.

Expert validation further confirmed STRIDE’s recommendations. In 78% of cases, experts fully agreed, 15% showed partial agreement (e.g., suggesting an assistant instead of an agent for borderline tasks), and only 7% disagreed (Figure 4). This resulted in a 27% improvement in expert alignment compared to the Heuristic Threshold baseline. Feedback from engineers and compliance officers improved STRIDE through better task decomposition, adjusted TDS weights, and persona-aware outputs tailored to developers and managers (Table 5). Our robustness validation was not a one-off annotation exercise, but the result of extended collaboration with subject matter experts. For the SRE domain, three Kubernetes incident response experts engaged with STRIDE iteratively over a six-month period (March-August 2025), providing feedback on decomposition, reflection, and dynamism scoring. In the compliance domain, two legal verification experts participated in a shorter but focused engagement of 1-2 months (May-June 2025), helping calibrate task scoring against regulatory criteria. This sustained, multi-month collaboration ensured that STRIDE’s assessments aligned with the nuanced realities of enterprise practice. Expanded task patterns and historical performance metrics for SRE and compliance tasks.

STRIDE reduces the costs, risks, and misaligned expectations of unnecessary agents. By shifting selection to design time, it prevents over-engineering, ensures autonomy only where required, and reframes adoption from intuition-driven to structured decision process that directly translates into lower compute/API expenditure and reduced operational costs. At the same time, we acknowledge limitations. STRIDE’s scoring functions are heuristic by design, striking a balance between interpretability and generality.

Finally, STRIDE complements existing benchmarks, such as AgentBench, SWE-Bench, and Tool-Bench. While those benchmarks evaluate how well agents perform after deployment, STRIDE focuses on whether agents are needed at all before deployment. This creates opportunities for integration: STRIDE could serve as a design-time filter that guides which tasks should be benchmarked with agents, or as a planning tool embedded into enterprise AI workflows. Together, these directions position STRIDE as both a practical engineering aid and a guardrail for responsible AI deployment.

We introduced STRIDE (Systematic Task Reasoning Intelligence Deployment Evaluator), a framework for systematically determining when tasks require agentic AI, AI assistants, or simple LLM calls. STRIDE integrates five analytical dimensions -structured task decomposition, dynamic reasoning and tool-interaction scoring, dynamism attribution analysis, self-reflection requirement assessment, and agentic suitability inference. In evaluating 30 real-world enterprise tasks, STRIDE reduced unnecessary agent deployments by 45%, improved expert alignment by 27% and cut resource costs by 37%, directly mitigating over-engineering risks and containing compute costs.

Looking ahead, we will extend evaluation beyond the 30 tasks to include multimodal tasks (vision/audio), integrate reinforcement learning for weight tuning, and validate STRIDE at enterprise scale. These extensions will further strengthen its role as a practical guardrail for responsible AI deployment.

Example: Currency Lookup. “What is the exchange rate between USD and EUR today?” This task requires shallow reasoning (0-hop), a single API call, and no state persistence. STRIDE assigned a low True Dynamism Score (0.10) and recommended LLM_CALL. This minimized cost and latency, avoiding unnecessary orchestration overhead while retaining accuracy.AI Assistant Example: Meeting Summarization. “Summarize today’s team meeting notes and suggest action items.”

Example: Currency Lookup. “What is the exchange rate between USD and EUR today?” This task requires shallow reasoning (0-hop), a single API call, and no state persistence. STRIDE assigned a low True Dynamism Score (0.10) and recommended LLM_CALL. This minimized cost and latency, avoiding unnecessary orchestration overhead while retaining accuracy.

39th Conference on Neural Information Processing Systems (NeurIPS 2025) Workshop: LAW -Bridging Language, Agent, and World Models.

📸 Image Gallery