Automated Risk-of-Bias Assessment of Randomized Controlled Trials: A First Look at a GEPA-trained Programmatic Prompting Framework

📝 Original Info

- Title: Automated Risk-of-Bias Assessment of Randomized Controlled Trials: A First Look at a GEPA-trained Programmatic Prompting Framework

- ArXiv ID: 2512.01452

- Date: 2025-12-01

- Authors: Lingbo Li, Anuradha Mathrani, Teo Susnjak

📝 Abstract

Assessing risk of bias (RoB) in randomized controlled trials is essential for trustworthy evidence synthesis, but the process is resource-intensive and prone to variability across reviewers. Large language models (LLMs) offer a route to automation, but existing methods rely on manually engineered prompts that are difficult to reproduce, generalize, or evaluate. This study introduces a programmable RoB assessment pipeline that replaces ad-hoc prompt design with structured, code-based optimization using DSPy and its GEPA module. GEPA refines LLM reasoning through Pareto-guided search and produces inspectable execution traces, enabling transparent replication of every step in the optimization process. We evaluated the method on 100 RCTs from published meta-analyses across seven RoB domains. GEPA-generated prompts were applied to both open-weight models (Mistral Small 3.1 with GPT-oss-20b) and commercial models (GPT-5 Nano and GPT-5 Mini). In domains with clearer methodological reporting, such as Random Sequence Generation, GEPA-generated prompts performed best, with similar results for Allocation Concealment and Blinding of Participants, while the commercial model performed slightly better overall. We also compared GEPA with three manually designed prompts using Claude 3.5 Sonnet. GEPA achieved the highest overall accuracy and improved performance by 30%-40% in Random Sequence Generation and Selective Reporting, and showed generally comparable, competitively aligned performance in the other domains relative to manual prompts. These findings suggest that GEPA can produce consistent and reproducible prompts for RoB assessment, supporting the structured and principled use of LLMs in evidence synthesis.📄 Full Content

For this study, the test dataset comprised RCTs from ten meta-analyses, randomly selected from Q1-ranked medical journals spanning diverse clinical fields, including cardiovascular disease, metabolic disorders, women’s health, and pediatrics. In total, 111 RCTs were identified; after excluding non-English trials, 100 were randomly selected for inclusion, with their distribution across clinical domains and meta-analyses detailed in Table 1. Full-text RCTs constituted the textual corpus for automated RoB evaluation, with author-reported RoB 1 judgments from the original meta-analyses acting as the initial human reference standard (hereafter referred to as the Gold Labels). This approach prioritized a diverse and neutral evaluation corpus, enabling comprehensive benchmarking of model performance across varied reporting styles and methodological contexts. The automated model’s outputs were compared against Gold Labels to evaluate prediction agreement and assess performance across RoB domains. Cardiovascular disease [31,32,33] 3 31 30 Metabolic disorders [34,35,36] 3 22 22 Women’s health [37,38] 2 30 23 Pediatrics [39,40] 2 28 25

Total 10 111 100

A customized GEPA implementation was developed within DSPy to enable domain-specific reasoning for automated RoB assessment. All core components, including the curated training and validation datasets, paired DSPy Signatures definitions, module configurations were specifically designed for this study. The subsequent subsections outline the operational workflow, dataset optimization and model-environment settings.

The framework processes full-text RCTs (converted from PDF to plain text using MinerU [41]) through a two-stage reasoning pipeline. For each of the seven Cochrane RoB 1 domains, the system employs sequential reasoning guided by paired questions based on Cochrane criteria: one focusing on identifying information relevant to the methodological process (e.g., “Extract the exact sentence(s) describing how the allocation sequence was generated.”) and the other assessing the adequacy of the reported method (e.g., “Was the allocation sequence adequately generated?”). The reasoning process integrates textual information and evaluative inference within a coherent reasoning framework.

Evidence identification operates as an internal reasoning step that contextualizes each domain-specific judgment, while the final output represents a categorical risk-of-bias classification (“low,” “high,” or “unclear”).

For each RoB 1 domain, a balanced dataset was constructed, consisting of 18 training and 12 validation examples evenly distributed across the three risk categories. Each example comprised a domain-specific text excerpt from an RCT, accompanied by a structured set of elements including an evidence identification question, the corresponding evidence span, an evaluative question, the annotated risk-of-bias label, and the textual justification supporting that label. This setup supported few-shot learning and iterative supervision under constrained data conditions. GEPA optimization employed the auto=“light” configuration (approximately 428 metric calls), balancing exploration depth with computational efficiency. All optimization runs employed fixed random seeds (42) and deterministic decoding parameters (temperature = 0.0, top_p = 1.0). Nevertheless, because GEPA employs heuristic multi-objective search, slight variations in the Pareto optimization process can produce distinct yet equally valid optima, even under identical configurations. We assessed the stability of GEPA optimization by conducting five domain-level runs under identical settings, generating distinct optimized prompts. Each prompt was then evaluated three times on the test set to measure inference consistency and performance variability.

GEPA operates within DSPy (version used was 3.0.4b1) through a dual-model setup, comprising a base model responsible for primary reasoning and a reflection model guiding optimization feedback. Two configurations were implemented to assess performance under different computational constraints: an open-weight setup (Mistral 3.1 small [42] + GPT-oss-20b [43]) and a commercial setup (GPT-5-nano [44] + GPT-5-mini [45]). All experiments were conducted via the OpenRouter [46] API (September-October 2025) under uniform computational settings. This setup ensured methodological consistency and enabled direct comparison between the open-source and commercial model families. All Python scripts are available at the GitHub repository: https://github.com/Ivylee6108/GEPA_RoB . git.

We implemented a multi-dimensional evaluation framework to assess both the internal validity and external comparability of the GEPA-based RoB assessment system. The evaluation comprised two complementary components: (1) quantitative assessment of model performance and stability, and (2) comparison with manually crafted RoB assessment prompts from prior studies.

Model performance was evaluated by comparing each model’s RoB predictions against Gold Labels derived from published meta-analyses. For each RoB domain, we computed standard agreement metrics, including the correct assessment rate (accuracy), sensitivity, specificity, positive predictive value (PPV), and negative predictive value (NPV), each accompanied by 95% confidence intervals. Sensitivity was defined as the proportion of instances labeled as “low risk” in the Gold Labels that were correctly identified by the model. Specificity captured the proportion of instances labeled as “unclear” or “high risk” that were not correctly classified as “low risk” by the model. PPV represented the proportion of model-assigned “low risk” judgments that were also labeled as “low risk” in the Gold Labels, whereas NPV reflected the proportion of model-assigned “unclear” or “high risk” judgments that were consistent with the reference labels. Overall consistency was further quantified using Cohen’s Kappa coefficient (κ, 95% CI). All metrics were calculated directly from the resulting predictions.

We also compared the proposed framework’s performance against three manually crafted RoB assessment prompt sets from prior literature [22,24,23] (denoted as A [23], B [22], and C [24]). Prompt A was developed under the RoB 2 tool and originally evaluated using Claude-2. Because our framework follows the RoB 1 criteria, we matched the domains for cross-version comparability by mapping A’s “random sequence generation and allocation concealment” domain to the ROB 1 counterparts, “randomization process” and “allocation concealment”. Prompt B and C were both designed with RoB 1-like criteria but evaluated using different LLMs (ChatGPT and Claude for B, Moonshot-v1-128k and Claude-3.5-Sonnet for C). Both prompts used a four-level categorical scale (“definitely yes,” “probably yes,” “probably no,” “definitely no”). We harmonized these ratings with the tripartite RoB 1 scheme by mapping “definitely yes/no” to low/high risk and “probably yes/no” to unclear risk. Following this normalization, we merged their blinding-related subdomains to match the seven canonical RoB 1 domains: items concerning patients and providers were aggregated as blinding of participants and personnel, while those related to data collectors, outcome assessors, and analysts were grouped under blinding of outcome assessment. Within each merged domain, we applied a hierarchical rule: any subdomain rated “high” yielded an overall “high” rating; if all were “low,” the domain was marked “low”; otherwise, “unclear.”

Although the three prior studies employed different language models, all incorporated at least one model from the Claude family, therefore, we re-evaluated prompt A-C using Claude-3.5-Sonnet [47]. We also maintained continuity with these Claude-based evaluation settings by adopting Claude-3.5-Sonnet as the teacher model in our GEPA framework, providing a coherent and comparable assessment environment across all approaches. Each manual prompt set (A-C) was executed three times under identical conditions, and results were averaged across runs. For the GEPA-based framework, five distinct prompts were generated through the student-teacher interaction process (student: Mistral Small 3.1; teacher: Claude-3.5-Sonnet). Each generated prompt was evaluated three times using the same RCTs in Claude-3.5-Sonnet, and the results were aggregated to yield the final framework performance. Model performance was assessed using standard quantitative metrics (accuracy) computed at both the domain and overall RoB judgment levels.

This section outlines the evaluation results of our GEPA-based RoB assessment pipeline. We present overall and domain-specific performance for two pair models, comparing results against Gold Labels.

RoB judgments generated by the two evaluated pair-models are summarized in Table 2. The commercial model pair demonstrated higher overall accuracy and domain-level consistency compared with the open-weight pair. Across most RoB domains (D1-D5), the commercial models achieved higher correct assessment rates, with relative improvements of approximately 2-4 percentage points (e.g., 0.799 vs. 0.773 in D1 and 0.671 vs. 0.654 in D5). Sensitivity gains were similarly observed, indicating enhanced ability to identify “low risk” cases (0.773 vs. 0.745 in D1; 0.671 vs. 0.654 in D5). Specificity remained high for both systems, though the commercial pair showed slightly lower values in some domains (e.g., 0.888 vs. 0.910 in D1), suggesting a modestly more inclusive classification tendency. Both models achieved high positive predictive values (PPV > 0.9), while the commercial pair maintained marginally higher negative predictive values (NPV), such as 0.721 vs. 0.714 in D1 and 0.804 vs. 0.781 in D3, reflecting greater stability in “non-low-risk” assessments. Cohen’s κ values followed a similar pattern, with the commercial pair showing higher agreement with human reference judgments in most domains (e.g., κ = 0.602 vs. 0.567 in D1). Agreement was weaker in selective reporting (D6) and other bias (D7). Appendix Table 6, the gold-standard label distribution was markedly imbalanced in several domains, particularly D6, where 89% of studies were labeled “low risk.” Overall, the commercial models achieved higher and more consistent performance across all evaluated metrics, with improvements in accuracy, sensitivity, and NPV, and minimal trade-offs in specificity and PPV.

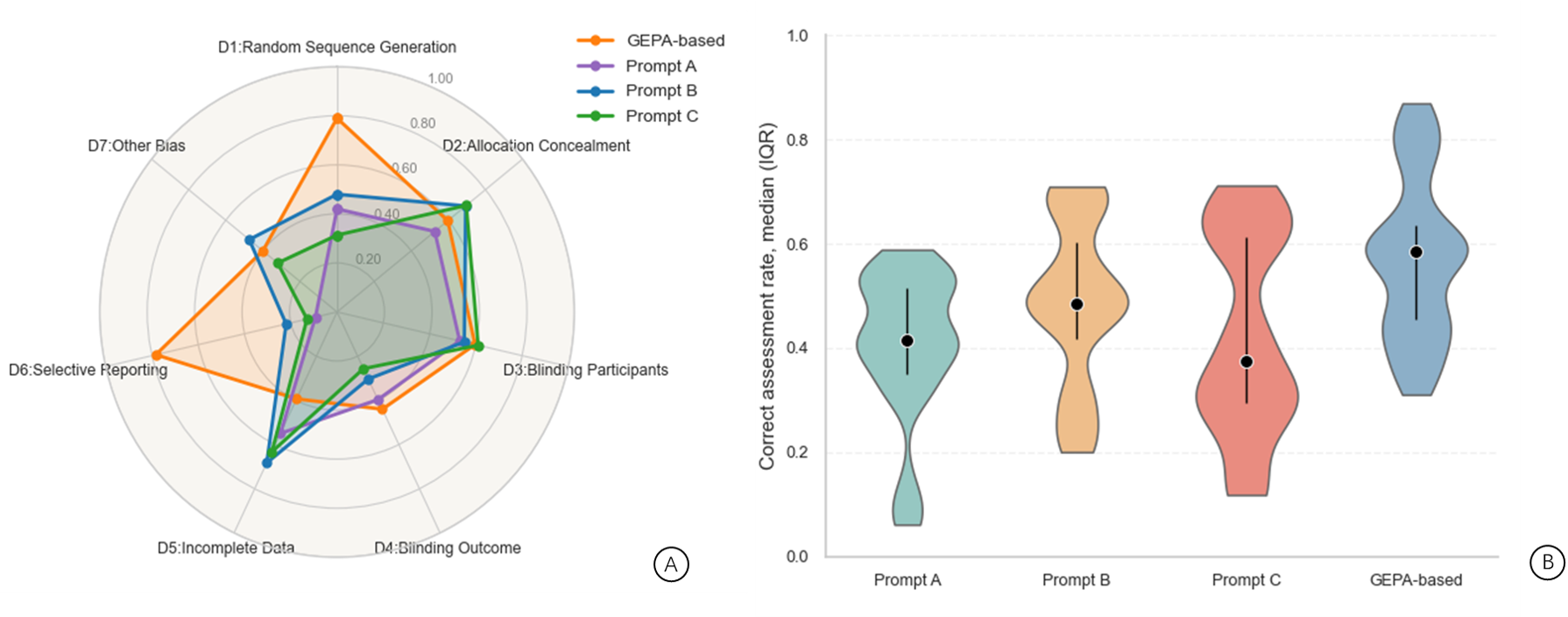

We compared the GEPA-generated prompt performance with three representative manually designed prompts (Prompt A, Prompt B, and Prompt C) to examine its generalizability and consistency (see Figure 2). The GEPA-generated prompt presents a more balanced radar shape than the manually designed prompts, suggesting steadier performance across different types of bias assessment. The most pronounced advantages of the GEPA-generated prompt are observed in Random Sequence Generation (mean = 0.79, 95% CI 0.74-0.84) and Selective Reporting (mean = 0.78, 95% CI 0.70-0.86), where its accuracy exceeds that of the best manual prompts by approximately 0.30-0.40. The higher accuracy suggests that the prompts generated by GEPA guided the LLM to make more accurate risk judgments than the manually constructed prompts. The GEPA-generated prompt also performs slightly better, though less markedly, in Blinding of Outcome Assessment (mean = 0.44, 95% CI 0.40-0.48), showing a modest improvement over manual prompts. In Allocation Concealment (mean = 0.60, 95% CI 0.56-0.63) and Blinding of Participants (mean = 0.60, 95% CI 0.57-0.62), GEPA performs on par with the strongest manual prompts (mean = 0.61-0.70). For Other Bias, where only Prompts B, C, and GEPA were compared, the GEPA-generated prompt reaches a mean accuracy of 0.40 (95% CI 0.34-0.46), comparable to Prompt B (mean = 0.47, 95% CI 0.43-0.51) and higher than Prompt C (mean = 0.32, 95% CI 0.29-0.35). GEPA maintains accuracy within the upper range of manual prompts. In the Incomplete Data domain, the GEPA-generated prompt performed less well than the manually designed prompts. Its mean correct assessment rate was 0.39 (95% CI 0.33-0.45), compared with 0.55 (95% CI 0.50-0.60) for Prompt A, 0.68 (95% CI 0.64-0.72) for Prompt B, and 0.63 (95% CI 0.60-0.66) for Prompt C. The lower accuracy suggests that GEPA was less effective in distinguishing between the different risk levels for incomplete data. Across all evaluations, the GEPA-generated prompts achieved the highest overall accuracy, with a median of 0.59 when averaged across the seven risk-of-bias domains. In comparison, the manually designed prompts reached medians of 0.49 for Prompt B, 0.38 for Prompt C, and 0.42 for Prompt A. Among them, Prompt A covered six domains, while Prompt B, Prompt C, and the GEPA-based approach included all seven. The higher median for the GEPA-generated prompts indicates that they enable the model to make more accurate and stable risk judgments across domains.

The GEPA framework demonstrated high computational efficiency and affordability across all evaluated model configurations (see Table 3). The training process consisted of two measurable components: the main generation cost, incurred by the primary LLM, and the reflection cost, generated during the self-evaluation stage by reflective LLM. Across model pairs, both components remained modest. For example, in the openweight configuration (Mistral small 3.1 with GPT-oss-20b), the main cost for each domain averaged $0.04, with an additional reflection cost of $0.01-$0.02. Even when employing more capable models such as Claude 3.5-sonnet, the reflection cost remained highly manageable ($0.2-$0.5 per domain). Total training time ranged from approximately 22 minutes for Mistral small 3.1 with Claude 3.5-sonnet to under three hours for the GPT-5 Nano with GPT-5 mini setup. Importantly, the testing or inference cost per article was extremely low(typically below $0.001-$0.05), and the processing time per document remained within a few minutes, depending on the model used. These findings confirm that the GEPA procedure is transparent, quantifiable, and cost-efficient, offering reproducible and affordable time-cost trade-offs suitable for RoB synthesis applications. Note. * Cost and time are measured per article (i.e., total cost and total processing time for one document).

We analysed disagreements across all seven RoB domains to investigate where and why GEPA-based automated judgments diverged from human ratings. Rather than assuming that such divergences necessarily reflected errors, we sought to understand how LLMs, prompted with structured reasoning templates from GEPA, may be interpreting the available evidence in ways that differ from human reviewers. We focused on two dimensions: the direction of disagreement and the evidentiary characteristics that appeared to trigger these divergences.

Quantitative results showed substantial variability in GEPA-gold agreement across domains (See Table 2, Figure 2). Mean accuracies ranged from 0.30 to 0.83, and Cohen’s κ from -0.04 to 0.57, indicating that consistency was uneven and typically limited to slight or fair agreement. Only random sequence generation (D1) achieved a moderate level of agreement (mean κ = 0.57), while most other domains fell below κ = 0.40. An instructive outlier was D6 (selective reporting): Claude 3.5 sonnet achieved high accuracy (0.80), yet κ was close to zero. This reflects the impact of extreme class imbalance in the gold data (89 low risk vs. 11 unclear, 0 high), which suppresses κ despite meaningful agreement.

In such cases, raw accuracy is a more valid indicator of alignment.

Despite this variability, the direction of disagreement was remarkably consistent: models were systematically more cautious than the reference, most often downgrading low/high risk ratings to unclear. Across domains, models tended to require explicit procedural detail before assigning a low/high risk label, even in cases where human reviewers accepted adequacy based on conventional phrasing or contextual inference. Rather than indicating random inconsistency or optimism, these divergences point to a prompt-conditioned inferential style grounded in textual literalism. This “conservatism bias” was particularly salient when reports used formulaic expressions (e.g., “participants were randomized,” “sealed envelopes were used”) without elaborating operational safeguards. In such cases, models treated absent detail as residual uncertainty, whereas human raters inferred adequacy from tacit norms.

Model scale modulated the strength of this tendency, but not the direction. Larger and more instruction-tuned LLMs achieved higher agreement, particularly in domains requiring interpretive ability (e.g., selective reporting by Claude 3.5 sonnet achieve 80% accuracy, while mistral 3.1 small and GPT-5nano only have accuracy below 50%, strong LLM granted low risk when the reporting was stylistically polished or implied sufficiency.) Yet even the strongest models retained the core conservatism shaped by GEPA prompts, hesitating to assign low/high risk without direct textual confirmation. This suggests that improved alignment arose not from optimism, but from smarter caution.

While the overall direction of disagreement revealed a prompt-driven conservatism in model behavior, its manifestation varied across domains. This section presents illustrative examples from each domain to highlight the types of textual cues that most frequently triggered divergences, and how the LLMs’ interpretive strategies conflicted with Gold Labels. These examples reflect the typical failure modes or thresholds of caution observed in that domain under GEPA-prompted inference. We include a representative example from the domain of “allocation concealment” in Table 4 to concretely illustrate the how LLMs demand for explicit textual confirmation led to a downgrade relative to human judgment. Additional examples across all domains are provided in Appendix Table 7. D1: Randomization generation Disagreements in this domain were relatively rare, with most labels aligning between LLM predictions and the gold standard. When disagreements occurred, they typically reflected the influence of the GEPA-generated prompts, which emphasized the need for explicitly documented randomization procedures. For example, studies that merely stated “participants were randomized” were frequently labeled unclear by the model, even when human reviewers judged them as low risk. Since the GEPA framework was trained on annotated data to produce prompts that foreground direct evidence (e.g., “computer-generated sequence”, “random number table”), the resulting prompts shaped the model’s interpretive stance to demand such concrete phrasing before assigning low risk. Rather than relying on conventional assumptions, the model treated vague or formulaic expressions as insufficient, reflecting a more text-bound evidentiary threshold encouraged by the GEPA-guided prompting.

D2: Allocation concealment This domain showed a high rate of disagreement, primarily reflecting LLMs’ insistence-guided by GEPA prompts-on detailed procedural transparency. GEPA prompts highlighted key indicators such as opaque, sequentially numbered, and independently administered concealment methods. In contrast, many trial reports used generic phrases like “envelopes were used” without specifying safeguards. The LLMs, following GEPA’s stricter evidentiary framing, typically assigned unclear unless all key qualifiers were present.

D3: Blinding participants Disagreements in this domain were frequent and predominantly reflected the conservative evidentiary stance encouraged by the GEPA-generated prompts. In trials involving physical, behavioral, or lifestyle interventions, the models rarely assigned low risk unless explicit descriptions of blinding procedures were present. GEPA’s structured prompting emphasized the need to verify whether participants and personnel were blinded and whether the lack of blinding could plausibly influence performance-related outcomes. As a result, when reports did not clearly state whether blinding was implemented, or when blinding was inherently infeasible due to the nature of the intervention, the LLMs defaulted to high or unclear risk.

D4: Blinding outcome assessment Disagreements in this domain were common and primarily stemmed from the models’ insistence on explicit evidence of assessor blinding. Guided by GEPA-derived prompts, the LLMs required concrete details, such as who conducted the outcome assessments, whether they were blinded, and how blinding was implemented. Human reviewers, by contrast, frequently assigned low risk when measurement tools were objective or standardized, inferring adequate protection against detection bias. This divergence reflects GEPA’s conservative evidentiary stance: lacking direct textual confirmation, the model defaulted to unclear, prioritizing procedural transparency over contextual inference.

D5: Incomplete outcome data Disagreements in this domain also reflected the LLMs’ limited access to information presented in non-textual elements, such as CONSORT flow diagrams. While GEPA-trained LLMs required explicit numerical reporting (e.g., dropout counts, reasons for attrition) and detailed analytical descriptions (e.g., intention-totreat implementation) in the text before assigning low risk, human reviewers often integrated information from figures or supplementary materials. Consequently, human raters judged some cases as low risk when visual data indicated minimal, balanced attrition, even though the textual record alone appeared incomplete. This divergence highlights how LLMs, constrained by textual input and GEPA’s rigid evidentiary thresholds, may underestimate reporting adequacy compared to human reviewers’ more holistic interpretations.

D6: Selective reporting Disagreements in this domain were shaped by LLMs’ differing thresholds for what constitutes sufficient evidence of outcome reporting transparency. Despite being trained on GEPA examples where comprehensive outcome reporting sufficed for low risk, even without quoting registry contents, smaller models (e.g., Mistral, GPT-5 nano) often defaulted to unclear unless a direct comparison to a registered protocol was present. This conservatism persisted even when reports listed all primary and secondary outcomes transparently. In contrast, stronger models like Claude more accurately captured the intended standard. When trial reports mentioned a protocol or registration and provided detailed, structured outcome reporting, Claude was more likely to assign low risk, reflecting better generalization of the training signal. Divergence in this domain was therefore more about the models’ varying ability to infer adequacy from indirect cues.

D7: Other bias Disagreements in this domain were highly variable and largely stemmed from the inherently subjective nature of the “Other bias” category in RoB 1. Human reviewers themselves often applied this domain inconsistently, as it covers a wide array of loosely defined concerns, ranging from funding sources to adherence issues or baseline imbalances. GEPA-trained LLMs struggled to apply stable criteria, particularly when trial texts mentioned potential bias-adjacent issues (e.g., small sample sizes or recruitment concerns) without clarifying their methodological implications. In such cases, models typically defaulted to unclear, reflecting both a conservative stance and ambiguity in the task framing. Some disagreements arose from the models’ heightened caution, while others reflected divergent assumptions about what constitutes a risk-relevant concern.

In this study, we introduce a programmatic approach for optimizing prompts used in LLM, namely assisted RoB assessment. Rather than relying on manually crafted instructions, which can vary widely across different teams, GEPA formalizes prompt development as an explicit, data-driven optimization procedure. Our findings demonstrate that optimized prompts can meaningfully improve the consistency and robustness of automated RoB judgments, while also revealing important methodological considerations related to the inherent subjectivity of RoB assessment, domain-specific variability, and the continued need for human oversight.

Prompt development for LLM-assisted evidence synthesis has historically relied on expert intuition and iterative trial-and-error. Hand-crafted prompts embed tacit heuristics and stylistic tendencies that differ across reviewers, leading to substantial variability and limited reproducibility. Moreover, manual prompts are inherently volatile: small changes in wording or formatting can produce disproportionately different LLM outputs, undermining the stability required for reliable RoB assessment. This fragility is particularly consequential in domains where judgments depend on contextual interpretation. As a result, handcrafted prompts constrain the transparency, portability, and standardization needed for evidence-based workflows and create persistent challenges whenever models are updated or replaced. GEPA addresses these methodological limitations by formalizing prompt design as a structured optimization problem. Rather than depending on subjective refinement, GEPA systematically searches a broad space of candidate prompts and identifies those that satisfy predefined criteria for accuracy, faithfulness, and conciseness. Crucially, the entire optimization process produces comprehensive execution traces, including intermediate candidates, reasoned model outputs, Pareto-front evaluations, and the final compiled prompt, which can be inspected, versioned, and shared with the scientific community. This level of transparency is rarely achievable with handcrafted prompts and represents a substantial advance in the reproducibility of LLM-driven RoB assessment. Because every step of the search and selection process is logged, GEPA produces prompts that are transparent, reproducible, and model-agnostic. Our experiments show that optimized prompts transfer effectively across related models, indicating that GEPA captures task-level regularities instead of overfitting to model-specific linguistic quirks. This methodological stability enables GEPA to serve as a durable foundation for LLM-based evidence synthesis, even as LLM architectures continue to change.

Beyond methodological reproducibility, GEPA-optimized prompts inform on how human reviewers interact with LLM-assisted workflows. In conventional pipelines, a major barrier to reliable automation is the substantial manual effort required to design and validate prompts for each RoB domain. This task demands methodological expertise and can vary widely across reviewers, introducing inefficiencies and inconsistencies into the workflow. As prompts often need re-engineering when models evolve, maintaining such pipelines becomes labor-intensive and error-prone. GEPA alleviates this burden by generating stable, criteria-oriented prompts without requiring manual engineering. With optimized prompts in place, LLMs can provide consistent, text-grounded first-pass assessments, allowing human reviewers to redirect their expertise toward higher-value activities, such as adjudicating ambiguous cases, integrating evidence across trials, and ensuring coherence with the broader literature. This redistribution of effort enhances efficiency, reduces workflow fragility, and is particularly advantageous for teams with heterogeneous training or when processing large volumes of evidence rapidly. Rather than replacing human judgment, GEPA supports a more structured, dependable human-in-the-loop process by removing the need for handcrafted prompts.

The performance of GEPA-optimized prompts requires deeper consideration on the conduct of human RoB assessment itself. Prior works [49,50,51] repeatedly shown that reviews differ in how strictly they apply RoB criteria, and RoB judgments are inherently subjective, with only moderate agreement even among trained reviewers. Many systematic review teams rely on contributors with varying levels of methodological expertise, and reviewers frequently draw on tacit knowledge, prior experience, or domain-specific expectations that are not explicitly codified in guidance documents. These factors introduce variability into human assessments that is difficult to eliminate, even with standardized tools such as the Cochrane RoB tool. As a result, the GEPA-optimized prompts reflect of a mixture of high-quality judgment, heterogeneous heuristics, and variable interpretive practices. Their judgement do not affect all RoB domains equally. Domains with clearly specified criteria, such as random sequence generation and allocation concealment, tend to elicit more consistent human assessments, providing GEPA with a stronger and more coherent supervisory signal. In contrast, domains such as other bias or incomplete outcome data involve greater interpretive flexibility, lower inter-rater reliability, and more heterogeneous reasoning. GEPA faces an intrinsically more difficult optimization problem, and performance ceilings naturally differ across domains. However, this variability also highlights an important methodological advantage of GEPA. By optimizing prompts against patterns that are stable across many annotations, GEPA distills a more standardized and reproducible interpretation of RoB criteria than can typically be achieved by individual reviewers. In contrast to handcrafted prompts, GEPA learns decision boundaries shaped by aggregate judgment behavior, smoothing over idiosyncratic tendencies. Through this process, GEPA tends to adopt a more conservative and criteria-driven stance, particularly when textual justification is incomplete. This strictness corresponds to the normative intent of RoB tools, which emphasize cautious inference when reporting is insufficient, and contrasts with the more permissive patterns sometimes observed among untrained or inconsistently trained reviewers. Therefore, within the constraints of what is documented in trial reports, GEPA provides a stable, defensible baseline that is often more consistent than typical human practice, especially in resource-limited review settings.

Adoption of GEPA has implications that extend beyond automating discrete components of RoB assessment. By replacing handcrafted prompts with a reproducible optimization procedure, GEPA offers a practical mechanism for standardizing the interface between human judgment and LLM-based reasoning. This development is particularly relevant for systematic reviews and living evidence syntheses, where variability in reviewer expertise and constraints on time and resources often limit the consistency of methodological appraisal. Equally important, GEPA’s complete execution logs provide an auditable record of how reasoning strategies were derived. These traces can be packaged and shared alongside the compiled prompts, facilitating scientific replication, model-to-model comparison, and long-term reproducibility in evidence synthesis workflows. More broadly, GEPA illustrates how programmatic optimization can be used to formalize and stabilize workflows that have traditionally relied on tacit knowledge or iterative trial-and-error.

Embedding such standardized components into evidence synthesis pipelines has the potential to enhance reproducibility, reduce reviewer workload, and support scalable review processes as evidence bases continue to expand. While human oversight remains essential, GEPA demonstrates a pathway toward more structured, transparent, and sustainable integration of LLMs in evidence synthesis practice.

This study introduced a programmatic and fully auditable approach to automating risk-of-bias assessment, replacing volatile manually crafted prompts with a transparent and optimizable computational process. The GEPA-based optimization pipeline systematically learns and refines reasoning strategies through structured Pareto-guided search rather than ad-hoc textual instructions. The method achieved expert-level consistency in objective domains, such as random sequence generation and allocation concealment, while maintaining interpretability, transparency, and reproducibility. Importantly, every step of the optimization procedure generates inspectable execution traces that can be packaged and shared to support full scientific replication. In more subjective domains, including blinding outcome assessment and other bias, its cautious performance highlights the continued need for contextual reasoning and human oversight.

Despite these contributions, several limitations warrant attention and provide directions for future research. First, our framework is built around the RoB 1 tool, which relies on domain-level judgments and does not incorporate the signaling-question-based structure introduced in RoB 2. Extending GEPA to accommodate multi-step reasoning chains and conditional decision rules would enhance its applicability and alignment with current methodological standards. Second, our preprocessing relied on PDF-to-text conversion, resulting in the loss of figures and flow diagrams that may contain relevant methodological information. Integrating multimodal inputs or structured metadata may help mitigate this limitation. Third, the dataset used for optimization was selected to balance coverage and feasibility, but the optimal scope and characteristics of such datasets remain unclear. Variability in human RoB judgments, particularly in domains with lower inter-rater agreement, also limits the achievable ceiling for fully automated systems. Future work should examine how larger, more diverse, and more consistently annotated datasets could improve stability and generalizability.

Beyond these methodological considerations, GEPA illustrates a broader opportunity for extending programmatic optimization within evidence synthesis. By replacing ad-hoc prompt design with a principled optimization pipeline, the framework demonstrates how interpretive reasoning tasks can be formalized, audited, and scaled. Although the present study focuses on RoB assessment, the same principles could be applied to other components of meta-analytic workflows, including literature retrieval, data extraction, and evidence aggregation, where reproducibility, traceability, and scalable human oversight are essential. Randomization mentioned but method not described.

Non-random or predictable method (e.g., alternation, date of birth).

Concealment mechanism prevents foreknowledge (e.g., sealed opaque envelopes, central allocation).

Insufficient details on concealment method.

Allocation predictable or not concealed.

Blinding implemented and likely effective.

Blinding unclear or partial.

No blinding, and outcomes likely influenced by knowledge of intervention.

Outcome assessors blinded and unlikely to be influenced.

Blinding not reported or uncertain.

Assessors not blinded and outcomes subjective or easily biased.

Few or balanced dropouts; reasons explained; appropriate handling (e.g., ITT analysis).

Incomplete data or handling not described.

High or unequal attrition without adequate explanation or handling.

All prespecified outcomes reported as planned.

Unclear whether all outcomes reported.

Evidence of omitted or selectively reported outcomes.

No additional concerns.

Possible but unverified sources of bias.

Clear evidence of other problems (e.g., early stopping, baseline imbalance). C Illustrative disagreements across all RoB domains Low Unclear “When the FS met criteria for pharmacologic treatment and after consent, the infant received the study drug based on the randomization schedule (4 per block), available only through the investigational drug unit of the Department of Pharmacy.”

- The evidence indicates use of a block randomization approach (4 per block), which is a valid randomization method in principle 2. However, crucial details are missing: -The method used to generate the randomization schedule is not specified -No information about who generated the sequence -No description of the actual sequence generation process 3. While the involvement of the pharmacy department suggests proper implementation, this relates more to allocation concealment than sequence generation 4. The lack of specific information about how the randomization schedule was created prevents a “Low” risk assessment 5. There are no clear signs of systematic bias that would warrant a “High” risk assessment Therefore, due to insufficient information about the sequence generation process, an “Unclear” risk assessment is most appropriate.

We agree the LLM’s “Unclear” rating is appropriate, as the study does not describe the method used to generate the randomization sequence, despite mentioning block randomization.

Low Unclear “Enrolment of participants was performed continuously by the same two researchers, and randomisation was carried out continuously using pre-prepared opaque sealed envelopes with an equal allocation ratio of 1:1:1.”

- The study uses opaque sealed envelopes, which is a potential method for adequate concealment 2. However, two critical aspects raise concerns: -The envelopes are not described as sequentially numbered, which is essential for preventing selection bias -The same researchers handled both enrollment and randomization, which could potentially compromise the concealment process 3. While some concealment measures were in place (opaque sealed envelopes), the description lacks sufficient detail to ensure the process was truly secure 4. Without confirmation of sequential numbering and separation of duties, we cannot be certain that foreknowledge of assignments was prevented 5. Therefore, while not clearly high risk, there is insufficient information to confirm adequate concealment, leading to an unclear risk assessment

We agree the LLM’s “Unclear” rating is appropriate, as the study lacks key details about allocation concealment, including whether envelopes were sequentially numbered and whether roles in enrollment and assignment were separated. 37). Each participant was administered her treatment assignment sequentially in order of enrollment, via coded opaque envelopes containing general study instructional materials, a welcome letter, and information pertinent to the yoga or educational film program. Each group (yoga and film intervention) attended a 90-minute class twice weekly for 8 weeks. Classes for the two programs were scheduled at the same times and in similar settings. Film group participants also received the yoga home practice materials, as well as coupons to attend local Iyengar yoga classes. Similarly, yoga group participants were also offered the opportunity to view the educational films following completion of the final assessment. Participants randomized to the yoga program completed a gentle Iyengar yoga program specifically designed for older, sedentary adults. In addition to attending classes, participants were asked to complete at least 30 minutes of home practice on nonclass days, with the aid of a DVD and a booklet illustrating the yoga home practice routines. This standardized educational film program, described in detail elsewhere, was designed to be easily replicable and to ensure comparability of staff attention, class time, and social interaction. Each class began with a brief meet and greet period, followed by viewing of an educational film chosen for its relevance and interest to our study population, then concluded with a 10-15 minute group discussion facilitated by a health professional with expertise in complementary and alternative therapies and women’s health.

To increase credibility and reduce participant bias, the educational film program was presented to potential participants as an informative, engaging, and relaxing alternative to the yoga classes. Participants underwent a comprehensive assessment at baseline and following the 8-week intervention period."

- The study design inherently precludes effective blinding of participants and personnel:

-Participants actively engaged in either yoga practice or watching films, making it impossible for them not to know their assignment -Yoga instructors and film program facilitators necessarily knew which intervention they were delivering -The interventions were fundamentally different in nature (physical activity vs. passive viewing) 2. While the study implemented some measures to minimize bias: -Assessment staff were blinded to treatment assignment -The educational film program was presented as a credible alternative -Similar class times, settings, and attention levels were maintained These measures help reduce some forms of bias but do not achieve true blinding of participants and personnel.

- The inability to blind participants and personnel could influence outcomes, particularly for self-reported measures like sleep quality, stress, mood, and anxiety, which are susceptible to performance bias.

We agree the LLM’s “High” rating is appropriate, as blinding of participants and personnel was not feasible given the nature of the interventions, increasing the risk of performance bias for subjective outcomes. “Although an intent-to-treat approach was adopted for the analyses, there were some missing data across assessments due to participant refusal to answer certain questions, failure to return some questionnaires, or refusal to participate in physiological testing or body composition assessment using the DXA.”"

The high risk assessment is justified through the following steps: d. If evidence contains conflicting statements (e.g., calls study randomized but elsewhere describes alternation), treat as High if any clear non-random process is described; otherwise treat as Unclear.

- Do NOT infer adequacy from indirect signs: -Do not infer Low just because groups are equal in size or because the study calls itself " randomized" without method. -Do not assume an adequate computer method unless it is named.

-Start with a concise statement of what the evidence says (quote or paraphrase the critical phrase). -Link that phrase to the rule that led to your risk_level (e.g., “explicit random number table →Adequate →Low”). -If Unclear or High, name why (missing details or explicit non-random method) and what specific additional information would change the judgment (e.g., “If the authors reported a computergenerated sequence, this would support Low”). -Keep the justification in bullet points and be explicit about which parts of the excerpt you used.

-Provide a short (1-3 sentence) summary explaining your interpretive logic (this is the concise chain-of-thought style explanation of how you mapped evidence →risk level). Do not include long introspective chains or extensive private reasoning–keep it outcome-focused and factual.

- Confidence score:

-Provide a numeric confidence between 0 and 1.

-Guidance:

-High confidence (0.8-0.95) when the evidence clearly and unambiguously matches the Adequate or Inadequate lists. -Moderate confidence (0.5-0.79) when evidence suggests but is not fully explicit.

-Low confidence (0.2-0.49) when evidence is very sparse or ambiguous.

-Do not use 0 or 1; avoid extremes.

-reasoning: <concise summary, 1-3 sentences> -risk_level: one of {Low, Unclear, High} -justification: bullet-point list referencing the evidence and applying the rules above -confidence: numeric (0-1) per guidance 9. Tone and style: -Be concise, factual, and explicit.

-Do not speculate beyond what the evidence supports; if something is unknown, call it Unclear and say what would be needed to upgrade/downgrade the judgment.

-“Randomization was performed by a study coordinator using a random number table prepared prior to recruitment.” →Low (explicit random number table). High confidence (e.g., 0.8-0.9). -“This study was designed as a randomized… trial.” →Unclear (study claims randomized but no method). Moderate/low confidence (e.g., 0.5-0.65). -“Divided into two equal groups using the simple randomisation method.” →Unclear, unless the mechanism is specified. Do not infer Low based on the phrase “simple randomisation” alone.

Adhere strictly to these rules each time you assess an input.

D.3 Mistral small 3.1 with Claude-3.5-sonnet

Task: Assess the risk of bias for allocation sequence generation in research studies using the ROB1 (Risk of Bias 1) criteria. Evaluate how participants were assigned to different study groups and determine the level of potential bias in the allocation process.

Input Format:

- justification: Detailed explanation supporting the risk level, including:

-Specific quotes or evidence from the text -Analysis of whether the method could introduce systematic differences -Impact on group comparability -Discussion of any stratification or blocking methods used 4. confidence: Score between 0-1 reflecting assessment certainty -High (>0.9): Explicit description of randomization method with technical details -Medium (0.7-0.9): Some methodological information but missing specific details -Low (≤0.7): Minimal or vague information about allocation Key Assessment Guidelines: 1. Simple randomization methods like coin toss, when properly implemented and documented, should be considered low risk 2. Any allocation based on participant preference or non-random criteria should be considered high risk 3. Predetermined allocation lists without details about their generation should be considered unclear risk 4. The presence of a research coordinator or observer does not affect the risk level -focus on the method itself 5. Consider whether the described method could allow prediction of future assignments 6. When in doubt between risk levels, focus on the specific details of the sequence generation method 7. The mere mention of “randomization” without supporting details is insufficient for low risk Domain-Specific Knowledge: 1. Coin toss, when properly implemented and documented, is an acceptable randomization method 2. Participant preference-based allocation always introduces high risk of bias 3. The presence of observers or coordinators does not affect the randomization quality 4. Predetermined lists require explicit details about their generation method 5. Transparency in procedure does not compensate for inadequate randomization methods Remember: Focus specifically on how the sequence was generated, not on other aspects of randomization like allocation concealment or implementation.

0.133 (0.117, 0.149) 133.8 (95.1, 152.4) D6 (‘selective reporting’) 0.681 (0.559, 0.804) 0.169 (0.143, 0.195) 125.6 (104.8, 146.3) D7 (‘other bias’) 0.867 (0.596, 1.138) 0.143 (0.114, 0.172) 138.9 (109.1, 168.8) Mistral small 3.1 with Claude-3.5-sonnet D1 (‘randomization generation’) 0.030 (0.029, 0.032) 0.254 (0.220, 0.287) 21.3 (20.5, 22.2)

📸 Image Gallery