Deep Unsupervised Anomaly Detection in Brain Imaging: Large-Scale Benchmarking and Bias Analysis

📝 Original Info

- Title: Deep Unsupervised Anomaly Detection in Brain Imaging: Large-Scale Benchmarking and Bias Analysis

- ArXiv ID: 2512.01534

- Date: 2025-12-01

- Authors: Alexander Frotscher, Christian F. Baumgartner, Thomas Wolfers

📝 Abstract

Deep unsupervised anomaly detection in brain magnetic resonance imaging offers a promising route to identify pathological deviations without requiring lesion-specific annotations. Yet, fragmented evaluations, heterogeneous datasets, and inconsistent metrics have hindered progress toward clinical translation. Here, we present a large-scale, multi-center benchmark of deep unsupervised anomaly detection for brain imaging. The training cohort comprised 2,976 T1 and 2,972 T2-weighted scans (≈ 461,000 slices) from healthy individuals across six scanners, with ages ranging from 6 to 89 years. Validation used 92 scans to tune hyperparameters and estimate unbiased thresholds. Testing encompassed 2,221 T1w and 1,262 T2w scans spanning healthy datasets and diverse clinical cohorts. Across all algorithms, the Dice-based segmentation performance varied between ≈ 0.03 and ≈ 0.65, indicating substantial variability and underscoring that no single method achieved consistent superiority across lesion types or modalities for any task. To assess robustness, we systematically evaluated the impact of different scanners, lesion types and sizes, as well as demographics (age, sex). Reconstruction-based methods, particularly diffusioninspired approaches, achieved the strongest lesion segmentation performance, while feature-based meth-ods showed greater robustness under distributional shifts. However, systematic biases, such as scannerrelated effects, were observed for the majority of algorithms, including that small and low-contrast lesions were missed more often, and that false positives varied with age and sex. Increasing healthy training data yields only modest gains, underscoring that current unsupervised anomaly detection frameworks are limited algorithmically rather than by data availability. Our benchmark establishes a transparent foundation for future research and highlights priorities for clinical translation, including image native pretraining, principled deviation measures, fairness-aware modeling, and robust domain adaptation.📄 Full Content

graphic factors such as age and sex, genetic predispositions, environmental influences, and lifestyle factors. Moreover, technical variability stemming from magnetic resonance imaging (MRI) acquisition protocols, scanner hardware, and image quality 38;76 adds another layer of complexity, making robust characterization and analysis particularly challenging. In clinical practice, diagnosis of brain lesions and treatment planning generally rely on human evaluation of MRI, including lesion identification 5;73 . Yet, even for experienced clinicians, the heterogeneity of disease-related changes makes manual assessment time-consuming and error-prone 82 . To reduce misdiagnosis, streamline planning, enable longitudinal monitoring, and alleviate workload, auto-mated lesion detection and decision-support systems have emerged 15;35 . State-of-the-art systems typically use supervised deep learning to map images to manually annotated lesion masks. While the performance on curated datasets can be strong, generalization to unseen lesion types and different scanners remains limited 25;78 , and assembling large expert-annotated datasets is costly 23 . This tension underscores the need for methods that reduce dependence on manually created lesion maps. Such approaches must not only generalize across diverse lesion types, sizes, and contrasts but also adapt to the wide range of individual brain anatomies and disease presentations observed in clinical populations while remaining robust to demographic variability and nuisance variables.

Unsupervised Anomaly Detection (UAD) has emerged as a widely adopted alternative to supervised approaches in MRI analysis and lesion detection. The central principle of UAD is to model a representation of normal brain structure using clean data and to flag deviations from this learned concept of normality as potential anomalies in unseen cases 63 . By shifting the focus from predefined lesion labels to deviations from normative patterns, UAD provides a framework that can potentially capture unexpected, subtle, or previously uncharacterized pathologies, making it particularly valuable for heterogeneous and poorly understood brain disorders. In brain MRI, recent deep UAD methods typically fall into two main categories: (i) reconstruction-based approaches, which learn to compress and reconstruct healthy images and then use voxel-level residuals between the input and reconstruction to localize anomalies 11;22;48 , and (ii) feature-based approaches, which extract intermediate representations from neural networks and apply a secondary detection model for pixel-level anomaly localization 20;62;79 . Furthermore, training strategies based on synthetic-anomalies have been introduced to further reduce reliance on real lesion maps by altering images of healthy individuals. Often, these approaches incorporate reconstructionbased methods to mitigate either detection or generalization problems 59;80 .

Analogous unsupervised anomaly detection techniques have already been established in other domains, such as industrial surface defect detection 12;20;62;79 , autonomous driving 30 , and time series analysis 4;13 , where standardized datasets and evaluation protocols have catalyzed progress. In contrast, progress in medical imaging has long been hampered by the lack of comparable large-scale resources. Only with the advent of initiatives such as the Human Connectome Project 14;70;74 and UK Biobank imaging 57 has this begun to change. Perhaps unsurprisingly, as medical data is inherently sensitive, it is more difficult to acquire, share, and pool at scale 60 . To compensate, some studies have resorted to practices utilizing a “healthy” reference from clean slices of anomalous volumes. This strategy potentially introduces systematic biases and undermines the generalizability and robustness of findings, as non-anomalous slices in brains with lesions can hardly be considered to belong to a healthy cohort of brains 10 . Beyond the challenge of reference definition 29 , evaluations in medical imaging often differ in fundamental aspects, including performance metrics, lesion taxonomies, brain regions analyzed, preprocessing strategies, and the degree of control for scanner and demographic factors that render the comparison of methods impossible. Although valuable steps toward unification have been made 8;45 , these efforts remain fragmented and leave critical aspects inconsistently addressed, such as evaluation with healthy data, the diversity of the lesions tested, the impact of demographics on predictions, and missing thresholding strategies to derive the predictions. As a result, a stringent and clinically grounded benchmark is needed to establish the current state of the field, create a fair basis for comparison, and guide the development of more robust and generalizable methods in the future.

To address this need, we introduce a comprehensive, multi-center benchmark of deep UAD for brain MRI (see Fig. 1). Our training cohort is five times larger than that in previous studies and includes scans across a large age range and both sexes. Evaluation spans a holdout set of healthy individuals, diverse lesion types, and complex diseases while explicitly assessing the effects of different scanners and demographics (in particular, age and sex). Alongside leading and established UAD approaches developed for medical image analysis, we include industrial surface defect detection methods to test crossdisciplinary insights and enable direct comparisons of state-of-the-art techniques across fields. Our evaluation of stroke, tumors, multiple sclerosis (MS), and white matter hyperintensities (WMH) demonstrates that reconstruction-based approaches, many of which are based on diffusion models, achieved the strongest overall performance, while feature-based methods showed greater robustness to distributional shifts. At the same time, systematic biases linked to lesion size, image contrast, age, sex, and scanners remain major obstacles to clinical translation. The performance of the algorithms on T1w images were reported using two different thresholds, the optimal threshold, defined as the maximum possible Dice score optimized in the test set, thus potentially susceptible to bias but standard in the field. Second, the estimated threshold optimized on the validation data set, then fixed and performance for that threshold reported on the untouched test set, thus unbiased but not the standard in the field. For the large lesions we did not observe a substantial difference between the thresholding procedures. b) The performance of the algorithms with the optimal and estimated thresholds on structural T2 weighted images. c-d) Example images and labels for each dataset and modality. Taken together, we show heterogeneous performance across lesions and modalities, no single method was superior across tasks. Across all evaluations the best performing methods were Disyre followed by ANDi.

We trained six different models belonging to the broader category of reconstruction and feature-based methods. All models described in Sec. 4.3 were trained separately using T1-weighted (T1w) and T2weighted (T2w) MRIs. We used 2976 T1w scans and 2972 T2w from healthy individuals, which amounts to approximately 461,000 imaging slices aggregated from multiple datasets for training. Details regarding the data are described in Tab. 1 and Secs. 4.1 and 4.2.

After training, the models represent a normative con-cept that can be used to calculate deviations at the voxel level and are referred to as anomaly maps.

The calculated anomaly maps are continuous, and a threshold τ is needed for the binary classification of a voxel as either healthy or anomalous. In the literature, this threshold is usually not estimated 11;22;48;55 , and the test set, which typically contains only a single lesion type, is used to determine the optimal τ , potentially leading to inflated benchmarks 83 . In a clinical scenario, this corresponds to instances in which the condition is already known. By contrast, we estimated the threshold by using a separate validation dataset that contains all lesion types observed during the test phase, including tumors, chronic stroke, multiple sclerosis (MS), and white matter hyperintensities (WMH). Specifically, the threshold is tuned for each method by finding the best mean Dice score, which results in an unbiased threshold based on optimal performance in the validation set concerning lesion detection. Note that tuning the hyperparameters or the threshold using anomaly data is in contrast to unsupervised learning, but is the standard approach for deep learning methods in anomaly detection 69 .

We evaluated all implemented algorithms with the optimal and estimated thresholds on a highly heterogeneous collection of lesions spanning multiple pathologies, sizes, contrasts, and distributions, and we refer the reader to Sec. 2.1 for the results. In addition to the evaluation of volumes containing lesions, the performance of the algorithms at the estimated threshold was evaluated on hold-out healthy data in Sec. 2.2. In Sec. 2.3, the effects of domain shifts, lesion size, and their interaction on detection performance were examined. In Sec. 2.4, the effects of demographic variations were systematically evaluated. Lastly, the influence of data abundance for reference definition has been examined in Sec. 2.5. The concept of the benchmark and the categories of the methods used are shown in Fig. 1. For more details regarding the individual methods and the data used, we refer the reader to Sec. 4.

Among all evaluated methods, Disyre 59 achieved the highest overall performance, followed by ANDi 22 and FAE 55 (see Fig. 2). In general, algorithms performed better on larger lesions, on hyperintense lesions (e.g., in T2w images), and on in-distribution (ID) samples. By contrast, feature-based methods consistently struggled to detect small lesions such as MS and WMH, as well as the predominantly small lesions in the ATLAS-N dataset. Interestingly, the same methods appeared more robust to out-of-distribution (OOD) samples in ATLAS-O, suggesting a trade-off between sensitivity to small, subtle lesions and ro-bustness to distributional shifts. Threshold selection emerged as a critical factor for performance, particularly for smaller lesions (MS, WMH, ATLAS-N), indicating that deviations are shaped not only by lesion type but also by lesion size. For larger lesions, threshold sensitivity was more limited and was primarily observed in a subset of algorithms, such as Patch-Core, ANDi, and RIAD. This finding underscores the importance of realistic evaluations and suggests that adaptive thresholding strategies based on the specific individual or their demographics could improve future methods.

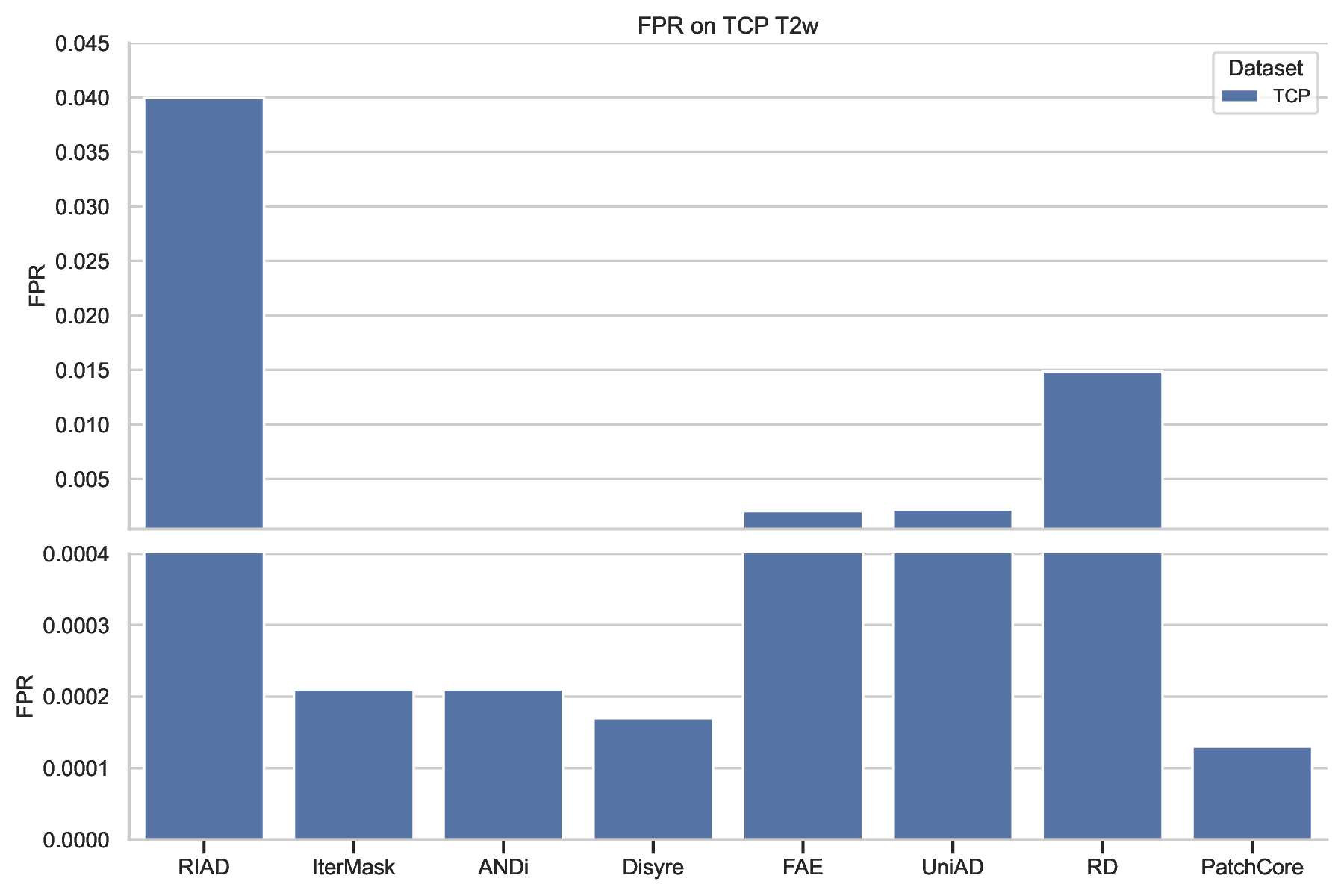

In classical anomaly detection, the maximum acceptable false positive rate is predefined, and the threshold is chosen accordingly 27;47 . Here, we optimized the threshold using the Dice score on the validation dataset, as it balances precision and recall and is the standard metric for medical image segmentation 58 . Since different lesion types (tumors, stroke, MS, WMH) each have distinct ideal balances with the negative class (healthy voxels), we used a validation dataset containing all lesion types to define an unbiased operating point with meaningful detection accuracy and low false positive rates. We evaluated the resulting false positive rate in the healthy cohort to understand how the realistic operating point influences false alarms for healthy individuals under domain shifts that could not have been accounted for with the ideal balance in the validation dataset. Three datasets containing only healthy individuals, imaged with different protocols, were used to evaluate the false positive rate of the algorithms. Note that these datasets represent the general population, where lesions are extremely rare, and samples are generally lesion-free. Applying our algorithms to such data, therefore, provides a good estimate. As shown in Fig. 3, all algorithms performed differently across the three distinct datasets, indicating a strong influence from either imaging protocols or demographics. Overall, the methods showed the highest false positive rates (FPRs) on the 1.5T FCON scans and the best performance on TCP. We attribute the reduced performance on FCON-1.5T to lower image quality and the limited number of 1.5T scans in the training data, while FCON-3T likely includes OOD samples from unseen scanners or protocols. TCP scans belong to the ID distribution, which could explain the higher performance. In general, FPRs in healthy samples correlated with lesion detection performance. Disyre performed best, followed by ANDi and Patch-Core. Interestingly, some feature-based approaches (e.g., PatchCore, UniAD) achieved lower FPRs but exhibited weaker detection, and their performance varied significantly across datasets, indicating bias from other factors. For T2w scans, FPR values were computed on TCP (Suppl. Fig. S1). Importantly, this analysis highlights threshold selection as a key bottleneck for clinical translation: despite using a diverse validation dataset, the thresholds produced large FPR differences across healthy datasets.

)35

Figure 3: False positive rate on healthy brains across all tested algorithms associated with estimated threshold. For some methods we identified high false positive rates and heterogeneous performances across the healthy cohort for T1w images (for T2w see supplement). This shows that the methods were biased for either imaging protocols or demographics and selecting the right decision threshold is critical.

Robustness to domain shifts, particularly those induced by differences in MRI scanner hardware, is essential for clinical translation. Using the ATLAS dataset, we partitioned the data into ID and OOD subsets, stratifying cases by lesion load, i.e., number of anomaly voxels in the volume, to investigate how lesion burden interacts with domain variability (see Fig. 2 and Sec. 2.1). We constructed eight datasets corresponding to four matched ID-OOD pairs, stratified by lesion load percentiles derived from the full ATLAS cohort. We group volumes above the 75th percentile, those above the 50th percentile to the 75th, those above the 25th percentile to the 50th percentile, and those below the 25th percentile. This design ensured that each pair contained scans with comparable lesion burdens, thereby isolating the effects of scanner heterogeneity from lesion size. Statistical evaluation using Mann-Whitney U tests with Benjamini-Hochberg correction revealed a significant decline in segmentation performance for most reconstruction-based methods when applied to OOD data with high lesion burden, while featurebased methods remained largely unaffected, as shown in Fig. 4. The only exception was FAE, which showed significant performance losses in the upper and middle lesion load percentiles. Across all methods, Dice scores decreased with decreasing lesion load, where lesions are smaller and more subtle, thereby challenging algorithmic sensitivity, and the performance gap between ID and OOD samples narrowed for smaller lesions, indicating that lesion size exerts a stronger influence on model performance than scanner-related factors. Nevertheless, even top-performing algorithms displayed marked drops in the top and upper lesion load strata under domain-shift conditions, exposing critical vulnerabilities to scanner differences and lesion variability. The p-values and corrected p-values can be found in Suppl. Table 6. To further test the interaction between lesion load and domain variability, we conducted a Scheirer-Ray-Hare test with the lesion load categories and the ID-OOD dummy variable as the independent variables to predict the Dice scores. All algorithms were significantly The ATLAS dataset had been split by the percentiles of the lesion load. Top corresponds to a lesion load above the 75th percentile, upper -above 50th percentile to 75th, middle -above 25th percentile to 50th percentile, lower -below 25th percentile. Columns marked with * correspond to the distributions of Dices scores that are different according to the Mann-Whitney U test after multiple testing correction with the Benjamini-Hochberg adjusted significance level α = 0.05. Taken together, reconstruction-based approaches are more sensitive to imaging protocols, while feature-based methods are generally robust to this source of variation in the data.

impacted by lesion load; ANDi and Disyre were impacted by the ID-OOD split, and Disyre exhibited a significant interaction term. The degrees of freedom and p-values for the Scheirer-Ray-Hare tests can be found in Suppl. Table 7. These results underscore the urgent need for models that generalize reliably across acquisition settings and patient populations, particularly in scenarios with small, clinically relevant lesions.

The performance of unsupervised anomaly detection methods varied substantially across individuals due to multiple interacting factors. Lesion size was a key determinant of detection accuracy, with larger lesions producing stronger deviations and being more readily detected, while false positive rates showed considerable variation across methods and datasets (see Fig. 3). To further analyze this behavior, we studied the impact of inter-individual anatomical variability linked to demographic attributes such as age and sex in the HCP Aging and BraTS datasets. As shown in Fig. 5, the Spearman rank correlation test with α = 0.05 indicated that models tended to overestimate the occurrence of anomalies in older individuals in the HCP Aging dataset, likely due to age-related changes in brain structure. Interestingly, there was no effect of age on the Dice score. To evaluate differences between the sexes, we calculated a Mann-Whitney U test on the FPR as a proxy for the fairness of our algorithms. All algorithms showed significant differences in performance for the different sex groups, with male brains being predicted as more anomalous than female brains. Most algorithms ex-hibited higher effect sizes on the T1w images, as measured by Cohen’s d, indicating stronger sensitivity to sex-related differences in this modality. Importantly, the top-performing algorithms were among those with the lowest bias, suggesting that high overall accuracy can imply, in our scenario, better fairness across biological groups. Detailed results, including the means and standard deviations of the FPR values for both groups, the corresponding Cohen’s d values, and the full plots for the T1w analyzes, are provided in Suppl. Fig. S2. These findings highlight the need to develop and rigorously validate anomaly detection models that are robust against biological variability. Such resilience is critical not only for the reliable detection of lesions but also for ensuring stable performance in healthy cohorts, thereby supporting both clinical applicability and generalizability across diverse populations.

To assess the influence of data abundance on performance, we tested the impact of diminished training data and experimented with an additional training dataset that comprised 3% of the described training dataset used in the main analysis. For the performance evaluation of the newly trained models in the detection task, only the validation dataset was used due to computational constraints. As shown in Fig. 6, all algorithms except UniAD performed almost identically in terms of the mean Dice score on the validation dataset. Similarly, performance on false positive rates measured with the TCP dataset was stable across training datasets, with two exceptions: UniAD and IterMask. These results suggest that straightforward scaling strategies alone, by enlarging training data, are insufficient to overcome fundamental limitations for most unsupervised anomaly detection tasks.

The analysis was performed on the T1w images, and RIAD was excluded due to difficulties encountered during the optimization procedure for all conducted experiments.

This study presents one of the most comprehensive evaluations of UAD for brain MRI to date, spanning multiple scanners, demographic groups, and four major brain diseases, ranging from stroke and tumors to multiple sclerosis (MS) and white matter hyperintensities (WMH). While recent advances demonstrate clear progress, current approaches remain far from achieving the robustness, sensitivity, and fairness required for clinical deployment 40 . Reconstructionbased methods, particularly diffusion-inspired approaches such as Disyre and ANDi 22;59 , achieved the highest segmentation accuracy, especially for large and hyperintense lesions. Feature-based methods, by contrast, were more resilient to scanner variability but consistently struggled with subtle or small anomalies. Several newly developed reconstructionbased methods have achieved state-of-the-art performance, but no algorithm achieves clinically relevant performance defined by sufficient detection performance, robustness to domain shifts caused by imaging protocols and potentially new populations, and without unintended discriminatory biases 40 . On the contrary, our findings highlight systematic sources of bias, namely that lesion size and image contrast were dominant drivers of accuracy, and false positive rates in healthy cohorts were systematically influenced by demographics, particularly age and sex. Taken together, these results emphasize that future advances must go beyond incremental performance gains. Methodological priorities include increasing sensitivity to small and low-contrast lesions, improving robustness to distributional shifts across scanners and protocols, reducing demographic biases, and refining evaluation procedures to more closely mirror real-world conditions. A central theme that emerges from this study is the critical role of thresholding and evaluation practices. Because UAD produces continuous anomaly maps, binarization requires a decision threshold. While threshold-independent evaluation exists, it does not provide an indication of the performance under a specific binary decision that is needed for any future diagnosis or longitudinal monitoring; therefore, it is less valuable in a medical context. In much of the literature, thresholds are optimized directly on the test set, potentially resulting in inflated performance estimates that would not hold in clinical settings. In contrast, we determined thresholds using a heterogeneous validation set encompassing multiple lesion types and then evaluated them on unseen test data. This more principled procedure yielded clinically realistic performance estimates that showed differences from standard thresholding procedures for some of the compared models (see Fig. 2). Such effects underscore that thresholds are not simple post-hoc technicalities but integral components of the detection pipeline. Effective evaluations and eventual deploy- This analysis showed that the majority of algorithms are irresponsive to more data, highlighting the need for methods that are capable to grain performance when integrating large scale data for anomaly detection.

ment, therefore, require adaptive thresholding strategies potentially conditioned on scanner, protocol, or demographic information, as well as standardized validation procedures to ensure fair and reproducible comparisons across methods. Closely related to this issue are the implicit assumptions underpinning reconstruction-based approaches, which we deem to be a major cause of their shortcomings with respect to clinical usability. The first assumption is that a model trained exclusively on healthy data will always reconstruct a healthy sample. While diffusion-based methods partly relax this assumption through the forward process, enabling a tradeoff between reconstruction fidelity and an image from the data distribution, interference in this process is practically non-trivial 9;22 . Furthermore, control of the reverse process to create conditional samples remains mathematically opaque for diffusion and flow models 50 . More classical methods, such as RIAD or IterMask, employ complex masking strategies to encourage useful reconstructions; however, these do not guarantee that anomalies are removed. Even subtle intensity perturbations can suffice when residual error is used. The second assumption is that the deviation measure between input and reconstruction is inherently suitable for anomaly localization. The commonly used residual error has well-documented drawbacks 54 , as it treats each pixel independently and ignores spatial context. Alternatives such as the Structural Similarity Index Measure (SSIM), as used in the FAE 55 , partly address this by incorporating locality. However, no deviation metric or measure has yet been explicitly designed for brain MRI. Therefore, we suggest that the choice of deviation is as critical as the reconstruction itself, and developing neuroanatomically informed measures could represent a key avenue for advancing reconstructionbased UAD.

In contrast to previous reports 45 , feature-based approaches did not achieve the highest performance in our benchmark. Consistent with earlier analyzes, however, we observed that these methods were particularly weak at detecting small lesions. We hypothesize that this relative decline reflects the rapid advances in reconstruction-based and generative modeling approaches, which have shifted the comparative performance landscape 20;22;48;55;59;62;79;81 . The central assumption underlying feature-based frameworks is that pretrained features provide sufficient representational power to distinguish anomalies from normal tissue in the embedding space. All feature-based methods included here relied on ImageNet-pretrained networks, even when originally developed for brain MRI (e.g., FAE). While such features can yield com-petitive performance, the use of ImageNet pretraining is suboptimal, and the extension to MRI-specific pretraining appears to be a natural progression. Indeed, prior work suggests that MRI-based pretraining can yield small but consistent improvements 45 . We did not pursue this option in the present benchmark, as no recent UAD method has demonstrated state-ofthe-art performance using MRI-pretrained features. The scarcity of such approaches in the literature likely reflects both practical and conceptual barriers. One important barrier is the lack of MRI datasets that are comparably large and harmonized to the scale of ImageNet 1 . Another difficulty is the design of pretext tasks that transfer effectively to the downstream challenge of anomaly detection. Overcoming the former barrier is likely infeasible, but the latter could reinvigorate feature-based pipelines and potentially restore their competitiveness, especially if paired with strategies that address scanner heterogeneity and small-lesion sensitivity.

Sensitivity to domain shifts caused by scanner effects emerged as an important take-home message as a result of our benchmark. By stratifying the ATLAS dataset into in-distribution and out-ofdistribution subsets matched for lesion load, we were able to disentangle the effects of scanner variability from lesion size. Reconstruction-based methods showed pronounced degradation on OOD data, particularly when lesion burdens were high, suggesting that larger lesions may amplify reliance on scannerspecific statistical cues. Feature-based methods were generally more stable across scanners. However, exceptions such as the FAE model highlight how deviation measures strongly influence generalization. We suspect that the FAE’s susceptibility to OOD shifts arises from its use of an image-derived deviation measure, which treats high-resolution feature maps as if they were natural images and therefore inherits scanner sensitivities. Importantly, our results reveal that lesion size and domain shifts can interact, with large lesions amplifying vulnerability to acquisition-related biases, whereas small lesions are inherently more difficult to detect. These findings emphasize that robust generalization to heterogeneous imaging protocols remains an unsolved challenge for any possible future deployment. Address-ing this issue will require explicit domain adaptation strategies, including scanner-aware harmonization 76 , training on more diverse acquisition protocols, and potentially test-time adaptation to local distributions to ensure consistent performance across imaging environments. Demographic factors also had a systematic effect. False positives increased with age in healthy cohorts, reflecting normal age-related changes misclassified as pathology. All algorithms showed significant sex-related differences, with male brains more often flagged as containing anomalous regions. Although the top-performing methods exhibited somewhat reduced demographic bias, these results underscore the need for fairness-aware modeling 10 . Incorporating demographic factors into normative reference frameworks 64 and anomaly detection workflows, explicitly quantifying subgroup biases 65 , and potentially calibrating thresholds in a stratified manner will be essential to ensure equitable performance. More broadly, these observations point to the necessity of developing models that are resilient to natural anatomical diversity and capable of distinguishing genuine pathology from normative variability.

We observed a limited impact of scaling training data. Whereas supervised machine learning typically benefits substantially from larger datasets 21;31 , simply adding more healthy scans yields only minor improvements in UAD performance for some algorithms. This reflects the algorithmic constraints of the UAD approaches, for which training solely on healthy cohorts cannot effectively teach models which deviations are clinically meaningful. Additionally, subtle lesions are often indistinguishable from normal anatomical variation. In line with prior work 45 , our experiments confirmed that even when the training data were drastically reduced, most methods showed comparable lesion detection accuracy and false positive rates for in-distribution evaluations. These results suggest that naive scaling is insufficient to overcome the intrinsic challenges of neuroimaging anomaly detection. Future progress will require qualitatively new strategies, including anatomically informed deviation measures, large-scale medical pretraining with task-aligned self-supervised objectives, and robust domain adaptation frameworks that explicitly account for clinical variability.

Although our study drew on a broad range of datasets spanning multiple lesion types and imaging protocols, the collection cannot fully reflect the diversity of real-world clinical neuroimaging. Rare pathologies 46 , pediatric cohorts 70 , and cases acquired under extreme or atypical imaging conditions remain underrepresented, which may limit the generalization of our conclusions. Similarly, although we explicitly examined the demographic effects of age and sex, other important axes of biological and social variability were not captured. Factors such as ethnicity, comorbidity, medication status, or socioeconomic background can shape brain anatomy and imaging characteristics 51 , and their influence on anomaly detection remains unexplored. Another limitation lies in our choice of evaluation targets. Thresholds were optimized with respect to segmentation accuracy, which provides a clear and widely accepted benchmark but may not align with clinical decision-making needs. Tasks such as patient-level triage, prognosis, or monitoring treatment response may require different operating points or evaluation metrics, emphasizing sensitivity, specificity, or predictive value over voxel-level overlap. Future studies should, therefore, consider multiple evaluation frameworks that reflect the range of clinical contexts in which anomaly detection could be applied. Methodological scope is another constraint. Although we implemented a broad set of state-of-the-art algorithms, not all contemporary or emerging approaches could be included. Having said that, we implemented relevant and current state-of-the-art algorithms while aiming for algorithmic diversity in the methods used. Moreover, although our pipeline was carefully designed to minimize data leakage and benchmark bias 75 , it still cannot reproduce all conditions relevant to clinical deployment. In practice, anomalies are rare, heterogeneous, and often subtle, and scans are embedded in complex diagnostic workflows. These realities pose additional challenges, such as handling incidental findings, balancing false positives against clinical workload, and integrating uncertainty estimates that remain outside the scope of this study. Taken together, these limitations highlight that while our benchmark offers an important step toward the systematic evaluation of UAD in neuroimaging, further work is needed to expand dataset diversity, incorporate broader demographic and clinical factors, refine evaluation criteria, and explore algorithmic strategies in conditions that more closely approximate realworld deployment.

Looking forward, several priorities emerge for future research. First, the design of principled deviation metrics should become a central focus. Residual errors and generic SSIM-based measures have well-documented limitations 54;77 , and future work should aim for neuroanatomically grounded deviations. Having said that, this task is admittedly extremely difficult, and determining whether this ambition is feasible is still subject to current research 7;19;42;43 . Benchmarks like ours, along with further developments using the principles outlined here, will allow us to evaluate such methods in more depth than is currently common. Second, largescale medical pretraining on curated neuroimaging datasets, combined with meaningful self-supervised tasks, could provide domain-native representations that are more sensitive to subtle anomalies than Im-ageNet features. Third, harmonization and domain adaptation need to be built directly into the modeling pipeline to ensure robust performance across scanners and acquisition protocols. Fourth, fairnessaware modeling should be prioritized 10 , with systematic evaluation of demographic biases and strategies to mitigate them. Finally, the evaluation itself must incorporate thresholds, as outlined here, and the reporting of uncertainty, robustness, and fairness alongside accuracy is essential to establish clinical trust.

In conclusion, this benchmark underscores both the promise and the current limitations of UAD in brain MRI. While modern approaches can detect a broad range of lesions, their performance is uneven across lesion types, scanners, and populations. They remain susceptible to bias and thresholding procedures. Addressing these challenges will require innovations that go beyond scaling data or marginal architectural tweaks. By developing principled deviation metrics, MRI-native pretraining, robust domain adaptation, fairness-aware pipelines, and clinically meaningful evaluation frameworks, the field can move closer to reliable, equitable, and actionable anomaly detection in neuroimaging.

Here, we provide information on the data (see Sec. 4.1 and Tab. 1), preprocessing (Sec. 4.2), and the evaluated methods (see Sec. 4.3 and Tab. 2). Additional information about training and tuning for all methods can be found in the supplementary methods.

For training, we used large-scale healthy cohorts, including the Cambridge Center for Aging and Neuroscience dataset (CAMCAN) 72 , the Human Connectome Project (HCP) Young Adult (S1200) dataset 74 , the HCP Development dataset 70 , and the IXI dataset 52 , resulting in 2,976 T1w and 2,972 T2w scans acquired across six scanners. For validation, we randomly selected approximately 10% of the individuals from all other lesion datasets, described below, yielding about 92 T1w and T2w scans. This dataset was used to tune hyperparameters and estimate unbiased thresholds. For testing, we included both healthy and clinical cohorts. The healthy cohort comprised the Transdiagnostic Connectome Project (TCP) 16 , a subset of the 1000 Functional Connectomes Project (FCON) 68 , and the HCP Aging dataset 14 . The clinical cohort was made up of the Multimodal Brain Tumor Segmentation Challenge (BraTS 2020) 5;6;56 , the Anatomical Tracings of Lesions After Stroke (ATLAS v2.0) 49 , the MSSEG and Ljubljana MS lesion datasets from the 2021 Shifts Challenge 53 , and the White Matter Hyperintensities (WMH) Challenge dataset from MICCAI 2017 44 . In total, the test set consisted of 2,221 T1w and 1,262 T2w scans, with the distribution of lesions and healthy samples summarized in Tab. 1 and visualized in Fig. 1. To analyze scanner-related domain shifts, the FCON dataset was divided into 139 scans acquired on 1.5T scanners (München, Oulu, Orangeburg) and 105 scans acquired on 3T scanners (Atlanta, Palo Alto, Ann Arbor). The ATLAS dataset was further partitioned into AtlasI (262 scans from scanners also used during training), AtlasO (227 scans from unseen scanners), and AtlasN (166 scans from scanners with unidentifiable information, which by chance contained mostly smaller lesions). Addi-tionally, the full ATLAS cohort was stratified into four groups (Top, Upper, Middle, and Lower) based on lesion load, using the 25th, 50th, and 75th percentiles, and combined with the scanner-based partitions, resulting in 57 individuals for ID Lower, 34 for OOD Lower, 51 for ID Middle, 58 for OOD Middle, 77 for ID Upper, 58 for OOD Upper, 79 for ID Top, and 77 for OOD Top. These splits allowed us to systematically evaluate algorithm robustness under distributional shifts induced by both scanner variability and lesion burden.

For preprocessing the CAMCAN, IXI, FCON, TCP, ATLAS, and WMH datasets, we applied a pipeline similar to the UK Biobank protocol 2 . Using FSL 37 , all images were reoriented to match MNI152 space 28 (rotation only, no registration). For multimodal datasets, rigid transformations to the individual’s T1w image were computed with FLIRT 36 and applied to align the modalities. This ensured that all subsequent transformations could be calculated on the T1w and transferred to other contrasts. Because Siemens gradient nonlinearity correction requires proprietary files and not all scans were Siemens acquisitions, this step was omitted. Instead, preprocessing proceeded with a field-of-view reduction on the T1w images, as in the UKB pipeline. Nonlinear registration to MNI152 space was performed with FNIRT 3 to generate a standard-space brain mask, which was then inverted and applied for skull stripping across modalities. After skull stripping, all scans were rigidly registered to the SRI24 atlas 61 and resampled to 1 mm isotropic resolution. In cases where large anomalies (e.g., ATLAS lesions) rendered FNIRT unreliable, ROBEX 34 was used for skull stripping. For HCP datasets, we used the minimally preprocessed scans 26 , additionally applying rigid registration and interpolation to SRI24 at 1 mm isotropic resolution for consistency with other datasets. Shifts challenge data 53 was already denoised, skull stripped, bias-field corrected, and interpolated; we therefore only applied rigid registration to SRI24. One Ljubljana case with a failed skull stripping was excluded. BraTS scans had un-

The reconstruction-based approaches used in this study include Reconstruction-by-Inpainting Anomaly Detection (RIAD) 81 , Iterative Spatial Mask-Refining (IterMask) 48 , Aggregated Normative Diffusion (ANDi) 22 and Diffusion-Inspired Synthetic Restoration (Disyre) 59 . We parameterized all reconstruction-based approaches with the same U-Net architecture that was inspired by the DDPM++ model from 71 .

RIAD 81 is a self-supervised approach trained to predict masked regions of varying sizes within an image. The image is first partitioned into regions of size k × k, each of which is randomly divided into n disjoint subsets that serve as the masking patterns. For each subset, the network predicts a reconstruction, and the n reconstructions are combined to yield an image in which every pixel has been estimated by the model. This process is repeated multiple times while varying the parameter k, thereby enforcing predictions at multiple spatial scales. The reconstruction losses across scales are averaged using the multi-scale gradient magnitude similarity measure, which encourages fidelity to structural features. The key idea is to obscure potentially anomalous regions while enabling the network to generalize across diverse lesion types through complex multi-scale masking strategies. In our experiments, we increased k to ensure coverage of large brain lesions while proportionally increasing n, thereby maintaining sufficient contextual information for accurate reconstruction.

IterMask 48 is a self-supervised approach that utilizes two models trained with multiple masking strategies to predict the original image. One model is trained using the masking of low-frequency components in an image. The other model is trained by additionally using randomly generated masks in pixel space. Consequently, both models receive the highfrequency components of the image as input, while the second one is able to integrate the complete frequency information from random image locations for The threshold is determined on a healthy validation set, and the iterative process is terminated once the relative change between consecutive steps falls below a predefined ratio.

ANDi 22 is a diffusion model 32;33;41 trained using Gaussian pyramidal noise, which injects perturbations at multiple spatial scales and thereby enhances sensitivity to low-frequency structures. This training strategy allows the network to effectively reason about broad, slowly varying anomalies throughout the entire denoising trajectory, rather than focusing solely on high-frequency details in the early time steps. To compute anomaly evidence, ANDi evaluates a selected subset of diffusion time steps. At each step, the predicted mean of the Gaussian transition is compared against the image-conditioned mean, and the squared reconstruction error is used as the local deviation measure. This step-wise evaluation captures discrepancies that may emerge at different stages of the diffusion process. To derive a single anomaly map, deviations across timesteps are aggregated using the geometric mean, a choice that emphasizes consistent deviations across scales while attenu-ating spurious errors occurring at isolated steps. The resulting anomaly map thus integrates information across multiple diffusion time steps and frequency ranges, offering a principled estimate of abnormality that is both spatially localized and robust to noise. Disyre 59 is inspired by diffusion models and employs synthetic anomalies to construct a corruption process. Anomalies are generated using a novel Disentangled Anomaly Generation (DAG) framework, which independently samples shape, texture, and intensity attributes from uniform distributions. The shape of each anomaly is created by randomly selecting cuboids, spheres, or other 3D primitives, which are further modified using affine transformations and smoothed with a Gaussian kernel to blend with the surrounding tissue. Texture is defined through Foreign Patch Interpolation (FPI), where a random patch from the training set is inserted into the target image using a convex combination with a randomly sampled interpolation factor. The patch is normalized before insertion to prevent confounding between texture and intensity. Intensity is determined by sampling a bias factor and a tissue class identified through k-means clustering, and then adjusting the intensities of the selected tissue within the anomaly mask according to the bias factor. In combination, these disentangled attributes govern the corruption process, and the network is trained in a diffusionstyle framework to predict the original anomaly-free image, effectively learning to reverse the synthetic corruption.

Feature-based approaches included in this study were Structural Feature-Autoencoders (FAE) 55 , Unified Model for Multi-class Anomaly Detection (UniAD) 79 , Reverse Distillation (RD) 20 and Patch-Core 62 . All feature-based approaches were parameterized by their original network or partially modified to fit the image resolution used in the experiments.

FAE 55 is a convolutional autoencoder that operates on embeddings extracted from multiple layers of a ResNet pretrained on ImageNet. The network is trained to reconstruct these embeddings using the Structural Similarity Index Measure (SSIM) as the loss function. At inference, anomaly detection is performed by computing the SSIM between the input and reconstructed feature maps, followed by averaging the similarities to produce the final anomaly score.

UniAD 79 is a transformer-based network that introduces specialized layer types for anomaly detection. They are introduced to prevent the “identical shortcut” that causes the network to simply copy the input. A key innovation is the neighbor masked attention layer, a variant of standard attention in which tokens from the local neighborhood are masked prior to the attention calculation. In addition, the architecture features a novel decoder that employs multiple query embeddings, which are fused with encoder representations and integrated with the output from the previous layer. In order for the network to learn the identity mapping, the query embeddings need to be sensitive to the input, thereby reducing the reconstruction ability for abnormal samples. Similar to the FAE, it leverages embeddings from an ImageNetpretrained network, specifically EfficientNet; however, unlike the FAE, Gaussian noise is added to the input features, forcing the network to jointly learn reconstruction and denoising. The model is trained with an L2 loss. At inference, anomalies are detected by computing the Euclidean distance between the input and reconstructed pixel-level features.

RD 20 applies a knowledge distillation framework to anomaly detection. A pre-trained ImageNet encoder serves as the teacher network, while a trainable bottleneck embedding module maps the teacher’s representations into a more compact code. In conjunction, a decoder is trained to reconstruct the teacher’s em-beddings from this bottleneck representation, with training guided by maximizing cosine similarity between the reconstructed outputs and the teacher’s original embeddings. The key idea is that, due to the heterogeneous architecture and the compression introduced by the bottleneck, the student network cannot perfectly replicate the teacher’s embeddings for novel or anomalous data. As a result, anomalies can be identified at test time by measuring the cosine similarity between the pixel-level features of the input and output, with lower similarity indicating abnormality.

PatchCore 62 employs a memory bank of preprocessed embeddings for anomaly detection. A WideResNet-50 pretrained on ImageNet is used as the backbone, from which embeddings are extracted from intermediate layers. Prior to assembling the memory bank, the features are refined to enlarge the receptive field: overlapping patches are sampled from the feature maps, and adaptive average pooling is applied to aggregate information from each neighborhood into a single feature vector. The resulting representations form the memory bank of nominal features. To improve efficiency at inference, the memory bank is downsampled using greedy coreset subsampling. Anomaly detection is then performed by computing the mean L2-distance to the nearest entries in the memory bank. In our MRI experiments, we observed that performance improved when considering only the distance to the single closest entry, rather than averaging across multiple neighbors.

All models were trained for a maximum of 100,000 gradient update steps, and checkpoints were saved after every 5000 steps. Then, the best-performing checkpoint was chosen from the validation dataset for each method. We noticed that many methods reached optimal performance on the validation dataset before convergence.

All code, along with our adaptations of the evaluated methods into reproducible and testable workflows, is available on GitHub (https://github. com/AlexanderFrotscher/UAD-IMAG) and has been forked to the MHMlab repository (https://github. com/MHM-lab). Prior to submission, we contacted the lead authors of the original papers and invited them to review our implementations via the shared GitHub repository. This process was designed to ensure transparency, fairness, and author-validated benchmarking.

After obtaining the anomaly maps, three-dimensional median filtering with a kernel size of four is applied to all algorithms. Threshold selection has been performed on the original anomaly maps and has been transferred to the median-filtered ones. For evaluating the anomaly map, many different metrics can be used; that is, all metrics that can be used for binary classification can, in principle, work for the evaluation of the UAD methods. In the medical community, two of these are now widely accepted as the standards: the Dice score and the area under the precision-recall curve (AUPRC). Note that for brain MRI, the area under the receiver operating characteristic curve is less important due to the high class imbalance between positives and negatives. Here, we mainly report the Dice score and present all AUPRC values in the Supplementary Information. Table 1 -4. All threshold-dependent metrics have been calculated on a per-individual basis and averaged across individuals when reporting mean values, whereas the AUPRC has been calculated on the complete dataset once. To evaluate the influence of the scanner (the specific MRI device) and sex effects, the distributions of the performance measures and groups have been analyzed using the Mann-Whitney U test. It is a rank-based test that does not assume a specific parametric distribution and can indicate significant differences for all aspects of the distribution, e.g., location, scale, and shape. Multiple testing corrections using the Benjamini-Hochberg method have been applied to correct for the four datasets corresponding to the different lesion loads. Furthermore, age effects have been analyzed using the Spearman rank correlation test to assess nonlinear correlations between performance and age. For all statistical tests, a significance level α = 0.05 has been used. To analyze the magnitude of the sex effects, Cohen’s d was calculated with the male group as the first group. Therefore, all positive Cohen’s d values indicate that the FPRs were higher for the male group.

AF and TW thank the International Max Planck Research School for Intelligent Systems (IMPRS-IS) for their support. TW acknowledges funding from the German Research Foundation (DFG) Emmy Noether: 513851350 and the BMBF/DLR Project FEDORA: 01EQ2403G. This work was supported by the BMBF-funded de.NBI Cloud within the German Network for Bioinformatics Infrastructure (de.NBI) (031A532B, 031A533A, 031A533B, 031A534A, 031A535A, 031A537A, 031A537B, 031A537C, 031A537D, 031A538A).

A.1 Algorithmic Performance Across Brain Lesions

Here, we report the results of the main analysis in tabular form for the BraTS20 dataset (see Tab. 3), the ATLAS dataset (see Tab. 4), the Shifts dataset (see Tab. 5), and the WMH dataset (see Tab. 6).

The threshold dependent metrics ⌈Dice⌉ and Dice Estimate are calculated per individual, and the average is reported. is calculated on the complete dataset once.

Table 3: Anomaly detection performance measured in AUPRC, ⌈Dice⌉ and Dice Estimate on the BraTS20 test dataset (332 subjects). We report the results without post-processing and when using three dimensional median filtering (MF) with a kernel size of four. In the main paper only the MF values are used in the plots. The false positive rates for all datasets can be found in Tab. 7 as well as the bar plot for the T2w FPRs in Fig. 7 Table 4: Anomaly detection performance measured in AUPRC, ⌈Dice⌉ and Dice Estimate on the T1w images of the ATLAS test dataset (ATLAS-I 243 individuals, ATLAS-O 212 individuals, ATLAS-N 159 individuals). We report the results without post-processing and when using three dimensional median filtering (MF) with a kernel size of four. In the main paper only the MF values are used in the plots.

Here we report the p-values for the different Mann-Whitney U tests before and after multiple testing correction. They can be found in Tab. 8.

A.4 Impact of demographics on false positive rates and lesion identification.

Here we show the results for T1w images of the studied impact of inter-individual anatomical variability linked to demographic attributes such as age and sex in the HCP Aging and BraTS datasets. All additional plots can be found in Fig. 8 and all results in numeric format can be found in Tab. 10.

To further test the performance of the feature-based approaches, different pretrained networks that were trained on the classification task of ImageNet have been selected. The models and pretrained weights from torchvision have been used for all experiments. We observed that version one of the set of weights performed better for all anomaly detection tasks tested and that ImageNet specific normalization for mean and standard deviation on the images yielded slightly reduced performance. In Fig. 9 we additionally show FAE and PatchCore with features extracted from EfficientNet-B4 and UniAD when using features from WideResNet50. Furthermore, we updated the network of the FAE, as it was the least sophisticated network used in the general analysis. We introduced residual connections and a second convolution at each stage to increase the depth of the originally shallow network. This configuration uses the features of the pretrained EfficientNet-B4, and we call it FAE-v2. The specific combination of the structural similarity index (SSIM) with deep features used by the FAE showed remarkable consistency throughout the different networks employed. The FAE-v2 showed no improvements over the FAE. We argue that this observation points to fundamental problems in the design of UAD methods. A better performance on the pretext task usually does not correlate with downstream detection performance. This behavior is observable for two of the reconstruction-based approaches and for all feature-based approaches in the conducted analysis. In the main paper, we approach this problem by using the checkpoints that achieved maximum performance on the validation dataset to determine when the method is most valuable for the downstream task. In order to understand the outstanding performance of Disyre in our benchmark, we conducted a small ablation study on the validation dataset. This analysis is similar to the scaling analysis shown in the main part of the manuscript. We decided to remove components of the Disyre method that could be used for the majority of the other methods. These include the specific preprocessing used and the patch-based paradigm. The results can be seen in Fig. 10. We observed that the patch-based paradigm could be removed without any performance loss; instead, we observed a small increase in performance. In contrast, the specific preprocessing used seems to be an integral part of the Disyre model and its ability to achieve state-of-the-art performance. The preprocessing includes an elastic transformation, various intensity transformations, and a mirror transformation. We did not study the effect of this preprocessing on the other methods and want to note that an interaction between the synthetic anomaly generation pipeline and this preprocessing is possible, i.e., only in combination is a robust way of generating synthetic anomalies possible.

Here, we report the architectures used and method-related details. In general, we aimed to reduce heterogeneity between the methods regarding preprocessing and architectural choices while maximizing individual performances. Nevertheless, a risk remains that the methods have not achieved optimal performance due to suboptimal choices of hyperparameters or misalignment between performance on the validation dataset and the test sets. All reconstruction-based methods that originally used a U-Net-like architecture were equipped with the same U-Net, which is a slightly modified version of the DDPM++ version used in the diffusion model literature 71 . We opted for this choice because it ensures that older methods start on equal footing with the newly published methods. The DDPM++ model integrates the BigGAN residual blocks and scales the residual connections by 1 √ 2 . Note that we usually omit the scaling for all non-diffusion model methods. Additionally, we introduced a modification known as efficient U-Net, which swaps the order of the downsampling operation and the first convolution of each block 66 , use the dropout modification proposed in 33 , employ the memory efficient attention mechanism provided by PyTorch, and zero-initialize the last convolution in each block.

In general, we build our RIAD implementation on the publicly available third-party code provided by https: //github.com/plutoyuxie/Reconstruction-by-inpainting-for-visual-anomaly-detection. We removed the median filtering in the gradient magnitude similarity, as we could not find this calculation step in the original paper. Additionally, we added a small number to the square root calculation used in the The performance of the algorithms on T1w images were reported using two different thresholds, the optimal threshold, defined as the maximum possible Dice score optimized in the test set, thus potentially susceptible to bias but standard in the field. Second, the estimated threshold optimized on the validation data set, then fixed and performance for that threshold reported on the untouched test set, thus unbiased but not the standard in the field. Here we tested multiple feature-based approaches while altering the pretrained network.

ANDi was developed by the first author of this paper, and this newly provided implementation can be seen as the up-to-date variant of the method. We mainly followed the diffusion model implementation of 33;67 and used a Variational Diffusion Model (VDM) with a cosine schedule that is adjusted for the image size used, as in 33 . We used 56x56 as the base size for the shifting operation. Additionally, we use velocity prediction during training and learn to minimize the distance between the ground truth noise and the predicted noise, which is calculated from the velocity. During inference, we calculate the difference between the ground truth latent and the predicted latent, as in the original ANDi implementation. We aggregate the individual deviations using the geometric mean and change the Pyramid noise to standard Gaussian noise. The new noise schedule requires different values for the parameters T l , T u and we have observed improvements in anomaly detection performance upon introducing this change. For the Pyramid noise, we opt for c = 0.9 and image slices are scaled to be in the range of [-1, 1] before using the network or adding the noise. All hyperparameters can be found in Tab. 13. on the validation dataset. First the full model, a model without the patch-based paradigm that takes as input the full slice and a model that omits the Disyre specific preprocessing. We observed that the specific preprocessing used is important for the Disyre model to achieve its optimum performance.

We built our Disyre implementation on the official implementation (https://github.com/snavalm/disyre ) and used Disyre v2 for all experiments. We downloaded the shapes provided on GitHub (needed for the DAG) and kept all hyperparameters of the official implementation, besides the tissue classes estimated with k-means and the architecture of the network. For the tissue classes, we run k-means on our training dataset for T1w and T2w images separately and use the respective clusters for the different training runs. As mentioned in Sec. A.6, we maintain the specific preprocessing pipeline of Disyre as well as the patch-based paradigm for our implementation and provide results for ablations regarding these options. After the DAG pipeline, we bring the slices to [-1, 1] to follow the typical diffusion model literature. All hyperparameters can be found in Tab. 14.

For FAE, we used the implementation provided by the UPD study 45 (https://github.com/iolag/UPD _ study/), as the authors of this benchmark are also the authors of the original article. For training, we use the SSIM with a window size of five, whereas for testing, we use a window size of 11. We experienced better results with this setup on the larger images (feature maps) and the architecture of the network is adapted to work with this larger resolution. We observed no difference when using the ImageNet specific feature normalization. All hyperparameters can be found in Tab. 15.

We built our implementation of UniAD on the official code available at https://github.com/zhiyuanyou/ UniAD. We have tried different variants of UniAD by altering the feature size and masking strategy used in the modified attention mechanism but ultimately found the original hyperparameters to work best. In our 9: P-values for the independent variables and the interaction term as well as the degrees of freedom (site, size, interaction) used for the Chi-Square approximation for the Scheirer-Ray-Hare tests. The Dice scores for each method were selected as the dependent variable and the lesion load categories and the ID-OOD dummy variable as the independent variables. The reported values are derived from median filtered anomaly maps and the estimated threshold.

first model, and then iteratively applying the second model on the resulting mask from the previous step, aiming to shrink the mask towards the anomalies. At each iteration, a new mask is generated by thresholding the reconstruction error from the previous step.

IterMask 1.67 × 10 -1 1.50 × 10 -1 2.15 × 10 -2 5.50 × 10 -4 w/ MF 1.60 × 10 -1 1.42 × 10 -1 1.71 × 10 -2 2.10 × 10 -4 ANDi 3.71 × 10 -2 2.80 × 10 -2 1.18 × 10 -2 8.30 × 10 -3 w/ MF 4.82 × 10 -3 5.83 × 10 -3 3.79 × 10 -3 2.10 × 10 -4 Disyre 2.56 × 10 -3 5.00 × 10 -3 1.69 × 10 -3 1.08 × 10 -3 w/ MF 8.20 × 10 -4 1.99 × 10 -3 4.00 × 10 -4 1.70 × 10 -4 FAE 1.77 × 10 -2 1.49 × 10 -2 3.01 × 10 -3 2.67 × 10 -3 w/ MF 1.44 × 10 -2 1.23 × 10 -2 2.23 × 10 -3 2.04 × 10 -3

📸 Image Gallery