ORION: Teaching Language Models to Reason Efficiently in the Language of Thought

📝 Original Info

- Title: ORION: Teaching Language Models to Reason Efficiently in the Language of Thought

- ArXiv ID: 2511.22891

- Date: 2025-11-28

- Authors: Kumar Tanmay, Kriti Aggarwal, Paul Pu Liang, Subhabrata Mukherjee

📝 Abstract

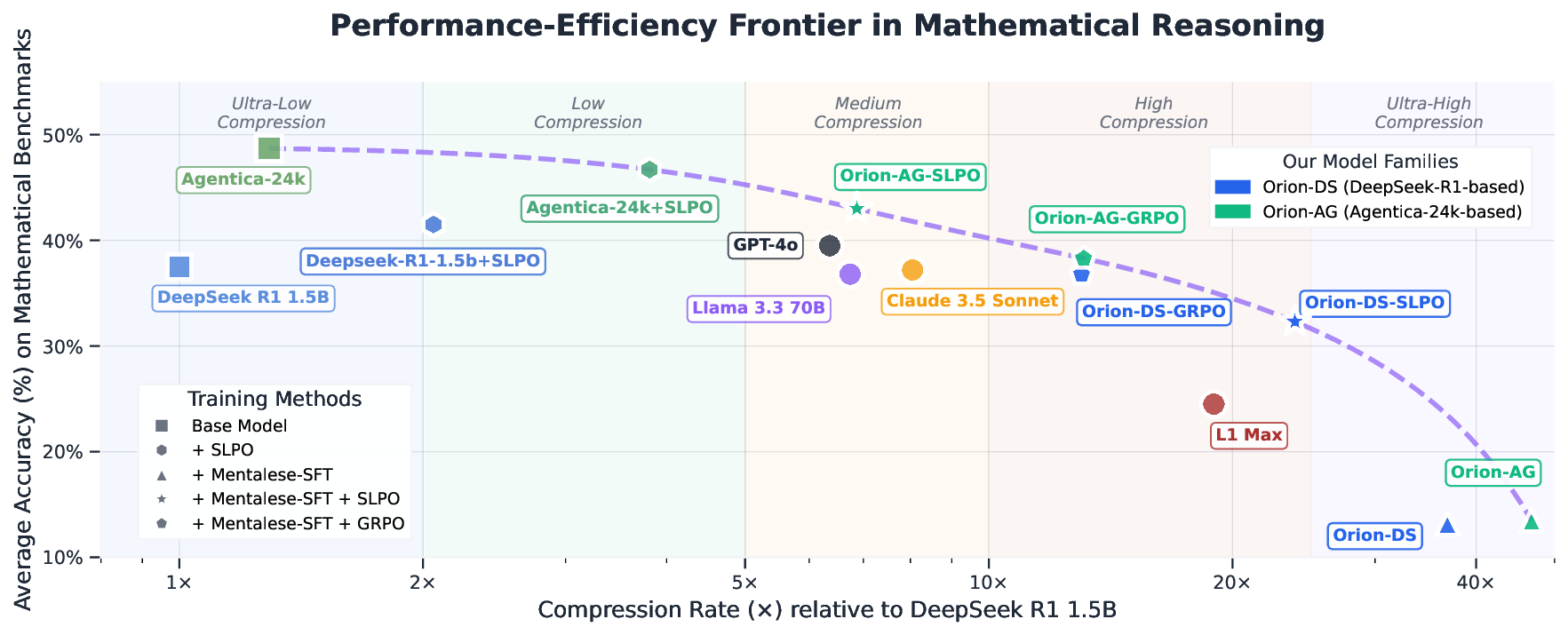

Large Reasoning Models (LRMs) achieve state-of-the-art performance in mathematics, code generation, and task planning. However, their reliance on long chains of verbose "thinking" tokens results in high latency, redundancy, and incoherent reasoning paths. Inspired by the Language of Thought Hypothesis -which posits that human reasoning operates over a symbolic, compositional mental language called Mentalese-we introduce a cognitively motivated framework that trains models to reason in a similar compact style. Mentalese encodes abstract reasoning as ultra-compressed, structured tokens, enabling models to solve complex problems with far fewer steps. To achieve both efficiency and accuracy, we propose SHORTER LENGTH PREFERENCE OPTIMIZATION (SLPO), a reinforcement learning method that directly optimizes models to generate concise yet correct reasoning by rewarding shorter solutions that maintain high accuracy while flexibly allowing longer reasoning when complexity demands it. When applied to Mentalese-aligned models, SLPO achieves much larger compression rates by enabling compressed reasoning that preserves the benefits of detailed thinking without the computational overhead, allowing us to present the best-performing models at each compression level along the performance-efficiency Pareto frontier. Across mathematical benchmarks -including AIME 2024 & 2025, Minerva-Math, OlympiadBench, Math500, and AMC -our ORION models generate reasoning traces with 4-16× fewer tokens, achieve up to 5× lower inference latency, and reduce training costs by 7-9× relative to the base DeepSeek R1 Distilled model, while maintaining 90-98% of the baseline accuracy. ORION models also surpass Claude and ChatGPT-4o by up to 5% in accuracy while maintaining 2× compression. Our findings demonstrate Mentalese-style compressed reasoning offers a breakthrough toward human-like cognitive efficiency, opening new possibilities for real-time, cost-effective reasoning without sacrificing accuracy. 1 1× 2× 5× 10× 20× 40× Compression Rate (×) relative to DeepSeek R1 1.5B 10% 20% 30% 40% 50% Average Accuracy (%) on Mathematical Benchmarks📄 Full Content

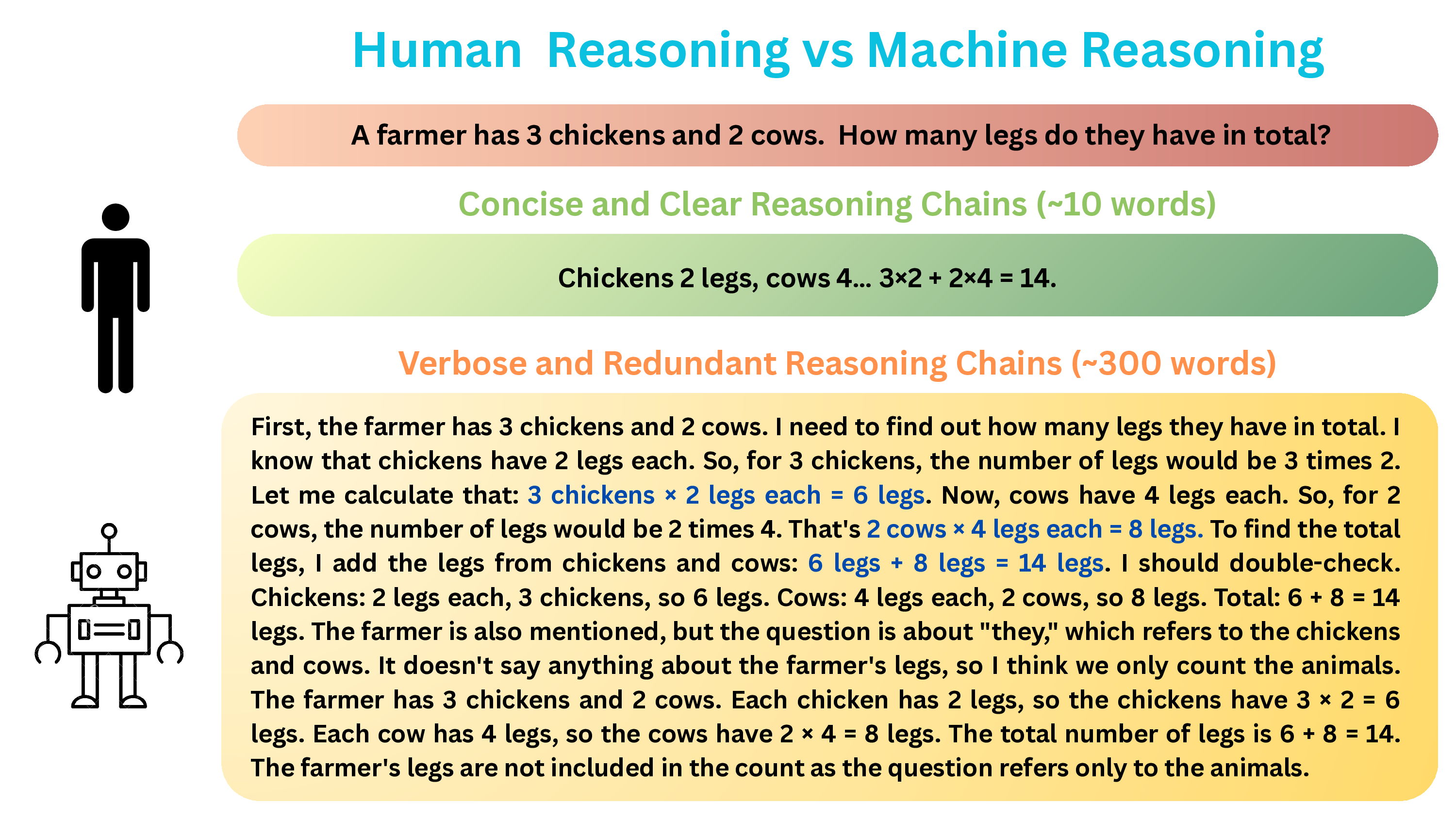

Recent advances such as OpenAI o1 (OpenAI et al., 2024b) and DeepSeek R1 (DeepSeek-AI et al., 2025) have reshaped how we think about language model reasoning. By letting models “think before they answer,” these systems dramatically improved credibility and performance-achievements that were once thought impossible for LLMs (Wu et al., 2024). Explicit reasoning has thus emerged as a central focus of LLM research (Xu et al., 2025). Recent work such as DeepScaleR: Surpassing o1-Preview with a 1.5B Model by Scaling RL demonstrates that even relatively small models (1.5B parameters) can outperform OpenAI’s O1-Preview-which is widely assumed to be significantly larger, though its scale has not been publicly disclosed-by leveraging reasoning-focused reinforcement learning techniques such as RLVR, where models generate intermediate “thinking” tokens for self-verification (Luo et al., 2025b). This finding underscores that scaling in reasoning depth can, in some contexts, rival scaling in parameter size. The key challenge now lies in transforming this promise into robust, efficient, and trustworthy deployments, which we address in the next section. However, the promise of RLVR comes with significant trade-offs. Training is computationally expensive, with rollout generation leaving GPUs idle for long periods (Fu et al., 2025). Even relatively small models such as 1.5B parameters can take days to train under RL fine-tuning regimes (Zheng et al., 2025). Moreover, R1-style reasoning traces (shown in Figure 2) are often verbose, redundant, and unnatural -a far cry from human cognition, which tends to rely on short and efficient thought steps (Sui et al., 2025). Building on the Language of Thought hypothesis (Fodor, 1975), which suggests that human cognition unfolds through short compositional thought units rather than verbose natural language traces, we propose a training framework that restructures the reasoning style of current reasoning-oriented LLMs in a symbolic internal language that we call Mentalese. While humans arrive at intuitive and concise solutions, LLMs often produce verbose and redundant reasoning chains even for simple problems. We bridge this gap by developing methods that encourage models to reason more like humans-clear, efficient, and direct-while preserving accuracy. Grounded in the Language of Thought hypothesis, human reasoning compresses complex ideas into minimal symbolic steps, reflecting cognitive efficiency. Emulating this compact reasoning reduces redundancy in machine outputs, improving both interpretability and token efficiency.

In our framework, models are first aligned with this reasoning process through supervised finetuning on reasoning traces in Mentalese, namely, concise compositional sequences that capture only the essential steps required for problem solving. However, aligning models to Mentalese by supervised fine-tuning alone leads to a substantial drop in performance relative to the base model. To overcome this, we introduce Shorter Length Preference Optimization (SLPO), a reinforcement learning objective with a verifiable reward that balances brevity and correctness. Unlike tokenpenalization methods (e.g., L1-style objectives (Aggarwal & Welleck, 2025)) that impose arbitrary length budgets-often forcing models to under-reason on difficult problems and over-reason on easy ones-SLPO instead rewards relative efficiency: among correct rollouts, shorter solutions receive a bonus. This naturally biases the model toward concise reasoning when tasks are simple, while still allowing it to allocate more steps when necessary in the same reasoning structure. By reward-ing concise but correct solutions, SLPO avoids verbosity while recovering most of the performance lost during supervised fine-tuning on Mentalese, thereby yielding efficient reasoning that scales at inference time.

To highlight both domain-specific performance and generalization, we evaluated our suite of trained models (ORION 1.5B) on mathematical reasoning in the domain and out-of-the-domain tasks such as GPQA, LSAT, and MMLU. Our ORION-AG-SLPO 1.5B surpasses GPT-4o (OpenAI et al., 2024a), Claude 3.5 Sonnet (Anthropic, 2024), and Llama 3.3 70B (Grattafiori et al., 2024) by an average of 6 pp2 in mathematical reasoning and outperforms DeepSeek-R1 1.5B (DeepSeek-AI et al., 2025) by 7 pp with a 7× reduction in reasoning length (Figure 1). ORION-DS-GRPO 1.5B achieves 14× compression relative to DeepSeek-R1 1.5B. Beyond in-domain gains, our ORION models also generalize well: on out-of-domain tasks, they improve over the base model by 1 pp while achieving 15× compression (Table 2). In addition to token efficiency, our experiments show that training with Mentalese stabilizes optimization and reduces training time by 7-9× compared to directly training the base model with RLVR, leading to substantial savings in training cost. Beyond benchmarks, we hypothesize that these ideas are especially relevant for agentic LLM systems, where reasoning models are rarely deployed due to latency and cost: verbose generations can overwhelm communication channels (Kim et al., 2025). A compressed reasoning style, reinforced through SLPO, has the potential to dramatically reduce this overhead-making reasoning-capable agents not only more accurate but also more practical to deploy in real-world settings. Our main contributions are as follows:

• Reasoning compression framework. We propose a novel and efficient reasoning compression framework via Mentalese alignment for restructuring the reasoning style of current LLM, producing compact yet faithful symbolic reasoning.

• Reward function. We propose Shorter Length Preference Optimization (SLPO), an adaptive objective that dynamically balances correctness with brevity, eliminating the need for rigid length penalties.

• Dataset. We release MentaleseR-40k, a dataset of ultra-compressed reasoning traces for 40k math problems, generated under symbolic constraints inspired by the Language of Thought Hypothesis (LOTH), to support future developments and foster research on efficient reasoning.

• Experiments and best practices. We conduct extensive evaluations and identify best practices to apply GRPO and SLPO, showing how they achieve different levels of compression and where each method is most effective.

Efficient Reasoning in Large Language Models. Since Wei et al. (2022b) demonstrated the effectiveness of chain-of-thought (CoT) prompting, subsequent work has focused on scaling testtime computation to improve performance in mathematical problem-solving, code generation, and complex reasoning tasks. Strategies include parallel sampling of multiple reasoning paths (Wang et al., 2022;Yue et al., 2024;Chen et al., 2023), tree search and planning (Yao et al., 2023;Besta et al., 2024), and iterative refinement methods (Madaan et al., 2023). Recent reasoningspecialized models, such as OpenAI’s o1 (OpenAI et al., 2024b), DeepSeek-R1 (DeepSeek-AI et al., 2025), and Qwen-QwQ (Yang et al., 2025), internalize the ability to generate extended reasoning traces.However, these methods often suffer from the overthinking phenomenon (Sui et al., 2025;Chen et al., 2025), where models generate excessively long reasoning traces. While increased length can improve accuracy up to a point (Wu et al., 2025), it also introduces redundancy, higher inference latency, and even accuracy degradation due to compounding errors (Hassid et al., 2025;Lee et al., 2025). This trade-off has motivated work on more efficient reasoning. RL-based post-training methods have been widely explored to control reasoning length. L1 (Aggarwal & Welleck, 2025) enforces user-specified budgets, DAST (Shen et al., 2025) adapts budgets based on problem difficulty, while O1-Pruner (Luo et al., 2025a) uses reference-based pruning. Other approaches, such as Kimi 1.5 (Team et al., 2025) and Training Efficient (Arora & Zanette, 2025), use sampled rollouts to reward shorter or average lengths. ShorterBetter (Yi et al., 2025) further introduces the idea of rewarding the shortest correct response, highlighting the existence of problem-dependent optimal reasoning lengths. Our work complements these by introducing SLPO, which adaptively prefers concise correct reasoning without penalizing necessary longer derivations, enabling over 10× compression with minimal loss in accuracy.

Chain-of-Thought and Alternative Reasoning Formats. CoT reasoning has become a dominant paradigm for enhancing reasoning in LLMs, either via prompting (Wei et al., 2022a;Fu et al., 2022;Zhou et al., 2022) or through post-training with supervised finetuning (Yue et al., 2023;Yu et al., 2023) and reinforcement learning (Trung et al., 2024;Shao et al., 2024b;Zhou et al., 2025). Theoretical analyses link CoT to increased expressivity and effective depth in transformers (Feng et al., 2023;Merrill et al., 2023;Li et al., 2024). However, natural-language CoT traces are verbose, redundant, and not always faithful to the model’s underlying reasoning process (Turpin et al., 2023;Chuang et al., 2024). Recent research has explored alternatives. Structured or symbolic CoT formats aim to compress reasoning into more compact representations, such as symbolic operators, patterns, or abstract primitives (Madaan & Yazdanbakhsh, 2022;Paul et al., 2024). Other works examine latent reasoning, where intermediate computation is implicit in hidden representations rather than externalized tokens (Yang et al., 2024;Biran et al., 2024;Shalev et al., 2024). Techniques such as back-patching (Biran et al., 2024), filler tokens (Pfau et al., 2024), or knowledge distillation into latent reasoning (Deng et al., 2023;2024) push beyond explicit CoT. Our proposed Mentalese Chain-of-Thought builds on this line of work by introducing a symbolic, cognitively motivated reasoning language inspired by the Language of Thought Hypothesis. By replacing verbose natural language with structured symbolic primitives, Mentalese CoT achieves order-of-magnitude compression while retaining faithfulness and interpretability. Combined with SLPO, this framework demonstrates that both representation and optimization are critical for efficient and reliable reasoning.

In this section, we present our methodology, which integrates symbolic reasoning alignment with reinforcement learning for concise yet accurate performance. We introduce Mentalese, a compact symbolic reasoning format, and Group Relative Policy Optimization (GRPO), a group-based extension of PPO for reasoning optimization. Our main contribution, Shorter Length Preference Optimization (SLPO), refines GRPO by rewarding brevity without penalizing necessary longer reasoning. Finally, we propose RLVR, a two-stage pipeline that first aligns models to Mentalese via supervised finetuning, then applies GRPO or SLPO with verifier feedback. Together, these components yield 10-20× compression in reasoning traces while maintaining accuracy and efficiency across benchmarks.

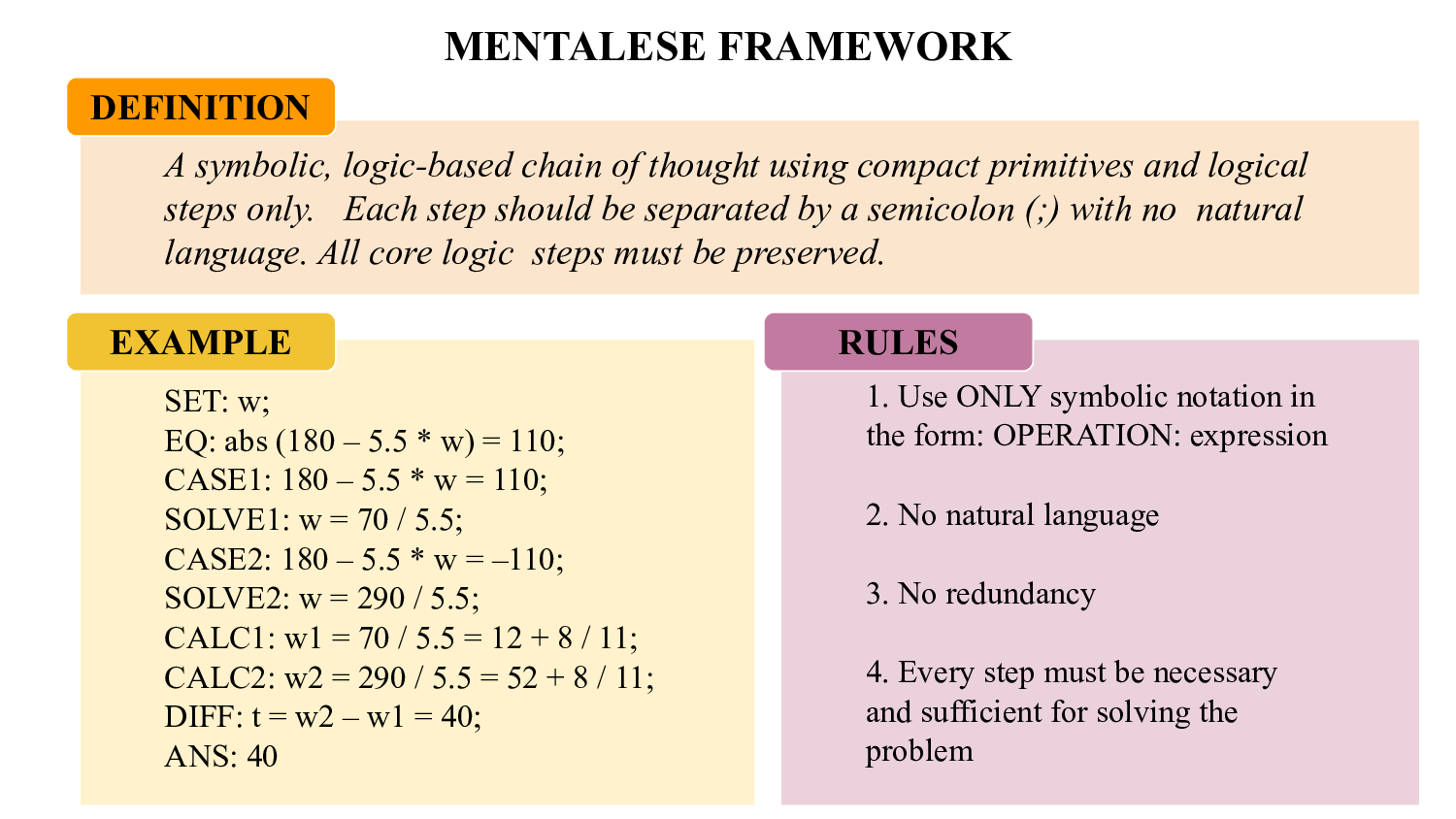

We first introduce Mentalese, a cognitively motivated reasoning format inspired by the Language of Thought Hypothesis (Fodor, 1975;Rescorla, 2024). According to this hypothesis, human cognition operates not directly in natural language, but in an internal representational system characterized by compact, symbolic structures. Translating this perspective to Large Reasoning Models (LRMs), we hypothesize that verbose natural language explanations commonly used in chain-of-thought prompting especially the DeepSeek R1 reasoning style, are not essential for reasoning, and that more efficient symbolic primitives can better capture the core logical operations underlying problem-solving.

Formal definition. Let O be a finite set of operators (e.g., SET, EQ, CASE, SOLVE, CALC, DIFF, ANS) and let E be the set of symbolic expressions over variables, numbers, and function symbols (e.g., +, -, ×, ÷, abs). A Mentalese step is a pair s t = (o t , c t ) with o t ∈ O and expression c t ∈ E rendered as the string OPERATION:expression;. A Mentalese trace for a question q is a finite sequence M = (s 1 ; . . . ; s T ) that is well-typed and executable under the step semantics below and that culminates in exactly one terminal ANS:e; step. The boxed final answer is e ⋆ , where e ⋆ is the value denoted by e. We denote the set of valid traces by M.

Unlike traditional CoT, which uses free-form text, Mentalese encodes reasoning in canonical steps of the form OPERATION:expression;, joined by semicolons to form minimal yet complete traces. This yields three advantages: (i) Compression -eliminating redundant tokens for up to 10× shorter reasoning; (ii) Faithfulness -each step is necessary and sufficient; (iii) Cognitive alignment -resembling structured mental representations rather than verbose text.

To build MENTALESE-40K, we adapted the DEEPSCALER-PREVIEW-DATASET (Luo et al., 2025b), covering 40k+ math problems from AIME (1983AIME ( -2023)), Omni-Math, and STILL. We used GPT-4.1 with a structured prompting framework (Figure 3)-including a formal definition, syntactic rules, and examples-to generate Mentalese traces. After light curation (removing 65 malformed cases), the resulting dataset was used for supervised fine-tuning. For RLVR, we instead relied on the original QA pairs, letting the verifier assess correctness while optimizing for concise reasoning. Refer Appendix A.3 for some of the samples from MENTALESER-40K.

A symbolic, logic-based chain of thought using compact primitives and logical steps only. Each step should be separated by a semicolon (;) with no natural language. All core logic steps must be preserved.

While PPO (Schulman et al., 2017) provides a strong baseline for policy optimization, it operates at the single-sample level: each rollout is evaluated independently using a value function to estimate its advantage. However, in reasoning tasks where multiple candidate solutions can be generated for the same question, evaluating rollouts in isolation discards useful information about the relative quality of responses within a group. For example, if a model generates five candidate solutions, some correct and some incorrect, we are less interested in their absolute values than in how each compares relative to others in the same set. This motivates Group Relative Policy Optimization (GRPO) (Shao et al., 2024a), which eliminates the explicit value function and instead estimates the advantage by normalizing rewards within groups of samples drawn for the same prompt.

Concretely, for a question-answer pair (q, a), we sample a group of G responses {o i } G i=1 from the current policy. The reward of each response r i is converted into a group-relative advantage via normalization:

This design ensures that advantages highlight which responses are better or worse relative to the group, rather than depending on an absolute critic model.

GRPO then optimizes a clipped surrogate objective similar to PPO but with a directly imposed KL penalty:

where r i,t (θ) = π θ (oi,t|q,oi,<t) π old (oi,t|q,oi,<t) is the token-level importance ratio.

There has been a growing interest in adaptive reasoning methods that redefine the GRPO formulation by incorporating explicit thinking budgets. For instance, prior works such as LCPO constrain reasoning lengths by enforcing unnatural fixed or maximum token budgets. More recently, grouprelative formulations have also been proposed that define rewards based on the relative lengths of responses within a group. However, these methods tend to be overly rigid: they over-penalize the longest solutions even when they converge to right answer, and in cases where no correct solution exists, their length-normalization can still distort the reward landscape. This strictness can suppress necessary reasoning and lead to degenerate behavior.

To overcome these issues, we introduce Shorter Length Preference Optimization (SLPO), a reinforcement learning strategy that balances conciseness with correctness softly. Crucially, SLPO never penalizes a correct but necessarily long reasoning when it is the only valid option, and it does not distort rewards in cases with no correct solution. Instead, it adaptively rewards shorter correct traces when multiple valid derivations exist, while preserving correctness as the primary training signal.

Different problems naturally require different amounts of reasoning. For example, a simple arithmetic task such as 2 + 2 requires no intermediate steps, while Olympiad-style geometry problems demand much longer derivations. A reward function that ignores this variability either pushes the model toward artificially verbose chains (reward hacking under fixed budgets) or toward overly terse and often incorrect responses (under strict length penalties). SLPO resolves this by defining preferences relative to the observed range of correct reasoning lengths for each problem instance.

Formally, for a given rollout group G(x i ) = {y 1 , y 2 , . . . , y n } corresponding to prompt x i , let C(x i ) = {y j ∈ G(x i ) : R correctness (y j ) = 1} denote the set of correct responses. We define:

where ℓ(y) is the token length of response y. The total reward for candidate y curr is then:

where L curr is the length of the current response, R correctness ∈ {0, 1} is determined by a verifier, and α controls the trade-off between accuracy and conciseness. Larger values of α emphasize brevity, whereas smaller values prioritize correctness regardless of length. In all experiments, we set α = 0.1, which provided a stable balance across benchmarks.

By construction, SLPO avoids the failure modes of previous group-relative and L1-based formulations: it does not over-penalize long but uniquely correct solutions, and it does not distort reward landscapes when no valid solutions exist. Instead, it consistently encourages models to discover the shortest correct reasoning trace whenever possible. This makes SLPO especially well-suited for mathematical reasoning, where optimal reasoning lengths vary significantly across problems.

We now present our complete training pipeline, which consists of two stages: supervised alignment on Mentalese traces, followed by reinforcement learning with verifiable rewards (RLVR).

Stage 1: Supervised Finetuning on Mentalese. Let D = {(q i , a i , m i )} M i=1 be our dataset with question q i , ground-truth final answer a i , and Mentalese reasoning trace m i . Each training prompt is structured as: τ (q i ) = q i + ‘Let’s think step-by-step and answer within \boxed{}.’ with target output as:

Starting from a pretrained base model π 0 , we obtain a Mentalese-aligned model π SFT via supervised finetuning:

Stage 2: Reinforcement Learning with Verifier Rewards (RLVR). The SFT model π SFT is further refined using verifier-based reinforcement learning. For each question q i , the policy generates N candidates G(q i ) = {y (1) , . . . , y (N ) }; a verifier checks the boxed answer â against a i and assigns a correctness reward R acc ∈ {0, 1}. The clipped surrogate objective with KL regularization is:

where r θ (y) = π θ (y|q) πold(y|q) and Â(y) is computed from either the GRPO or SLPO formulation (see previous subsections).

Depending on the chosen reward function, RLVR yields a policy π GRPO or π SLPO :

SFT alignment anchors the model to a compact single-chain reasoning format, ensuring that outputs conform to the Mentalese structure as shown in Figure 4. However, this alignment often comes at the cost of reduced accuracy, since the base model initially performs well with long and verbose reasoning chains. RLVR provides the complementary step: by instantiating it with either GRPO or SLPO, the model learns to recover accuracy while retaining the compact reasoning format. RLVR enables the model to refine and extend its reasoning inside the learned structure, adding useful steps when necessary but avoiding unnecessary verbosity. This combination not only restores the lost performance but also yields consistent improvements in overall reasoning efficiency and accuracy across benchmarks.

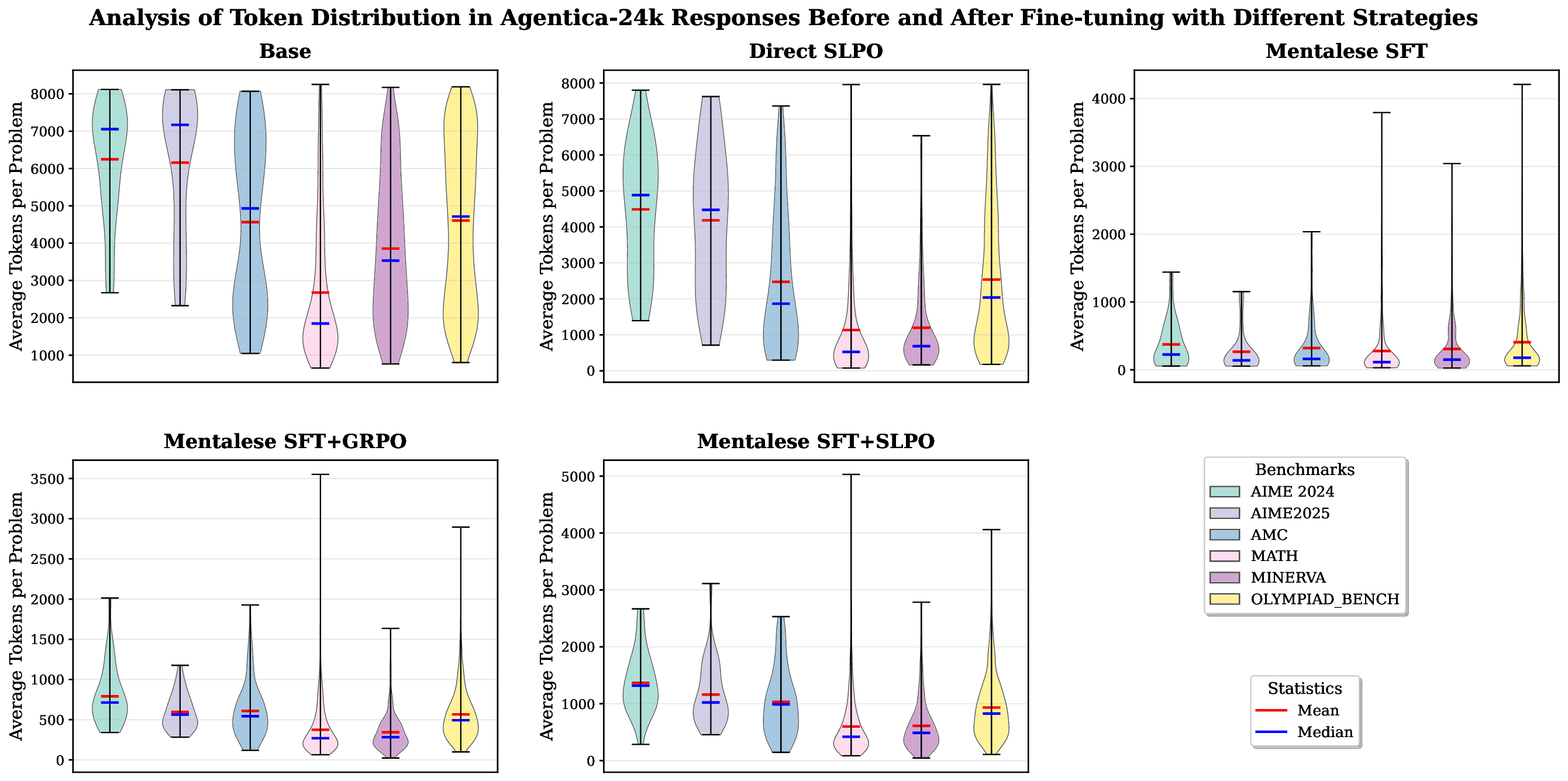

Our experimental study is organized around five research questions. First, we ask how effective the proposed Mentalese representation is in compressing reasoning traces while preserving task performance. Second, we investigate which reinforcement learning algorithm (GRPO or SLPO) best recovers the performance gap introduced by compression. Third, we evaluate the standalone effectiveness of SLPO in balancing conciseness and correctness. Fourth, we explore best practices by identifying when each algorithm is most suitable, particularly across different regions of compression. Finally, we analyze the efficiency and stability of our RLVR methods during training.

Models and Baselines. To evaluate our method, we consider both base models and competitive baselines. Our primary comparison point is DeepSeek-R1-Distill-Qwen-1.5B (DeepSeek-AI et al., 2025), a distilled variant of Qwen-2.5-1.5B-Instruct fine-tuned on reasoning traces from DeepSeek’s R1 model, which we denote as DEEPSEEK-R1-1.5B. We also include DeepScaleR-1.5B-Preview (Luo et al., 2025b), the original release without length-control modifications, referred to as AGENTICA-24K. For completeness, we tested the base Qwen-2.5-Math-1.5B model (Qwen et al., 2025); however, it collapsed under RLVR fine-tuning due to NaN gradient norms, so it is excluded from final evaluations. In addition, we benchmark against L1-Max (Aggarwal & Welleck, 2025), a strong baseline derived from AGENTICA-24K using Length-Controlled Policy Optimization (LCPO), which achieves more than 10× compression. While this approach effectively reduces verbosity by enforcing a fixed token budget, it lacks adaptability to varying problem difficulties.

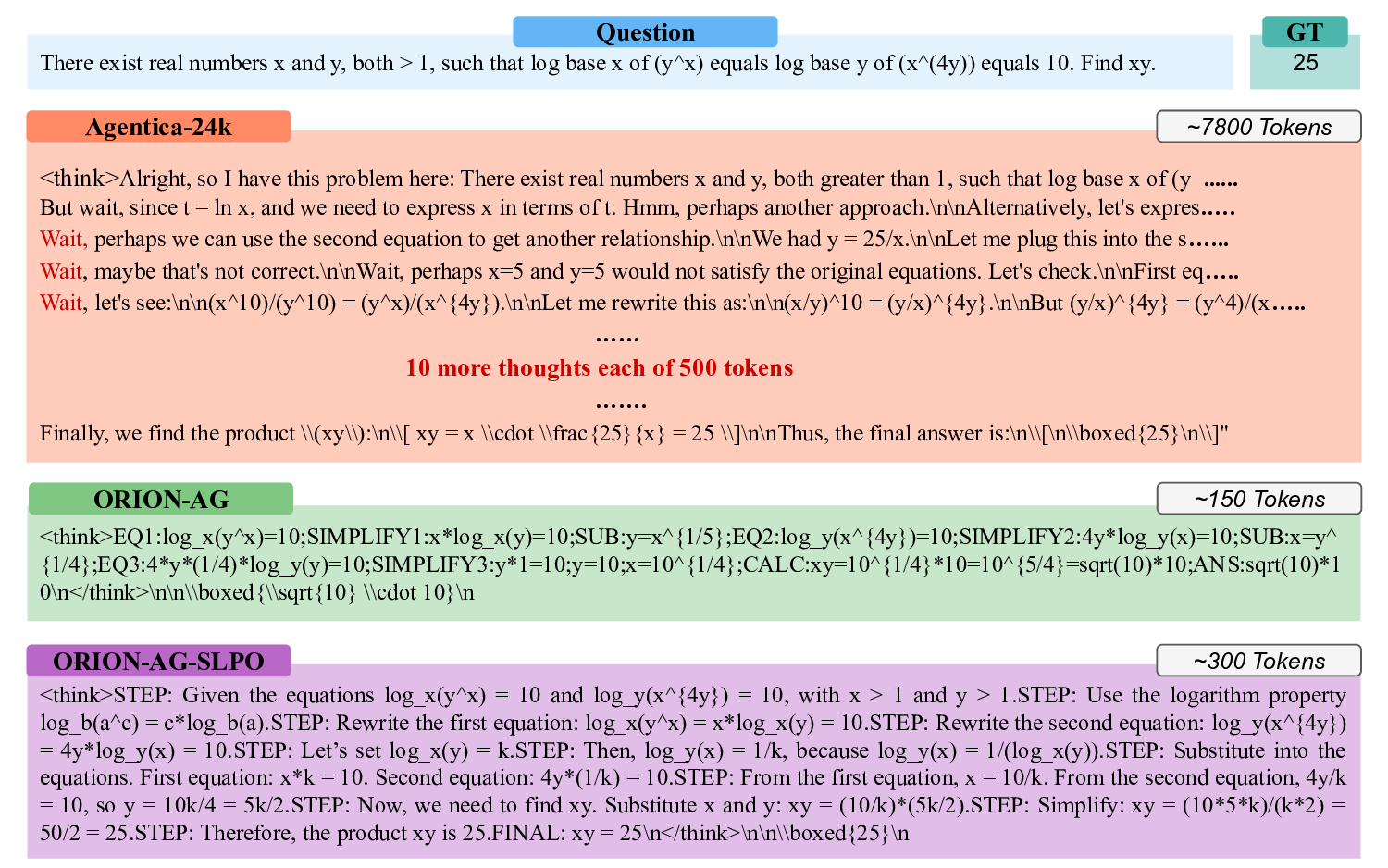

There exist real numbers x and y, both > 1, such that log base x of (y^x) equals log base y of (x^(4y)) equals 10. Find xy.

But wait, since t = ln x, and we need to express x in terms of t. Hmm, perhaps another approach.\n\nAlternatively, let’s expres.…. Wait, perhaps we can use the second equation to get another relationship.\n\nWe had y = 25/x.\n\nLet me plug this into the s…… Wait, maybe that’s not correct.\n\nWait, perhaps x=5 and y=5 would not satisfy the original equations. Let’s check.\n\nFirst eq….. Wait, let’s see:\n\n Beyond these 1.5B-scale models, we also report results from frontier-scale systems for context. Specifically, we include GPT-4o (OpenAI et al., 2024a), Claude 3.5 Sonnet (Anthropic, 2024), and LLaMA-3 70B-Instruct (Grattafiori et al., 2024) as strong reference points, situating our results relative to state-of-the-art closed-source and large-scale open-source LLMs.

Evaluation and Metrics. We evaluate our models on five in-domain mathematical reasoning datasets: AIME 2024 (Mathematical Association of America, 2024), AIME 2025 (Mathematical Association of America, 2025), MATH-500 (Hendrycks et al., 2021b), AMC (American Mathematics Competitions , 2023), Minerva-Math (Lewkowycz et al., 2022), and OlympiadBench (He et al., 2024). Additionally, we test on three out-of-domain benchmarks: GPQA (Rein et al., 2023), LSAT (Zhong et al., 2023), and MMLU (Hendrycks et al., 2021a), in order to assess generalization beyond mathematical reasoning. We report results using three primary metrics. Pass@1 measures the fraction of problems correctly solved under single-sample decoding, i.e., the proportion of test questions for which the model produces a correct solution on its first attempt. Token Length denotes the average number of tokens generated per response on a given benchmark, computed by averaging output lengths across all test questions. Compression Rate (CR) quantifies the degree of response shortening relative to DeepSeek-R1-1.5B, with higher values indicating greater compression (e.g., a CR of 10 means the model’s responses are ten times shorter on average). Full formal definitions are provided in Appendix A.1. Implementation Details. For supervised fine-tuning on the MENTALESER-40K dataset, we used LLAMA-FACTORY (Zheng et al., 2024), an open-source library for instruction tuning and posttraining. For reinforcement learning, we adopted VERL (Sheng et al., 2025), an open-source RL training library. We fine-tuned our 1.5B base models with a batch size of N = 128 and a rollout group size of n = 16. Training was conducted for 1500 steps with a fixed learning rate of 1 × 10 -6 . For reinforcement learning experiments, we used 32 H100 GPUs, while supervised finetuning was performed on 8 H100 GPUs. Inference was accelerated using the VLLM (Kwon et al., 2023) engine, which enables efficient large-scale generation. For length constraints, we set different maximum generation lengths depending on the training setup: 8K tokens for direct SLPO on base models, 2K tokens for SLPO on MENTALESER-40K fine-tuned models, and 1K tokens for GRPO relative to the base model. This decline stems from the fact that SFT encourages the model to restructure reasoning into a symbolic MENTALESE format, typically resulting in a single linear reasoning path. In contrast, DeepSeek R1-style reasoning traces often include “forking tokens” such as wait, but, or so, which allow the model to self-verify and revise its reasoning mid-generation-boosting accuracy through exploratory pathways. The strict structure imposed by SFT sacrifices these benefits, limiting the model’s flexibility and test-time scaling. However, applying RLVR largely reverses this effect: models regain most of the lost accuracy while maintaining significantly shorter reasoning traces-typically just a few hundred tokens longer than their SFT outputs. This highlights the complementary roles of the two stages: SFT enforces symbolic conciseness, while RLVR restores accuracy by reintroducing adaptive reasoning behaviors within that compact framework.

Training Time Efficiency. Large-scale reinforcement learning typically demands substantially more computational resources than supervised fine-tuning. In our experiments, we found that applying RLVR directly on the base models required 5-6 days of training on our dataset of 40k samples for 1500 RL steps. This inefficiency arises primarily from the generation of long reasoning chains (often exceeding 8k tokens), which introduces high latency in the VLLM inference engine and becomes the main bottleneck of RLVR training. The cost further increases with larger rollout group sizes. As shown in Figure 5, introducing an intermediate supervised fine-tuning stage on the MEN-TALESER dataset significantly reduced training cost by 7-10×, while achieving performance close to the base model but with 10× shorter reasoning traces. This demonstrates that aligning models to a more compact reasoning language before RL training not only improves efficiency but also provides a scalable mechanism for reinforcement learning in reasoning tasks.

Training Collapse Under Direct SLPO. As shown in Figure 5, applying SLPO directly to AGENTICA-24K resulted in a sudden collapse after approximately 300 training steps. Initially, the response length decreased by nearly half while accuracy improved marginally. However, beyond this point, the average response length rapidly expanded to the maximum generation limit (8k tokens), and the gradient norm curve exhibited NaN values. This instability ultimately caused the training to collapse, highlighting the difficulty of applying SLPO on raw base models without intermediate alignment. In contrast, introducing an intermediate SFT stage on the MENTALESER dataset maintained stability throughout the entire training process, underscoring the reliability of our proposed two-stage approach.

Reversion to Verbose Reasoning Under Large Generation Budgets During RLVR. We observed that when the maximum generation length was set to 4k or 8k tokens, the model tended to drift away from the compact reasoning style learned during the SFT stage and revert to its original base behavior of producing verbose chains. In some cases, this even led to model collapse (Figure 5). A likely explanation is that longer reasoning traces, although verbose, occasionally produced correct answers and were therefore rewarded, inadvertently steering the model away from the MENTALESE format.

To mitigate this effect, we restricted the maximum generation length to 1k tokens for GRPO-based training and 2k tokens for SLPO-based training. These limits preserved the symbolic reasoning behavior acquired during SFT while still allowing sufficient space for problem-solving.

We introduced a cognitively inspired framework for efficient reasoning that combines Mentalese, a compact symbolic reasoning format, with Shorter Length Preference Optimization (SLPO), a reinforcement learning strategy that adaptively balances conciseness and correctness. Our model achieves over 10× compression in reasoning traces while maintaining accuracy close to that of verbose large reasoning models, reducing both training and inference costs significantly. By aligning models toward concise and structured reasoning, we provide a pathway for deploying large reasoning capabilities within real-time and resource-constrained environments. Our results suggest that reasoning does not inherently require verbosity, and that carefully designed representations and optimization objectives can yield models that reason more like humans-symbolically, compositionally, and efficiently. This is particularly valuable for agentic systems, where efficient and reliable decision-making is critical, and inference overhead can quickly become a bottleneck.

SLPO collapses due to gradient instability, while ORION models stay stable; (2) Clip Ratio indicates more controlled updates in Mentalese methods, driven by reduced response truncation.; (3) Entropy Loss reflects better exploration-exploitation balance; (4) Training Time per RL Step shows higher computational efficiency; (5) Test Performance on AIME 2024 (∼22% Pass@1) confirms ORION models outperform direct SLPO on the base model. Shaded regions denote min-max ranges across runs. These results highlight the importance of structured intermediate representations (Mentalese) for stable, efficient RL in large language models.

pp = percentage points, denoting absolute differences between percentages (e.g., 22% vs. 16% = 6 pp).

📸 Image Gallery