PyBangla at BLP-2025 Task 2: Enhancing Bangla-to-Python Code Generation with Iterative Self-Correction and Multilingual Agents

📝 Original Paper Info

- Title: PyBangla at BLP-2025 Task 2: Enhancing Bangla-to-Python Code Generation with Iterative Self-Correction and Multilingual Agents

- ArXiv ID: 2512.23713

- Date: 2025-11-27

- Authors: Jahidul Islam, Md Ataullha, Saiful Azad

📝 Abstract

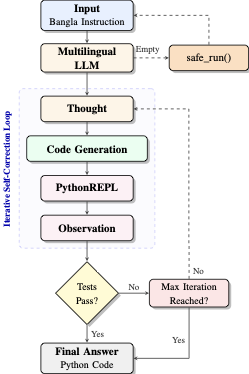

LLMs excel at code generation from English prompts, but this progress has not extended to low-resource languages. We address Bangla-to-Python code generation by introducing BanglaCodeAct, an agent-based framework that leverages multi-agent prompting and iterative self-correction. Unlike prior approaches relying on task-specific fine-tuning, BanglaCodeAct employs an open-source multilingual LLM within a Thought-Code-Observation loop, enabling dynamic generation, testing, and refinement of code from Bangla instructions. We benchmark several small-parameter open-source LLMs and evaluate their effectiveness on the mHumanEval dataset for Bangla NL2Code. Our results show that Qwen3-8B, when deployed with BanglaCodeAct, achieves the best performance, with pass@1 accuracy of 94.0% on the development set and 71.6% on the blind test set. These results establish a new benchmark for Bangla-to-Python translation and highlight the potential of agent-based reasoning for reliable code generation in low-resource languages. Experimental scripts are publicly available at github.com/jahidulzaid/PyBanglaCodeActAgent.

💡 Summary & Analysis
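The Thought-Code-Observation loop mentioned in the abstract can be illustrated with a minimal Python sketch. This is not the authors' implementation: the function names (`run_with_tests`, `thought_code_observation`, `fake_llm`), the `(thought, code)` return shape, and the retry budget are all assumptions made for illustration, and `fake_llm` is a hard-coded stand-in for a real multilingual LLM call.

```python
import traceback
from typing import Callable, List, Tuple

# Hypothetical generator signature: (instruction, history) -> (thought, code).
Generator = Callable[[str, List[str]], Tuple[str, str]]


def run_with_tests(code: str, tests: List[str]) -> Tuple[bool, str]:
    """Run candidate code plus assert-style tests; return (passed, observation)."""
    namespace: dict = {}
    try:
        # NOTE: exec on model output is unsafe outside a sandbox; illustration only.
        exec(code, namespace)
        for test in tests:
            exec(test, namespace)
    except Exception:
        # Use the final traceback line (e.g. "AssertionError") as the observation.
        return False, traceback.format_exc().strip().splitlines()[-1]
    return True, "all tests passed"


def thought_code_observation(
    instruction: str,
    tests: List[str],
    generate: Generator,
    max_iters: int = 3,  # assumed retry budget, not taken from the paper
) -> Tuple[str, bool, int]:
    """Generate, test, and refine code until the tests pass or the budget runs out."""
    history: List[str] = []
    code, passed, step = "", False, 0
    for step in range(1, max_iters + 1):
        thought, code = generate(instruction, history)      # Thought + Code
        passed, observation = run_with_tests(code, tests)   # Observation
        history.append(
            f"Thought: {thought}\nCode:\n{code}\nObservation: {observation}"
        )
        if passed:
            break
    return code, passed, step


def fake_llm(instruction: str, history: List[str]) -> Tuple[str, str]:
    """Stub model: wrong on the first try, corrected once it sees feedback."""
    if not history:
        return "first attempt", "def add(a, b):\n    return a - b"
    return "fix the operator", "def add(a, b):\n    return a + b"


if __name__ == "__main__":
    # Bangla instruction: "Return the sum of two numbers."
    result = thought_code_observation(
        "দুটি সংখ্যার যোগফল ফেরত দাও", ["assert add(2, 3) == 5"], fake_llm
    )
    print(result[1], result[2])
```

In this sketch the observation fed back to the model is only the last traceback line; a real agent would likely pass richer feedback and run the code in an isolated process.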

This document focuses on three main components: "bang", "bangsl10", and "bangwd10". Each is represented at various scales from 500 to 3000, which likely signifies performance or reaction under specific conditions. The differences between "bang", "bangsl10", and "bangwd10" are not clearly explained, making it hard to determine their exact distinctions. To understand how these elements are used or applied in a particular system or process requires additional context.

📄 Full Paper Content (ArXiv Source)

📊 Paper Figures