Co-Evolving Agents: Learning from Failures as Hard Negatives

📝 Original Info

- Title: Co-Evolving Agents: Learning from Failures as Hard Negatives

- ArXiv ID: 2511.22254

- Date: 2025-11-27

- Authors: Yeonsung Jung, Trilok Padhi, Sina Shaham, Dipika Khullar, Joonhyun Jeong, Ninareh Mehrabi, Eunho Yang

📝 Abstract

Recent advances in large foundation models have enabled task-specialized agents, yet their performance remains bounded by the scarcity and cost of high-quality training data. Self-improving agents mitigate this bottleneck by using their own trajectories in preference optimization, which pairs these trajectories with limited ground-truth supervision. However, their heavy reliance on self-predicted trajectories prevents the agent from learning a coherent preference landscape, causing it to overfit to the scarce supervision and resulting in limited improvements. To address this, we propose a co-evolving agents framework in which an auxiliary failure agent generates informative hard negatives and co-evolves with the target agent by learning from each other's failure trajectories. The failure agent learns through preference optimization using only failure trajectories from both agents, thereby generating hard negatives that are close to success yet remain failures. Incorporating these informative hard negatives into the target agent's preference optimization refines the preference landscape and improves generalization. Our comprehensive experiments on complex multi-turn benchmarks such as web shopping, scientific reasoning, and SQL tasks show that our framework generates higher-quality hard negatives, leading to consistent improvements over baselines. These results demonstrate that failures, rather than being used as-is, can be systematically transformed into structured and valuable learning signals in self-improving agents.📄 Full Content

2025; Gemini Team, 2025) has facilitated the rise of taskspecialized agents across diverse domains, from opendomain dialogue to scientific reasoning (SU et al., 2025;Zeng et al., 2024;Fu et al., 2025;Bousmalis et al., 2024). These agents inherit the broad generalization capacity of pretrained models, enabling effective adaptation to new tasks with relatively limited supervision. This capability has motivated growing interest in adapting foundation models into reliable and effective domain-specialized agents. Recent advances in multi-agent systems and preference optimization further highlight the potential of combining broad pretraining with specialized adaptation.

Nevertheless, the effectiveness of such agents remains constrained by the quality of task-specific training data (Zhou et al., 2024;Zhao et al., 2024). High-quality datasets are essential for reliable reasoning and decision making, providing the signals required for adaptation to specialized domains. Yet, constructing such datasets is expensive and labor-intensive, often requiring domain expertise and extensive annotation. In many real-world scenarios, building large curated datasets is infeasible, and the need to repeatedly curate data to keep pace with non-stationary environments makes this approach impractical. This bottleneck has motivated growing interest in methods that enable agents to improve autonomously without relying on continuous manual dataset curation (Yuan et al., 2025b;Nguyen et al., 2025;Yin et al., 2025). A promising direction is to automatically curate training signals from agent interactions, allowing learning to scale beyond static, human-labeled corpora. The central challenge is to transform abundant but noisy interaction data into structured supervision that drives reliable improvement.

Self-improving agents (SU et al., 2025;Zeng et al., 2024;Fu et al., 2025) have emerged as a promising paradigm to reduce reliance on costly human annotation. Maintaining agents at state-of-the-art performance would require continuous human annotation, which is prohibitively costly and infeasible at scale. Instead, self-improving agents automate parts of the data construction process by synthesizing expertlike trajectories from external resources such as documentation or databases, and by repurposing predicted failures as preference data for training. However, in challenging downstream tasks where pretrained LLMs perform poorly, gener-ating high-quality trajectories themselves remains a major bottleneck, making fully autonomous self-improvement difficult in practice.

In this context, Exploration-Based Trajectory Optimization (ETO) (Song et al., 2024) constructs preference datasets by pairing the agent’s own predicted failure trajectories with ground-truth ones using a given reward model, enabling preference optimization (Rafailov et al., 2023). Despite the promise of autonomous improvement, this approach remains limited, as it relies on the model’s raw failure trajectories. These failures are substantially less informative than human-curated negatives, especially in tasks where pretrained LLMs lack prior knowledge. As a result, the dispreferred trajectories offer only weak contrast, making DPO focus on simply increasing the likelihood of expert trajectories rather than shaping a fine-grained preference landscape. This weak supervision causes the model to gravitate toward the small set of expert trajectories, ultimately leading to overfitting rather than robust task understanding.

To address this, we propose a co-evolving agents framework in which an auxiliary failure agent generates informative hard negatives and co-evolves with the target agent by learning from each other’s failure trajectories. The failure agent learns through preference optimization using only failure trajectories from both agents, thereby generating hard negatives that are close to success yet remain failures. Incorporating these informative hard negatives into the target agent’s preference optimization refines the preference landscape and improves generalization. Crucially, this design enables the agent to autonomously generate informative hard negatives (Robinson et al., 2021;Rafailov et al., 2023;Chen et al., 2020) that are high-reward failures close to success, without any external supervision. Incorporating these hard negatives into the target agent’s preference optimization provides stronger and more diverse contrastive signals, refining the preference landscape and improving generalization.

We validate our framework through comprehensive analysis and experiments across diverse domains. These evaluations are conducted on complex multi-turn benchmarks, including the online shopping environment WebShop (Yao et al., 2022), the science reasoning environment Science-World (Wang et al., 2022), and the interactive SQL environment InterCodeSQL (Yang et al., 2023). Our quantitative and qualitative analysis of failure trajectories on these benchmarks shows that the proposed failure agent does not merely imitate expert trajectories but consistently generates highreward failures that serve as informative hard negatives. Experiments across a diverse models further demonstrate substantial improvements over competitive baselines, achieving large gains on all benchmarks and reflecting stronger generalization to diverse tasks. These findings highlight that systematically harnessing failures as structured learning signals, rather than treating them as byproducts, opens a promising direction for advancing self-improving agents.

Our contributions are summarized as follows:

• We introduce a failure agent that continuously learns from failure trajectories, modeling a fine-grained failure landscape and autonomously generating high-reward failures as informative hard negatives.

• We propose a co-evolving agents framework where the target and failure agents improve jointly, with the failure agent generating hard negatives that strengthen preference optimization and improve generalization.

• Through quantitative and qualitative experiments on complex multi-turn benchmarks, we show that systematically harnessed failures become structured learning signals that yield consistent gains.

Self-Improving Agents Building high-performing agents requires high-quality datasets, which are costly and often infeasible in real-world scenarios. Self-improving agents address this by autonomously generating, refining, and reusing data for continual learning. Some approaches synthesize trajectories from tutorials, documentation, or persona hubs (SU et al., 2025;Zeng et al., 2024;Fu et al., 2025), while others use planning methods such as Monte Carlo Tree Search (MCTS) (Yuan et al., 2025b). Beyond dialogue, self-improvement has been explored in programmatic action composition (Nguyen et al., 2025), robotics (Bousmalis et al., 2024), and code generation (Yin et al., 2025). Another line leverages failure trajectories paired with expert ones for preference optimization (Song et al., 2024;Xiong et al., 2024), but these typically use failures as-is, limiting generalization. Multi-agent variants (Zhang et al., 2024) employ negative agents trained on curated failure datasets, yet these are frozen and restricted to dialogue tasks, offering only limited benefit compared to success-based supervision.

Hard Negatives in Contrastive Optimization Reinforcement Learning from Human Feedback (RLHF) (Lee et al., 2024) has been the standard paradigm for aligning language models, but it requires costly reward modeling and policy optimization. Recent contrastive methods such as Direct Preference Optimization (DPO) (Rafailov et al., 2023) and Generalized Preference Optimization (GRPO) (Tang et al., 2024) simplify this process by directly optimizing policies on preference pairs, bypassing explicit reward models. At the core of contrastive optimization is the idea that learning benefits most from informative comparisons. In particular, hard negatives that are difficult to distinguish from the preferred ones and thus yield small preference margins, are known to provide stronger supervision and promote sharper decision boundaries (Robinson et al., 2021;Rafailov et al., 2023;Chen et al., 2020).

The interaction between an LLM agent and its environment can be formalized as a partially observable Markov decision process (U, S, A, O, T, R), as in Song et al. (2024). Here, U denotes the instruction space, S the state space, A the action space, O the observation space, T : S × A → S the transition function, and R : S × A → [0, 1] the reward function. In our LLM-agents setting, U, A, and O are expressed in natural language.

At the beginning of each episode, the agent receives an instruction u ∈ U and generates its first action a 1 ∼ π θ (• | u) ∈ A from its policy π θ parameterized by θ. The action updates the latent state s t ∈ S and produces an observation o t ∈ O. Subsequent actions are conditioned on the full interaction history, so that

This process unfolds until either the task is solved or the step budget is exceeded. A trajectory can therefore be written as

with likelihood

where n is the trajectory length.

Finally, a reward r(u, e) ∈ [0, 1] is assigned to the trajectory, where r(u, e) = 1 corresponds to full task success and lower values indicate partial or failed attempts. This formulation sets up the basis for preference-based training methods that compare trajectories according to their rewards.

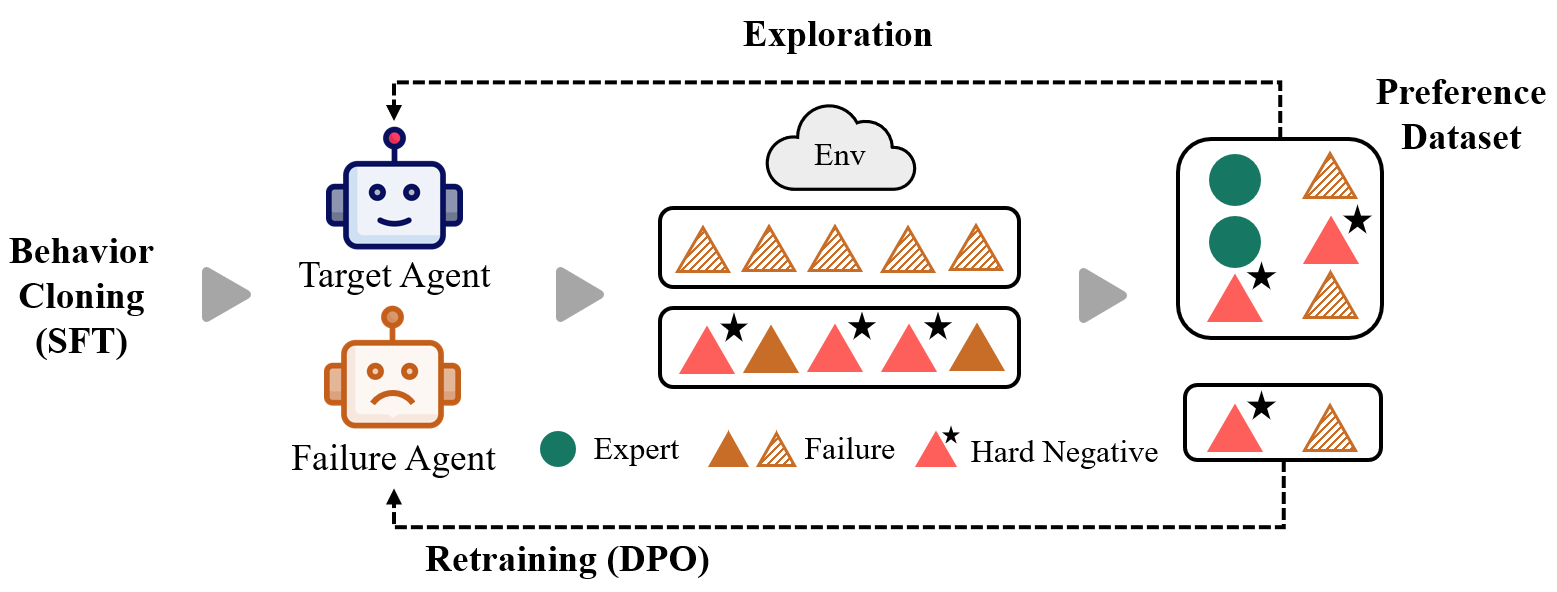

In this section, we present our co-evolving agents framework, where a target agent and a failure agent improve jointly through alternating training phases. Section 4.1 outlines the behavioral cloning stage for initializing the target policy, and Section 4.2 introduces the failure agent, which learns from failure trajectories and generates fine-grained hard negatives. Section 4.3 then describes how the target agent learns from the hard negatives generated by the failure agent through DPO (Rafailov et al., 2023), completing the co-evolutionary loop. The overall pipeline is illustrated in Figure 1.

We first initialize a base policy through behavioral cloning, which equips the agent with fundamental task-solving ability before self-improvement. Given an expert dataset

, each trajectory e = (u, a 1 , o 1 , . . . , a n ) consists of a task instruction u, actions a t ∈ A, and observations o t ∈ O. The agent policy π θ is trained with an autoregressive supervised fine-tuning (SFT) objective:

where the trajectory likelihood decomposes as

In practice, the instruction, actions, and observations are concatenated into a single text sequence t = (t 1 , t 2 , . . . , t l ).

The loss is then computed by applying the autoregressive likelihood only to tokens corresponding to agent actions:

where 1(t k ∈ A) is an indicator that selects tokens generated as agent actions.

This supervised fine-tuning stage provides the base policy π base , which serves as the starting point for both the target and failure agents. Using the same base policy for both agents ensures a comparable starting point while allowing only minor stochastic differences during training.

We introduce an auxiliary failure agent π θ f that specializes in learning from failure trajectories and transforming them into fine-grained, informative hard negatives. Unlike the target agent π θt , which is optimized toward expert success, the failure agent focuses solely on modeling the failure landscape. This complementary specialization enables the two agents to co-evolve through alternating training phases.

Preference Dataset. The preference dataset for the failure agent consists of failure trajectories generated by both the target and itself. Formally, let e tgt and e fail denote trajectories generated by the target agent and the failure agent, respectively. We define the corresponding failure sets as F tgt = {e tgt | r(u, e tgt ) < 1} and F fail = {e fail | r(u, e fail ) < 1} denote the sets of failure trajectories generated by the target and failure agents, respectively. We construct a preference dataset by pairing failures with different reward levels:

Here, e + denotes the preferred trajectory and e -the dispreferred one, with preference determined by their reward values. In this setting, both e + and e -are failure trajectories, where the higher-reward failure is assigned to e + and the lower-reward one to e -. This pairing allows the failure agent to leverage failures from both agents, yielding a richer set of comparisons. In addition, the target agent’s failures further provide weak supervision that guides the failure agent toward competent behaviors and prevents collapse into trivial failures.

Preference Optimization. We adopt the direct preference optimization (DPO) objective (Rafailov et al., 2023) for training on failure trajectories. Given a reference policy π ref , the failure agent π θ f is updated by

.

where u is the task instruction, σ(•) is the logistic sigmoid, and β is a scaling factor. This objective encourages the failure agent to learn fine-grained distinctions within the failure landscape, enabling it to identify both diverse and high-reward failures as hard negatives.

Hard Negatives. Preference optimization depends on strong contrast between preferred and dispreferred trajectories, yet weak or distant negatives often yield trivial comparisons that cause the target agent to collapse into overly simple modes with poor generalization. Our failure agent overcomes this limitation by learning fine-grained distinctions across diverse failure trajectories and generating nearsuccess failures that remain informative despite not solving the task. These hard negatives provide contrastive signals that simple expert-versus-failure pairs cannot offer, and incorporating them into the target agent’s DPO training sharpens the preference landscape and leads to substantially stronger generalization. To better understand the role of the failure agent, we further conduct both quantitative and qualitative analyses of the generated failure trajectories (Section 5.2).

In our framework, the target agent and the failure agent improve jointly through alternating optimization. The failure agent provides increasingly informative hard negatives, while the target agent leverages them to refine its preference landscape. This co-evolving process creates a feedback loop that strengthens both agents and drives improved generalization.

Preference Dataset. The target agent is updated using a preference dataset that combines expert demonstrations with failures from both agents. Specifically, we construct D tgt using (i) expert-target comparisons, (ii) expert-failureagent comparisons, and (iii) failure-failure comparisons between the two agents. Formally,

where e exp denote expert trajectories. This preference dataset provides more informative comparisons through interactions with hard negatives, enabling the target agent to learn a more coherent preference landscape.

Preference Optimization. The target agent is optimized with a weighted DPO objective (Rafailov et al., 2023) together with an auxiliary supervised fine-tuning (SFT) loss on the chosen trajectories:

As noted by Yuan et al. (2025a), DPO alone maximizes relative preference margins but can become unstable, since the space of chosen trajectories is much smaller than that of rejected ones. This imbalance may lead the model to over-penalize rejected samples while insufficiently reinforcing preferred ones. We therefore include an auxiliary SFT term to anchor the policy toward high-reward behaviors and stabilize optimization. Throughout all experiments, we use fixed weights of λ DPO = 1.0 and λ SFT = 0.1.

As training alternates between the target agent and the failure agent, the two models form an implicit arms race: the failure agent continually produces harder and more informative negatives, while the target agent learns to overcome them. This mutual pressure encourages both agents to explore more fine-grained distinctions within the task space. Through this co-evolving process, the target agent acquires a more coherent preference landscape and develops improved generalization.

Datasets We conduct experiments on three representative benchmarks: WebShop for web navigation, ScienceWorld for scientific reasoning, and InterCodeSQL for interactive SQL querying. All three environments provide continuous final rewards in [0, 1], enabling fine-grained evaluation of task completion. Expert trajectories are collected through a combination of human annotations and GPT-4-assisted generation in the ReAct format (Yao et al., 2023), with additional filtering based on final rewards to ensure quality. We present the overview statistics in Table 1.Also, Example trajectory samples for each dataset and further details of the environments and trajectory collection process are provided in Appendix A.

Implementation Details We adopt Llama-2-7B-Chat (Touvron et al., 2023), Llama-2-13B-Chat (Touvron et al., 2023), and Qwen3-4B-Instruct-2507 (Yang et al., 2025). All models are optimized with AdamW (Loshchilov & Hutter, 2017) 8 NVIDIA H100 GPUs with 80GB memory, and further implementation details are provided in Appendix B.

Baselines We compare our framework with standard imitation learning and several strong post-imitation baselines following Song et al. (2024). Supervised fine-tuning (SFT) (Chen et al., 2023;Zeng et al., 2024) trains agents via behavioral cloning on expert trajectories and serves as the base policy for other methods. Rejection Fine-Tuning (RFT) (Yuan et al., 2023) augments the expert dataset with success trajectories identified by rejection sampling, and we further strengthen this baseline using DART-style difficultyaware multi-sampling (Tong et al., 2024), which allocates additional rollouts to instructions estimated as more difficult. Proximal Policy Optimization (PPO) (Schulman et al., 2017) directly optimizes the SFT policy with reinforcement learning to maximize task rewards. We additionally include ETO (Song et al., 2024), which applies DPO over expertversus-predicted pairs without the co-evolution mechanism, thus serving as a DPO-only baseline for isolating the contribution of failure-agent learning. For reference, we also report results from GPT-3.5-Turbo (Ouyang et al., 2022) and GPT-4 (Achiam et al., 2023) with in-context learning. We report average reward as the primary evaluation metric.

To better understand the role of the failure agent, we conduct a quantitative and qualitative analysis of the generated failure trajectories.

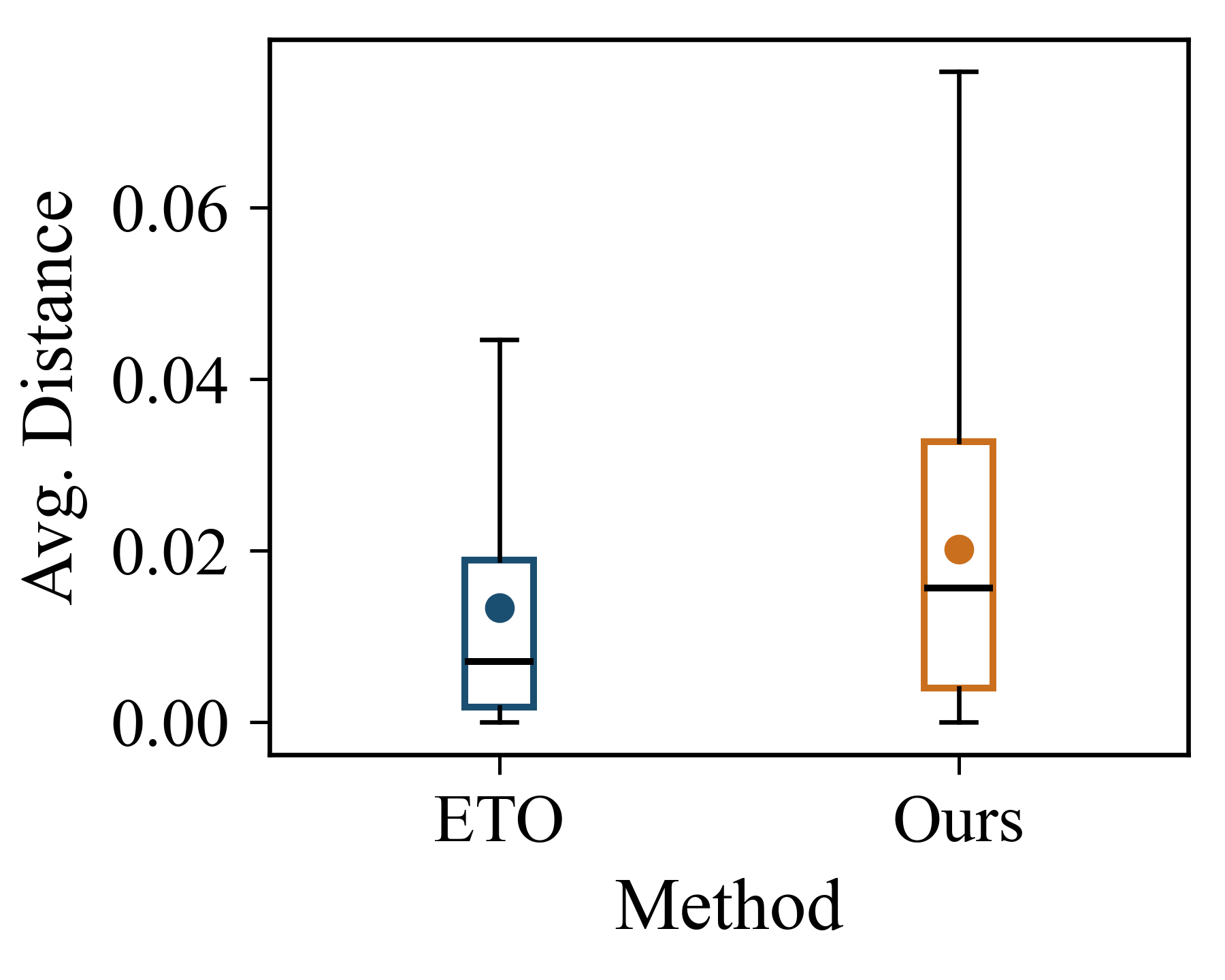

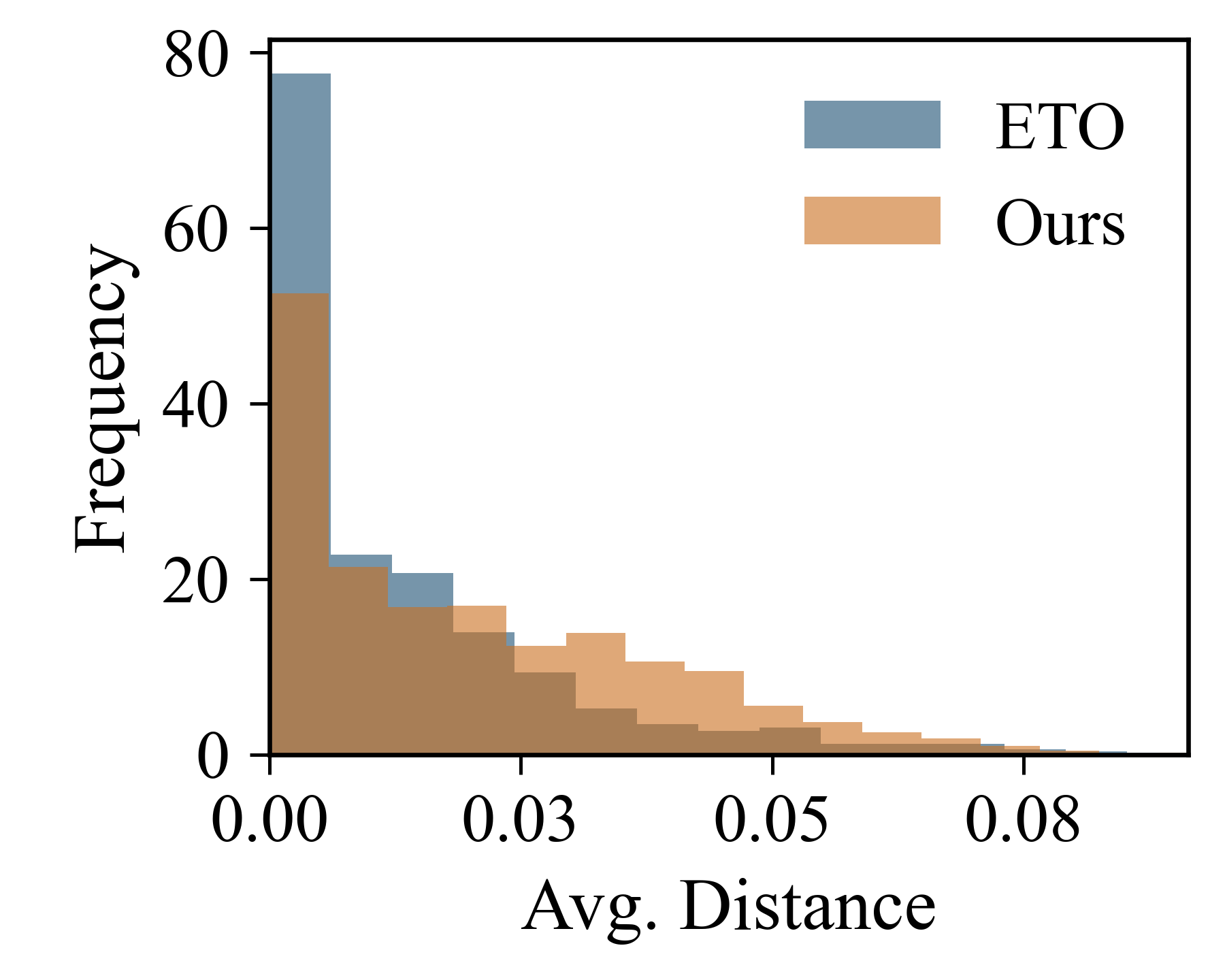

We analyze the failure trajectories generated by ETO and our method along three dimensions: (i) the distribution of successful, negative, and hard-negative trajectories produced during exploration and (ii) the diversity of generated failure trajectories. As in the baselines, we perform a single rollout per instruction due to the high computational cost of long, multi-turn trajectories.

Distribution of generated trajectories. We separate negative and hard-negative trajectories using a reward threshold of 0.6. Higher thresholds (0.7-0.8) would be ideal but occur in fewer than 1% of cases in current self-improving agents.

Table 2 summarizes the resulting statistics. Across all benchmarks, our failure agent Fail ours produces substantially more negative and hard-negative trajectories than ETO: negative trajectories increase by 9.5% (WebShop), 16.7% (Science-World), and 9.0% (InterCodeSQL), while hard negatives increase by 2.3%, 8.7%, and 4.3%, respectively. These consistent gains show that Fail ours effectively expands the informative failure space. The diversity of generated failure trajectories We assess trajectory diversity by embedding each generated failure using LLM2Vec-Meta-Llama-3-8B-Instructmntp (BehnamGhader et al., 2024) and computing the average pairwise distance among trajectories generated per instruction. As shown in Figure 2, the failure agent produces substantially more trajectories with larger pairwise distances than ETO, with the boxplot exhibiting a higher mean and variance. This indicates that the failure agent explores a broader and more diverse failure space rather than collapsing to narrow modes.

We qualitatively examine the role of hard negatives through representative examples, focusing on (i) their quality and (ii) their effect on learning.

The quality of the hard negatives We analyze the Web-Shop task of purchasing a machine-washable curtain. As shown in the example, ETO produces a shallow failure that overlooks key constraints such as washability and price.

In contrast, our method generates a more structured nearmiss: the agent filters mismatching items, checks relevant attributes, verifies constraints, and ends with an almost correct decision. Although still a failure, the trajectory reflects a coherent decision process and exemplifies a high-quality hard negative. Additional examples are provided in Appendix D.

Machine-Washable Curtains (52"×90")

Instruction: I need a machine-washable curtain for the living room, sized 52" wide by 90" long, priced under $60.00.

The agent clicks an early search result, selects the 52"×90" option, and buys it without verifying washability, comparing alternatives, or checking that the final price meets the budget. Reward: 0.50 Steps: 4 Outcome: Failure

Ours: The agent navigates through multiple product pages, filtering by washability, size, and price. It identifies a curtain with a 52"×90" option, verifies that it is machine-washable and within budget, and chooses the matching size variant before purchasing. Reward: 0.75 Steps: 8 Outcome: Failure

The effect of hard negatives We next examine how hard negatives influence the target agent’s behavior. In the Sci-enceWorld task of growing a lemon, ETO produces shallow failures: after planting seeds, the agent repeatedly issues waiting or invalid actions and never attempts soil preparation, tool use, or environment control, offering little usable supervision. In contrast, our method generates hard negatives that attempt multiple sub-skills and execute most of the required pipeline including navigation, soil collection, soil preparation, planting, and greenhouse regulation. Although still unsuccessful, these trajectories provide coherent multi-step behavior and much richer preference signals. As a result, the target agent acquires the underlying sub-skills more effectively and succeeds more reliably in later iterations. A full example is provided in Appendix D.

Instruction: Grow a lemon by planting seeds, preparing soil, and enabling cross-pollination.

We evaluate our framework on three challenging multi-step decision-making benchmarks: WebShop for web navigation, ScienceWorld for scientific reasoning, and InterCodeSQL for interactive SQL querying. All benchmarks use a normalized reward in [0, 1], allowing fine-grained comparison of task performance.

Results on Llama-2-7B. 2024)).

Our method achieves an average reward of 64.1, outperforming ETO by +5.8%.

Gains are consistent across all benchmarks: WebShop (+3.7%), seen Science-World (+4.1%), unseen ScienceWorld (+6.5%), and Inter-CodeSQL (+4.4%). The largest improvement on unseen ScienceWorld suggests that diverse near-success hard negatives significantly enhance generalization to unfamiliar scientific environments.

Results on Llama-2-13B. Table 5 shows that our method also improves performance on the larger Llama-2-13B model. Across WebShop and the ScienceWorld splits, our approach consistently outperforms both SFT and ETO, demonstrating that the benefits of co-evolutionary training scale beyond the 7B setting.

WebShop ScienceWorld

Llama Fine-tuning with ETO substantially boosts performance, and our method provides further consistent gains across all tasks: approximately +6.8% on WebShop, +6.5% on seen Sci-enceWorld, +3.3% on unseen ScienceWorld, and +10.3% on InterCodeSQL. These improvements demonstrate that our failure-agent framework yields substantial and reliable gains across architectures, highlighting the effectiveness of leveraging diverse, near-success hard negatives.

Comparison with DART-style Multi-Sampling. We also strengthen the RFT baseline by incorporating DART-style multi-sampling, using an initial n = 5 rollouts to estimate instruction-level difficulty and allocating a total budget of N = 10 samples per instruction. Successful trajectories from this process are added to the RFT training set.

However, the effect of DART is modest in our setting: on WebShop, RFT improves from 60.89 to only 61.90 with DART. This limited impact stems from the nature of our benchmarks. Unlike domains such as mathematics-where pretrained LLMs already possess strong priors and multisampling frequently yields correct trajectories-our tasks require grounded, environment-specific reasoning in web navigation, scientific procedures, and interactive SQL. Consequently, successful rollouts are rare, reducing the usefulness of difficulty-based selection.

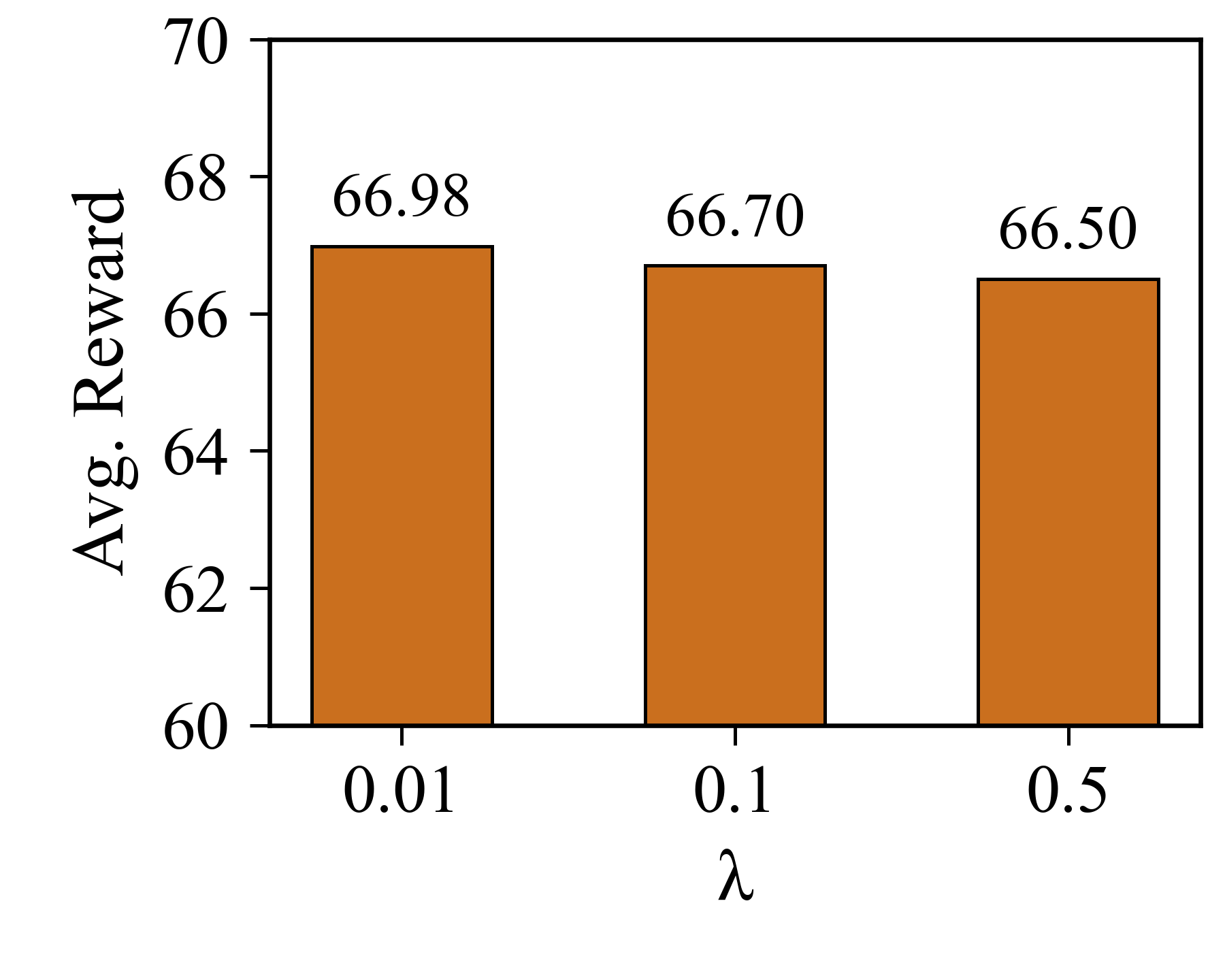

Ablation on Varying λ SFT . We examine the sensitivity of our method to the SFT weight λ SFT by evaluating three settings (0.01, 0.1, 0.5) on the WebShop benchmark using Llama-2-7B-chat. As shown in Table 6, the resulting average rewards (66.98, 66.70, 66.50) vary only slightly, indicating that the method is robust as long as the SFT term remains weaker than DPO.

λSFT=0.01 λSFT=0.1 λSFT=0.5

Avg. Reward 67.98 66.70 66.50 Table 6. Ablation on the SFT weight λSFT.

Parameter-Matched Ablation on the Failure Agent. To ensure that the performance gains do not arise from an increased parameter budget, we perform a parameter-matched ablation in which the failure agent is replaced with an auxiliary positive agent trained in the same manner as ETO using only expert and the agent’s own failures. This variant keeps the total number of trainable parameters identical to our coevolving framework. The positive-agent baseline achieves an average reward of 62.8 on WebShop, which is compa-rable to ETO and clearly below the 66.7 obtained with our method. These results indicate that explicitly modeling and refining failures provides substantial benefit beyond what can be achieved by simply adding another success-oriented agent.

We presented a co-evolving agents framework in which a target agent and a failure agent improve jointly through alternating preference-optimization phases. The failure agent, trained solely on failure-failure comparisons, learns a finegrained failure landscape and autonomously produces nearsuccess hard negatives. Incorporating these trajectories into the target agent’s preference optimization provides sharper and more informative contrasts than standard expert-failure supervision, leading to a more coherent preference landscape. Experiments on WebShop, ScienceWorld, and In-terCodeSQL demonstrate consistent improvements across domains and model architectures, showing that refining failures into structured supervision is an effective mechanism for strengthening generalization in self-improving agents. We hope these findings encourage more principled use of failure trajectories as a first-class training signal in the development of next-generation self-improving agents.

This work aims to advance the development of more robust self-improving agents by leveraging failure trajectories as learning signals. While strengthening autonomous decisionmaking systems can have broad downstream implications, including potential misuse or reinforcement of existing biases, our method does not introduce new data modalities or rely on sensitive information. As with any system that adapts autonomously, appropriate safeguards and careful evaluation are recommended in safety-critical applications. Beyond these general considerations, we do not identify additional societal impacts requiring specific attention.

WebShop. WebShop (Yao et al., 2022) is a goal-oriented web navigation environment where an agent interacts with an e-commerce platform to fulfill natural language shopping instructions. A final reward in [0, 1] is assigned based on how well the purchased item matches the instruction in terms of attributes and price. For training, we use the expert and GPT-4 ReAct-style trajectories provided by Song et al. (2024), retaining only those with final rewards above 0.7 to ensure quality.

Instruction: I need a long clip-in hair extension which is natural looking, and price lower than 40.00 dollars.

• Human: You are web shopping. I will give you instructions about what to do. Every round I will give you an observation and a list of available actions… • Human: Observation: … (product list with IDs and prices)

• GPT: Thought: The first option B09C337K8S fits the budget and is natural looking… Action: click[b09c337k8s]

• Human: Observation: … (product details shown)

• GPT: Thought: The details confirm it is natural looking and affordable. Action: click[buy now]

Reward: 1.0 ScienceWorld. ScienceWorld (Wang et al., 2022) is a text-based embodied environment for conducting elementary science experiments across ten domains, including thermodynamics and electricity. Each task consists of multiple optional subgoals, and the final reward in [0, 1] reflects the proportion of subgoals completed. Following Song et al. (2024), we exclude the two longest task types and subsample test instances with more than ten variations. We evaluate generalization using the original test set of unseen variations (e.g., training on boiling water and testing on boiling lead) and use the development set for seen scenarios. Expert trajectories are drawn from the official dataset, with additional GPT-4-generated trajectories filtered by final rewards, as in Song et al. (2024).

Instruction:

Your task is to determine if a metal pot is electrically conductive. The metal pot is located in the kitchen. If conductive, place it in the blue box; if nonconductive, place it in the orange box.

Conversation:

• Human: You are a helpful assistant to do some scientific experiment in an environment. (Lists available rooms and actions.)

• GPT: OK

• Human: Your task is to determine if metal pot is electrically conductive…

• GPT: Thought: I should locate the pot, probably in the kitchen. Action: look around

• Human: Observation: You are in the foundry…

• GPT: Thought: Wrong room, I need to go to the kitchen. Action: teleport to kitchen

• Human: Observation: You teleport to the kitchen.

• GPT: Thought: I should pick up the metal pot. Action: pick up metal pot

• Human: Observation: Pot moved to inventory.

• GPT: Thought: Now focus on the pot to test it. Action: focus on metal pot

• Human: Observation: You focus on the pot.

• GPT: Thought: I need to set up a circuit in the workshop. Action: teleport to workshop

• Human: Observation: You teleport to the workshop (battery, wires, bulbs available).

• GPT: Thought: Place the pot and build the circuit. Action: drop metal pot

• Human: Observation: Pot placed in workshop.

• GPT: Thought: Connect wires, battery, and pot in circuit. Action: connect battery anode to orange wire … (multiple connections omitted)

• Human: Observation: Circuit components connected.

• GPT: Thought: Wait and check if the bulb lights up. Action: wait1

• Human: Observation: Blue light bulb is on.

• GPT: Thought: The pot is conductive. Place it in the blue box. Action: move metal pot to blue box

InterCodeSQL. InterCodeSQL (Yang et al., 2023) is an interactive SQL querying environment where an agent must translate natural language questions into executable SQL programs through multi-turn interaction. The environment provides a binary reward, which we normalize to [0, 1], indicating whether the final query produces the correct result. We use the expert trajectories collected by Xiong et al. (2024) with GPT-4, retaining only those with reward 1.0. Since the original dataset does not contain intermediate reasoning, each trajectory is augmented with ReAct-style (Yao et al., 2023) thought annotations generated by GPT-4, as in Xiong et al. (2024).

Instruction: What are the enrollment and primary conference for the university which was founded the earliest?

Conversation:

• Human: You are a helpful assistant assigned with the task of problem-solving. To achieve this, you will interact with a MySQL Database system using SQL queries to answer a question. (Gives detailed formatting rules.)

• GPT: OK

• Human: What are the enrollment and primary conference for the university which was founded the earliest? • GPT: Thought: The earliest university has enrollment 19067 and primary conference Colonial Athletic Association (D-I). This is the final answer. Action: submit

We adopt Llama-2-7B-Chat (Touvron et al., 2023) as the primary base model for constructing LLM agents, following prior work (Song et al., 2024). All models are optimized with AdamW (Loshchilov & Hutter, 2017), and we use the same hyperparameter settings across datasets for fair comparison. During the supervised fine-tuning (SFT) stage, we train for 3 epochs with a batch size of 48 and a learning rate of 1e-5, using 3% linear warmup followed by a cosine decay schedule.

The base agent then explores each training instance once to collect failure trajectories. In the subsequent optimization phase, we train with a batch size of 32 and a learning rate of 1e-6 to 5e-7, with the DPO scaling factor β set to 0.1-0.5. The number of optimization epochs is fixed to 3, and the number of co-evolution iterations is set to 3 for WebShop and ScienceWorld and 5 for InterCodeSQL. All experiments are conducted on 8 NVIDIA H100 GPUs with 80GB.

To balance expert prediction supervision, we normalize the weights of all expert prediction pairs associated with a given instruction such that their total contribution sums to 1.0. This prevents these pairs, originating from both the target agent and the failure agent, from disproportionately dominating the training signal while still encouraging alignment with high reward behaviors.

For failure failure comparisons, we disable the SFT term by setting λ SFT = 0 and rely solely on DPO updates. This avoids injecting incorrect supervised signals from suboptimal trajectories and ensures that these pairs retain full influence in shaping the preference gradients. Such weighting preserves the value of fine grained failure distinctions, which are crucial for refining the agent’s preference landscape. Machine-Washable Curtains (52"×90")

Instruction: I need a machine-washable curtain for the living room, sized 52" wide by 90" long, priced under $60.00.

The agent clicks an early search result, selects the 52"×90" option, and buys it without verifying washability, comparing alternatives, or checking that the final price meets the budget. Reward: 0.50 Steps: 4 Outcome: Failure

Ours: The agent navigates through multiple product pages, filtering by washability, size, and price. It identifies a curtain with a 52"×90" option, verifies that it is machine-washable and within budget, and chooses the matching size variant before purchasing. Reward: 0.75 Steps: 8 Outcome: Failure

Hard Negative Justification: The trajectory conducts systematic elimination of mismatching candidates, checks all constraints, and produces an almost correct selection. Its structured decision process provides a prototypical hard-negative example.

Moving a Non-Living Object to the Green Box

Instruction: Find a non-living object, focus on it, and move it to the green box in the workshop.

The agent teleports to the workshop, selects the yellow wire as the non-living object, and moves it into the green box. However, it fails to perform the required focus step and drifts into repeated wait1 and look around actions, stalling without further task-aligned behavior. Hard Negative Justification: The trajectory follows the full instruction, including focusing on the target bulb and assembling a near-correct renewable circuit, and fails only due to subtle environment-level completion criteria.

Instruction: Your task is to grow a banana. This requires obtaining banana seeds, planting them in soil, providing water and light, and waiting until the banana grows.

The agent collects several relevant objects such as seeds and containers but struggles with interaction ordering and location choice. It issues redundant navigation and inspection commands and fails to complete a coherent cycle of planting, watering, and waiting in a suitable environment, leaving the plant underdeveloped. Reward: 0.36 Steps: 55 Outcome: Failure Ours: The agent explicitly gathers banana seeds, moves them to appropriate soil or planter objects, and performs a structured sequence of planting, watering, and exposing the plant to light. It repeatedly checks the growth state and adjusts its actions, closely following the intended multi-step procedure even though the environment does not register task completion. Reward: 0.50 Steps: 60 Outcome: Failure

Hard Negative Justification: The trajectory executes all key sub-tasks of seed collection, planting, watering, and monitoring, making it a faithful but slightly incomplete realization of the target behavior.

Instruction: List the titles and directors of films that were never presented in China.

The agent inspects several tables but repeatedly issues queries referencing non-existent columns (e.g., Market, country), incorrect table names (e.g., film market estimation), and invalid join paths. It ultimately fails to form any executable SQL command.

to internalize essential subskills that are otherwise absent in standard failure trajectories.

Instruction: Your task is to grow a lemon. This will require growing several plants and having them cross-pollinated to produce fruit. Seeds can be found in the bedroom. To complete the task, focus on the grown lemon.

The agent retrieves the seed jar from the bedroom, teleports to the greenhouse, and plants lemon seeds directly into the three water-filled flower pots. It then alternates between wait and look around for many steps, repeatedly issuing invalid actions such as focus on lemon and pick lemon even though no lemon ever appears in the observations. The agent never prepares soil, never manipulates the environment for pollination, and ends in a long, unproductive loop. Reward: 0.25 Steps: 60 Outcome: Failure ETO -Trained Failure: The agent again retrieves the seed jar and plants lemon seeds into the three pots containing only water, then repeatedly waits and checks the greenhouse. It issues multiple invalid focus actions on the lemon tree, but the environment state never progresses beyond “lemon seed in water,” indicating that the preconditions for growth and cross-pollination are not satisfied. No soil preparation or environmental control is attempted, so the episode remains a shallow failure without key subskills. Reward: 0.25 Steps: 49 Outcome: Failure

Ours -Prediction: The agent again retrieves the seed jar from the bedroom, collects soil outside using the shovel, and fills all three greenhouse pots with soil before planting the lemon seeds. It waits for the trees to reach the reproducing stage with flowers, then observes the appearance of lemons on one tree. To encourage stable pollination, it explicitly closes both the outside and hallway doors, creating a controlled greenhouse environment, and continues waiting until a lemon is present. Finally, it focuses on the grown lemon, satisfying the task’s success condition.

Reward: 1.00 Steps: 46 Outcome: Success

- Ours -Trained Failure: The agent retrieves the seed jar, then picks up a shovel in the greenhouse and repeatedly teleports outside to dig up soil. It transports soil back to the greenhouse and fills all three flower pots, explicitly constructing “soil + water” planting conditions before moving lemon seeds into each pot. After staged waiting, it observes that one lemon tree now bears a lemon, and repeatedly attempts to focus on or pick the lemon with overspecified object references. The growth and pollination pipeline is correct, but the episode fails due to action-format errors at the final “focus on lemon” step. Reward: 0.50 Steps: 60 Outcome: Failure (hard negative)

-

Plants seeds directly into water-filled pots and then loops through wait/look around or invalid focus actions. No soil preparation, tool use, or environment control is attempted. Reward: 0.25 Steps: 60 Outcome: Failure

ETO -Trained Failure: Repeats similar shallow behavior and never establishes the preconditions needed for growth or pollination. Reward: 0.25 Steps: 49 Outcome: Failure Ours -Prediction: Collects soil with a shovel, fills pots, plants seeds, waits for flowering, and regulates the greenhouse by closing doors before focusing on the lemon. Reward: 1.00 Steps: 46 Outcome: Success Ours -Trained Failure: Performs the full soil-plant-pollination pipeline with correct subskills but fails at the final focus action, forming a high-quality hard negative. Reward: 0.50 Steps: 60 Outcome: Failure

ETO:

Reward: 0.25 Steps: 15 Outcome: Failure Ours: Reward: 0.58 Steps: 30 Outcome: Failure

📸 Image Gallery