Selecting Belief-State Approximations in Simulators with Latent States

📝 Original Info

- Title: Selecting Belief-State Approximations in Simulators with Latent States

- ArXiv ID: 2511.20870

- Date: 2025-11-25

- Authors: Nan Jiang

📝 Abstract

State resetting is a fundamental but often overlooked capability of simulators. It supports sample-based planning by allowing resets to previously encountered simulation states, and enables calibration of simulators using real data by resetting to states observed in real-system traces. While often taken for granted, state resetting in complex simulators can be nontrivial: when the simulator comes with latent variables (states), state resetting requires sampling from the posterior over the latent state given the observable history, a.k.a. the belief state (Silver and Veness, 2010). While exact sampling is often infeasible, many approximate belief-state samplers can be constructed, raising the question of how to select among them using only sampling access to the simulator. In this paper, we show that this problem reduces to a general conditional distribution-selection task and develop a new algorithm and analysis under sampling-only access. Building on this reduction, the belief-state selection problem admits two different formulations: latent state-based selection, which directly targets the conditional distribution of the latent state, and observation-based selection, which targets the induced distribution over the observation. Interestingly, these formulations differ in how their guarantees interact with the downstream roll-out methods: perhaps surprisingly, observation-based selection may fail under the most natural roll-out method (which we call Single-Reset) but enjoys guarantees under the less conventional alternative (which we call Repeated-Reset). Together with discussion on issues such as distribution shift and the choice of sampling policies, our paper reveals a rich landscape of algorithmic choices, theoretical nuances, and open questions in this seemingly simple problem.

📄 Full Content

Despite often being taken for granted in research papers, state resetting can be highly nontrivial in complex simulators, especially when they come with latent variables that are introduced to model the generative processes of the observables but cannot be measured in the real systems. Naïve approaches, such as loading saved latent states (e.g., loading a previously dumped RAM state (Ecoffet et al., 2019)), are not only infeasible in real systems, since the values of the latent variables are nowhere to be found, but also problematic for resetting to a previous simulation state; for example, policies trained with such naïve resetting may condition their actions on privileged information in the latent states, and thus may face performance degradation when distilled to a policy that operates only on observable information (Jiang, 2019; Weihs et al., 2021). The correct formulation is to view the simulator as a POMDP, which induces an MDP where the observable history (i.e., the sequence of observations and actions) is treated as the state. State resetting amounts to using the observable history to set the values of the latent variables. Mathematically, we should sample from the posterior distribution of latent variables conditioned on the observable history, a.k.a. the belief state of the POMDP (Silver and Veness, 2010).

While the belief state is conceptually well-defined for any POMDP, exact sampling from belief states can be computationally demanding, especially when the observation space and the latent state space are high-dimensional and the latent dynamics and the emission process are complex black boxes. To address this challenge, algorithms for approximately sampling from such a distribution have been proposed: for example, the problem can be viewed as an instance of approximate Bayesian computation (ABC), and Silver and Veness (2010) apply rejection sampling to approximate the belief state. However, rejection sampling, when implemented exactly, incurs exponential-in-horizon sample complexity even when the observation space is finite and small, and requires problem-specific heuristics to trade off accuracy for efficiency. Likewise, techniques from related areas such as Simulation-based Inference (SBI) also come with design choices that need to be carefully tuned. This naturally gives rise to the following question:

Given multiple approximations to the belief state, how can we select from them, and what theoretical guarantees can be obtained?

In this paper, we explore the multi-faceted nature of this problem. We first show that finding a good belief-state approximation can be reduced to a general conditional distribution-selection problem, and provide a new algorithm and an analysis for the latter under only sampling access to the candidate conditionals (Section 3). Building on this reduction, we then show that belief-state selection itself admits two distinct formulations: latent state-based selection, which directly targets the posterior of the latent state given history, and observation-based selection, which targets the induced observable transition model (Section 4). These two formulations behave differently in the presence of redundant latent variables and, perhaps more importantly, interact in subtle but consequential ways with how we use the selected belief state in downstream tasks. Perhaps surprisingly, we show that, when the selected belief-state approximation is used to estimate Q-values via Monte-Carlo roll-outs, observation-based selection can have degenerate behavior under the most natural roll-out procedure, which we call Single-Reset, but enjoys guarantees under the counter-intuitive Repeated-Reset roll-out (Section 5; see also Table 1). We conclude the paper with further discussions on the issues related to distribution shift and the design of sampling policies. Collectively, these results and insights reveal a rich landscape of choices and nuances in this seemingly simple problem.

Markov Decision Processes (MDPs). We consider $H$-step finite-horizon MDPs, defined by the state space $\mathcal{S}$, action space $\mathcal{A}$, reward function $R : \mathcal{S} \to [0, R_{\max}]$, transition function $P : \mathcal{S} \times \mathcal{A} \to \Delta(\mathcal{S})$, and initial state distribution $\rho_0 \in \Delta(\mathcal{S})$; here we assume $\mathcal{S}$ and $\mathcal{A}$ are finite for convenience, and $\Delta(\cdot)$ is the probability simplex. We adopt the convention of a layered state space, which allows for time-homogeneous notation for time-inhomogeneous quantities: that is, let $\mathcal{S} = \bigcup_{0 \le t \le H} \mathcal{S}_t$, where $\rho_0$ is supported on $\mathcal{S}_0$. $P(s' \mid s, a)$ is always 0 unless $s \in \mathcal{S}_t$ and $s' \in \mathcal{S}_{t+1}$, thus any state that may appear as $s_t$ always belongs to $\mathcal{S}_t$. Any policy $\pi : \mathcal{S} \to \Delta(\mathcal{A})$ (note that this captures time-inhomogeneous policies) induces a distribution over the trajectory (or episode) $s_0, a_0, r_0, \ldots, s_{H-1}, a_{H-1}, r_{H-1}, s_H$ by the following generative process: $s_0 \sim \rho_0$ and, for $0 \le t < H$, $a_t \sim \pi(\cdot \mid s_t)$, $s_{t+1} \sim P(\cdot \mid s_t, a_t)$, $r_t = R(s_{t+1})$. A standard objective that measures the performance of a policy $\pi$ is the expected return, $J(\pi) := \mathbb{E}_{P,\pi}[\sum_{t \ge 0} r_t]$. Let $V_{\max} = H R_{\max}$ denote the range of the cumulative rewards. As a central concept in RL, a (Q-)value function is defined as $Q^{\pi}(s_t, a_t) := \mathbb{E}_{P,\pi}[\sum_{t' \ge t} r_{t'} \mid s_t, a_t]$.

Partially Observable MDPs (POMDPs). A POMDP $\Gamma$ is specified by an underlying MDP plus an emission process, $E : \mathcal{S} \to \Delta(\mathcal{O})$, which generates an observation $o_t \in \mathcal{O}$ based on a latent state $s_t \in \mathcal{S}$ as $o_t \sim E(\cdot \mid s_t)$; as before, we assume $\mathcal{O}$ is layered, i.e., $o_t$ always belongs to $\mathcal{O}_t$. An episode in a POMDP is generated similarly to the MDP: $s_0 \sim \rho_0$, and at any time step $t$, an observation is generated as $o_t \sim E(\cdot \mid s_t)$, and the agent takes an action $a_t$ that only depends on the observable history $o_{0:t} := \{o_0, \ldots, o_t\}$ and $a_{0:t-1}$. Then, a latent transition $s_{t+1} \sim P(\cdot \mid s_t, a_t)$ occurs and a reward $r_t$ is generated, and so on. We assume that the information of the reward $r_t$ is always encoded in $o_{t+1}$, so with a slight abuse of notation we write $r_t = R(s_{t+1}) = R(o_{t+1})$. POMDPs are often used to model processes where the observations violate the Markov property. That is, we only observe $o_t$ in the real system, and introduce $s_t$ to explain the dynamics and evolution of $o_t$. In this case, the $s_t$ are latent states that are not observed in the real-system traces.
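The episode-generation process above can be sketched in a few lines. This is a minimal illustration rather than part of the paper: the dictionary-based encoding of $\rho_0$, $P$, $E$ and the function name `sample_episode` are assumptions made for the example, and the layered (time-indexed) state space is dropped for brevity.

```python
import random

def sample_episode(rho0, P, E, policy, horizon, rng):
    """Sample (latents, observations, actions) from a small tabular POMDP.

    Hypothetical encoding: rho0 maps state -> prob, P maps (state, action)
    -> {next_state: prob}, E maps state -> {observation: prob}, and
    policy(obs, acts, rng) returns an action from the observable history only.
    """
    def draw(dist):
        outcomes = list(dist)
        return rng.choices(outcomes, weights=[dist[x] for x in outcomes])[0]

    s = draw(rho0)                      # s_0 ~ rho_0
    latents, obs, acts = [s], [draw(E[s])], []
    for _ in range(horizon):
        a = policy(obs, acts, rng)      # a_t depends on (o_{0:t}, a_{0:t-1})
        s = draw(P[(s, a)])             # latent transition s_{t+1} ~ P(.|s_t, a_t)
        latents.append(s)
        obs.append(draw(E[s]))          # o_{t+1} ~ E(.|s_{t+1})
        acts.append(a)
    return latents, obs, acts
```

Slicing the output as `(obs[:t + 1], acts[:t])` gives the observable history up to step $t$, and `latents[t]` is the latent state that a real-system trace would not reveal.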

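The belief state $b^\star(\cdot \mid \tau_t)$ is the posterior distribution of the latent state $s_t$ given $\tau_t$, where $\tau_t = (o_{0:t}, a_{0:t-1})$ is an observable history. It is useful to think of the evolution of the observable variables of a POMDP as a history-based MDP. That is, let the $t$-step history $\tau_t$ be the state; upon action $a_t$, the next observation $o_{t+1}$ is drawn from its conditional distribution given $(\tau_t, a_t)$ in $\Gamma$, the reward is $r_t = R(o_{t+1})$, and the next state $\tau_{t+1}$ extends $\tau_t$ with $a_t$ and $o_{t+1}$.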

We use $M_\Gamma(o_{t+1} \mid \tau_t, a_t)$ to denote the conditional distribution of the next observation and the induced MDP dynamics. (Note that since the reward $r_t$ is encoded in $o_{t+1}$, it can also be determined from $\tau_{t+1}$ and thus is consistent with our MDP formulation.) This MDP naturally fits the layered convention, where $\mathcal{H}_t$, the space of $\tau_t$, is the $t$-th step state space. This way, any history-dependent policy is simply a Markov policy in the history-based MDP, $\pi : \mathcal{H} \to \Delta(\mathcal{A})$. Concepts such as value functions for a POMDP can be immediately defined through its history-based MDP; that is, when we mention the Q-function in a POMDP, such as $Q^{\pi}_{\Gamma}(\tau_t, a_t)$, we refer to the Q-function of the history-based MDP $M_\Gamma$.

Additional Notation. For two distributions $p, q \in \Delta(\mathcal{X})$, define their Total-Variation (TV) distance as $D_{\mathrm{TV}}(p, q) := \sum_{x \in \mathcal{X}} |p(x) - q(x)|/2$, and let $\|p/q\|_\infty := \max_{x \in \mathcal{X}} p(x)/q(x)$.
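For finite $\mathcal{X}$, both quantities are one-liners. The sketch below assumes distributions are stored as dictionaries from outcomes to probabilities; the function names, and the convention that points with $p(x) = 0$ are skipped in the ratio, are choices made for this illustration.

```python
def tv_distance(p, q):
    """D_TV(p, q) = sum_x |p(x) - q(x)| / 2 over the union of supports."""
    support = set(p) | set(q)
    return 0.5 * sum(abs(p.get(x, 0.0) - q.get(x, 0.0)) for x in support)

def sup_ratio(p, q):
    """||p/q||_inf = max_x p(x)/q(x); infinite if p puts mass where q has none."""
    worst = 0.0
    for x, px in p.items():
        if px == 0.0:
            continue                     # assumed convention: 0/q(x) contributes 0
        qx = q.get(x, 0.0)
        if qx == 0.0:
            return float("inf")
        worst = max(worst, px / qx)
    return worst
```

For instance, with `p = {"a": 0.5, "b": 0.5}` and `q = {"a": 1.0}`, half of the mass of `p` sits where `q` has none, so the TV distance is 0.5 and the ratio is infinite.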

As mentioned in the introduction, we are interested in complex simulators where, when modeled as a POMDP, the latent-state and observation spaces $\mathcal{S}$ and $\mathcal{O}$ are potentially very large, and the latent transition $P$ and the emission process $E$ are complex black boxes, to which we only have sampling access. While the notion of belief state, $b^\star$, is conceptually and information-theoretically well-defined, it is not easy to access it in a computationally efficient manner, and methods from ABC, SBI, and particle filtering may be used to approximate it (Cranmer et al., 2020). Since these methods often require domain-specific design choices and heuristics, the model-selection problem naturally arises: given a candidate set of belief-state approximations $\mathcal{B} = \{b^{(i)}\}_{i=1}^{m}$ with $b^{(i)} : \mathcal{H} \to \Delta(\mathcal{S})$, we are interested in selecting the best approximation by interacting with the simulator. Throughout the paper, we will assume realizability as a simplification:

Assumption 1 (Realizability). $b^\star \in \mathcal{B}$.
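To make the sampling-only setting concrete, here is a minimal sketch of exact rejection sampling for the belief state, the baseline whose cost motivates approximate candidates in $\mathcal{B}$. The callables `init`, `step`, and `emit` are hypothetical sampling handles to the simulator (not an API from the paper), and observations are assumed discrete so that exact matching is meaningful.

```python
import random

def rejection_sample_belief(obs, acts, init, step, emit, rng, max_tries=100_000):
    """Draw one s_t from the posterior given (o_{0:t}, a_{0:t-1}).

    Replays the recorded actions from a fresh initial state and accepts the
    final latent state only if every simulated observation matches the
    history. Returns None if no attempt is accepted within max_tries.
    """
    for _ in range(max_tries):
        s = init(rng)
        if emit(s, rng) != obs[0]:
            continue
        for a, o in zip(acts, obs[1:]):
            s = step(s, a, rng)
            if emit(s, rng) != o:
                break                    # mismatch: reject this attempt
        else:
            return s                     # all observations matched: accept
    return None
```

The acceptance probability is the likelihood of the whole observation sequence, which typically shrinks geometrically with $t$; this is the exponential-in-horizon cost mentioned in the introduction, and relaxing the exact-match test is one of the heuristics that produces approximate candidates.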

The problem of selecting/learning the posterior distribution in a computationally efficient manner is closely related to Simulation-based Inference (SBI). As a standard approach in SBI, we can generate trajectories with latent states in the form of $(s_{0:H}, o_{0:H}, a_{0:H-1})$ using some behavior policy $\pi_b : \mathcal{H} \to \Delta(\mathcal{A})$, and obtain pairs of $\tau_t = (o_{0:t}, a_{0:t-1})$ and $s_t$ for any $0 \le t \le H$. The joint distribution of $(\tau_t, s_t)$ generated in this way satisfies

$s_t \mid \tau_t \sim b^\star(\cdot \mid \tau_t)$,

and we write $\tau_t \sim \Gamma^{\pi_b}$ to denote that $\tau_t$ is generated from a trajectory induced by policy $\pi_b$ in POMDP $\Gamma$. On the other hand, for any candidate $b \in \mathcal{B}$, we can also generate

$\tilde{s}_t \sim b(\cdot \mid \tau_t)$

for each $\tau_t$ in the above dataset. Then, testing whether $b = b^\star$ can be reduced to a conditional 2-sample test based on the samples $\{(\tau_t, s_t, \tilde{s}_t)\}$, that is, telling whether $s_t \mid \tau_t$ and $\tilde{s}_t \mid \tau_t$ are identically distributed.² We call this approach latent state-based selection to distinguish it from alternative approaches we will consider later.

Reduction to Joint 2-Sample Tests. A naïve approach is to reduce the conditional test to a joint test: we can simply test whether $(\tau_t, s_t)$ is identically distributed as $(\tau_t, \tilde{s}_t)$. If the joints are identical, the conditionals are also identical on the supported $\tau_t$. Unfortunately, this approach comes with significant practical hurdles: 2-sample tests often involve some kind of discriminator class $\mathcal{F}$ that needs to be carefully designed (Gretton et al., 2012), which in this case operates on $\mathcal{H} \times \mathcal{S}$. However, given that a history $\tau \in \mathcal{H}$ is a combinatorial object of variable length, designing effective discriminators can be practically challenging. This raises the question:

Can we design algorithms that do not rely on discriminators over the H space?

We now provide a solution to the general problem of selecting from conditional distributions of the form $P(Y \mid X)$, in a way that only requires discriminator classes operating on $Y$. This avoids the demanding task of feature engineering or neural architecture design over $X$, which is often a complex combinatorial object (such as a history) in our settings of interest.

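General Problem Formulation. We are given $n$ i.i.d. $(X, Y)$ pairs, $\{(X_j, Y_j)\}_{j=1}^{n}$, sampled from a real joint distribution $(X, Y) \sim P^\star$, and the task is to select from $m$ candidate conditionals $P_i(y \mid x)$, where one candidate, indexed by $i^\star$, matches the true conditional, i.e., $P_{i^\star}(\cdot \mid x) = P^\star(\cdot \mid x)$.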

Computationally, we assume we can efficiently sample from P i (•|x) for any given x, but we can only sample joints from P ⋆ .

Inspired by the Scheffé tournament (Devroye and Lugosi, 2001), we first consider the case of $m = 2$ and later extend to general $m$ via a tournament of pairwise comparisons.

Pairwise Comparison between 2 Candidates. When $m = 2$, we propose the following procedure, which requires a discriminator class $\mathcal{F}$ of functions $\mathcal{Y} \to \{0, 1\}$ to serve as classifiers: for each $X_j$ in the data sampled from $P^\star$,

- Sample $N$ data points from each of $P_1(\cdot \mid X_j)$ and $P_2(\cdot \mid X_j)$.

- Use the above $2N$ data points to train a classifier $f_j \in \mathcal{F}$ that predicts whether $Y$ is sampled from $P_1(\cdot \mid X_j)$ or $P_2(\cdot \mid X_j)$. For theoretical analyses and ease of presentation, we assume ERM on 0/1 loss, and adopt the convention (which is w.l.o.g.) that $P_{i^\star}$ gets label 1.

- Use $f_j$ to classify the real $Y_j$. Additionally sample 1 data point from each of $P_1(\cdot \mid X_j)$ and $P_2(\cdot \mid X_j)$, denoted as $Y_j^{(1)}$ and $Y_j^{(2)}$, and classify them with $f_j$ as well.

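Finally, we choose between $P_1$ and $P_2$ based on

$\arg\min_{k \in \{1,2\}} \Bigl| \frac{1}{n} \sum_{j=1}^{n} \bigl( f_j(Y_j) - f_j(Y_j^{(k)}) \bigr) \Bigr|$.

For $m > 2$, we perform the above procedure for each pair of candidate conditionals (data sampled from $P_k$ may be reused in multiple comparisons), and let $f_j^{i,k}$ be the classifier trained to distinguish between $P_i(\cdot \mid X_j)$ and $P_k(\cdot \mid X_j)$. The final choice is

$\hat{i} = \arg\min_{i} \max_{k \ne i} \Bigl| \frac{1}{n} \sum_{j=1}^{n} \bigl( f_j^{i,k}(Y_j) - f_j^{i,k}(Y_j^{(i)}) \bigr) \Bigr|$. (1)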

In words, for each real data point $X_j$, we draw “synthetic data” from the candidate conditionals $P_i(\cdot \mid X_j)$ and $P_k(\cdot \mid X_j)$ to train a classifier, and apply it to a single “real” data point $Y_j$; in total, $nm(m-1)/2$ classifiers will be trained. When $i^\star \in \{i, k\}$, the classifier learns to separate $Y$ generated using $P^\star = P_{i^\star}$ from that generated using the wrong conditional. Therefore, we may choose between $P_i$ and $P_k$ based on $f_j^{i,k}(Y_j)$, which predicts whether $Y_j$ (sampled from $P^\star = P_{i^\star}$) is more likely to be produced by $P_i$ or $P_k$. Of course, the signal from classifying a single data point $Y_j$ is weak and noisy, and aggregating such signals across all $j$ reduces the noise and amplifies the signal.
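The tournament can be sketched as follows for scalar $Y$. Several choices here are assumptions for illustration, not the paper's specification: the discriminator class is a finite set of threshold classifiers (closed under negation), the score of candidate $i$ is taken to be the largest absolute gap between the average classifier output on real $Y_j$ and on a fresh draw $Y_j^{(i)}$ (our reading of the Scheffé-style IPM loss in Eq.(1)), and all function names are hypothetical.

```python
import random
from itertools import combinations

def predict(f, y):
    """Threshold classifier f = (c, flip): 1[y >= c], negated if flip is True."""
    c, flip = f
    return int((y >= c) != flip)

def erm_threshold(label0, label1, thresholds):
    """ERM on 0/1 loss over the finite class of (possibly negated) thresholds."""
    best, best_err = None, float("inf")
    for c in thresholds:
        for flip in (False, True):
            f = (c, flip)
            err = sum(predict(f, y) != 0 for y in label0)
            err += sum(predict(f, y) != 1 for y in label1)
            if err < best_err:
                best, best_err = f, err
    return best

def select_conditional(data, samplers, n_synth, thresholds, rng):
    """Return (index of selected candidate, per-candidate scores)."""
    m, n = len(samplers), len(data)
    gap = [[0.0] * m for _ in range(m)]
    for x, y in data:
        # Synthetic training data per candidate, reused across all pairs.
        synth = [[draw(x, rng) for _ in range(n_synth)] for draw in samplers]
        fresh = [draw(x, rng) for draw in samplers]      # one extra draw each
        for i, k in combinations(range(m), 2):
            f = erm_threshold(synth[k], synth[i], thresholds)  # P_i labeled 1
            gap[i][k] += (predict(f, y) - predict(f, fresh[i])) / n
            gap[k][i] += (predict(f, y) - predict(f, fresh[k])) / n
    scores = [max(abs(gap[i][k]) for k in range(m) if k != i) for i in range(m)]
    return min(range(m), key=scores.__getitem__), scores
```

Because the score uses an absolute value, which of the two candidates receives label 1 does not affect the outcome, in line with the remark that the labeling convention is without loss of generality. Note that no classifier ever takes $X_j$ as input: $X_j$ only determines which synthetic samples are drawn.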

While the above idea is reasonable, it may run into issues when the data from $P_i$ and $P_k$ are not cleanly separable by $\mathcal{F}$: the algorithm minimizes an overall error rate on a mixture of correct data (from $P_{i^\star}$) and incorrect data, but the classifier is eventually only applied to $Y_j$ from $P_{i^\star}$, meaning that the ultimate loss we suffer is the False Negative Rate of $f_j^{i,k}$, which is only loosely controlled by the overall error rate. In contrast, we follow the spirit of Scheffé estimators and treat the classifier $f_j^{i,k}$ as an approximate witness of the total-variation distance between $P_i(\cdot \mid X_j)$ and $P_k(\cdot \mid X_j)$, and make the final selection via the IPM loss in Eq.(1), which leads to the relatively weak assumption in the theoretical analysis below.

Theoretical Guarantee. We now provide the assumptions and the theoretical guarantees for this procedure. The key assumption we need is that $\mathcal{F}$ contains nontrivial classifiers that separate $P_i(\cdot \mid X_j)$ from $P_k(\cdot \mid X_j)$ when $i^\star \in \{i, k\}$, as formalized by the following assumption.

Assumption 2 (Expressivity of $\mathcal{F}$). Assume $\mathcal{F}$ is a finite class. Define

to be the Bayes-optimal classifier for distinguishing between $P_{i^\star}(\cdot \mid x)$ and $P_i(\cdot \mid x)$, and

We assume the existence of $f^{i,i^\star}_{X} \in \mathcal{F}$ that satisfies the following: for some $0 < \alpha \le 1$ that applies to all $i$,

Here $f^{i,i^\star}_{X,\star}$ (Eq.(2)) is the best possible classifier for separating $P_{i^\star}(\cdot \mid X)$ from $P_i(\cdot \mid X)$, and we can get straightforward guarantees if we simply assume $f^{i,i^\star}_{X,\star} \in \mathcal{F}$. Instead, we only assume that $\mathcal{F}$ realizes a “better-than-trivial” classifier $f^{i,i^\star}_{X}$, and the rest of this assumption introduces definitions to quantify what “better-than-trivial” means.

The $\mathrm{acc}^{i,i^\star}_{X}$ term is the classification accuracy, with the convention (which is w.l.o.g.) that $P_{i^\star}$ is assigned label 1 and $P_i$ is assigned label 0. Note that when $\mathcal{F}$ is closed under negation ($1 - f \in \mathcal{F}$, $\forall f \in \mathcal{F}$), it is trivial to find a classifier in $\mathcal{F}$ with at least 1/2 accuracy, so $\mathcal{E}(i, i^\star)$ is a measure of how separable $P_i$ and $P_{i^\star}$ are.

Given all these definitions, we require $\mathcal{F}$ to contain a classifier $f^{i,i^\star}_{X}$ whose margin only needs to be a multiplicative fraction of $\mathcal{E}(i, i^\star)$; moreover, this does not need to hold for every $X$, but only on average w.r.t. the marginal of $X$.

We are now ready to give the guarantee; the proof is deferred to Appendix A.

Theorem 1 (Sample complexity). Under Assumption 2, for $\hat{i}$ identified by Eq.(1), with probability at least $1 - \delta$,

Invoking Theorem 1 with $X = \tau_t$, $Y = s_t$, and $P^\star$ being the distribution under behavior policy $\pi_b$, we have

where $\tau_t \sim \Gamma^{\pi_b}$ is a partial trajectory naturally simulated in $\Gamma$ under policy $\pi_b$ without using resetting.

The above procedure is a variant of, and closely related to, the method of Li et al. (2022); both can be viewed as extensions of Scheffé estimators to conditional distributions. The difference is that Li et al. assume access to the density functions of $P_i(y \mid x)$, which allows them to explicitly compute the Bayes-optimal classifier in Eq.(2). In contrast, we only allow black-box sampling access to $P_i(\cdot \mid x)$, and approximate the above classifier using a discriminator class $\mathcal{F}$ via training on sampled synthetic data. What we show is that $\mathcal{F}$ does not need to realize the above Bayes-optimal classifier for every single $x$, and it suffices to have marginally-better-than-trivial classifiers in an average sense. Theoretically, Li et al. show that the sample complexity of the conditional selection problem should not depend on the complexity of the $\mathcal{X}$ space (see also Bilodeau et al. (2023)), which is echoed by our motivation of not having to design discriminators over $\mathcal{X}$. In model-based RL, similar insights and Scheffé-style constructions have been used in learning model dynamics from IPM losses (Sun et al., 2019). The idea of using discriminators to help learn or test conditional distributions is also found in the SBI literature (Lueckmann et al., 2021).

Outside belief-state approximation, our procedure may also be relevant to model selection in conditional generative models, such as prompt-based image generation. One potentially relevant property is that our procedure places a low sample-complexity burden on $n$, the number of “real” data points from $P^\star$. In belief-state approximation, both $n$ and $N$ can be increased by spending more computation; in tasks of learning from real datasets, however, the real data (from $P^\star$) can be more expensive to collect than the synthetic data (from $P_i$), and the independence of $n$ from the complexity of $\mathcal{F}$ can be appealing.

This model can be equivalently treated as a history-based MDP, as it describes how the next state (the length-$(t+1)$ history) can be sampled from the current state-action pair (the length-$t$ history and action): given $\tau_t, a_t$, the generative process for $\tau_{t+1}$ is simply

$\tau_{t+1} = \tau_t \cdot a_t \cdot o_{t+1}$, with $o_{t+1}$ drawn from the model given $(\tau_t, a_t)$,

where $\cdot$ is concatenation. It is not hard to see that when $b = b^\star$, $M_{\Gamma,b^\star}$ describes the true history-based MDP induced by POMDP $\Gamma$, i.e., $M_{\Gamma,b^\star} = M_\Gamma$. Given that most key RL concepts in POMDPs, such as value functions and optimal policies, can be defined through the induced observable model (see Section 2.1), a natural idea is to apply the conditional selection procedure in Section 3 but with the following setup:

• $P^\star$ corresponds to generating $(X, Y)$ pairs by sampling trajectories using some behavior policy $\pi_b : \mathcal{H} \to \Delta(\mathcal{A})$.

Then under Assumption 2, we can directly invoke Theorem 1 for an expected TV guarantee. For example:

Corollary 2. Bind $X = (\tau_t, a_t)$ and $Y = o_{t+1}$, and define $P^\star$, $\{P_i\}$ as described above. Under the conditions of Theorem 1, with probability at least $1 - \delta$, we will select a belief-state approximation $b$ such that (note that $M_{\Gamma,b^\star} = M_\Gamma$)

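Furthermore, such a guarantee is immediately implied by (and thus weaker than) that for latent state-based selection:

Proposition 3. Fixing any $\tau_t, a_t$, we have

$D_{\mathrm{TV}}\bigl(M_{\Gamma,b}(\cdot \mid \tau_t, a_t),\, M_{\Gamma,b^\star}(\cdot \mid \tau_t, a_t)\bigr) \le D_{\mathrm{TV}}\bigl(b(\cdot \mid \tau_t),\, b^\star(\cdot \mid \tau_t)\bigr)$.

Therefore, Eq.(3) immediately implies Eq.(5).

Proof. Conditioned on $s_t$, the process of generating $o_{t+1}$ is independent of whether $s_t$ is generated from $b^\star$ or $b$, so the claim is a direct consequence of the data processing inequality.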
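The data processing step can be checked numerically on a small example. The sketch below uses list-based distributions and hypothetical names (`push`, `P_a`); the numbers are made up for illustration. It pushes a belief through one latent transition under a fixed action and then through the emission, and compares TV distances before and after.

```python
def tv(p, q):
    """TV distance between two distributions given as aligned lists."""
    return 0.5 * sum(abs(a - b) for a, b in zip(p, q))

def push(belief, P_a, E):
    """Law of o_{t+1} when s_t ~ belief, s_{t+1} ~ P_a[s_t], o_{t+1} ~ E[s_{t+1}].

    P_a[s][s2] is the transition probability under the fixed action a_t;
    E[s2][o] is the emission probability.
    """
    n_next, n_obs = len(P_a[0]), len(E[0])
    nxt = [sum(belief[s] * P_a[s][s2] for s in range(len(belief)))
           for s2 in range(n_next)]
    return [sum(nxt[s2] * E[s2][o] for s2 in range(n_next)) for o in range(n_obs)]

b_star = [0.7, 0.3]                 # true belief over two latent states
b_cand = [0.2, 0.8]                 # an inaccurate candidate
P_a = [[0.9, 0.1], [0.2, 0.8]]
E = [[0.8, 0.2], [0.3, 0.7]]

latent_gap = tv(b_cand, b_star)                          # error on s_t
obs_gap = tv(push(b_cand, P_a, E), push(b_star, P_a, E)) # error on o_{t+1}
```

Here the latent-state gap is 0.5 while the induced observation gap is 0.175: the observable error is never larger, and it can be much smaller, which is why a guarantee on observations alone is the weaker of the two.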

If the final goal is to compute objects that solely depend on the observable model $M_\Gamma$, such as value functions or optimal policies, it seems that observation-based selection is an equally legitimate solution. This view echoes the spirit of behavioral equivalence found in prior work (… and Sutton, 2002; Singh et al., 2004) and minimal information state (Subramanian et al., 2022), and is also manifested in recent statistical results for learning in POMDPs (Zhang and Jiang, 2025) (see also Section 5.3). As we will see next, however, observation-based selection can suffer from surprising degeneracy in downstream use cases where latent state-based selection is well-behaved, which seems to contradict the spirit of behavioral equivalence.³

In this section, we investigate how the errors in $b$ (measured in the forms of Eq.(3) or Eq.(5)) can affect the downstream RL tasks. We consider the most basic yet representative use of state resetting enabled by an approximate $b$:

Roll-out: Sample trajectories to obtain a Monte-Carlo estimate of Q π Γ (τ t , a t ) for a given target policy π.

This procedure is useful for debugging and simulator selection (Sajed et al., 2018; Liu et al., 2025), enables Monte-Carlo control (Sutton and Barto, 2018), and forms the basis of Monte-Carlo Tree Search (Kocsis and Szepesvári, 2006; Silver and Veness, 2010; Browne et al., 2012). Perhaps surprisingly, despite the simplicity of the task, there are many nuances to the question. Latent state-based selection and observation-based selection interact in subtle but consequential ways with the roll-out method, and observation-based selection can have degenerate behaviors when used with the most natural roll-out procedure.

We now consider what error guarantees can be obtained for estimating $Q^{\pi}_{\Gamma}(\tau_t, a_t)$ via Monte-Carlo roll-outs using an approximate belief state $b \approx b^\star$, e.g., one with guarantees established in Theorem 1. In particular, the obvious (and seemingly unique) roll-out procedure, which we call “Single-Reset Roll-out” (whose meaning will be clear shortly), is:

1. Sample $s_t \sim b(\cdot \mid \tau_t)$.

2. Take the given action $a_t$, and generate $s_{t+1} \sim P(\cdot \mid s_t, a_t)$, $o_{t+1} \sim E(\cdot \mid s_{t+1})$.

3. Repeat Step 2 for subsequent time steps by taking actions according to $\pi$, and collect $\sum_{t'=t+1}^{H} R(o_{t'})$ as a Monte-Carlo return.
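Each Monte-Carlo return produced by this procedure lies in $[0, V_{\max}]$, so the number of roll-outs needed for a target accuracy follows from the standard two-sided Hoeffding bound $\Pr[|\text{mean} - \text{expectation}| \ge \epsilon] \le 2\exp(-2n\epsilon^2/V_{\max}^2)$. A small helper (the function name is hypothetical) makes the dependence explicit:

```python
import math

def rollouts_needed(v_max, eps, delta):
    """Smallest n with 2 * exp(-2 * n * eps**2 / v_max**2) <= delta.

    Guarantees that the average of n i.i.d. returns in [0, v_max] is within
    eps of its expectation with probability at least 1 - delta.
    """
    return math.ceil(v_max ** 2 * math.log(2.0 / delta) / (2.0 * eps ** 2))
```

This only controls the Monte-Carlo noise around the expected Single-Reset value; it says nothing about the bias caused by an inaccurate $b$, which is the subject of the analysis that follows.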

Since the error due to finite roll-outs can be easily handled by Hoeffding’s inequality, we will focus on the expected value (i.e., assuming infinitely many roll-outs) obtained through the above procedure, denoted as $Q^{\pi}_{\text{1-Reset}(\Gamma,b)}(\tau_t, a_t)$, and analyze its error relative to the ground-truth $Q^{\pi}_{\Gamma}(\tau_t, a_t)$. Concretely, the guarantee in the form of Eq.(3), obtained via latent state-based selection, immediately implies the proposition below. Since our guarantee for $b$ in Eq.(3) is not pointwise, it should not be surprising that the error bound for $Q^{\pi}_{\text{1-Reset}(\Gamma,b)}$ is also given in an average sense w.r.t. the distribution of trajectories under $\pi_b$, since that is what we use to train the classifiers and select for $s_t \mid \tau_t$.

Proposition 4. If Eq.(3) (guarantee for latent state-based selection) holds for some $t$, then

Proof. The procedure for rolling out using b vs. b ⋆ is identical after s t is sampled, so

is the same for both b and b ⋆ , where the expectation is w.r.t. the randomness of the Single-Reset procedure. The final result is just taking its expectation w.r.t.

Plugging this into $\mathbb{E}_{(\tau_t, a_t) \sim \Gamma^{\pi_b}}[\cdot]$ completes the proof.
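The key step of this argument can be spelled out as follows. This is a sketch under our own notation, not a transcription of the paper's display equations: $g(s_t)$ is a symbol introduced here for the expected Monte-Carlo return conditioned on the sampled latent state, $g(s_t) := \mathbb{E}[\sum_{t'=t+1}^{H} R(o_{t'}) \mid s_t, \tau_t, a_t] \in [0, V_{\max}]$, which is the same function whether $s_t$ came from $b$ or $b^\star$.

```latex
\begin{align*}
\bigl| Q^{\pi}_{\text{1-Reset}(\Gamma,b)}(\tau_t,a_t) - Q^{\pi}_{\Gamma}(\tau_t,a_t) \bigr|
&= \Bigl| \mathbb{E}_{s_t \sim b(\cdot\mid\tau_t)}[g(s_t)]
        - \mathbb{E}_{s_t \sim b^\star(\cdot\mid\tau_t)}[g(s_t)] \Bigr| \\
&= \Bigl| \sum_{s} \bigl(b(s\mid\tau_t) - b^\star(s\mid\tau_t)\bigr)
        \bigl(g(s) - \tfrac{V_{\max}}{2}\bigr) \Bigr| \\
&\le \tfrac{V_{\max}}{2} \sum_{s} \bigl| b(s\mid\tau_t) - b^\star(s\mid\tau_t) \bigr|
 = V_{\max}\, D_{\mathrm{TV}}\bigl(b(\cdot\mid\tau_t),\, b^\star(\cdot\mid\tau_t)\bigr).
\end{align*}
```

The second line uses that both beliefs sum to one, so subtracting the constant $V_{\max}/2$ changes nothing, and the third uses $|g(s) - V_{\max}/2| \le V_{\max}/2$. Averaging over $(\tau_t, a_t) \sim \Gamma^{\pi_b}$ then turns the right-hand side into $V_{\max}$ times the expected TV distance that latent state-based selection controls.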

As a natural follow-up question, can we obtain similar error bounds from Eq.(5), which is obtained from observation-based selection?

Recall that observation-based selection enjoys the guarantee in Eq.(5):

It is tempting to provide guarantees for the expected roll-out value by directly reducing to existing MDP analysis, using the following argument: note that $M_{\Gamma,b}$ and $M_{\Gamma,b^\star}$ are two history-based MDPs. When we treat history as state, Eq.(5) is similar to the kind of guarantees obtained from MLE in MDPs,⁴ which immediately leads to a policy-evaluation guarantee:

Theorem 5. Suppose two MDPs only differ in the transition dynamics, $P$ vs. $P'$. In addition, for every $t$, assume

When the roll-out policy $\pi = \pi_b$, it holds that for every $t$,

This result is standard in model-based RL, and we provide its proof in Appendix B for completeness. It is also possible to extend the result to other roll-out policies $\pi \ne \pi_b$ by paying for a coverage coefficient, which we will discuss in Section 6.1 but not consider in this section. The exact match between Eq.(6) and Eq.(5) immediately implies that:

Corollary 6. If Eq.(5) holds for all $t$, then for $\pi = \pi_b$ we have (note that $M_{\Gamma,b^\star} = M_\Gamma$)

While the guarantee here has an additional horizon factor $(H - t)$, it is nevertheless a solid guarantee. The final piece of the puzzle is the observation that Single-Reset seems to be the only way to sample from $\Gamma$ using $b$, so it probably coincides with $M_{\Gamma,b}$, i.e.,

$Q^{\pi}_{\text{1-Reset}(\Gamma,b)} = Q^{\pi}_{M_{\Gamma,b}}$

for any $\pi$. This intuitive statement, however, is in sharp conflict with the following example:

Example 1 (Single-Reset roll-out cannot enjoy the guarantee of Corollary 6). Consider an arbitrary POMDP $\Gamma$, except that the emission $E(\cdot \mid \cdot)$ is state-independent at some time step $t_0$. Every candidate $b$ predicts the correct belief state $b^\star(\cdot \mid \tau_t)$ for any $t \ne t_0$, but can predict arbitrary distributions for $t = t_0$. In this case, the observable behavior (i.e., the induced law of $M_{\Gamma,b}$) of $b$ is indistinguishable from that of $b^\star$ at any time step, so any $b$ could be selected. However, a Single-Reset roll-out starting at $t_0 - 1$ will generally be incorrect since the arbitrarily generated $s_{t_0 - 1}$ will have a lingering effect, i.e., producing an incorrect distribution of $s_{t_0}$ and subsequent latent states.

We are facing a paradox: on one hand, Example 1 shows that observation-based selection cannot enjoy the guarantee in Corollary 6 for Single-Reset roll-out; on the other hand, the observable model M Γ,b , a history-based MDP determined by Γ and b, does enjoy 4. The guarantee of MLE has a square on the TV distance, i.e., E[DTV(•) 2 ] ≤ . . ..

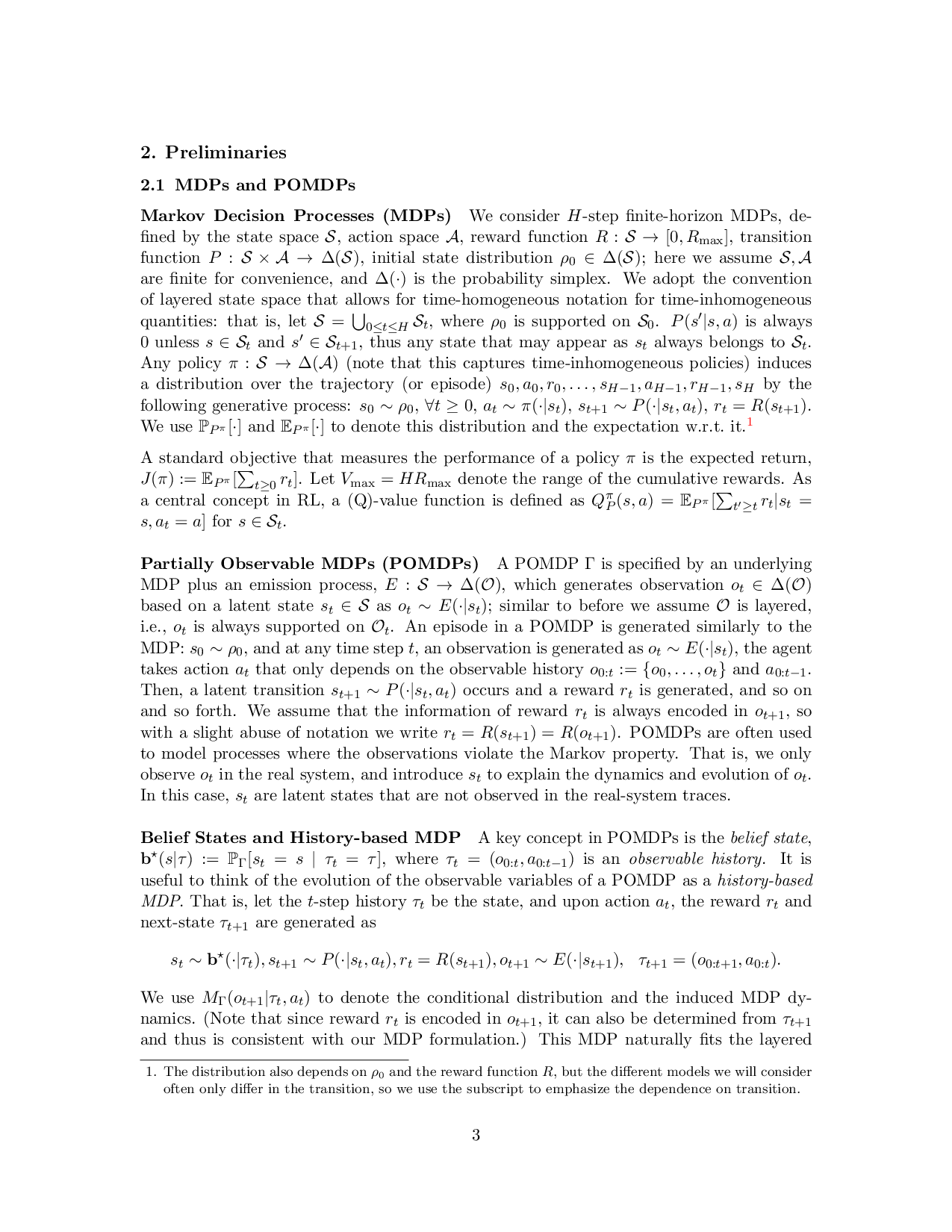

Figure 1: Toy example for illustrating the difference between Single-Reset and Repeated-Reset. In this binary-observation, action-less system, “X” represents the occurrence of an event. Every time an event happens (“X”), the system samples the interval till next event from some distribution. The history of interest is “X O O”, and the first row shows the real trajectory. b always sets the latent state to be 0, i.e., predicts that next event will occur immediately. a standard guarantee for its induced Q-value. Given that Single-Reset seems to be the only reasonable way to roll-out trajectories using Γ and b, it is reasonable to believe that such roll-outs correspond to the Q-value of M Γ,b . So what gives? Single-Reset and Repeated-Reset are equivalent if b = b ⋆ but are generally different; see Figure 1 for an example. We conclude this section with the following remarks that reconcile the earlier paradox:

• The main difference between Single-Reset and Repeated-Reset is that, at any time $t$, the observable trajectory $\tau_t$ is a sufficient statistic for simulating the rest of the trajectory in Repeated-Reset. For Single-Reset, however, the sufficient statistic is $(\tau_t, s_t)$. Therefore, when we only have the observation-based selection guarantee but not that of latent state-based selection, Single-Reset is only guaranteed to produce the correct $o_{t+1}$, but not future observations, due to the lingering effect of a possibly wrong $s_t$.

• Observation-based selection can still enjoy the guarantee in Corollary 2 via Repeated-Reset roll-out, but it suffers from an additional horizon factor compared to Proposition 4 due to the repeated application of the inaccurate $b$ (cf. Figure 1). On a related note, Proposition 4 only requires Eq.(3) to hold for the $t$ that is the subscript of the $\tau_t$ we start the roll-out from, but Corollary 2 requires Eq.(5) to hold for all $t' \ge t$. Moreover, when the roll-out policy $\pi \ne \pi_b$, Corollary 6 needs to pay for an additional coverage coefficient (see Section 6.1) while Proposition 4 does not. Therefore, while latent state-based selection + Repeated-Reset can also enjoy Corollary 2 via Proposition 3, it is in-

• As another possible misconception, it may be tempting to think that the observable dynamics of Single-Reset is $M_{\Gamma,b}$ at time step $t$ and $M_\Gamma$ for subsequent time steps, since all later simulations do not involve the use of the inaccurate $b$ and hence should be “correct”. This is not true due to, again, the lingering effect of the wrong $s_t$ distribution. (In fact, if this held, Corollary 2 would hold for $Q^{\pi}_{\text{1-Reset}(\Gamma,b)}$ without the horizon factor.) That is, although all subsequent simulations seem correct, the induced conditional law of the observables, $o_{t'+1} \mid \tau_{t'}, a_{t'}$ for $t' > t$, is not the same as the true $M_\Gamma$.

• As potential future directions, it will be interesting to consider if the inconsistency between Single-Reset and Repeated-Reset can be turned into a method for selecting belief-state approximations, and whether the insights can be used to reduce the error accumulation of observation-based selection.
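The mechanical difference between the two roll-out methods can be sketched as follows. Repeated-Reset is written here as we read it from the remarks above (re-sample the latent state from $b$ before every simulation step), and the toy system is a deterministic, hypothetical variant loosely modeled on Figure 1: the latent state is a countdown, an event “X” is emitted when it hits 0, and the countdown then restarts at `D`. All names are illustrative.

```python
def single_reset_return(obs, acts, a, b, step, emit, reward, policy, horizon, rng=None):
    """Reset the latent state once from b, then simulate forward normally."""
    obs, acts = list(obs), list(acts)
    s = b(obs, acts, rng)                   # the only call to the belief sampler
    total = 0.0
    for _ in range(len(obs) - 1, horizon):  # generates o_{t+1}, ..., o_H
        s = step(s, a, rng)
        o = emit(s, rng)
        total += reward(o)
        acts.append(a)
        obs.append(o)
        a = policy(obs, acts, rng)
    return total

def repeated_reset_return(obs, acts, a, b, step, emit, reward, policy, horizon, rng=None):
    """Re-sample the latent state from b(.|history) before every step."""
    obs, acts = list(obs), list(acts)
    total = 0.0
    for _ in range(len(obs) - 1, horizon):
        s = b(obs, acts, rng)               # discard the simulated latent state
        o = emit(step(s, a, rng), rng)
        total += reward(o)
        acts.append(a)
        obs.append(o)
        a = policy(obs, acts, rng)
    return total

D = 3                                       # deterministic gap between events
step = lambda s, a, rng: s - 1 if s > 0 else D
emit = lambda s, rng: "X" if s == 0 else "O"
reward = lambda o: 1.0 if o == "X" else 0.0
policy = lambda obs, acts, rng: None        # action-less system

def b_true(obs, acts, rng):
    k = obs[::-1].index("X")                # steps since the last event
    return 0 if k == 0 else D - k + 1

b_wrong = lambda obs, acts, rng: 1          # always predicts an event at the next step

history = (["X", "O", "O"], [None, None])
args = (step, emit, reward, policy, 7)
results = {
    "single/true": single_reset_return(*history, None, b_true, *args),
    "repeated/true": repeated_reset_return(*history, None, b_true, *args),
    "single/wrong": single_reset_return(*history, None, b_wrong, *args),
    "repeated/wrong": repeated_reset_return(*history, None, b_wrong, *args),
}
```

With the exact belief the two methods agree, as the text states. With the wrong belief, Single-Reset suffers from one bad latent state whose effect lingers, while Repeated-Reset re-injects the error at every step and predicts an event at every time step.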

The previous sections may leave the impression that latent state-based selection is superior to observation-based selection, other than a minor disadvantage regarding redundant latent variables. Below we study a motivating scenario mentioned earlier, where it is beneficial to integrate both latent state-based selection and observation-based selection into the solution and exploit the strength of each.

Problem Setup. Consider the problem of learning from real-system data. Let $\Gamma_\star$ be a real system, from which we draw observable trajectories with policy $\pi_b$. The goal is to use these data trajectories to select, among candidate simulators $\{\Gamma_k\}_{k=1}^{K}$, the one that best matches the dynamics of the real system, and as before we assume realizability, i.e., $\Gamma_\star \in \{\Gamma_k\}$. In a way, this is essentially a model estimation problem in POMDPs, and the standard approach (as mentioned earlier in Section 4) is MLE (Liu et al., 2022):

where all variables with (j) subscript come from the j-th data trajectory.

Unfortunately, this solution is not directly applicable to our setting, since we do not assume probability mass/density access to $P$ or $E$ in any given simulator $\Gamma$ and thus cannot compute the likelihood that MLE requires. Instead, we can directly leverage our observation-based selection (Section 4): while it was initially designed to select $M_\Gamma$ from $\{M_{\Gamma,b} : b \in \mathcal{B}\}$, the procedure and analyses immediately apply to the problem here, where we select $M_{\Gamma_\star}$ from $\{M_{\Gamma_k}\}$.

But that brings a further problem: observation-based selection requires efficient sampling access to the candidate conditionals, which are $M_\Gamma(o_{t+1} \mid \tau_t, a_t)$ for $\Gamma \in \{\Gamma_k\}$ here. That requires sampling access to the belief state in $\Gamma$ (Eq.(4)), which is not available. However, that is exactly the problem we have been dealing with so far! So let’s assume that we are given $\mathcal{B}$,

Invoking the analyses in Corollaries 2 and 6, we immediately have,⁷ for the selected $(\Gamma, b)$ pair,

where $\epsilon_0$ can be determined by the number of data trajectories from $\Gamma_\star$ through the sample-complexity statement in Theorem 1.⁸

The problem is, if we want to roll out trajectories using the selected $(\Gamma, b)$ to approximate $Q^{\pi_b}_{\Gamma_\star}$, the only valid approach is Repeated-Reset (Eq.(7)), and Single-Reset will not enjoy any guarantee given Example 1. However, Repeated-Reset is computationally costly, especially when sampling from $b$ has a nontrivial cost, and in practice Repeated-Reset is often a bad idea given the repeated injection of the error of $b \ne b^\star$ (Figure 1). This raises the question: can we enjoy a guarantee similar to Eq.(7) while rolling out from the selected $(\Gamma, b)$ using Single-Reset?

Two-Stage Solution. We now show that the natural two-stage solution achieves the goal if we use latent state-based selection in the first stage (selecting $b$ for $\Gamma$). The analysis turns out to be somewhat nontrivial, and we provide it below.

5. The candidate set $\mathcal{B}$ can be designed for each $\Gamma \in \{\Gamma_k\}$ separately, but we assume $\mathcal{B}$ is the same across candidate simulators for ease of presentation.

6. We still use $b^\star$ to refer to the true belief state of the simulator $\Gamma$ under consideration. Note that we do not need to refer to the belief state of the real system $\Gamma_\star$ and hence do not give it a notation.

7. We relax $(H - t)$ in Corollary 6 to $H$ here for readability.

8. Concretely, to achieve $\epsilon_0$ error, the number of trajectories needed is $O(\log(mK/\delta)/(\alpha^2 \epsilon_0^2))$, where $m$ and $K$ are the sizes of $\mathcal{B}$ and $\{\Gamma_k\}_{k=1}^{K}$, respectively.

Theorem 7. Assume that the selected (Γ, b) satisfies

Then

The conditions of the theorem are the guarantees of latent state-based selection in Stage 1 (Eq.(3)) and observation-based selection in Stage 2 (Eq.(5) when Γ⋆ is the ground truth).⁹ The final guarantee resembles Eq.(7), except that it permits the use of Single-Reset roll-out. The error bound is slightly worse than Eq.(7) by a multiplicative constant and an additional dependence on ϵ_1. However, note that ϵ_0 is determined by the amount of real-system data, which is often fixed, while ϵ_1 is determined by the amount of data sampled from each simulator Γ. So overall the guarantee is still comparable to Eq.(7).

Proof of Theorem 7. Eq.( 8) implies that

Both this inequality and Eq.(9), through the sub-additivity of TV distance for product distributions, imply

because we can use Q^{π_b}_Γ as the bridge term, and both Q^{π_b}_{1-Reset(Γ,b)} and Q^{π_b}_{M_{Γ,b}} are close to it thanks to Proposition 4 and Corollary 6. On the other hand, Eq.(9) enables the following through Corollary 6:

Finally, putting everything together:

where the third line changes the distribution from Γ_⋆^{π_b} to Γ^{π_b} by paying for the TV distance multiplied by the bound on the function.
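For reference, the two standard facts about TV distance that the proof invokes (sub-additivity over product distributions, and the cost of changing the distribution under which a bounded function is averaged) can be written as below. This is a sketch under the assumption that TV is normalized as half the L1 distance; the normalization used in the paper is not visible in this section, and P_i, Q_i, and f are generic placeholders rather than the paper's specific objects.

```latex
% Assumed convention: TV(P, Q) = (1/2) * ||P - Q||_1.
\begin{align*}
\mathrm{TV}\big(P_1 \otimes P_2,\; Q_1 \otimes Q_2\big)
  &\le \mathrm{TV}(P_1, Q_1) + \mathrm{TV}(P_2, Q_2), \\
\big|\,\mathbb{E}_{x \sim P}[f(x)] - \mathbb{E}_{x \sim Q}[f(x)]\,\big|
  &\le \big(\sup f - \inf f\big)\,\mathrm{TV}(P, Q).
\end{align*}
```

Under a different normalization of TV, the second inequality changes only by a constant factor.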

The roll-out guarantees in Theorem 1 and Corollary 2 all consider Q-function errors under the distribution (τ_t, a_t) ∼ Γ^{π_b}, under which we train the classifiers to select the belief-state approximation, and Corollary 2 further restricts the roll-out policy to be π = π_b. Naturally, one would wonder what happens when the error is measured under a different sampling distribution (e.g., the occupancy induced by a different roll-in policy π′), and when Repeated-Reset is given a general roll-out policy π ≠ π_b. These questions are well understood in the MDP literature (especially offline RL theory): we can pay some form of coverage coefficient to translate the error from one distribution to another.

Proposition 8. Consider an MDP with transition P.

- (Single-Reset, extension of Proposition 4) Given any Q : S_t × A → R where

where ρ_t^{(•)} is the marginal distribution (a.k.a. occupancy) of (s_t, a_t) induced by a policy in P.

- (Repeated-Reset, extension of Proposition 2) Consider another MDP with transition P′ where Eq.(6) holds for all t. Let (π′)^t • (π)^{t′−t} be the policy that follows π′ for the first t steps and π for the next t′ − t steps; then

These results can be directly applied to POMDPs by mapping the state s_t in the proposition to the observable history τ_t, P to the observable dynamics of Γ (i.e., M_{Γ,b⋆}), Q to Q^π_{1-Reset(Γ,b)}, and P′ to M_{Γ,b}. However, while the coverage coefficients that appear in the proposition are often acceptable in MDPs, their behaviors are not as benign in POMDPs: for example, Eq.(10) becomes

where P^{π′}_Γ[τ_t, a_t] is the probability assigned to the partial trajectory (τ_t, a_t) in Γ under π′ as the sampling policy, and

is the infamous cumulative product of importance weights found in importance sampling (Precup et al., 2000). This is actually a general problem whenever we apply MDP results to POMDPs via a reduction to history-based MDPs, and circumventing it often requires algorithms and coverage concepts specifically designed for POMDPs (Zhang and Jiang, 2024). It remains an interesting question whether those ideas (such as the notion of belief & outcome coverage proposed by Zhang and Jiang (2024)) are useful for the belief-state selection problem considered in this paper.
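To make the cumulative product of importance weights concrete, here is a minimal sketch. It assumes both policies are run in the same simulator Γ, so that the dynamics cancel in the ratio of trajectory probabilities and only per-step action probabilities remain. The function names and the two toy policies are hypothetical and used only for illustration.

```python
def cumulative_importance_weight(steps, pi_target, pi_behavior):
    """Product of per-step ratios pi_target(a | tau) / pi_behavior(a | tau).

    `steps` is a list of (tau, a) pairs along one partial trajectory.
    Both policies are assumed to act in the same simulator, so the
    transition and emission probabilities cancel in the ratio.
    """
    weight = 1.0
    for tau, a in steps:
        weight *= pi_target(tau, a) / pi_behavior(tau, a)
    return weight


# Hypothetical policies over two actions {0, 1}, for illustration only.
def uniform_policy(tau, a):
    return 0.5


def always_zero_policy(tau, a):
    return 1.0 if a == 0 else 0.0


def all_zero_trajectory(t):
    """A length-t partial trajectory in which action 0 is taken at every step."""
    return [(("o",) * (k + 1), 0) for k in range(t)]
```

With these toy policies the weight doubles at every step on trajectories the target policy can generate, which illustrates why the coefficient can grow exponentially in t when histories play the role of states.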

Generalization via Sufficient Statistics A mitigation to the above problem is to make and leverage structural assumptions on b. In particular, we may assume that b(•|τ t ) is generated via a two-stage procedure:

That is, we first compute the sufficient statistic z_t of τ_t via a function ϕ_b, and then sample s_t conditioned on z_t. (With a slight abuse of notation we reuse b for the conditional distribution in the second stage.) As a start, if z_t is a discrete variable and the correct ϕ_{b⋆} is known, i.e., ϕ_b = ϕ_{b⋆} ∀b ∈ B, we can improve the guarantee of latent state-based selection in Eq.(11) to the following (see proof in Appendix C):


Therefore, if z_t takes on a small number of values, there is hope that π_b may induce an exploratory distribution over z_t and cover the distribution under π′. The result can also be easily extended to the case of unknown ϕ_{b⋆} (i.e., ϕ_b can be different for each b), as we can simply replace z_t in the above bound with the pair (ϕ_b(τ_t), ϕ_{b⋆}(τ_t)). In this case, the bound requires π_b to induce an exploratory joint distribution over the pair of statistics. For continuous-valued ϕ_b, favorable coverage guarantees might still be obtainable if structural assumptions are imposed on the b(•|ϕ_b) process.
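The two-stage structure of b described above (compute a statistic z_t = ϕ_b(τ_t), then sample s_t conditioned on z_t) can be sketched as follows. This is only an illustration: the choice of statistic (the last observation), the table of conditional probabilities, and all names are hypothetical assumptions, not taken from the paper.

```python
import random


def phi_last_observation(tau):
    """Hypothetical statistic phi_b: keep only the last observation in tau."""
    return tau[-1]


# Hypothetical second-stage conditional b(s | z) over two latent states.
B_GIVEN_Z = {0: [0.7, 0.3], 1: [0.2, 0.8]}


def sample_belief(tau, rng, phi=phi_last_observation, table=B_GIVEN_Z):
    """Two-stage sampler: z = phi(tau), then s ~ b(. | z)."""
    z = phi(tau)
    probs = table[z]
    return rng.choices(range(len(probs)), weights=probs)[0]
```

Because the sampler depends on τ_t only through z_t, any two histories that map to the same z_t share one conditional distribution, which is what lets coverage be measured over the (small) set of z_t values instead of over full histories.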

Task-specific Approaches Another route to circumvent the coverage issue is to take approaches specific to the task at hand. As an example, for the most basic task of policy evaluation (i.e., estimating Q^π_Γ for a given π), there are simple regression-based methods¹⁰ and selection algorithms based on estimating some variant of the Bellman error. For the latter, the coverage guarantee often does not depend on the coverage in the original MDP, but on that in an MDP compressed through a low-dimensional representation related to the candidate Q-functions (Xie and Jiang, 2021; Zhang and Jiang, 2021; Liu et al., 2025). Such a deviation from the original dynamics may be a desirable property for POMDPs when the coverage in the original dynamics is not well-behaved.
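As an illustration of the regression-based route, the sketch below scores candidate Q-functions by their mean squared error against Monte-Carlo returns and picks the smallest, following the description in the accompanying footnote (each trajectory yields a data point mapping (τ_t, a_t) to the sum of subsequent rewards). The data here are synthetic, and `q_true`, `q_biased`, and the noise pattern are hypothetical stand-ins for returns sampled from a simulator.

```python
def mean_squared_error(q, data):
    """Average squared gap between q(tau, a) and the observed return."""
    return sum((q(tau, a) - g) ** 2 for (tau, a), g in data) / len(data)


def select_by_regression(candidates, data):
    """Return the name of the candidate with the least MSE, plus all scores."""
    scores = {name: mean_squared_error(q, data) for name, q in candidates.items()}
    best = min(scores, key=scores.get)
    return best, scores


# Hypothetical candidates: one matches the mean return, one is biased.
def q_true(tau, a):
    return 0.5 + a


def q_biased(tau, a):
    return q_true(tau, a) + 0.3


# Synthetic returns: true mean plus zero-mean noise of magnitude 0.5.
data = []
for i in range(100):
    a = i % 2
    noise = 0.5 if (i // 2) % 2 == 0 else -0.5
    data.append((((0,), a), q_true((0,), a) + noise))

best, scores = select_by_regression({"q_true": q_true, "q_biased": q_biased}, data)
```

The noise inflates both scores by the same amount, so the ranking is driven by the bias of each candidate, which is why the conditional-mean regressor wins.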

For most of the paper, we assume that π_b, which is used to collect the data needed for the selection of the conditional distributions (Section 3), is given. In practice, the choice of π_b is an important hyperparameter with nuanced effects, of which we already had a glimpse in Section 5.3: while we choose to select belief-state approximations in each simulator Γ by using the same policy π_b as the one used to sample the real-system data, the analysis needs to handle the mismatch between the roll-in distributions of Γ^{π_b} and Γ_⋆^{π_b}, which shows up in the final error bound. While this mismatch is shown to be controlled by (ϵ_0 + ϵ_1), there is the possibility of using a different roll-in policy π′_b in simulators such that Γ^{π′_b} may be a better approximation of Γ_⋆^{π_b} than Γ^{π_b} is. The issue is further complicated when there is misspecification in {Γ_k} and B, which we leave for future investigation.

Another important motivating scenario for selecting b is to use it for learning a good policy in the simulator. In this case, we want b to be accurate not just under some fixed distributions, but throughout the learning process when we explore using different policies. A naïve approach is to separately optimize one policy for each candidate b ∈ B, and then roll out these policies in the simulator to find the best-performing one. However, given the computational intensity of policy optimization, an interesting question is whether we can adjust the choice of b as policy optimization unfolds and avoid performing m = |B| separate policy optimization processes.

where the last step uses the fact that π = π b , and inductively expanding the analysis till the end proves the theorem statement.

This observation-based selection approach reflects a prevailing theme in RL research on POMDPs, namely behavioral equivalence, that the observable behavior of a POMDP is what ultimately matters, and latent states are ungrounded objects and only a convenient way to help express the observable behavior (e.g., the latent state transition P and emission E are a convenient way to parameterize the observable dynamics M Γ ). This philosophy is most pronounced in the research of Predictive State Representations (Littman

Input: τ_t, a_t, π.

1. Sample s_t ∼ b(•|τ_t).
2. Take the given action a_t, and generate s_{t+1} ∼ P(•|s_t, a_t), o_{t+1} ∼ E(•|s_{t+1}).
3. Replace s_{t+1} with a fresh sample from b(•|τ_{t+1}), where τ_{t+1} = τ_t • a_t • o_{t+1}.
4. Repeat Steps 2 and 3 for subsequent time steps by taking actions according to π, and collect ∑_{t′=t+1}^{H} R(o_{t′}) as a Monte-Carlo return.
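The roll-out procedure above can be sketched in a few lines. This is a minimal illustration on a hypothetical tabular POMDP: the tables P, E, R, the approximate belief, and the policy are all made-up assumptions. Setting `repeated_reset=False` skips Step 3 and carries the simulated latent state forward, which we take here to correspond to Single-Reset.

```python
import random

# Hypothetical tabular POMDP: 2 latent states, 2 actions, 2 observations.
P = {(0, 0): [0.9, 0.1], (0, 1): [0.2, 0.8],
     (1, 0): [0.3, 0.7], (1, 1): [0.6, 0.4]}  # P[(s, a)] -> next-state probs
E = {0: [0.8, 0.2], 1: [0.1, 0.9]}             # E[s] -> observation probs
R = {0: 0.0, 1: 1.0}                           # reward as a function of o


def draw(probs, rng):
    """Sample an index according to the given probabilities."""
    return rng.choices(range(len(probs)), weights=probs)[0]


def approx_belief(tau):
    """Hypothetical approximate belief b(. | tau) using only the last observation."""
    return [0.7, 0.3] if tau[-1] == 0 else [0.2, 0.8]


def uniform_policy(tau, rng):
    """Hypothetical roll-out policy: pick one of the two actions uniformly."""
    return rng.randrange(2)


def rollout(tau, a, policy, belief, num_steps, rng, repeated_reset):
    """Monte-Carlo return from (tau, a); tau is a tuple ending in an observation."""
    s = draw(belief(tau), rng)            # Step 1: reset to a belief sample
    ret = 0.0
    for _ in range(num_steps):            # num_steps plays the role of H - t
        s = draw(P[(s, a)], rng)          # Step 2: latent transition
        o = draw(E[s], rng)               #         and emission
        tau = tau + (a, o)
        ret += R[o]
        if repeated_reset:
            s = draw(belief(tau), rng)    # Step 3: fresh belief sample
        a = policy(tau, rng)              # Step 4: next action from pi
    return ret
```

Averaging `rollout` over many calls with the same (τ_t, a_t) gives a Monte-Carlo estimate of the corresponding Q-value; each Repeated-Reset step costs one extra belief sample, which is the computational overhead noted in the text.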


The distribution also depends on ρ0 and the reward function R, but the different models we will consider often only differ in the transition, so we use the subscript to emphasize the dependence on transition.

Strictly speaking, 2-sample test is different from and arguably harder than the selection problem, since we can leverage the realizability assumption in selection.

The paradox is resolved by realizing that we rely on P and E for efficient sampling which ground the latent states.

There is a slight caveat in Stage 2’s guarantee due to the violation of realizability, i.e., after Stage 1, the true belief state of Γ_{k⋆} = Γ⋆ might have been eliminated. See Appendix D for further discussions.

That is, we can generate trajectories in Γ with π′ roll-in at time step t and π roll-out, and split each trajectory into a regression data point (τ_t, a_t) → ∑_{t′≥t} r_{t′}. Q^π_{1-Reset(Γ,b)} and Q^π_{M_{Γ,b}} are treated as candidate regressors, and the true Q^π_Γ has the least mean squared error.
