Learning the Value of Value Learning

📝 Original Info

- Title: Learning the Value of Value Learning

- ArXiv ID: 2511.17714

- Date: 2025-11-21

- Authors: Alex John London, Aydin Mohseni

📝 Abstract

Standard decision frameworks address uncertainty about facts but assume fixed options and values. We extend the Jeffrey-Bolker framework to model refinements in values and prove a value-of-information theorem for axiological refinement. In multi-agent settings, we establish that mutual refinement will characteristically transform zero-sum games into positive-sum interactions and yield Pareto-improvements in Nash bargaining. These results show that a framework of rational choice can be extended to model value refinement. By unifying epistemic and axiological refinement under a single formalism, we broaden the conceptual foundations of rational choice and illuminate the normative status of ethical deliberation.

📄 Full Content

To demonstrate that axiological refinement cannot be collapsed into purely epistemic refinement, we provide results that go beyond individual decision-making contexts and show that there is strategic, not merely epistemic, value from consulting one’s values. In particular, we demonstrate that in two-player zero-sum games, value refinement tends to transform interactions into ones that are positive-sum. Similarly, in Nash bargaining scenarios, value refinement tends to result in Pareto improvements for all involved parties. In these contexts, agents who refine their preferences systematically outperform naïve expected utility maximizers, both individually and collectively.

Our results are important on several levels. First, the most compelling arguments for the normative recommendation to maximize expected utility rest on the presumption that the agent in question has a stable set of values that can be represented by a unique (up to positive affine transformation) utility function. Yet actual agents (humans, machines, and institutions) typically fall short of idealized expected utility maximization. Agents’ preferences or choice behavior may be intransitive, incomplete, context dependent in ways that violate independence axioms, or otherwise inconsistent.

Further, agents frequently face uncertainty not just about external states of the world, but also about their own options and their valuation of those options. This latter challenge raises a significant practical and normative question: when should agents treat their current utility function and representation of the situation as authoritative, and act directly to maximize expected utility in light of those utilities, and when should they instead pause to reflect: to refine, revise, or better represent their options and values?

Second, although there is an intuitive sense in which ethical reflection is relevant to decision and game theory, this connection is often implicit. Our results make this connection explicit in a way that can be represented within the mathematical formalism of decision theory. This reveals an expanded scope for decision theory and also opens the door for more rigorous exploration of the interaction between epistemic and axiological uncertainty.

In §2, we discuss relevant previous work and situate our work in the literature. §3 introduces the Jeffrey-Bolker decision framework and details how we model value refinement. In §4, we prove a value-of-value-refinement theorem for a single agent. In §5, we show how refinement can sometimes dissolve apparent dilemmas involving incommensurable values. We extend the analysis to strategic settings in §6, demonstrating that mutual refinement turns zero-sum conflict into positive-sum cooperation, and in §7, where we derive guarantees of Pareto improvement in the context of Nash bargaining. In §8, we conclude with normative and methodological implications and outline directions for future work.

Good (1967) established a central result in decision theory: within the Savage framework, costless evidence cannot reduce a rational agent’s expected utility. Challenges to an unqualified reading of this result already appeared in strategic contexts: Hirshleifer (1971) showed that information can reduce welfare because others respond to its acquisition or disclosure, undermining the conditions that guarantee nonnegative information value. Subsequent decision-theoretic work clarified the theorem’s logical structure and limits. Skyrms (1990) highlighted its reliance on dynamic consistency, while Kadane, Schervish, and Seidenfeld (2008) showed how it can fail outside the classical Bayesian setting, including with improper or non-countably additive priors and imprecise credal states; Seidenfeld (2009) further identified act-state dependence as an independent source of failure. Even so, the core insight remains: under a broad and well-understood range of conditions, rational agents should expect to do better (or at least no worse) by acquiring information prior to action.

In contrast to the extensive scholarship on epistemic uncertainty, axiological uncertainty (uncertainty about the nature, content, or scope of one’s own values) remains underexplored, despite its prevalence in real-world decision-making for both humans and artificial agents. The existence of value uncertainty has been recognized by a few authors. For example, Dewey and Tufts (1936, pp. 172-176) distinguish cases of weakness of will, where agents struggle to act in conformity with their judgments of right and wrong, from cases where the agent is uncertain about how to reconcile their values in order to arrive at a determination about right and wrong. In this second case, they argue that what is required is not strength of will, but reflection and clarification of the values in question. On their view, such reflection is a core component of theorizing in ethics. But their work does not provide a formal model of what such reflection should look like or how agents should approach decisions in the face of such axiological uncertainty. Levi (1990) provides a formal model for decisions under value uncertainty. He identifies two sources: pragmatic constraints (insufficient time to reconcile values) and deep incommensurability (no unique tradeoff function exists). We add a third, normative source: some ethical theories hold that commensuration, though possible, is morally impermissible. Kantian theories, for instance, hold that treating rational agents as having a price violates their dignity (Bjorndahl et al. 2017).

Levi’s procedure requires agents to identify relevant values, entertain all convex combinations, and treat as admissible any act on or above the resulting surface. When multiple acts remain admissible, second-order values are applied iteratively. The resulting value structure is weaker than a utility function: agents acting on utility functions can be represented as employing a particular value structure, but Levi-rational choices need not correspond to any utility function.

Our approach differs. Levi models value inquiry as identifying relative weights among fixed values. We show that inquiry can alter values themselves, resolving intra-personal and interpersonal conflicts. Our results are sufficiently general to apply within Levi’s framework (demonstrated in §5) and extend to other approaches accommodating incommensurability, parity, or ambiguity.

The works closest in spirit to ours appear in two literatures. In rational choice and belief change, the most relevant are Bradley (2017), Steele & Stefánsson (2021), and Pettigrew (2024) on awareness growth. In game theory, the most relevant are Halpern and Rêgo (2009, 2012, 2013) on games with unaware agents, and Cyert and DeGroot (1975, 1979) on adaptive preferences.

Bradley (2017) models awareness growth through expansion and refinement operations that partition existing propositions or introduce new sample space elements. These operations obey reverse Bayesianism, preserving the agent’s original likelihood ratios. Bradley’s refinement process parallels ours; however, we extend refinement to decision-theoretic contexts involving utility uncertainty, not just probability uncertainty. This extension requires relaxing strict reverse Bayesianism while observing more general Bayesian reflection principles that we detail below.

Steele & Stefánsson (2021) propose two principles for awareness growth: awareness reflection and preference awareness reflection. Awareness reflection applies the law of total probability to awareness changes: an agent’s current credence should equal their expected credence conditional on anticipated awareness changes. Preference awareness reflection addresses utility uncertainty and requires that rational agents not expect their preference rankings to change. Our proposed reflection principles satisfy both constraints while providing a formal account of how awareness growth might be realized in individual and strategic settings.

Halpern and Rêgo (2009, 2012, 2013) model agents who are unaware of all actions potentially available to them, analyze the implications for solution concepts, and argue that representing such agents requires augmenting standard game-theoretic machinery. Whereas they investigate what happens when agents cannot represent their options, we investigate when it is worthwhile for agents to refine their representation of options and evaluations, and what rational principles constrain this process.

Cyert and DeGroot (1975, 1979) provide models of adaptive preferences that treat uncertainty about one’s values as a special case of parametric inference: agents’ values for a good are given by an unknown parameter about which they learn via Bayesian updating. Our focus differs: we examine how refinement of one’s decision representation, uncertainty about value, and rational principles jointly determine when to engage in refinement.

Finally, our results are distinct from problems of diachronic coherence, including how “transformative” experiences can alter agents’ preferences (Ullmann-Margalit 2006; Paul 2014; Pettigrew 2015, 2019). The changes we model arise not from preference transformation but from more precise specification of commitments that an agent already endorses within particular decision contexts.

Value of information guarantees break down in game-theoretic contexts where act-state independence fails (Skyrms 1985). When actions alter the relative probabilities of the realization states, additional information can decrease expected utility. Given this, one might conclude that value refinements offer no advantage in strategic settings. We demonstrate otherwise: learning about one’s values yields substantial benefits in game-theoretic contexts of extreme conflict.

A key question for decision theory asks when limited agents can implement expected utility maximization. Multiple arguments converge on this normative recommendation. Representation theorems (von Neumann and Morgenstern 1944;Savage 1954;Jeffrey 1965) show that if an agent’s choices satisfy fundamental axioms, their preferences can be represented by a unique utility function (up to positive affine transformation) coupled with a credence function satisfying probability axioms. Dutch book arguments (Ramsey 1931;de Finetti 1937;Kemeny 1955) prove agents violate probability axioms if and only if they accept guaranteed losses in fair bets. Accuracy arguments (Joyce 1998;Pettigrew 2016;Schoenfield 2017) establish that credences violating probability axioms are weakly dominated by conforming ones. Consequentialist arguments (Hammond 1988;McClennen 1990) demonstrate that maximizing expected utility in sequential decisions produces outcomes optimal by the agent’s standards.

When bounded agents can implement these recommendations remains unclear. Research on bounded rationality (Simon 1955; Gigerenzer and Selten 2002; Kahneman 2003) explores various departures from idealized rationality. We address a more focused question: what if an agent is uncertain about their values and can act to refine that understanding? We explore conditions under which they should refine their understanding of the situation and their values (in individual decisions, non-cooperative interactions, and Nash bargaining) before maximizing expected utility. Moreover, such refinements alter the way an agent represents the decision problem to be addressed. This connection between value specification and problem representation offers an important insight into the role that values play in problem formulation.

The Jeffrey-Bolker decision framework (Jeffrey 1965;Bolker 1966) suits this project well. Unlike Savage’s (1954) separation of states, acts, and outcomes, Jeffrey-Bolker employs propositions as the fundamental evaluation unit. Its atomless Boolean algebra structure allows preferences to be defined over propositions directly, with probability and desirability assigned to the same entities. This facilitates refinement operations where agents subdivide coarse propositions into finer ones as understanding develops. Because propositions in the algebra can be refined in well-defined ways, the framework’s flexibility makes it ideal for modeling epistemic and axiological refinements. That said, we conjecture that analogous results to those presented here can be proven in the decision frameworks of Savage, von Neumann-Morgenstern, and others.

3.1. A Fruitful Vignette: The Arborist and the Baker. We begin with a vignette to illustrate value refinement. Consider an arborist and a baker who jointly discover a rare orange in the wild. Both claim the fruit and find themselves in conflict over the prize.

Initially, each sees two possibilities: cooperate and divide the orange in some fashion, or fight over the whole thing at the risk of getting none. Given their current understanding, the situation appears like a zero-sum contest for a constrained resource-any gain for one party constitutes a loss for the other.

Before proceeding, both parties step back and refine their representation of the situation. In practice, this might occur through reflection, introspection, conversation, or third-party counsel. Through this process, they discover that “divide the orange” actually contains distinct possibilities they had not initially distinguished. The arborist, they realize, primarily values the fruit for its seeds-a means of propagating a rare specimen. The baker values the rind for its zest in specialty pastries.

This refinement transforms how they conceptualize the available options. Where before they saw only “zero-sum split” versus “conflict,” they now distinguish between different qualitatively distinct ways of dividing the resource: one where the arborist gets the seeds and the baker gets the rind, another where these assignments are reversed, and the original option of conflict.

After refinement, the picture changes. Both parties now see that one particular division (arborist takes seeds, baker takes rind) is strictly better than their initial conception of generic cooperation, which itself seemed no better than conflict. This sort of refinement can transform what appeared to be a zero-sum conflict into a positive-sum arrangement: each party can expect a better outcome without leaving the other worse off.

This vignette illustrates the qualitative dynamics of one of our central results: when agents refine their options and valuations, they can reveal unrecognized dimensions of value and transform perceived zero-sum interactions into positive-sum arrangements, systematically improving both individual and collective welfare. To make this case with greater precision, we begin with value refinement for a single agent and progress carefully to strategic interactions.

3.2. The Jeffrey-Bolker Decision Framework. We employ the Jeffrey-Bolker decision framework (Jeffrey 1965; Bolker 1966). Bolker’s representation theorem establishes that if an agent’s preferences over propositions satisfy standard rationality conditions (ordering, averaging, impartiality, and continuity), then there exist a finitely additive probability measure $P : \mathcal{A} \to [0, 1]$ and utility function $U : \mathcal{A} \setminus \{\bot\} \to \mathbb{R}$ representing those preferences.⁴

The Jeffrey-Bolker decision framework has several features that make it well-suited to modeling value refinement. First, in Jeffrey-Bolker, acts, states, and consequences are all propositions in a single Boolean algebra. This allows the agent to assign probabilities to propositions about their own acts. This is essential to represent uncertainty about the structure of fine-grained actions that may result from refining their algebra. Collapsing the state/act/outcome distinction streamlines the analysis of value refinement, because every potential more detailed description of an option is already present in the algebra.

An agent’s representation of a decision situation is a tuple $\langle \mathcal{A}, \mathbf{A}, U, P \rangle$: an algebra $\mathcal{A}$ of relevant possibilities they are entertaining; a partition $\mathbf{A} \subseteq \mathcal{A}$ encoding their act partition; and functions $U$ and $P$ encoding their utilities and credences over $\mathcal{A}$. The agents may have coarser or finer representations $\mathcal{A}_0 \subset \mathcal{A}_1 \subset \mathcal{A}$.

Let $\mathcal{A}' = \mathcal{A} \setminus \{\bot\}$ denote the non-empty elements. $\mathcal{A}'$ is atomless if for all $x \in \mathcal{A}'$, there exists $y \in \mathcal{A}'$ with $y \neq x$ and $y \subseteq x$. $\mathcal{A}$ is complete if every subset has a supremum and infimum in $\mathcal{A}$. Atomlessness guarantees arbitrary precision: any $x \in \mathcal{A}'$ can be split into $x \wedge y$ and $x \wedge \neg y$, or more generally decomposed into finite partitions $x \equiv \bigvee_{i=1}^{n} y_i$. Since actions are propositions in such an algebra, the elements of any refinement of an agent’s act partition are sensible objects of belief and value for that agent; that is, they lie in the domain of the credence and utility functions.

3.3. Modeling Value Refinement. We extend the Jeffrey-Bolker framework by introducing a refinement operation on an agent’s representation of a decision problem. We begin with an idealized benchmark, then develop the machinery for bounded agents who can refine their understanding and valuations of the actions available to them.

3.3.1. The Unbounded Benchmark. Consider an unbounded agent who has evaluated all possible refinements. Their complete representation is $D = \langle \mathcal{A}, \mathbf{A}, P, U \rangle$, where $\mathcal{A}$ is a complete, atomless Boolean algebra containing all conceivable propositions; $\mathbf{A}$ is an act partition including all maximally fine-grained acts;⁵ and $P : \mathcal{A} \to [0, 1]$ and $U : \mathcal{A}' \to \mathbb{R}$ are fully specified.⁶

4 Unique up to positive affine transformation of U with compensating positive scalar rescaling of P.

5 We consider the finite case, though one could extend to infinite act partitions (e.g., proper filters or ultrafilters).

6 While an agent’s algebra may be atomless, their act partition may be finite-reflecting a maximum level of precision in the control they can exercise, even if they can entertain finer distinctions regarding states.

Figure 1. A binary refinement of act $A \in \mathbf{A}_0$. The initial act partition $\mathbf{A}_0$ consists of acts $A$ and $\neg A$. Refinement produces $\mathbf{A}_1$ by splitting $A$ into the more fine-grained acts $A \wedge B_1$ and $A \wedge B_2$.

3.3.2. The Bounded Agent. The agents we study have not undergone arbitrary refinement. Their initial representation is $D_0 = \langle \mathcal{A}_0, \mathbf{A}_0, P_0, U_0 \rangle$, where $\mathcal{A}_0 \subset \mathcal{A}$ is a coarse-grained subalgebra; $\mathbf{A}_0$ is the maximally fine-grained coarsening of $\mathbf{A}$ within $\mathcal{A}_0$; and $P_0$ and $U_0$ are restricted to $\mathcal{A}_0$ and $\mathcal{A}'_0$, respectively. How does such an agent refine their understanding of the acts in $\mathbf{A}_0$ and reason about propositions in $\mathcal{A} \setminus \mathcal{A}_0$ that they have not yet considered?

3.3.3. Refinement Operations. We model bounded agents as exploring their decision problem by considering available acts in greater detail. An agent takes a given act $A$ and considers distinct ways it might be realized with respect to previously unconsidered propositions $B_1$ and $B_2$. For example, our arborist might refine “split the resource” into “split the resource and give the rind to the baker” and “split the resource and give the fruit to the baker.” This is modeled as follows.

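Definition 1 (Binary Refinement). Given an act partition $\mathbf{A}_0$ and $A \in \mathbf{A}_0$, a binary refinement of $A$ produces the partition

$$\mathbf{A}_1 = (\mathbf{A}_0 \setminus \{A\}) \cup \{A \wedge B_1, A \wedge B_2\},$$

where $B_1, B_2 \in \mathcal{A} \setminus \mathcal{A}_0$ and the acts $A \wedge B_1$ and $A \wedge B_2$ together partition $A$.

Since any k-ary refinement decomposes into sequential binary refinements, we focus on the binary case throughout.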
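The refinement operation can be sketched in code. The following is a minimal illustration, not the paper's formalism: it assumes a finite set of "worlds" as a stand-in for the atomless algebra, represents each act as a `frozenset` of worlds, and uses the hypothetical helper names `binary_refine` and `k_ary_refine`. It also shows how a k-ary split reduces to k - 1 sequential binary splits.

```python
from typing import FrozenSet, List, Sequence

Act = FrozenSet[int]  # assumption: an act is the finite set of worlds where it holds


def binary_refine(partition: List[Act], act: Act, b: Act) -> List[Act]:
    """Split `act` into (act and b) and (act and not b); other acts are unchanged."""
    if act not in partition:
        raise ValueError("act must belong to the current partition")
    inside, outside = act & b, act - b
    if not inside or not outside:
        raise ValueError("b must split the act into two non-empty parts")
    return [a for a in partition if a != act] + [inside, outside]


def k_ary_refine(partition: List[Act], act: Act, cells: Sequence[Act]) -> List[Act]:
    """Split `act` into the given cells using len(cells) - 1 binary refinements."""
    remaining = act
    for cell in cells[:-1]:
        # Peel off one cell; what is left is refined again on the next pass.
        partition = binary_refine(partition, remaining, cell)
        remaining = remaining - cell
    return partition


A = frozenset({0, 1, 2, 3})
NOT_A = frozenset({4, 5})
refined = binary_refine([A, NOT_A], A, frozenset({0, 1}))
```

Here `refined` contains `NOT_A` together with the two finer acts `{0, 1}` and `{2, 3}`, mirroring the passage from the coarse partition to the refined one.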

One can also model the addition of wholly unconsidered acts via a catch-all proposition A CA ∈ A 0 representing acts not yet contemplated (Walker 2013;Balocchi et al. 2025). Expansion of the act partition then becomes a special case of refinement-refinement of the catch-all.

Refinement. An agent contemplating refinement faces a decision under uncertainty: they must estimate refinement’s value without knowing what distinctions they will discover. To model this meta-uncertainty, we equip the agent, for each act $A$, with a probability space $(\Omega, \mathcal{F}, \mu_A)$, where each $\omega \in \Omega$ represents one possible refinement outcome for act $A$: one way their credences and utilities might settle after careful consideration.

Define the refinement outcome mapping $\xi$ by

$$\xi(\omega) = (u_1(\omega), u_2(\omega), p_1(\omega), p_2(\omega)),$$

where $u_i(\omega) = U_1^{\omega}(A \wedge B_i)$ is the utility assigned to the i-th refined act in state $\omega$, and $p_i(\omega) = P_1^{\omega}(A \wedge B_i)$ is the corresponding credence. The agent’s beliefs about refinement outcomes are captured by the distribution that $\xi$ induces under $\mu_A$.

As refinement may lead the agent to revise P (A) from P 0 (A) to p 1 (ω) + p 2 (ω), maintaining probabilistic coherence requires rescaling. Post-refinement probabilities and utilities satisfy:

where for any

and the normalizing factor

We assume $\mu_A(\{p_1 + p_2 \in (0, 1)\}) = 1$; the agent does not expect refinement to reveal that $A$ is impossible or certain. We do not require $P_1(A) = P_0(A)$; refinement may reveal that the original act is more or less probable than initially believed.⁹ Moving forward, we denote the conditional probability $q = \frac{p_1}{p_1 + p_2} = P_1(B_1 \mid A)$, representing the post-refinement probability of $B_1$ given $A$, which is well-defined as we assume $p_1 + p_2 > 0$ almost surely.

This framework enables ex ante reasoning about refinement’s value. Defining V i (X) = P i (X) • U i (X), the agent can compute:

That is, the agent can reason about the expected value of their best option after refinement and compare it to their current best option.

3.3.5. The Refinement Reflection Principle. An agent must decide whether to refine their understanding of an option. To do so, there must be some structure constraining their expectations regarding this process. To this end, we impose two conditions: a reflection principle relating current valuations to expected post-refinement valuations, and a non-triviality assumption ensuring genuine uncertainty.

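Definition 2 (Refinement Reflection Principle). For $A \in \mathbf{A}_0$ and binary refinement $R_A = \{A \wedge B_1, A \wedge B_2\}$, a rational agent’s valuation obeys:

$$U_0(A) = \mathbb{E}_{\mu_A}\big[\, q\, u_1 + (1 - q)\, u_2 \,\big],$$

where $(u_1, u_2, q) \sim \mu_A$.¹⁰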

9 Though, as we will see, rationality requires E[p1 + p2] = P0(A); probability is preserved in expectation.

10 Recall that $q = \frac{p_1}{p_1 + p_2}$, where $p_1 + p_2 > 0$.

The refinement reflection principle (RRP) requires that an agent’s current valuation of $A$ equal their expected valuation after more careful consideration. It rules out the case where an agent expects that an act will turn out better (or worse) than the value they currently assign it. This parallels classical reflection principles where current credence equals expected future credence (van Fraassen 1984; Greaves and Wallace 2006; Huttegger 2013).¹¹ The RRP generalizes to k-ary refinements: $U_0(A) = \mathbb{E}_{\mu_A}\big[\sum_{i=1}^{k} q_i u_i\big]$, where $q_i = p_i / \sum_j p_j$ are the conditional probabilities $P_1(B_i \mid A)$.
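As a concrete check of the RRP, the sketch below evaluates the expectation of q·u1 + (1 - q)·u2 over a small finite distribution. The distribution `MU_A` and its numbers are purely hypothetical illustrations, and the function names are ours; exact rational arithmetic is used so that the equality test is meaningful.

```python
from fractions import Fraction as F

# Hypothetical finite support for mu_A; each entry is (probability, u1, u2, q).
MU_A = [
    (F(1, 2), F(4), F(0), F(1, 2)),  # refinement reveals a strong first component
    (F(1, 2), F(1), F(3), F(1, 2)),  # refinement reveals a strong second component
]


def expected_post_refinement_value(mu):
    """E[q * u1 + (1 - q) * u2] under a finite distribution mu."""
    return sum(w * (q * u1 + (1 - q) * u2) for w, u1, u2, q in mu)


def satisfies_rrp(u0, mu):
    """True when the current valuation u0 equals the expected refined valuation."""
    return expected_post_refinement_value(mu) == u0
```

With these illustrative numbers the expected post-refinement valuation is 2, so a current valuation of U0(A) = 2 respects the RRP, while a valuation of 3 would mean the agent expects refinement to lower the act's value.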

Our second condition ensures genuine uncertainty about refinement outcomes:

Assumption 3 (Refinement Uncertainty). An agent has refinement uncertainty regarding an act $A$ if their credence $\mu_A$ over the outcomes of refinement $(u_1, u_2, p_1, p_2)$ satisfies:

$$\mu_A(\{u_1 \neq u_2\}) > 0.$$

An agent satisfying this condition considers it possible that refinement reveals something new. In particular, they think that it is possible that the refined acts differ in value from one another, $u_1 \neq u_2$, which implies that they think it is possible that at least one differs in value from $U_0(A)$ as well.

Together, these conditions ensure positive probability of discovering strict improvements. If an agent has genuine uncertainty about refinement outcomes and their current valuation reflects expected post-refinement valuation, then with positive probability they identify superior options and, being rational, select them.

We now apply our framework to analyze value refinement for a single agent. Recall: an agent with decision problem D 0 = ⟨A 0 , A 0 , P 0 , U 0 ⟩ maintains uncertainty about how their act valuations decompose when refined, captured by distributions {µ A } A∈A 0 constrained by the RRP.

Before the formal result, consider why refinement generates expected value. When an agent chooses a coarse-grained act A, they commit to whatever mixture of outcomes that act represents, like buying a bundle without knowing exactly what’s inside. Refinement unbundles the act, allowing them to see the finer components and choose among them. Since they can select the best component rather than accepting the average, their expected utility improves.¹² (See Figure 2.)

Consider an agent with act partition

11 See Dorst (2024) for recent critical discussion of reflection principles.

12 This parallels option value: refinement transforms a commitment to an average into a choice among components.

Figure 2. Refinement transforms commitment to an average of a coarse-grained bundle into the ability to select the best component among its fine-grained elements. Under RRP and refinement uncertainty, $\mathbb{E}_{\mu_A}[\max\{u_1, u_2\}] > \mathbb{E}_{\mu_A}[q u_1 + (1 - q) u_2] = U_0(A)$: the expected maximum of a non-uniform bundle exceeds its expected mean.

Theorem 4 (Value of Value Refinement). Consider an agent with decision problem $D_0 = \langle \mathcal{A}_0, \mathbf{A}_0, P_0, U_0 \rangle$ and a rationalizable act $A^* \in \arg\max_{A \in \mathbf{A}_0} U_0(A)$. Let the agent’s uncertainty about refinement outcomes be captured by $\mu_{A^*}$ over $(u_1, u_2, q) \in \mathbb{R}^2 \times (0, 1)$, satisfying RRP and refinement uncertainty. Then the expected value of refinement is strictly positive:

$$\mathbb{E}_{\mu_{A^*}}[\max\{u_1, u_2\}] - U_0(A^*) > 0.$$

Refinement uncertainty ensures the agent assigns positive probability to learning something new, that is, to $U_1(A^*) \neq U_0(A^*)$. The RRP ensures their current valuation equals their expected post-refinement valuation. Together, these imply positive probability that some refined act improves on the original. What refinement adds is not a predictable change in average value, but finer-grained choice and the consequent potential to select above-average realizations.
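The gap between selecting the best component and accepting the average can be computed directly. The sketch below is an illustration under assumptions: the two finite distributions are hypothetical, the function name `value_of_refinement` is ours, and the agent is assumed to pick the better refined act once refinement resolves.

```python
from fractions import Fraction as F


def value_of_refinement(mu):
    """E[max(u1, u2)] minus E[q*u1 + (1-q)*u2] for a finite distribution mu.

    Each entry of mu is (probability, u1, u2, q). Under the RRP the second
    term equals the current valuation U0 of the unrefined act.
    """
    expected_best = sum(w * max(u1, u2) for w, u1, u2, q in mu)
    expected_mean = sum(w * (q * u1 + (1 - q) * u2) for w, u1, u2, q in mu)
    return expected_best - expected_mean


# Hypothetical case with refinement uncertainty: u1 != u2 with positive probability.
UNCERTAIN = [
    (F(1, 2), F(4), F(0), F(1, 2)),
    (F(1, 2), F(1), F(3), F(1, 2)),
]

# Hypothetical degenerate case: the refined acts never differ in value.
DEGENERATE = [(F(1), F(2), F(2), F(1, 2))]
```

In the first case the expected gain is 3/2; in the degenerate case it is exactly 0, which is why the refinement uncertainty assumption is needed for a strict inequality.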

The framework extends naturally to sequential refinement:

Corollary 5 (Monotonicity of Refinement Value). Consider successive refinements

If each refinement satisfies the conditions of Theorem 4, then

The preceding theorem and its corollary assume refinement is costless. In practice, refinement requires time and cognitive effort. Suppose each refinement incurs cost c > 0, and let ∆

This captures diminishing marginal value as the agent exhausts the most valuable distinctions.

Theorem 7 (Optimal Refinement with Fixed Costs). Consider sequential refinements each costing $c > 0$. Let $\Delta_{R_i}$ denote the expected marginal gain from the i-th refinement, and suppose $\{\Delta_{R_i}\}_{i \in \mathbb{N}}$ exhibits vanishing returns. Then:

(i) If $c > \Delta_{R_0}$, the optimal policy is to never refine.

(ii) If $c \le \Delta_{R_0}$, the optimal stopping time is $t^* = \max\{t \in \mathbb{N} : \Delta_{R_t} \ge c\}$, and the agent performs refinements $0, 1, \ldots, t^*$, yielding net gain

$$\sum_{i=0}^{t^*} (\Delta_{R_i} - c) \ge 0,$$

with strict inequality when $c < \Delta_{R_0}$.

The policy is intuitive: refine while expected marginal gain exceeds cost; stop when it no longer does. This mirrors classic results in optimal search and information acquisition (Stigler 1961;McCall 1970;Lippman and McCall 1976;Mortensen 1986). Agents should be more likely to refine when stakes are high, costs low, or initial uncertainty large.
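The stopping rule can be sketched as a short loop. This is an illustration rather than the paper's construction: it assumes the expected marginal gains are supplied as a non-increasing sequence (our reading of "vanishing returns"), so that stopping at the first gain below cost coincides with the stopping time in the theorem. The function name and the example numbers are hypothetical.

```python
from typing import Sequence, Tuple


def optimal_refinement_plan(marginal_gains: Sequence[int], cost: int) -> Tuple[int, int]:
    """Return (number of refinements performed, total net gain).

    Assumes marginal_gains is non-increasing, so the first gain that falls
    below `cost` marks the point after which no refinement pays for itself.
    """
    count, net = 0, 0
    for gain in marginal_gains:
        if gain < cost:
            break  # expected marginal gain no longer covers the cost
        count += 1
        net += gain - cost
    return count, net


GAINS = [8, 4, 2, 1]  # hypothetical expected marginal gains of refinements 0, 1, 2, 3
```

With these numbers and a cost of 3, the agent performs two refinements for a net gain of 6; with a cost of 10, the first refinement is already too expensive and the agent never refines.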

Having established that refinement increases expected utility for an individual, we turn to cases where the agent’s values themselves conflict.

Our model also applies to theories of choice that deviate from expected utility maximization. Recall Levi’s (1990) framework in which agents may be uncertain about how to commensurate distinct values. An agent committed to both compassion and honesty might confront actions realizing these values to different degrees: how great a gain in harm aversion justifies how great a loss in truthfulness? While ranking actions within each dimension may be straightforward, determining how to trade off between dimensions is often unclear or normatively contested.

Standard expected utility theory resolves such cases by commensuration: choose weights and maximize the aggregate. We ask whether value refinement can sometimes eliminate the need for commensuration by revealing actions that are jointly better across multiple dimensions.

Let $V_1, \ldots, V_k : \mathcal{A}' \to \mathbb{R}$ represent distinct value dimensions. Under standard aggregation, a weight vector $w = (w_1, \ldots, w_k)$ with $w_i \ge 0$ and $\sum_i w_i = 1$ yields $U(A) = \sum_{i=1}^{k} w_i V_i(A)$. Uncertainty about $w$, or the normative inadmissibility of commensuration, complicates choice when actions trade off values.
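A small sketch makes the dependence on the weight vector concrete. The value profiles below are hypothetical, as is the function name `aggregate`; the point is only that the ranking of two acts can flip as the weights change, which is what makes uncertainty about w matter.

```python
from fractions import Fraction as F


def aggregate(values, weights):
    """Weighted sum U(A) = sum_i w_i * V_i(A) for a convex weight vector."""
    if len(values) != len(weights):
        raise ValueError("values and weights must have the same length")
    if any(w < 0 for w in weights) or sum(weights) != 1:
        raise ValueError("weights must be non-negative and sum to 1")
    return sum(w * v for w, v in zip(weights, values))


# Hypothetical value profiles (V1, V2): A does well on V1, not-A does well on V2.
V_A = (F(3), F(1))
V_NOT_A = (F(1), F(3))

FAVOR_V1 = (F(3, 4), F(1, 4))
FAVOR_V2 = (F(1, 4), F(3, 4))
```

Under `FAVOR_V1` the act A scores 5/2 against 3/2 for its alternative; under `FAVOR_V2` the scores are reversed.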

Consider the simplest case:

The agent faces a dilemma:

Resolution within expected utility theory requires commensuration. For example, if all-told value U is a linear combination of values, it requires determining a real-valued weight w ∈

Value refinement opens a third path. Rather than resolving uncertainty about the correct weight, the agent refines their understanding of available actions. In certain instances, refinement may eliminate the dilemma by revealing an action that excels along both dimensions, obviating the need for commensuration.

Following our framework, the agent refines A by considering previously unconsidered propositions B 1 , B 2 ∈ A \ A 0 , producing

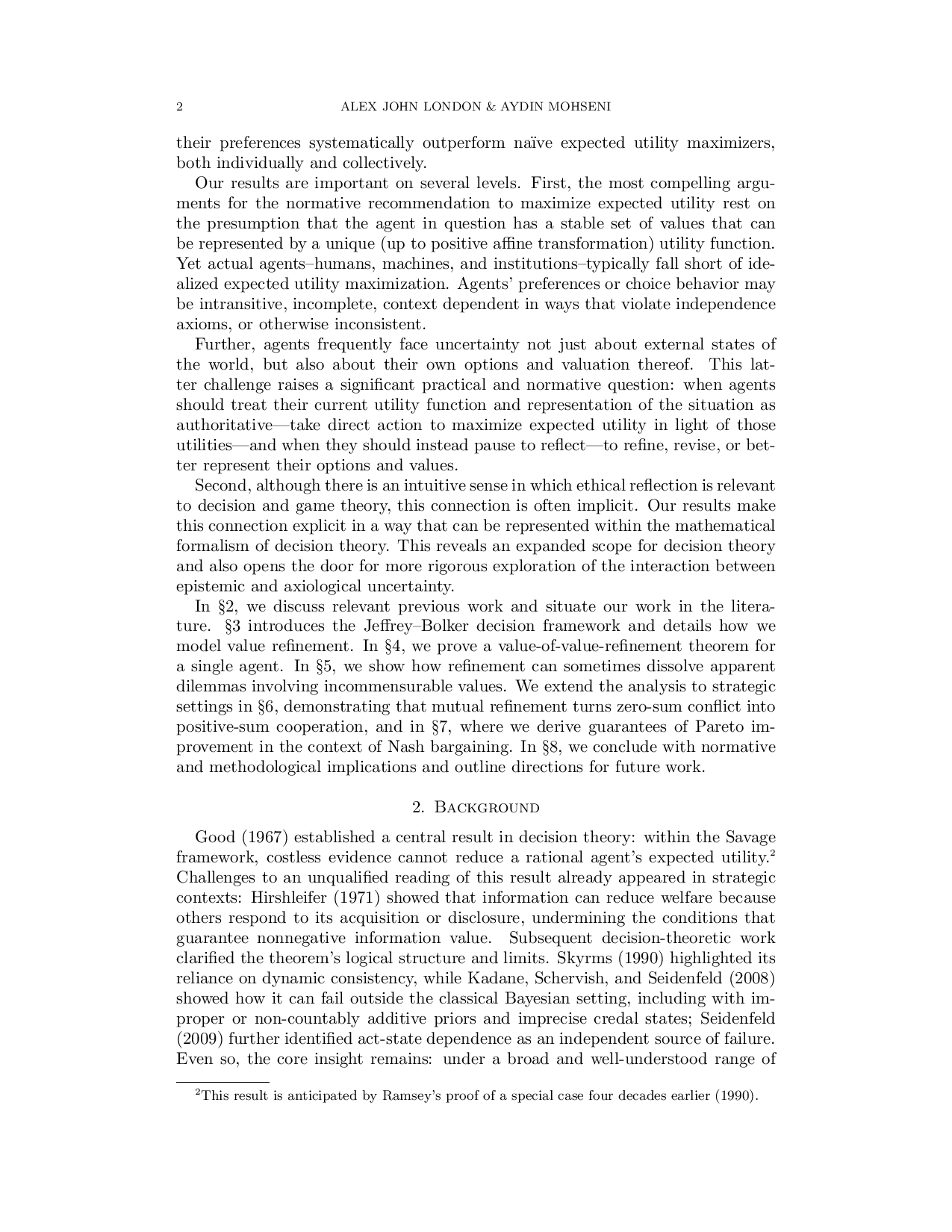

Figure 3. Visualization of how refinement can dissolve a value conflict with two value dimensions, V 1 and V 2 , and two acts, A and ¬A. The left vertical axis denotes the degree of realization of the first value V 1 ; the right vertical axis denotes the degree of realization of the second value V 2 . Figure 3a shows the dilemma: A is favored by V 1 and ¬A is favored by V 2 . Figures 3b and 3c show two possible results of refining act A: in 3b the dilemma remains, while in 3c a dominating action A ∧ B 1 is revealed, making commensuration unnecessary.

This refinement affects both value dimensions simultaneously. For each V i , the agent maintains uncertainty about refined values, captured by distributions µ

for all i, with strict inequality for at least one i.

When such an action exists, the agent can choose it without determining w, since it remains optimal for any admissible weighting.

Let the agent’s refinement uncertainty over A be captured by a joint distribution µ A whose support contains an open subset of the region where some refined action multi-value dominates {¬A, A ∧ B 1 , A ∧ B 2 }. Then with positive probability, refinement reveals a multi-value-dominating action, resolving the dilemma without commensuration.

Figure 3 provides geometric intuition. The vertical axes show the degree to which each action realizes V 1 (left) and V 2 (right). Figure 3a depicts the initial dilemma where A favors V 1 while ¬A favors V 2 . Figures 3b and 3c illustrate two possible refinements: in the first, refinement provides more options but preserves the tension; in the second, refinement reveals A ∧ B 1 excelling along both dimensions, eliminating the need to determine relative weights.
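The two scenarios can be mimicked with a dominance check. In this sketch we read "multi-value dominates" as: at least as good on every value dimension and strictly better on at least one. The numeric profiles and the names `dominates` and `find_dominating_action` are hypothetical and chosen only to reproduce the qualitative pattern described above.

```python
from typing import Dict, Optional, Tuple

Profile = Tuple[int, ...]  # one score per value dimension


def dominates(x: Profile, y: Profile) -> bool:
    """x is at least as good as y everywhere and strictly better somewhere."""
    return all(a >= b for a, b in zip(x, y)) and any(a > b for a, b in zip(x, y))


def find_dominating_action(actions: Dict[str, Profile]) -> Optional[str]:
    """Return an action that dominates every other action, or None if none exists."""
    for name, profile in actions.items():
        others = [p for other, p in actions.items() if other != name]
        if all(dominates(profile, p) for p in others):
            return name
    return None


# Hypothetical refinement in which the tension between V1 and V2 persists.
TENSION_REMAINS = {"notA": (1, 3), "A&B1": (4, 0), "A&B2": (2, 1)}

# Hypothetical refinement in which A&B1 excels along both dimensions.
DILEMMA_DISSOLVED = {"notA": (1, 3), "A&B1": (4, 4), "A&B2": (2, 0)}
```

`find_dominating_action(TENSION_REMAINS)` returns `None`, while `find_dominating_action(DILEMMA_DISSOLVED)` returns `"A&B1"`. Because a dominating profile is weakly better on each coordinate, it is optimal under every non-negative weighting, so the weights never need to be fixed.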

Just as single-valued refinement creates option value by allowing selection of above-average components, multi-valued refinement can reveal components that simultaneously exceed the average across multiple dimensions. Importantly, this outcome is not guaranteed: refinement may simply provide additional options without revealing a dominating action. Yet when the joint distribution includes such possibilities, refinement can bypass rather than resolve uncertainty about value weights, providing a third path between the horns of a dilemma.

Having established refinement’s value for individual agents, including its potential to resolve apparent dilemmas, we turn to strategic settings where classical value of information results break down.

Having established the benefits of value refinement for individual agents, we now examine strategic environments where classical information acquisition can be harmful. Value-of-information guarantees break down in game-theoretic contexts where actions influence state probabilities (Hirshleifer 1971; Gibbard and Harper 1978; Skyrms 1985). When players’ actions are interdependent, additional information can decrease expected utility by producing unfavorable responses from opponents. Given this breakdown, one might expect value refinement to offer no advantage in strategic settings. We demonstrate otherwise: even in the maximally adversarial context of zero-sum games, value refinement yields positive expected gains at equilibrium and, if refinement is not forced to preserve zero-sum structure, these gains will tend to produce positive-sum opportunities.

Consider a 2 × 2 zero-sum game G 0 = (N , S, U ) with players N = {1, 2}, strategy sets S 1 = {A 1 , ¬A 1 } and S 2 = {A 2 , ¬A 2 }, and payoffs satisfying U 1 + U 2 ≡ 0. From classic results, we know this admits a unique interior mixed Nash equilibrium. 13 Player 1 then refines action A 1 into {A 1 ∧ B 1 , A 1 ∧ B 2 }, transforming the interaction into a 3 × 2 normal-form game G 1 , which may no longer be strictly zero-sum and whose realized payoffs can be modeled by random perturbations ϵ i jk , as shown in Figure 4. We are interested in the equilibrium outcomes of the game, which simplifies the analysis of agents’ credences by restricting attention to those that satisfy common knowledge. Given this, the agents’ credences µ A 1 encode their shared beliefs regarding each other’s strategies and the outcomes of refining the focal act A 1 .
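For concreteness, the pre-refinement equilibrium of G 0 can be computed with the textbook closed form for 2 × 2 zero-sum games that have no saddle point. The following is a minimal sketch under that assumption; the function name `interior_equilibrium` and the example payoffs are hypothetical and not taken from the paper.

```python
from fractions import Fraction


def interior_equilibrium(a, b, c, d):
    """Mixed equilibrium of a 2x2 zero-sum game without a saddle point.

    Player 1's payoffs are [[a, b], [c, d]] (rows: A1, not-A1; columns: A2,
    not-A2); Player 2 receives the negatives. Returns (p, q, value), where
    p = Pr(Player 1 plays A1), q = Pr(Player 2 plays A2), and value is
    Player 1's equilibrium payoff.
    """
    denom = Fraction(a) - Fraction(b) - Fraction(c) + Fraction(d)
    if denom == 0:
        raise ValueError("no interior mixed equilibrium")
    p = (Fraction(d) - Fraction(c)) / denom  # makes Player 2 indifferent
    q = (Fraction(d) - Fraction(b)) / denom  # makes Player 1 indifferent
    if not (0 < p < 1 and 0 < q < 1):
        raise ValueError("no interior mixed equilibrium")
    value = (Fraction(a) * Fraction(d) - Fraction(b) * Fraction(c)) / denom
    return p, q, value


# Matching pennies: both players mix 50/50 and the value is 0.
print(interior_equilibrium(1, -1, -1, 1))
```

Whatever the value to Player 1, Player 2 receives its negative, so aggregate welfare at this equilibrium is zero, which is the baseline against which the refined game is compared.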

Given RRP, each refined act has the same ex ante expected value as the original act. This is reflected in the fact that perturbations to agents’ payoffs have mean zero: E µ A 1 [ϵ i jk ] = 0. We augment refinement uncertainty with the assumption that the agents believe it is possible that their refinement payoffs are not perfectly anti-correlated. That is, there is positive probability of deviating from the zero-sum structure of the game, though the deviation may result in either positive-sum or negative-sum outcomes. 14 Thus, while G 0 is zero-sum, a realized G 1 is non-zero-sum with positive probability; the zero-sum property holds only in expectation.

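We are interested in what happens to the aggregate welfare of the agents in the refined game G 1 . Denote the set of Nash equilibria of the game G k by NE(G k ), with k ∈ {0, 1} indexing the pre- and post-refinement games. Then the welfare-optimal equilibrium payoff for the game is

$$W^*_k = \max_{\sigma \in \mathrm{NE}(G_k)} \big[ U^1(\sigma) + U^2(\sigma) \big],$$

where U 1 and U 2 are the players’ payoffs in G k .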

13 Given the initial zero-sum structure, this is the min-max solution (von Neumann and Morgenstern 1944).

14 If players have beliefs guaranteeing that refinement preserves zero-sum structure, our results do not hold. This is appropriate for artificial zero-sum games like chess or poker, but in markets, diplomacy, or evolutionary processes, rigid zero-sum structures are atypical.

Since G 0 is zero-sum, W * 0 = 0. We show that refinement yields a strict expected improvement in welfare.

Theorem 10 (Zero-Sum Escape from Unilateral Value Refinement). Consider a 2 × 2 zero-sum, normal-form game G 0 = (N , S, U ) with players N = {1, 2}, strategy sets S 1 = {A 1 , ¬A 1 } and S 2 = {A 2 , ¬A 2 }. Let either player unilaterally refine one of their acts A into {A ∧ B 1 , A ∧ B 2 }, and let both players’ credences µ A regarding refinement satisfy RRP and refinement uncertainty, including the belief that payoff perturbations are not perfectly anti-correlated. Then the expected value of the welfare-optimal equilibrium strictly increases after refinement:

$$\mathbb{E}_{\mu_A}\big[W^*_1\big] > W^*_0 = 0.$$

Intuitively, the mechanism for improvement is optionality plus asymmetric filtering. Refinement splits an act into two variants whose expected values, by RRP, equal that of the original act. But equilibrium behavior responds asymmetrically: when both players agree that one refined variant dominates the other, the inferior variant is excluded from equilibrium play. This agreement occurs precisely when the perturbations favor the same variant for both players, an event with positive probability under non-adversarial refinement. On such realizations, equilibrium selects the mutually preferred variant, whose welfare exceeds zero because both players’ perturbations are positive. On realizations where players disagree about which variant is better, neither dominates, and equilibrium welfare remains zero in expectation. This asymmetry, filtering out bad realizations while retaining good ones, generates strictly positive expected welfare.

The broader insight is that apparent zero-sum conflicts are fragile under fine-graining. Unilateral refinement can reveal options that both players prefer, transforming perceived opposition into latent coordination. Because realized games need not preserve zero-sum structure, these gains manifest as positive-sum equilibria that are absent from the coarser, initial representation of the interaction.

We now analyze value refinement in the context of Nash bargaining games (Nash 1950). When a one-dimensional resource split is refined, separable dimensions become explicit and independently allocable, generating a larger feasible set. We show that bargaining solutions in the larger set Pareto-dominate in expectation when agents weight the dimensions differently, with gains increasing as preferences become more complementary.

Consider a Nash bargaining game G 0 = (N , X 0 , (U 1 0 , U 2 0 ), A 0 , d) where N = {1, 2}, allocation space X 0 = [0, 1] gives Agent 1 fraction x and Agent 2 fraction 1-x, and U i 0 : [0, 1] → R are continuous, increasing, and concave utility functions. The feasible utility set is

. We use A to emphasize the continuity with act partitions: refinement here operates as simultaneous binary refinement on every element of the feasible set. 15 The Nash bargaining solution uniquely satisfies Pareto optimality, symmetry, independence of irrelevant alternatives, and invariance to affine utility transformations. 16 On (A 0 , d):

yielding allocation x * with utilities U 1 * 0 and U 2 * 0 for Agents 1 and 2. Refinement transforms

The allocation space expands from one to two dimensions: X 1 = [0, 1] 2 , where Agent 1 receives (x 1 , x 2 ) and Agent 2 receives (1 -x 1 , 1 -x 2 ). Utilities become additively separable:

, where v j : [0, 1] → R + are common value functions for each dimension and w i 1 (ω)+ w i 2 (ω) = 1 are agent-specific weights. Pre-refinement allocations embed as bundled allocations where

Let (Ω, Σ, µ) be a probability space over refinement realizations satisfying refinement uncertainty. RRP requires that pre-refinement utilities equal expected utilities under forced bundling:

When E[w i 1 ] = 1/2 and the dimensions have equal value (v 1 = v 2 = v), this reduces to U i 0 (x) = v(x), so both agents share the same pre-refinement utility function.

Before, in the context of zero-sum games, refinement uncertainty consisted in agents assigning positive probability to the event that their revealed utilities were non-identical to their current utilities and that their utilities were not perfectly anti-correlated.

Refinement uncertainty here consists in the condition that agents assign positive probability to the event that their revealed weights on the value of each dimension are not identical to their current implicit weight on the forced bundling, and that their utilities are not perfectly anti-correlated. Given symmetric preferences, this simplifies to the condition that they believe it is possible that they have distinct weights over the dimensions of value: µ(w 1 j ≠ w 2 j ) > 0 for j ∈ {1, 2}.

15 The standard assumptions ensure A0 is compact, convex, and comprehensive above d, i.e., (u1, u2) ∈ A0 and d ≤ u ′ ≤ u implies u ′ ∈ A0.

16 These axioms jointly imply f NB (A, d) = arg max v∈A, v≥d (v1 − d1)(v2 − d2). Alternative solution concepts include Kalai-Smorodinsky (proportional gains), egalitarian (equal gains), and utilitarian (maximal sum).

Figure 5. Refinement expands the feasible set from a line (left) to a rectangle (right) when preferences are orthogonal. The dashed line shows bundled allocations; the full shaded region includes allocations where dimensions are allocated independently. When θ = π/2, the Nash solution moves from (1/2, 1/2) to (1, 1), doubling both agents’ payoffs.

Theorem 11 (Value of Refinement in Nash Bargaining). Consider a symmetric Nash bargaining game

Refinement transforms the game into G 1 (ω) = (N , X 1 , (U 1 , U 2 ), A 1 (ω), d) with allocation space X 1 = [0, 1] 2 , value functions v 1 , v 2 : [0, 1] → R + (continuous, strictly increasing, strictly concave), and additively separable utilities

Let the agents’ credences µ satisfy RRP and refinement uncertainty: µ(w 1 1 ≠ w 2 1 ) > 0. Then the expected Nash payoffs post-refinement strictly Pareto-dominate pre-refinement payoffs:

$$\mathbb{E}_{\mu}\big[U^{i*}_1\big] > U^{i*}_0 \quad \text{for } i \in \{1, 2\}.$$

The mechanism driving the result is comparative advantage through dimensional specialization. When agents weight dimensions differently, the bundled allocation, in which both dimensions are split identically, is Pareto inefficient. To see why, consider perturbations from the symmetric bundled allocation. Agent 1 benefits from reallocations that increase her weighted combination of dimensional gains; Agent 2 benefits from reallocations in the opposite direction, since he receives what Agent 1 does not. These two requirements are geometrically compatible (a direction exists that benefits both) exactly when the agents’ weight vectors (w 1 1 , w 1 2 ) and (w 2 1 , w 2 2 ) differ. Since both vectors have nonnegative components summing to one, they differ if and only if w 1 1 ≠ w 2 1 . When this holds, Pareto improvements over the bundled Nash solution exist: give Agent 1 more of dimension 1 (which she values relatively more) while giving Agent 2 more of dimension 2 (which he values relatively more).

The Nash bargaining solution responds to this expansion via a standard monotonicity property: when the feasible set grows while the disagreement point remains fixed, each agent’s Nash payoff weakly increases, and it strictly increases when the expansion contains Pareto improvements over the original solution. Thus, whenever agents discover they weight dimensions differently, both capture strictly higher payoffs than under forced bundling. Refinement uncertainty ensures this beneficial scenario occurs with positive probability, so taking expectations, both agents’ expected post-refinement payoffs strictly exceed their pre-refinement payoffs.
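The comparison between bundled and refined bargaining can be illustrated numerically. The sketch below is a brute-force grid search, not the paper's method. It assumes a disagreement point d = (0, 0), a common value function v for both dimensions, and weights that sum to one for each agent. The names `nash_solution` and `bargaining_payoffs` and the example weights are hypothetical.

```python
from itertools import product
from math import sqrt


def nash_solution(points):
    """Utility pair maximizing the Nash product, assuming d = (0, 0)."""
    return max(points, key=lambda u: u[0] * u[1])


def bargaining_payoffs(w1, w2, v=sqrt, n=100):
    """Nash payoffs under forced bundling and under refinement.

    w1, w2: Agent 1's and Agent 2's weight on dimension 1 (the weight on
    dimension 2 is one minus that). Agent 1 receives (x1, x2) and Agent 2
    receives (1 - x1, 1 - x2). v is the common value function; n sets the
    grid resolution.
    """
    grid = [i / n for i in range(n + 1)]

    def utilities(x1, x2):
        u1 = w1 * v(x1) + (1 - w1) * v(x2)
        u2 = w2 * v(1 - x1) + (1 - w2) * v(1 - x2)
        return (u1, u2)

    # Bundling forces both dimensions to be split identically (x1 == x2).
    bundled = nash_solution([utilities(x, x) for x in grid])
    refined = nash_solution([utilities(x1, x2) for x1, x2 in product(grid, grid)])
    return bundled, refined


# Orthogonal preferences with linear values, as in Figure 5.
print(bargaining_payoffs(1.0, 0.0, v=lambda x: x))  # ((0.5, 0.5), (1.0, 1.0))

# Partially divergent weights with a strictly concave value function.
print(bargaining_payoffs(0.8, 0.2))
```

In the orthogonal case each dimension goes entirely to the agent who cares about it, so both payoffs double. In the second case the gain is smaller, but both agents still do better than under bundling.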

Corollary 12 (Gains Increase with Preference Divergence). In the setting of Theorem 11, fix marginal distributions for w 1 1 and w 2 1 with E[w i 1 ] = 1/2 and Var(w i 1 ) = σ 2 > 0. The expected benefit of refinement E[U i * 1 ] − U i * 0 is strictly decreasing in the correlation between the agents’ weights, Corr(w 1 1 , w 2 1 ), so the greatest gains occur when preferences are perfectly negatively correlated, w 2 1 = 1 − w 1 1 almost surely.

The corollary clarifies the role of preference structure. When agents’ weights are positively correlated, they expect to value the same dimensions highly, limiting opportunities for mutually beneficial trade. When weights are less correlated, meaning agents tend to want different things, the scope for gains through specialization expands. The benefit of refinement depends on how different the agents’ preferences turn out to be, measured by |∆| = |w 1 1 − w 2 1 |. Lower covariance spreads probability mass toward larger preference divergence, amplifying expected gains. The maximum occurs with perfectly negatively correlated preferences, where Agent 1 values dimension 1 exactly as much as Agent 2 values dimension 2. At this extreme, each dimension can be allocated entirely to the agent who values it more, fully exploiting the gains from specialization.
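The dependence of the gain on realized divergence |∆| can be made concrete in one special case. Assume, purely for illustration, that v(x) = √x on both dimensions, d = (0, 0), and mirrored weights w 1 1 = w and w 2 1 = 1 − w. Under these assumptions the allocation that maximizes the sum of utilities gives both agents the same payoff, √(w² + (1 − w)²), so it also maximizes the Nash product, while the bundled Nash solution x = 1/2 gives each agent √(1/2). This closed form is our own derivation for this case, not a formula from the paper, and the function names are hypothetical.

```python
from math import sqrt

# Payoff to each agent at the bundled Nash solution x = 1/2 when v = sqrt.
BUNDLED_PAYOFF = sqrt(0.5)


def refined_payoff(w):
    """Each agent's payoff at the refined Nash solution in the mirrored case.

    Assumes v(x) = sqrt(x), d = (0, 0), Agent 1's weight on dimension 1 is w,
    and Agent 2's weight on dimension 1 is 1 - w (illustrative special case).
    """
    return sqrt(w * w + (1 - w) * (1 - w))


def gain(delta):
    """Gain from refinement when |w^1_1 - w^2_1| = delta, for 0 <= delta <= 1."""
    w = 0.5 + delta / 2
    return refined_payoff(w) - BUNDLED_PAYOFF


for delta in (0.0, 0.25, 0.5, 0.75, 1.0):
    print(f"|delta| = {delta:.2f}  gain = {gain(delta):.4f}")
```

The gain is zero when the agents’ weights coincide and rises steadily to 1 − √(1/2) when preferences are perfectly complementary, matching the qualitative claim that gains grow with divergence.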

Figure 5 illustrates such a case of perfectly complementary preferences, as in the vignette of the Arborist and the Baker in §3.1, where the feasible set expands from a line to a rectangle and both agents’ payoffs double.

In bargaining, agents benefit in expectation from refining their understanding of what they are negotiating over. Refinement reveals dimensional structure, transforming one-dimensional division into opportunities for mutually beneficial trade across dimensions of value. The result connects to classical insights about gains from trade: 17 agents with different preferences can both benefit by specializing in what they value most, and value refinement is the epistemic process by which such opportunities are discovered. To this classical picture, refinement adds an account of how we might discover the latent complementarity of our values through reflection.

Our results give mathematical substance to the Delphic injunction to “know thyself.” Under broad conditions, value refinement at reasonable cost is ex ante beneficial for a rational agent (Theorem 4), can address classical dilemmas involving incommensurable values (Theorem 9), turns zero-sum conflicts into positive-sum opportunities (Theorem 10), and expands Nash-bargaining frontiers so that each party secures strictly higher expected payoff (Theorem 11). These results carry consequences from individual deliberation to institutional design.

Unlike idealized agents, real decision makers (whether individuals, AI systems, or institutions) can be uncertain whether their current representation of their options and valuations is adequate. One lesson of this work is that when conflict arises, whether from individual dilemmas or coordination problems, there is value in resisting the temptation to move immediately to acting on one’s current representation of a dilemma. Taking conflict as an occasion to reflect on one’s options and values can avert or mitigate apparent incompatibilities; failing to do so can entrench suboptimal perspectives that do not reflect the agent’s ultimate interests.

The work also extends decision theory. Savage and von Neumann-Morgenstern treat the act-state-outcome division as fixed; the atomless Boolean algebra of the Jeffrey-Bolker framework permits indefinite refinement within a single model. We provide a natural formalism for this process. Classical coherence arguments (Dutch books, accuracy dominance, sequential optimality) assume a settled utility function. We show that taking settlement for granted is itself a substantive decision: foregoing refinement can be strictly dominated by clarifying one’s options and values first. Rational choice therefore has a two-stage character: refine, then maximize.

This gives rise to what we call the Paradox of Bounded Optimization: an agent who directly maximizes their current utility function can be strictly dominated by one who first refines the values from which that function derives. The paradox echoes the paradox of hedonism: just as pursuing pleasure directly can preclude achieving it, maximizing a coarse utility function can preclude outcomes that better realize the agent’s underlying values. Resolution requires treating act partitions and utilities as provisional: hypotheses about one’s options and values, open to disciplined revision.

This analysis may favor pluralistic over monistic approaches to value. Monistic frameworks, where a single utility function governs choice, may resist incoherence but can fail to capture an agent’s uncertainty about their underlying values. Value pluralism, by contrast, treats multiple irreducible values as legitimate inputs to deliberation. Our results show that pluralism need not entail decision paralysis: finer descriptions of available acts can expose alternatives that dominate on every value dimension. Consulting diverse values before committing to tradeoffs systematically improves outcomes.

Good-style value-of-information theorems guarantee that learning about the world provides non-negative expected value. We provide the parallel guarantee for learning about one’s values. Agents who treat their utility functions as provisional hypotheses can expect to fare better individually and collectively. Rationality is not merely calculation with a fixed utility function; it is the iterative work of making that function worthy of calculation.

Important limitations remain. Our results are formulated within the Jeffrey-Bolker framework; analogous results could be developed in the frameworks of Savage, von Neumann-Morgenstern, or Anscombe-Aumann. We assumed negligible cognitive and temporal costs; introducing stochastic refinement costs would permit optimal-stopping analysis of how much reflection suffices under various conditions. Our strategic results rely on common knowledge; relaxing this assumption may attenuate positive-sum guarantees, and bounding the resulting effect sizes remains open. In iterated interactions, early-round refinement shapes later payoffs and information flows; richer dynamics, including signaling about value-learning intentions, await investigation.

Appendix A. Mathematical Appendix

Proposition 13 (k-ary Refinements via Binary Refinements). Any k-ary refinement of A ∈ A 0 can be achieved through k − 1 binary refinements.

Proof. Let {B i } k i=1 partition A. We proceed by induction on the number of refinement steps. At step j ∈ {1, . . . , k − 1}, the current partition of A consists of the elements A ∧ B 1 , . . . , A ∧ B j-1 together with the residual element R j = A ∧ (B j ∨ ⋯ ∨ B k ). We perform a binary refinement of R j into A ∧ B j and R j+1 = A ∧ (B j+1 ∨ ⋯ ∨ B k ).

For the base case j = 1, we have R 1 = A, and the binary refinement produces {A ∧ B 1 , R 2 }. For the inductive step, assume after step j -1 the partition is {A ∧ B 1 , . . . , A ∧ B j-1 , R j }. After step j, it becomes {A ∧ B 1 , . . . , A ∧ B j , R j+1 }. After step k -1, the residual is R k = A ∧ B k , yielding the desired partition {A ∧ B 1 , . . . , A ∧ B k }. □
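The construction in this proof is easy to mirror in code. The sketch below represents the act A and the blocks B i as finite sets, which is only an illustrative stand-in for elements of the Boolean algebra, and peels off one block per binary split. The function name `kary_via_binary` is hypothetical.

```python
def kary_via_binary(blocks):
    """Build a k-ary partition of A by repeated binary splits of the residual.

    blocks: non-empty list of disjoint, non-empty sets whose union is A.
    Returns (partition, steps), where steps counts the binary refinements.
    """
    blocks = [frozenset(b) for b in blocks]
    if not blocks:
        raise ValueError("need at least one block")
    residual = frozenset().union(*blocks)  # R_1 = A
    partition, steps = [], 0
    for block in blocks[:-1]:
        # Binary refinement of R_j into (A and B_j) and R_{j+1}.
        residual = residual - block
        partition.append(block)
        steps += 1
    partition.append(residual)  # R_k = A and B_k
    return partition, steps


parts, steps = kary_via_binary([{1, 2}, {3}, {4, 5}, {6}])
print(steps)  # 3 binary refinements for a 4-ary refinement
print(parts)
```

Each pass through the loop corresponds to one inductive step, and the final residual is exactly the last block, so a k-block partition takes k − 1 splits.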

Proof of Theorem 4 (Value of Value Refinement). Consider an agent with decision problem D 0 = ⟨A 0 , A 0 , P 0 , U 0 ⟩ who refines a rationalizable act A * ∈ arg max A∈A 0 U 0 (A) into two finer acts {A * ∧ B 1 , A * ∧ B 2 }. The agent’s uncertainty about post-refinement utilities and probabilities is captured by a distribution µ A * over (u 1 , u 2 , p 1 , p 2 ), where u i = U 1 (A * ∧ B i ) and p i = P 1 (A * ∧ B i ). We require three conditions: refinement uncertainty, meaning µ A * (u 1 ≠ u 2 ) > 0; non-degeneracy, so that p 1 , p 2 > 0 almost surely; and the Refinement Reflection Principle, which ensures U 0 (A * ) = E[(p 1 u 1 + p 2 u 2 )/(p 1 + p 2 )]. Let V 0 = U 0 (A * ) denote the pre-refinement value and V 1 = max A∈A 1 U 1 (A) the post-refinement value. Since the refined partition includes A * ∧ B 1 and A * ∧ B 2 , we have V 1 ≥ max{u 1 , u 2 }.
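Since V 1 ≥ max{u 1 , u 2 }, the theorem comes down to comparing the expected maximum of the refined utilities with their expected probability-weighted average, which RRP ties to U 0 (A * ). The following sketch computes both quantities exactly for a small discrete distribution. The distribution is hypothetical, and for simplicity the mixing weight q = p 1 /(p 1 + p 2 ) is held fixed rather than random.

```python
from fractions import Fraction
from itertools import product


def expected_max_and_mix(outcomes, q):
    """Exact E[max(u1, u2)] and E[q*u1 + (1-q)*u2].

    outcomes: list of (probability, u1, u2) triples whose probabilities sum to 1.
    q: weight on the first refined act, assumed fixed here for illustration.
    """
    e_max = sum(p * max(u1, u2) for p, u1, u2 in outcomes)
    e_mix = sum(p * (q * u1 + (1 - q) * u2) for p, u1, u2 in outcomes)
    return e_max, e_mix


q = Fraction(1, 2)

# Refined utilities are independently -1 or +1 with equal probability,
# so the pre-refinement value implied by RRP is 0.
uncertain = [(Fraction(1, 4), u1, u2) for u1, u2 in product((-1, 1), repeat=2)]
print(expected_max_and_mix(uncertain, q))  # E[max] = 1/2, E[mix] = 0

# Without refinement uncertainty (u1 == u2 always) there is no gain.
degenerate = [(Fraction(1, 2), -1, -1), (Fraction(1, 2), 1, 1)]
print(expected_max_and_mix(degenerate, q))  # both equal 0
```

The gap of 1/2 in the first case is the option value of choosing the better refined act after the fact; it vanishes exactly when the two refined utilities always coincide.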

It suffices to show E[max{u 1 , u 2 }] > E[qu 1 + (1 − q)u 2 ] where q = p 1 /(p 1 + p 2 ) ∈ (0, 1). For any q ∈ (0, 1) and any u 1 , u 2 ∈ R, the inequality max{u 1 , u 2 } ≥ qu 1 + (1 − q)u 2 holds, with equality if and only if u 1 = u 2 . This is because the maximum of two numbers weakly exceeds any convex combination thereof, with strict inequality when they differ. By refinement uncertainty, µ A * (u 1 ≠ u 2 ) > 0, so the inequality is strict on a set of positive measure. Taking expectations yields

where the second equality uses RRP and the third uses rationalizability of A * . Since

□

Proof of Corollary 5 (Monotonicity of Refinement Value). Consider successive refinements producing increasingly fine algebras A 0 ⊂ A 1 ⊂ ⋯ ⊂ A k , with corresponding optimal values V 0 , V 1 , . . . , V k . If each refinement from A j-1 to A j satisfies the conditions of Theorem 4 (namely RRP, refinement uncertainty, and non-degeneracy), then applying that theorem iteratively yields E[V j | A j-1 ] > V j-1 at each step. By the law of iterated expectations, E[V j ] > E[V j-1 ], yielding the chain

maximum in distribution, and each dimension is allocated entirely to the agent who values it relatively more, fully exploiting gains from specialization. □

Specifically, when there is act-state independence, information is costless, and agents have countably additive, proper priors (Kadane et al. 2008; Skyrms 1985; Gibbard and Harper 1978).

This result is anticipated by Ramsey’s proof of a special case four decades earlier (1990).

This particular type of interaction is modeled in §7 on refinement in Nash bargaining games.
