Dual-Path Knowledge-Augmented Contrastive Alignment Network for Spatially Resolved Transcriptomics

📝 Original Info

- Title: Dual-Path Knowledge-Augmented Contrastive Alignment Network for Spatially Resolved Transcriptomics

- ArXiv ID: 2511.17685

- Date: 2025-11-21

- Authors: Wei Zhang, Jiajun Chu, Xinci Liu, Chen Tong, Xinyue Li

📝 Abstract

Spatial Transcriptomics (ST) is a technology that measures gene expression profiles within tissue sections while retaining spatial context. It reveals localized gene expression patterns and tissue heterogeneity, both of which are essential for understanding disease etiology. However, its high cost has driven efforts to predict spatial gene expression from whole slide images. Despite recent advancements, current methods still face significant limitations, such as under-exploitation of high-level biological context, over-reliance on exemplar retrievals, and inadequate alignment of heterogeneous modalities. To address these challenges, we propose DKAN, a novel Dual-path Knowledge-Augmented contrastive alignment Network that predicts spatially resolved gene expression by integrating histopathological images and gene expression profiles through a biologically informed approach. Specifically, we introduce an effective gene semantic representation module that leverages the external gene database to provide additional biological insights, thereby enhancing gene expression prediction. Further, we adopt a unified, onestage contrastive learning paradigm, seamlessly combining contrastive learning and supervised learning to eliminate reliance on exemplars, complemented with an adaptive weighting mechanism. Additionally, we propose a dual-path contrastive alignment module that employs gene semantic features as dynamic cross-modal coordinators to enable effective heterogeneous feature integration. Through extensive experiments across three public ST datasets, DKAN demonstrates superior performance over state-of-the-art models, establishing a new benchmark for spatial gene expression prediction and offering a powerful tool for advancing biological and clinical research.📄 Full Content

Despite the transformative potential of ST techniques, they still face limitations, including relatively low resolution, typically at the multicellular level, and high technical costs, which hinder their broader adoption (Moses and Pachter 2022). In contrast, Hematoxylin and Eosin (H&E) stained WSIs, as the gold standard in pathology, offer a cost-effective and widely accessible alternative. Their widespread availability and low costs make them suitable for supporting numerous downstream tasks such as survival prediction (Zhang et al. 2024a(Zhang et al. , 2025b)), stain transfer (Li et al. 2023;Zhang et al. 2024b) and especially spatial transcriptomics (Lin et al. 2024;Zhang et al. 2025a). The potential of WSIs to predict spatially resolved gene expression has been successfully demonstrated (He et al. 2020;Schmauch et al. 2020), utilizing morphological and spatial details. More recently, several models have expanded on this foundation, further improving prediction accuracy through innovative approaches to leverage the rich tissue information in WSIs (Chung et al. 2024;Wang et al. 2024).

Current approaches for spatial gene expression prediction predominantly exploit the rich spatial information embedded in WSIs, extracting image features at various levels, including local (Xie et al. 2023;Mejia et al. 2023), global (Pang, Su, andLi 2021;Zeng et al. 2022), and multiscale representations (Wang et al. 2024;Lin et al. 2024). Furthermore, several models incorporate multimodal contrastive learning to align imaging data with gene expression profiles within a shared low-dimensional embedding space (Xie et al. 2023;Min et al. 2024). This alignment enables the models to effectively capture the intricate relationships between image-derived features and gene expression patterns.

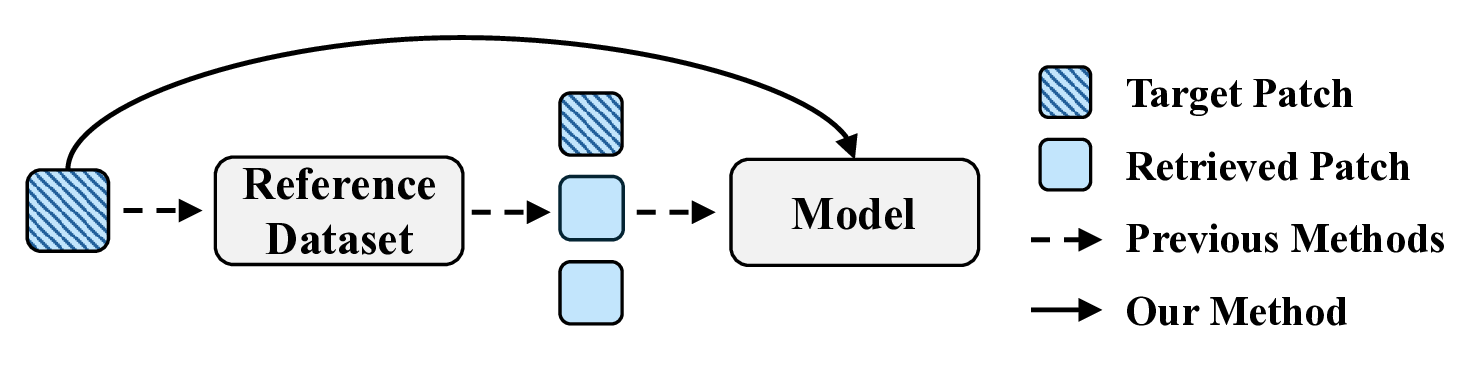

Despite recent advancements, several challenges persist. First, many models rely heavily on image features derived from pixel intensity (e.g., color distribution) and cellular structure (e.g., shape and texture) (He et al. 2020;Pang, Su, and Li 2021). While these low-level visual cues are informative, they often fail to capture high-level semantic information, such as gene functions, biological pathways, or disease associations, limiting the depth of biological interpretation. Second, one notable challenge lies in the inclusion of additional and potentially redundant steps in models based on contrastive learning and exemplar-guided strategies. As shown in Figure 1, these pipelines typically involve constructing a reference dataset from all patches in the training set, retrieving similar patches, and feeding both the retrieved patches and the target patch into the model. While effective, this multi-step process introduces complexity that may not always be necessary (Xie et al. 2023;Min et al. 2024;Lin et al. 2024). Streamlining such workflows into a more cohesive approach, particularly in constrained settings or with limited datasets, remains challenging. Lastly, while existing methods leverage multi-scale image features (Zeng et al. 2022;Chung et al. 2024) or incorporate auxiliary modalities (Yang et al. 2024a) to address modality-specific semantics, their fusion strategies often fail to adequately preserve biologically relevant interactions. This limitation constrains performance, a gap further exacerbated by the absence of frameworks that explicitly incorporate gene functional semantics into multimodal alignment.

To address these challenges, we propose DKAN, a novel Dual-path Knowledge-Augmented contrastive alignment Network for spatial gene expression prediction. Unlike previous methods, DKAN integrates gene functional semantics into contrastive learning, enabling the biologically grounded fusion of histopathological images and expression profiles through a unified one-stage paradigm. Our major contributions are summarized as follows:

Spatial gene expression prediction seeks to model gene activity from WSIs by capturing both visual and spatial features. Existing methods fall into three main categories: Local Methods. Local approaches focus on the target patch and its immediate surroundings (Yang et al. 2023(Yang et al. , 2024b,a;,a;Xie et al. 2023;Min et al. 2024). ST-Net (He et al. 2020) uses a pretrained DenseNet-121 (Huang et al. 2017) to extract patch-level features for prediction. EGN (Yang et al. 2023) enhances patch representations through image reconstruction and exemplar retrieval. EGGN (Yang et al. 2024b) extends EGN by applying graph convolutional networks to model relationships between the patch and its exemplars. SEPAL (Mejia et al. 2023) constructs a neighborhood graph and applies a graph neural network to capture local dependencies. These methods prioritize localized information and may not account for the broader spatial context.

Global Methods. Global methods incorporate positional and contextual information across the entire WSI (Jia et al. 2023;Yang et al. 2024a). HisToGene (Pang, Su, and Li 2021) uses vision transformers (Dosovitskiy et al. 2021) with positional encoding to model inter-patch relationships. HE2RNA (Schmauch et al. 2020) clusters patches into supertiles and aggregates them to form global contextual features. THItoGene (Jia et al. 2023) extracts deep molecular features using dynamic convolution and capsule modules, integrates them with positional data via ViT, and refines predictions using a graph attention network. Unlike local methods, global models leverage the full image context for more informed predictions.

Multi-Scale Methods. Multi-scale methods capture biological patterns at various resolutions (Zeng et al. 2022;Chung et al. 2024;Wang et al. 2024;Lin et al. 2024). TRIPLEX (Chung et al. 2024) combines features from multiple views. M2OST (Wang et al. 2024) decouples intra-and inter-scale feature extraction for many-to-one spatial prediction. ST-Align (Lin et al. 2024) clusters patches into nichelevel groups to integrate both local and regional contexts. These methods aim to balance the fine granularity of local models with the broader context provided by global ones.

Contrastive learning is a self-supervised method that learns discriminative representations by pulling similar pairs together and pushing dissimilar pairs apart (Oord, Li, and Vinyals 2018). In spatial gene expression tasks, contrastive learning aligns visual and transcriptomic modalities in a shared embedding space (Xie et al. 2023;Min et al. 2024;Lin et al. 2024). BLEEP (Xie et al. 2023), inspired by CLIP (Radford et al. 2021), embeds images and gene profiles jointly to enable retrieval-based inference. mclST-Exp (Min et al. 2024) refines this by encoding gene expression with learnable position embeddings for better spatial integration. ST-Align (Lin et al. 2024)

Spatial gene expression prediction is framed as a regression task. Given N p image patches extracted from a WSI, represented as X ∈ R Np×H×W ×3 , where H and W are the height and width of each patch, the goal is to predict gene expression levels. A learnable mapping function f is applied to produce predictions Ŷ = f (X) ∈ R Np×Ng , where N g denotes the number of target genes.

As illustrated in Figure 2, our framework integrates highlevel gene semantics by retrieving information from an external gene database (Sayers et al. 2024) and leveraging prompts to tap into the summarization capabilities and domain knowledge of large language models (LLMs). To extract informative visual features from WSIs, we adopt a multi-scale strategy that captures representations at the patch, region, and whole-slide levels. These features are then fused to form a comprehensive visual embedding. To effectively align gene expression, image, and textual modalities, we introduce a dual-path knowledge-augmented contrastive alignment module, which employs two distinct contrastive pathways for robust multimodal integration.

As shown in Figure 2(a), for N g genes of interest, we designed a workflow to extract semantic features f text . We retrieved gene-related knowledge from a well-established gene database NCBI (Sayers et al. 2024). However, the retrieved gene knowledge lacks structural uniformity, with some information being redundant or incomplete. To address this issue, we leveraged the summarization capabilities and embedded knowledge of LLM (GPT-4o) to generate accurate and efficient gene semantic texts.

Specifically, we embedded the gene knowledge into a prompt, which includes role definitions, task requirements, and output specifications, before feeding it into the LLM to produce the gene semantic text. The prompt is provided in the supplementary materials. Subsequently, we employed BioBERT (Lee et al. 2019) as our text embedding model to extract textual features, generating semantic embeddings of dimensionality d t = 1024. This model, pre-trained on the extensive biomedical semantic corpus, excels at capturing domain-specific contextual representations effectively. The semantic features are processed by a standard transformer module, preceded by a linear projection to ensure dimensional alignment for multimodal fusion. The transformer module efficiently captures global dependencies and commonalities among semantic embeddings, ultimately yielding the final semantic features f text .

As illustrated in Figure 2(b), the gene expression with shape N p × N g is processed by the gene expression encoder to generate gene expression features f exp ∈ R Np×d , ensuring feature dimension consistency between gene semantic and image features. Specifically, the expression encoder first projects the input to a d-dimensional space via a linear layer, applies GELU activation, and then processes features through a second linear layer with dropout. To stabilize gradient flow, we employ residual connections: the initial linear projection’s output is directly added to the final dropout output, followed by layer normalization for feature standardization. This design mitigates gradient vanishing while maintaining feature discriminability.

Given the large size of WSIs, relying solely on either WSIlevel images (I wsi ) or patch-level images (I patch ) is insufficient to fully capture their morphological complexity. While WSIs offer rich global context, a significant gap remains between the global view and the localized detail at the patch level. To bridge this gap, as illustrated in Figure 2(c), we introduce a region-level representation (I region ) by selecting the k nearest neighboring patches around each target patch.

Our model extracts image patches from the WSI at these three hierarchical levels and processes them using dedicated encoders. Specifically, for the WSI-level features f wsi and the region-level features f region , we utilize UNI (Chen et al. 2024), a general-purpose foundation model pre-trained on extensive WSI datasets for computational pathology. Due to the scale constraints and computational demands of WSIs and region-level images, UNI serves as a fixed feature extractor without updating its weights during training. To enhance feature adaptability, we append a multi-head transformer after each UNI encoder. For the patch-level feature f patch , we employ ResNet18 (Ciga, Xu, and Martel 2022) as the encoder. To adapt it to feature extraction, we remove the final pooling and fully connected layers, retaining only the activations of the last hidden layer as the output. Notably, the parameters of ResNet18 remain trainable.

To effectively integrate multi-scale features, we employ two cross-attention mechanisms: one fuses the WSI and the region-level images, while the other combines the WSI and the patch-level images, with WSI-level features serving as the query in both cases. The resulting fused features from these two groups are then summed to produce the final multi-scale feature of the image f img .

After extracting the image, semantic, and expression features, we propose a novel dual-path knowledge-augmented contrastive alignment paradigm for multimodal alignment as shown in Figure 2(d). Our approach leverages gene semantic features as dynamic cross-modal coordinators, operating through two parallel pathways. In the image pathway, gene semantic knowledge serves as a “functional query instruction” to filter morphology-related regions from image features. Similarly, in the expression pathway, gene semantic knowledge acts as a “distribution correction factor” to constrain the predicted gene expression features, ensuring alignment with the established biological pathway logic. In the implementation, we employ a cross-attention module, using the semantic feature as the query. Each semantic feature independently queries the image and expression features, ul-timately generating gene knowledge-augmented representations, denoted as e ti and e te respectively.

Inspired by CLIP (Radford et al. 2021), we apply contrastive learning to align e ti and e te in the latent embedding space. One distinctive aspect of this method is that, instead of forcing the alignment of the heterogeneous image and gene expression modalities directly, each modality interacts independently with the gene semantic knowledge, achieving implicit alignment through knowledge-guided queries. The decoupling of the image and gene expression modules enhances flexibility and reduces inter-modal dependencies.

To eliminate the dependency on exemplars and streamline the workflow, we adopt a unified one-stage framework for contrastive learning and seamlessly integrate it with supervised training. In the training phase, all modalities are utilized, whereas during inference, only the image and semantic modalities are used. Consequently, the loss function combines a contrastive loss and a supervised loss. The contrastive loss is indicated in Equation 1, where positive samples are representations of the same gene paired together, and negative samples are drawn from representations of different genes. Here sim(•, •) denotes the cosine similarity function that measures the alignment between feature vectors, and τ is a temperature parameter that controls the sharpness of the similarity distribution.

For the supervised loss, we calculate the mean squared error (MSE) between the predicted gene expression Ŷ and the ground truth gene expression Y . This can be further enhanced by knowledge distillation (Chung et al. 2024), which improves prediction consistency and generalization by aligning intermediate representations with the final output. These intermediate predictions, Ŷimg , Ŷpatch , Ŷwsi , and Ŷregion , are obtained through linear transformations of the model features f img , f patch , f wsi , and f region . To enforce both accuracy and coherence, we compute the MSE between these intermediate predictions and two targets: the ground truth Y and the final predicted output Ŷ , with their contributions balanced by a hyperparameter λ.

The distillation-aware supervised loss for each intermediate prediction d ∈ D is defined in Equation 2:

where D = {img, patch, wsi, region}. The total supervised loss is then aggregated across all intermediate predictions, combined with the MSE between the ground truth Y and the final predicted output Ŷ :

(3)

To ensure balanced optimization between the supervised loss (L sup ) and the contrastive loss (L cont ) which exhibit different numerical scales and convergence characteristics, we propose an adaptive weighting scheme. The weights are dynamically adjusted based on the real-time loss values to maintain appropriate gradient contributions from each objective. Specifically, the weighting coefficients are computed as the normalized reciprocals of the respective losses, to ensure that the loss with a smaller value could receive a higher weight, allowing the model to dynamically prioritize the more reliable objective during training. The final composite loss function is thus formulated as:

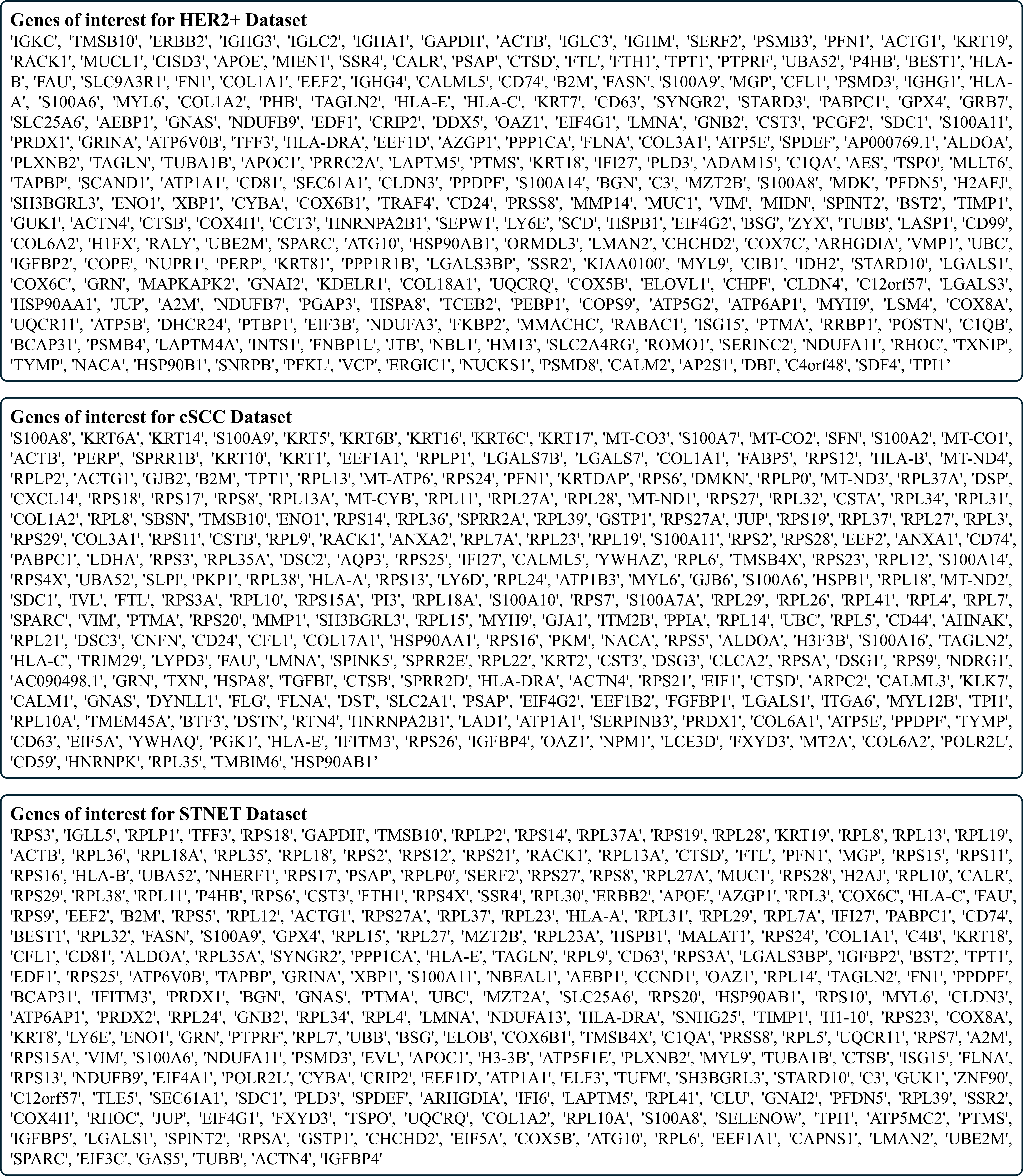

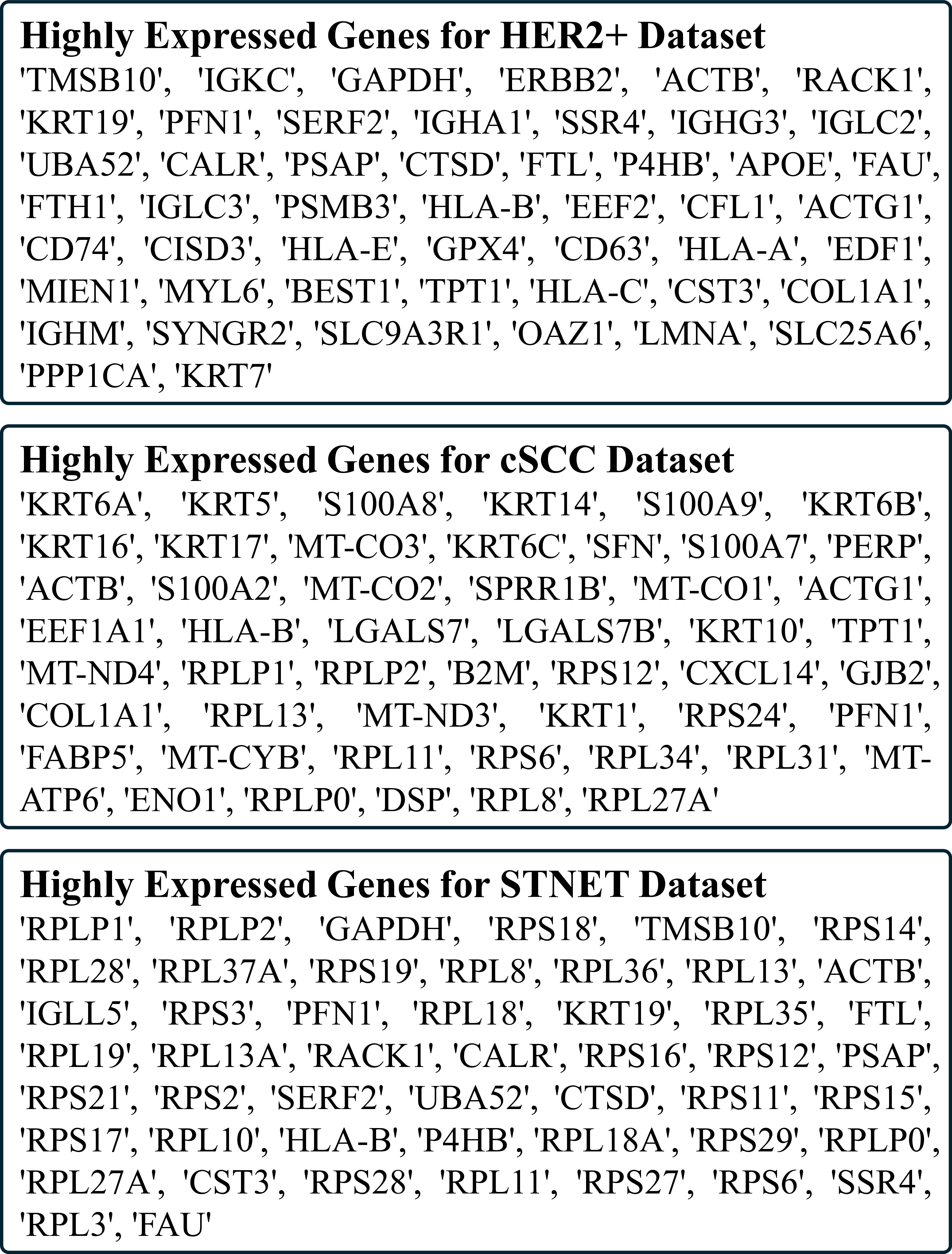

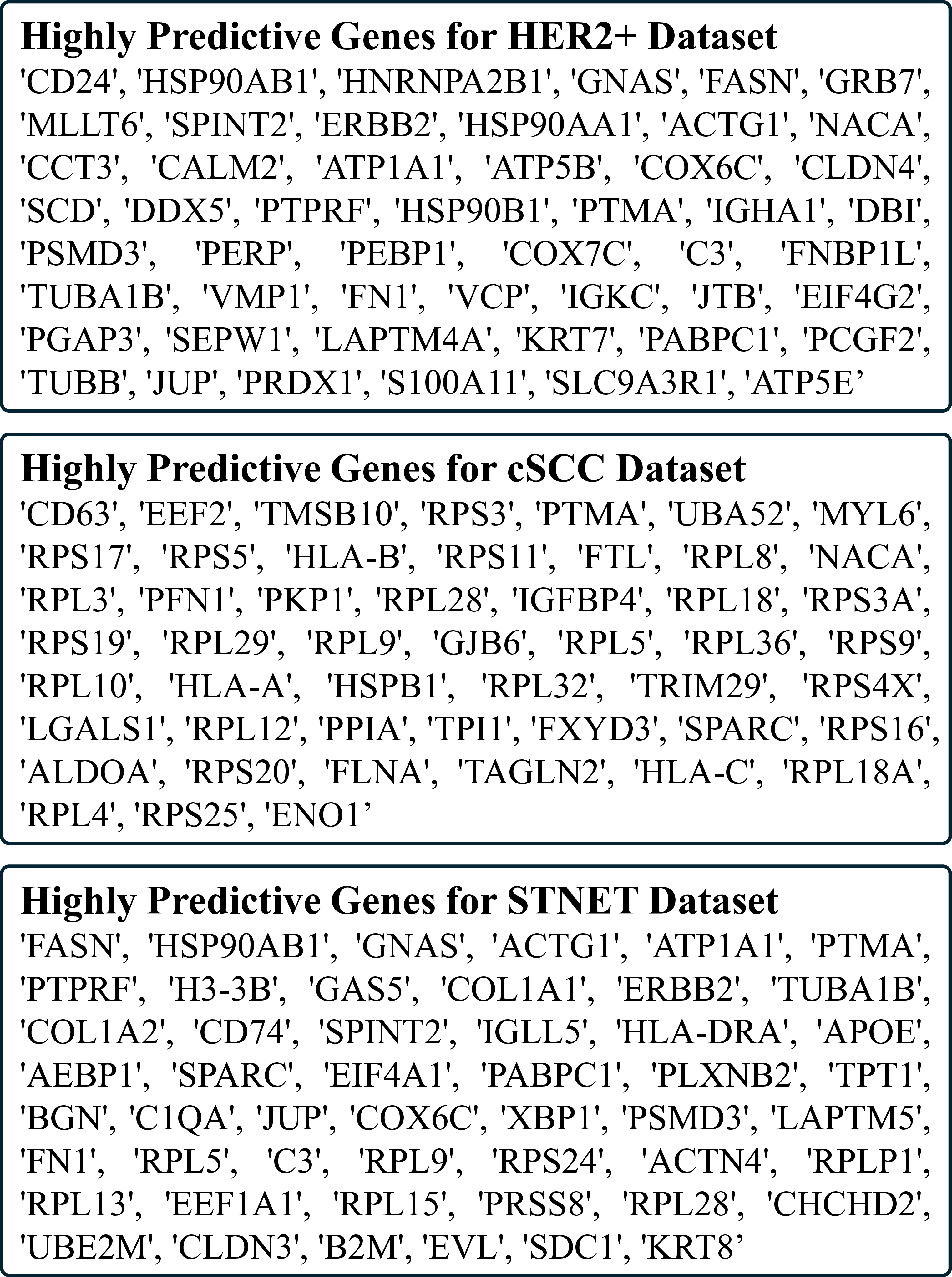

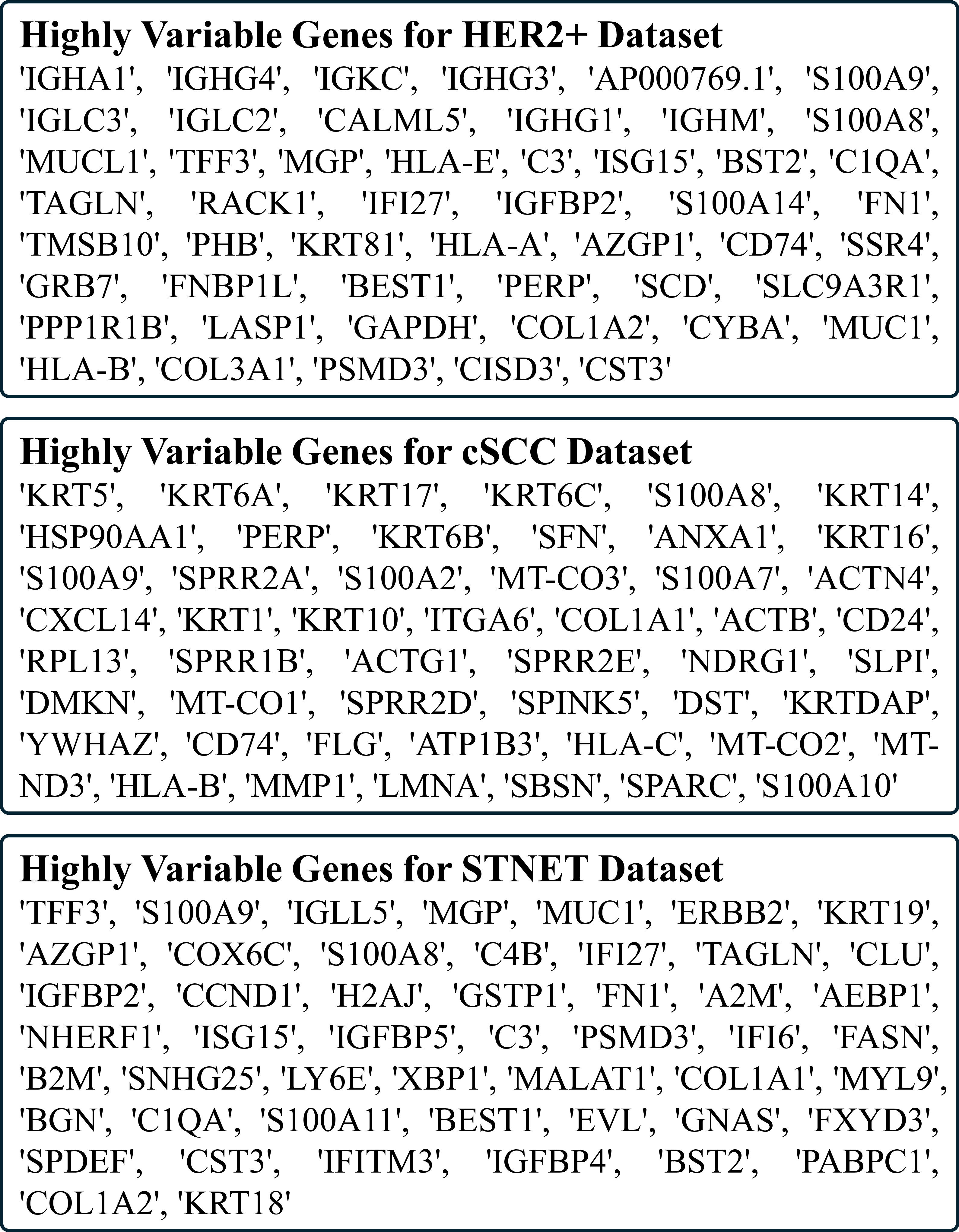

We evaluated our approach on three public ST datasets: two human breast cancer (BC) datasets and one cutaneous squamous cell carcinoma (cSCC) dataset.

To ensure robust evaluation, we applied cross-validation strategies tailored to each dataset, ensuring no patient overlap between training and test sets. For STNET, we used 8-fold cross-validation. For the smaller HER2+ and cSCC datasets, we adopted leave-one-patient-out cross-validation, with 8 folds for HER2+ and 4 folds for cSCC, where each fold used one patient’s samples for testing and the rest for training. This setup aligns with prior work (Chung et al. 2024) to ensure fair comparison. We evaluated our model using six metrics to ensure comprehensive assessment and comparability with prior stud-

To align with previous studies (He et al. 2020;Chung et al. 2024), all patches were segmented with dimensions of H=W =224 pixels, and regions were constructed using k=25 (a 5×5 patch grid). We select N g =250 spatially variable genes for training to align with previous studies. We use the Adam optimizer with a learning rate of 0.0001 and a StepLR scheduler (step size=50, gamma=0.9). The temperature τ in the contrastive loss was set to 0.1 for HER2+ and STNET and 0.08 for cSCC. Image encoders included UNI for WSI and region levels (d h =d r =1024) and ResNet18 for patch level (d p =512), while the text embedding model, BioBERT, produced embeddings with d t =1024. All models were trained on a NVIDIA RTX A800 GPU with a batch size of 128.

We 1, DKAN consistently outperforms all baselines across datasets and evaluation metrics. Take the HER2+ dataset as an example, DKAN achieves the lowest MAE (0.361) and MSE (0.224), along with the highest PCC values for all genes (0.330), HPG (0.531), HEG (0.317), and HVG (0.304). In comparison, the current SOTA method TRIPLEX reports 0.364 (MAE), 0.234 (MSE), and PCCs of 0.304 (all genes), 0.491 (HPG), 0.271 (HEG), and

To evaluate the model’s ability to capture spatial gene expression patterns both quantitatively and qualitatively, we visualize the log-normalized expression levels of two wellestablished cancer biomarkers in Figure 3. Specifically, FN1, frequently overexpressed in breast cancer (Zhang, Luo, and Wu 2022), and HSPB1, implicated in cancer progression (Liang et al. 2023), are highlighted. We also report their PCCs with the ground truth to assess spatial consistency and predictive accuracy. Additional visualizations are available in the supplementary materials.

To validate the effectiveness of our model design, we conducted ablation studies on several key components: the choice of text and image encoders, textual representations with different prompt strategies and LLMs, the contributions of individual modules, and the selection of fusion strategies and loss functions. For clarity, we present mean results on the STNET dataset in the main text. Consistent trends were also observed on the HER2+ and cSCC datasets, with detailed results provided in the supplementary materials.

( (2) Gene Semantic Representation. To evaluate textual representations of genes, we compared model performance using three prompt strategies: (a) gene summaries without constraints on function or phenotype, (b) gene symbols without summaries, and (c) gene summaries enriched with specific constraints on function and phenotype. We also evaluated four widely used LLMs: DeepSeek-R1 (DeepSeek-AI 2025), DeepSeek-v3 (Liu et al. 2024), LLaMA2 (7B-chathf) (Touvron et al. 2023), andGPT-4o (OpenAI 2024). As shown in Table 3, our proposed prompt strategy (c) achieved the best performance, as it effectively captures more informative and concise gene semantics. Among the LLMs, GPT-4o consistently outperformed the others.

(3) Individual Modules. We evaluated the contributions of key components in our model, including multi-scale spatial context, gene semantic features, contrastive learning, and the use of text features as Key and Value (referred to as text as KV) in dual-path contrastive learning. As shown in Table 4, removing multi-scale spatial context, gene semantics, or contrastive learning led to a decline in performance in terms of PCC. Interestingly, removing contrastive learning resulted in a slight improvement in MAE, but our configuration achieved the best overall performance across all metrics. Additionally, using text features as Query rather than as Key/Value proved more effective for multimodal integration. These findings highlight the effectiveness of our architectural design and the importance of each module.

(4) Fusion Strategy and Loss Design. We investigated the impact of different fusion strategies and loss function designs, as shown in cross-attention mechanism used in our contrastive alignment module against four alternatives: addition (Sum.), concatenation (Concat.), concatenation followed by a transformer layer (Concat. + Trans.), and addition followed by a transformer layer (Sum. + Trans.). For the loss functions, we ablated two key components, our dynamic weight balancing strategy and the knowledge distillation loss, to assess their contributions. The results show a consistent performance drop across metrics, particularly in MAE and MSE, when alternative fusion strategies or simplified loss designs are used. In contrast, our full model design achieves the best overall performance in PCC, while also maintaining the lowest MAE and MSE, demonstrating the effectiveness of both our fusion strategy and our loss function design.

In this study, we propose DKAN, a dual-path knowledgeaugmented contrastive alignment framework that integrates high-level biological gene knowledge into multimodal feature alignment for spatial gene expression prediction. Comprehensive experiments demonstrate the superior performance of DKAN compared to existing state-of-the-art methods, highlighting the effectiveness of structured biological priors in enhancing cross-modal representation learning. This approach offers a practical pathway for linking histological morphology with spatial gene expression, supporting future discoveries in tissue microenvironments and biomarker identification.

In the supplementary materials, we first present the structured prompt design used by the Large Language Model (LLM) in the gene semantic representation module. This is followed by comprehensive ablation studies on the HER2+ and cSCC datasets to assess the contributions of different components within DKAN. We also include additional ablation results on the STNET dataset, a computational comparison with baseline models, extended visualizations of cancer marker gene expression, and details on the preprocessing and evaluation protocols for gene data.

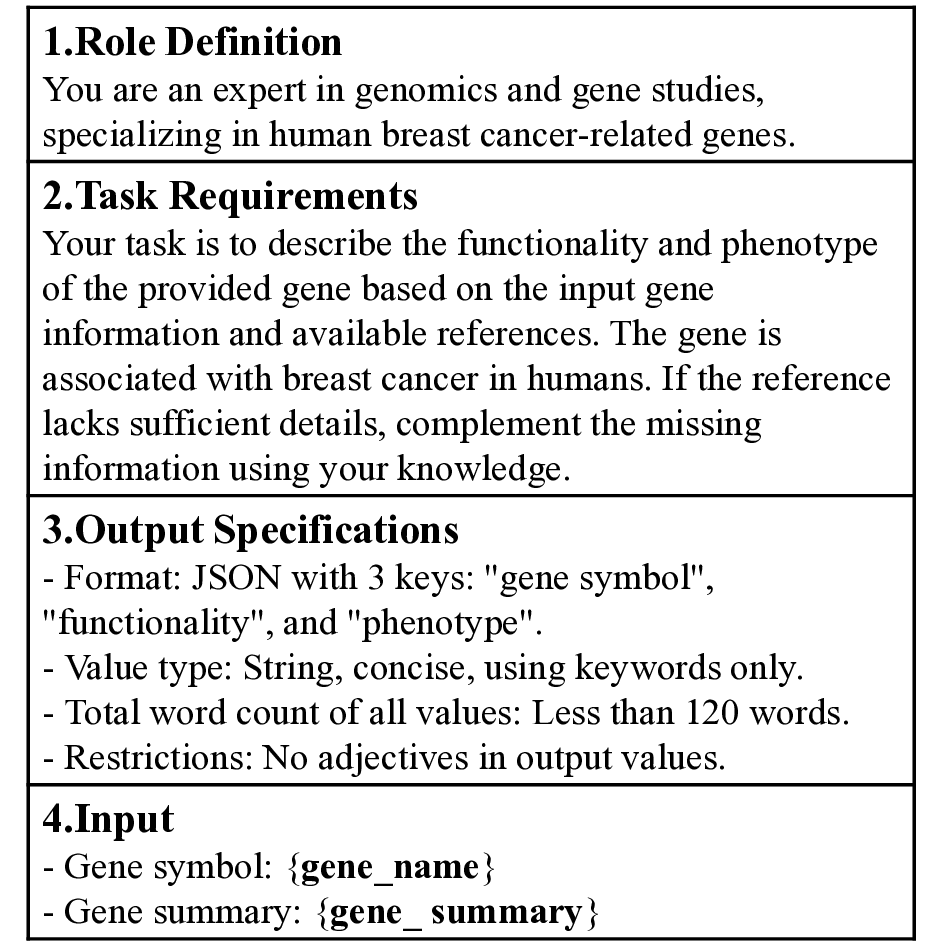

In the gene semantic representation module, we leverage the summarization capabilities and embedded biomedical knowledge of the LLM (GPT-4o) to preserve both structural consistency and informational integrity in gene-level representations. As illustrated in Figure S1, we designed a structured prompt tailored for breast cancer datasets, which explicitly defines the context and task requirements through three key components: role definitions, task descriptions, and output specifications. The prompt further clarifies that the provided genes are associated with human breast cancer. Gene summaries retrieved from an external database are subsequently incorporated as input to the model.

In this section, we present additional ablation study results on the HER2+ and cSCC datasets, shown in Table S1 and Table S2, respectively. Specifically, we evaluate the effectiveness of various components, including the text encoder, image encoder, LLM prompt strategies, different LLMs, individual models, fusion strategies, and loss function designs. The evaluation is conducted using six metrics: Mean Absolute Error (MAE), Mean Squared Error (MSE), Pearson Correlation Coefficient (PCC) across all genes, as well as PCC for highly predictive genes, highly expressed genes, and highly variable genes, to comprehensively assess and validate our model design. Details of these metrics are provided in Section . Additionally, we report the complete ablation results, including standard deviations, on the STNET dataset in Table S3, to supplement the findings presented in the main text. Collectively, these results provide strong empirical evidence supporting the design rationale of DKAN’s architecture.

To assess the computational complexity of our proposed method relative to existing State-Of-The-Art (SOTA) models, we report the number of FLOPs, model parameters, training time, and inference time in Table S4 on cSCC dataset. All experiments were conducted on an NVIDIA A800 GPU to ensure fair and consistent comparisons. Inference time was measured as the average per spot across all cross-validation folds. The results demonstrate that the integration of gene semantic representation and dual-path

You are an expert in genomics and gene studies, specializing in human breast cancer-related genes.

Your task is to describe the functionality and phenotype of the provided gene based on the input gene information and available references. The gene is associated with breast cancer in humans. If the reference lacks sufficient details, complement the missing information using your knowledge.

-Format: JSON with 3 keys: “gene symbol”, “functionality”, and “phenotype”.

-Value type: String, concise, using keywords only.

-Total word count of all values: Less than 120 words.

-Restrictions: No adjectives in output values.

-Gene symbol: {gene_name} -Gene summary: {gene_ summary} contrastive learning introduces minimal computational overhead. Moreover, DKAN exhibits strong computational efficiency, particularly in terms of training and inference time, when compared to baseline methods.

In this section, we present additional visualizations of cancer marker gene expression across the three datasets, along with the corresponding PCC values in comparison to SOTA baselines. We select genes that exhibit strong correlations with specific cancer types in their respective datasets. Specifically, ERBB2, GNAS, and HSP90AB1 are selected for the HER2+ and STNET datasets (Figures S2 andS3), while SPARC, TRIM29, and FTL are chosen for the cSCC dataset (Figure S4). These visualizations further illustrate the effectiveness of our model in capturing both the absolute expression levels and expression trends of key marker genes, underscoring DKAN’s superior performance in gene expression prediction.

To address the inherent sparsity in spatial transcriptomics data, we first filtered out genes with low variability, following the criteria established in a previous study (He et al. 2020). Each spot’s gene expression values were then normalized by dividing by the total expression sum, followed by a logarithmic transformation to stabilize variance. To further mitigate experimental noise, we applied a smoothing technique (He et al. 2020)

ies(Yang et al. 2023;Xie et al. 2023;Chung et al. 2024). These include Mean Absolute Error (MAE), Mean Squared Error (MSE), and Pearson Correlation Coefficient (PCC) across: (1) all genes of interest, (2) the top 50 Highly Predictive Genes (HPG), (3) the top 50 Highly Expressed Genes (HEG), and (4) the top 50 Highly Variable Genes (HVG). PCC was computed per gene across all spots within each sample and averaged over all cross-validation folds.

ies(Yang et al. 2023;Xie et al. 2023;Chung et al. 2024

ies

Method FLOPs (G) # Parameter (M) Training Time (h) Inference Time (s)

📸 Image Gallery