Noise-Robust Abstractive Compression in Retrieval-Augmented Language Models


...

📝 Original Info

- Title: Noise-Robust Abstractive Compression in Retrieval-Augmented Language Models

- ArXiv ID: 2512.08943

- Date: 2025-11-19

- Authors: Singon Kim

📝 Abstract

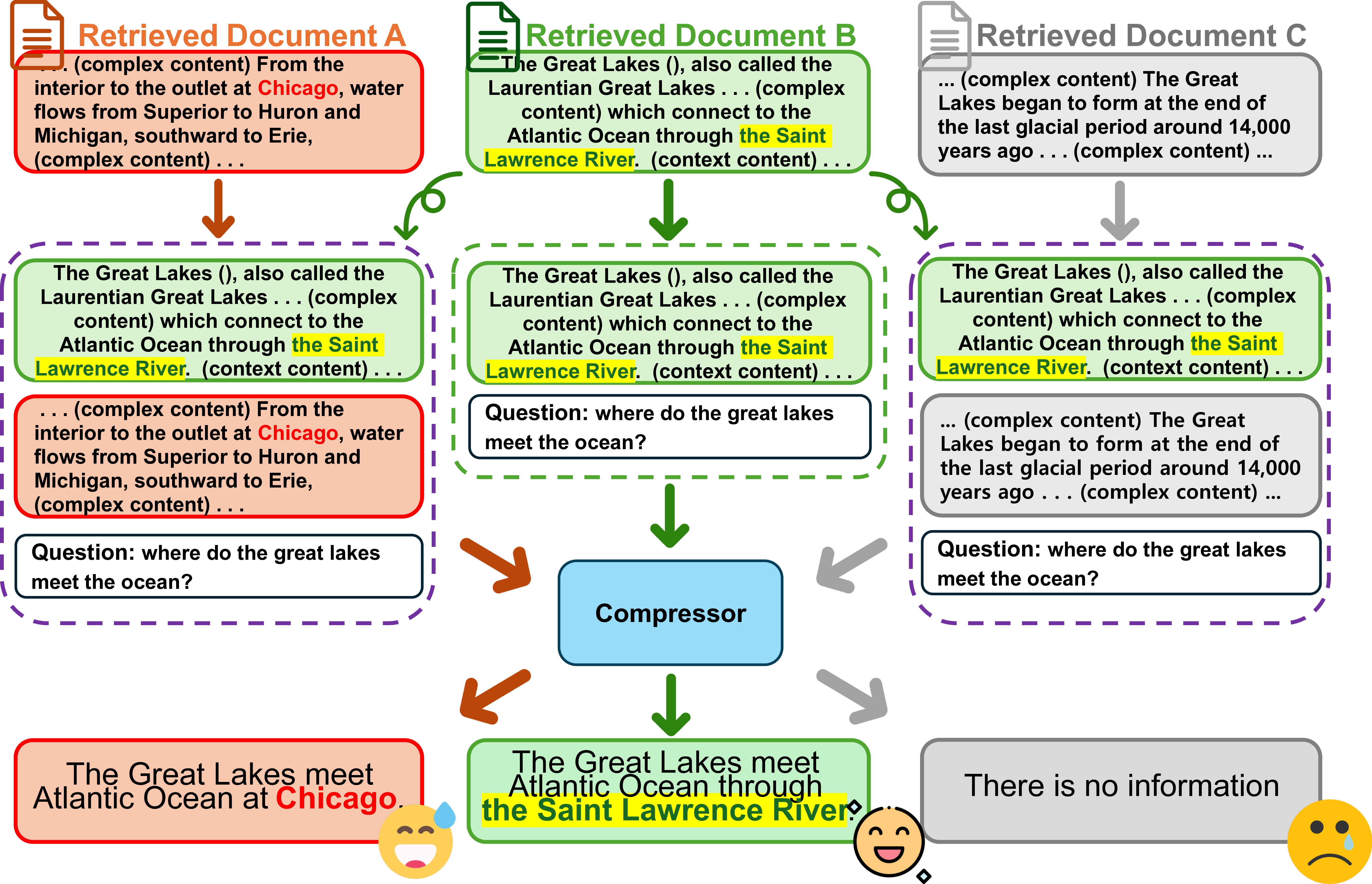

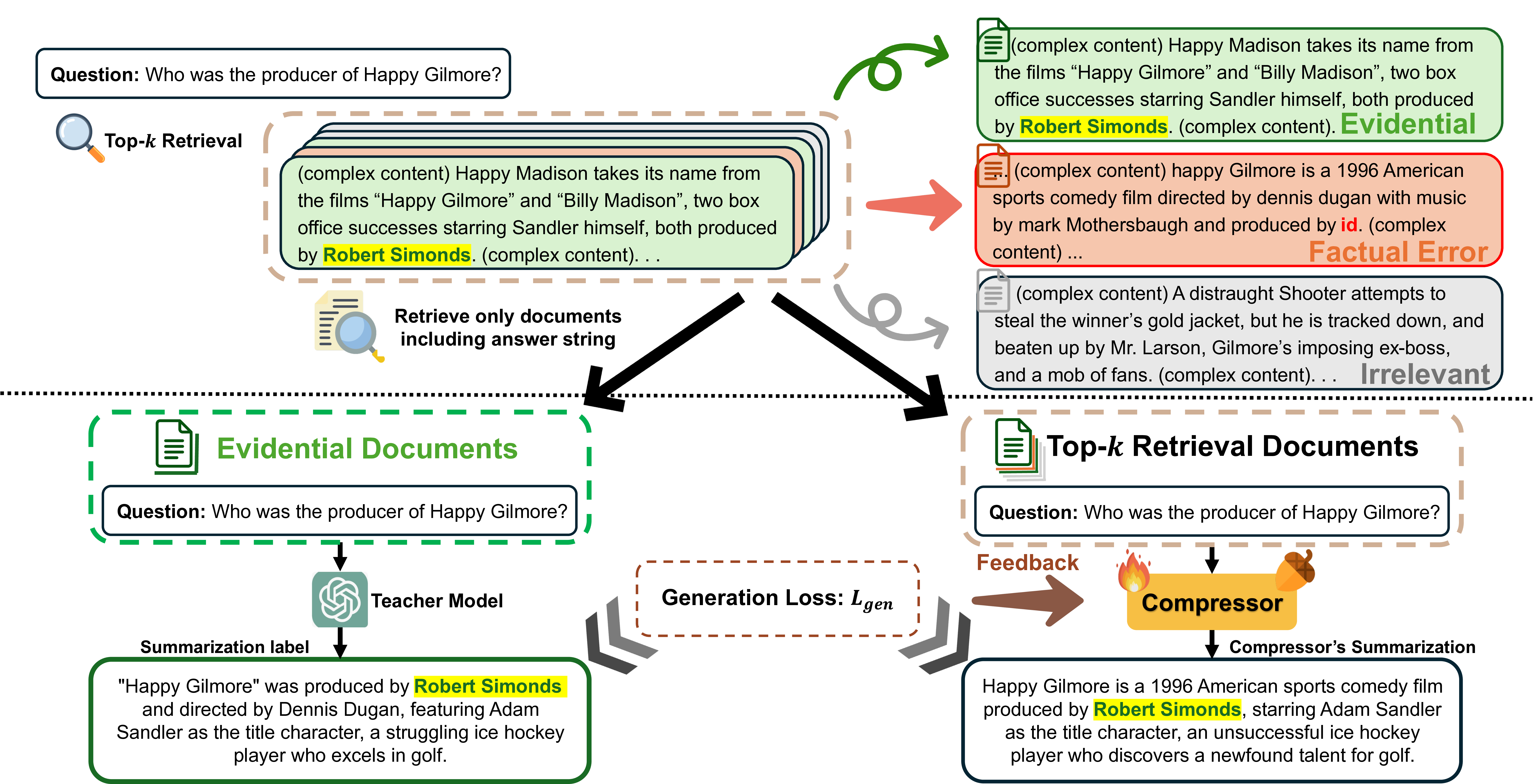

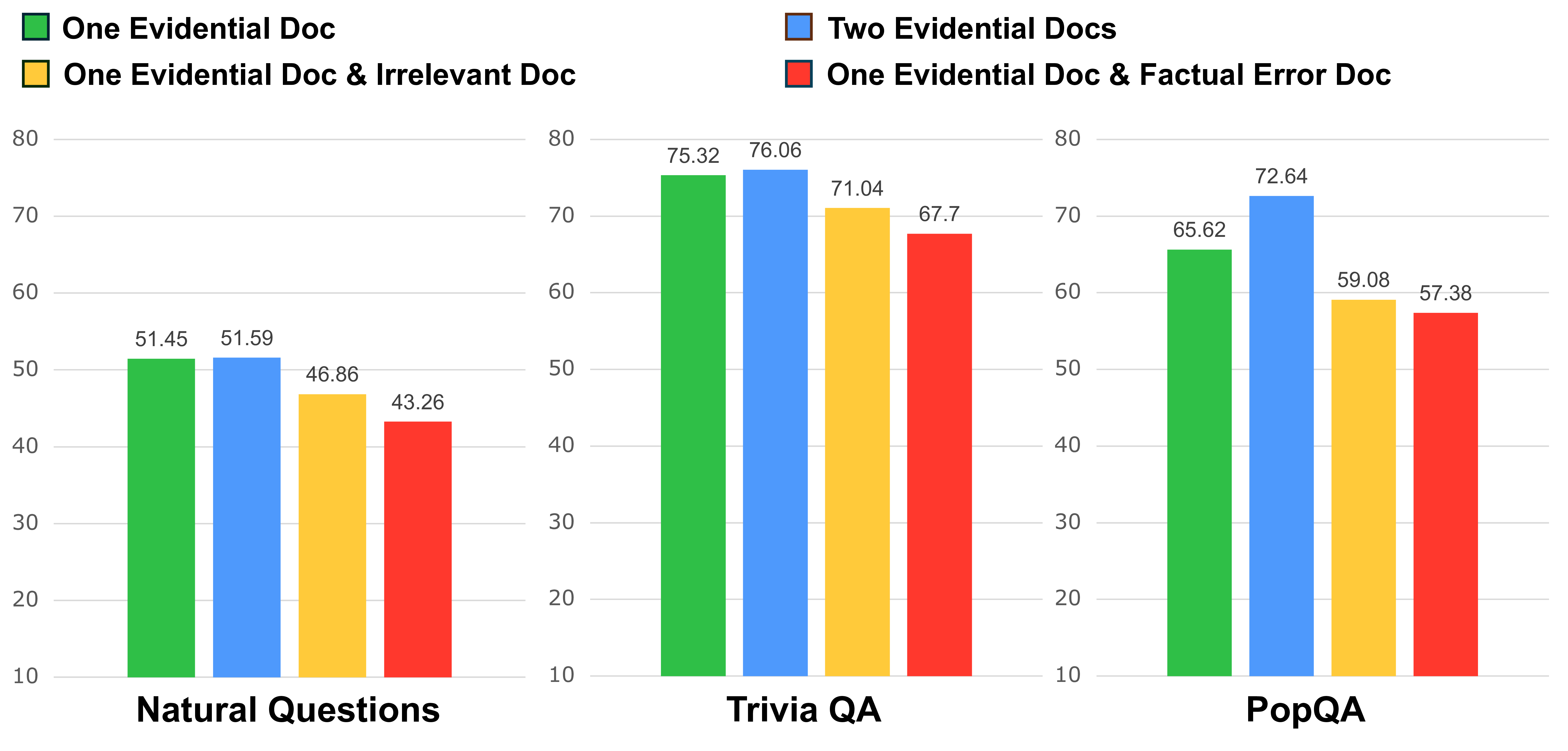

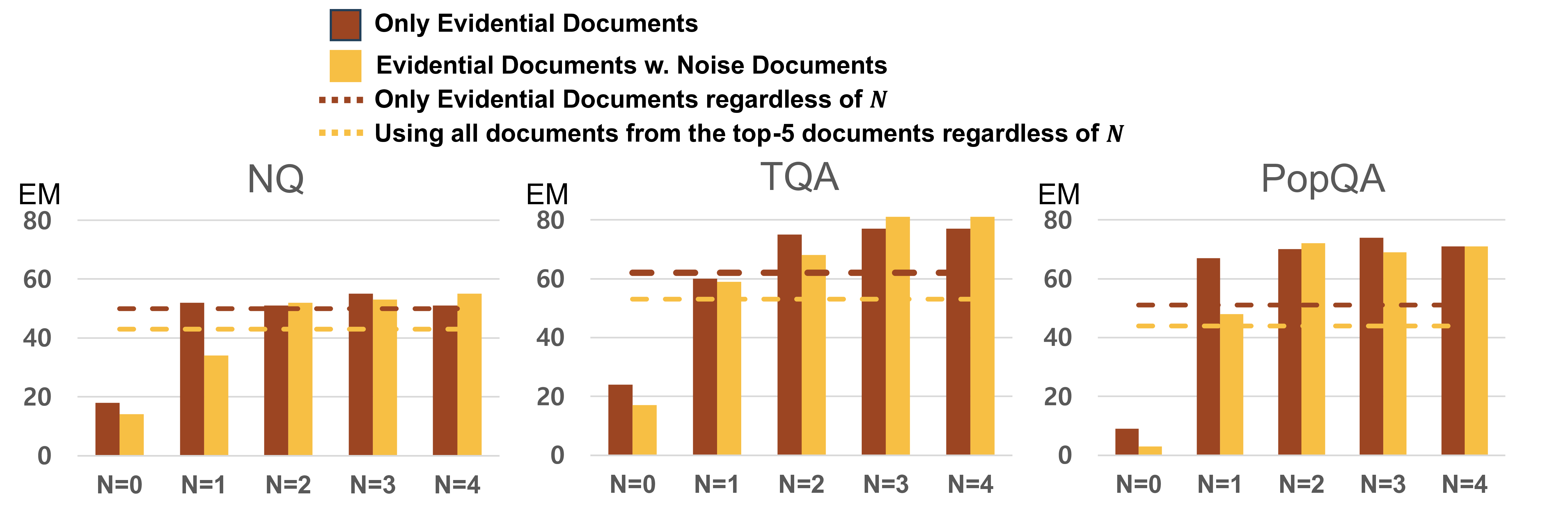

Abstractive compression utilizes smaller language models to condense query-relevant context, reducing computational costs in retrieval-augmented generation (RAG). However, retrieved documents often include information that is either irrelevant to answering the query or misleading because of factually incorrect content, despite having high relevance scores. This noise makes abstractive compressors more likely to omit important information essential for the correct answer, especially in long contexts where attention dispersion occurs. To address this issue, we categorize retrieved documents in a more fine-grained manner and propose Abstractive Compression Robust against Noise (ACoRN), which introduces two novel training steps. First...
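The abstract places a compressor between retrieval and the reader model. The sketch below shows that pipeline shape under stated assumptions. The names `compress_then_read`, `toy_compressor`, and `echo_reader` are hypothetical. `toy_compressor` is only a sentence-level keyword-overlap filter used so the sketch runs. It is extractive, not abstractive. A real abstractive compressor would be a smaller language model that generates the condensed context, and nothing here reproduces ACoRN's training steps.

```python
import re
from typing import Callable, List

# Hypothetical interfaces: a compressor maps (query, documents) to a short
# context string; a reader maps (query, context) to an answer string.
Compressor = Callable[[str, List[str]], str]
Reader = Callable[[str, str], str]

# Tiny illustrative stopword list (an assumption, not from the paper).
STOPWORDS = {"a", "an", "the", "is", "of", "in", "what", "who", "which"}


def compress_then_read(query: str, documents: List[str],
                       compressor: Compressor, reader: Reader) -> str:
    """Condense the retrieved documents first, then let the reader answer
    from the condensed context instead of the full concatenation."""
    context = compressor(query, documents)
    return reader(query, context)


def content_words(text: str) -> set:
    """Lowercased alphabetic tokens minus the stopword list."""
    return set(re.findall(r"[a-z]+", text.lower())) - STOPWORDS


def toy_compressor(query: str, documents: List[str]) -> str:
    """Stand-in only (extractive, NOT abstractive): keep every sentence that
    shares at least one content word with the query."""
    query_words = content_words(query)
    kept = []
    for doc in documents:
        # Split after sentence-ending punctuation followed by whitespace.
        for sentence in re.split(r"(?<=[.!?])\s+", doc.strip()):
            if sentence and query_words & content_words(sentence):
                kept.append(sentence)
    return " ".join(kept)


def echo_reader(query: str, context: str) -> str:
    """Placeholder reader that only reports what it received."""
    return f"Q: {query} | context: {context}"


if __name__ == "__main__":
    docs = [
        "Paris is the capital of France. It hosts the Louvre.",
        "Bananas are rich in potassium.",
    ]
    print(compress_then_read("What is the capital of France?", docs,
                             toy_compressor, echo_reader))
```

Run as a script, this passes only "Paris is the capital of France." to the reader. A sentence such as "The capital of France is Lyon." would also pass this relevance filter. That is the kind of relevant-but-misleading noise the abstract describes, and a compressor needs to be robust to it.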

This content is AI-processed based on open access ArXiv data.