Computer Science / Artificial Intelligence

Computer Science / Multiagent Systems

Computer Science / Robotics

Adversarially Guided Self-Play for Adopting Social Conventions

📝 Original Info

- Title: Adversarially Guided Self-Play for Adopting Social Conventions

- ArXiv ID: 2001.05994

- Date: 2020-10-09

- Authors: Mycal Tucker, Yilun Zhou, Julie Shah

📝 Abstract

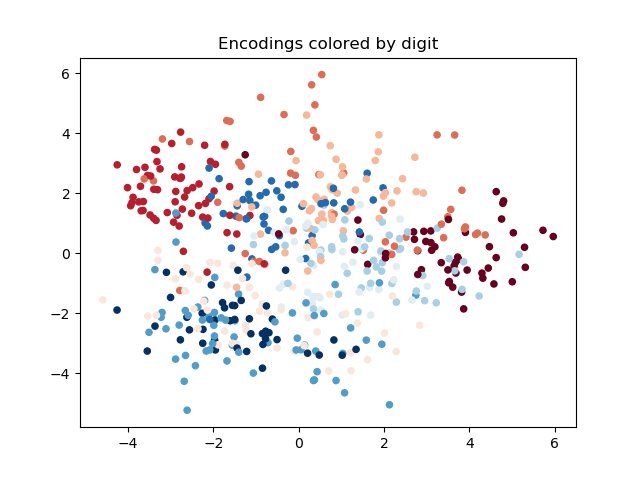

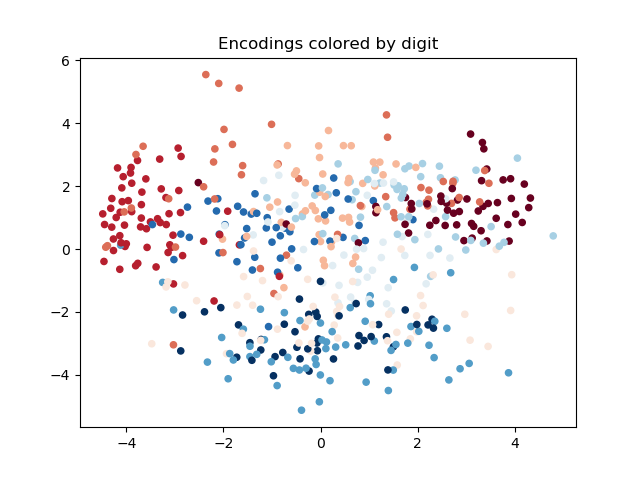

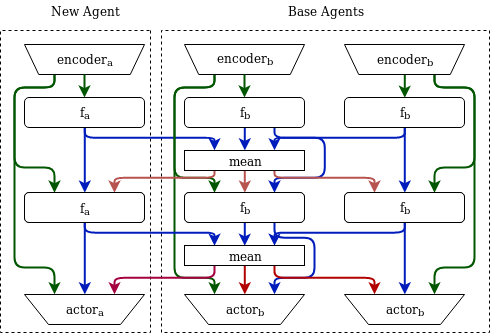

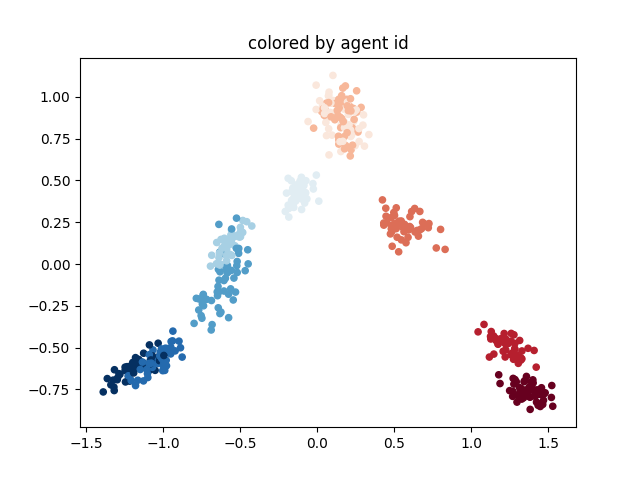

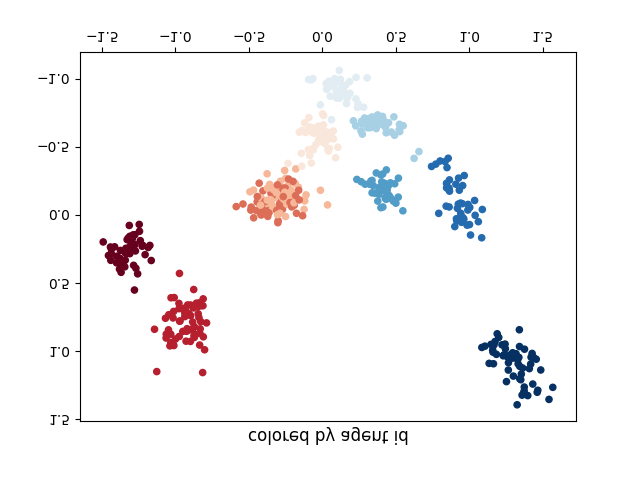

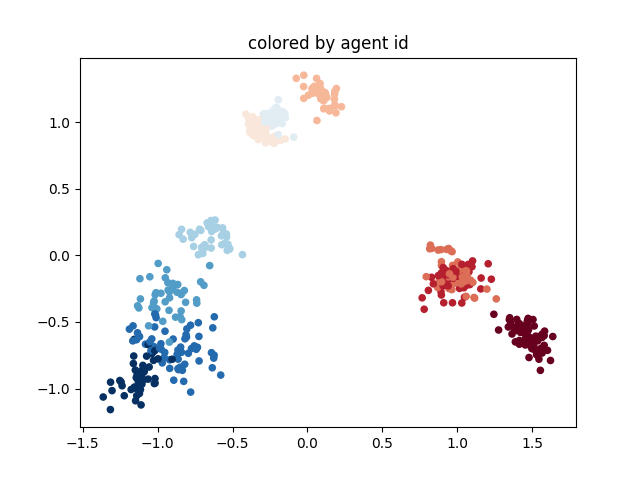

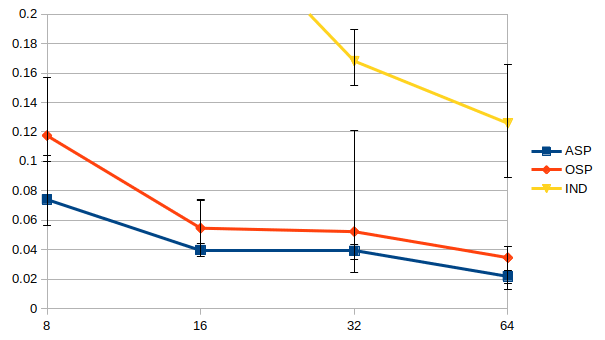

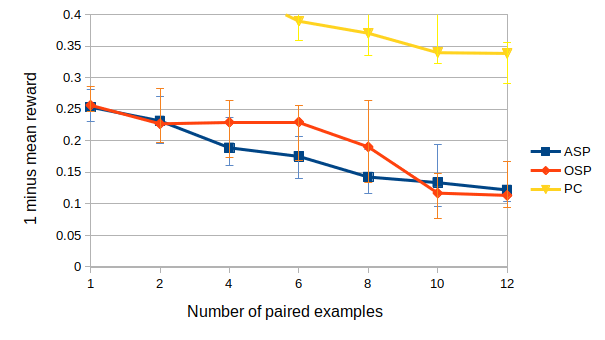

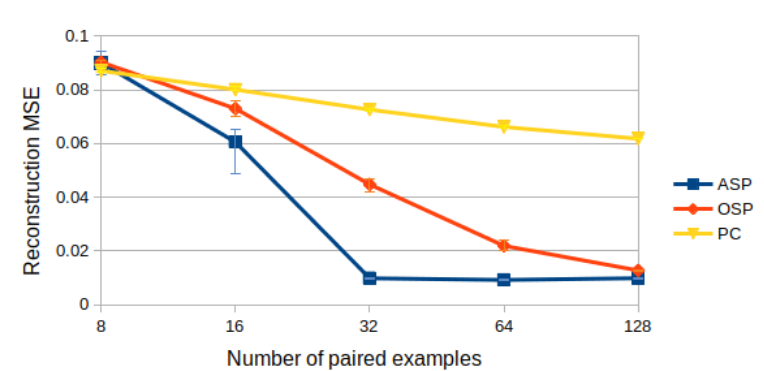

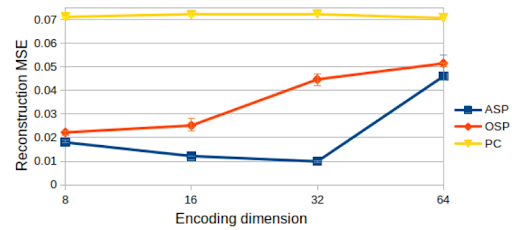

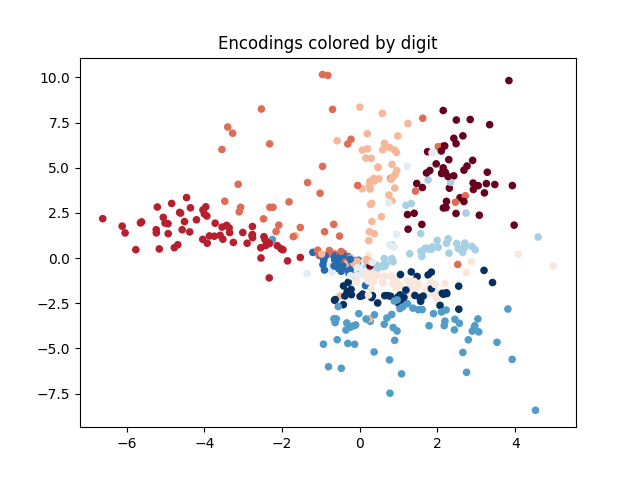

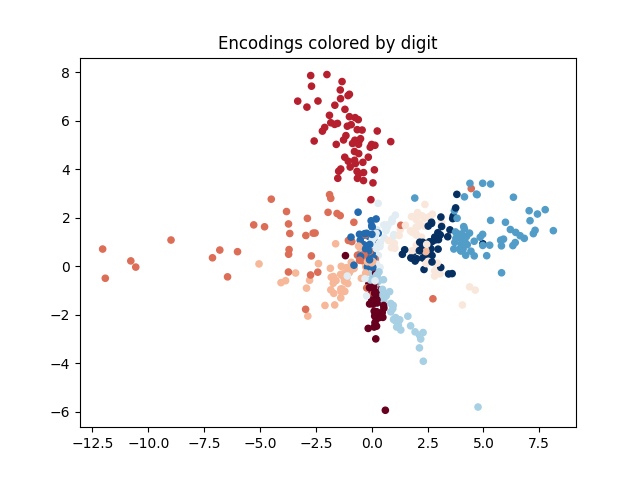

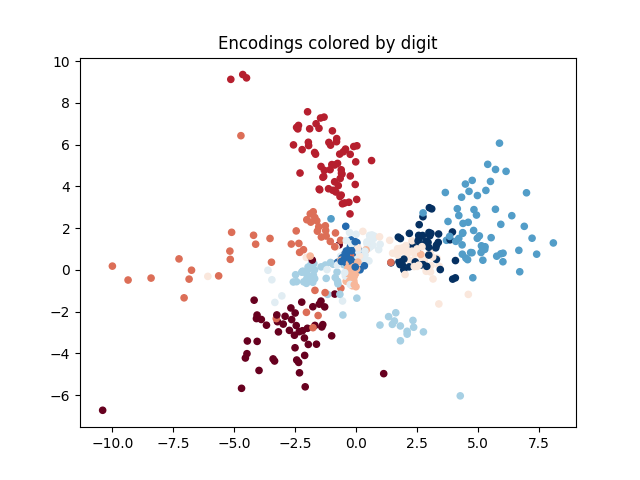

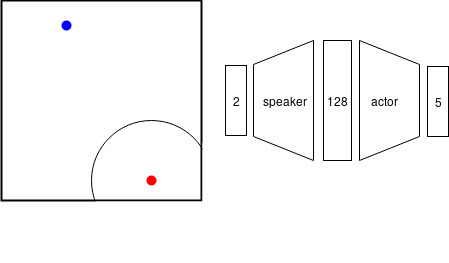

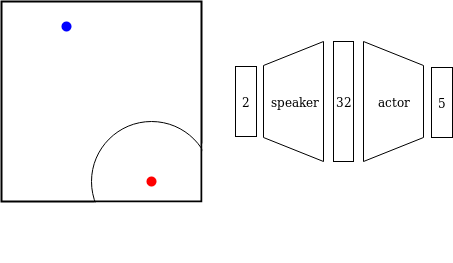

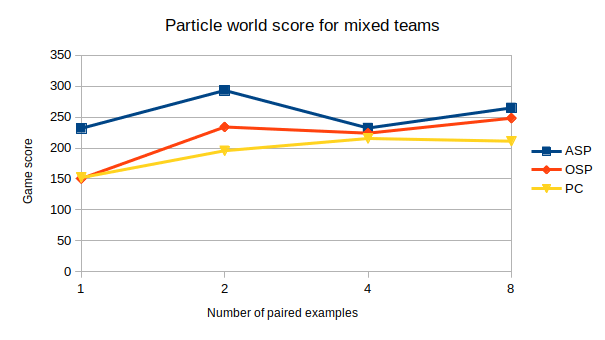

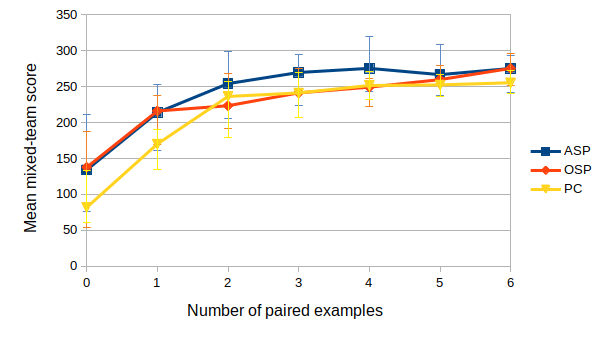

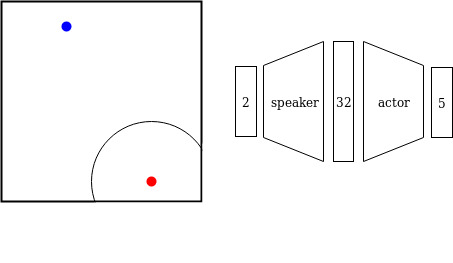

Robotic agents must adopt existing social conventions to be effective teammates. These social conventions, such as driving on the right or left side of the road, are arbitrary choices among optimal policies, but all agents on a successful team must use the same convention. Prior work combined self-play with paired input-output data gathered from existing agents to learn their social convention without interacting with them. We build upon this work by introducing a technique called Adversarial Self-Play (ASP) that uses adversarial training to shape the space of possible learned policies and substantially improves learning efficiency. ASP requires only the addition of unpaired data: a dataset of outputs produced by the social convention without associated inputs. Theoretical analysis reveals how ASP shapes the policy space and the circumstances (when behaviors are clustered or exhibit some other structure) under which it offers the greatest benefits. Empirical results across three domains confirm ASP's advantages: it produces models that more closely match the desired social convention when given as few as two paired datapoints.
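The abstract names three training signals (self-play, paired data, and an adversarial signal from unpaired outputs) but does not state the objective that combines them. As a rough illustration only, the sketch below assumes they are summed with scalar weights, uses mean squared error for the paired term, and uses the standard non-saturating generator loss for the adversarial term. The function names, the weights `w_paired` and `w_adv`, and the choice of loss forms are all hypothetical and are not taken from the paper.

```python
import math


def paired_loss(predictions, targets):
    """Mean squared error against the convention's outputs on paired inputs.

    Assumption: MSE is used purely for illustration.
    """
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)


def adversarial_loss(disc_probs):
    """Non-saturating generator loss: -mean(log D(model_output)).

    disc_probs holds a hypothetical discriminator's probability that each
    model output came from the unpaired dataset of convention outputs.
    """
    eps = 1e-12  # guard against log(0)
    return -sum(math.log(max(d, eps)) for d in disc_probs) / len(disc_probs)


def combined_objective(self_play_loss, predictions, targets, disc_probs,
                       w_paired=1.0, w_adv=1.0):
    """Weighted sum of the three terms (the weighting scheme is an assumption)."""
    return (self_play_loss
            + w_paired * paired_loss(predictions, targets)
            + w_adv * adversarial_loss(disc_probs))


if __name__ == "__main__":
    # Outputs the discriminator finds convincing add no adversarial penalty.
    print(combined_objective(0.5, [1.0, 0.0], [1.0, 0.0], [1.0, 1.0]))
    # Outputs the discriminator rejects are penalized more heavily.
    print(combined_objective(0.5, [1.0, 0.0], [1.0, 0.0], [0.1, 0.1]))
```

In this sketch the adversarial term depends only on the model's outputs, which is why unpaired data suffices for it: the discriminator needs examples of what the convention produces, not the inputs that produced them.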
