Inducing Sparsity and Shrinkage in Models with Time-Varying Parameters

📝 Original Paper Info

- Title: Inducing Sparsity and Shrinkage in Time-Varying Parameter Models

- ArXiv ID: 1905.10787

- Date: 2019-12-18

- Authors: Florian Huber, Gary Koop, Luca Onorante

📝 Abstract

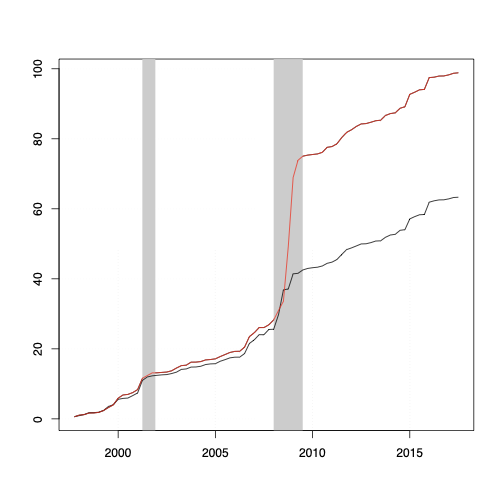

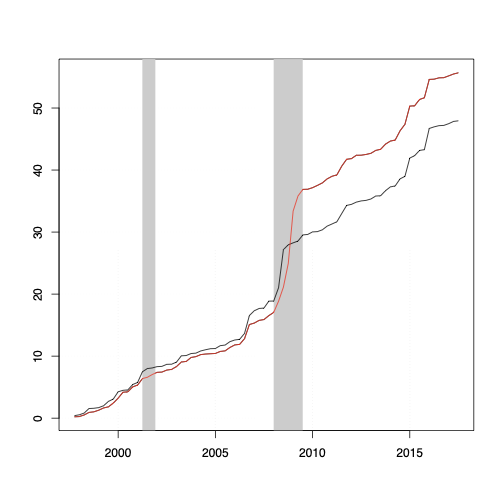

Time-varying parameter (TVP) models have the potential to be over-parameterized, particularly when the number of variables in the model is large. Global-local priors are increasingly used to induce shrinkage in such models. But the estimates produced by these priors can still have appreciable uncertainty. Sparsification has the potential to reduce this uncertainty and improve forecasts. In this paper, we develop computationally simple methods which both shrink and sparsify TVP models. In a simulated data exercise we show the benefits of our shrink-then-sparsify approach in a variety of sparse and dense TVP regressions. In a macroeconomic forecasting exercise, we find our approach to substantially improve forecast performance relative to shrinkage alone.

💡 Summary & Analysis

This paper addresses the issue of over-parameterization in time-varying parameter (TVP) models, a common problem when the number of variables in the model is large. Over-parameterization can lead to complex models with significant estimation uncertainty. While global-local priors are commonly used to induce shrinkage and reduce complexity, they still leave considerable uncertainty in estimates. The authors propose an approach that combines both shrinkage and sparsification to improve model performance and prediction accuracy.

The core of the paper involves a method for applying shrink-then-sparsify techniques to TVP models in a computationally efficient manner. This method simplifies the model by removing unnecessary variables, thereby reducing estimation uncertainty and enhancing predictive power. The effectiveness of this approach is demonstrated through both simulated data exercises and macroeconomic forecasting experiments.
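The shrink-then-sparsify idea can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration and is not taken from the paper: it uses a static regression instead of a TVP model, a ridge estimate as a stand-in for a global-local prior, and a soft-threshold rule with penalty `1 / beta_hat**2` as one possible sparsification step. The function names `ridge_shrink`, `sparsify`, and `simulate` are hypothetical.

```python
import numpy as np


def ridge_shrink(X, y, lam=1.0):
    """Shrinkage step: ridge estimate (posterior mean under a Gaussian prior).

    Assumption: this stands in for a global-local shrinkage prior.
    """
    p = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(p), X.T @ y)


def sparsify(beta_hat, X):
    """Sparsification step: soft-threshold each shrunk coefficient.

    Assumption: the penalty 1 / beta_hat**2 is an illustrative choice;
    small coefficients receive a large penalty and are set exactly to zero.
    """
    col_norm2 = np.sum(X**2, axis=0)                 # squared norm of each column
    penalty = 1.0 / np.maximum(beta_hat**2, 1e-12)   # guard against division by zero
    magnitude = np.maximum(np.abs(beta_hat) * col_norm2 - penalty, 0.0)
    return np.sign(beta_hat) * magnitude / col_norm2


def simulate(n=1000, p=10, seed=0):
    """Simulated sparse regression: only the first two coefficients are non-zero."""
    rng = np.random.default_rng(seed)
    X = rng.standard_normal((n, p))
    beta = np.zeros(p)
    beta[:2] = [2.0, -1.5]
    y = X @ beta + 0.5 * rng.standard_normal(n)
    return X, y, beta


X, y, beta_true = simulate()
shrunk = ridge_shrink(X, y)    # small but non-zero everywhere
sparse = sparsify(shrunk, X)   # exact zeros for negligible coefficients
print(np.round(shrunk, 3))
print(np.round(sparse, 3))
```

In this sketch the shrinkage step pulls the irrelevant coefficients close to zero but never exactly to zero, while the thresholding step removes them entirely and leaves the large coefficients almost unchanged.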

The results come from two exercises. In simulated data, the shrink-then-sparsify approach shows benefits across a variety of sparse and dense TVP regressions. In the macroeconomic forecasting exercise, it substantially improves forecast performance relative to shrinkage alone.

The approach is relevant to fields that depend on accurate economic predictions, such as financial analysis and macroeconomic forecasting, where sparsifying an already-shrunk model may yield simpler models and more reliable forecasts.

📄 Full Paper Content (ArXiv Source)

📊 Paper Figures