Computer Science / Emerging Technologies

Computer Science / Hardware Architecture

Computer Science / Neural Computing

Shenjing: A low power reconfigurable neuromorphic accelerator with partial-sum and spike networks-on-chip

📝 Original Info

- Title: Shenjing: A low power reconfigurable neuromorphic accelerator with partial-sum and spike networks-on-chip

- ArXiv ID: 1911.10741

- Date: 2019-12-02

- Authors: Bo Wang, Jun Zhou, Weng-Fai Wong, and Li-Shiuan Peh

📝 Abstract

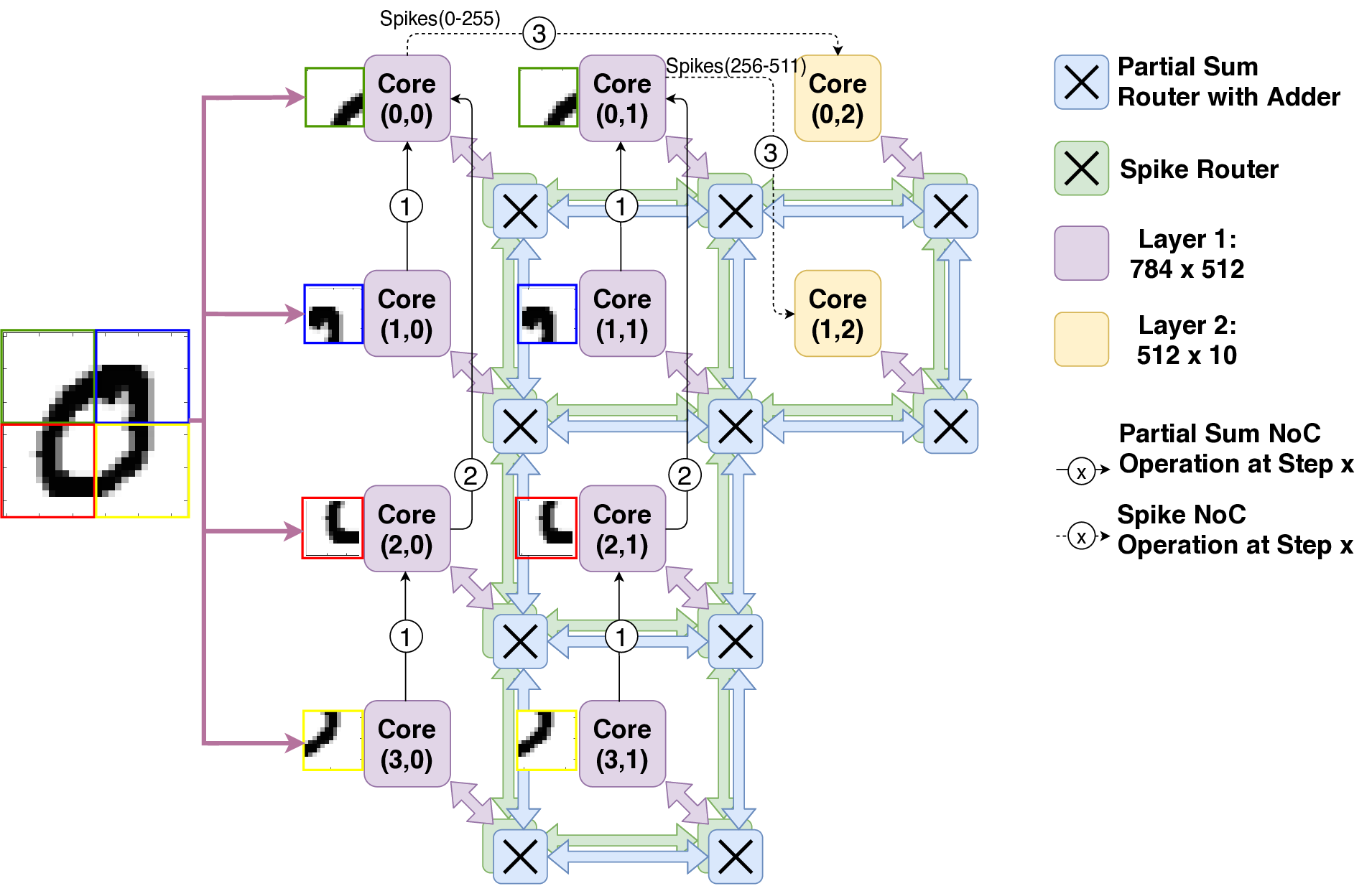

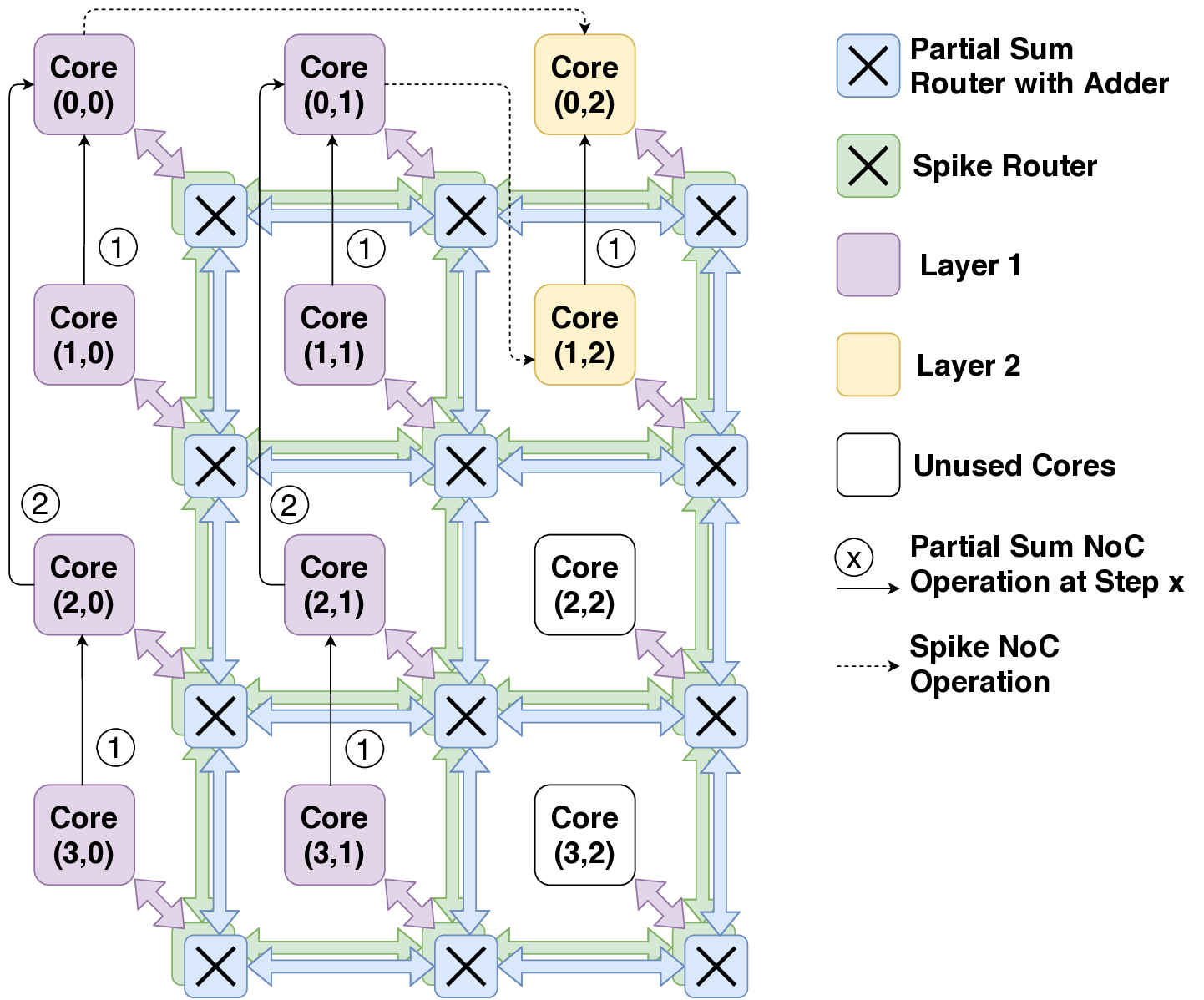

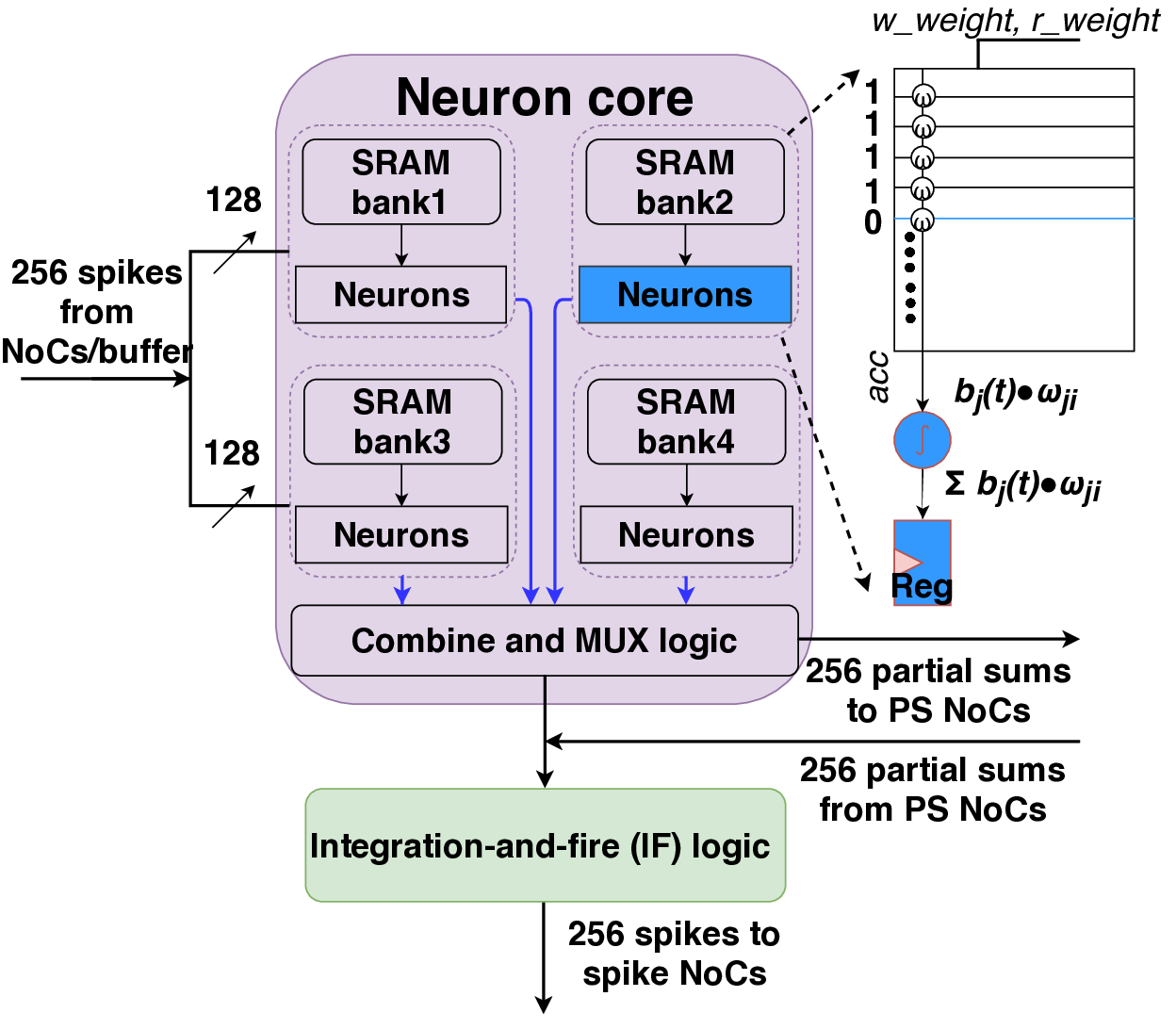

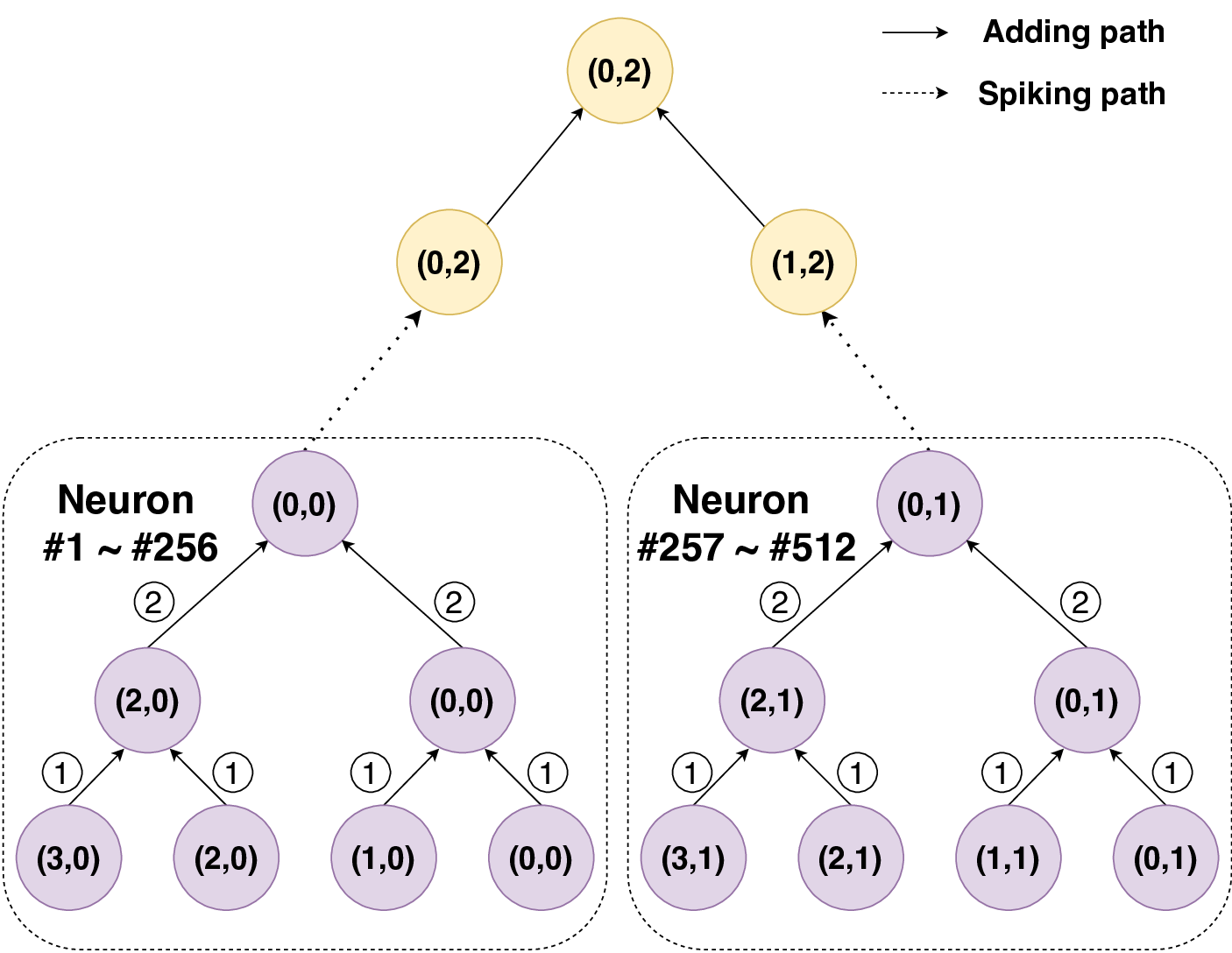

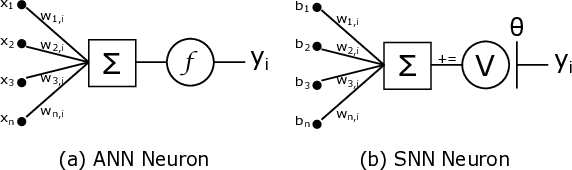

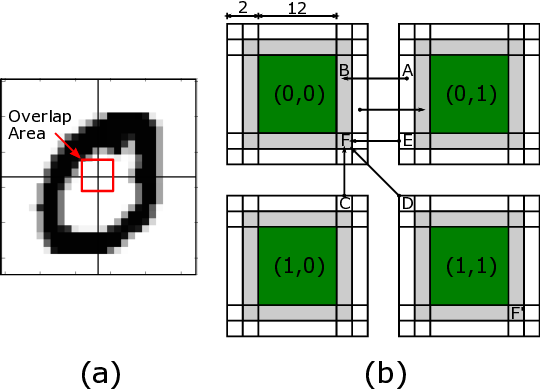

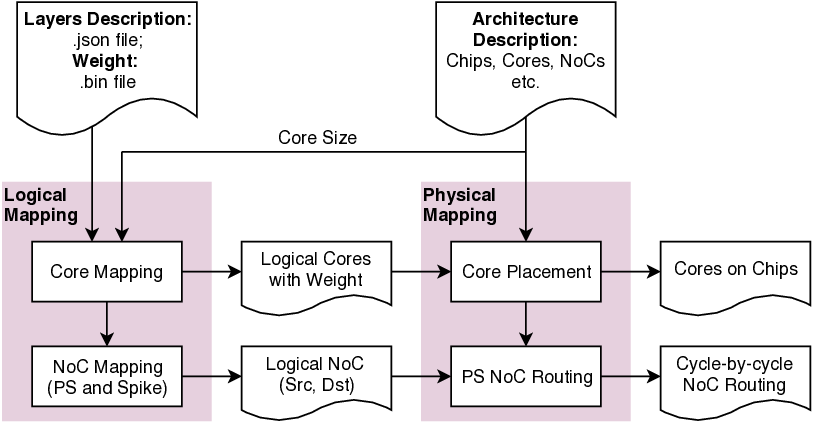

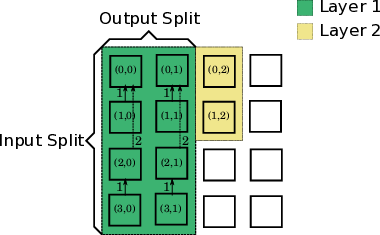

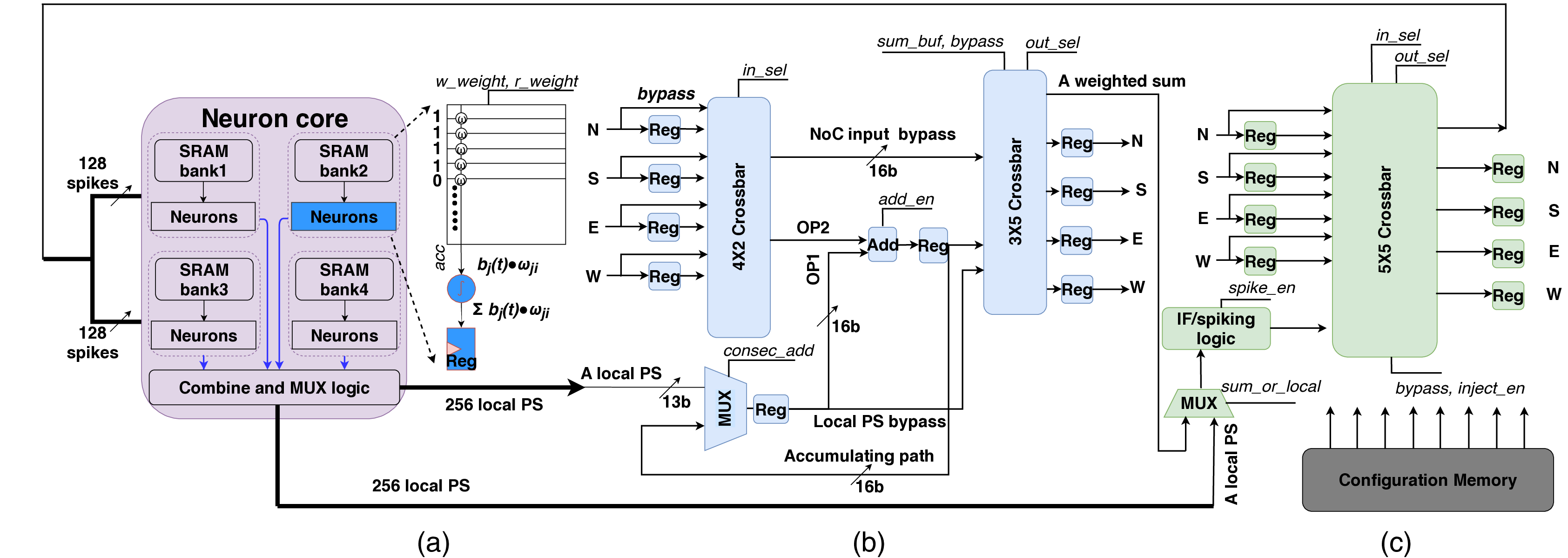

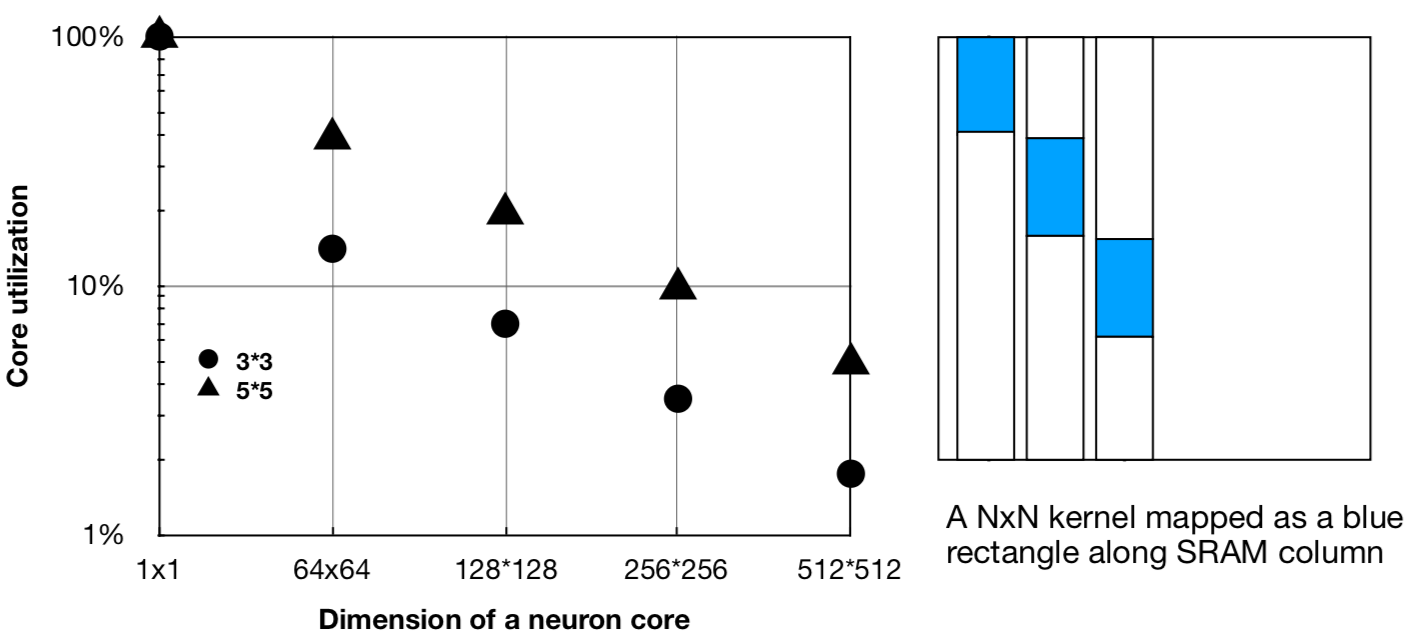

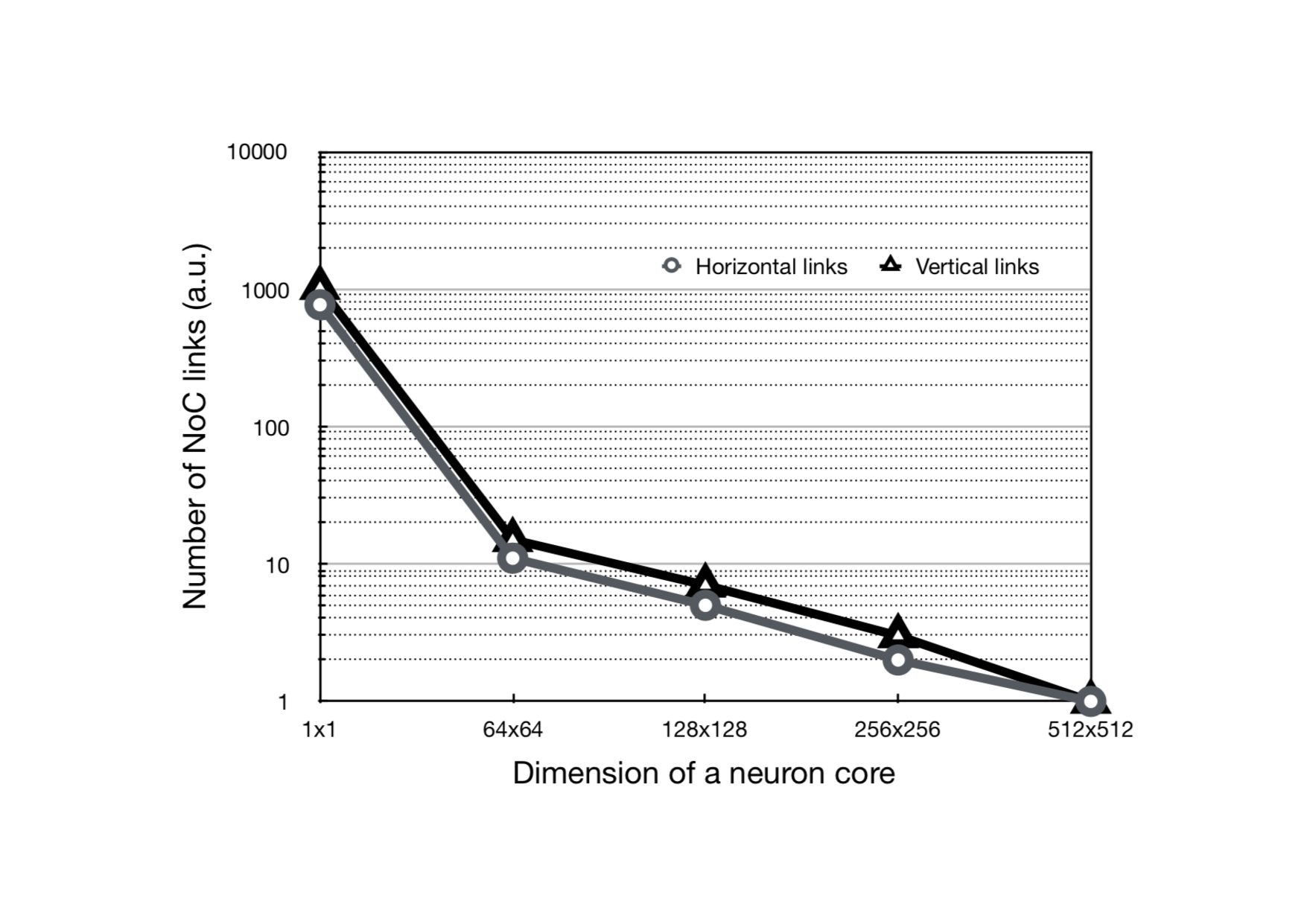

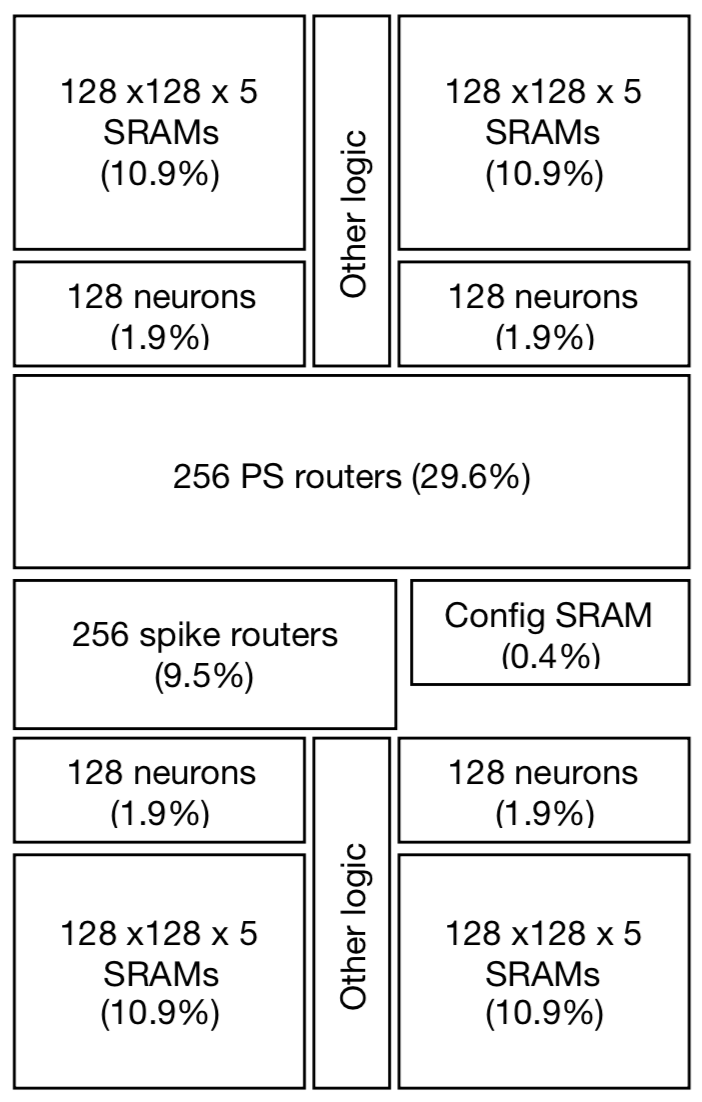

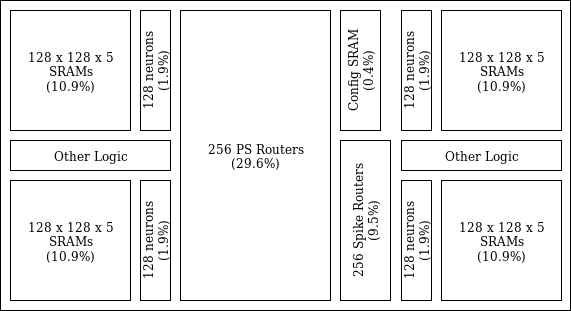

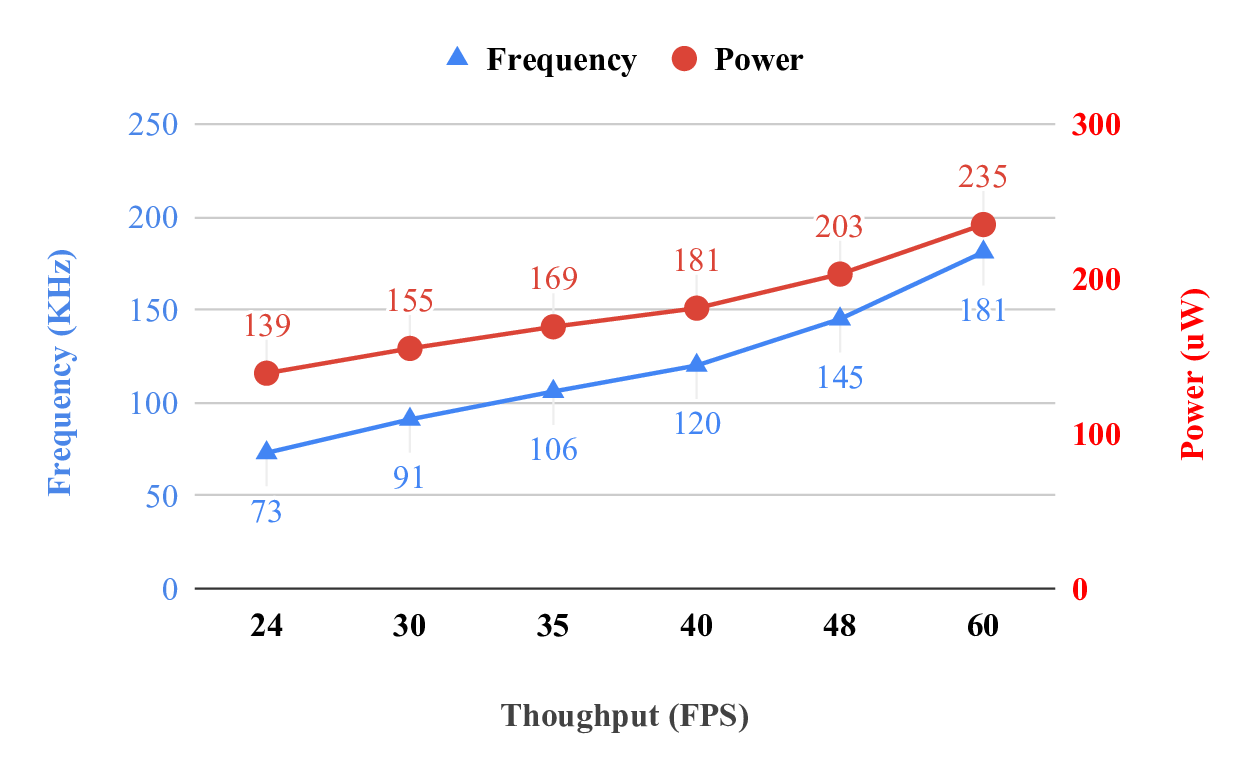

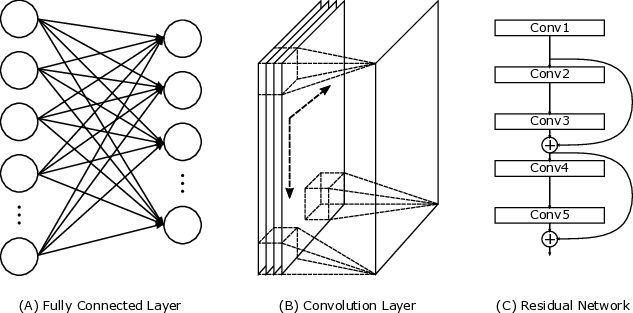

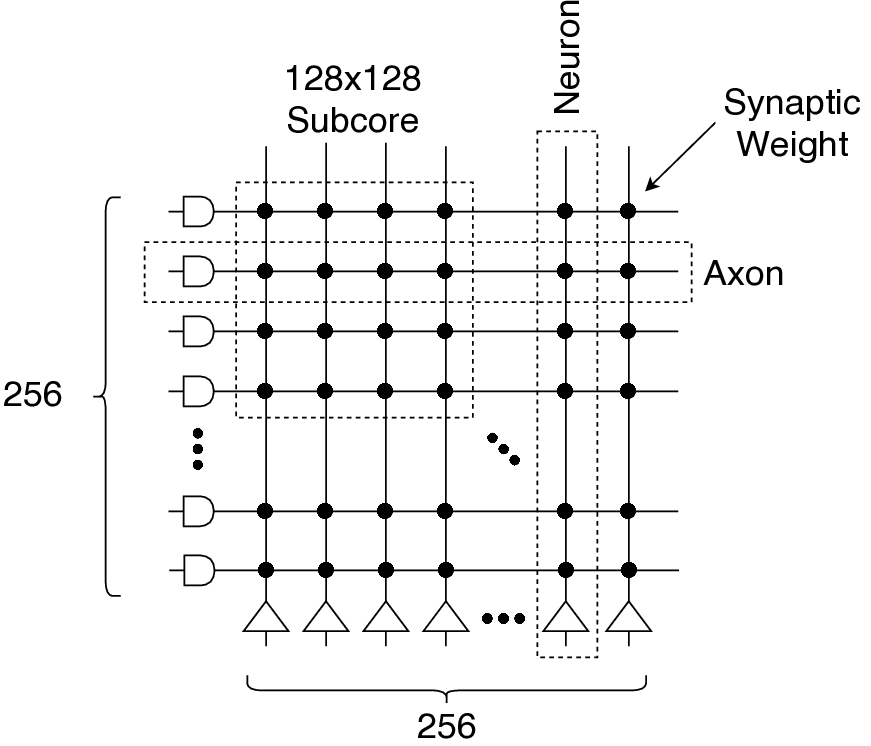

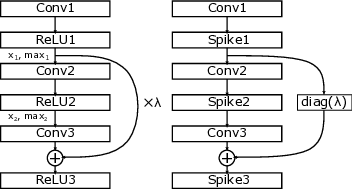

The next wave of on-device AI will likely require energy-efficient deep neural networks. Brain-inspired spiking neural networks (SNNs) have been identified as a promising candidate. Doing away with the need for multipliers significantly reduces energy consumption. For on-device applications, communication, in addition to computation, also costs a significant amount of energy and time. In this paper, we propose Shenjing, a configurable SNN architecture that fully exposes all on-chip communications to software, enabling software mapping of SNN models with high accuracy at low power. Unlike prior SNN architectures such as TrueNorth, Shenjing does not require any model modification or retraining for the mapping. We show that conventional artificial neural networks (ANNs) such as multilayer perceptrons, convolutional neural networks, and the latest residual neural networks can be mapped successfully onto Shenjing, realizing ANNs with the energy efficiency of SNNs. For the MNIST inference problem using a multilayer perceptron, we were able to achieve an accuracy of 96% while consuming just 1.26 mW using 10 Shenjing cores.
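To illustrate why binary spikes remove the need for multipliers, and why partial sums from separate cores can simply be added, here is a minimal Python sketch. It is not Shenjing's actual design: the weights, the threshold, the two-way split across hypothetical "cores", and the reset-by-subtraction rule are all assumptions chosen for illustration.

```python
# Illustrative sketch only: the weights, threshold, core split, and reset rule
# are assumptions and are not taken from the Shenjing paper.

def accumulate(weights, spikes):
    """Sum the weight rows of the inputs that spiked, using additions only."""
    n_out = len(weights[0])
    total = [0] * n_out
    for row, spike in zip(weights, spikes):
        if spike:  # a spike is 0 or 1, so a row is either added or skipped
            for j in range(n_out):
                total[j] += row[j]
    return total


def integrate_and_fire(potential, current, threshold):
    """Integrate input current; fire and subtract the threshold on crossing."""
    new_potential, out_spikes = [], []
    for v, i in zip(potential, current):
        v += i
        if v >= threshold:
            out_spikes.append(1)
            new_potential.append(v - threshold)  # assumed reset-by-subtraction
        else:
            out_spikes.append(0)
            new_potential.append(v)
    return new_potential, out_spikes


# Four input axons, two output neurons (hypothetical values).
weights = [[2, -1], [1, 3], [-2, 1], [4, 0]]
spikes = [1, 0, 1, 1]

# One core holding every input.
full = accumulate(weights, spikes)

# The same layer split over two hypothetical cores; partial sums are added.
part_a = accumulate(weights[:2], spikes[:2])
part_b = accumulate(weights[2:], spikes[2:])
combined = [a + b for a, b in zip(part_a, part_b)]

potential, out_spikes = integrate_and_fire([0, 0], combined, threshold=3)
print(full, combined, potential, out_spikes)
```

Splitting the inputs and adding the partial sums gives the same result as the single-core computation. This is what allows a layer that is too large for one core to be spread over several, at the cost of moving the partial sums between cores.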
