An Introduction to Decentralized Stochastic Optimization with Gradient Tracking

📝 Original Paper Info

- Title: An introduction to decentralized stochastic optimization with gradient tracking

- ArXiv ID: 1907.09648

- Date: 2019-11-14

- Authors: Ran Xin and Soummya Kar and Usman A. Khan

📝 Abstract

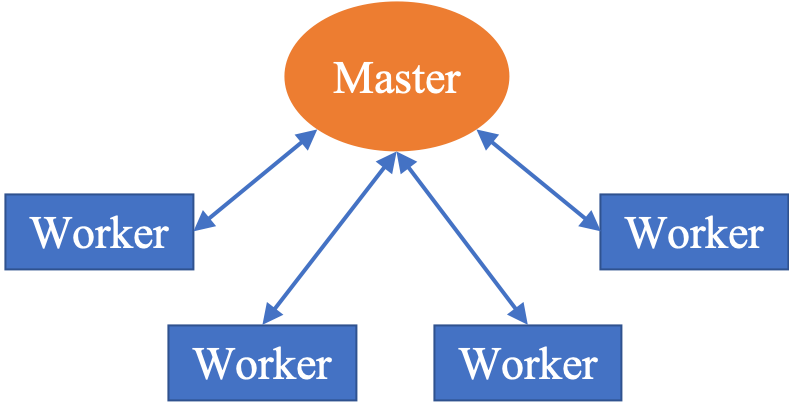

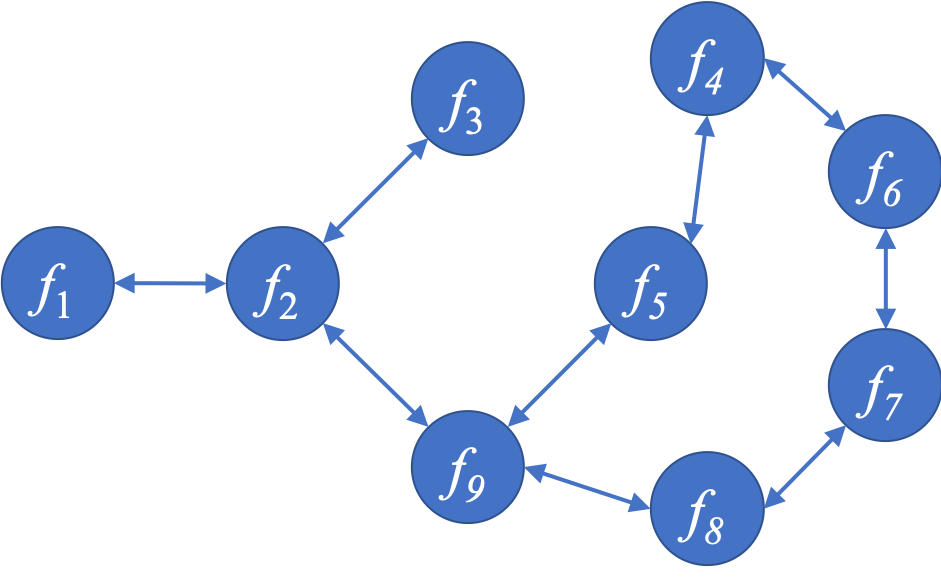

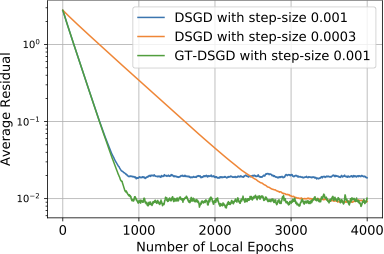

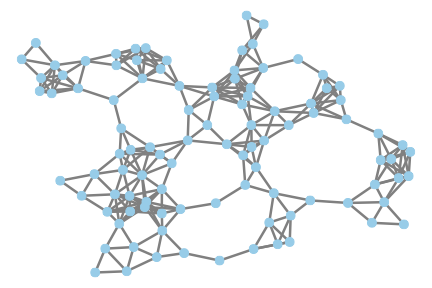

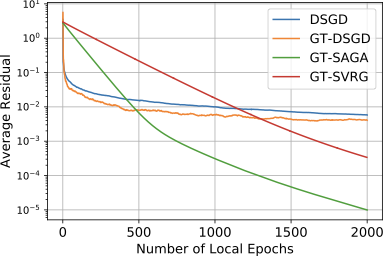

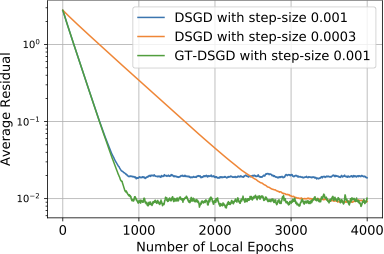

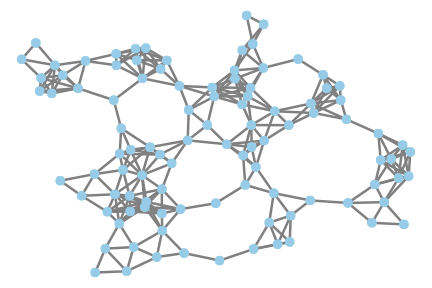

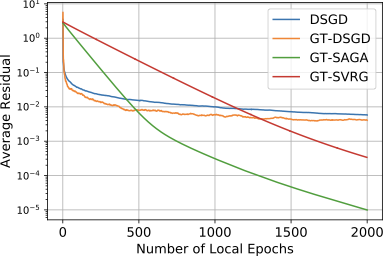

Decentralized solutions to finite-sum minimization are of significant importance in many signal processing, control, and machine learning applications. In such settings, the data is distributed over a network of arbitrarily-connected nodes and raw data sharing is prohibitive often due to communication or privacy constraints. In this article, we review decentralized stochastic first-order optimization methods and illustrate some recent improvements based on gradient tracking and variance reduction, focusing particularly on smooth and strongly-convex objective functions. We provide intuitive illustrations of the main technical ideas as well as applications of the algorithms in the context of decentralized training of machine learning models.

💡 Summary & Analysis

**Summary**: This research paper reviews decentralized stochastic optimization methods, focusing on their application in signal processing, control systems, and machine learning. It addresses scenarios where data is distributed across network nodes with arbitrary connections, making raw data sharing impractical due to communication or privacy constraints.

Problem Statement: Traditional centralized learning requires all data to be aggregated at a single point for processing. However, practical issues such as high communication costs and privacy concerns often make it infeasible to share raw data among different entities.

Solution (Core Technology): The paper reviews decentralized stochastic first-order methods and illustrates recent improvements based on gradient tracking and variance reduction. With gradient tracking, each node computes gradients from its local data and maintains a running estimate of the global gradient by exchanging information with its neighbors. Variance reduction lowers the noise that stochastic gradient sampling introduces, so learning stays efficient even in a distributed setting.
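The gradient-tracking idea can be sketched on a toy problem. The following is a minimal illustration under stated assumptions, not the paper's exact algorithm: the scalar quadratic local objectives, the four-node ring, the mixing matrix `W`, the step size, and the function name `gradient_tracking` are all hypothetical choices made for this example. It uses one common form of the update, and it omits variance reduction (the optional `noise_std` parameter only mimics stochastic gradients).

```python
import numpy as np

def gradient_tracking(a, c, W, alpha=0.02, iters=3000, noise_std=0.0, seed=0):
    """Toy gradient tracking where node i holds f_i(x) = 0.5 * a[i] * (x - c[i])**2.

    W is assumed to be a doubly stochastic mixing matrix matching the network.
    """
    rng = np.random.default_rng(seed)

    def local_grads(x):
        # Node i evaluates only its own gradient at its own iterate x[i].
        # Optional Gaussian noise stands in for stochastic gradient sampling.
        return a * (x - c) + noise_std * rng.standard_normal(x.shape)

    x = np.zeros(len(a))   # one scalar iterate per node
    g = local_grads(x)
    y = g.copy()           # each tracker starts at the node's local gradient
    for _ in range(iters):
        x_next = W @ x - alpha * y   # average with neighbors, step along tracker
        g_next = local_grads(x_next)
        y = W @ y + g_next - g       # average trackers, add local gradient change
        x, g = x_next, g_next
    return x

# Hypothetical data: four nodes on a ring, each with a different local minimizer.
a = np.array([1.0, 2.0, 3.0, 4.0])
c = np.array([1.0, -1.0, 2.0, 0.0])
W = np.array([
    [0.50, 0.25, 0.00, 0.25],
    [0.25, 0.50, 0.25, 0.00],
    [0.00, 0.25, 0.50, 0.25],
    [0.25, 0.00, 0.25, 0.50],
])

x_final = gradient_tracking(a, c, W)
x_star = np.sum(a * c) / np.sum(a)   # minimizer of the sum of all f_i
print(x_final, x_star)
```

Because `W` is doubly stochastic, the average of the trackers `y` always equals the average of the most recent local gradients, which is what lets every node step along an estimate of the global gradient while only sharing `x` and `y` with its neighbors. With `noise_std` set above zero and a constant step size, the iterates would be expected to hover near the minimizer rather than converge to it exactly.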

Key Achievements: The authors illustrate how gradient tracking and variance reduction improve decentralized stochastic first-order methods for smooth and strongly convex problems, and they show applications to decentralized training of machine learning models. In these methods, nodes work from their local data and exchange model and gradient estimates with neighbors instead of sharing raw data.

Significance & Applications: By providing robust methods for distributed machine learning, this research opens up new possibilities for secure and efficient processing across various domains where raw data sharing is constrained by practical limitations.

📄 Full Paper Content (ArXiv Source)

📊 Paper Figures