EntropyDB A Probabilistic Method for Approximate Query Processing

📝 Original Paper Info

- Title: EntropyDB A Probabilistic Approach to Approximate Query Processing- ArXiv ID: 1911.04948

- Date: 2019-11-13

- Authors: Laurel Orr, Magdalena Balazinska, and Dan Suciu

📝 Abstract

We present EntropyDB, an interactive data exploration system that uses a probabilistic approach to generate a small, query-able summary of a dataset. Departing from traditional summarization techniques, we use the Principle of Maximum Entropy to generate a probabilistic representation of the data that can be used to give approximate query answers. We develop the theoretical framework and formulation of our probabilistic representation and show how to use it to answer queries. We then present solving techniques, give two critical optimizations to improve preprocessing time and query execution time, and explore methods to reduce query error. Lastly, we experimentally evaluate our work using a 5 GB dataset of flights within the United States and a 210 GB dataset from an astronomy particle simulation. While our current work only supports linear queries, we show that our technique can successfully answer queries faster than sampling while introducing, on average, no more error than sampling and can better distinguish between rare and nonexistent values. We also discuss extensions that can allow for data updates and linear queries over joins.💡 Summary & Analysis

This paper introduces EntropyDB, a system that uses the Maximum Entropy Principle to generate probabilistic models for datasets. The goal is to provide approximate query answers efficiently and with minimal resource usage. Here's an in-depth look at the key aspects:Core Summary: The paper presents EntropyDB, which leverages the Maximum Entropy Principle to create probabilistic representations of datasets for providing approximate query responses.

Problem Statement: Processing exact queries on large datasets is time-consuming and resource-intensive, especially when real-time analysis or rapid exploration of massive data volumes is required.

Solution (Core Technology): EntropyDB employs the Maximum Entropy Principle to generate a probabilistic model of the dataset. This model assigns probabilities to each possible instance in the dataset, enabling approximate query responses:

- Probabilistic Representation: The system learns probability distributions related to expected values of data points within the dataset.

- Query Processing: Using this probabilistic model, it processes queries quickly and returns approximate results.

Key Results: EntropyDB demonstrated high efficiency on real datasets, significantly reducing query times for large-scale data while maintaining accuracy with minimal resource usage.

Significance and Applications: This approach is valuable in various fields requiring real-time analysis or rapid exploration of massive datasets. For example, it can be useful in finance for real-time trading data queries and in healthcare for quick patient data analysis.

📄 Full Paper Content (ArXiv Source)

For example, consider a data scientist who analyzes a dataset of flights in the United States for the month of December 2013. All she knows is that the dataset includes all flights within the 50 possible states and that there are 500,000 flights in total. She wants to know how many of those flights are from CA to NY. Without any extra information, our approach would assume all flights are equally likely and estimate that there are $`500,000/50^2 = 200`$ flights.

Now suppose the data scientist finds out that flights leaving CA only go to NY, FL, or WA. This changes the estimate because instead of there being $`500,000/50 = 10,000`$ flights leaving CA and uniformly going to all 50 states, those flights are only going to 3 states. Therefore, the estimate becomes $`10,000/3 = 3,333`$ flights.

This example demonstrates how our summarization technique takes into account these existing statistics over flights going to and from specific states to answer queries, and the rest of this section covers its theoretical foundation.

Possible World Semantics

To model a probabilistic database, we use a slotted possible world semantics where rows have an inherent unique identifier, meaning the order of the tuples matters. Our set of possible worlds is generated from the active domain and size of each relation. Each database instance is one possible world with an associated probability such that the probabilities of all possible worlds sum to one.

In contrast to typical probabilistic databases where a relation is tuple-independent and the probability of a relation is calculated from the product of the probability of each tuple, we calculate a relation’s probability from a formula derived from the MaxEnt principle and a set of constraints on the overall distribution1. This approach captures the idea that the distribution should be uniform except where otherwise specified by the given constraints.

The Principle of Maximum Entropy

The Principle of Maximum Entropy (MaxEnt) states that subject to prior data, the probability distribution which best represents the state of knowledge is the one that has the largest entropy. This means given our set of possible worlds, $`PWD`$, the probability distribution $`\Pr(I)`$ is one that agrees with the prior information on the data and maximizes

\begin{equation*}

-\sum_{I \in PWD}\Pr(I)\log(\Pr(I))

\end{equation*}where $`I`$ is a database instance, also called possible world. The above probability must be normalized, $`\sum_I \Pr(I)=1`$, and must satisfy the prior information represented by a set of $`k`$ expected value constraints:

\begin{equation}

\label{eq:e:s}

s_{j} = \E[\phi_{j}(I)], \ \ j=1,k

\end{equation}where $`s_{j}`$ is a known value and $`\phi_{j}`$ is a function on $`I`$ that returns a numerical value in $`\mathbb{R}`$. One example constraint is that the number of flights from CA to WI is 0.

Following prior work on the MaxEnt principle and solving constrained optimization problems , the MaxEnt probability distribution takes the form

\begin{equation}

\label{eq:pr:i}

\Pr(I) = \frac{1}{Z}\exp\left(\sum_{j = 1}^{k}\theta_{j}\phi_{j}(I)\right)

\end{equation}where $`\theta_{j}`$ is a parameter and $`Z`$ is the following normalization constant:

\begin{equation*}

Z \eqdef \sum_{I \in PWD}\left(\exp\left(\sum_{j = 1}^{k}\theta_{j}\phi_{j}(I)\right)\right).

\end{equation*}To compute the $`k`$ parameters $`\theta_j`$, we must solve the non-linear system of $`k`$ equations, [eq:e:s], which is computationally difficult. However, it turns out that [eq:e:s] is equivalent to $`\partial \Psi / \partial \theta_j = 0`$ where the dual $`\Psi`$ is defined as:

\begin{equation*}

\Psi \eqdef \sum_{j = 1}^{k} s_{j}\theta_{j} - \ln\left(Z\right).

\end{equation*}Furthermore, $`\Psi`$ is concave, which means solving for the $`k`$ parameters can be achieved by maximizing $`\Psi`$. We note that $`Z`$ is called the partition function, and its log, $`\ln(Z)`$, is called the cumulant.

Lastly, we adopt a slightly different notation where instead of $`e^{\theta}`$ we use $`\alpha`$. [eq:pr:i] now becomes

\begin{equation}

\label{eq:pr:i:new}

\Pr(I) = \frac{1}{Z}\prod_{j = 1}^k \alpha_{j}^{\phi_{j}(I)}.

\end{equation}Future Work

The above evaluation shows that is competitive with stratified sampling overall and better at distinguishing between infrequent and absent values. Importantly, unlike stratified sampling, ’s summaries permit multiple 2D statistics. Further, as is based on modeling the data, it does not actually need access to the original, underlying data. If a data scientist only has access to, for example, various 2-dimensional histogram queries of the entire dataset, would still be able to build a model of the data and answer queries. Sampling would only be able to handle queries that are directly over one of the histograms. The main limitations of are the dependence on the size of the active domain, correlation-based 2D statistic selection, manual bucketization, and limited query support.

To address the first problem, our future work is to investigate using standard algebraic factorization techniques on non-materializable polynomials. By further reducing the polynomial size, we will be able to handle larger domain sizes. We also will explore using statistical model techniques to more effectively decompose the attributes into 2D pairs, similar to . To no longer require bucketizing categorical variables (like city), we will research hierarchical polynomials. These polynomials will start with coarse buckets (like states), and build separate polynomials for buckets that require more detail. This may require the user to wait while a new polynomial is being loaded but would allow for different levels of query accuracy without sacrificing polynomial size.

Addressing our queries not reporting error is non-trivial and requires combining the errors in the statistics with the errors in the model parameters with the errors in making the uniformity assumption for the attributes not covered by a statistic. Our future work will be to understand how the error depends on the each of these facets and developing an error equation that propagates these errors through polynomial evaluation.

Handling Joins and Data Updates

When building our MaxEnt summary, we assume there was only a single relation being summarized and the underlying data is not updated. We now discuss two extensions of our summarization technique to address both of these assumptions.

Joins

In 3, we introduce the MaxEnt model over a single, universal (pre-joined) relation $`R`$ and an instance $`\mathbf{I}`$ of $`R`$. Now, suppose the data we want to summarize consists of $`r`$ relations, $`R_1,\ldots,R_r`$, each with an associated instance $`\mathbf{I}_1,\ldots,\mathbf{I}_r`$. To describe our approach, we assume each $`R_i`$ joins with $`R_{i+1}`$ by an equi-join on attribute $`A_{j_{i,i+1}}`$; $`R = R_1 \bowtie_{R_1.A_{j_{1,2}} = R_2.A_{j_{1,2}}} R_2 \bowtie \ldots \bowtie R_r`$. Our technique can easily be extended to work for multiple equi-join attributes, but for simplicity, we describe the approach for a single join attribute. Let $`R(A_1, \ldots, A_m)`$ be the global schema of $`R_1 \bowtie R_2 \bowtie \ldots \bowtie R_r`$ with active domains as described in 3.

The simplest approach to handle joins is to join all relations and build a summary over the universal relation. Once the summary is built, the universal relation can be removed. While this summary can now handle queries over $`R`$, it requires $`R`$ to be computed once, which can be an expensive procedure.

Our approach is to build a separate MaxEnt data summary for each instance: $`\{(P_1, \{\alpha_j\}_1, \Phi_1), \ldots, (P_r, \{\alpha_j\}_r, \Phi_r)\}`$. A linear query $`\mathbf{q}`$ with associated predicate $`\pi_{\mathbf{q}}`$ over $`R`$ is answered by iteration over the distinct values in the join attributes;

\begin{align*}

\E[\inner{\mathbf{q}}{\mathbf{I}}] &= \sum_{d_1 \in D_{j_{1,2}}}\ldots\sum_{d_{r-1} \in D_{j_{r-1,r}}} \E[\inner{\mathbf{q}'}{\mathbf{I}_1}]\ldots\E[\inner{\mathbf{q}'}{\mathbf{I}_r}]\\

\textrm{s.t. } \pi_{\mathbf{q}'} &= \pi_{\mathbf{q}} \land (R_1.A_{j_{1,2}} = d_1) \land (R_2.A_{j_{1,2}} = d_1) \land \\

&\ldots \land (R_r.A_{j_{r-1,r}} = d_{r-1}).

\end{align*}where $`\mathbf{q}'`$ is the linear query associated with $`\pi_{\mathbf{q}'}`$ and $`R_i.A_j`$ denotes attribute $`A_j`$ in relation $`R_i`$. We abuse notation slightly in that $`\E[\inner{\mathbf{q}'}{\mathbf{I}_i}]`$ is the answer to $`\mathbf{q}'`$ projected on to the attributes of $`R_i`$ (setting $`\rho \equiv true`$ for attributes not in $`R_i`$). Note that if $`\mathbf{q}`$ is only over a subset of relations, then the summation only needs to be over the distinct join values of the relations in the query.

While this method will return an approximate answer, it does rely on iteration over the active domain of the join attributes, which, as we shown in 9, can be expensive for larger domain sizes. However, for each relation $`R_i`$, if we modify the statistic constraints associated with the 1D statistics of the join attribute $`A_{j_{i, i+1}}`$, we can improve runtime by decreasing the number of iterations in the summation.

At a high level, before learning multi-dimensional statistics, for each $`\ell \in J_{j_{i, i+1}}`$ (each 1D statistic index for attribute $`A_{j_{i, i+1}}`$), we replace $`(\mathbf{c}_{\ell}, s_{\ell})`$ by $`(\mathbf{c}_{\ell}, \bar{s})`$ where $`\bar{s}`$ is the average $`s_{\ell}`$ value of a group of statistics in $`J_{j_{i, i+1}}`$. This is similar to building a COMPOSITE statistic over $`A_{j_{i, i+1}}`$ except instead of replacing each individual statistic by the composite, we are modifying the constraint for each statistic. We do do not replace the 1D statistics because we still want to be able to query at the level of an individual tuple. As our querying technique is equivalent to derivation, if we remove the fine-grained 1D statistics, there is nothing to derivate by if a query is issued over a 1D statistic.

Specifically, with $`B'_s \leq B_s`$ as the budget for the 1D statistic, suppose we learn that $`\setof{g_k^{i,i+1} = [l^{i}_k,u^{i}_k]}{k = 1, B'_s}`$ is the optimal set of boundaries for join attribute $`A_{j_{i, i+1}}`$ from relation $`R_i`$ to $`R_{i+1}`$. These can be learned with the K-D tree method in 6 by sorting and then repeatedly splitting on the single axis until the budget $`B'_s`$ is reached. We then apply the same bounds of $`\setof{[l^{i}_k,u^{i}_k]}{k = 1, B'_s}`$ on any multi-dimensional statistic covering $`A_{j_{i, i+1}}`$ in $`R_{i}`$ and $`R_{i+1}`$ and the 1D statistic covering $`A_{j_{i, i+1}}`$ in $`R_{i+1}`$ (see [ex:join]). As this boundary is learned before multi-dimensional statistics are built, when we build a 2-dimensional statistic covering $`A_{j_{i, i+1}}`$, we seed the K-D tree with the $`A_{j_{i, i+1}}`$ axis splits of $`\setof{g_k^{i,i+1}}{k = 1, B'_s}`$ and repeatedly split on the other axis until reaching our budget $`B_s`$.

Using this transfer boundary technique and rewriting the summation, we can answer queries over joins by iterating over a single point in each range boundary rather than all individual values. The following example gives intuition as to how this boundary transfer works.

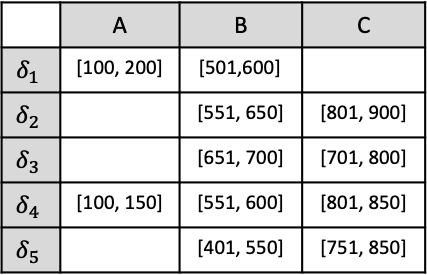

Suppose we have two relations $`R(A, B)`$ and $`S(B, C)`$ with instances $`\mathbf{I}_R`$ and $`\mathbf{I}_S`$ where each attribute has an active domain size of 3. We also have a query $`\mathbf{q}`$ with predicate $`\pi_{\mathbf{q}} = (A = a_1 \land C = c_1)`$ over $`R \bowtie S`$. Let each relation build a data summary $`(P, \{\alpha_j\}, \Phi)`$ using a 1D composite statistic over $`B`$ and a 2D statistic over their two attributes with $`B_s = 4`$ and $`B'_s = 2`$. Lastly, for the composite statistic, suppose we learn that the optimal boundaries for $`B`$ are $`[b_1,b_2]`$ and $`[b_3]`$, meaning the statistics over $`B`$ will be

\begin{align*}

&(B = b_1,\ (s_{b_1} + s_{b_2})/2) \\

&(B = b_2,\ (s_{b_1} + s_{b_2})/2) \\

&(B = b_3,\ s_{b_3})

\end{align*}where $`s_{b_i}`$ is the number of tuples where $`B = b_i`$. Note that the constraint for $`b_1`$ and $`b_2`$ is the same which implies $`n\beta_1\partial P/P\partial \beta_1 = n\beta_2\partial P/P\partial \beta_2`$ by [eq:e:s].

By our naïve strategy, the answer to $`\mathbf{q}`$ is

\begin{align*}

\E[\inner{\mathbf{q}}{\mathbf{I_R \bowtie \mathbf{I}_S}}] &= \sum_{b \in [b_1, b_3]} \E[\inner{\mathbf{q}'}{\mathbf{I}_R}]\E[\inner{\mathbf{q}'}{\mathbf{I}_S}]\\

\textrm{s.t. } \pi_{\mathbf{q}'} &= (A = a_1 \land C = c_1 \land B = b).

\end{align*}This can be rewritten in terms of polynomial derivation as

\begin{align*}

&= \frac{n_R \alpha_1 \beta_1}{P_R} \frac{\partial P_R}{\partial \alpha_1 \partial \beta_1} \frac{n_S \beta_1 \gamma_1}{P_S} \frac{\partial P_S}{\partial \beta_1 \partial \gamma_1}\\

&+ \frac{n_R \alpha_1 \beta_2}{P_R} \frac{\partial P_R}{\partial \alpha_1 \partial \beta_2} \frac{n_S \beta_2 \gamma_1}{P_S} \frac{\partial P_S}{\partial \beta_2 \partial \gamma_1}\\

&+ \frac{n_R \alpha_1 \beta_3}{P_R} \frac{\partial P_R}{\partial \alpha_1 \partial \beta_3} \frac{n_S \beta_3 \gamma_1}{P_S} \frac{\partial P_S}{\partial \beta_3 \partial \gamma_1}.

\end{align*}Consider two cases: when the other 2-dimensional statistics have the same boundaries on $`B`$ and when they do not. For the first case, let the 2D statistics over $`R`$ and $`S`$ be as shown by the rectangles all in black with the red line for $`S`$.

Using these statistics, $`\E[\inner{\mathbf{q}}{\mathbf{I_R \bowtie \mathbf{I}_S}}]`$ now becomes

\begin{align*}

&= \frac{n_R \alpha_1 \beta_1}{P_R}\delta_1 \frac{n_S \beta_1 \gamma_1}{P_S} \delta_5 + \frac{n_R \alpha_1 \beta_2}{P_R} \delta_1 \frac{n_S \beta_2 \gamma_1}{P_S} \delta_5\\

&+ \frac{n_R \alpha_1 \beta_3}{P_R} \delta_2 \frac{n_S \beta_3 \gamma_1}{P_S} \delta_7 \\

&= 2\frac{n_R \alpha_1 \beta_1}{P_R}\delta_1 \frac{n_S \beta_1 \gamma_1}{P_S} \delta_5 + \frac{n_R \alpha_1 \beta_3}{P_R} \delta_2 \frac{n_S \beta_3 \gamma_1}{P_S} \delta_7

\end{align*}where the second line follows because $`n\beta_1\partial P/P\partial \beta_1 = n\beta_2\partial P/P\partial \beta_2`$. Notice how instead of summing over all distinct values in $`B`$, we are summing over $`B'_s`$ values.

In we had statistics over $`S`$ that were the rectangles in black with the green line (the boundaries on $`B`$ do not match those of the composite 1D statistic), $`\E[\inner{\mathbf{q}}{\mathbf{I_R \bowtie \mathbf{I}_S}}]`$ would be

\begin{align*}

&= \frac{n_R \alpha_1 \beta_1}{P_R}\delta_1 \frac{n_S \beta_1 \gamma_1}{P_S} \delta_5 + \frac{n_R \alpha_1 \beta_2}{P_R} \delta_1 \frac{n_S \beta_2 \gamma_1}{P_S} \delta_6\\

&+ \frac{n_R \alpha_1 \beta_3}{P_R} \delta_2 \frac{n_S \beta_3 \gamma_1}{P_S} \delta_7

\end{align*}which does not simplify.

Formally, suppose we only use the transfer boundary technique for $`A_{j_{r-1,r}}`$, the last join attribute; we make the 1D composite statistic boundaries of $`A_{j_{r-1,r}}`$ the same in $`R_{r-1}`$ and $`R_r`$. Using $`|g_k^{i,i+1}| = u^{i}_k - l^{i}_k + 1`$ (the size of the range), we can rewrite $`\E[\inner{\mathbf{q}}{\mathbf{I}}]`$ as

\begin{align*}

&\E[\inner{\mathbf{q}}{\mathbf{I}}] = \sum_{d_1 \in D_{j_{1,2}}}\ldots\sum_{d_{r-1} \in D_{j_{r-1,r}}}\prod_{i=1}^{r}\E[\inner{\mathbf{q}'}{\mathbf{I}_i}] \\

&= \sum_{d_1 \in D_{j_{1,2}}}\ldots\sum_{g_k^{r-1,r}}\sum_{d_{r-1} \in g_k^{r-1,r}}\prod_{i=1}^{r}\E[\inner{\mathbf{q}'}{\mathbf{I}_i}] \\

&= \sum_{d_1 \in D_{j_{1,2}}}\ldots\sum_{g_k^{r-1,r}}\E[\inner{\mathbf{q}'_{r}}{\mathbf{I}_{r}}]\sum_{d_{r-1} \in g_k^{r-1,r}}\prod_{i=1}^{r-1}\E[\inner{\mathbf{q}'}{\mathbf{I}_i}] \\

&= \sum_{d_1 \in D_{j_{1,2}}}\ldots\sum_{g_k^{r-1,r}}\Big[|g_k^{r-1,r}|*\E[\inner{\mathbf{q}'_{r-1}}{\mathbf{I}_{r-1}}]\\

&\hspace{80pt} *\E[\inner{\mathbf{q}'_{r}}{\mathbf{I}_{r}}]*\prod_{i=1}^{r-2}\E[\inner{\mathbf{q}'}{\mathbf{I}_i}]\Big]

\end{align*}\begin{align*}

\textrm{s.t. } &\pi_{\mathbf{q}'_{r-1}} = \pi \land (R_1.A_{j_{1,2}} = d_1) \land \ldots \land (R_{r}.A_{j_{r-1,r}} = true) \\

&\hspace{24pt}\land (R_{r-1}.A_{j_{r-1,r}} = D_{j_{r-1, r}}[l^{i}_k]) \\

&\pi_{\mathbf{q}'_{r}} = \pi \land (R_1.A_{j_{1,2}} = d_1) \land \ldots \land (R_{r-1}.A_{j_{r-1,r}} = true) \\

&\hspace{24pt}\land (R_r.A_{j_{r-1,r}} = D_{j_{r-1, r}}[l^{i}_k]).

\end{align*}The step from line one to line two is replacing the sum over $`d_{r-1} \in D_{j_{r-1,r}}`$ to a sum over boundaries $`\set{g_k^{r-1,r}}`$ and a sum over distinct values in the boundary. In line three, we pull out the query over $`\mathbf{I}_{r}`$ because the answer for $`\E[\inner{\mathbf{q}'}{\mathbf{I}_{r}}]`$ is the same for each $`d_{r-1} \in g_k^{r-1,r}`$ as they use the same composite statistic. Therefore, we pull out the query and modify the query’s predicate to be over the lower boundary value (any value in the boundary would produce equivalent results).

In line four, we perform the same trick and pull out the query over $`\mathbf{I}_{r-1}`$ because $`\E[\inner{\mathbf{q}'}{\mathbf{I}_{r-1}}]`$ will also be the same for each $`d_{r-1} \in g_k^{r-1,r}`$. Lastly, because $`\prod_{i=1}^{r-2}\E[\inner{\mathbf{q}'}{\mathbf{I}_i}]`$ is independent of $`R_{r-1}`$ as it does not contain $`\mathbf{I}_{r-1}`$, we can also pull it out of the sum. At the end, we get a summation over the value one that repeats $`|g_k^{r-1,r}|`$ times. This sum rewriting trick can be applied to all attributes with shared boundaries on all statistics covering the join attributes.

By performing this boundary transfer trick, we have replaced the sum for distinct values of $`A_{j_{r-1,r}}`$ with the sum over lower boundary points of $`\set{g_k^{i,i+1}}`$. We can repeat this boundary transfer for any of the dense distinct join values to make the final join algorithm efficient. Note that this technique does lose accuracy as we are no longer building building fine-grained 1D statistics over the join attributes and are using potentially suboptimal boundaries for other multi-dimensional statistics.

Updates

Another key assumption made in 3 is that the data being summarized is read only and not updated. If we relax that assumption and let the underlying data change, our model needs to be updated, too. We make the assumption that data updates are represented as single tuple additions or deletions. For example, a value change can be represented as a tuple deletion followed by a tuple addition. Alg. [alg:update_model] describes our update technique.

for (*@$\Delta t$@*) do

*@$\Phi$@* = *@\textcolor{Maroon}{updateStats}@*(*@$\Phi$@*, *@$\Delta t$@*)

if (not *@$\{\alpha_j\}$@* being updated) do

if *@\textcolor{Maroon}{timeToRebuild}@* do

*@$(P, \{\alpha_j\}, \Phi)$@* = *@\textcolor{Maroon}{rebuildModel}@*(*@$R$@*)

else

*@$\{\alpha_j\}$@* = *@\textcolor{Maroon}{updateParams}@*(*@$\Phi$@*, *@$\{\alpha_j\}$@*)The intuition behind our algorithm is that as updates come in, it is satisfactory to initially only update the polynomial parameters $`\{\alpha_j\}`$ while keeping the statistic predicates the same. However, as the data continues to be updated, the underlying correlations and relationships of the attributes may change, meaning the statistic predicates are no longer optimal. When this occurs, the entire summary needs to be rebuilt. Ideally, the rebuilding would happen overnight or when the summary is not in high demand.

Out algorithm works as follows. For each tuple update, updateStats modifies $`s_j`$ for each predicate $`\pi_j`$ that $`t`$ satisfies. $`s_j`$ increases or decreases by one depending of it $`t`$ is being added or removed. It is important to realize that updateStats does not update the predicates defining the statistics, just the predicate values.

After the statistics are updated, we check if either updateParams or rebuildModel is currently running or in progress. If it is, we move on to the next update, effectively batching our changes. If no update is in progress, we update or rebuild our model. updateParams simply updates the polynomial parameters $`\{\alpha_j\}`$ by running Alg. [alg:solve] initialized by the last solved for parameters. By initializing our model at the last know solution, we decrease convergence time because many of the parameters are already solved for and do not need to change. In contrast to simply updating our parameters, rebuildModel starts from scratch and regenerates the statistics, polynomial, and parameters.

The final method to discuss, timeToRebuild, decides whether to update or rebuild the model. There are numerous different ways to defining timeToRebuild, and we give three such possibilities below.

When the number of tuple updates reaches some predefined threshold $`B`$.

When the system does not have many users, meaning there is more compute power to rebuild the summary.

When attribute correlations are not accurately represented in $`\Phi`$. when some attribute pair in $`\Phi`$ is uniformly distributed or when some attribute pair in $`R`$ is correlated but not included in $`\Phi`$.

Connection to Probabilistic Databases

At a high level, learned a probability distribution of the data so that each possible instance has some associated probability of existing. Since this possible world semantics is the same semantics as used by probabilistic databases, how does relate to probabilistic databases ?

Recall that probabilistic databases store uncertain data, and, like , represent the probability of a tuple as $`\Pr(t) = \sum_{I \in PWD | t \in I}\Pr(I)`$. The uncertainty in the data arises from the application such as data extraction, data integration, or data cleaning. Probabilistic databases are commonly stored as tuple independent (TI) databases where each tuple has an associated marginal probability and is an independent probabilistic event.

, on the other hand, does not store the marginal probabilities. Recall that for , if we let $`\mathbf{q} = \mathbf{t}_{\ell}`$ for some tuple $`t_{\ell}`$, then, from [eq:eq], we can calculate the expected number of times tuple $`t_{\ell}`$ appears in an instance. If we divide by $`n`$, we calculate the expected percent of times tuple $`t_{\ell}`$ appears in an instance. This, in general, is not the same as the marginal probability. It turns out that when $`n = 1`$, the two are equivalent.

For some tuple $`t_{\ell}`$ and associated linear query $`\mathbf{t}_{\ell}`$ (query with 1 in the place of tuple $`t_{\ell}`$ and 0 elsewhere), when $`n = 1`$, $`\E[\inner{\mathbf{t}_{\ell}}{\mathbf{I}}]`$ is the marginal probability of a tuple; , $`\Pr(t_{\ell} \in I)`$.

Proof. We will use the same trick as in 3.2 by extending the polynomial with a new variable $`\beta = 1`$ representing the query $`\mathbf{t}_{\ell}`$. Denote this extended polynomial as $`P_{\mathbf{t}_{\ell}}`$. Following [eq:pq], we get

\begin{align}

(P_{\mathbf{t}_{\ell}})^n &= \left(\sum_{i=1,d} \prod_{j=1,k} \alpha_j^{\inner{\mathbf{c}_j}{\mathbf{t}_i}} \beta^{\inner{\mathbf{t}_{\ell}}{\mathbf{t}_i}}\right)^n \nonumber \\

&= \left(\prod_{j=1,k} \alpha_j^{\inner{\mathbf{c}_j}{\mathbf{t}_{\ell}}}\beta + \sum_{\substack{i=1,d \\ i \neq \ell}} \prod_{j=1,k} \alpha_j^{\inner{\mathbf{c}_j}{\mathbf{t}_i}}\beta^{\inner{\mathbf{t}_{\ell}}{\mathbf{t}_i}}\right)^n \nonumber \\

&= \left(\left(\beta \frac{\partial P_{\mathbf{t}_{\ell}}}{\partial \beta}\right) + \left(P_{\mathbf{t}_{\ell}} - \beta \frac{\partial P_{\mathbf{t}_{\ell}}}{\partial \beta} \right) \right)^n.

\end{align}Note that $`\beta^{\inner{\mathbf{t}_{\ell}}{\mathbf{t}_i}} = 0`$ when $`\ell \neq i`$. We use this rewriting in finding the marginal probability.

\begin{align*}

\Pr(t_{\ell} \in I) &= 1 - \Pr(t_{\ell} \notin I)\\

&= 1 - \frac{1}{(P_{\mathbf{t}_{\ell}})^n} \sum_{I : t_{\ell} \notin I}\prod_{j=1,k} \alpha_j^{\inner{\mathbf{c}_j}{\mathbf{I}}}\beta^{\inner{\mathbf{t}_{\ell}}{\mathbf{I}}}\\

&= 1 - \frac{1}{(P_{\mathbf{t}_{\ell}})^n} \left(\sum_{\substack{i=1,d \\ i \neq \ell}} \prod_{j=1,k} \alpha_j^{\inner{\mathbf{c}_j}{\mathbf{t}_i}}\beta^{\inner{\mathbf{t}_{\ell}}{\mathbf{t}_i}}\right)^n \\

&= 1 - \frac{1}{(P_{\mathbf{t}_{\ell}})^n} \left(P_{\mathbf{t}_{\ell}} - \beta \frac{\partial P_{\mathbf{t}_{\ell}}}{\partial \beta} \right)^n\\

&= 1 - \left(1 - \frac{\beta}{P_{\mathbf{t}_{\ell}}}\frac{\partial P_{\mathbf{t}_{\ell}}}{\partial \beta} \right)^n\\

\end{align*}The third line follows a similar proof as [eq:p]. From [eq:eq] and since $`\beta = 1`$ and $`n = 1`$, we get

\begin{align*}

\Pr(t_{\ell} \in I) &= \frac{1}{P_{\mathbf{t}_{\ell}}}\frac{\partial P_{\mathbf{t}_{\ell}}}{\partial \beta} \\

&= \E[\inner{\mathbf{t}_{\ell}}{\mathbf{I}}]

\end{align*}◻

It is important to note that although we can calculate the marginal probability, the probabilities are not independent; , we do not have a TI probabilistic database.

Connection to Graphical Models

The Principle of Maximum Entropy is well studied, and it is known that

the maximum entropy solution with marginal constraints (, COUNT(*)

constraints) is equivalent to the maximum likelihood solution for

exponential family models . This can be seen by

transforming [eq:pr:i] into exponential form

\begin{align}

\label{eq:pr:i:exponential}

\Pr(I) = &\exp\left(\left(\sum_{j = 1}^{k}\theta_{j}\phi_{j}(I)\right)\right. \\

& - \left.\log\sum_{I \in PWD}\left(\exp\left(\sum_{j = 1}^{k}\theta_{j}\phi_{j}(I)\right)\right)\right).

\end{align}Further, this exponential form is equivalent to the probability distribution defined over an exponential family Markov Network or, more generally, factor graph . Markov networks are factor graphs where the factors representing the parameterization of the probability distribution are defined solely on cliques in the graph.

This connection implies that we can use MLE techniques to solve for the parameters $`\theta_j`$, and, in fact, the modified gradient descent technique we use in 3.3 is the same as the iterative proportional fitting (also called iterative scaling algorithm) used to solve the parameters in exponential family graphical models.

It is important to note that because we use the slotted possible world semantics, we are able to factorize our partition function $`Z`$ to a multi-linear polynomial raised to the power $`n`$ (see [eq:z:p]). This simplification allows for drastic performance benefits in terms of solving and query answering 4. In 4, we discussed two main optimizations: polynomial compression and query answering by setting certain 1D variables to zero. We now discuss how to implement these optimizations efficiently and analyze the runtime.

Building the Polynomial

Recall from 1 that building our compressed polynomial starts with the product of the sum of all 1D statistics and then uses the inclusion/exclusion principle to modify the terms to include the correct multi-dimensional statistics. The terms that need to be modified are those that satisfy the predicates associated with the sets of non-conflicting multi-dimensional statistics. , for some $`\mathcal{I}^+`$, the terms to be modified are the 1D terms $`\alpha_j`$ such that $`\pi_j \wedge \pi_{S} \not\equiv \texttt{false}`$ for $`S \in J_{\mathcal{I}^+}`$. The algorithmic challenge is how we find non-conflicting statistics $`J_{\mathcal{I}^+}`$ for some $`\mathcal{I}^+`$ and, once we know $`J_{\mathcal{I}^+}`$, how we find the 1D statistics that need to be modified.

To solve the latter problem, assume we have some $`J_{\mathcal{I}^+}`$. Since each multi-dimensional statistic is a range predicate over the elements in our domain and we have complete 1D statistics over the elements in our domain, once we know the range predicate, $`\pi_S`$ for $`S \in J_{\mathcal{I}^+}`$, we can easily find the associated 1D statistics.

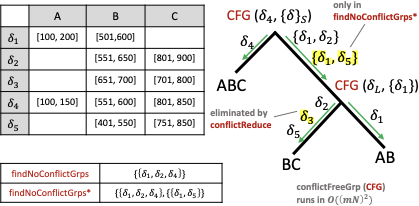

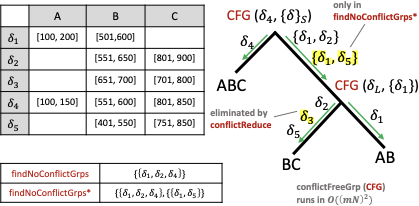

Take the example in 13 which we will refer to throughout this section. If we know that $`J_{\{\{1,2\},\{2,3\},\{1,2,3\}\}} = \{\{j_1, j_2, j_4\}\}`$, then, by examining the range predicates associated with those three multi-dimensional statistics, we can determine that $`\alpha_i`$ for $`i \in [101,150]`$, $`\beta_j`$ for $`j \in [551, 600]`$, and $`\gamma_k`$ for $`k \in [801, 850]`$ are the 1D statistics that need to be modified to include $`\delta_1`$, $`\delta_2`$, and $`\delta_4`$.

The other problem is how we find the groups of multi-dimensional

statistics that do not conflict for some group of attribute sets (the

$`J_{\mathcal{I}^+} \neq \emptyset`$). To solve this, we assume we are

given four inputs: a list of attributes, a list of $`B_a`$

multi-dimensional attribute sets

(for 13), a dictionary of the 1D statistics

with attributes $`A_i`$ as keys (denoted

1DStats), and a dictionary of

multi-dimensional statistics with indices into the list of $`B_a`$

attributes sets as keys (denoted

multiDStats).

A straightforward algorithm to build the polynomial is shown in

Alg. [alg:P_building_unop] where the

red notations indicate the time complexity of each section of pseudo

code. The function combinations(k,

$`B_a`$) generates a list of all possible length $`k`$ index sets from

$`[1, B_a]`$. Note that we abuse the notation for dictionary selection

slightly in that if idx is $`\{1, 2\}`$,

multiDStats[idx] would select

both the multi-dimensional stats of 1 and 2, $`AB`$ and $`BC`$, and

1DStats[not idx] would select

no 1D stats since all attributes are used in $`AB`$ and $`BC`$. The

function buildTerm(group) builds

the term shown in the last line

of 1. It generates a sum of the 1D

statistics associated with the group and multiplies the sum by one minus

the multi-dimensional variables in the group.

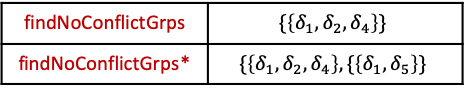

The last function to discuss is

findNoConflictGrps which returns a

dictionary with keys as sets of multi-dimensional attribute indices and

values of groups of conflict free statistics. For example, for

$`k = 2`$, a key would be $`\{1, 2\}`$ with value

$`\{\delta_1, \delta_2\}`$ indicating that $`\delta_1`$ and $`\delta_2`$

do not conflict. The algorithm works by treating each multi-dimensional

index set in idx as a relation with rows of the statistics associated

with that index set. It then computes a theta-join over these relations

with the join condition being if the statistics are conflict free.

For example, $`\delta_1`$ and $`\delta_2`$ are conflict free but not $`\delta_1`$ and $`\delta_3`$. Further, statistics over disjoint attributes sets are also conflict free. If we had a relation $`R(A,B,C,D)`$ and some statistic over $`AB`$ and another over $`CD`$, all of those multi-dimensional statistics from $`AB`$ would be satisfiable with all other from $`CD`$.

// add part *@\textcolor{Gray}{\textbf{i}}@* to P

P = *@\textcolor{Maroon}{1DProdSum}@*(*@\tt\textcolor{OliveGreen}{1DStats}@*)*@\tikzmark{line1}@*

for (k in [1:*@$B_a$@*]) do

for (idx in *@\textcolor{Maroon}{combinations}@*(k, *@$B_a$@*)) do

// add part *@\textcolor{Gray}{\textbf{ii}}@* to P

P.addTerms(*@\textcolor{Maroon}{1DProdSum}@*(*@\tt\textcolor{OliveGreen}{1DStats}@*[not idx]))

satGrps = *@\textcolor{Maroon}{findNoConflictGrps}@*(*@\tt\textcolor{OliveGreen}{multiDStats}@*[idx])*@\tikzmark{line3}@*

// add part *@\textcolor{Gray}{\textbf{iii}}@* to P *@\tikzmark{line4}@*

for (group in satGrps) do *@\tikzmark{top3}@*

P.addTerms(*@\textcolor{Maroon}{buildTerm}@*(group)) *@\tikzmark{bottom3}@*To understand the runtime complexity of the algorithm, start with the

function buildTerm(group). For ease

of notation, we will denote $`N = \max_i N_i`$. Recall that $`mN`$ is

the total number of distinct values across all attributes, $`B_a`$ is

the number of attribute sets, and $`\hat{B}_s`$ is the largest number of

statistics per attribute set. The runtime of

buildTerm(group) for a single

satGrps of size $`k`$ is $`O(mN + \hat{B}_s^k)`$ because a single

satGrps will only add each 1D statistic at most once and at most

$`\hat{B}_s^k`$ correction terms. This runtime also includes the time

for 1DProdSum because the 1D

statistics that are not in satGrps will be added in

1DProdSum.

The runtime of findNoConflictGrps involves computing the cross product of the multi-dimensional statistics and comparing the 1D statistics associated with each multi-dimensional statistic to determine if there is a conflict. Specifically, it computes a right deep join tree of the multi-dimensional statistics, and at each step in the tree, iterates over the 1D statistics in each right child conflict free group to see if there is a conflict or not with one of the incoming left child multi-dimensional statistics.

1 shows the findNoConflictGrps join algorithm for the attribute sets $`AB`$, $`BC`$, and $`ABC`$ with one added statistic $`\delta_5`$ on $`BC`$ of $`B \in [401,550] \land C \in [751,850]`$. The function to find and return a single conflict free group is CFG($`\delta_L`$, $`\{\delta\}_S`$) (stands for conflict free group) where $`\delta_L`$ stands for the left multi-dimensional statistic and $`\{\delta\}_S`$ stands for the right, current conflict free group. The $`S`$ subscript is because we are building a new set of multi-dimensional statistics to add to some $`J_{\mathcal{I}^+}`$. We are abusing notation slightly because in 4, $`S`$ stood for the set of indices whereas here, it sands for the set of statistic variables.

CFG first determines which attributes are shared by $`\delta_L`$ and $`\{\delta\}_S`$. Then, for each such attribute, it iterates over $`\delta_L`$’s associated 1D statistics (at most $`mN`$ of them) and checks if at least one of these statistics also exists in the 1D statistics associated with $`\{\delta\}_S`$ (containment has runtime $`mN`$). This ensures, for all attributes $`A_i`$, that $`\rho_{iL} \land \rho_{iS} \not\equiv \texttt{false}`$. If $`A_i`$ is shared, then $`\rho_{iL} \land \rho_{iS}`$ will contain the shared 1D statistic found earlier, and if $`A_i`$ is not shared, then $`\rho_{iL} \land \rho_{iS} \equiv \texttt{true}`$. Note that a conflict free group is not always found. Therefore, the runtime of the join is $`O(2(mN)^2\hat{B}_s^3)`$. For joins of arbitrary size, the runtime is $`O((mN)^2(k-1)\hat{B}_s^k)`$.

Putting it all together we get the runtime of

\begin{multline*}

=mN + \sum_{k=1}^{B_a}\binom{B_a}{k}[mN + (k-1)(mN)^{2}\hat{B}_s^k + \hat{B}_s^k]

\end{multline*}\begin{multline*}

=mN + (2^{B_a}-1)mN + (\hat{B}_s+1)^{B_a} - 1 +\\

(mN)^2\left[\left[\sum_{k=0}^{B_a}\binom{B_a}{k}[k\hat{B}_s^k - \hat{B}_s^k]\right] + 1\right]

\end{multline*}\begin{multline*}

=mN + (2^{B_a}-1)mN + (\hat{B}_s+1)^{B_a} - 1 + (mN)^2 +\\

(mN)^2\left[\sum_{k=0}^{B_a}\binom{B_a}{k}k\hat{B}_s^k\right] - (mN)^2(\hat{B}_s + 1)^{B_a}

\end{multline*}\begin{multline*}

=mN + (2^{B_a}-1)mN + (\hat{B}_s+1)^{B_a} - 1 + (mN)^2 +\\

(mN)^2B_a\hat{B}_s(\hat{B}_s + 1)^{B_a - 1} - (mN)^2(\hat{B}_s + 1)^{B_a}

\end{multline*} style="width:47.0%" />

style="width:47.0%" />

This algorithm, however, is suboptimal because it must run findNoConflictGrps for all $`2^{B_a}`$ attribute sets. It is better to run findNoConflictGrps once for the all multi-dimensional statistics (compute the full theta-join of all $`B_a`$ attribute sets) and reconstruct the smaller groups without paying the cost of checking for conflicts (perform selections over the full theta-join). Further, there are some statistics that will not appear in any other term besides when $`k = 1`$ in the loop above. Take $`\delta_3`$, for example. It is handled in line 2 of 13 but does not appear later on. These insights lead to a more optimized algorithm in Alg. [alg:P_building].

The function conflictReduce is like a

semi-join reduction. It removes multi-dimensional statistics that do not

appear in any conflict free group later on. For example, redMDStats

would only contain $`\delta_1`$, $`\delta_2`$, and $`\delta_4`$. The

function findNoConflictGrps* acts

just as findNoConflictGrps except

instead of computing an inner theta join, it computes a full outer theta

join. The reason being that satGrps needs to keep track of all

conflict free groups even if they contain less that $`B_a`$ statistics.

For example, take the $`\delta_5`$ statistic of $`BC`$ as shown

in 1. $`\delta_1`$ and

$`\delta_5`$ are conflict free, but $`\delta_1`$, $`\delta_5`$, and

$`\delta_4`$ are not conflict free because $`\delta_5`$ conflicts with

$`\delta_4`$ in attribute $`B`$. In this case,

findNoConflictGrps* would return a

dictionary with the keys $`\{1, 2, 3\}`$ and $`\{1, 2\}`$ and values

$`\{\delta_1, \delta_2, \delta_4\}`$ and $`\{\delta_1, \delta_5\}`$,

respectively. The outer join ensures $`\{\delta_1, \delta_5\}`$ is not

lost. Note the time complexity of

findNoConflictGrps and

findNoConflictGrps* is the same

because they must compute the full cross product and then filter.

Lastly, the group[idx] index selection selects the statistics

associated with the attribute sets in idx.

// add part *@\textcolor{Gray}{\textbf{i}}@* to P

P = *@\textcolor{Maroon}{1DProdSum}@*(*@\tt\textcolor{OliveGreen}{1DStats}@*)*@\tikzmark{line1}@*

// add terms when k = 1

for (idx in [1:*@$B_a$@*]) do *@\tikzmark{top1}@*

// add part *@\textcolor{Gray}{\textbf{ii}}@* to P

P.addTerms(*@\textcolor{Maroon}{1DProdSum}@*(*@\tt\textcolor{OliveGreen}{1DStats}@*[not idx]))

// add part *@\textcolor{Gray}{\textbf{iii}}@* to P

for (group in *@\tt\textcolor{OliveGreen}{multiDStats}@*[idx]) do

P.addTerms(*@\textcolor{Maroon}{buildTerm}@*(group)) *@\tikzmark{bottom1}@*

redMDStats = *@\textcolor{Maroon}{conflictReduce}@*(*@\tt\textcolor{OliveGreen}{multiDStats}@*)*@\tikzmark{line2}@*

satGrps = *@\textcolor{Maroon}{findNoConflictGrps*}@*(redMDStats)*@\tikzmark{line3}@*

*@\tikzmark{line4}@*

for (k in [2:*@$B_a$@*]) do *@\tikzmark{top2}@*

for (idx in *@\textcolor{Maroon}{combinations}@*(k, *@$B_a$@*)) do

// add part *@\textcolor{Gray}{\textbf{ii}}@* to P

P.addTerms(*@\textcolor{Maroon}{1DProdSum}@*(*@\tt\textcolor{OliveGreen}{1DStats}@*[not idx]))

// add part *@\textcolor{Gray}{\textbf{iii}}@* to P

for (group in satGrps) do

P.addTerms(*@\textcolor{Maroon}{buildTerm}@*(group[idx]))*@\tikzmark{bottom2}@*We will now show that this algorithm’s time complexity is more optimal

than Alg. [alg:P_building_unop] because

although it loops through satGrps, selecting out a subterm is faster

than rebuilding one, especially after semi-join reduction. Note that if

group[idx] is has already been added to the polynomial from a previous

group, it is just ignored when addTerms is called. As the red notation

indicates, the runtime of the first for loop is

$`O(mNB_a+B_a\hat{B}_s)`$ because for each idx, there are

$`\hat{B}_s`$ multi-dimensional statistics and $`mN`$ 1D statistics to

add the term.

The runtime of conflictReduce involves comparing pairs of multi-dimensional statistics to see if they will participate in any conflict free groups of size two or more. For each $`\binom{B_a}{2}\hat{B}_s^2`$ possible pairs of multi-dimensional statistics, the conflict checking requires examining the 1D statistics of the pair, just like in CFG($`\delta_L`$, $`\{\delta\}_S`$). The next function, findNoConflictGrps*, has the same runtime as before except instead of being run for all $`k`$, it is run only once for $`k=B_a`$.

The last part to analyze is the for loop that iterates over all

satGrps. In our runtime analysis, we add a percentage $`p \in [0, 1]`$

to indicate that only a fraction of the possible $`\hat{B}_s^{B_a}`$

groups are used in the last loop. This decrease is because of

conflictReduce and because, in

practice, there are drastically fewer than $`\hat{B}_s^{B_a}`$ resulting

conflict free groups. In practice, $`p \leq 0.1`$. As the inner most for

loop is that same as in

Alg. [alg:P_building_unop] except

for $`k = B_a`$, the runtime is $`O(\hat{B}_s^{B_a} + mN)`$. As this

happens for all combinations from $`k = 2, B_a`$, the overall runtime is

$`O((2^{B_a} - B_a - 1)(p\hat{B}_s^{B_a} + mN))`$ where the minus is

because $`k`$ starts at two instead of zero.

Adding up the different runtime components, we get the overall runtime of Alg. [alg:P_building] is

\begin{multline*}

=mN + B_a(mN + \hat{B}_s) + \binom{B_a}{2}(mN\hat{B}_s)^2 + \\(B_a-1)(mN)^{2}\hat{B}_s^{B_a} + (2^{B_a}-B_a-1)(mN+p\hat{B}_s^{B_a})

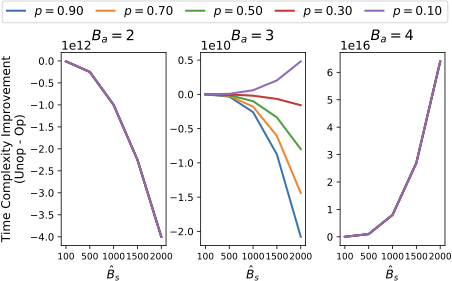

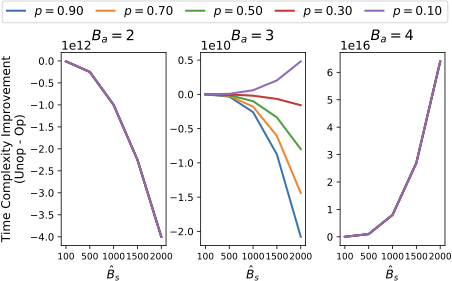

\end{multline*}To show the improvement of the optimized algorithm, 2 shows the runtime difference between Alg. [alg:P_building_unop] and Alg. [alg:P_building] (Alg. [alg:P_building_unop] - Alg. [alg:P_building]) when $`mN = 5000`$ (the trends are similar for other values of $`mN`$). The three columns are for $`B_a = 2, 3, 4`$, the colors represents the different values of $`p`$, and $`\hat{B}_s`$ varies from 100 to 2000. Note that the y-axis of the three columns are on a different scale in order to show the variation between the different values of $`p`$.

style="width:48.0%" />

style="width:48.0%" />

We see that $`p`$ matters for $`B_a = 3`$, and when $`p`$ falls between 0.3 and 0.1, the optimized version is faster. As, in practice, $`p \leq 0.1`$, the optimized version is best for $`B_a > 2`$. The trend shown in $`B_a = 4`$ is the same for $`B_a > 4`$ and thus not included in the plot. This shows that asymptotically, Alg. [alg:P_building] is optimal.

Polynomial Evaluation

Recall from 4.2 and 2 that for a linear query $`\mathbf{q}`$ defined by some predicate $`\pi`$, we can answer the query in expectation ($`\E[\inner{\mathbf{q}}{\mathbf{I}}`$) by setting all 1D variables $`\alpha_j`$ that correspond to values that do not satisfy $`\pi`$ to zero. This is more efficient than taking multiple derivatives, but simply looping over all variables can still be too slow as the compressed polynomial can, at worst, have exponentially many variables (see 1).

To improve performance, we implement four main optimizations: (1) storing the compressed polynomial in memory, (2) parallelizing the computation, (3) fast containment check using bit vectors, (4) caching of subexpression evaluation. The first and second, storing in memory and parallelization, are straightforward, standard techniques that improve looping computations. Note, we can parallelize the computation because each polynomial term can be evaluated independently.

The next optimization, using bit vectors, is to optimize both findNoConflictGrps and determining if a variable needs to be set to zero or not during query evaluation. It is important to understand that the polynomial is hierarchical with nested levels of sums of products of sums. For each subterm (sum or product term in our polynomial), we store (a) a map with variable keys and values of the nested subterms containing that variable, (b) a bit vector of which attributes are contained in the subterm, and (c) a bit vector of which multi-dimensional attribute sets are contained in the subterm.

Take 2 which references 13. Take the subterm referenced by $`\textbf{i}`$. This subterm has a map of all variables pointing to one of three nested sum subterms. The attribute bit vector has a 1 in all places, representing it contains all possible attributes, and the multi-dimensional bit vector is all 0s. Now take the subterm $`(\sum_{551}^{650} \beta_j)(\sum_{801}^{900} \gamma_k)(\delta_2-1)`$. It has a map of only $`\beta`$ variables from $`[551, 650]`$, $`\gamma`$ variables from $`[801, 900]`$, and $`\delta_2`$ all pointing to one of three nested subterms. The attribute bit vector would only have 1 in the $`B`$ and $`C`$ dimensions, and the multi-dimensional bit vector would have a 1 in the dimension representing the attribute set $`BC`$.

These objects allow us to quickly check if some 1-dimensional or multi-dimensional statistic is contained in the term or if there are any variables that need to be set to zero (by using the attribute bit vectors) and which subterms those variables are in. To further see the benefit of this optimization, recall the runtime analysis from 5.1 where findNoConflictGrps required $`(mN)^2`$ steps to check if two statistics were conflict free as it iterates over all 1D statistics. Using maps with variable keys allows us to quickly check if a 1D statistic is contained in another, bringing the runtime down to $`mN`$ for a single pair. The attribute bit vectors can also allow us to skip iterating over subsets of 1D statistics by quickly checking which attributes two statistics share. If some attribute is not shared between two statistics, then that attribute can cause no conflicts and does not need to be iterated over.

This leads to the last technique of caching. Caching is used to avoid recomputing subterms and takes advantage of the attribute bit vectors and variable hash maps described above. Since we solve for all the variables $`\alpha_j`$ of our model once and they remained fixed throughout query answering, if there is a subterm of our model that does not contain any variable the needs to be set to zero, we can reuse that subterm’s value. We store this value along with the map and bit vectors.

By utilizing these techniques, we reduced the time to learn the model (solver runtime) from 3 months to 1 day and saw a decrease in query answering runtime from around 10 sec to 500 ms (95% decrease). More runtime results are in 7.

In this section, we evaluate the performance of EntropyDB in terms of query accuracy and query execution time. We compare our approach to uniform sampling and stratified sampling.

Implementation

We implemented our polynomial solver and query evaluator in Java 1.8, in a prototype system that we call EntropyDB. We created our own polynomial class and variable types to implement our factorization. We parallelized our polynomial evaluator (see 5.2) using Java’s parallel streaming library. We also used Java to store the polynomial factorization in memory.

Lastly, we stored the polynomial variables in a Postgres 9.5.5 database and stored the polynomial factorization in a text file. We perform all experiments on a 64bit Linux machine running Ubuntu 5.4.0. The machine has 120 CPUs and 1 TB of memory2. For the timing results, the Postgres database, which stores all the samples, also resides on this machine and has a shared buffer size of 250 GB.

Experimental Setup

For all our summaries, we ran our solver for 30 iterations or until the error was below $`1 \times 10^{-6}`$ using the method presented in Sec. 3.3. Our summaries took under 1 day to compute with the majority of the time spent building the polynomial and solving for the parameters.

We evaluate EntropyDB on two real datasets as opposed to benchmark data

to measure query accuracy in the presence of naturally occurring

attribute correlations. The first dataset comprises information on

flights in the United States from January 1990 to July 2015 . We load

the data into PostgreSQL, remove null values, and bin all real-valued

attributes into equi-width buckets. We further reduce the size of the

active domain to decrease memory usage and solver execution time by

binning cities such that the two most popular cities in each state are

separated and the remaining less popular cities are grouped into a city

called ‘Other’. We use equi-width buckets to facilitate transforming a

user’s query into our domain and to avoid hiding outliers, but it is

future work to try different bucketization strategies. The resulting

relation,

FlightsFine(fl_date, origin_city, dest_city, fl_time, distance), is 5

GB in size.

To vary the size of our active domain, we also create

FlightsCoarse(fl_date, origin_state, dest_state, fl_time, distance),

where we use the origin state and destination state as flight locations.

The left table in

3 shows the resulting active domain

sizes.

The second dataset is 210 GB in size. It comprises N-body particle

simulation data , which captures the state of astronomy simulation

particles at different moments in time (snapshots). The relation

Particles(density, mass, x, y, z, grp, type, snapshot) contains

attributes that capture particle properties and a binary attribute, grp,

indicating if a particle is in a cluster or not. We bucketize the

continuous attributes (density, mass, and position coordinates) into

equi-width bins. The right table in

3 shows the resulting domain sizes.

|M39pt|M33pt|M33pt|

& Flights Coarse &

Flights Fine

fl_date (FD) & 307 & 307

origin (OS/OC) & 54 & 147

dest (DS/DC) & 54 & 147

fl_time (ET) & 62 & 62

distance (DT) & 81 & 81

# possible tuples & 4.5 × 109 & 3.3 × 1010

Particles |

|

|---|---|

density |

58 |

mass |

52 |

x |

21 |

y |

21 |

z |

21 |

grp |

2 |

type |

3 |

snapshot |

3 |

| # possible | |

| tuples | 5.0 × 108 |

_state. Query Accuracy

We first compare EntropyDB using our best statistic selection techniques of COMPOSITE and 2D sort (see 7.5) to uniform and stratified sampling on the flights dataset. We use one percent samples, which require approximately 100 MB of space when stored in PostgreSQL. To approximately match the sample size, our largest summary requires only 600 KB of space in PostgreSQL to store the polynomial variables and approximately 200 MB of space in a text file to store the polynomial factorization. This, however, could be improved and compressed further beyond what we did in our prototype implementation.

We compute correlations on FlightsCoarse across all attribute pairs

and identify the following pairs as having the largest correlations (C

stands for “coarse”): 1C = (origin_state, distance), 2C =

(destination_state, distance), 3CF = (fl_time, distance)3, and 4C

= (origin_state, destination_state). We use the corresponding

attributes, which are also the most correlated, for the finer-grained

relation and refer to those attribute pairs as 1F, 2F, and 4F.

Following the discussion in 6, we have two parameters to vary: $`B_a`$ (“breadth”) and $`B_s`$ (“depth”). In order to keep the total number of statistics constant, we require that $`B_a*B_s = 3000`$. This threshold allows for the polynomial to be built and solved in under a day. Using this threshold, we build four summaries to show the difference in choosing statistics based solely on correlation (choosing statistics in order of most to least correlated) versus attribute cover (choosing statistics that cover the attributes with the highest combined correlation). The first summary, No2D, contains only 1D statistics. The next two, Ent1&2 and Ent3&4, use 1,500 statistics across the attribute pairs (1, 2) and (3, 4), respectively. The final one, Ent1&2&3, uses 1,000 statistics for the three attribute pairs (1, 2, 3). We do not include 2D statistics related to the flight date attribute because this attribute is relatively uniformly distributed and does not need a 2D statistic to correct for the MaxEnt’s underlying uniformity assumption. 4 summarizes the summaries.

For sampling, we choose to compare with a uniform sample and four different stratified samples. We choose the stratified samples to be along the same attribute pairs as the 2D statistics in our summaries; , pair 1 through pair 4.

| MaxEnt Method | No2D | 1&2 | 3&4 | 1&2&3 | |

|---|---|---|---|---|---|

| Pair 1 | (origin, distance) | X | X | ||

| Pair 2 | (dest, distance) | X | X | ||

| Pair 3 | (time, distance) | X | X | ||

| Pair 4 | (origin, dest) | X |

To test query accuracy, we use the following query template:

SELECT A1,..., Am, COUNT(*)

FROM R WHERE A1=`v1' AND ... AND Am=`vm'

GROUP BY A1,..., Am

We test the approaches on 400 unique (A1,.., Am) values. We choose the

attributes for the queries in a way that illustrates the strengths and

weaknesses of EntropyDB. For the selected attributes, 100 of the values

used in the experiments have the largest count (heavy hitters), 100 have

the smallest count (light hitters), and 200 (to match the 200 existing

values) have a zero true count (nonexistent/null values). To evaluate

the accuracy of EntropyDB, we compute a $`|true-est|/(true+est)`$ (a

measure of relative difference) on the heavy and light hitters. To

evaluate how well EntropyDB distinguishes between rare and nonexistent

values, we compute the F measure,

2*\textrm{precision}*\textrm{recall}/(\textrm{precision}+\textrm{recall})with

\textrm{precision} = \frac{|\{est_t > 0\ :\ t \in \textrm{light hitters}\}|}{|\{est_t > 0\ :\ t \in (\textrm{light hitters}\ \cup\ \textrm{null values})\}|}and

\textrm{recall} = \frac{|\{est_t > 0\ :\ t \in \textrm{light hitters}\}|}{100}.We do not compare the execution time of EntropyDB to sampling for the flights data because the dataset is small, and the execution time of EntropyDB is, on average, below 0.5 seconds and at most 1 sec. Sec. 7.4.1 reports execution time for the larger data.

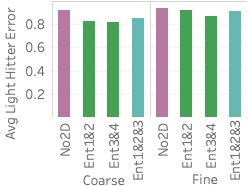

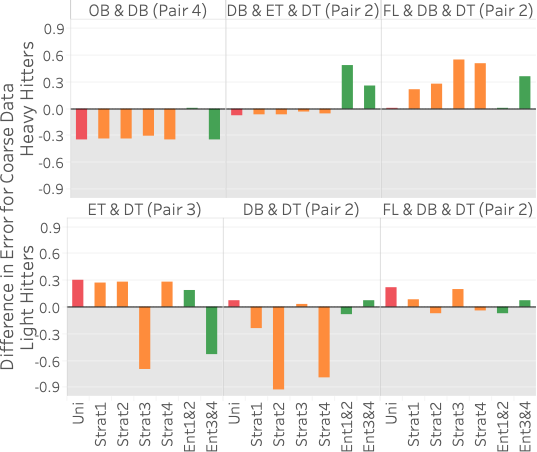

style="width:48.0%" />

style="width:48.0%" />

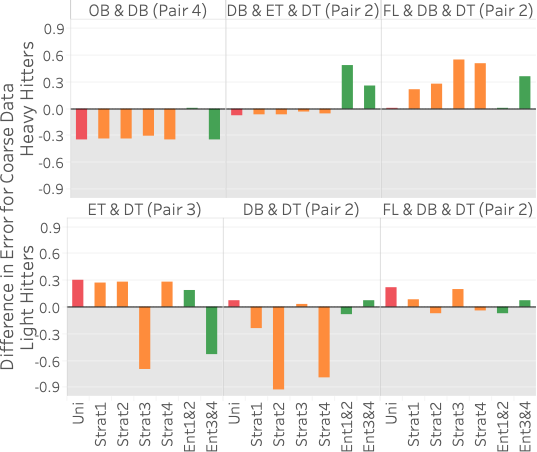

FlightsCoarse. The pair in

parenthesis in the column header corresponds to the 2D statistic pair(s)

used in the query template. For reference, pair 1 is (origin/OB,

distance/DT), pair 2 is (dest/DB, distance/DT), pair 3 is (time/ET,

distance/DT), and pair 4 is (origin/OB, dest/DB). 5 (top) shows query error

differences between all methods and Ent1&2&3 (, average error for method

X minus average error for Ent1&2&3) for three different heavy hitter

queries over FlightsCoarse. Hence, bars above zero indicate that

Ent1&2&3 performs better and vice versa. Each of the three query

templates uses a different set of attributes that we manually select to

illustrate different scenarios. The attributes of the query are shown in

the column header in the figure, and any 2D statistic attribute-pair

contained in the query attributes is in parentheses. Each bar shows the

average of 100 query instances selecting different values for each

template.

As the figure shows, Ent1&2&3 is comparable or better than sampling on two of the three queries and does worse than sampling on query 1. The reason it does worse on query 1 is that it does not have any 2D statistics over 4C, the attribute-pair used in the query, and 4C is fairly correlated. Our lack of a 2D statistic over 4C means we cannot correct for the MaxEnt’s uniformity assumption. On the other hand, all samples are able to capture the correlation because the 100 heavy hitters for query 1 are responsible for approximately 25% of the data. This is further shown by Ent3&4, which has 4C as one of its 2D statistics, doing better than Ent1&2&3 on query 1.

Ent1&2&3 is comparable to sampling on query 2 because two of its 2D statistics cover the three attributes in the query. It is better than both Ent1&2 and Ent3&4 because each of those methods has only one 2D statistic over the attributes in the query. Finally, Ent1&2&3 is better than stratified sampling on query 3 because it not only contains a 2D statistic over 2C but also correctly captures the uniformity of flight date. This uniformity is also why Ent1&2 and a uniform sample do well on query 3. Another reason stratified sampling performs poorly on query 3 is because the result is highly skewed in the attributes of destination state and distance but remains uniform in flight date. The top 100 heavy hitter tuples all have the destination of ‘CA’ with a distance of 300. This means even a stratified sample over destination state and distance will likely not be able to capture the uniformity of flight date within the strata for ‘CA’ and 300 miles.

5 (bottom) shows results for

three different light hitter queries over FlightsCoarse. In this case,

EntropyDB always does better than uniform sampling. Our performance

compared to stratified sampling depends on the stratification and query.

Stratified sampling outperforms Ent1&2&3 when the stratification is

exactly along the attributes involved in the query. For example, for

query 1, the sample stratified on pair 3 outperforms EntropyDB by a

significant amount because pair 3CF is computed along the attributes in

query 1. Interestingly, Ent3&4 and Ent1&2 do better than Ent1&2&3 on

query 1 and query 2, respectively. Even though both of the query

attributes for query 1 and query 2 are statistics in Ent1&2&3, Ent1&2

and Ent3&4 have more statistics and are thus able to capture more zero

elements. Lastly, we see that for query 3, we are comparable to

stratified sampling because we have a 2D statistic over pair 2C, and the

other attribute, flight date, is relatively uniformly distributed in the

query result.

We ran the same queries over the FlightsFine dataset and found

identical trends in error difference. We therefore omit the graph.

style="width:98.0%" />

style="width:98.0%" />

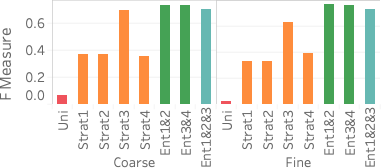

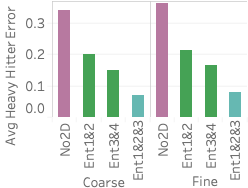

FlightsCoarse (left) and FlightsFine

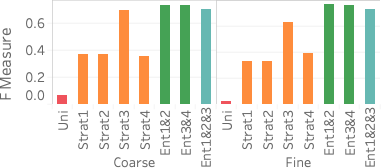

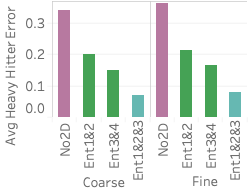

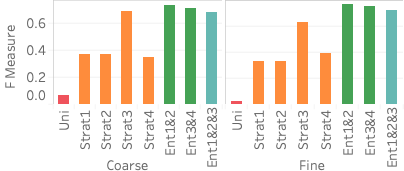

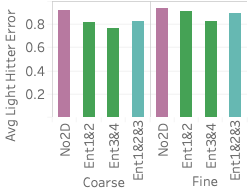

(right).An important advantage of our approach is that it more accurately distinguishes between rare values and nonexistent values compared with stratified sampling, which often does not have samples for rare values when the stratification does not match the query attributes. To assess how well our approach works on those rare values, 6 shows the average F measure over fifteen 2- and 3-dimensional queries selecting light hitters and null values.

We see that Ent1&2 and 3&4 have F measures close to 0.72, beating all stratified samples and also beating Ent1&2&3. The key reason why they beat Ent1&2&3 is that these summaries have the largest numbers of statistics, which ensures they have more fine grained information and can more easily identify regions without tuples. Ent1&2&3 has an F measure close to 0.69, which is slightly lower than the stratified sample over pair 3CF but better than all other samples. The reason the sample stratified over pair 3CF performs well is that the flight time attribute has a more skewed distribution and has more rare values than other dimensions. A stratified sample over that dimensions will be able to capture this. On the other hand, Ent1&2&3 will estimate a small count for any tuple containing a rare flight time value and will be rounded to 0.

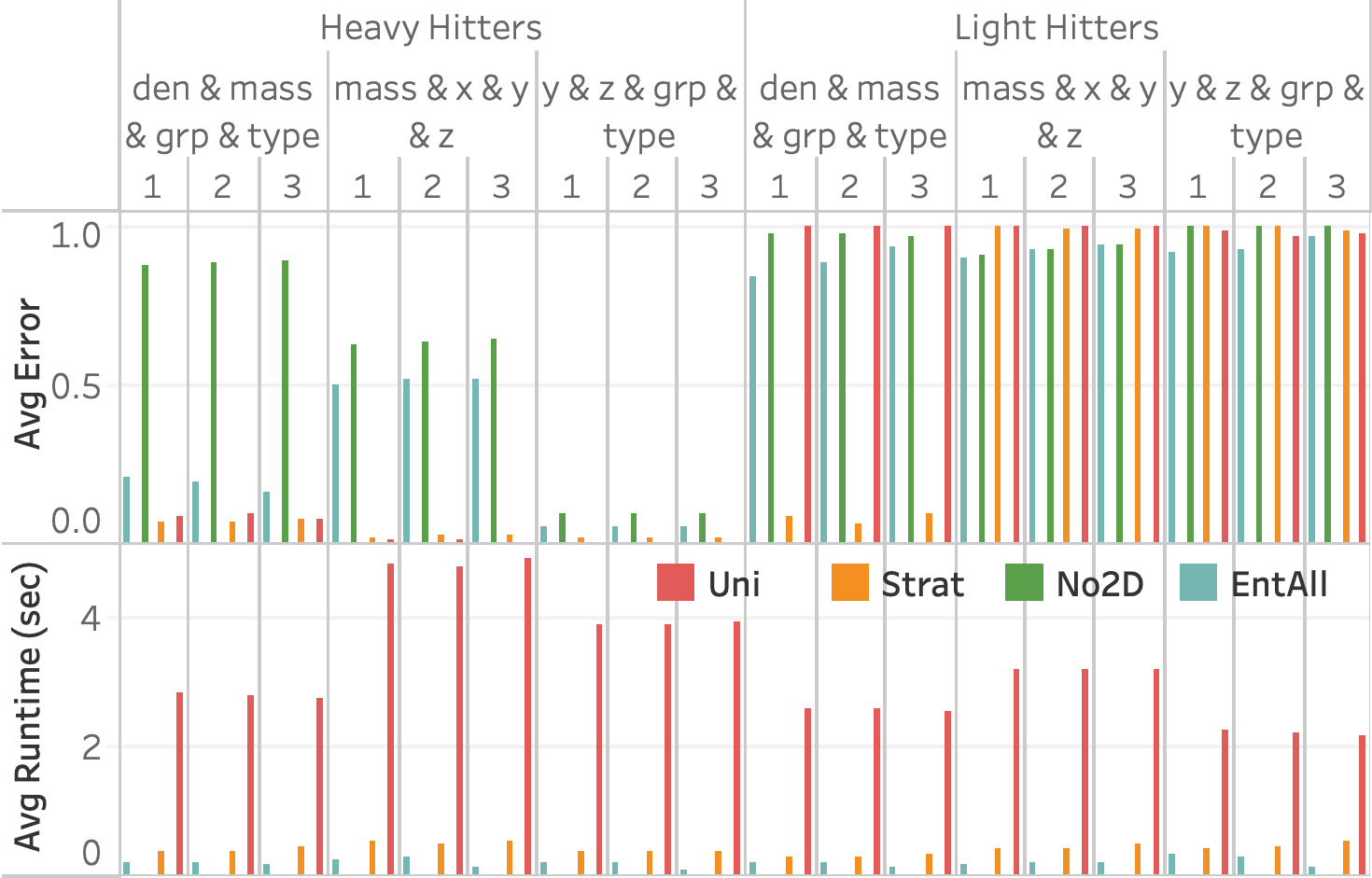

Execution Times

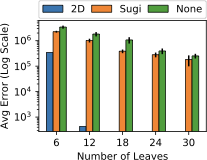

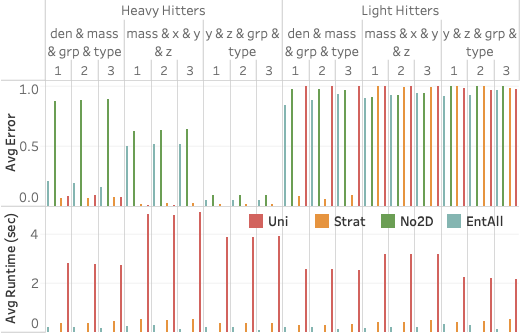

Scalability

To measure the performance of EntropyDB on large-scale datasets, we use

three subsets of the 210 GB Particles table. We select data for one,

two, or all three snapshots (each snapshot is approximately 70 GB in

size). We build a 1 GB uniform sample for each subset of the table as

well as a stratified sample over the pair density and group with the

same sampling percentage as the uniform sample. We then build two MaxEnt

summaries; EntNo2D uses no 2D statistics, and EntAll contains 5 2D

statistics with 100 statistics over each of the most correlated

attributes, not including snapshot. We do not use any presorting method

for this experiment. We run a variety of 4D selection queries such as

the ones from

Sec. 7.3, split into heavy

hitters and light hitters. We record the query accuracy and execution

time.

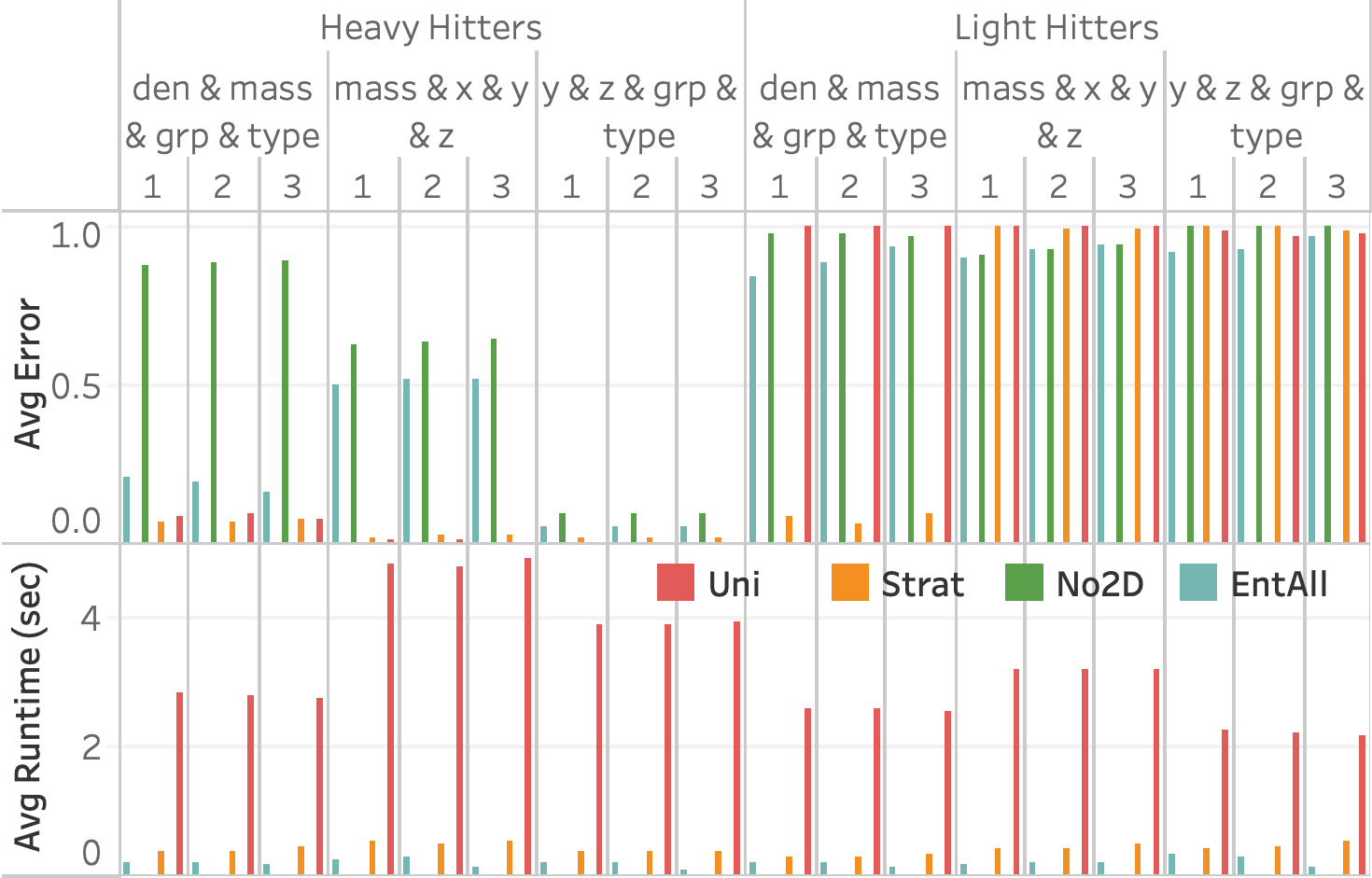

Particles table. The stratified

sample (orange) is stratified on (den, grp).7 shows the query accuracy and execution time for three different selection queries as the number of snapshots increases. We see that EntropyDB consistently does better than sampling on query execution time, although both EntropyDB and stratified sampling execute queries in under one second. Stratified sampling outperforms uniform sampling because the stratified samples are generally smaller than their equally selective uniform sample.

In terms of query accuracy, sampling always does better than EntropyDB

for the heavy hitter queries. This is expected because the bucketization

of Particles is relatively coarse grained, and a 1 GB sample is

sufficiently large to capture the heavy hitters. We do see that EntAll

does significantly better than EntNo2D for query 1 because three of its

five statistics are over the attributes of query 1 while only 1

statistic is over the attributes of queries 2 and 3. However, the query

results of query 3 are more uniform, which is why EntNo2D and EntAll do

well.

For the light hitter queries, none of the methods do well except for the stratified sample in query 1 because the query is over the attributes used in the stratification. EntAll does slightly better than stratified sampling on queries 2 and 3.

Solving Time

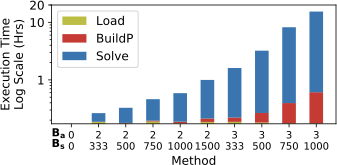

To show the data loading and model solving time of EntropyDB, we use

FlightsFine and measure the time it takes for EntropyDB to read in a

dataset from Postgres to collect statistics, to build the polynomial,

and to solve for the model parameters for various $`B_a`$ and $`B_s`$

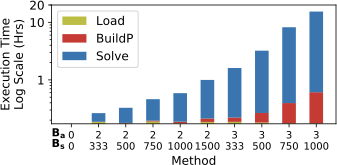

(see 8). When $`B_a = 2`$, we gather

statistics over pair 1 and pair 2 (MaxEnt1&2), and when $`B_a = 3`$, we

gather statistics over pair 1, 2, and 3 (MaxEnt3).)

style="width:47.0%" />

style="width:47.0%" />

FlightsFine.We see that the overall polynomial building and solving execution time grows exponentially as $`B_a`$ and $`B_s`$ increase while the data loading time remains constant. The smallest model has a execution time of 10.5 minutes while largest model (MaxEnt3) has a execution time of 15.4 hours. The experiment further demonstrates that $`B_a`$ impacts execution time more than $`B_s`$. The method with $`B_a = 2, B_s = 750`$ has a faster execution time than the method with $`B_a = 3, B_s = 500`$ even though the total number of statistics, 1,500 in both, is the same.

Note that the data loading time (yellow) will increase as the dataset gets larger, but once all the histograms and statistics are computed, the time to build the polynomial and solver time are independent of the original data size; they only depend on the model complexity.

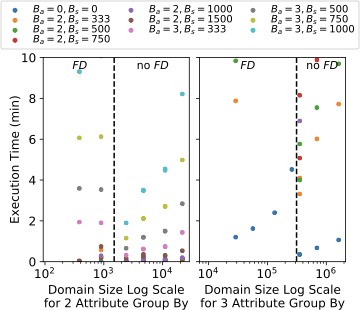

Group By Queries

To further expand on execution time results, we measure the execution time to compute eight various 2- and 3-dimensional group-by queries instead of single point queries (sixteen group-by queries in total) to show how the execution time depends on the active domain. As EntropyDB can only issue a single point query at a time (the query evaluation is already parallelized), the group-by queries are run as sequences of point queries over the domain of the query attributes.

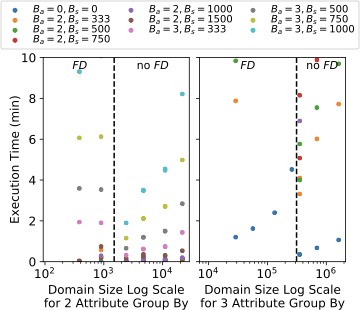

9 shows a scatter plot of the

query domain size versus the execution time for 2- and 3-dimensional

group-by queries for the same models as used

in 7.4.2

($`B_a = 0, 2, 3`$ and $`B_s`$ varying form 333 to 1500). If a model

takes longer than 10 minutes to compute a group-by query, we terminate

its execution. Each color represents a different combination of $`B_a`$

and $`B_s`$. Note that running a 3-dimensional group-by query on

Postgres on the full FlightsFine can take up to 17 minutes.

style="width:47.0%" />

style="width:47.0%" />

FlightsFine for various configurations of Ba and Bs. The dashed

line demarcates queries with fl_date as a group-by

attribute and those that do not.The overall trend we see is that models with a larger $`B_a`$ are slower to execute, and for models with the same $`B_a`$, larger $`B_s`$ is slower. For example, the average execution for 2-dimensional group-by queries for $`B_a = 2`$ is 8 seconds while it is 87 seconds for $`B_a = 3`$. This is not surprising and matches the results from 8. We again see that $`B_a = 2, B_s = 750`$ is faster than $`B_a = 3, B_s = 500`$ even though the total number of statistics is the same.

Some of the large models had an execution time of longer than 10 minutes for some of the 3-dimensional queries, which is why their scatter point is not shown on all queries. Even though their execution time was more than 10 minutes, each individual point query still ran in under a second.

The results also show a surprising trend in that the execution time dips

after the black dashed line and then starts slowing increasing again.

This dashed black line demarcates queries containing the fl_date

attribute, the one attribute not included in any 2-dimensional

statistic. Note that because the active domain of FD is small, the

smaller domain queries happen to contain FD, but the size of the

domain is independent of the dip in the execution time.

This unintuitive result is explained by the optimizations in 5.2. By using bit vectors and maps to indicate which attributes and variables are contained in a polynomial subterm, we can quickly decide if that subterm needs to be set to zero or not for query evaluation. This mainly improves evaluation of correction subterms ($`(\delta - 1)`$ times 1D sums) because the 1D sums contain subsets of the active domain and are more likely overlap with the variables that can be set to zero. The more quickly we can decide if a subterm is zero, the faster the evaluation.

For example, take the polynomial in 13. If we are evaluating a query for $`A = 155 \land B = 700 \land C = 700`$, then all other $`\alpha`$, $`\beta`$, and $`\gamma`$ variables need to be set to zero except $`\alpha_{155}`$, $`\beta_{700}`$, and $`\gamma_{700}`$. This means the polynomial sums on lines 3, 5, 6, and 7 can all be set to zero without having to evaluate each individual subterm on those lines because $`\alpha_{155}`$, $`\beta_{700}`$, and $`\gamma_{700}`$ are not contained in any of those subterms and $`A`$, $`B`$, and $`C`$ attributes are meant to be zero.

The FD attribute being one of the group-by attributes indicates that

all variables representing FD except for the one being selected can be

set to zero. However, as FD is not part of a statistic, there are no

correction terms being multiplied by subsets of the FD active domain.

Therefore, there are fewer chances to set a subterm to zero, meaning the

overall query execution is slower.

This evaluation presents and interesting tradeoff between model size, statistic attributes, and query execution. One the one hand, a larger model will take longer to run, in general. On the other hand, more statistics allow for more zero setting optimizations in query evaluation. We also see that while EntropyDB can handle 2-dimensional group-by queries, especially if $`B_a = 2`$, it struggles to perform for 3-dimensional ones. However, as the strength of EntropyDB is in querying for light hitters, EntropyDB will miss fewer groups than sampling techniques which are more impacted by heavy hitters. We leave it as future work further optimize large domain group-by queries.

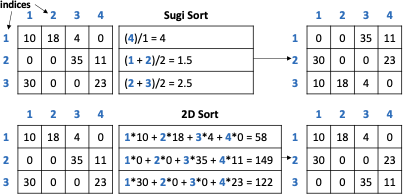

Statistic Selection

Selection Technique

style="width:30.0%" />

style="width:30.0%" />

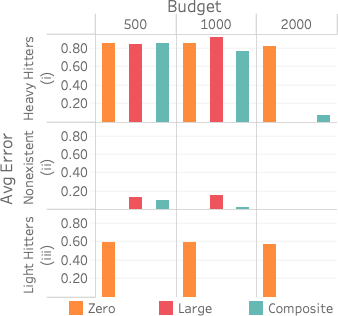

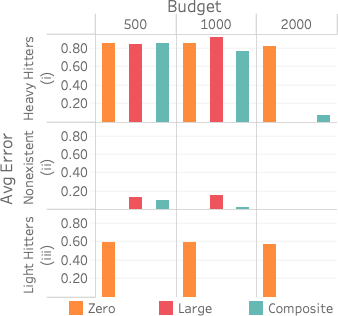

We evaluate the three different statistic selection heuristics,

described in 6.1, on

FlightsCoarse restricted to the attributes (date, time, distance). We

gather statistics using the three different techniques and using

different budgets on the attribute pair (time, distance). There are

5,022 possible 2D statistics, 1,334 of which exist in FlightsCoarse.

We evaluate the accuracy of the resultant count of the query

SELECT time, dist, COUNT(*)

FROM Flights WHERE time = x AND dist = y

GROUP BY time, dist

for 100 heavy hitter (x, y) values, 100 light hitter (x, y) values, and 200 random (x, y) nonexistent/zero values. We choose 200 zero values to match the 100+100 heavy and light hitters.

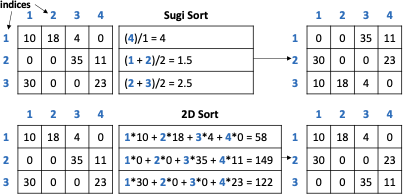

10 (i) plots the query accuracy versus method and budget for 100 heavy hitter values. Both LARGE and COMPOSITE achieve almost zero error for the larger budgets while ZERO gets around 60 percent error no matter the budget.

(ii) plots the same for nonexistent values, and clearly ZERO does best because it captures the zero values first. COMPOSITE, however, gets a low error with a budget of 1,000 and outperforms LARGE. Interestingly, LARGE does slightly worse with a budget of 1,000 than 500. This is a result of the final value of $`P`$ being larger with a larger budget, and this makes our estimates slightly higher than 0.5, which we round up to 1. With a budget of 500, our estimates are slightly lower than 0.5, which we round down to 0.

Lastly, (iii) plots the same for 100 light hitter values, and while LARGE eventually outperforms COMPOSITE, COMPOSITE gets similar error for all budgets. In fact, COMPOSITE outperforms LARGE for a budget of 1,000 because LARGE predicts that more of the light hitter values are nonexistent than it does with a smaller budget as less weight is distributed to the light hitter values.

Unsurprisingly, we see that COMPOSITE is the best method to use across all queries. However, the COMPOSITE method is more complex and takes more time to compute. We do learn that if heavy hitter queries are the only relevant queries in a particular workload, it is unnecessary to use the COMPOSITE method as LARGE does just as well. Also, ZERO is the best if existence queries are the most important (if determining set containment). So while COMPOSITE is best for a handling a variety of queries, it may not be necessary, depending on the query workload.

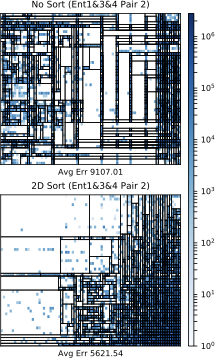

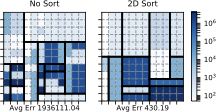

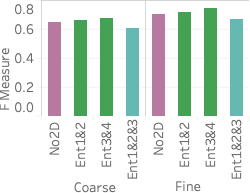

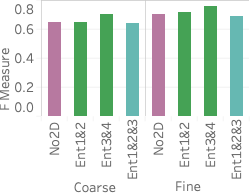

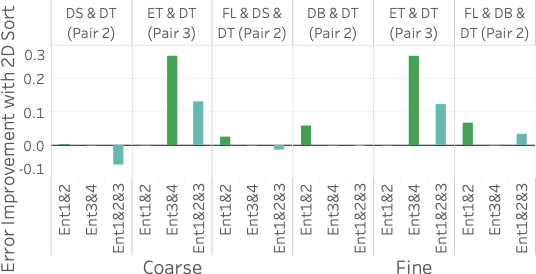

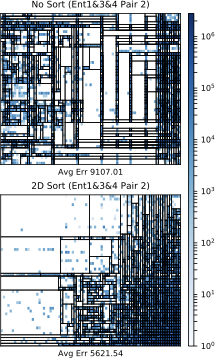

Statistic Accuracy

We now investigate, in more detail, how the different 2D statistic

attribute choices and how presorting the matrix impacts query accuracy.

We look at the query accuracy of the four different MaxEnt methods used

in 5 using both 2D sort and no

sort. We also include the MaxEnt method No2D for comparison although it

does not use any sorting. The no sort technique maintains the natural

ordering of the domains. We use FlightsCoarse and FlightsFine and

the query templates

from 7.3. We run six different

two-attribute selection queries over all possible pairs of the

attributes covered by pair 1 through 4; , origin, destination, time, and

distance. We select 100 heavy hitters, 100 light hitters, and 200 null

values.

FlightsCoarse and FlightsFine using no sort

(left side) and 2D sort (right

side).11 shows the average error for the heavy hitters and the light hitters and shows the average F measure across the six queries. The left side shows the error when no presorting is used, and the right side shows the error when 2D sort is used. We first consider the different attribute selections. We see that the summary with more attribute pairs but fewer buckets (more “breadth”), Ent1&2&3, does best on the heavy hitters. On the other hand, for the light hitters, we see that the summary with fewer attribute pairs but more buckets (more “depth”) and still covers the attributes, Ent3&4, does best. Ent3&4 doing better than Ent1&2 implies that choosing the attribute pairs that cover the attributes yields better accuracy than choosing the most correlated pairs because even though Ent1&2 has the most correlated attribute pairs, it does not have a statistic containing flight time. Lastly, Ent1&2&3 does best on the heavy hitter queries yet slightly worse on the light hitter queries because it does not have as many buckets as Ent1&2 and Ent3&4 and can thus not capture as many regions in the active domains with no tuples.

When considering presorting the data, we see that 2D sort does not have any significant impact on heavy hitter accuracy, with an improvement on the order of 0.001. For light hitters, we see a slight average error improvement using 2D sort, and for the F measure, we see a slight decrease in measure. Ent2&3 has the largest improvement in light hitter query error because of the improvement in resorting pair 3 (distance and time) along with its large K-D tree leaf budget. Ent2&3 has 1,500 K-D tree leaves which is enough to capture the 1,334 nonzero values of pair 3.

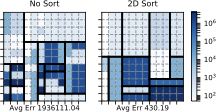

style="width:50.0%" />

style="width:50.0%" />

FlightsCoarse that is unsorted

(left) and sorted using the 2D sort

algorithm (right). The average K-D tree error is shown

below.The decrease in F measure and limited improvement for query accuracy is

best explained by looking at the sorted and unsorted frequency heatmaps

and K-D trees of the pair 2C for Ent1&2&3 on FlightsCoarse, shown

in 12. We see that

the average K-D tree error does, in fact, decrease, which should

indicate an improvement in accuracy and F measure. However, upon closer

inspection of the K-D tree leaves, we see that the sorted tree actually

has put more zeros in leaves with some small, nonzero values. This, in

turn, causes MaxEnt1&2&3 to believe those zeros actually exist,

therefore decreasing the F measure. This result implies that improving

K-D tree error is not always enough to guarantee a high F measure

because every zero that is in a leaf with some nonzero value will be

misclassified as existing. We presented, EntropyDB, a new approach to

generate probabilistic database summaries for interactive data

exploration using the Principle of Maximum Entropy. Our approach is

complementary to sampling. Unlike sampling, EntropyDB’s summaries strive

to be independent of user queries and capture correlations between

multiple different attributes at the same time. Results from our

prototype implementation on two real-world datasets up to 210 GB in size

demonstrate that this approach is competitive with sampling for queries

over frequent items while outperforming sampling on queries over less

common items.

Acknowledgments This work is supported by NSF 1614738 and NSF 1535565. Laurel Orr is supported by the NSF Graduate Research Fellowship.

We now discuss two logical optimizations: (1) summary compression in Sec. 4.1 and (2) optimized query processing in Sec. 4.2. In 5, we discuss the implementation of these optimizations.

Compression of the Data Summary

The summary consists of the polynomial $`P`$ that, by definition, has $`|Tup|`$ monomials where $`|Tup| = \prod_{i=1}^m N_i`$. We describe a technique that compresses the summary by factorizing the polynomial to a size closer to $`O(\sum_i N_i)`$ than $`O(\prod_i N_i)`$.

Before walking through a more complex example describing the factorization process, we show the factorized version of the polynomial from 4.

Example 1. *Recall that our relation has three attributes $`A`$, $`B`$, and $`C`$ with domain size of 2, and our summary has four multidimensional statistics. The factorization of P is

\begin{align}

\label{eq:pnew_factored}

P = &(\alpha_{1} + \alpha_{2})(\beta_{1} + \beta_{2})(\gamma_{1} + \gamma_{2}) + \nonumber \\

&(\gamma_{1} + \gamma_{2})(\alpha_{1}\beta_{1}(\mathcolor{red}{[\alpha\beta]_{1,1}} - 1) + \alpha_{2}\beta_{2}(\mathcolor{red}{[\alpha\beta]_{2,2}} - 1)) + \nonumber \\