This paper introduces Admissibility Alignment: a reframing of AI alignment as a property of admissible action and decision selection over distributions of outcomes under uncertainty, evaluated through the behavior of candidate policies. We present MAP-AI (Monte Carlo Alignment for Policy) as a canonical system architecture for operationalizing admissibility alignment, formalizing alignment as a probabilistic, decision-theoretic property rather than a static or binary condition.

MAP-AI, a new control-plane system architecture for aligned decision-making under uncertainty, enforces alignment through Monte Carlo estimation of outcome distributions and admissibility-controlled policy selection rather than static model-level constraints. The framework evaluates decision policies across ensembles of plausible futures, explicitly modeling uncertainty, intervention effects, value ambiguity, and governance constraints. Alignment is assessed through distributional properties including expected utility, variance, tail risk, and probability of misalignment rather than accuracy or ranking performance. This approach distinguishes probabilistic prediction from decision reasoning under uncertainty and provides an executable methodology for evaluating trust and alignment in enterprise and institutional AI systems. The result is a practical foundation for governing AI systems whose impact is determined not by individual forecasts, but by policy behavior across distributions and tail events. Finally, we show how distributional alignment evaluation can be integrated into decision-making itself, yielding an admissibility-controlled action selection mechanism that alters policy behavior under uncertainty without retraining or modifying underlying models.

As artificial intelligence systems transition from passive prediction engines to systems that act, intervene, and optimize in the world, the question of alignment necessarily changes in kind. Predictive accuracy, ranking performance, and static safety checks are no longer sufficient to characterize system behavior once decisions propagate through uncertain environments, interact with human values, and generate irreversible outcomes. In such settings, alignment is not a property that can be asserted pointwise or certified once; it is a property that emerges from how a decision Policy behaves across distributions of possible futures. Alignment is not a property of internal cognition or belief correctness; it is a property of the external behavior induced by a decision Policy operating under uncertainty.

The alignment system architecture introduced in this paper operationalizes this definition through Monte Carlo-based alignment stress testing. By simulating Policy rollouts across ensembles of plausible environments and value specifications, the approach makes explicit the distribution of outcomes a system is likely to induce, including low-probability but high-impact events. Monte Carlo simulation is not introduced as a novel algorithmic contribution, but as a canonical instantiation of a more general requirement: alignment must be evaluated by examining Policy behavior under uncertainty, not inferred from isolated predictions or training-time objectives.

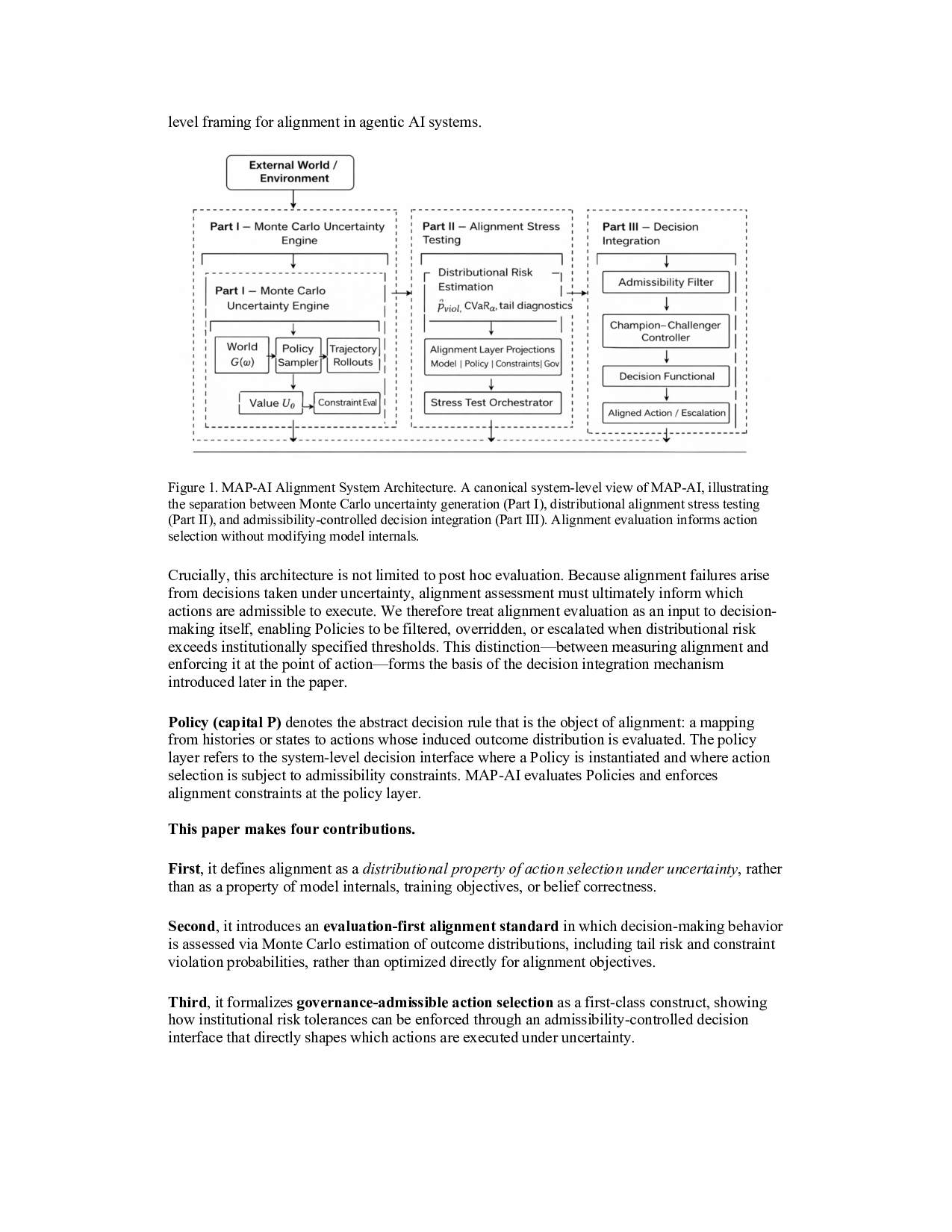

To operationalize alignment as a distributional property of decision-making under uncertainty, we introduce the MAP-AI Alignment System Architecture (Figure 1). The architecture decomposes alignment evaluation and control into three interacting components. Part I is a Monte Carlo uncertainty engine that generates distributions over possible futures by jointly sampling world realizations, Policy behavior, trajectory evolution, value uncertainty, and constraint realization. Part II performs cross-layer alignment stress testing by estimating distributional risk measuressuch as constraint violation probability and tail risk-and projecting these risks across the model, Policy, constraint, and governance layers of the system. Part III integrates these risk signals into decision control through an admissibility filter and a champion-challenger decision loop, ensuring that only policies satisfying institutionally specified governance thresholds are eligible for execution. Together, these components close the alignment loop: uncertainty is transformed into decision-relevant constraints that directly shape which actions an agent is permitted to take. This architecture is agnostic to model internals and task domain, and is intended as a canonical system-level framing for alignment in agentic AI systems. Crucially, this architecture is not limited to post hoc evaluation. Because alignment failures arise from decisions taken under uncertainty, alignment assessment must ultimately inform which actions are admissible to execute. We therefore treat alignment evaluation as an input to decisionmaking itself, enabling Policies to be filtered, overridden, or escalated when distributional risk exceeds institutionally specified thresholds. This distinction-between measuring alignment and enforcing it at the point of action-forms the basis of the decision integration mechanism introduced later in the paper.

Policy (capital P) denotes the abstract decision rule that is the object of alignment: a mapping from histories or states to actions whose induced outcome distribution is evaluated. The policy layer refers to the system-level decision interface where a Policy is instantiated and where action selection is subject to admissibility constraints. MAP-AI evaluates Policies and enforces alignment constraints at the policy layer.

First, it defines alignment as a distributional property of action selection under uncertainty, rather than as a property of model internals, training objectives, or belief correctness.

Second, it introduces an evaluation-first alignment standard in which decision-making behavior is assessed via Monte Carlo estimation of outcome distributions, including tail risk and constraint violation probabilities, rather than optimized directly for alignment objectives.

Third, it formalizes governance-admissible action selection as a first-class construct, showing how institutional risk tolerances can be enforced through an admissibility-controlled decision interface that directly shapes which actions are executed under uncertainty.

Fourth, it demonstrates via stress tests that systems with equivalent predictive performance can exhibit sharply divergent alignment behavior in the tails, underscoring the insufficiency of expectation-based evaluation for alignment-critical systems.

Conceptually, this work is aligned with prior decision-theoretic treatments of AI alignment, particularly those emphasizing uncertainty over objectives and the evaluation of policies rathe

This content is AI-processed based on open access ArXiv data.