Human conversation is organized by an implicit chain of thoughts that manifests as timed speech acts. Capturing this causal pathway is key to building natural full-duplex interactive systems. We introduce a framework that enables reasoning over conversational behaviors by modeling this process as causal inference within a Graph-of-Thoughts (GoT). Our approach formalizes the intent-to-action pathway with a hierarchical labeling scheme, predicting high-level communicative intents and low-level speech acts to learn their causal and temporal dependencies. To train this system, we develop a hybrid corpus that pairs controllable, event-rich simulations with human-annotated rationales and real conversational speech. The GoT framework structures streaming predictions as an evolving graph, enabling a multimodal transformer to forecast the next speech act, generate concise justifications for its decisions, and dynamically refine its reasoning. Experiments on both synthetic and real duplex dialogues show that the framework delivers robust behavior detection, produces interpretable reasoning chains, and establishes a foundation for benchmarking conversational reasoning in full-duplex spoken dialogue systems.

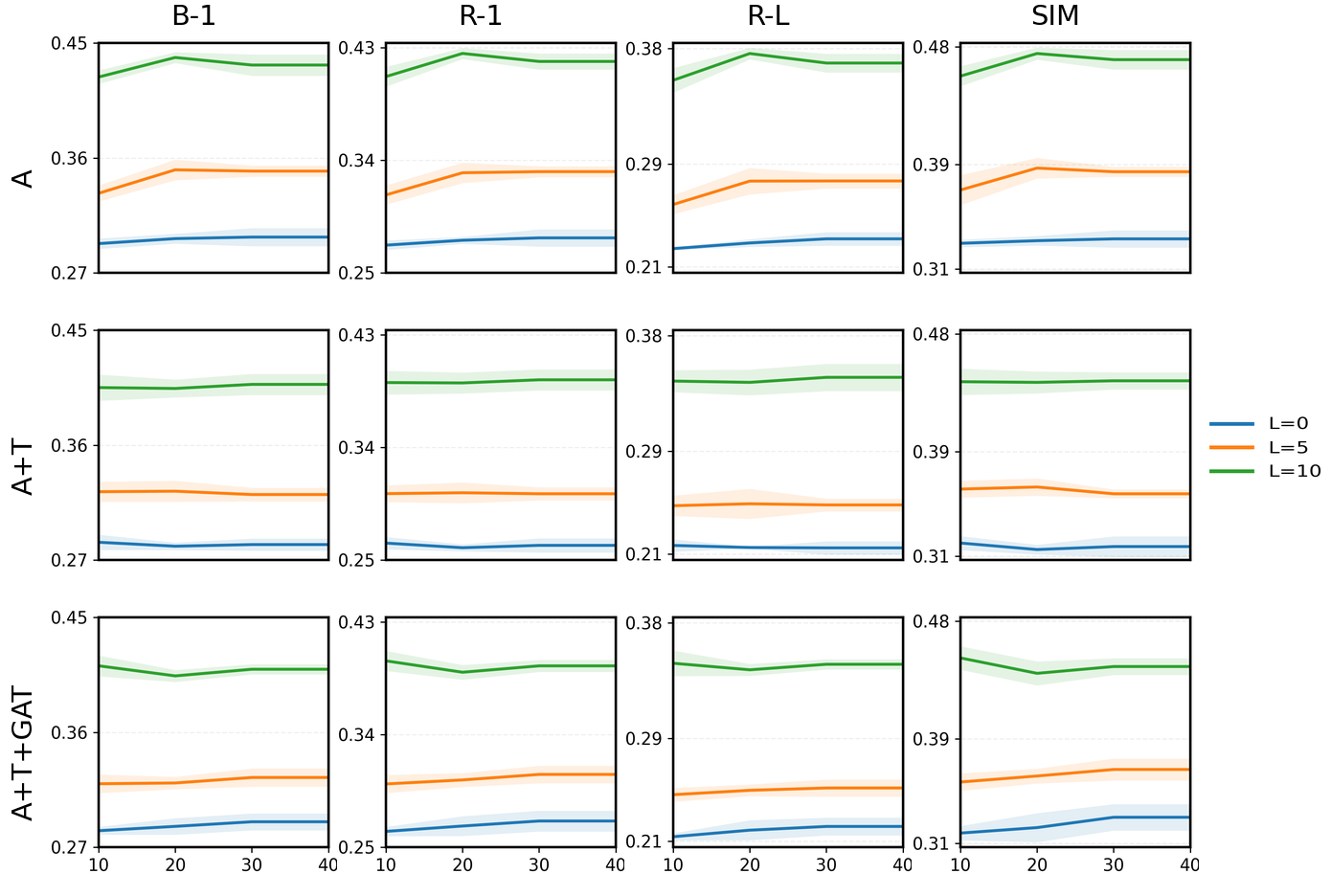

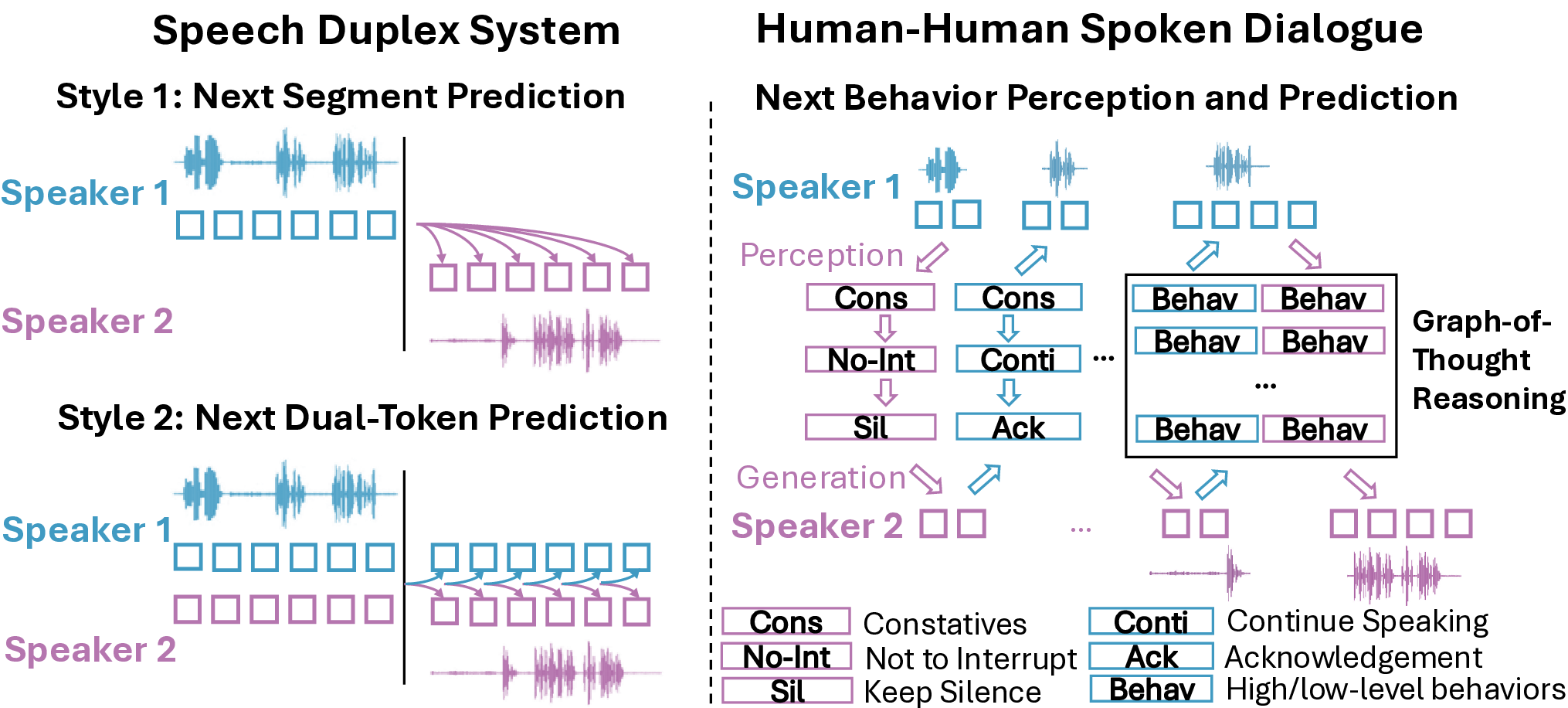

Recent advances in spoken dialogue systems have shifted from turn-based, half-duplex models to full-duplex systems capable of simultaneous listening and speaking (Arora et al., 2025b; Nguyen et al., 2022b; Inoue et al., 2025). As illustrated in Figure 1 (left), the dominant paradigms frame this task as prediction. The first approach, Next Segment Prediction, models the agent's response as a complete turn (Hara et al., 2018; Li et al., 2022; Lee & Narayanan, 2010). A more recent approach, Next Dual-Token Prediction, generates simultaneous token streams for both speakers to better handle overlap and real-time interaction (Nguyen et al., 2022a; Défossez et al., 2024). While these methods have improved system responsiveness, they treat conversation as a sequence generation problem, bypassing the cognitive layer of reasoning that governs human interaction.

Human conversation, however, operates on a more abstract and causal level. We argue for a paradigm shift from black-box prediction to an explicit process of Next Behavior Perception and Prediction, as depicted in Figure 1 (right). When Speaker 1 produces an utterance, Speaker 2 does not simply predict the next sequence of words. Instead, they first perceive the behavior (e.g., recognizing a constative speech act), which triggers an internal chain of thought (e.g., deciding not to interrupt and to remain silent). This reasoning process culminates in a generated action (e.g., an acknowledgement). This gap between pattern matching and causal reasoning is a fundamental barrier to creating truly natural AI agents. Our work addresses the core scientific question: How can a machine model this perception-reasoning-generation loop to make principled, interpretable decisions in real time?

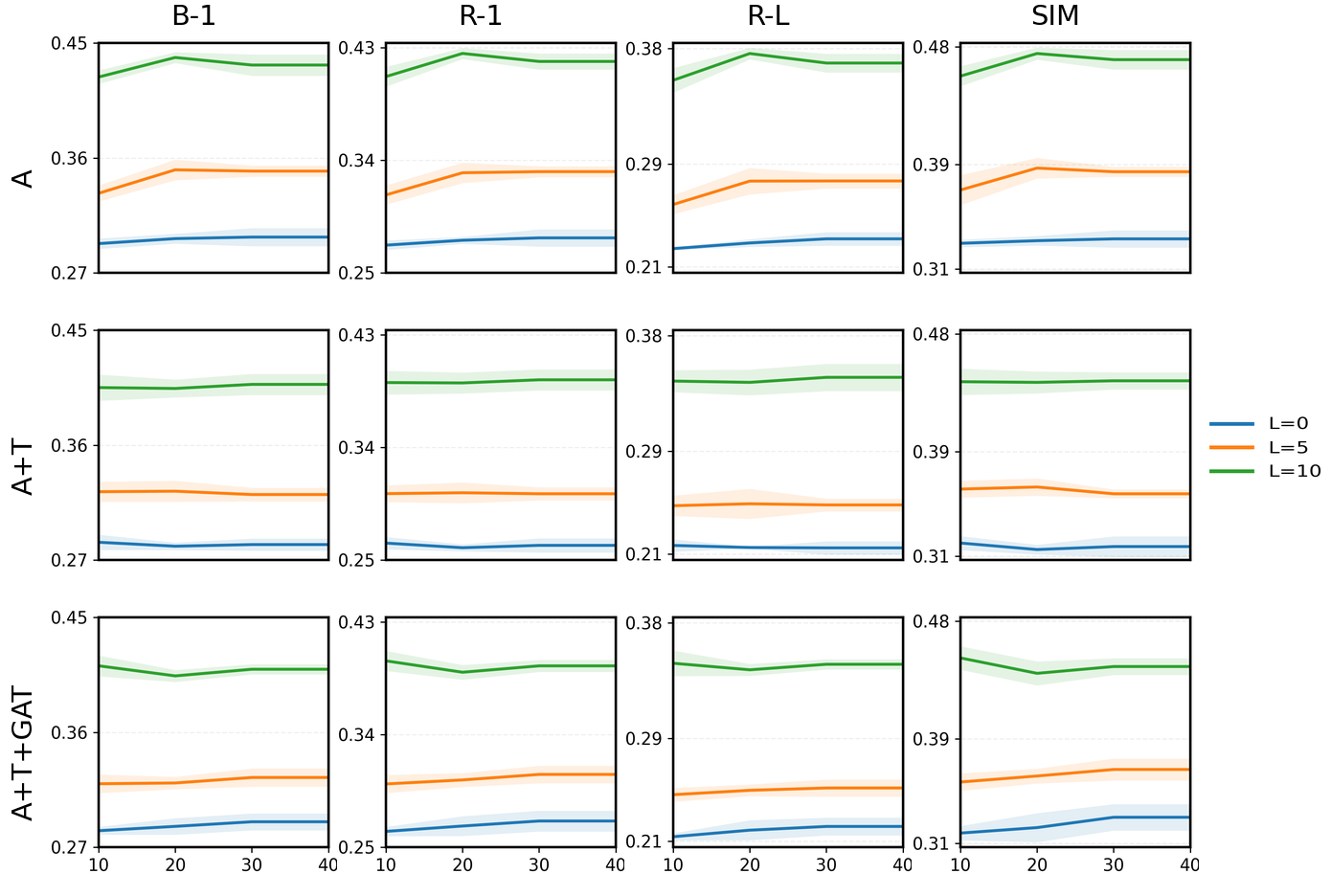

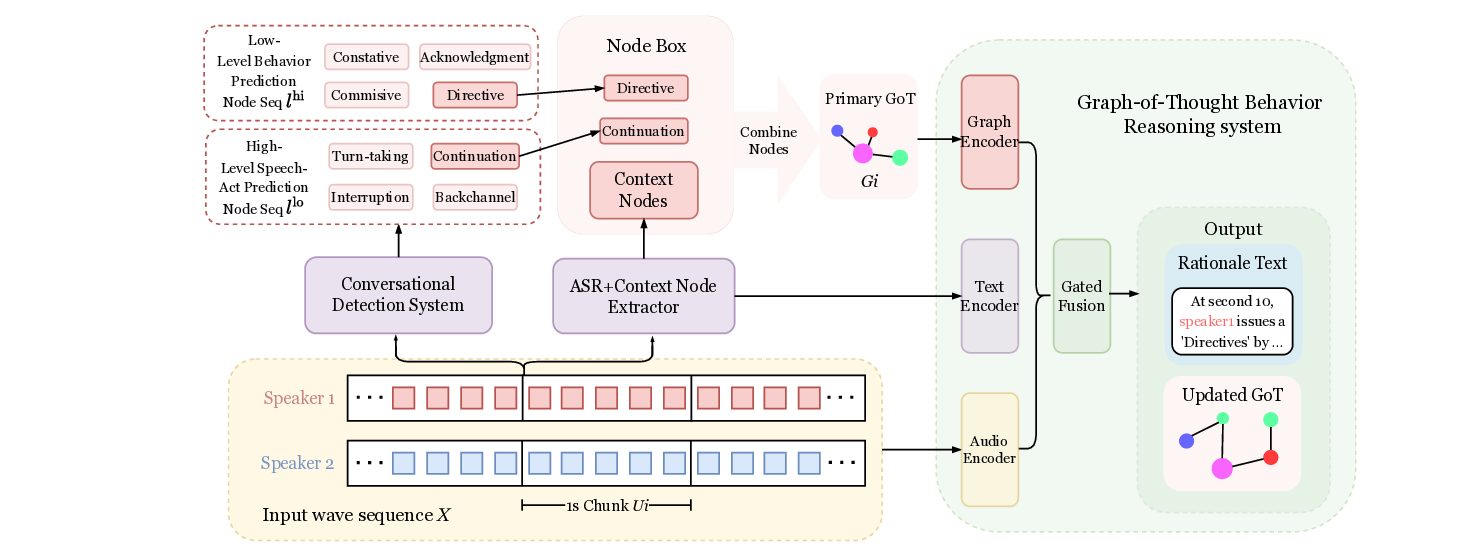

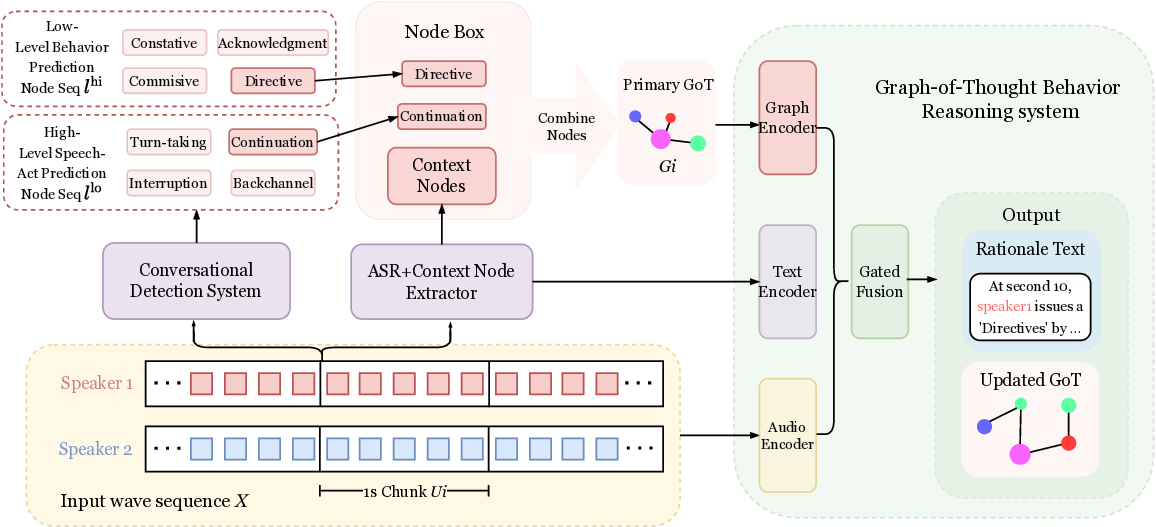

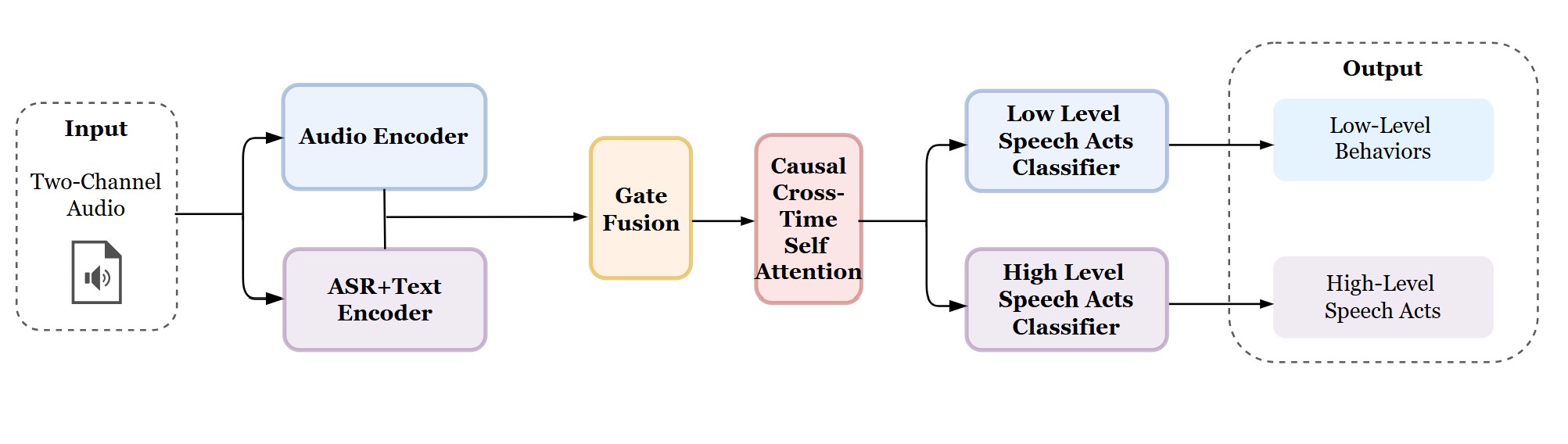

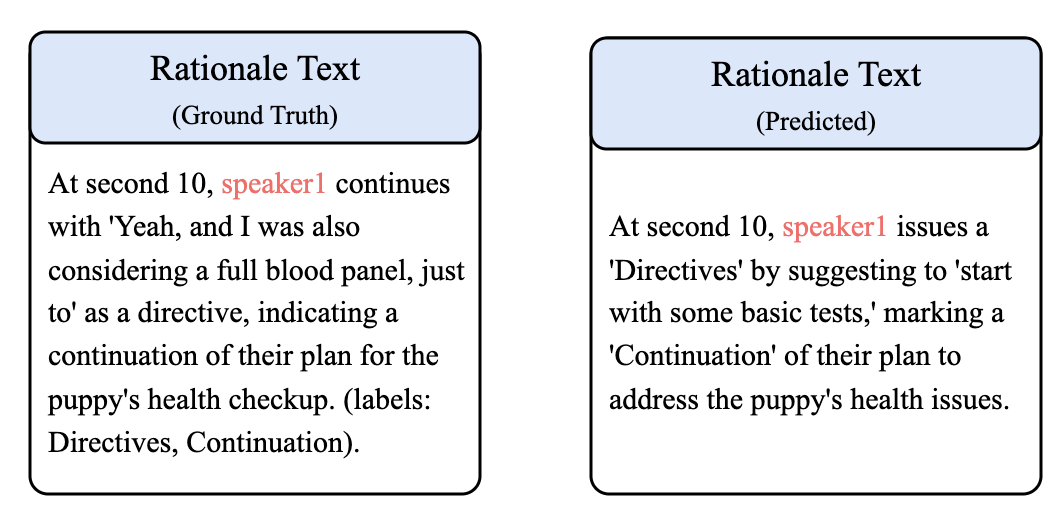

To tackle this challenge, we introduce a framework that operationalizes this perception-reasoning-generation process. Our approach is twofold. First, we formalize the Perception stage with a hierarchical conversational behavior detection model. This module learns to identify conversational behaviors at two levels: high-level speech acts (e.g., constative, directive) (Jurafsky & Martin, 2025) that capture communicative intent, and low-level acts (e.g., turn-taking, backchannel) that describe interaction mechanics (Schegloff, 1982; Gravano & Hirschberg, 2011; Duncan, 1972; Raux & Eskenazi, 2012; Khouzaimi et al., 2016; Marge et al., 2022; Lin et al., 2025b; Arora et al., 2025b; Nguyen et al., 2022b). This provides the system with a structured understanding of the ongoing dialogue. Second, we model the explicit reasoning process with a Graph-of-Thoughts (GoT) system (Yao et al., 2024). This system constructs a dynamic causal graph from the sequence of perceived speech acts, capturing the evolving chain of thought within the conversation. By performing inference over this graph, our model can not only predict the most appropriate subsequent behavior but also generate a natural language rationale explaining its decision. This transforms the opaque prediction task into an auditable reasoning process, which provides a unified benchmark for evaluating conversational behavior in duplex speech systems.
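To make the two-level labeling and the evolving graph concrete, the following is a minimal sketch in Python, not the implementation used in this work. The class names (`HighLevelAct`, `LowLevelAct`, `BehaviorNode`, `ThoughtGraph`), the edge relations (`"precedes"`, `"realized_as"`), and the one-intent-plus-one-act-per-chunk layout are all illustrative assumptions; only the example label names come from the text above.

```python
from dataclasses import dataclass, field
from enum import Enum


class HighLevelAct(Enum):
    # Communicative intent; only the two classes named in the text are listed.
    CONSTATIVE = "constative"
    DIRECTIVE = "directive"


class LowLevelAct(Enum):
    # Interaction mechanics; only the two examples named in the text are listed.
    TURN_TAKING = "turn-taking"
    BACKCHANNEL = "backchannel"


@dataclass(frozen=True)
class BehaviorNode:
    chunk_index: int
    speaker: str
    label: str
    level: str  # "high" (intent) or "low" (act)


@dataclass
class ThoughtGraph:
    """Hypothetical container for a graph that grows one chunk at a time."""
    nodes: list = field(default_factory=list)
    edges: list = field(default_factory=list)  # (source, target, relation)

    def add_chunk(self, chunk_index, speaker, high, low):
        """Append the perceived intent and act for one chunk of audio."""
        intent = BehaviorNode(chunk_index, speaker, high.value, "high")
        action = BehaviorNode(chunk_index, speaker, low.value, "low")
        if self.nodes:
            # Temporal edge: the previous chunk's act precedes the new intent.
            self.edges.append((self.nodes[-1], intent, "precedes"))
        self.nodes.extend([intent, action])
        # Assumed intent-to-action edge within the same chunk.
        self.edges.append((intent, action, "realized_as"))
        return intent, action

    def history(self):
        """Flat view of the graph, in insertion order."""
        return [(n.chunk_index, n.speaker, n.level, n.label) for n in self.nodes]


graph = ThoughtGraph()
graph.add_chunk(0, "speaker_1", HighLevelAct.CONSTATIVE, LowLevelAct.TURN_TAKING)
graph.add_chunk(1, "speaker_2", HighLevelAct.CONSTATIVE, LowLevelAct.BACKCHANNEL)
for source, target, relation in graph.edges:
    print(f"{source.label} --{relation}--> {target.label}")
```

In this sketch the graph is append-only; a system that dynamically refines its reasoning would presumably also need to revise or re-weight earlier edges, which is left out here.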

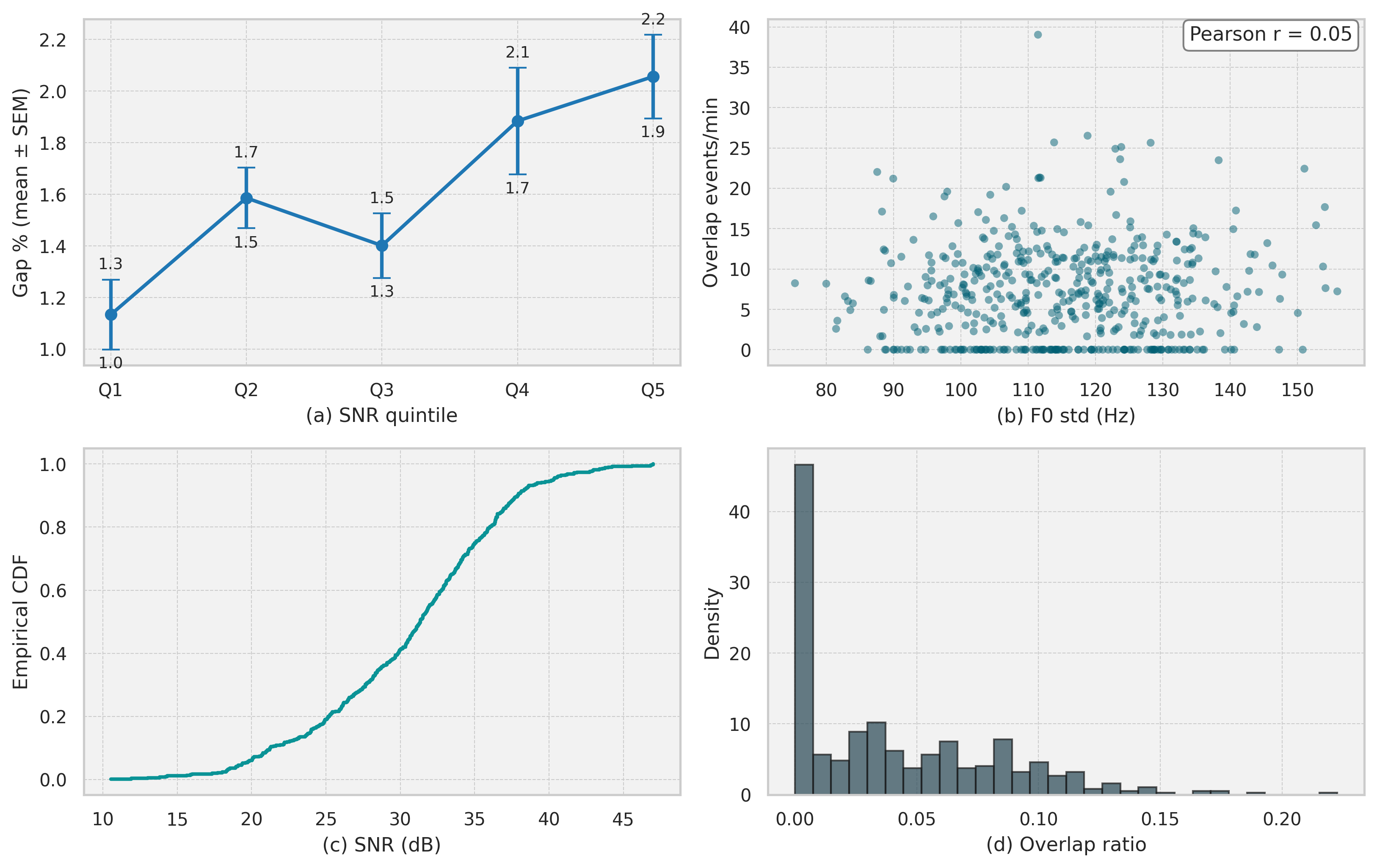

To train our framework, we developed a hybrid corpus that combines behavior-rich simulated dialogues with real-world conversational data annotated with human rationales. Our analysis confirms that the synthetic data reproduces key interactional structures of human conversation, such as turn-taking dynamics.

In summary, our contributions are:

• A conceptual reframing of full-duplex interaction from next-token prediction to next-behavior reasoning, arguing that modeling the causal chain from intent to action is critical for natural dialogue.
• A hierarchical speech act detection model that perceives conversational behaviors at both high (intent) and low (action) levels, serving as a foundational module for reasoning-driven dialogue systems.
• A GoT framework for conversational reasoning that models intent-act dynamics as a causal graph, enabling real-time, interpretable decision-making and rationale generation.
• A comprehensive empirical validation demonstrating that our system effectively detects conversational behaviors, generates plausible rationales, and successfully transfers its reasoning capabilities from simulated to real-world full-duplex audio.

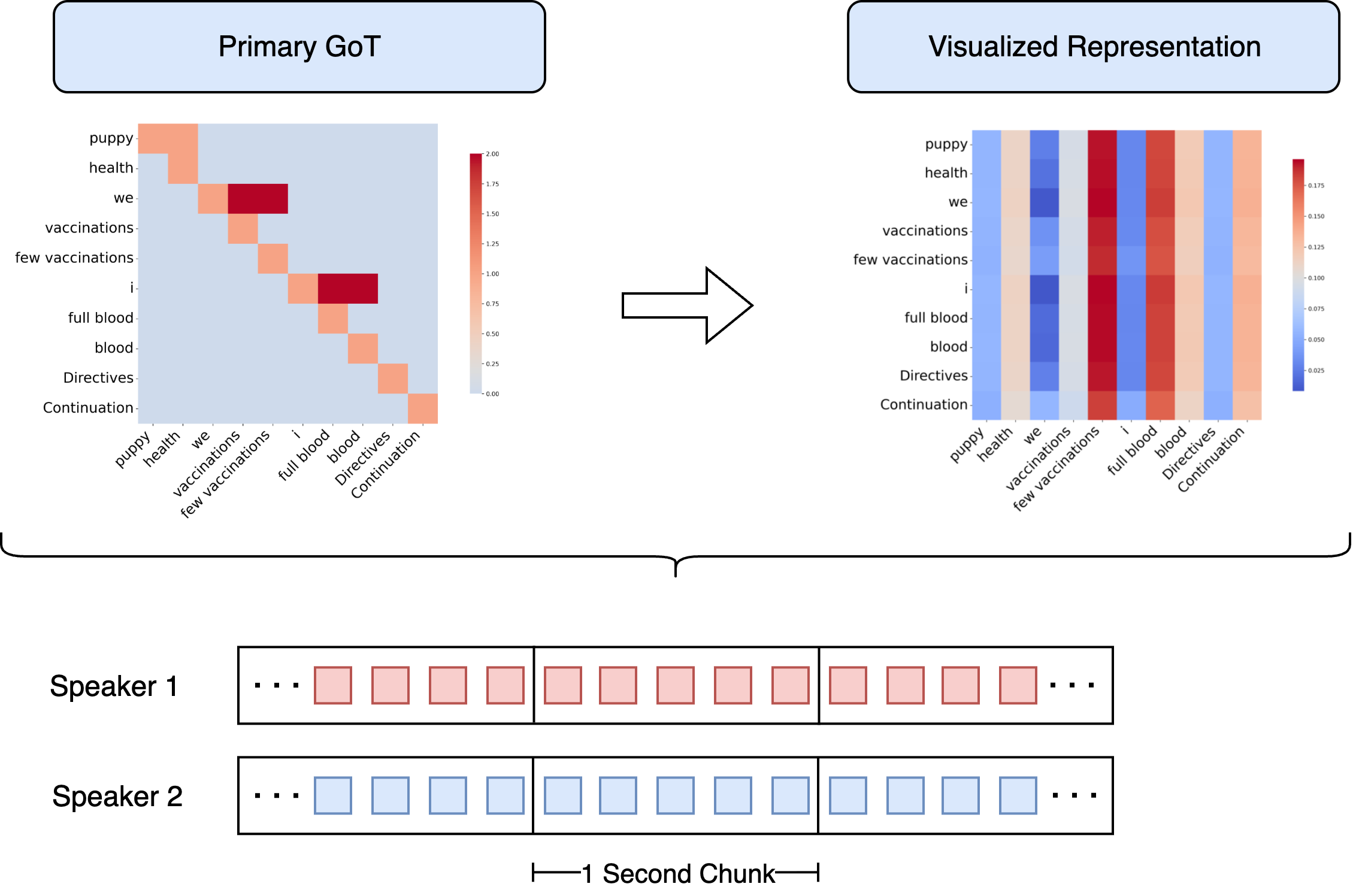

Duplex Models. Recent work in spoken dialogue systems (SDMs) increasingly draws on human conversational behaviors, and recent SDMs have progressed toward duplex capabilities: systems that listen and speak concurrently.

These two nodes, together with the context nodes extracted via OpenIE, constitute the primary GoT. In the Graph-of-Thoughts (GoT) Behavior-Reasoning System, the primary GoT, the transcript, and the raw audio are processed by separate encoders and then combined via gated fusion, producing the rationale text for this chunk as well as an updated GoT graph.
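The text does not specify the form of the gated fusion, so the following Python sketch shows one common variant under stated assumptions: each modality encoder is assumed to have already produced a fixed-length vector, a linear layer over the concatenated vectors yields one scalar score per modality, and a softmax turns those scores into gates that weight the sum. The function name `gated_fusion`, the scalar-gate design, and the random placeholder weights are illustrative only and are not taken from the described system.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def gated_fusion(embeddings, gate_weights, gate_bias):
    """Fuse equal-length modality vectors with scalar softmax gates.

    embeddings:   list of M vectors, each of length D
    gate_weights: M rows, each of length M * D (assumed linear gate layer)
    gate_bias:    list of M floats
    """
    concatenated = [value for vector in embeddings for value in vector]
    # One gate score per modality, computed from all modalities jointly.
    scores = [
        sum(w * x for w, x in zip(row, concatenated)) + bias
        for row, bias in zip(gate_weights, gate_bias)
    ]
    gates = softmax(scores)
    dim = len(embeddings[0])
    # Gate-weighted sum of the modality vectors, dimension by dimension.
    fused = [
        sum(gate * vector[i] for gate, vector in zip(gates, embeddings))
        for i in range(dim)
    ]
    return fused, gates


# Placeholder encoder outputs for the graph, transcript, and audio streams.
rng = random.Random(0)
dim = 4
graph_vec = [rng.uniform(-1, 1) for _ in range(dim)]
text_vec = [rng.uniform(-1, 1) for _ in range(dim)]
audio_vec = [rng.uniform(-1, 1) for _ in range(dim)]

# Untrained placeholder gate parameters; a real system would learn these.
gate_weights = [[rng.uniform(-0.5, 0.5) for _ in range(3 * dim)] for _ in range(3)]
gate_bias = [0.0, 0.0, 0.0]

fused, gates = gated_fusion([graph_vec, text_vec, audio_vec], gate_weights, gate_bias)
print("gates:", [round(g, 3) for g in gates])
print("fused:", [round(v, 3) for v in fused])
```

Scalar gates keep the example short; per-dimension sigmoid gates are an equally plausible reading of "gated fusion" and would let the model weight modalities differently for each feature.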

