The rapid growth in both the scale and complexity of Android malware has driven the widespread adoption of machine learning (ML) techniques for scalable and accurate malware detection. Despite their effectiveness, these models remain vulnerable to adversarial attacks that introduce carefully crafted feature-level perturbations to evade detection while preserving malicious functionality. In this paper, we present LAMLAD, a novel adversarial attack framework that exploits the generative and reasoning capabilities of large language models (LLMs) to bypass ML-based Android malware classifiers. LAMLAD employs a dual-agent architecture composed of an LLM manipulator, which generates realistic and functionality-preserving feature perturbations, and an LLM analyzer, which guides the perturbation process toward successful evasion. To improve efficiency and contextual awareness, LAMLAD integrates retrieval-augmented generation (RAG) into the LLM pipeline. Focusing on Drebin-style feature representations, LAMLAD enables stealthy and high-confidence attacks against widely deployed Android malware detection systems. We evaluate LAMLAD against three representative ML-based Android malware detectors and compare its performance with two state-of-the-art adversarial attack methods. Experimental results demonstrate that LAMLAD achieves an attack success rate (ASR) of up to 97%, requiring on average only three attempts per adversarial sample, highlighting its effectiveness, efficiency, and adaptability in practical adversarial settings. Furthermore, we propose an adversarial training-based defense strategy that reduces the ASR by more than 30% on average, significantly enhancing model robustness against LAMLAD-style attacks.

Smartphones have become an integral component of modern society, functioning as essential platforms for communication, productivity, and personal data management. As of the first quarter of 2025, Android maintained its dominance in the global mobile operating system market with a share of 71.88% [1]. However, this widespread adoption, together with Android's open-source nature, has also made it an attractive target for malicious actors. Contemporary Android malware is capable of exfiltrating sensitive information, including geolocation data, health-related records, and financial credentials. Consequently, the development of effective Android malware detection techniques has become a critical focus for both the cybersecurity industry and the academic research community.

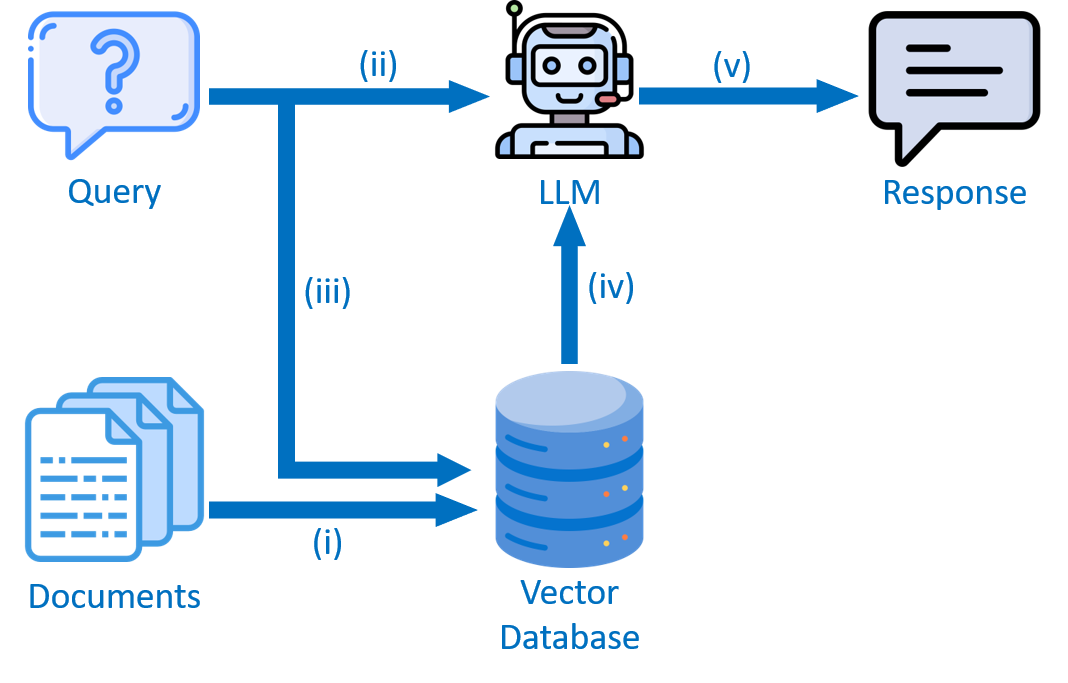

Over the past decade, Machine Learning (ML)-based approaches have demonstrated strong performance and flexibility in Android malware detection. These approaches typically rely on features such as Application Programming Interfaces (APIs) and permission requests to perform binary malware detection or malware family classification [2], [3]. Despite their success, prior studies have shown that ML models are susceptible to adversarial attacks [4], [5]. In such scenarios, attackers introduce carefully designed and imperceptible perturbations to generate adversarial examples, leading the model to misclassify malicious applications as benign. Once malware is transformed into an adversarial example, it can readily evade detection, giving rise to an ongoing arms race between the advancement of adversarial attack techniques [6], [7] and the development of robust defense mechanisms [8], [9] in Android malware detection systems.
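To make the idea of a feature-level perturbation concrete, the following is a minimal sketch of a binary feature vector, a linear detector, and an evasion by feature addition. The vocabulary, weights, and bias are hypothetical values chosen for illustration, not taken from any real detector. Restricting the perturbation to adding features (never removing them) is an assumption borrowed from common practice for preserving functionality; the text above does not state that this is the constraint used here.

```python
# Hypothetical feature vocabulary; real feature spaces are far larger.
VOCAB = [
    "permission::SEND_SMS",
    "permission::READ_CONTACTS",
    "permission::INTERNET",
    "api_call::getDeviceId",
    "api_call::Toast.makeText",
    "activity::SettingsActivity",
]

# Hypothetical linear-model parameters (positive weight = evidence of malware).
WEIGHTS = [1.5, 1.0, 0.1, 1.2, -1.0, -1.4]
BIAS = -2.0


def to_vector(features):
    """Encode a set of feature names as a binary vector over VOCAB."""
    return [1 if name in features else 0 for name in VOCAB]


def score(vector):
    """Linear decision score: weighted sum of present features plus bias."""
    return sum(w * x for w, x in zip(WEIGHTS, vector)) + BIAS


def classify(vector):
    """Label a sample Malicious when its score is positive."""
    return "Malicious" if score(vector) > 0 else "Benign"


def add_features(vector, names):
    """Return a copy with the named features switched on (0 -> 1 only)."""
    out = list(vector)
    for name in names:
        out[VOCAB.index(name)] = 1
    return out


malware = to_vector(
    {"permission::SEND_SMS", "permission::READ_CONTACTS", "api_call::getDeviceId"}
)
adversarial = add_features(
    malware, ["api_call::Toast.makeText", "activity::SettingsActivity"]
)

print(classify(malware), classify(adversarial))
```

Because the original features are all still present in `adversarial`, the behavior they correspond to is untouched; only the classifier's view of the sample changes.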

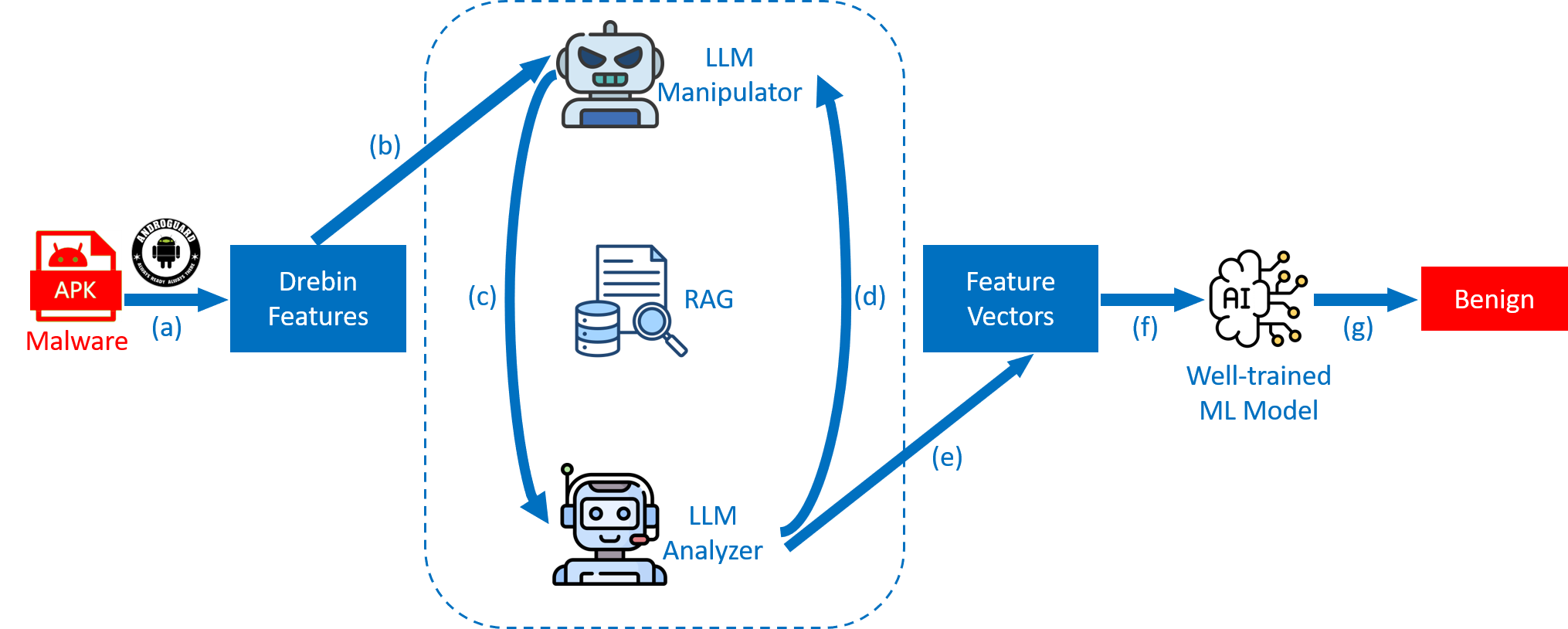

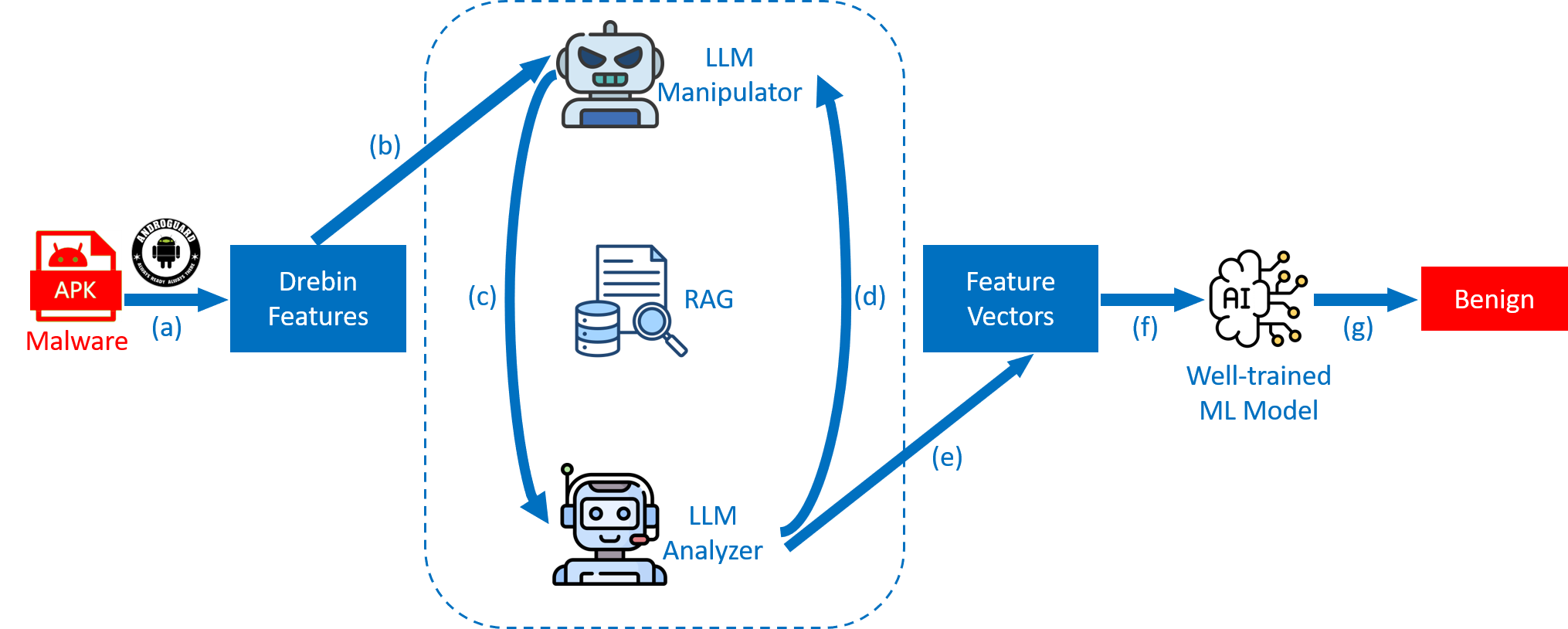

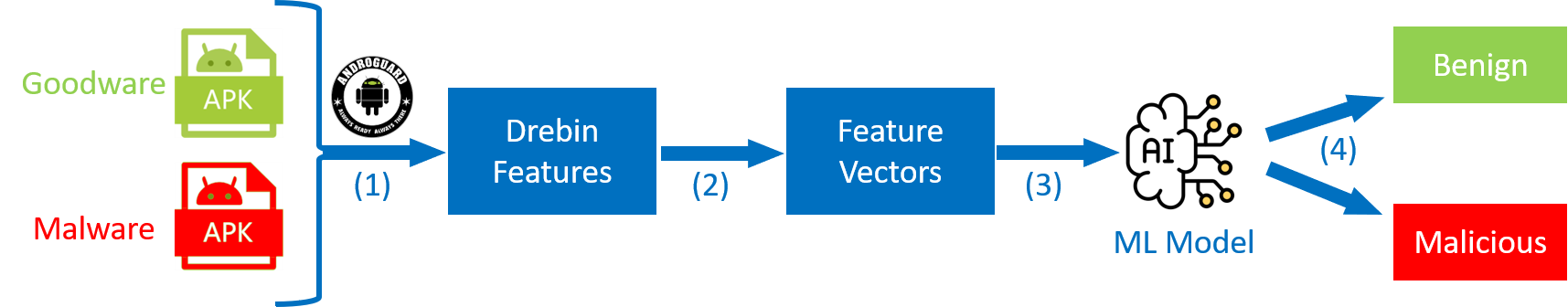

In this paper, we propose LAMLAD, a novel adversarial attack framework that exploits the generative and reasoning capabilities of Large Language Models (LLMs) to evade ML-based Android malware detectors. LAMLAD adopts a dual-agent architecture consisting of an LLM manipulator and an LLM analyzer. The manipulator generates realistic feature-level perturbations while preserving the core malicious functionality, whereas the analyzer steers the modification process to achieve successful evasion. Specifically, after introducing perturbations, the LLM manipulator forwards the modified feature representation of a malicious Android Package Kit (APK) to the LLM analyzer. The analyzer, which is unaware of the applied perturbations, evaluates the features and returns both its detection outcome and an explanatory rationale when the sample is classified as Malicious. Guided by this explanation, the LLM manipulator produces an alternative adversarial variant and resubmits the modified features to the analyzer. This iterative process continues until the analyzer labels the adversarial example as Benign. Furthermore, LAMLAD incorporates Retrieval-Augmented Generation (RAG) into the LLM pipeline to enhance efficiency and contextual understanding. By targeting Drebin [10] feature representations, LAMLAD enables stealthy and high-confidence attacks against widely adopted Android malware classifiers.
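The manipulator-analyzer interaction described above can be sketched as a simple feedback loop. This is only an illustration of the control flow: the two agents are replaced by toy rule-based stand-ins (`toy_manipulator`, `toy_analyzer`), no LLM or RAG component is involved, and all names, feature strings, and the `max_attempts` cap are assumptions introduced here rather than details of LAMLAD.

```python
from typing import Callable, Optional, Set, Tuple

Features = Set[str]
Verdict = Tuple[str, Optional[str]]  # (label, rationale when Malicious)


def evasion_loop(
    features: Features,
    manipulate: Callable[[Features, Optional[str]], Features],
    analyze: Callable[[Features], Verdict],
    max_attempts: int = 10,  # hypothetical budget, not from the paper
) -> Tuple[Optional[Features], int]:
    """Perturb, submit, and retry using the analyzer's rationale as feedback."""
    current = set(features)
    rationale: Optional[str] = None
    for attempt in range(1, max_attempts + 1):
        current = manipulate(current, rationale)   # manipulator perturbs
        label, rationale = analyze(current)        # analyzer judges blindly
        if label == "Benign":
            return current, attempt
    return None, max_attempts


def toy_analyzer(features: Features) -> Verdict:
    """Stand-in analyzer: flags suspicious features lacking benign context."""
    suspicious = {"permission::SEND_SMS", "api_call::getDeviceId"}
    benign_context = {"activity::SettingsActivity", "api_call::Toast.makeText"}
    missing = sorted(benign_context - features)
    if suspicious & features and missing:
        return "Malicious", f"suspicious features lack benign context: {missing[0]}"
    return "Benign", None


def toy_manipulator(features: Features, rationale: Optional[str]) -> Features:
    """Stand-in manipulator: only adds features, guided by the rationale."""
    if rationale is None:
        return set(features) | {"permission::INTERNET"}  # uninformed first try
    hinted = rationale.rsplit(": ", 1)[1]
    return set(features) | {hinted}


original = {"permission::SEND_SMS", "api_call::getDeviceId"}
variant, attempts = evasion_loop(original, toy_manipulator, toy_analyzer)
print(attempts, sorted(variant))
```

The key design point the sketch captures is the separation of roles: the analyzer sees only the submitted features, never the perturbation history, and the manipulator's only feedback channel is the returned rationale.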

We evaluate the effectiveness of LAMLAD against three representative ML-based Android malware detection models and compare its performance with two state-of-the-art adversarial attack methods [6], [11]. In addition, we investigate the impact of employing different LLMs within the LAMLAD framework. Experimental results demonstrate that LAMLAD achieves an Attack Success Rate (ASR) of up to 97%, requiring on average only three attempts per adversarial sample, thereby highlighting its effectiveness, efficiency, and adaptability in practical adversarial environments. Moreover, we propose an adversarial training-based defense strategy that reduces the ASR by more than 30% on average, effectively enhancing the robustness of malware classifiers against LAMLAD-driven attacks.
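For readers unfamiliar with the two reported metrics, the sketch below shows one plausible way to compute them from per-sample outcomes. It assumes that ASR is the fraction of attacked malware samples for which an evading variant was found, and that the attempt count is averaged over successful samples only; the exact definitions used in the evaluation may differ. The `outcomes` list is made-up illustrative data, not an experimental result.

```python
from typing import List, Optional


def attack_success_rate(attempts_per_sample: List[Optional[int]]) -> float:
    """Fraction of samples that evaded detection (None marks a failed attack)."""
    if not attempts_per_sample:
        raise ValueError("no samples")
    successes = [a for a in attempts_per_sample if a is not None]
    return len(successes) / len(attempts_per_sample)


def mean_attempts(attempts_per_sample: List[Optional[int]]) -> float:
    """Average number of attempts, taken over successful samples only."""
    successes = [a for a in attempts_per_sample if a is not None]
    if not successes:
        raise ValueError("no successful samples")
    return sum(successes) / len(successes)


# Illustrative data: attempts needed per sample, None where the attack failed.
outcomes = [2, 3, None, 4, 3]
print(attack_success_rate(outcomes), mean_attempts(outcomes))
```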

The rest of this paper is organized as follows. Section II introduces the background on the APK structure, Drebin features, ML-based Android malware detection, adversarial attacks, and LLMs. Section III presents the threat model and the detailed design of the proposed LAMLAD framework. Section IV describes the experimental setup and reports the evaluation results with comprehensive analysis. Section V introduces an adversarial training-based defense mechanism and evaluates its effectiveness. Section VI discusses limitations and outlines potential future research directions, while Section VII surveys related work. Finally, Section VIII concludes the paper.

The Android operating system distributes and installs applications in the form of APKs. An APK is a compressed archive that contains multiple files and directories required for application deployment on Android devices. These include the AndroidManifest.xml file, the classes.dex file, the lib directory (compiled native libraries), the META-INF directory (verification metadata), the assets directory (raw application resources), the res director
