Sensitivity-Aware Mixed-Precision Quantization for ReRAM-based Computing-in-Memory

📝 Original Info

- Title: Sensitivity-Aware Mixed-Precision Quantization for ReRAM-based Computing-in-Memory

- ArXiv ID: 2512.19445

- Date: 2025-12-22

- Authors: Not listed in the provided information.

📝 Abstract

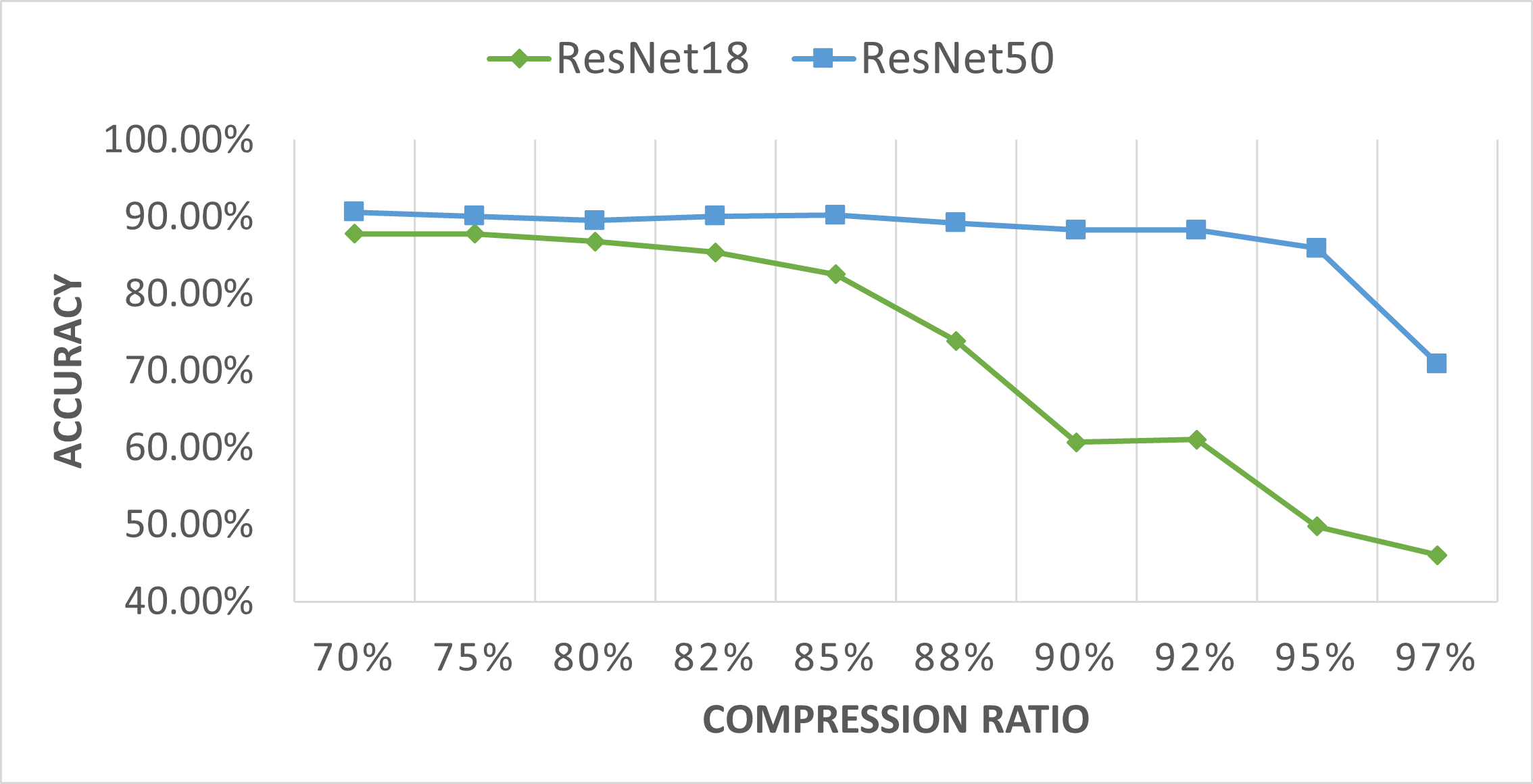

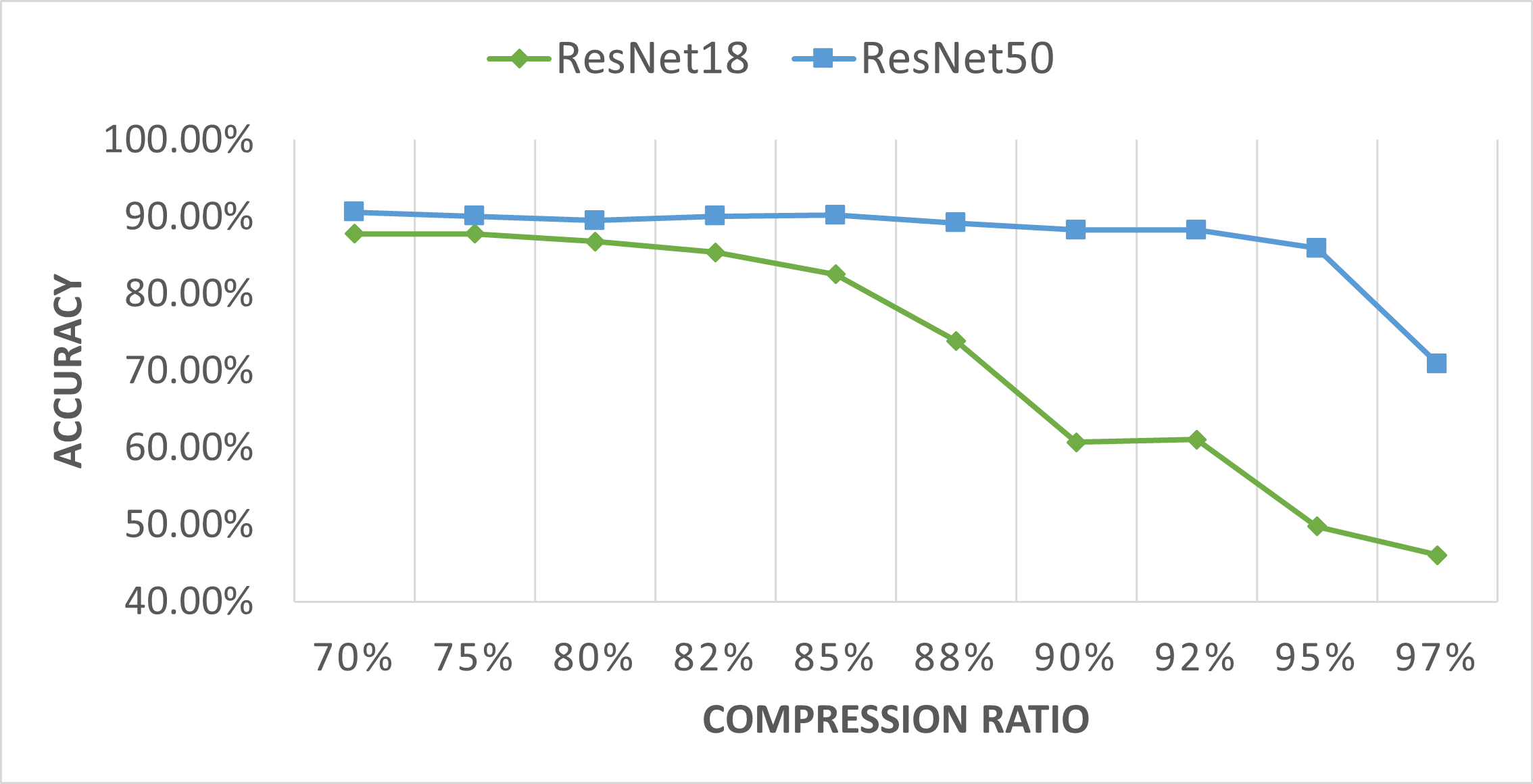

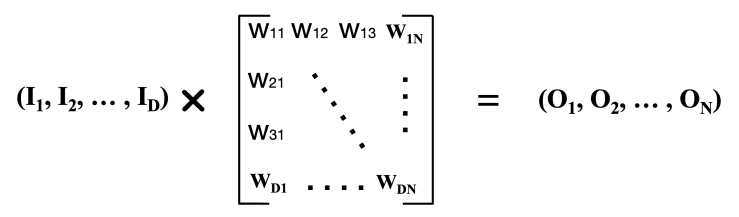

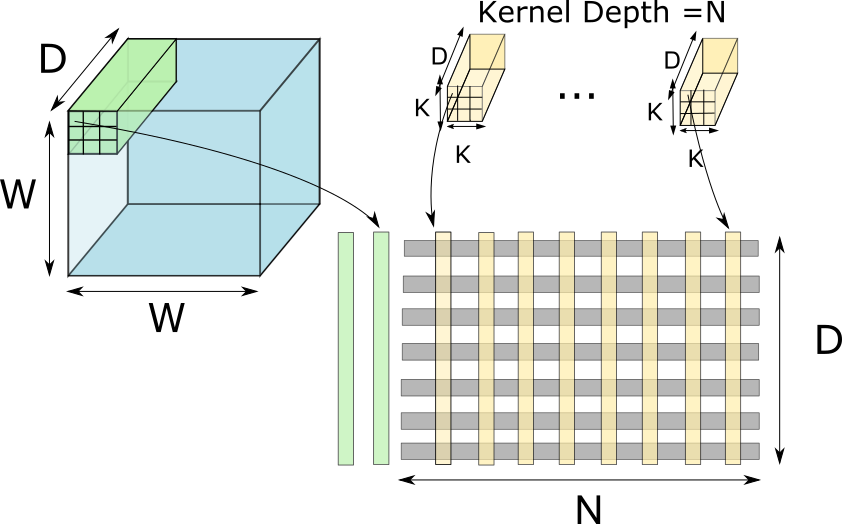

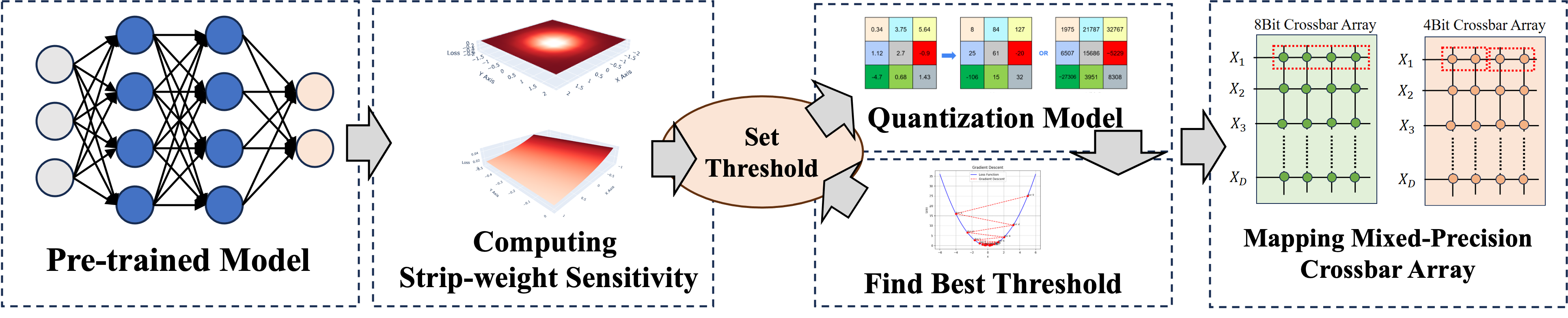

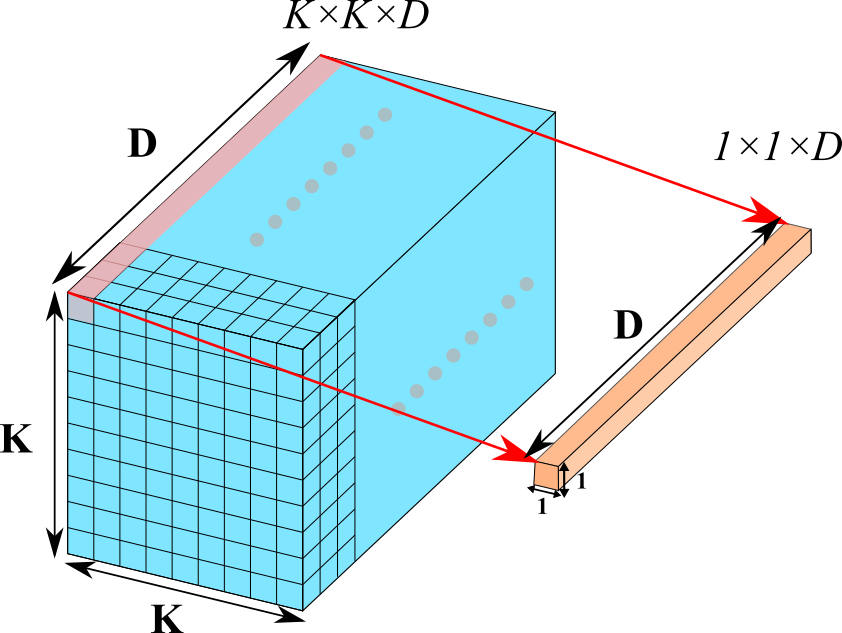

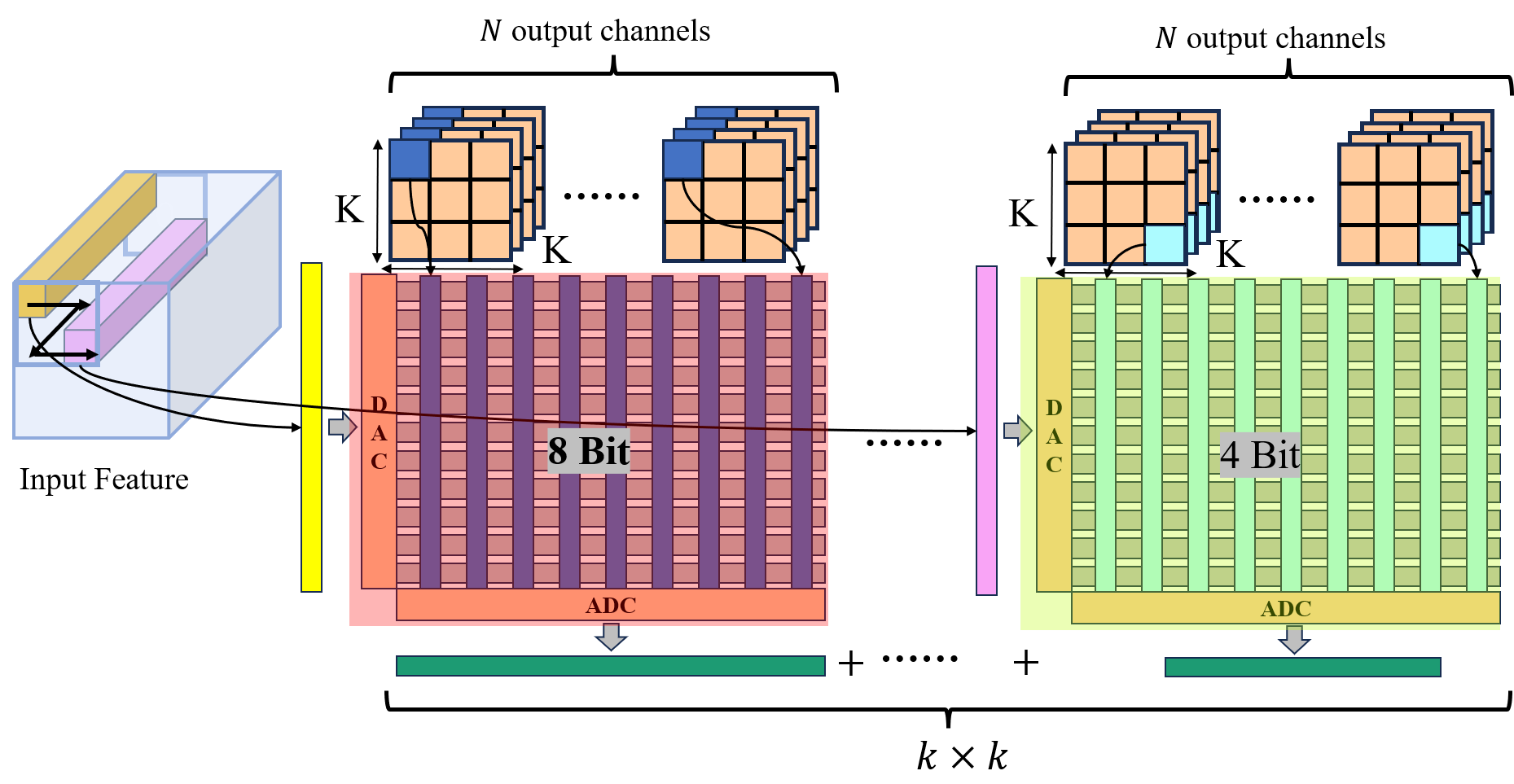

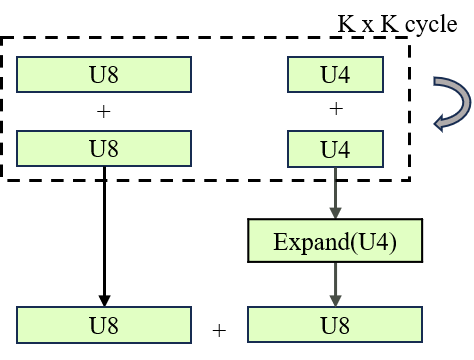

Compute-In-Memory (CIM) systems, particularly those utilizing ReRAM and memristive technologies, offer a promising path toward energy-efficient neural network computation. However, conventional quantization and compression techniques often fail to fully optimize performance and efficiency in these architectures. In this work, we present a structured quantization method that combines sensitivity analysis with mixed-precision strategies to enhance weight storage and computational performance on ReRAM-based CIM systems. Our approach improves ReRAM Crossbar utilization, significantly reducing power consumption, latency, and computational load, while maintaining high accuracy. Experimental results show 86.33% accuracy at 70% compression, alongside a 40% reduction in power consumption, demonstrating the method's effectiveness for power-constrained applications.

💡 Deep Analysis
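The abstract does not spell out the sensitivity metric or the bit-widths used, so the following is only a minimal sketch of the general idea of sensitivity-aware mixed-precision quantization, not the paper's actual method. It assumes a hypothetical sensitivity proxy (the mean squared error caused by a coarse 2-bit probe quantization), a hypothetical 8-bit/4-bit split, and a 32-bit baseline; the function names `quantize`, `sensitivity`, `assign_bits`, and `compression_ratio` are illustrative. It does not model ReRAM crossbar mapping or power.

```python
def quantize(weights, bits):
    """Uniform symmetric quantization of a list of floats to `bits` bits."""
    levels = 2 ** (bits - 1) - 1                   # e.g. 127 levels per sign for 8 bits
    max_abs = max(abs(w) for w in weights) or 1.0  # avoid a zero scale for all-zero layers
    scale = max_abs / levels
    return [round(w / scale) * scale for w in weights]


def sensitivity(weights, probe_bits=2):
    """Assumed sensitivity proxy: MSE introduced by a coarse probe quantization."""
    probed = quantize(weights, probe_bits)
    return sum((w - p) ** 2 for w, p in zip(weights, probed)) / len(weights)


def assign_bits(layers, high_bits=8, low_bits=4, high_fraction=0.5):
    """Give the most sensitive fraction of layers `high_bits`, the rest `low_bits`."""
    ranked = sorted(layers, key=lambda name: sensitivity(layers[name]), reverse=True)
    n_high = int(len(ranked) * high_fraction)
    return {
        name: (high_bits if rank < n_high else low_bits)
        for rank, name in enumerate(ranked)
    }


def compression_ratio(layers, bit_map, baseline_bits=32):
    """Fraction of storage saved relative to storing every weight at `baseline_bits`."""
    total_weights = sum(len(w) for w in layers.values())
    used_bits = sum(len(layers[name]) * bit_map[name] for name in layers)
    return 1.0 - used_bits / (total_weights * baseline_bits)


if __name__ == "__main__":
    # Toy layers (made-up values, not from the paper).
    layers = {
        "conv1": [1.0, -0.5, 0.25, 0.1],
        "fc": [0.01, -0.005, 0.0025, 0.001],
    }
    bit_map = assign_bits(layers)
    quantized = {name: quantize(w, bit_map[name]) for name, w in layers.items()}
    print(bit_map, compression_ratio(layers, bit_map))
```

In this sketch the layer whose weights lose the most information under coarse quantization keeps the higher precision. A real pipeline would more likely measure sensitivity through the change in validation loss or a Hessian-based score, and would choose bit-widths that match the cell resolution of the target crossbar.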

This content is AI-processed based on open access ArXiv data.