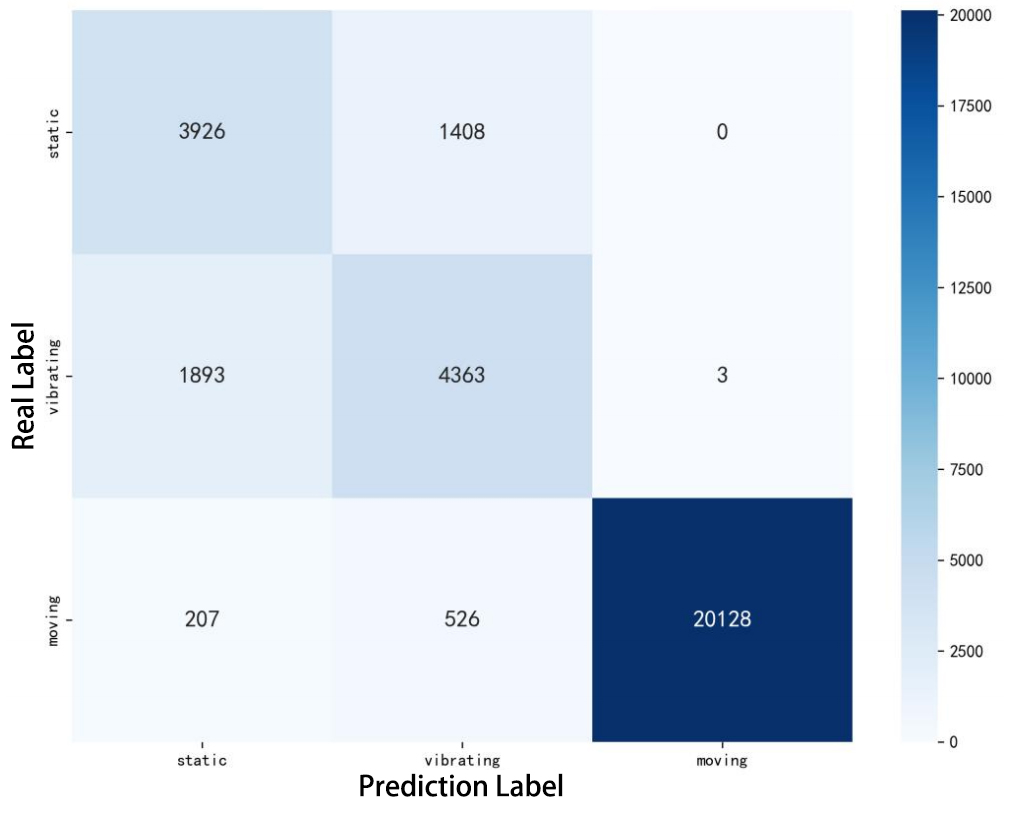

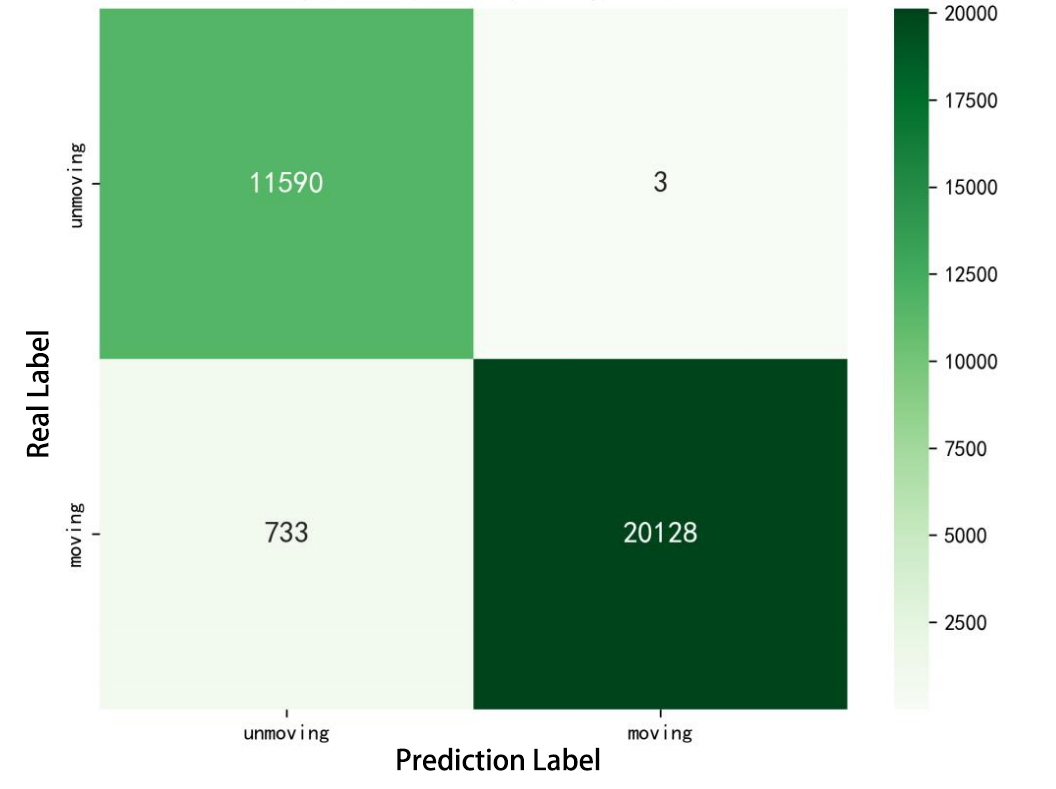

A vision-based trajectory analysis solution is proposed to address the "zero-speed braking" issue caused by inaccurate Controller Area Network (CAN) signals in commercial vehicle Automatic Emergency Braking (AEB) systems during low-speed operation. The algorithm runs on the NVIDIA Jetson AGX Xavier platform and processes sequential video frames from a blind-spot camera, using self-adaptive Contrast Limited Adaptive Histogram Equalization (CLAHE)-enhanced Scale-Invariant Feature Transform (SIFT) feature extraction and K-Nearest Neighbors (KNN)-Random Sample Consensus (RANSAC) matching. This enables precise classification of the vehicle's motion state (static, vibration, or moving). Key innovations include 1) multiframe trajectory displacement statistics over a 5-frame sliding window, 2) a dual-threshold state decision matrix, and 3) OBD-II-driven dynamic Region of Interest (ROI) configuration. The system effectively suppresses environmental interference and false detections caused by dynamic objects, directly addressing low-speed false activation in commercial vehicle safety systems. Evaluation on a real-world dataset (32,454 video segments from 1,852 vehicles) demonstrates an F1-score of 99.96% for static detection and 97.78% for moving-state recognition, with a processing delay of 14.2 ms at 704×576 resolution. On-site deployment shows an 89% reduction in false braking events, a 100% success rate for emergency braking, and a fault rate below 5%.

Between 2016 and 2017, Great Britain recorded approximately 174,510 road casualties, including 27,010 fatalities or serious injuries ( [9]). The US Department of Transportation reports that more than 90% of traffic accidents result from driver error ( [26]). Connected and Automated Vehicles (CAVs) have transformative potential to improve driving safety, traffic flow efficiency, and energy consumption ( [5]). As a critical active safety system, Automatic Emergency Braking (AEB) plays an important role in obstacle avoidance ( [36]). However, its practical application in commercial vehicles faces a persistent technical challenge: misactivation at low speeds (particularly in the 0-5 km/h range), which significantly compromises system reliability and user acceptance ( [31]).

AEB operational logic is based on multiple decision criteria, of which vehicle speed is the most important. However, current implementations depend on Controller Area Network (CAN) signals that have inherent precision limitations. This serial bus protocol, originally developed by Robert Bosch GmbH ( [22]), enables data exchange between electronic control units (ECUs) but struggles to represent velocities accurately in the critical 0-5 km/h range. The resulting “zero-speed braking” phenomenon occurs when erroneous CAN speed readings, which do not reflect the vehicle's actual motion state, trigger unnecessary full brake applications.

Existing solutions for zero-speed detection primarily fall into two categories: velocity-based methods and Inertial Measurement Unit (IMU)-sensor fusion approaches ( [14,3,28]). This study introduces a cost-effective vision-based zero-speed detection algorithm as a complementary or alternative solution. Using blind-spot camera feeds, the proposed method applies adaptive feature analysis to sequential video frames to determine the vehicle's actual motion state. Integrated into the existing AEB decision pipeline as an auxiliary verification module, the system suppresses unnecessary braking interventions only when true static conditions (including vibratory states) are confirmed, while remaining robust against environmental confounders such as illumination variations, precipitation, and dynamic object intrusions (e.g., pedestrians, vehicles).
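The auxiliary verification step can be pictured as a simple gate placed after the existing AEB decision. The sketch below is a minimal illustration, not the authors' implementation: the names `MotionState`, `LOW_SPEED_BAND_KMH`, and `allow_aeb_brake`, and the rule that the vision veto applies only when the CAN speed lies inside the 0-5 km/h band, are assumptions.

```python
from enum import Enum


class MotionState(Enum):
    STATIC = "static"
    VIBRATION = "vibration"
    MOVING = "moving"


# Assumed upper edge (km/h) of the band in which CAN speed is treated as unreliable.
LOW_SPEED_BAND_KMH = 5.0


def allow_aeb_brake(aeb_requests_brake: bool, can_speed_kmh: float,
                    vision_state: MotionState) -> bool:
    """Return True if an AEB brake request should be passed to the actuator."""
    if not aeb_requests_brake:
        return False
    if can_speed_kmh > LOW_SPEED_BAND_KMH:
        # Outside the unreliable band the existing AEB logic is left untouched.
        return True
    # Inside the band, veto only when the camera confirms a true static condition
    # (static or vibration); any other state keeps the brake request.
    return vision_state not in (MotionState.STATIC, MotionState.VIBRATION)
```

Passing the request through by default keeps the existing AEB behavior as the fail-safe path: only a confirmed static or vibration state can veto a brake command.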

Key innovations of this research include the following. Existing IMU sensor solutions ( [3,28]) improved accuracy through acceleration compensation but suffered from vibration misjudgment: engine idle vibrations were often mistaken for motion. This study is the first to distinguish vibration from actual motion through visual trajectory displacement statistics, achieving an F1 score of 96.40% (Figure III) for vibration state detection.
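One plausible reading of the displacement statistics and the dual-threshold decision is sketched below. It assumes a per-frame displacement (for example, the mean shift of RANSAC-verified matches) is already available, keeps the last five displacements, and compares two statistics against two thresholds: average jitter magnitude (static vs. not static) and net drift (vibration vs. moving). The class name and threshold values are hypothetical, and the paper's actual decision matrix may use different statistics.

```python
import math
from collections import deque
from typing import Deque, Optional, Tuple

WINDOW = 5          # displacement samples per decision (assumed reading of the 5-frame window)
T_STATIC_PX = 0.5   # hypothetical: mean per-frame jitter below this -> static
T_MOVING_PX = 3.0   # hypothetical: net drift over the window above this -> moving


class MotionStateClassifier:
    """Sliding-window, dual-threshold motion state decision (illustrative only)."""

    def __init__(self, window: int = WINDOW, t_static: float = T_STATIC_PX,
                 t_moving: float = T_MOVING_PX) -> None:
        self.samples: Deque[Tuple[float, float]] = deque(maxlen=window)
        self.t_static = t_static
        self.t_moving = t_moving

    def update(self, dx: float, dy: float) -> Optional[str]:
        """Add one frame-to-frame displacement in pixels.

        Returns 'static', 'vibration' or 'moving', or None until the window is full.
        """
        self.samples.append((dx, dy))
        if len(self.samples) < self.samples.maxlen:
            return None
        # Statistic 1: average magnitude of the per-frame displacements (jitter).
        mean_jitter = sum(math.hypot(x, y) for x, y in self.samples) / len(self.samples)
        # Statistic 2: magnitude of the summed displacement (net drift of the trajectory).
        net_drift = math.hypot(sum(x for x, _ in self.samples),
                               sum(y for _, y in self.samples))
        if net_drift > self.t_moving:
            return "moving"
        if mean_jitter < self.t_static:
            return "static"
        return "vibration"
```

Vibration produces sizeable per-frame displacements that largely cancel over the window, so its net drift stays small, whereas sustained motion accumulates drift in one direction.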

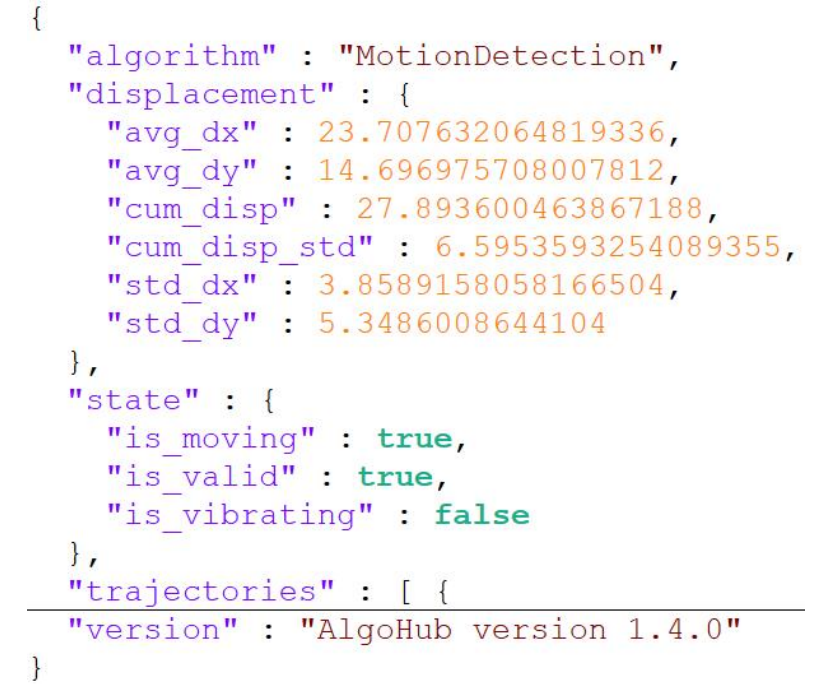

The Scale-Invariant Feature Transform (SIFT) algorithm, proposed by David Lowe in 1999 and refined in 2004, extracts local feature descriptors that are invariant to scale and rotation and robust to illumination changes, by constructing a difference-of-Gaussian pyramid and detecting extrema within it. It has become one of the most influential classic algorithms in computer vision ( [33,16,15,34]). In vehicle dynamics analysis, SIFT features are widely applied to core tasks such as motion estimation, target tracking, and multi-modal data fusion, owing to their robustness. Feature-based methods dominate vehicle motion analysis. Research ( [33]) indicates that Lowe’s SIFT provides scale invariance but suffers from slow retrieval speed and poor performance. This study applies Contrast Limited Adaptive Histogram Equalization (CLAHE) before SIFT extraction (CLAHE-SIFT), adaptively enhancing contrast in low-contrast and low-light scenes to improve feature quality and, in turn, the feature-matching success rate.
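The core of CLAHE is a clipped histogram equalization applied per tile. The pure-Python sketch below builds the look-up table for a single tile: bins above a clip limit are capped, the excess is redistributed, and the cumulative histogram defines the output mapping. Full CLAHE additionally splits the image into a grid of tiles and interpolates bilinearly between neighboring tile mappings; in practice one would likely call OpenCV's `cv2.createCLAHE(clipLimit=..., tileGridSize=...)` and then `.apply()` on a grayscale frame. Scaling the clip limit by the average bin count is an assumption here, and the paper's self-adaptive parameter selection is not reproduced.

```python
from typing import Iterable, List


def clipped_equalization_lut(pixels: Iterable[int], clip_limit: float = 2.0,
                             levels: int = 256) -> List[int]:
    """Contrast-limited equalization look-up table for one tile.

    pixels: integer grey levels in [0, levels).
    clip_limit: bins are capped at clip_limit times the average bin count
    (an assumed scaling convention).
    """
    values = list(pixels)
    n = len(values)
    if n == 0:
        return list(range(levels))  # empty tile: identity mapping
    hist = [0] * levels
    for v in values:
        hist[v] += 1
    # 1. Clip every bin at the cap and accumulate the excess.
    cap = max(1, int(clip_limit * n / levels))
    excess = 0
    for i in range(levels):
        if hist[i] > cap:
            excess += hist[i] - cap
            hist[i] = cap
    # 2. Spread the excess evenly (leftover counts go to the lowest bins),
    #    so the histogram still sums to n.
    share, remainder = divmod(excess, levels)
    for i in range(levels):
        hist[i] += share + (1 if i < remainder else 0)
    # 3. Map each grey level through the normalized cumulative histogram.
    lut: List[int] = []
    cumulative = 0
    for count in hist:
        cumulative += count
        lut.append(round((levels - 1) * cumulative / n))
    return lut
```

The mapping is applied as `[lut[p] for p in tile]`. A high `clip_limit` approaches plain histogram equalization, while a low one limits how strongly noise in flat, dark regions is amplified.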

Commercial vehicles operate in more severe environmental conditions than passenger vehicles. Research ( [23]) indicates that harsh weather, such as rain and snow, can degrade the accuracy of vision-based detection. Subsequent studies ( [8,18]) improved robustness by fusing radar and camera data, but this approach increases cost. Our solution employs a dynamic Region of Interest (ROI) filtering mechanism that excludes non-road areas (e.g., truck side mirrors), reducing unnecessary computation.
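A minimal sketch of ROI filtering on detected keypoints follows, assuming rectangular regions in a 704×576 frame. The profile table keyed by a vehicle identifier stands in for the OBD-II-driven ROI configuration; how OBD-II data selects a profile is not specified here, so the lookup key, the rectangle coordinates, and the names `Rect`, `RoiConfig`, `ROI_PROFILES`, and `filter_keypoints` are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float]


@dataclass(frozen=True)
class Rect:
    """Axis-aligned rectangle in pixel coordinates, half-open on the max edges."""
    x0: float
    y0: float
    x1: float
    y1: float

    def contains(self, x: float, y: float) -> bool:
        return self.x0 <= x < self.x1 and self.y0 <= y < self.y1


@dataclass
class RoiConfig:
    road: Rect                                            # area where road texture is expected
    exclusions: List[Rect] = field(default_factory=list)  # e.g. the truck's own side mirror


# Hypothetical per-vehicle profiles for a 704x576 blind-spot frame.
ROI_PROFILES: Dict[str, RoiConfig] = {
    "profile_a": RoiConfig(
        road=Rect(0, 288, 704, 576),          # lower half of the image
        exclusions=[Rect(0, 288, 160, 576)],  # left strip occupied by the mirror housing
    ),
}


def filter_keypoints(points: Sequence[Point], cfg: RoiConfig) -> List[Point]:
    """Keep keypoints inside the road ROI and outside every exclusion zone."""
    return [
        (x, y)
        for x, y in points
        if cfg.road.contains(x, y) and not any(r.contains(x, y) for r in cfg.exclusions)
    ]
```

Filtering keypoints before matching also shrinks the brute-force matching workload, which grows with the product of the keypoint counts in the two frames.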

TABLE 1 summarizes the generational differences between this scheme and existing technologies.

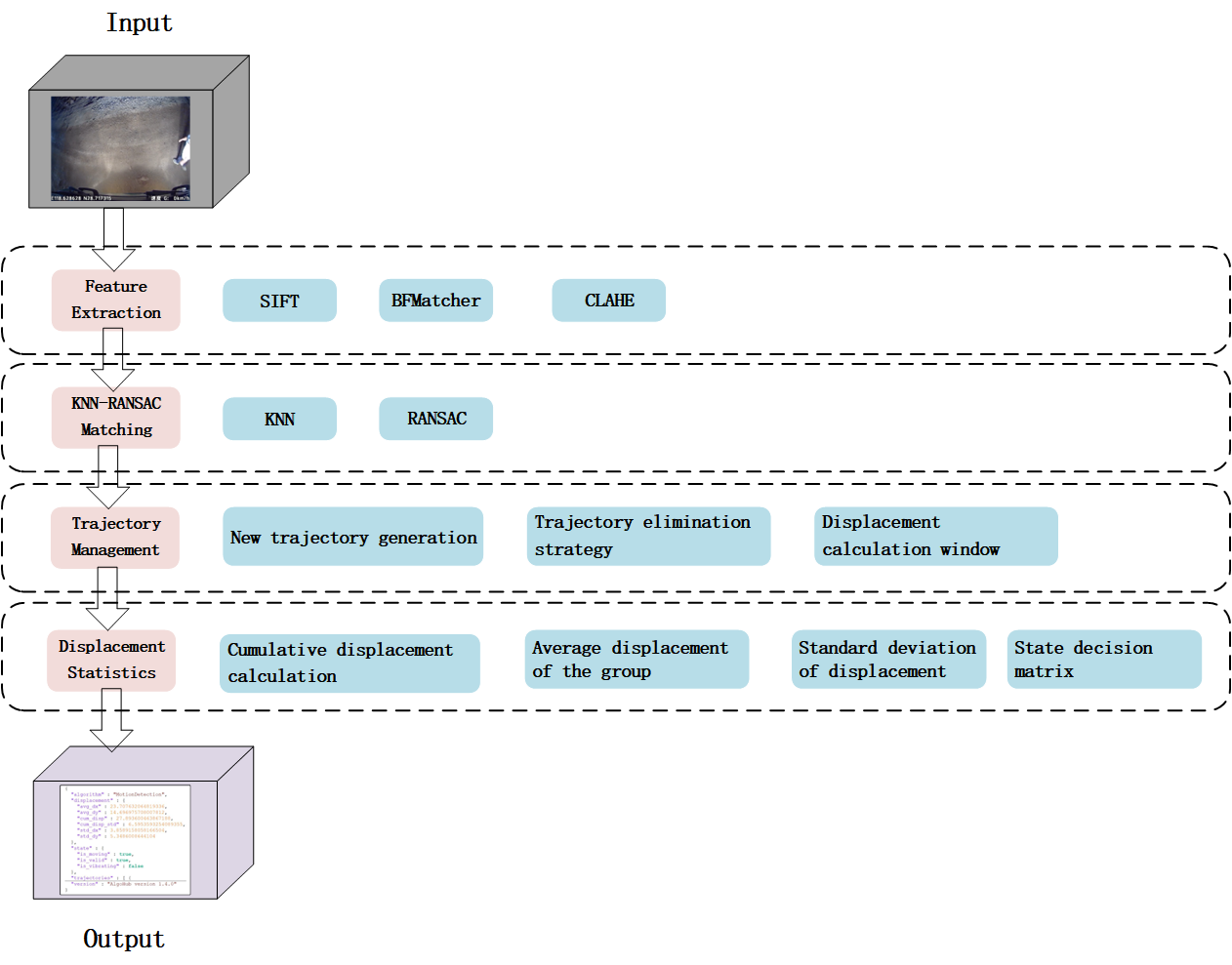

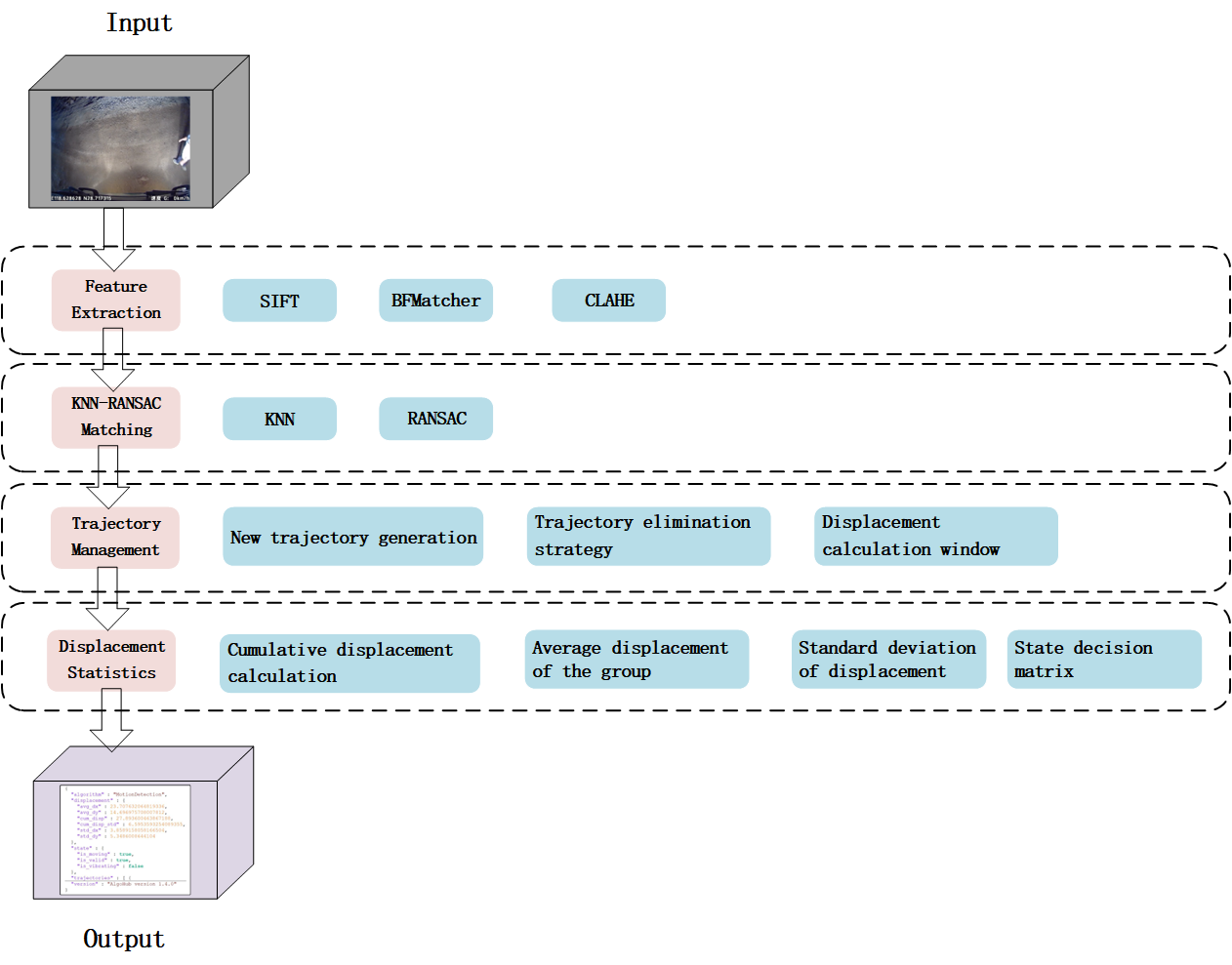

The core algorithm framework of the system is shown in Figure 1. The general workflow of the algorithm is as follows. The system takes the real-time video stream from the blind-spot camera as input. Scale-Invariant Feature Transform (SIFT), the Brute-Force Matcher (BFMatcher), and Contrast Limited Adaptive Histogram Equalization (CLAHE) are used to extract and match features between consecutive frames, followed by verification with the Random Sample Consensus (RANSAC) algorithm to improve the accuracy and robustness of the matching. Finally, trajectory management and displacement statistics determine the motion state (static, vibration, or moving).
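The matching and verification stages can be sketched in pure Python as follows. `knn_ratio_matches` performs brute-force 2-nearest-neighbor matching with Lowe's ratio test, and `ransac_translation` fits a pure 2-D translation to the matched keypoint positions, treating correspondences that disagree (for example, on a passing pedestrian) as outliers. The translation-only model, the 0.75 ratio, and the 1-pixel tolerance are simplifying assumptions; an OpenCV pipeline would more likely use `cv2.BFMatcher().knnMatch(des1, des2, k=2)` and a RANSAC model such as `cv2.findHomography(src, dst, cv2.RANSAC, 5.0)`.

```python
import math
import random
from typing import List, Sequence, Tuple

Vec = Sequence[float]
Match = Tuple[int, int]
Pt = Tuple[float, float]


def knn_ratio_matches(desc_a: Sequence[Vec], desc_b: Sequence[Vec],
                      ratio: float = 0.75) -> List[Match]:
    """Brute-force 2-NN matching with Lowe's ratio test.

    Returns (index_in_a, index_in_b) pairs whose best match is clearly
    closer than the second-best one.
    """
    matches: List[Match] = []
    for i, da in enumerate(desc_a):
        ranked = sorted((math.dist(da, db), j) for j, db in enumerate(desc_b))
        if len(ranked) >= 2 and ranked[0][0] < ratio * ranked[1][0]:
            matches.append((i, ranked[0][1]))
    return matches


def ransac_translation(pts_a: Sequence[Pt], pts_b: Sequence[Pt],
                       matches: Sequence[Match], iters: int = 100,
                       tol: float = 1.0, seed: int = 0):
    """RANSAC fit of a pure 2-D translation between matched keypoints.

    Returns ((dx, dy), inliers), where (dx, dy) is the mean shift of the inliers.
    """
    if not matches:
        return (0.0, 0.0), []
    rng = random.Random(seed)

    def residual(m: Match, dx: float, dy: float) -> float:
        i, j = m
        return math.hypot(pts_b[j][0] - pts_a[i][0] - dx,
                          pts_b[j][1] - pts_a[i][1] - dy)

    best: List[Match] = []
    for _ in range(iters):
        # A translation is fully determined by a single correspondence.
        i, j = rng.choice(matches)
        dx, dy = pts_b[j][0] - pts_a[i][0], pts_b[j][1] - pts_a[i][1]
        inliers = [m for m in matches if residual(m, dx, dy) <= tol]
        if len(inliers) > len(best):
            best = inliers
    # Refine the model on the consensus set.
    n = len(best)
    dx = sum(pts_b[j][0] - pts_a[i][0] for i, j in best) / n
    dy = sum(pts_b[j][1] - pts_a[i][1] for i, j in best) / n
    return (dx, dy), best
```

The averaged inlier shift is the kind of per-frame displacement that the trajectory statistics would then accumulate over the sliding window.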

This content is AI-processed based on open access ArXiv data.