Modern intrusion detection systems (IDS) leverage graph neural networks (GNNs) to detect malicious activity in system provenance data, but their decisions often remain a black box to analysts. This paper presents a comprehensive explainable AI (XAI) framework designed to bridge the trust gap in Security Operations Centers (SOCs) by making graph-based detection transparent. We implement this framework on top of KAIROS, a state-of-the-art temporal graph-based IDS, though our design is applicable to any temporal graph-based detector with minimal adaptation. The complete codebase is available at https://github.com/devang1304/provex.git. We augment the detection pipeline with post-hoc explanations that highlight why an alert was triggered, identifying key causal subgraphs and events. We adapt three GNN explanation methods (GraphMask, GNNExplainer, and a variational temporal GNN explainer, VA-TGExplainer) to the temporal provenance context. These tools output human-interpretable representations of anomalous behavior, including important edges and uncertainty estimates. Our contributions focus on the practical integration of these explainers, addressing challenges in memory management and reproducibility. We demonstrate our framework on the DARPA CADETS Engagement 3 dataset and show that it produces concise window-level explanations for detected attacks. Our evaluation reveals that the explainers preserve the TGNN's decisions with high fidelity, surfacing critical edges such as malicious file interactions and anomalous netflows. The average explanation overhead is 3-5 seconds per event. By providing insight into the model's reasoning, our framework aims to improve analyst trust and triage speed.

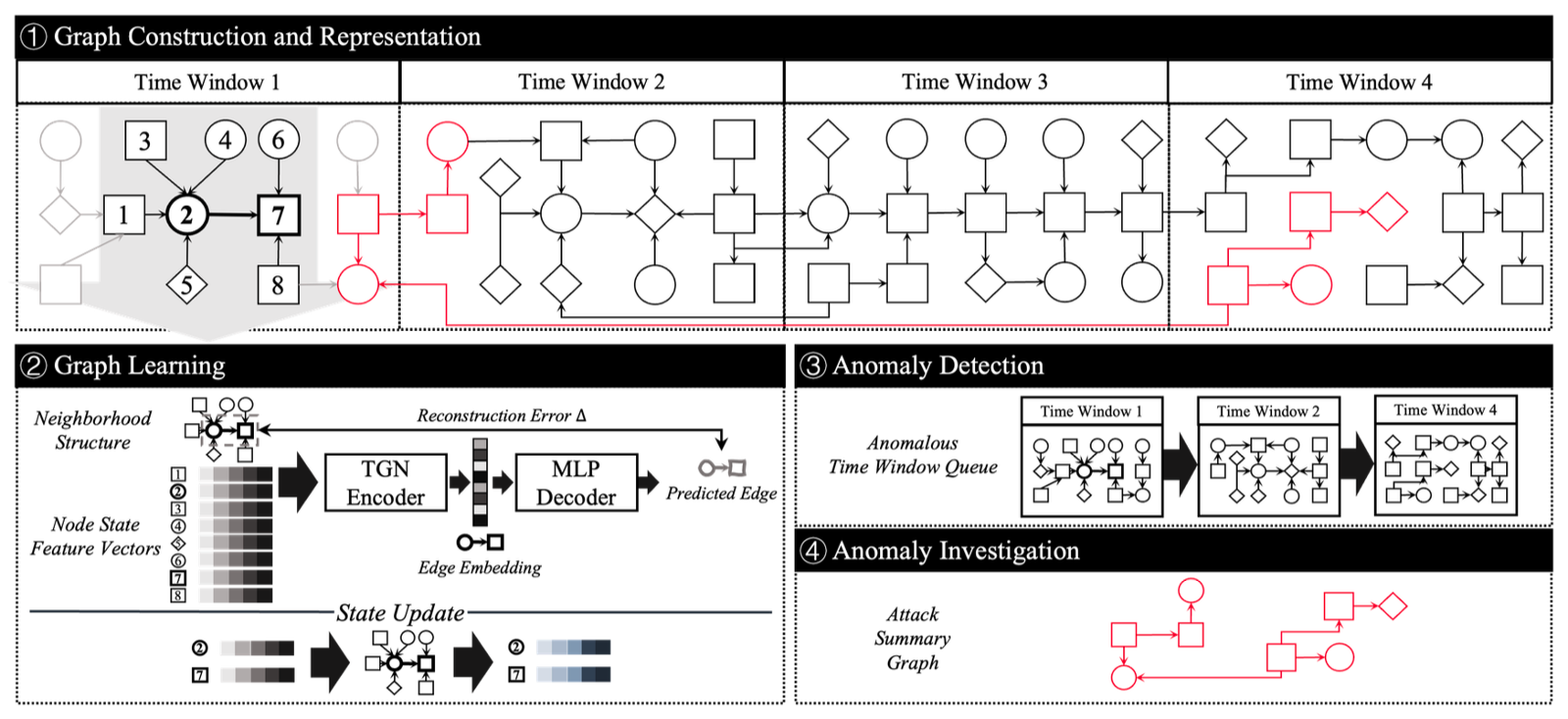

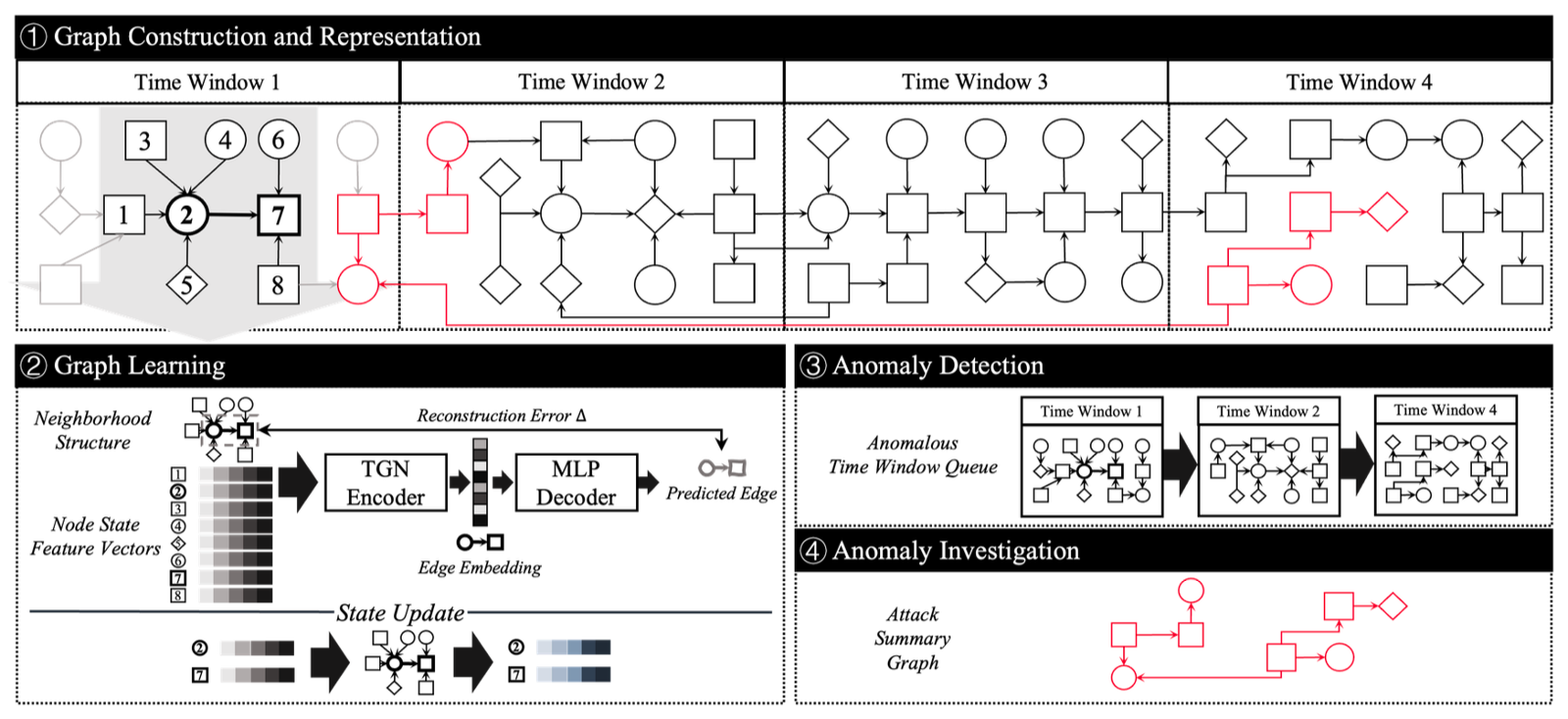

Machine-learning driven IDSs have shown great success in detecting advanced threats in complex system logs, but they often lack transparency. In security operations, this opacity creates a critical "trust gap": a detector that raises an alert without explanation provides little insight into whether the alert is a true attack or a false positive. KAIROS is a state-of-the-art provenance-based IDS that uses a Temporal Graph Neural Network (TGNN) to detect anomalies in streaming system event graphs with high accuracy [5]. However, its decision-making process remains a black box. When KAIROS flags a sequence of events as malicious, analysts are presented with a reconstructed attack subgraph but lack specific guidance on why the model considered those events anomalous.

To bridge this gap, we present a comprehensive XAI framework implemented as PROVEX, which augments the KAIROS pipeline with post-hoc explanations. Our goal is not to propose a novel explanation algorithm, but to adapt existing GNN explainability techniques to the specific constraints of provenance-based intrusion detection. We focus on generating temporal subgraph explanations that highlight the key causal events driving the model’s alerts.

A core contribution of our work is the integration of a Variational Temporal Graph Explainer (VA-TGExplainer). While standard methods like GNNExplainer [6] and GraphMask [7] provide valuable insights, they often struggle with the ambiguity inherent in provenance graphs, where multiple plausible explanations may exist for a single anomaly. Furthermore, deterministic masks can be unstable. VA-TGExplainer addresses this by modeling the explanation as a distribution, capturing the uncertainty in edge importance. This allows us to provide analysts with not just a single explanation, but a measure of confidence in which edges are truly critical versus those that are merely incidental. Our pipeline combines global window-level pruning (via GraphMask) with fine-grained, uncertainty-aware event analysis (via VA-TGExplainer) to provide a comprehensive view of the detected threat.
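To make the idea of "an explanation as a distribution" concrete, the following is a minimal sketch, not the VA-TGExplainer implementation. It assumes each edge's mask logit follows an independent Gaussian with a learned mean and standard deviation; the function name `sample_edge_importance`, the edge labels, and all numeric values are hypothetical and chosen only for illustration. Sampling masks and summarizing them yields a per-edge importance together with a spread that can be read as uncertainty.

```python
import math
import random
import statistics


def sample_edge_importance(mu, sigma, n_samples=200, seed=0):
    """Monte Carlo estimate of per-edge importance and its spread.

    mu, sigma: dicts mapping an edge id to the mean and standard deviation
    of a Gaussian over that edge's mask logit (an assumed parameterisation).
    Returns a dict: edge id -> (mean importance, std of importance).
    """
    rng = random.Random(seed)
    summary = {}
    for edge in mu:
        # Draw logits and squash each through a sigmoid to get a mask in (0, 1).
        samples = [
            1.0 / (1.0 + math.exp(-rng.gauss(mu[edge], sigma[edge])))
            for _ in range(n_samples)
        ]
        summary[edge] = (statistics.fmean(samples), statistics.pstdev(samples))
    return summary


# Toy edges; labels and parameters are illustrative, not measured values.
mu = {"webserver->shell": 3.0, "shell->tmpfile": 0.0, "cron->log": -3.0}
sigma = {"webserver->shell": 0.3, "shell->tmpfile": 2.0, "cron->log": 0.3}

summary = sample_edge_importance(mu, sigma)
for edge, (mean, std) in summary.items():
    print(f"{edge}: importance={mean:.2f} +/- {std:.2f}")
```

In this toy setting, an edge with a high mean and small spread would be reported as confidently critical, while an edge with a wide spread would be flagged as ambiguous rather than silently kept or dropped.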

In this paper, we detail how our framework addresses these challenges and achieves explainable intrusion detection on the CADETS dataset. We make the following contributions:

• Explainable TGNN Framework: We design an end-to-end pipeline that combines KAIROS’s TGNN-based detection with multiple GNN explainers to produce human-readable explanations for each detected attack. To our knowledge, this is the first deployable system providing temporally-aware GNN explanations for streaming cyber attack detection.
• Adaptation of XAI Methods: Instead of proposing a new explainer algorithm, we adapt and engineer existing methods for our domain. We integrate GraphMask for identifying critical edges in an entire attack window, GNNExplainer [6] for fine-grained per-event explanation with fidelity metrics, and a custom VA-TGExplainer (a variational temporal graph explainer) that extends prior temporal explainers to quantify uncertainty in the importance of each edge. These methods are modified to work on KAIROS’s temporal graph data and are orchestrated to provide both global (window-level) and local (event-level) insights.
• Scalable Framework Design: We implement a suite of system improvements to handle the scale and complexity of real audit logs. This includes GPU memory management with caching and automatic fallback to CPU when GPU memory is insufficient, parallel processing of time windows for throughput, and logging/reproducibility features to ensure consistent results despite nondeterministic components. Our caching of intermediate graph data cuts peak memory usage by ≈60% in tests, avoiding out-of-memory errors when explaining large subgraphs. Fallback logic ensures the pipeline doesn’t fail on resource-constrained systems.
• Evaluation: We evaluate the framework on the DARPA Transparent Computing (TC) Engagement 3 dataset [5] (April 2018), focusing on the CADETS host’s attack on April 6, 2018. The system successfully generates explanations for the known attack behaviors (e.g., a webserver spawning a shell and exfiltrating data) by highlighting the most anomalous edges. We report metrics on explanation fidelity and computational cost, demonstrating the feasibility of deploying this in a SOC setting.
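The automatic fallback to CPU mentioned in the contributions can be pictured with the sketch below. It is an assumption-laden illustration rather than the PROVEX code: `explain_with_fallback`, `toy_explainer`, the device strings, and the use of Python's built-in `MemoryError` are all hypothetical stand-ins. In a real deep learning stack, the framework's own out-of-memory exception type would be passed via `oom_errors`.

```python
def explain_with_fallback(explain_fn, window, devices=("cuda", "cpu"),
                          oom_errors=(MemoryError,)):
    """Try explain_fn on each device in order, moving on after a memory error.

    explain_fn(window, device) is a hypothetical callable that runs one
    explainer on one time window. Returns (result, device_used).
    """
    last_error = None
    for device in devices:
        try:
            return explain_fn(window, device), device
        except oom_errors as err:
            # Remember the failure and retry on the next device in the list.
            last_error = err
    raise RuntimeError("explanation failed on every device") from last_error


def toy_explainer(window, device):
    """Stand-in explainer that simulates GPU memory exhaustion on large windows."""
    if device == "cuda" and len(window) > 3:
        raise MemoryError("simulated GPU out-of-memory")
    return {"edges_scored": len(window)}


# A window with four events exceeds the simulated GPU budget and runs on CPU.
result, used = explain_with_fallback(toy_explainer, ["e1", "e2", "e3", "e4"])
print(used, result)
```

Keeping the device order and the exception types as parameters means the same wrapper could serve each explainer in the pipeline, and only memory errors trigger a retry, so unrelated failures still surface immediately.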

Effective intrusion detection and explainability in dynamic graphs are active areas of research. This section reviews key developments in provenance-based IDS and the evolution of GNN explainability methods.

Provenance-based intrusion detection has evolved from rule-based systems to advanced learning-based approaches. Early systems like StreamSpot [10] used graph clustering on streaming edges to identify anomalies, while Unicorn [4] employed graph sketching to summarize provenance graphs for anomaly detection. HOLMES [3] represented a significant step in correlating information flows to detect APTs, though it relied on expert-defined rules. More recently, KAI
