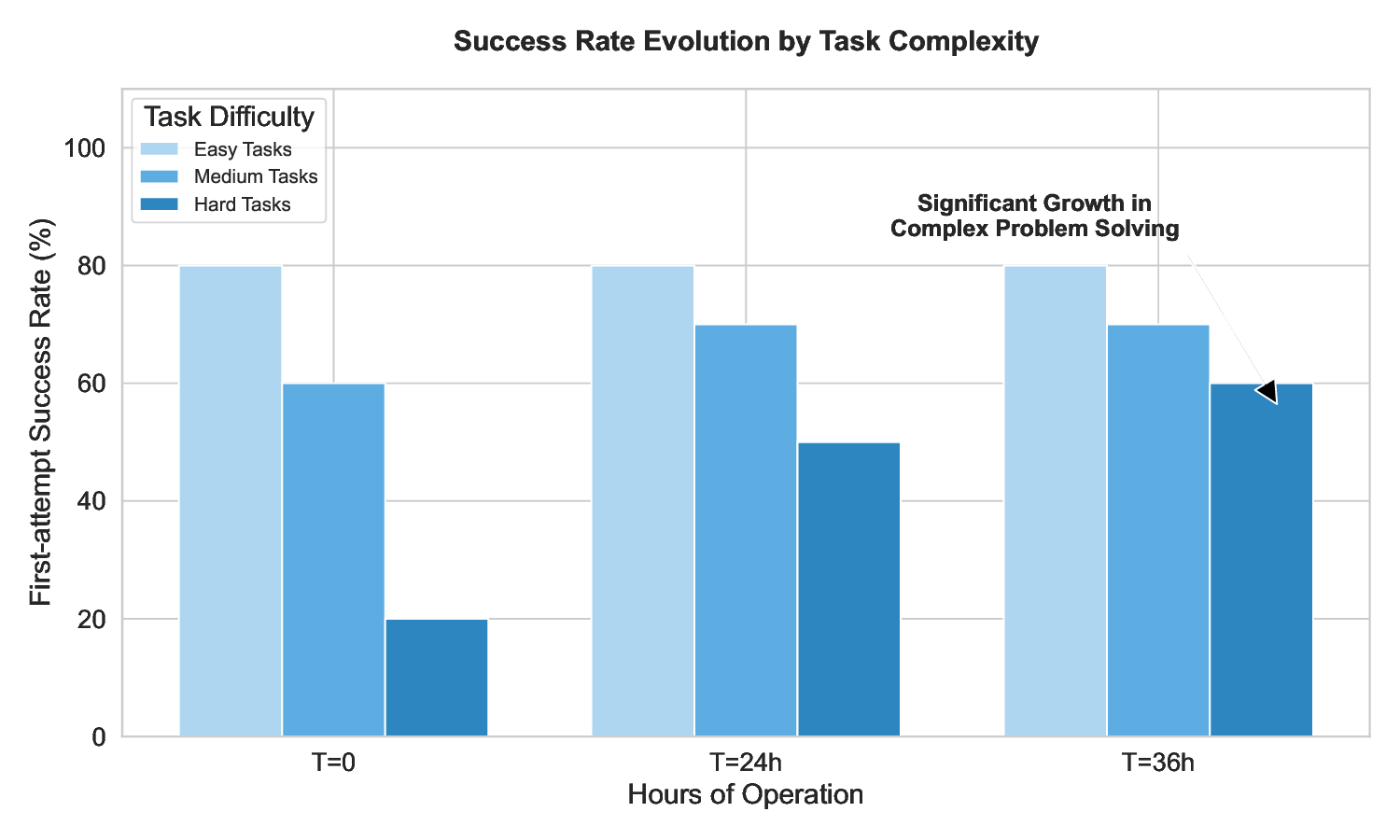

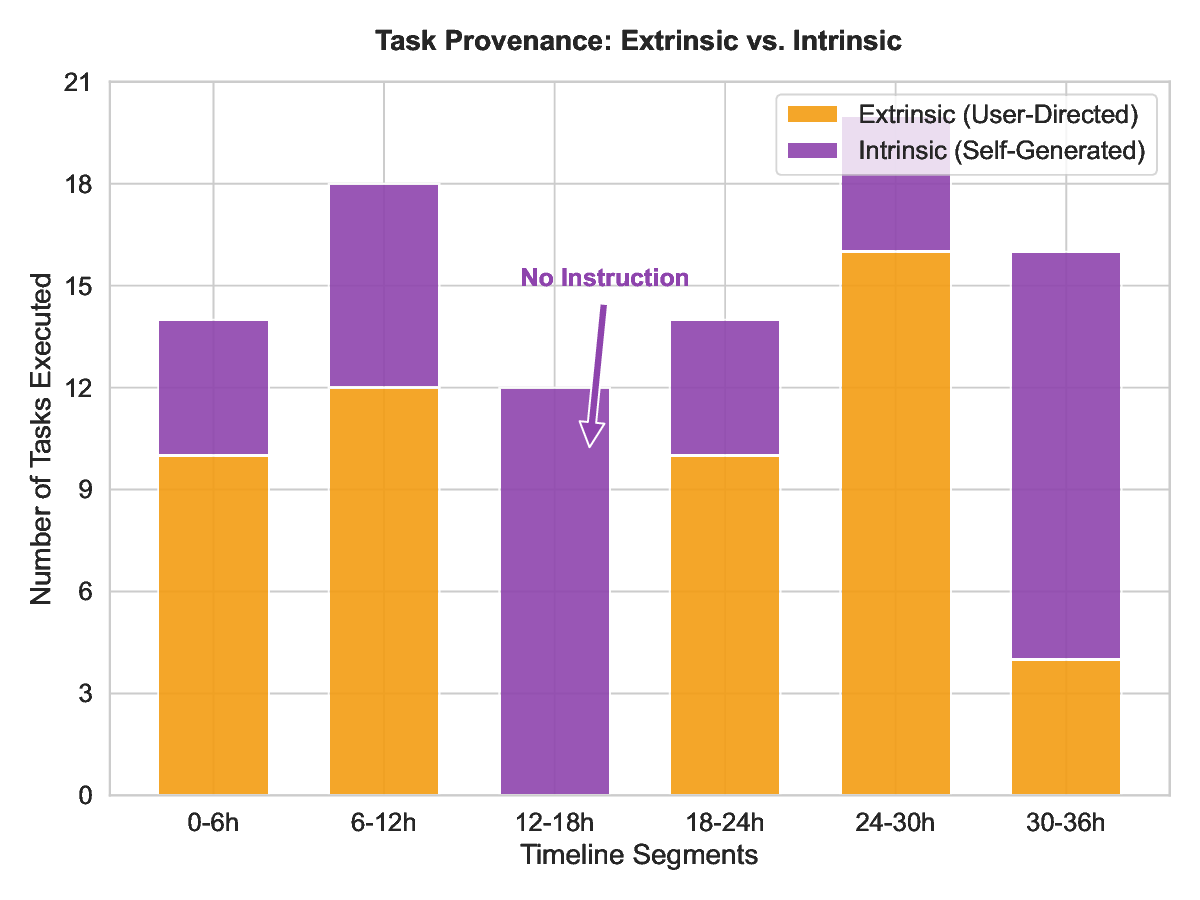

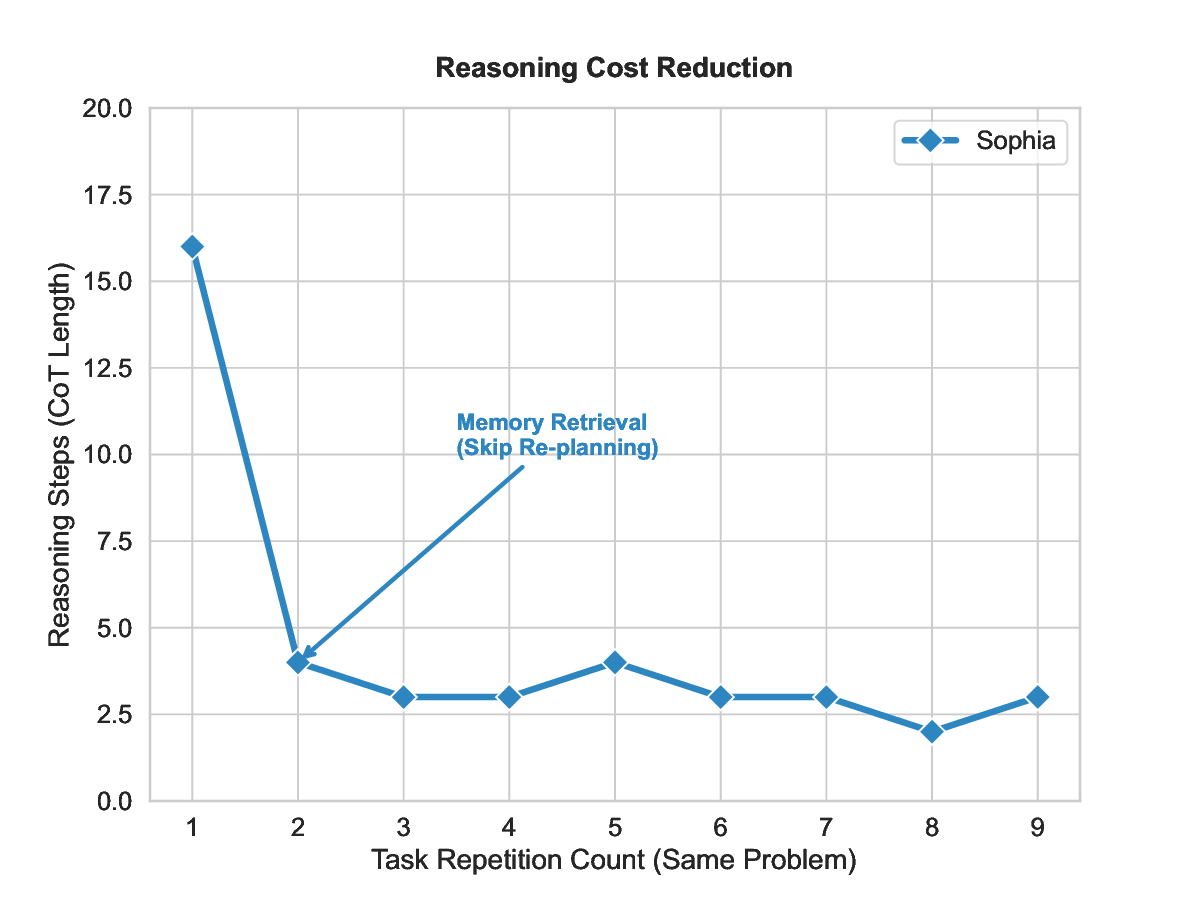

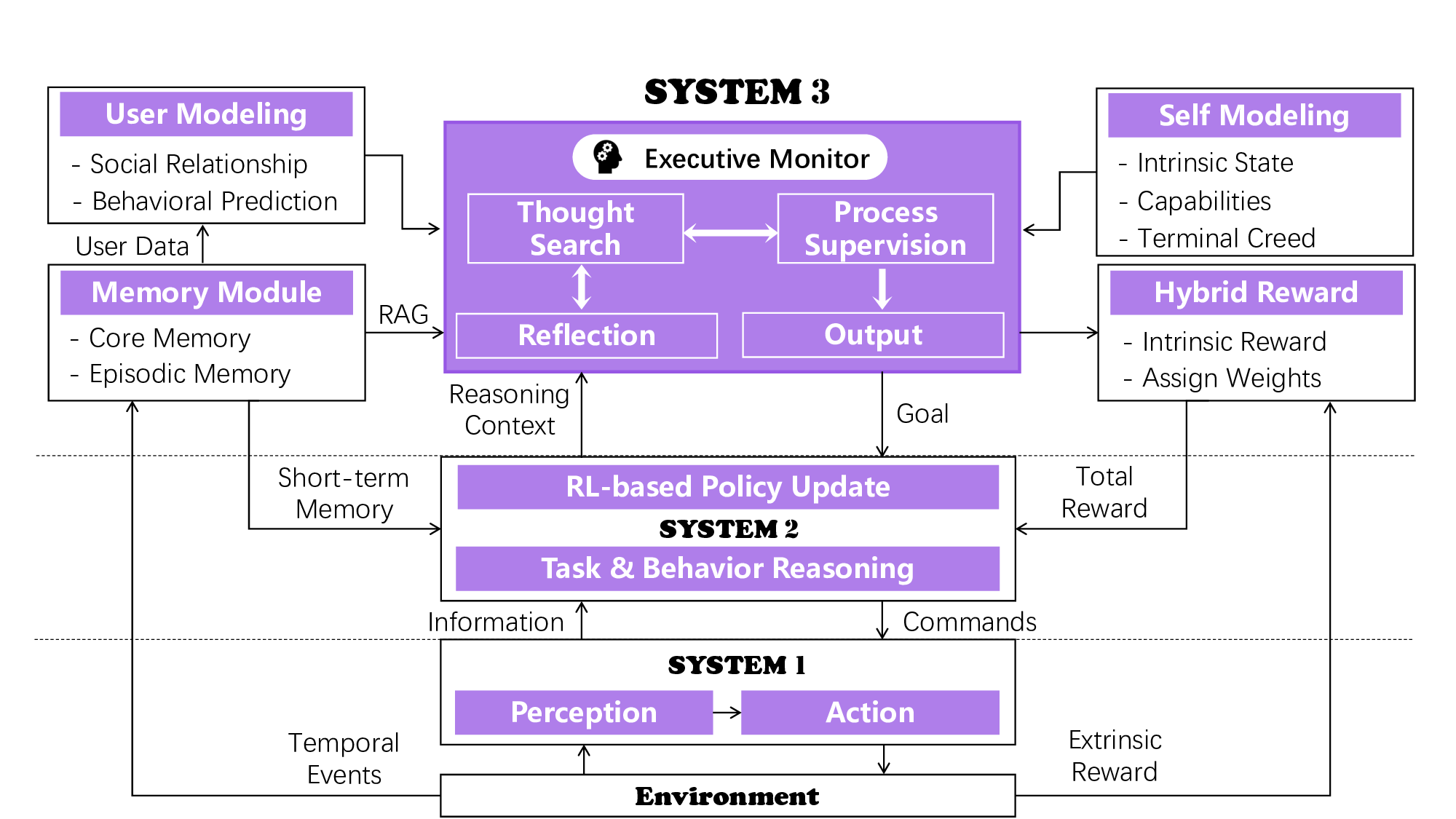

The development of LLMs has elevated AI agents from task-specific tools to long-lived, decision-making entities. Yet most architectures remain static and reactive, tethered to manually defined, narrow scenarios. These systems excel at perception (System 1) and deliberation (System 2) but lack a persistent meta-layer to maintain identity, verify reasoning, and align short-term actions with long-term survival. We first propose a third stratum, System 3, that presides over the agent's narrative identity and long-horizon adaptation. The framework maps selected psychological constructs to concrete computational modules, thereby translating abstract notions of artificial life into implementable design requirements. These ideas coalesce in Sophia, a "Persistent Agent" wrapper that grafts a continuous self-improvement loop onto any LLM-centric System 1/2 stack. Sophia is driven by four synergistic mechanisms: process-supervised thought search, narrative memory, user and self modeling, and a hybrid reward system. Together, they transform repetitive reasoning into a self-driven, autobiographical process, enabling identity continuity and transparent behavioral explanations. Although the paper is primarily conceptual, we provide a compact engineering prototype to anchor the discussion. Quantitatively, Sophia independently initiates and executes various intrinsic tasks while achieving an 80% reduction in reasoning steps for recurring operations. Notably, meta-cognitive persistence yielded a 40% gain in success on high-complexity tasks, effectively bridging the performance gap between simple and sophisticated goals. Qualitatively, System 3 exhibited a coherent narrative identity and an innate capacity for task organization. By fusing psychological insight with a lightweight reinforcement-learning core, the persistent agent architecture offers a possible practical pathway toward artificial life.

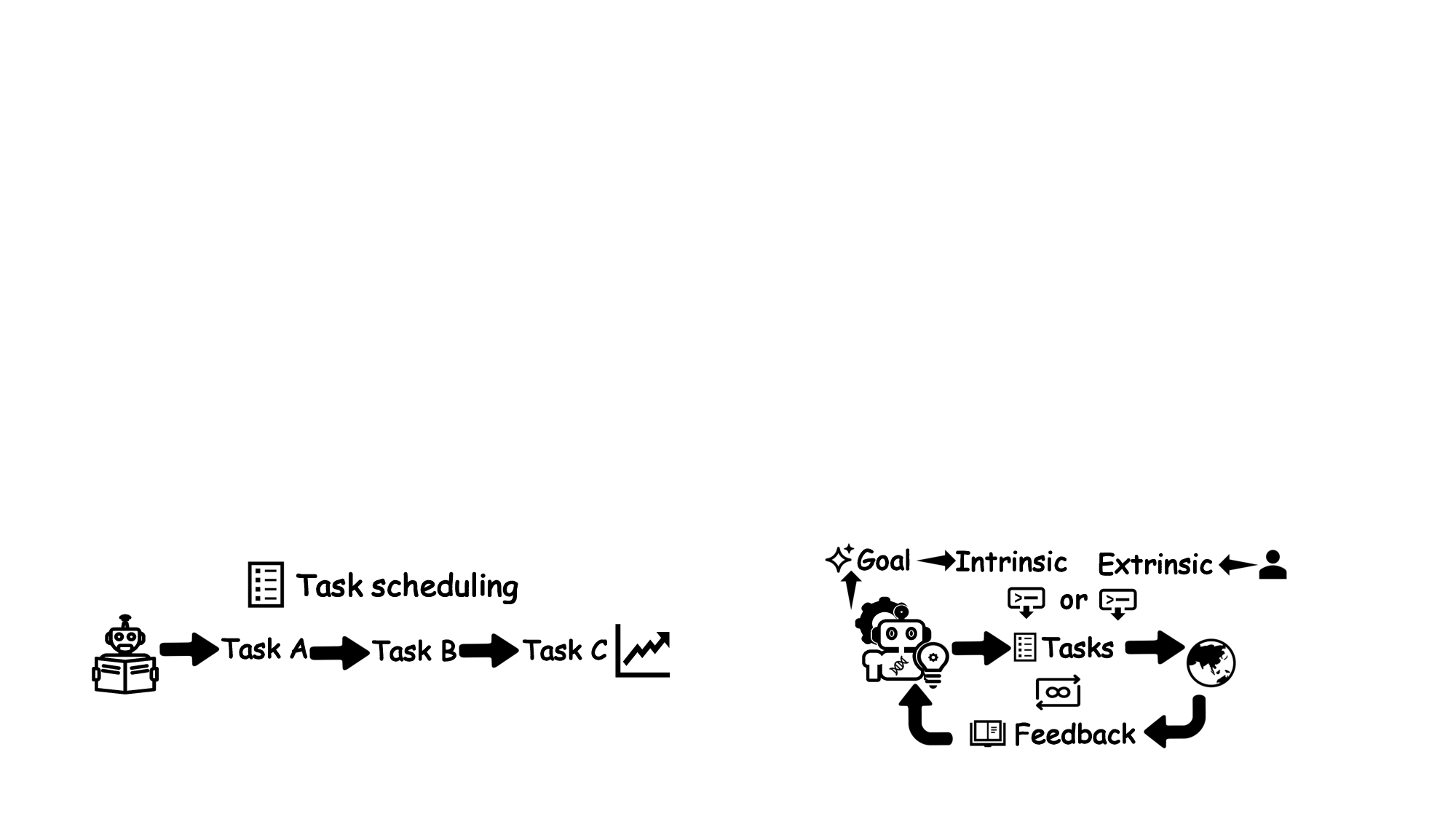

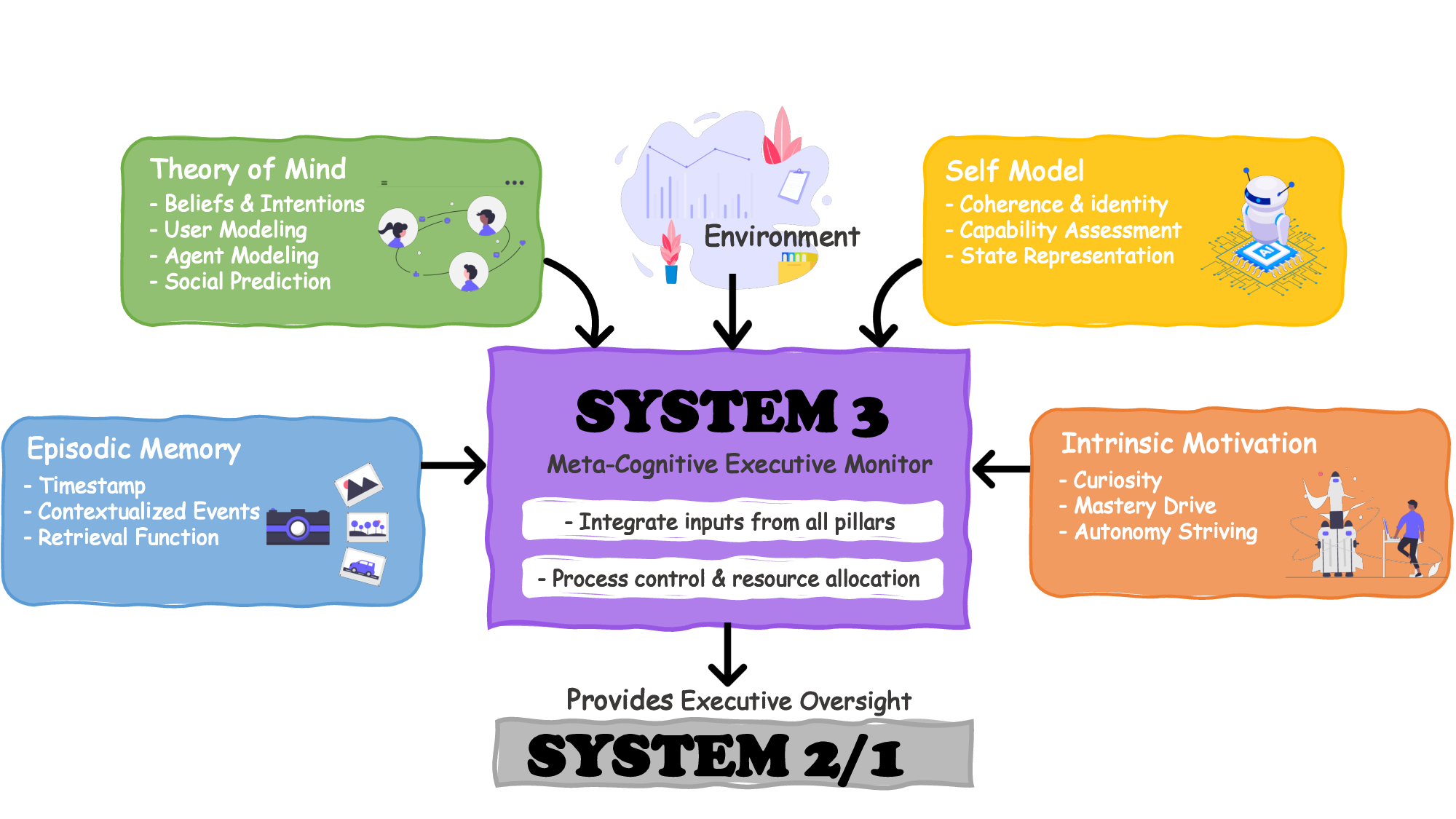

In this work, we first propose a third stratum, System 3, that presides over the agent's narrative identity and long-horizon adaptation. The framework maps selected psychological constructs (e.g., meta-cognition, theory-of-mind, intrinsic motivation, episodic memory) to concrete computational modules, thereby translating abstract notions of artificial life into implementable design requirements. These ideas coalesce in Sophia, a "Persistent Agent" wrapper that grafts a continuous self-improvement loop onto any LLM-centric System 1/2 stack. Sophia is driven by four synergistic mechanisms: process-supervised thought search that curates and audits emerging thoughts, a memory module that maintains narrative identity, dynamic user and self models that track external and internal beliefs, and a hybrid reward system that balances environmental feedback with introspective drives. Together, these mechanisms transform the highly repetitive reasoning episodes of a primitive agent into an endless, self-driven reasoning phase dedicated to diverse goals, enabling autobiographical memory, identity continuity, and transparent narrative explanations of behavior.
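To make the idea of a hybrid reward concrete, the following minimal sketch assumes the scalar reward is a convex combination of an extrinsic environment term and an intrinsic term. The `Feedback` fields, the weight `alpha`, the equal weighting of the two introspective drives (`novelty`, `self_consistency`), and the function name `hybrid_reward` are all hypothetical choices for illustration, not the formulation used in Sophia.

```python
from dataclasses import dataclass


@dataclass
class Feedback:
    env_reward: float        # extrinsic signal from the environment (assumed in [-1, 1])
    novelty: float           # hypothetical intrinsic drive (assumed in [0, 1])
    self_consistency: float  # hypothetical intrinsic drive (assumed in [0, 1])


def hybrid_reward(fb: Feedback, alpha: float = 0.7) -> float:
    """Blend extrinsic and intrinsic terms; alpha is the weight on the environment."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    # Assumption: the introspective drives are averaged with equal weight.
    intrinsic = 0.5 * fb.novelty + 0.5 * fb.self_consistency
    return alpha * fb.env_reward + (1.0 - alpha) * intrinsic


print(hybrid_reward(Feedback(env_reward=1.0, novelty=0.0, self_consistency=1.0), alpha=0.5))  # 0.75
```

Setting `alpha = 1.0` recovers a purely environment-driven agent, while lowering it lets introspective drives shape behavior when external feedback is sparse.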

Although the paper is primarily conceptual, grounding System 3 in decades of cognitive theory, we provide a compact engineering prototype to anchor the discussion. In an extended deployment within a dynamic web environment, Sophia demonstrated robust operational persistence through autonomous goal generation. Quantitatively, Sophia independently initiates and executes various intrinsic tasks while achieving an 80% reduction in reasoning steps for recurring operations. Notably, meta-cognitive persistence yielded a 40% gain in success on high-complexity tasks, effectively bridging the performance gap between simple and sophisticated goals. Qualitatively, System 3 exhibited a coherent narrative identity and an innate capacity for task organization. By fusing psychological insight with a lightweight reinforcement-learning core, the persistent agent architecture offers a possible practical pathway toward artificial life.
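One plausible way a memory layer could cut reasoning steps on recurring operations is to reuse a previously successful plan instead of re-deliberating. The sketch below assumes exactly that; `NarrativeMemory`, `solve`, the exact-match task key, and the one-step verification cost on recall are hypothetical illustrations, and the step counts in the usage lines are toy values rather than the prototype's measurements.

```python
from typing import Callable, Dict, List, Optional, Tuple


class NarrativeMemory:
    """Hypothetical store that maps a task signature to a previously successful plan."""

    def __init__(self) -> None:
        self._plans: Dict[str, List[str]] = {}

    def recall(self, task: str) -> Optional[List[str]]:
        plan = self._plans.get(task)
        return list(plan) if plan is not None else None

    def store(self, task: str, plan: List[str]) -> None:
        self._plans[task] = list(plan)


# A deliberator stands in for full System 2 reasoning: it returns (plan, steps_spent).
Deliberator = Callable[[str], Tuple[List[str], int]]


def solve(task: str, memory: NarrativeMemory, deliberate: Deliberator) -> Tuple[List[str], int]:
    """Return (plan, reasoning_steps_spent) for a task."""
    cached = memory.recall(task)
    if cached is not None:
        # Recurring task: reuse the stored plan, paying one step to re-verify it (assumption).
        return cached, 1
    # Novel task: fall back to full deliberation and remember the outcome.
    plan, steps = deliberate(task)
    memory.store(task, plan)
    return plan, steps


def toy_deliberate(task: str) -> Tuple[List[str], int]:
    return ["open page", "locate form", "submit"], 6  # toy values


mem = NarrativeMemory()
print(solve("renew subscription", mem, toy_deliberate))  # (['open page', 'locate form', 'submit'], 6)
print(solve("renew subscription", mem, toy_deliberate))  # (['open page', 'locate form', 'submit'], 1)
```

Exact-match keys keep the sketch simple; a real system would need some notion of task similarity to decide when a stored plan still applies.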

The rapid proliferation of large language models (LLMs) has catalyzed a paradigm shift in AI agents, transforming them from single-task executors into long-lived, sophisticated cognitive entities endowed with capabilities for autonomous planning, strategic deliberation, and collaborative engagement (Achiam et al., 2023; Grattafiori et al., 2024; Hurst et al., 2024; Guo et al., 2025; Yang et al., 2025). This technological leap is reshaping expectations across science, industry, and everyday applications (Yang et al., 2024; Chkirbene et al., 2024; Ren et al., 2025; Li et al., 2024). Yet despite these breakthroughs, most existing agent frameworks are anchored to manually crafted configurations that stay static after deployment. Once shipped, they cannot revise their skill set, develop new tasks, or integrate unfamiliar knowledge without human engineers in the loop. Lacking the intrinsic motivation and self-improvement capabilities inherent to living systems, today’s agents remain unable to achieve sustained growth or open-ended adaptation. Infusing AI agents with these lifelike principles, enabling autonomous self-reconfiguration while maintaining operational coherence, has thus emerged as a critical frontier in AI research.

Within prevailing agent architectures, cognition is typically partitioned into two complementary subsystems (Li et al., 2025). System 1 embodies rapid, heuristic faculties: perception, retrieval, and instinctive response. System 2, by contrast, governs slow, deliberate reasoning. It employs chain-of-thought planning, multi-step search, counterfactual simulation, and consistency checks to refine or override System 1’s impulses. In practical LLM agents, this often manifests as a reasoning loop that expands prompts with scratch-pad deliberations, validates tool outputs, and aligns final responses with user goals. While the synergy of these two layers enables impressive task performance, both remain confined to static configurations and predetermined task scheduling. Even when agents support continual learning, such updates typically follow an externally defined task schedule rather than being self-directed. Consequently, the agent can neither update its reflexive priors nor revise its thought process when encountering truly novel domains. This rigidity highlights the need for a higher-order “System 3”: a meta-cognitive layer that monitors, audits, and continuously adapts both underlying systems, thereby enabling the entire cognitive architecture to sustain ongoing learning. In this work, we ground System 3 in four foundational theories from cognitive psychology:

• Meta-cognition (Shaughnessy et al., 2008; Dunlosky & Metcalfe, 2008): a self-reflective monitor that inspects ongoing thought traces, flags logical fallacies, and selectively rewrites its own procedures;

• Theory-of-Mind (Frith & Frith, 2005; Wellman, 2018)
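The meta-cognitive monitor described in the first item can be sketched, very loosely, as a wrapper that audits a System 2 thought trace and requests a revision when it finds problems. The names `audit`, `system3_step`, and `System2`, and both checks (a repeated step as a crude stand-in for circular reasoning, plus a step budget), are hypothetical assumptions; genuine fallacy detection would require far richer analysis, and this is not the paper's implementation.

```python
from typing import Callable, List, Tuple

Trace = List[str]
# A System 2 callable receives the task plus any audit feedback and returns a thought trace.
System2 = Callable[[str, List[str]], Trace]


def audit(trace: Trace, max_steps: int = 8) -> List[str]:
    """Flag defects in a thought trace using two crude, hypothetical checks."""
    issues: List[str] = []
    seen = set()
    for step in trace:
        if step in seen:
            # A repeated step is treated as a rough proxy for circular reasoning.
            issues.append(f"repeated step: {step!r}")
        seen.add(step)
    if len(trace) > max_steps:
        issues.append(f"trace exceeds {max_steps} steps")
    return issues


def system3_step(task: str, system2: System2, max_retries: int = 2) -> Tuple[Trace, List[str]]:
    """Run System 2, audit its trace, and re-run it with the audit as feedback until clean."""
    feedback: List[str] = []
    trace = system2(task, feedback)
    for _ in range(max_retries):
        issues = audit(trace)
        if not issues:
            break
        feedback = issues
        trace = system2(task, feedback)
    # Return the final trace together with any issues that survived the retries.
    return trace, audit(trace)
```

The wrapper never edits System 2 directly; it only observes traces and feeds critiques back, which mirrors the monitoring and auditing role the text assigns to System 3.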
